Abstract

Plant diseases can cause a considerable reduction in the quality and quantity of agricultural products. Guava, well known as the apple of the tropics, is a significant fruit cultivated in tropical regions. It is attacked by 177 pathogens, including 167 fungi and others such as bacteria, algae, and nematodes. In addition, postharvest diseases may cause crucial production loss. Due to minor variations in the symptoms of various guava diseases, an expert opinion is required for disease analysis. Improper diagnosis may cause economic losses to farmers through improper use of pesticides. Automatic detection of diseases as soon as they emerge on the plants’ leaves and fruit is required to maintain high crop yields. In this paper, an artificial intelligence (AI) driven framework is presented to detect and classify the most common guava plant diseases. The proposed framework employs ΔE color difference image segmentation to segregate the areas infected by the disease. Furthermore, color (RGB, HSV) histogram and textural (LBP) features are applied to extract rich, informative feature vectors. The combination of color and textural features is used to identify diseases and attains outcomes similar to those of individual channels, while disease recognition is performed by employing advanced machine-learning classifiers (Fine KNN, Complex Tree, Boosted Tree, Bagged Tree, Cubic SVM). The proposed framework is evaluated on a high-resolution (18 MP) image dataset of guava leaves and fruit. The best recognition results were obtained by the Bagged Tree classifier on a set of RGB, HSV, and LBP features (99% accuracy in recognizing four guava fruit diseases (Canker, Mummification, Dot, and Rust) against healthy fruit). The proposed framework may help farmers avoid possible production loss by taking early precautions.

1. Introduction

Guava is grown in tropical and subtropical climates that are conducive to its development [1]. It is high in calcium, vitamin C, nicotinic acid, phosphorus, and soluble fiber, providing an important source of food for many less developed countries. Guava is thought to have originated in South America (Mexico to Peru) [2]. South Asian nations, the Hawaiian Islands, Cuba, Brazil, Pakistan, and India produce most of it.

Mummification, guava wilt, fruit canker, fruit spot, stem canker, leaf blight, rust of guava, fruit rot, and dry rot are severe diseases that can reduce the overall yield of guava plants. Anthracnose [2], a disease that affects guava plants, was first discovered by Mehta in Uttar Pradesh in 1951. The first study of guava wilt was made by Gupta [3]; fruit canker, caused by Pestalotia psidii Pat., was first reported from Bombay [4]; fruit spot caused by an alga was first reported by Ruehle [5]; and guava stem canker was first discovered in Patharchatta [6]. Mitra (1929) reported Dastur, and in 1969 at Vellayani, 40% of the fruit were infected with dry rot [7]. To control these types of diseases, different fungicides and chemicals are applied to the guava crop, but they harm the environment and cause economic loss.

Pakistan’s main natural resources are agricultural land and water. Agriculture accounts for about 25% of Pakistan’s GDP and employs about 43% of the workforce [8]. The agricultural sector is critical to a country’s economic growth. It has been reported that agricultural growth is primarily responsible for Pakistan’s GDP growth. If the agricultural sector’s growth rate is poor, the country will face a shortage of food and other essential raw materials. Plants provide food and are essential sources of energy-rich compounds, vitamins, and minerals; in Pakistan, guava is a common fruit. Pakistan is the world’s fourth-largest guava producer, with a production of 1,784,300 metric tons [9].

In this case, the most important thing is to make an accurate and timely diagnosis of the disease. If these diseases are not correctly identified and managed, they will have a negative impact on the next generation of guavas. Close observation of intermittent yields is required to check which disease significantly impacts crop production after harvest time. Experts can identify and classify guava disease based on symptoms. This, however, requires manual observation and continuous testing, which can be error-prone and costly. A large portion of the agriculturists in underdeveloped countries are uneducated and unaware of non-native diseases. As a result, farmers have to travel long distances to find a well-trained specialist, which can be time-consuming and costly [10]. Consequently, plant diseases are a severe hurdle towards achieving agricultural sustainability in developing countries [11,12].

One way to address this problem is to use computer technology for the detection of plant diseases. Such a system would either substitute for experts or provide a second opinion on an expert’s decision. As this solution is cost-effective and straightforward [13], the farmers can take corrective measures to avoid the disease’s spread [14]. Researchers have developed various diagnostic systems with the help of computer technology to diagnose various crop diseases [14]. These systems take RGB (red, green, and blue) images as input and then decide whether each image is healthy or unhealthy. If the input image is unhealthy, segmentation separates it into a normal part and a diseased part, as in Equation (1), and a feature extraction technique is applied to the diseased part for classification.

The contribution of the research is as follows:

- A Guava disease classification framework based on guava plant images is proposed. The proposed framework separates the Guava images into diseased and non-diseased images. The proposed approach’s primary goal is to detect the disease present in guava plant images.

- Image-level and disease-level-based feature extraction approaches are used to obtain robust guava disease recognition.

- The corresponding disease-segmented image with a specific label is assigned a class, which gives information about the disease. Four guava diseases (Canker, Mummification, Dot, and Rust) and one extra target class, “healthy”, are covered in the presented study.

- The proposed framework is evaluated on a high-resolution image dataset.

High-resolution images cannot be handled by conventional deep convolutional neural network architectures without a significant reduction of the resolution, so a large part of the information contained in the images is lost, which negatively affects the performance of image segmentation and classification [15,16]. Therefore, we adopted a combination of computer-vision and machine-learning techniques.

The remainder of the paper is organized as follows. Recent related work with a summary of existing methodologies is given in Section 2. The proposed method, including data pre-processing, feature extraction approaches, segmentation, and classification, is presented in Section 3. Section 4 contains the dataset description, experimental results, and analysis. The study is concluded in Section 5.

2. Related Work

Previously, most researchers relied on image processing, pattern classification, and machine-learning techniques, especially in agriculture [17,18,19]. Video cameras are used to capture images from the environment first. Then, to extract useful features from the images, some operations are performed on them [20]. The detection of diseased regions in an image is the core objective. In recent years, there has been a growing body of research on plant disease classification aiming to develop effective plant diagnostic systems for farmers [21,22,23]. A variety of Artificial Intelligence (AI) methods have been adopted for classifying and detecting diseases of various plants such as olive [24], pomegranate [25], plum [26], rice [27], tomato [28], cassava [29], mango [30], tea leaf [31], apple [32], citrus [33], oranges [34], etc.

For the diagnosis of plant diseases, various methods have been presented. Some of the promising techniques to diagnose the disease in plants are discussed below. The authors in [35] presented a tool for automatically detecting citrus diseases. They use the ΔE algorithm, which uses the color difference to define the region affected by the disease, while the color histogram and textural features are used for classification purposes.

The authors in [36] developed a multi-spectral camera system that can detect defects on citrus surfaces in real time by capturing visual and close proximity images from the same scene. In [37], the authors proposed novel segmentation techniques to segment the lesion areas affected by anthracnose. Healthy and anthracnose-affected fruit were categorized using neural network (NN) classifiers. In [38], texture characteristics are used to identify plant leaf diseases, and a technique to detect unhealthy regions of plant leaves is suggested. For the segmentation of leaf rot disease in betel vine leaves, Dey et al. [39] proposed image-processing and computer-vision algorithms in which Otsu thresholding was applied. In [40], a cellular automata filter is used to process the input leaf images. An image-processing approach is proposed in [41] for detecting bacterial blight in pomegranate fruit. In [42], corn/weed conditions were identified at an early stage using a Back Propagation Neural Network. Pydipati et al. [43] created a color co-occurrence matrix (CCM) as a texture descriptor, using images in the HSI color space. Moreover, Napoli et al. [44] suggested using the simplified firefly algorithm to search for critical areas in images in order to recognize the target areas of interest.

Zhang and Chaisattapagon suggested a color-based weed detection method for Kansas wheat [45].

In [46], color indices were created to distinguish weeds under various soil, residue, and lighting conditions. An algorithm based on statistics collected from local maxima and minima was proposed in [47] to extract leaf/plant shape features.

Crowe and Delwiche [48] used two combined near-infrared (NIR) images of fruit to create an algorithm for analyzing apple and peach defects. Edwards et al. [49] used various pattern recognition models to distinguish surface blemishes on different apple varieties, including multi-layer backpropagation, unimodal Gaussian, KNN, and nearest cluster algorithms. They also used reflectance spectra of the whole tree to assess the damage caused by citrus blight disease on citrus plants.

An innovative algorithm for lesion area extraction is presented in [50]. To recognize and classify the type of disease, first-order statistical features based on texture are extracted from the lesion region. Objects are then categorized based on their texture characteristics. The authors in [51] describe a remote sensing technique for monitoring plant diseases in arable crops from the ground at an early stage of disease development. Khamparia et al. [52] adopted a hybrid method for recognizing crop leaf diseases using a combination of convolutional neural networks (CNNs) and autoencoders.

The related works are summarized in Table 1.

Table 1.

Summary of existing methodologies on plant disease recognition.

A critique (advantages and drawbacks) of the related works is presented in Table 2.

Table 2.

Critique (advantages and drawbacks) of the related works.

3. Methodology

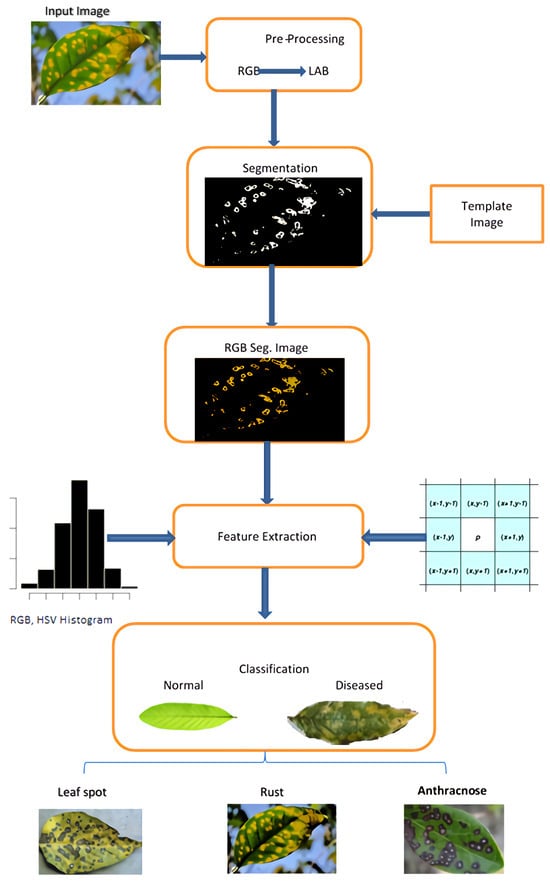

The presented framework uses a computer-vision-based approach to classify guava plants’ leaves and fruit in diseased and non-diseased images. Furthermore, it assigns a diseased image to a particular disease group. Figure 1 depicts the proposed system’s workflow diagram. The following are the stages of our suggested procedure:

Figure 1.

Workflow of Guava disease classification system.

1. Pre-processing of image.

2. Segmentation of image.

3. Feature extraction.

4. Classification.

3.1. Image Pre-Processing

To improve the sample’s uniformity and obtain more accurate image data, a digital camera was used to capture guava leaves from various orchards. Image acquisition plays a significant role in this system: if an image is not captured properly, the desired results cannot be produced even after enhancement. The obtained images are then resized to a fixed size in pixels. Image pre-processing techniques enhance the features, reduce distortions, and make the image more suitable for analysis. The lowest abstraction stage consists of color space conversion and image processing [64].

3.2. Image Enhancement

To improve the quality of digitally stored images, various image-processing methods are used. Histogram equalization is used to improve the contrast of the transformed input image by mapping the values of one intensity distribution to those of another, more uniform distribution. Because images are captured in various lighting conditions, some images contain bright regions while others contain dark regions, resulting in an unbalanced histogram. The enhanced image is then normalized. The normalization is performed as in Equation (2):

$$h(v) = \mathrm{round}\left(\frac{cdf(v) - cdf_{min}}{(R \times C) - cdf_{min}} \times (L - 1)\right) \qquad (2)$$

where $cdf(v)$ is the gray level cumulative frequency, and $cdf_{min}$ represents the minimum value of the cumulative distribution function. $R \times C$ stands for the total number of pixels (rows times columns), and $L$ stands for the total number of intensities. $v$ is the current pixel’s intensity.
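The equalization step can be illustrated with a short, dependency-free sketch. It assumes the standard cumulative-frequency mapping described above, an 8-bit grayscale image stored as nested lists, and a hypothetical function name `equalize_histogram`; it is not the authors' implementation.

```python
def equalize_histogram(image, levels=256):
    """Histogram-equalize a grayscale image given as a list of rows of ints."""
    rows, cols = len(image), len(image[0])
    # Count how often each gray level occurs.
    hist = [0] * levels
    for row in image:
        for v in row:
            hist[v] += 1
    # Cumulative frequency of each gray level.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = min(c for c in cdf if c > 0)
    total = rows * cols
    if total == cdf_min:
        # Constant image: there is nothing to stretch.
        return [list(row) for row in image]
    # Map intensity v to round((cdf(v) - cdf_min) / (R*C - cdf_min) * (L - 1)).
    lut = [round((c - cdf_min) / (total - cdf_min) * (levels - 1)) if c > 0 else 0
           for c in cdf]
    return [[lut[v] for v in row] for row in image]


# Toy low-contrast patch (illustrative values only).
img = [[52, 55, 61],
       [59, 79, 61],
       [85, 76, 62]]
out = equalize_histogram(img)
```

After the mapping, the darkest pixel of the patch becomes 0 and the brightest becomes 255, which is the contrast stretch the section relies on.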

3.3. Color Space Transformation

The RGB color space is not advised for color-based detection and color assessment due to its nonuniform features and its mixing of chrominance and luminance information [65]. Sometimes desired information remains invisible in the RGB color space; in that case, a color space transformation is applied to the images to make the information explicit. The proposed algorithm uses the image’s L*, a*, and b* component values. Consequently, the image fed into the system as input must be converted from the RGB to the LAB color space. The first step is the RGB to XYZ conversion. The XYZ color space models how human color vision perceives colors [53]. The CIE (International Commission on Illumination) created the XYZ color space in 1931 while conducting experiments on human perception [46]. Equation (3) shows how to transform the RGB color space to the XYZ color space using a matrix.
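The RGB to XYZ to LAB chain can be sketched for a single pixel as follows. This is a minimal sketch under stated assumptions: the input is 8-bit sRGB, the matrix is the widely used sRGB/D65 matrix, and the reference white is D65; the paper's own matrix in Equation (3) may differ. The function name `srgb_to_lab` is hypothetical.

```python
def srgb_to_lab(r, g, b):
    """Convert one 8-bit sRGB pixel to CIE L*a*b* (assumed D65 white point)."""
    def linearize(c):
        # Undo the sRGB gamma curve.
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = linearize(r), linearize(g), linearize(b)

    # Linear RGB -> XYZ using the standard sRGB/D65 matrix (an assumption here).
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    # D65 reference white used for normalization.
    xn, yn, zn = 0.95047, 1.0, 1.08883

    def f(t):
        # Cube root with the CIE linear segment near zero.
        delta = 6.0 / 29.0
        return t ** (1.0 / 3.0) if t > delta ** 3 else t / (3 * delta ** 2) + 4.0 / 29.0

    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)
    L = 116.0 * fy - 16.0       # lightness
    a = 500.0 * (fx - fy)       # green (-) to red (+)
    bb = 200.0 * (fy - fz)      # blue (-) to yellow (+)
    return L, a, bb


white = srgb_to_lab(255, 255, 255)
red = srgb_to_lab(255, 0, 0)
```

Under these assumptions, pure white maps to a lightness of about 100 with near-zero a* and b*, and pure red has clearly positive a* and b*.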

3.4. Image Segmentation

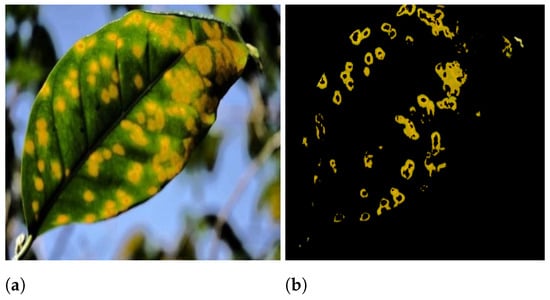

In many practical applications, including object recognition, computer vision, and medical image analysis [45], segmentation is one of the most important and challenging problems. Image segmentation is the process of dividing an image into several parts. Segmentation aims to make an image’s representation more relevant and intuitive by simplifying it. Figure 2a shows the input image and Figure 2b shows the diseased part. After segmentation, the diseased region is subjected to feature extraction. The image segmentation process is divided into two steps.

Figure 2.

(a) leaf affected with guava rust (input image), (b) diseased area.

3.4.1. Delta E (ΔE)

We used an algorithm known as ΔE to segment the image by calculating the distance between colors in the LAB color space [66]. This algorithm stores a template of a symptom from an image and then segments the enhanced image using a tolerance on the color difference between the enhanced image and the template. The two most important parameters determining the system’s efficacy are the choice of the stored symptom and the tolerance (reliability) coefficient. As a result, both must be chosen very carefully. The color difference ΔE between two colors is computed from their L*, a*, and b* values as in Equation (11):

$$\Delta E = \sqrt{(\Delta L^*)^2 + (\Delta a^*)^2 + (\Delta b^*)^2} \qquad (11)$$

where

$$\Delta L^* = L^*_1 - L^*_2, \qquad \Delta a^* = a^*_1 - a^*_2, \qquad \Delta b^* = b^*_1 - b^*_2$$

where $L^*_1$, $a^*_1$, and $b^*_1$ are the three input image channels, and $L^*_2$, $a^*_2$, and $b^*_2$, in the LAB color space, represent the template image.

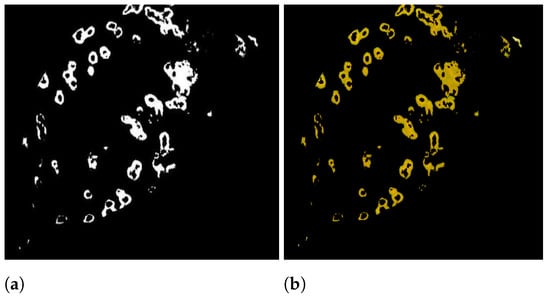

Figure 3a displays the final image after applying segmentation. The difference in lightness is denoted by ΔL*. The difference along the red-green axis is Δa*, and the difference along the yellow-blue axis is Δb*.

Figure 3.

(a) Image in binary, (b) Segmented image.

When the threshold is applied to the ΔE values of the input image, the binary image is obtained as in Equation (12), where T is the threshold on ΔE.
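The thresholding step can be sketched as below. The sketch assumes the CIE76 form of ΔE (Euclidean distance in LAB) and assumes that pixels whose distance from the stored symptom color is within the tolerance are marked as diseased; the direction of the comparison, the function names, and the toy LAB values are illustrative assumptions rather than details confirmed by the paper.

```python
import math


def delta_e(lab1, lab2):
    """CIE76 color difference between two (L*, a*, b*) triples."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(lab1, lab2)))


def segment_by_delta_e(lab_image, template_lab, tolerance):
    """Binary mask: 1 where a pixel lies within `tolerance` of the template color."""
    return [[1 if delta_e(px, template_lab) <= tolerance else 0 for px in row]
            for row in lab_image]


# Hypothetical 2x2 LAB image: two brownish lesion pixels, two green healthy pixels.
lab_image = [[(40.0, 25.0, 30.0), (60.0, -40.0, 45.0)],
             [(62.0, -38.0, 44.0), (42.0, 22.0, 28.0)]]
template = (41.0, 24.0, 29.0)  # stored symptom color (illustrative)
mask = segment_by_delta_e(lab_image, template, tolerance=10.0)
```

With these toy values only the two lesion-colored pixels fall inside the tolerance, so the mask marks the main diagonal of the 2x2 image.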

3.4.2. Obtaining RGB Image from Binary Image

After the ΔE algorithm produces a binary image, the colored segmented image is obtained by multiplying the corresponding (one-to-one) elements of the segmented binary image with the input image, as in Equation (13):

$$I_{seg}(x, y) = I_{bin}(x, y) \times I(x, y) \qquad (13)$$

where × is the multiplicative operator, $I_{bin}$ is the binary image produced by segmentation, and $I$ is the original image. After the corresponding pixels have been multiplied, $I_{seg}$ is the output image.
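The element-wise multiplication can be written in a few lines. This is a minimal sketch assuming a 0/1 mask and an RGB image stored as nested lists of `(r, g, b)` tuples; the name `apply_mask` and the pixel values are hypothetical.

```python
def apply_mask(binary_mask, rgb_image):
    """Multiply each RGB pixel by the matching 0/1 mask value."""
    return [[tuple(m * c for c in px) for m, px in zip(mask_row, img_row)]
            for mask_row, img_row in zip(binary_mask, rgb_image)]


# Toy 2x2 example (illustrative values only).
rgb_image = [[(120, 60, 30), (40, 160, 50)],
             [(35, 150, 45), (110, 70, 35)]]
binary_mask = [[1, 0],
               [0, 1]]
segmented = apply_mask(binary_mask, rgb_image)
```

Pixels under a mask value of 1 keep their original color, while all other pixels become black, which leaves only the diseased region for feature extraction.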

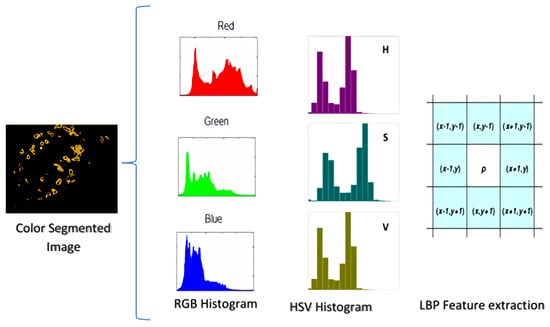

3.5. Feature Extraction

Color seems to be the best descriptor while dealing with plant images that are affected by the disease. Symptoms of diseases are distinguished chromatically. Our proposed technique deals with features based on the colors of input images. These characteristics are obtained from the segmented image’s histogram.

A histogram represents numerical data compactly and accurately. It is an estimate of the probability distribution of a continuous (quantitative) variable. The histogram of every channel is calculated for feature extraction. Additionally, as shown in Figure 1, the set of features is generated by concatenating the features into an array.

3.6. RGB and HSV Histogram Features

Plant images are captured in a three-channel RGB color space, with each channel containing unique information. The red channel of a picture contains more information, while the blue channel contains less. The histogram is a graphical representation of an image showing the frequency of each intensity level [67]. Because a histogram records only how often each intensity occurs, the position of each pixel is no longer significant. Due to this property, color histograms used as features are rotation invariant. The HSV features provide invariance to the illumination changes induced by different lighting conditions.

RGB features are calculated as in Equation (14),

where L is the total number of gray levels, and the histogram terms represent the frequency of the i-th gray level of the image’s R, G, and B channels.

The RGB image is converted to the HSV color space, and the individual channels are concatenated for the HSV histogram features. The concatenation is shown in Figure 4 and is expressed in Equation (15),

where L refers to the total number of gray levels, and the histogram terms represent the frequency of the i-th gray level of the image’s H, S, and V channels.

Figure 4.

Illustration of HSV histograms, RGB histograms, and LBP feature extraction.

Table 3 lists the feature sets. Each single RGB or HSV image channel has 256 attributes, equal to the number of gray levels in the images.

Table 3.

Feature sets with dimensions.

The feature sets cover the images’ red, green, blue, and concatenated RGB histograms. Likewise, the hue, saturation, value, and concatenated HSV histogram features form further feature sets; for both RGB and HSV attributes, the concatenated histogram has a dimension of 768. The feature set with 1536 dimensions is the combined set of RGB and HSV features. The {RGB, HSV} combined set is generated by putting the HSV histogram features first, then the RGB histogram features.
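The construction of the color feature vectors can be sketched as follows. The sketch assumes 8-bit channels with 256 gray levels each, quantizes the HSV components (computed with the standard-library `colorsys` module) back to 256 levels, and uses hypothetical function names and toy pixels; the paper's exact quantization is not stated.

```python
import colorsys


def channel_histogram(values, levels=256):
    """Frequency of each gray level in one channel."""
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    return hist


def rgb_hsv_histogram_features(pixels, levels=256):
    """Return (rgb_features, hsv_features) for an iterable of 8-bit (r, g, b) pixels."""
    pixels = list(pixels)
    # Concatenate the R, G, and B channel histograms.
    rgb_feat = []
    for ch in range(3):
        rgb_feat += channel_histogram([p[ch] for p in pixels], levels)
    # Convert to HSV and quantize each component to an integer level.
    hsv_pixels = []
    for r, g, b in pixels:
        hsv = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        hsv_pixels.append(tuple(min(levels - 1, int(c * (levels - 1) + 0.5)) for c in hsv))
    # Concatenate the H, S, and V channel histograms.
    hsv_feat = []
    for ch in range(3):
        hsv_feat += channel_histogram([p[ch] for p in hsv_pixels], levels)
    return rgb_feat, hsv_feat


# Toy segmented-region pixels (illustrative values only).
pixels = [(120, 60, 30), (110, 70, 35), (40, 160, 50), (0, 0, 0)]
rgb_feat, hsv_feat = rgb_hsv_histogram_features(pixels)
combined = hsv_feat + rgb_feat  # HSV features first, then RGB, as in the text
```

With 256 levels per channel, each concatenated histogram has 3 × 256 = 768 entries and the combined {RGB, HSV} vector has 1536.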

3.7. Local Binary Patterns

Local binary patterns (LBP) are textural descriptors extracted from images to construct a feature list. The LBP feature set is created by moving a window across the image and comparing the values of neighboring pixels with the central pixel as a baseline, then assigning binary values. If a neighboring pixel’s value is greater than the center pixel’s value, the neighboring pixel is assigned 1, otherwise 0. Furthermore, as given in Equations (16) and (17), the decimal number assigned to the central pixel is determined by the binary values of the neighboring pixels.

where the first term denotes the current (neighboring) pixel’s value and the second the value of the central pixel. For LBP calculations, P stands for the number of neighbors (binary digits) and R for the radius of the window. The neighbor index p sets the weight of each binary digit: with a neighborhood size of 8, as shown in Figure 4, p = 0 is the least significant digit, while p = 7 refers to the 8th, most significant neighbor and has the most weight.

T represents the window’s threshold, that is, the value of the window’s central pixel as the window is shifted over every pixel. The step function determines each neighbor’s binary value, and the weighted sum over the neighbors gives each pixel’s local binary value.

The LBP histograms are computed after the binary function. Each channel’s LBP histogram has 256 dimensions, and the combined LBP has 768 dimensions, while the concatenated RGB, HSV, and LBP feature set in f14 has 2304 dimensions. To form an LBP feature set, LBPs are determined for each RGB image channel as given in Equation (18).
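A basic 8-neighbor LBP for one channel can be sketched as below. The sketch follows the text in assigning 1 only when the neighbor is strictly greater than the center (many formulations use greater-or-equal instead), uses a radius of 1, skips border pixels, and fixes an arbitrary neighbor ordering; these choices and the function names are assumptions for illustration.

```python
def lbp_codes(gray):
    """8-neighbor, radius-1 LBP codes for the interior pixels of one channel."""
    # Neighbor offsets (row, col) in a fixed clockwise order; bit p has weight 2**p.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    rows, cols = len(gray), len(gray[0])
    codes = []
    for r in range(1, rows - 1):
        line = []
        for c in range(1, cols - 1):
            center = gray[r][c]
            code = 0
            for p, (dr, dc) in enumerate(offsets):
                # Bit is 1 when the neighbor is greater than the central pixel.
                if gray[r + dr][c + dc] > center:
                    code |= 1 << p
            line.append(code)
        codes.append(line)
    return codes


def lbp_histogram(gray, bins=256):
    """Histogram of LBP codes, used as the texture feature of one channel."""
    hist = [0] * bins
    for line in lbp_codes(gray):
        for code in line:
            hist[code] += 1
    return hist


# Toy 3x3 window (illustrative values only); the center pixel is 6.
gray = [[6, 5, 2],
        [7, 6, 1],
        [9, 8, 7]]
codes = lbp_codes(gray)
hist = lbp_histogram(gray)
```

For this window the neighbors 7, 8, 9, and 7 exceed the center, setting bits 4 to 7, so the code is 16 + 32 + 64 + 128 = 240. Repeating the histogram for the R, G, and B channels and concatenating gives the 768-dimensional LBP vector.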

3.8. Classification

The extracted features are used to train the classifiers. The classifiers are trained on the features of guava fruit images. The proposed system is trained using the K-fold cross-validation technique with a preselected value of K. Different classifiers, namely KNN, SVM, Complex tree, Boosted tree, and Bagged tree, are trained on the same feature set to see which one performs best. A total of 393 guava images are used for detection and classification, with 77, 83, 76, and 70 from Canker, Mummification, Dot, and Rust images, respectively, and 87 from healthy plant images.

For the SVM classifier, we used the cubic kernel. For the Fine KNN classifier, the number of neighbors is set to 1 with the Euclidean distance metric. The Boosted tree ensemble uses decision trees as learners, with 200 learners, a learning rate of 0.1, and subspace dimension 1. Boosted algorithms make use of shallow trees, which take less time and memory; consequently, they provide a more approximate solution. The Bagged tree ensemble uses a related configuration: 200 decision-tree learners, a learning rate of 0.1, and subspace dimension 1. The Bagged approach grows deep trees and needs more memory and training time, resulting in slow prediction. The Bagged tree ensemble classifier also estimates the generalization error without the need for additional cross-validation. The Complex tree uses a decision tree learner with the Gini diversity index as the split criterion and a maximum of 100 splits.
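The evaluation protocol can be illustrated with a small, dependency-free sketch of K-fold cross-validation around a one-nearest-neighbor classifier with Euclidean distance, in the spirit of the Fine KNN setting. The value k=5, the toy two-dimensional feature vectors, and all function names are assumptions made for illustration; the paper's feature vectors have up to 2304 dimensions and its value of K is not reproduced here.

```python
import math
import random


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle the sample indices and split them into k nearly equal folds."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def predict_1nn(train_x, train_y, query):
    """Label of the single nearest training sample (Euclidean distance)."""
    best = min(range(len(train_x)), key=lambda i: math.dist(train_x[i], query))
    return train_y[best]


def cross_validate_1nn(features, labels, k=5, seed=0):
    """Mean accuracy of 1-NN over k folds; each sample is tested exactly once."""
    folds = k_fold_indices(len(features), k, seed)
    correct = 0
    for held_out in folds:
        held = set(held_out)
        train_x = [features[i] for i in range(len(features)) if i not in held]
        train_y = [labels[i] for i in range(len(features)) if i not in held]
        correct += sum(predict_1nn(train_x, train_y, features[i]) == labels[i]
                       for i in held_out)
    return correct / len(features)


# Toy, well-separated feature vectors for two hypothetical classes.
features = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.3), (0.3, 0.2), (0.2, 0.2),
            (5.0, 5.1), (5.2, 4.9), (4.9, 5.3), (5.1, 5.0), (5.3, 5.2)]
labels = ["Nor"] * 5 + ["Rus"] * 5
folds = k_fold_indices(len(features), 5)
accuracy = cross_validate_1nn(features, labels, k=5)
```

Because every sample is held out exactly once, the returned value is the cross-validated accuracy over the whole dataset rather than over a single split.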

4. Results and Discussions

4.1. Dataset

With the support of a domain expert, we built a dataset of guava images to evaluate our proposed framework. A high-resolution digital single-lens reflex (DSLR) camera, Canon EOS 1300D (Canon Inc., Tokyo, Japan) with an 18 MP Complementary Metal Oxide Semiconductor (CMOS) sensor with a pixel density of 5.43 MP/cm2, was used to capture images. The segmented region’s textural features are extracted after the color histogram features have been checked for accuracy. The photographs are taken without a standard field of view and in a variety of lighting conditions. The different groups of the dataset are shown in Table 4. There are a total of 393 images in the dataset: 306 images of diseased guava plants and 87 images of healthy (normal) guava plants. There are four subcategories of infected plant images: Canker (77), Mummification (83), Dot (76), and Rust (70) images. Each image has a resolution of 300 dpi. The dataset used for this study is publicly available at [68].

Table 4.

Distribution of classes in the dataset.

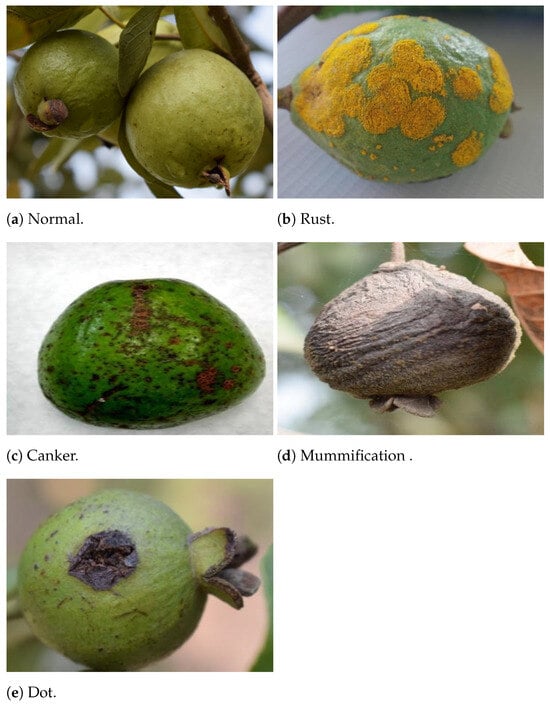

The sample images in Figure 5a are normal, and those in Figure 5b–e are infected with Rust, Canker, Mummification, and Dot, respectively.

Figure 5.

Sample images labeled with each target class (Guava disease).

4.2. Performance Evaluation
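The results below are reported in terms of accuracy and true positive rate (TPR). A minimal sketch of how both can be computed from a confusion matrix is given here, assuming the standard definitions (accuracy as the fraction of all samples classified correctly, TPR as the fraction of each true class classified correctly). The function name is hypothetical and the counts are made up for illustration; they are not results from this study.

```python
def accuracy_and_tpr(confusion, class_names):
    """confusion[i][j] = number of samples of true class i predicted as class j."""
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    # Per-class TPR: correctly predicted samples divided by all samples of that class.
    tpr = {name: confusion[i][i] / sum(confusion[i])
           for i, name in enumerate(class_names)}
    return correct / total, tpr


classes = ["Can", "Mum", "Dot", "Rus", "Nor"]
# Made-up counts for illustration only.
confusion = [
    [9, 1, 0, 0, 0],
    [0, 10, 0, 0, 0],
    [0, 0, 8, 2, 0],
    [0, 0, 0, 10, 0],
    [0, 0, 0, 0, 10],
]
acc, tpr = accuracy_and_tpr(confusion, classes)
```

In this toy matrix, 47 of 50 samples lie on the diagonal, so the accuracy is 0.94, while the per-class TPR is 0.9 for Can and 0.8 for Dot.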

4.3. Results

At the image level, the classifier divides the images (P) into normal (N) and diseased (A) categories. Furthermore, at the disease level, the classification technique assigns images to different diseases (Can, Mum, Dot, Rus, and Nor refer to Canker, Mummification, Dot, and Rust diseases and normal images, respectively).

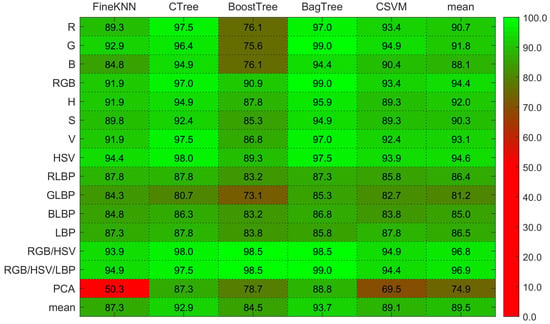

After feature extraction, the classifiers are trained. Table 5, Table 6, Table 7 and Table 8 show the image-level classification results on testing images. Ranks obtained using the Kruskal-Wallis Test based on the mean accuracy and the performance deviating from the mean of one classifier to the mean of other classifiers using hybrid features are presented in Table 9.

Table 5.

Results obtained with different classifiers using RGB histogram features for image-level classification.

Table 6.

Combined results obtained using HSV histogram features for image-level classification.

Table 7.

Results obtained with different classifiers using LBP features for image-level classification.

Table 8.

Results obtained with different classifiers using hybrid features for image-level classification.

Table 9.

Ranks obtained using the Kruskal-Wallis Test based on the mean accuracy and the performance deviating from the mean of one classifier to the mean of other classifiers using hybrid features.

Scale, illumination, and rotation variance can result from this inconsistency in image acquisition. The image-level accuracy on the LBP features is shown in Table 7. The Fine KNN, Complex Tree, and Bagged Tree ensemble methods are more effective with LBP features. On both color and texture descriptors, the Boosted tree ensemble approach performs poorly. Bagged tree and Complex tree outperform other classifiers on color (RGB, HSV) and texture (LBP) attributes.

The image-level classification performance for each separate RGB channel and combined RGB is shown in Table 5. The results show that the Bagged tree and Complex tree produce better results on RGB color features. Cubic SVM performs poorly on the R channel histogram features compared to Fine KNN. The image-level accuracy for the H, S, and V individual channel features and combined HSV features is shown in Table 6. Fine KNN, Bagged tree, and Complex tree perform well on the HSV color features.

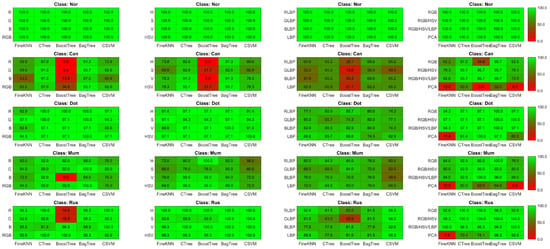

On RGB color features, Figure 6 shows that the Bagged tree ensemble approach outperforms the Boosted tree ensemble approach. The disease-level accuracy on the H, S, and V individual channels and combined HSV is also shown in Figure 6 and Figure 7. Complex tree outperforms Fine KNN and Cubic SVM on HSV color features. The disease-level accuracy on the LBP features is also represented in the same heat-maps. With LBP features, the Complex Tree is more accurate.

Figure 6.

Illustration of heat-maps accuracy comparison using RGB histogram, HSV histogram, LBP and hybrid feature vectors for Guava disease recognition.

Figure 7.

Illustration of heat-maps TPR comparison using RGB histogram, HSV histogram, LBP and hybrid feature vectors for Guava disease recognition.

Multiple disease class labels (Can, Mum, Dot, Rus, and Nor) are given to the training classifiers to achieve disease recognition. The Fine KNN, Complex Tree, SVM, Boosted tree, and Bagged tree classifiers are trained on the same selected features. The outcomes of classification on test data images are shown in Figure 6 and Figure 7. The Bagged tree and Complex Tree classifiers outperform the Fine KNN, Cubic SVM, and Boosted Tree classifiers at the disease level, as in the image-level classification results.

The classification results in Table 5, Table 6, Table 7 and Table 8 demonstrate the significance of color features. The disease-level results are compared as well. With the same parameters and classifiers, the combination of features reveals the advantages of color and textural features. Table 8 compares the performance of these hybrid features. All the experiments and results show that color and textural attributes are essential in identifying plant diseases. Combining these characteristics is also useful for detecting and locating diseased areas in plants.

The Bagged tree ensemble approach had a 99% success rate on the RGB features in disease-level performance, while on the HSV and LBP features, the Complex tree achieved 98% and 87.3% accuracy, respectively. The other classifiers, such as Boosted tree and Cubic SVM, perform well on RGB and HSV features but do not perform well on the LBP features. The Bagged tree ensemble method performed better than the Boosted tree.

Finally, Figure 7 shows the results for Guava disease recognition. The Bagged tree has achieved the best accuracy of 99% on a set of RGB, HSV, and LBP features, outperforming the other classification methods.

Overall, we can observe that using histogram features reduced the overfitting for the Rus target class. However, the normal class, in this case, is still overfitted with 100% accuracy. Similarly, the TPR was reduced to 87% for the Can target class. The HSV histogram feature vectors further address this phenomenon with 98.5% accuracy using Cubic SVM.

Similarly, LBP feature vectors show the worst accuracy for the Can class, with less than 70% accuracy using all classifiers. In contrast, the GLBP channel with the Complex Tree raises the TPR to 100%. The observation with LBP feature vectors was underfitting.

Fusing both histogram and LBP feature vectors with optimal parameter settings overcomes the underfitting and overfitting problems, with a 100% TPR using Fine KNN, Boosted Tree, and Bagged Tree. However, the remaining two classifiers are limited to a 99.1% TPR.

5. Conclusions

Guava diseases have become a significant problem because they can cause a significant drop in the quality and quantity of agricultural products, thus hindering sustainability in agriculture. Improper diagnosis can lead to substantial financial losses for farmers. In this paper, we presented a framework for recognizing and classifying diseases in guava plants. For evaluation, we used a high-resolution guava leaf and fruit dataset. We used ΔE segmentation and extracted color histogram (RGB, HSV) and textural (LBP) descriptors from the segmented regions. We used advanced classifiers such as Fine KNN, Cubic SVM, Complex tree, Boosted tree, and Bagged tree ensemble for image-level and disease-level classification. When using RGB and HSV color features, the Bagged tree ensemble classifier outperformed other classifiers. Furthermore, the Complex tree classifier outperformed other classifiers when using textural (LBP) features. In the case of the Bagged tree ensemble classifier, color features provided the best disease-level discrimination. Overall, the classification accuracy is 99%.

In the future, we intend to extend this work by employing deep learning methods to extract features automatically instead of using handcrafted features.

Author Contributions

Conceptualization, A.A. (Ahmad Almadhor) and H.T.R.; Funding acquisition, B.A. and A.A. (Abdullah Alharbi); Methodology, A.A. (Ahmad Almadhor), H.T.R., M.I.U.L., B.A. and A.A. (Abdullah Alharbi); Software, M.I.U.L., B.A. and A.A. (Abdullah Alharbi); Supervision, M.I.U.L. and R.D.; Validation, H.T.R. and R.D.; Visualization, H.T.R.; Writing—original draft, A.A. (Ahmad Almadhor), H.T.R., M.I.U.L., R.D., B.A. and A.A. (Abdullah Alharbi); Writing—review & editing, H.T.R. and R.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by Taif University Researchers Supporting Project number (TURSP-2020/231), Taif University, Taif, Saudi Arabia.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset used for this study is publicly available at [68].

Conflicts of Interest

The authors declare no conflict of interest.

References

- Mohan, K.; Muralisankar, T.; Uthayakumar, V.; Chandirasekar, R.; Revathi, N.; Ganesan, A.R.; Velmurugan, K.; Sathishkumar, P.; Jayakumar, R.; Seedevi, P. Trends in the extraction, purification, characterisation and biological activities of polysaccharides from tropical and sub-tropical fruits–A comprehensive review. Carbohydr. Polym. 2020, 238, 116185.

- Amusa, N.; Ashaye, O.; Oladapo, M.; Oni, M. Guava fruit anthracnose and the effects on its nutritional and market values in Ibadan, Nigeria. World J. Agric. Sci. 2005, 1, 169–172.

- Gupta, S.D.; Rai, J. Wilt Disease of Guava (Psidium guyava L.). Curr. Sci. 1947, 16, 256–258.

- Chibber, H. A working list of diseases of vegetable pests of some of the economic plants, occurring in the Bombay Presidency. Poona Agric. Coll. Mag. 1911, 2, 180–198.

- Ruehle, G.; Brewer, C. The FDA Method. Official Method of US Food and Drug Administration, US Department of Agriculture, and National Assn. of Insecticide and Disinfectant Manufacturers for Determination of Phenol Coefficients of Disinfectants; MacNair-Dorland Co.: New York, NY, USA, 1941; pp. 189–201.

- Rana, O. Diplodia stem canker, a new disease of guava in Tarai region of Uttar Pradesh. Sci. Cult. 1981, 47, 370–371.

- Misra, A. Guava diseases—Their symptoms, causes and management. In Diseases of Fruits and Vegetables: Volume II; Springer: Berlin/Heidelberg, Germany, 2004; pp. 81–119.

- Raza, S.A.; Ali, Y.; Mehboob, F. Role of Agriculture in Economic Growth of Pakistan. Int. Res. J. Financ. Econ. 2012, 83, 180–186.

- Pariona, A. Top Guava Producing Countries in the World. Worldatlas. 2017. Available online: https://www.worldatlas.com/articles/top-guava-producingcountries-in-the-world.html (accessed on 17 July 2018).

- Pujari, J.D.; Yakkundimath, R.; Byadgi, A.S. Grading and classification of anthracnose fungal disease of fruits based on statistical texture features. Int. J. Adv. Sci. Technol. 2013, 52, 121–132.

- Gilligan, C.A. Sustainable agriculture and plant diseases: An epidemiological perspective. Philos. Trans. R. Soc. B Biol. Sci. 2007, 363, 741–759.

- Adenugba, F.; Misra, S.; Maskeliūnas, R.; Damaševičius, R.; Kazanavičius, E. Smart irrigation system for environmental sustainability in Africa: An Internet of Everything (IoE) approach. Math. Biosci. Eng. 2019, 16, 5490–5503.

- Zhou, R.; Kaneko, S.; Tanaka, F.; Kayamori, M.; Shimizu, M. Disease detection of Cercospora Leaf Spot in sugar beet by robust template matching. Comput. Electron. Agric. 2014, 108, 58–70.

- Ali, H.; Lali, M.; Nawaz, M.Z.; Sharif, M.; Saleem, B. Symptom based automated detection of citrus diseases using color histogram and textural descriptors. Comput. Electron. Agric. 2017, 138, 92–104.

- Barr, M. A Novel Technique for Segmentation of High Resolution Remote Sensing Images Based on Neural Networks. Neural Process. Lett. 2020, 52, 679–692.

- Horwath, J.P.; Zakharov, D.N.; Mégret, R.; Stach, E.A. Understanding important features of deep learning models for segmentation of high-resolution transmission electron microscopy images. Npj Comput. Mater. 2020, 6.

- Hamuda, E.; Glavin, M.; Jones, E. A survey of image processing techniques for plant extraction and segmentation in the field. Comput. Electron. Agric. 2016, 125, 184–199.

- Patrício, D.I.; Rieder, R. Computer vision and artificial intelligence in precision agriculture for grain crops: A systematic review. Comput. Electron. Agric. 2018, 153, 69–81.

- Ngugi, L.C.; Abdelwahab, M.; Abo-Zahhad, M. Recent advances in image processing techniques for automated leaf pest and disease recognition—A review. Inf. Process. Agric. 2021, 8, 27–51.

- Gabryel, M.; Damaševičius, R. The Image Classification with Different Types of Image Features; Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Zakopane, Poland, 2017; Volume 10245, pp. 497–506.

- Naranjo-Torres, J.; Mora, M.; Hernández-García, R.; Barrientos, R.J.; Fredes, C.; Valenzuela, A. A Review of Convolutional Neural Network Applied to Fruit Image Processing. Appl. Sci. 2020, 10, 3443.

- Koirala, A.; Walsh, K.B.; Wang, Z.; McCarthy, C. Deep learning—Method overview and review of use for fruit detection and yield estimation. Comput. Electron. Agric. 2019, 162, 219–234.

- Bhargava, A.; Bansal, A. Fruits and vegetables quality evaluation using computer vision: A review. J. King Saud Univ. Comput. Inf. Sci. 2021, 33, 243–257.

- Cruz, A.C.; Luvisi, A.; Bellis, L.D.; Ampatzidis, Y. X-FIDO: An Effective Application for Detecting Olive Quick Decline Syndrome with Deep Learning and Data Fusion. Front. Plant Sci. 2017, 8.

- Pawar, R.; Jadhav, A. Pomogranite disease detection and classification. In Proceedings of the 2017 IEEE International Conference on Power, Control, Signals and Instrumentation Engineering (ICPCSI), Chennai, India, 21–22 September 2017; pp. 2475–2479.

- Zhou, H.; Zhuang, Z.; Liu, Y.; Liu, Y.; Zhang, X. Defect Classification of Green Plums Based on Deep Learning. Sensors 2020, 20, 6993.

- Li, D.; Wang, R.; Xie, C.; Liu, L.; Zhang, J.; Li, R.; Wang, F.; Zhou, M.; Liu, W. A Recognition Method for Rice Plant Diseases and Pests Video Detection Based on Deep Convolutional Neural Network. Sensors 2020, 20, 578.

- Fuentes, A.; Yoon, S.; Kim, S.; Park, D. A Robust Deep-Learning-Based Detector for Real-Time Tomato Plant Diseases and Pests Recognition. Sensors 2017, 17, 2022.

- Ramcharan, A.; Baranowski, K.; McCloskey, P.; Ahmed, B.; Legg, J.; Hughes, D.P. Deep Learning for Image-Based Cassava Disease Detection. Front. Plant Sci. 2017, 8.

- Singh, U.P.; Chouhan, S.S.; Jain, S.; Jain, S. Multilayer Convolution Neural Network for the Classification of Mango Leaves Infected by Anthracnose Disease. IEEE Access 2019, 7, 43721–43729.

- Chen, J.; Liu, Q.; Gao, L. Visual Tea Leaf Disease Recognition Using a Convolutional Neural Network Model. Symmetry 2019, 11, 343.

- Jiang, P.; Chen, Y.; Liu, B.; He, D.; Liang, C. Real-Time Detection of Apple Leaf Diseases Using Deep Learning Approach Based on Improved Convolutional Neural Networks. IEEE Access 2019, 7, 59069–59080.

- Iqbal, Z.; Khan, M.A.; Sharif, M.; Shah, J.H.; ur Rehman, M.H.; Javed, K. An automated detection and classification of citrus plant diseases using image processing techniques: A review. Comput. Electron. Agric. 2018, 153, 12–32.

- Capizzi, G.; Lo Sciuto, G.; Napoli, C.; Tramontana, E.; Woźniak, M. A novel neural networks-based texture image processing algorithm for orange defects classification. Int. J. Comput. Sci. Appl. 2016, 13, 45–60.

- Lili, N.; Khalid, F.; Borhan, N. Classification of herbs plant diseases via hierarchical dynamic artificial neural network after image removal using kernel regression framework. Int. J. Comput. Sci. Eng. 2011, 3, 15–20.

- Aleixos, N.; Blasco, J.; Navarron, F.; Moltó, E. Multispectral inspection of citrus in real-time using machine vision and digital signal processors. Comput. Electron. Agric. 2002, 33, 121–137.

- Barbedo, J.G.A. Digital image processing techniques for detecting, quantifying and classifying plant diseases. SpringerPlus 2013, 2, 1–12.

- Arivazhagan, S.; Shebiah, R.N.; Ananthi, S.; Varthini, S.V. Detection of unhealthy region of plant leaves and classification of plant leaf diseases using texture features. Agric. Eng. Int. CIGR J. 2013, 15, 211–217.

- Dey, A.K.; Sharma, M.; Meshram, M. Image processing based leaf rot disease, detection of betel vine (Piper betle L.). Procedia Comput. Sci. 2016, 85, 748–754.

- VijayaLakshmi, B.; Mohan, V. Kernel-based PSO and FRVM: An automatic plant leaf type detection using texture, shape, and color features. Comput. Electron. Agric. 2016, 125, 99–112.

- Bhange, M.; Hingoliwala, H. Smart farming: Pomegranate disease detection using image processing. Procedia Comput. Sci. 2015, 58, 280–288.

- Wu, L.; Wen, Y.; Deng, X.; Peng, H. Identification of weed/corn using BP network based on wavelet features and fractal dimension. Sci. Res. Essays 2009, 4, 1194–1200.

- Pydipati, R.; Burks, T.; Lee, W. Identification of citrus disease using color texture features and discriminant analysis. Comput. Electron. Agric. 2006, 52, 49–59.

- Napoli, C.; Pappalardo, G.; Tramontana, E.; Marszalek, Z.; Polap, D.; Wozniak, M. Simplified firefly algorithm for 2D image key-points search. In Proceedings of the 2014 IEEE Symposium on Computational Intelligence for Human-like Intelligence (CIHLI), Orlando, FL, USA, 9–12 December 2014.

- Zhang, H.; Wu, Q.M.J.; Zheng, Y.; Nguyen, T.M.; Wang, D. Effective fuzzy clustering algorithm with Bayesian model and mean template for image segmentation. IET Image Process. 2014, 8, 571–581.

- Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.

- Guyer, D.; Miles, G.; Gaultney, L.; Schreiber, M. Application of machine vision to shape analysis in leaf and plant identification. Trans. ASAE 1993, 36, 163–172.

- Crowe, T.; Delwiche, M. Real-time defect detection in fruit—Part II: An algorithm and performance of a prototype system. Trans. ASAE 1996, 39, 2309–2317.

- Sweet, H.C.; Edwards, G.J. Citrus blight assessment using a microcomputer; quantifying damage using an apple computer to solve reflectance spectra of entire trees. Fla Sci. 1986, 49, 48–54.

- Guru, D.; Mallikarjuna, P.; Manjunath, S. Segmentation and classification of tobacco seedling diseases. In Proceedings of the Fourth Annual ACM Bangalore Conference, Bangalore, India, 25–26 March 2011; pp. 1–5.

- Moshou, D.; Bravo, C.; Oberti, R.; West, J.; Ramon, H.; Vougioukas, S.; Bochtis, D. Intelligent multi-sensor system for the detection and treatment of fungal diseases in arable crops. Biosyst. Eng. 2011, 108, 311–321.

- Khamparia, A.; Saini, G.; Gupta, D.; Khanna, A.; Tiwari, S.; de Albuquerque, V.H.C. Seasonal Crops Disease Prediction and Classification Using Deep Convolutional Encoder Network. Circuits Syst. Signal Process. 2020, 39, 818–836.

- Qin, J.; Burks, T.F.; Ritenour, M.A.; Bonn, W.G. Detection of citrus canker using hyperspectral reflectance imaging with spectral information divergence. J. Food Eng. 2009, 93, 183–191.

- Garcia-Ruiz, F.; Sankaran, S.; Maja, J.M.; Lee, W.S.; Rasmussen, J.; Ehsani, R. Comparison of two aerial imaging platforms for identification of Huanglongbing-infected citrus trees. Comput. Electron. Agric. 2013, 91, 106–115.

- Hossain, E.; Hossain, M.F.; Rahaman, M.A. A color and texture based approach for the detection and classification of plant leaf disease using KNN classifier. In Proceedings of the International Conference on Electrical, Computer and Communication Engineering (ECCE), Cox's Bazar, Bangladesh, 7–9 February 2019; pp. 1–6.

- Rauf, H.T.; Saleem, B.A.; Lali, M.I.U.; Khan, M.A.; Sharif, M.; Bukhari, S.A.C. A citrus fruits and leaves dataset for detection and classification of citrus diseases through machine learning. Data Brief 2019, 26, 104340.

- Leitzke Betemps, D.; Vahl de Paula, B.; Parent, S.É.; Galarça, S.P.; Mayer, N.A.; Marodin, G.A.; Rozane, D.E.; Natale, W.; Melo, G.W.B.; Parent, L.E.; et al. Humboldtian diagnosis of peach tree (prunus persica) nutrition using machine-learning and compositional methods. Agronomy 2020, 10, 900.

- Mary, N.A.B.; Singh, A.R.; Athisayamani, S. Classification of Banana Leaf Diseases Using Enhanced Gabor Feature Descriptor. In Inventive Communication and Computational Technologies; Springer: Berlin/Heidelberg, Germany, 2020; pp. 229–242.

- Pham, T.N.; Van Tran, L.; Dao, S.V.T. Early Disease Classification of Mango Leaves Using Feed-Forward Neural Network and Hybrid Metaheuristic Feature Selection. IEEE Access 2020, 8, 189960–189973.

- Singh, S.; Gupta, S.; Tanta, A.; Gupta, R. Extraction of Multiple Diseases in Apple Leaf Using Machine Learning. Int. J. Image Graph. 2021, 2140009.

- Kianat, J.; Khan, M.A.; Sharif, M.; Akram, T.; Rehman, A.; Saba, T. A Joint Framework of Feature Reduction and Robust Feature Selection for Cucumber Leaf Diseases Recognition. Optik 2021, 166566.

- Oyewola, D.O.; Dada, E.G.; Misra, S.; Damaševičius, R. Detecting cassava mosaic disease using a deep residual convolutional neural network with distinct block processing. PeerJ Comput. Sci. 2021, 7, 1–15.

- Rehman, Z.U.; Khan, M.A.; Ahmed, F.; Damaševičius, R.; Naqvi, S.R.; Nisar, W.; Javed, K. Recognizing apple leaf diseases using a novel parallel real-time processing framework based on MASK RCNN and transfer learning: An application for smart agriculture. IET Image Process. 2021.

- Lee, K.; Seong, J.; Han, Y.; Lee, W.H. Evaluation of Applicability of Various Color Space Techniques of UAV Images for Evaluating Cool Roof Performance. Energies 2020, 13, 4213.

- Shaik, K.B.; Ganesan, P.; Kalist, V.; Sathish, B.; Jenitha, J.M.M. Comparative study of skin color detection and segmentation in HSV and YCbCr color space. Procedia Comput. Sci. 2015, 57, 41–48.

- Cugmas, B.; Štruc, E. Accuracy of an Affordable Smartphone-Based Teledermoscopy System for Color Measurements in Canine Skin. Sensors 2020, 20, 6234.

- Pizer, S.M.; Amburn, E.P.; Austin, J.D.; Cromartie, R.; Geselowitz, A.; Greer, T.; ter Haar Romeny, B.; Zimmerman, J.B.; Zuiderveld, K. Adaptive histogram equalization and its variations. Comput. Vision Graph. Image Process. 1987, 39, 355–368.

- Rauf, H.T. A Guava Fruits and Leaves Dataset for Detection and Classification of Guava Diseases through Machine Learning. Mendeley Data 2021.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).