Hyperspectral Three-Dimensional Fluorescence Imaging Using Snapshot Optical Tomography

Abstract

1. Introduction

2. Related Work

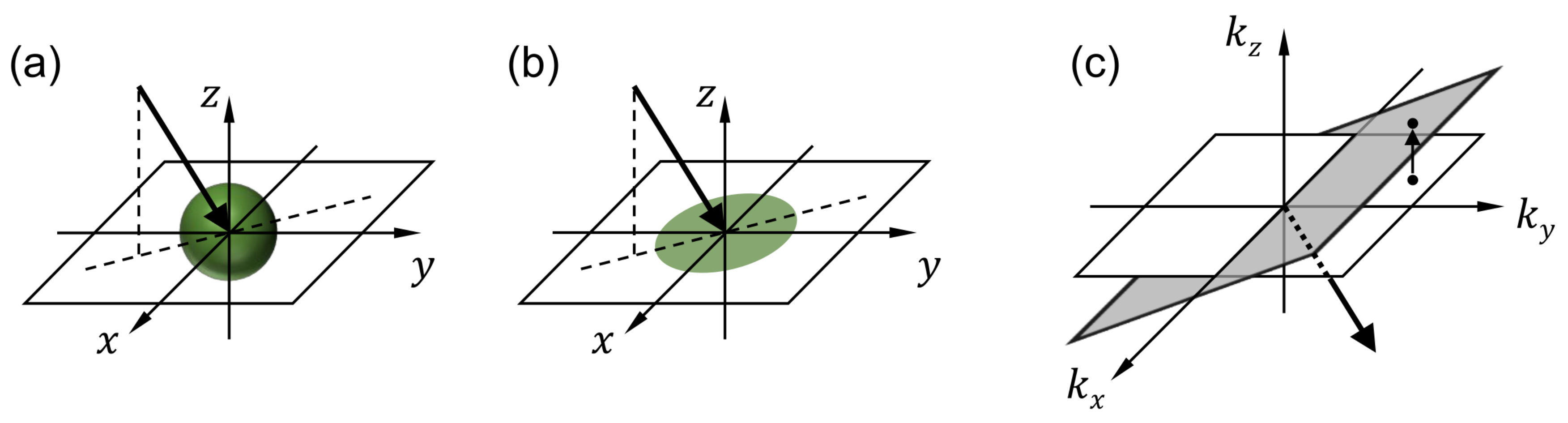

2.1. Snapshot Tomography: Snapshot Volumetric Imaging of 3D Internal Structure

2.2. Hyperspectral Three-Dimensional Imaging

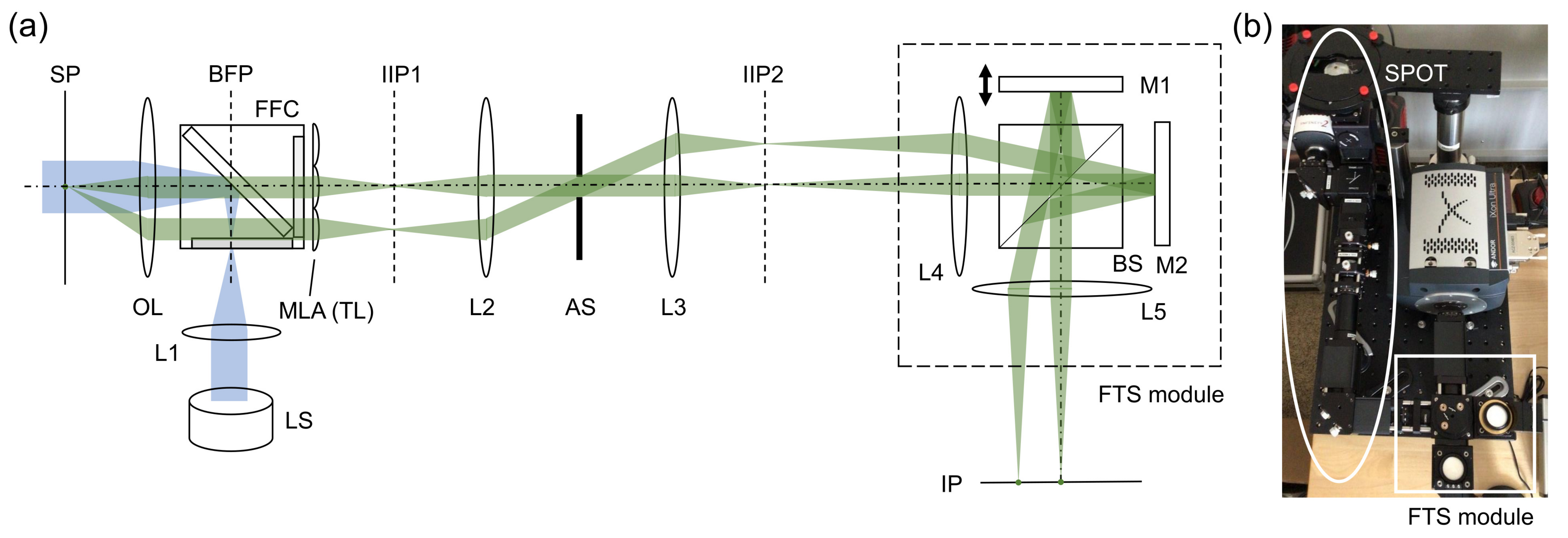

3. Snapshot Projection Optical Tomography (SPOT) Combined with Fourier-Transform Spectroscopy (FTS) Module

3.1. System Design

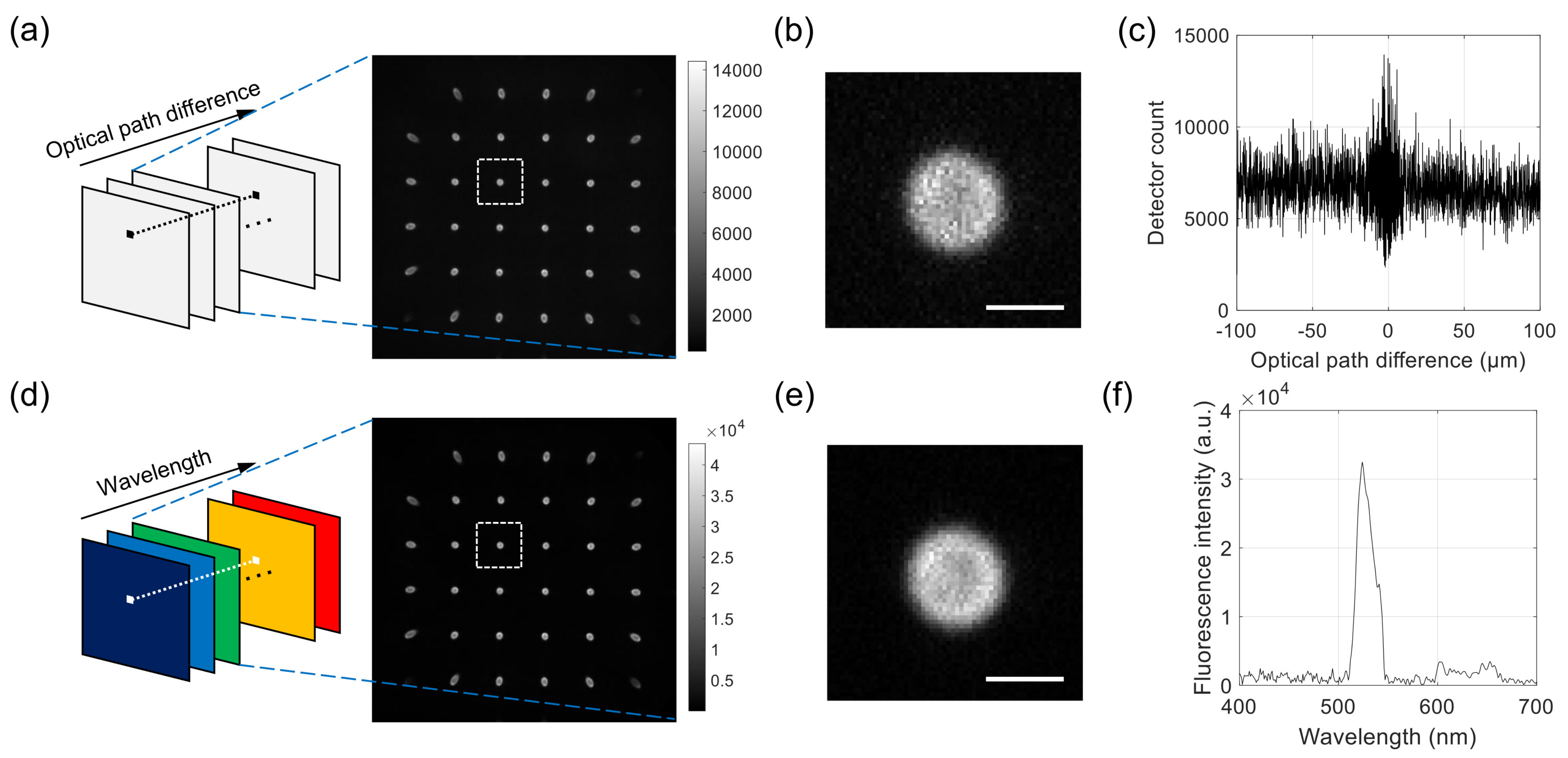

3.2. Data Acquisition

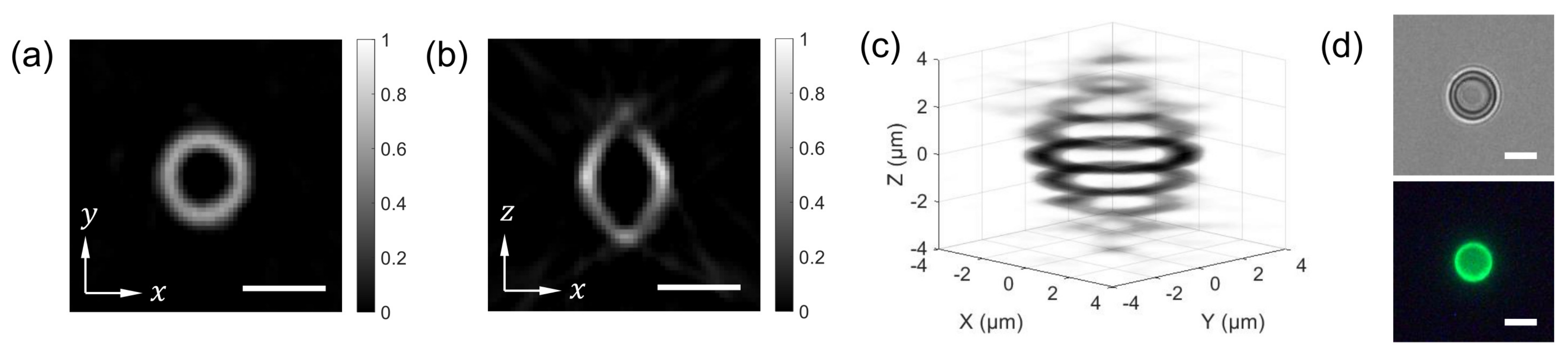

3.3. Data Processing

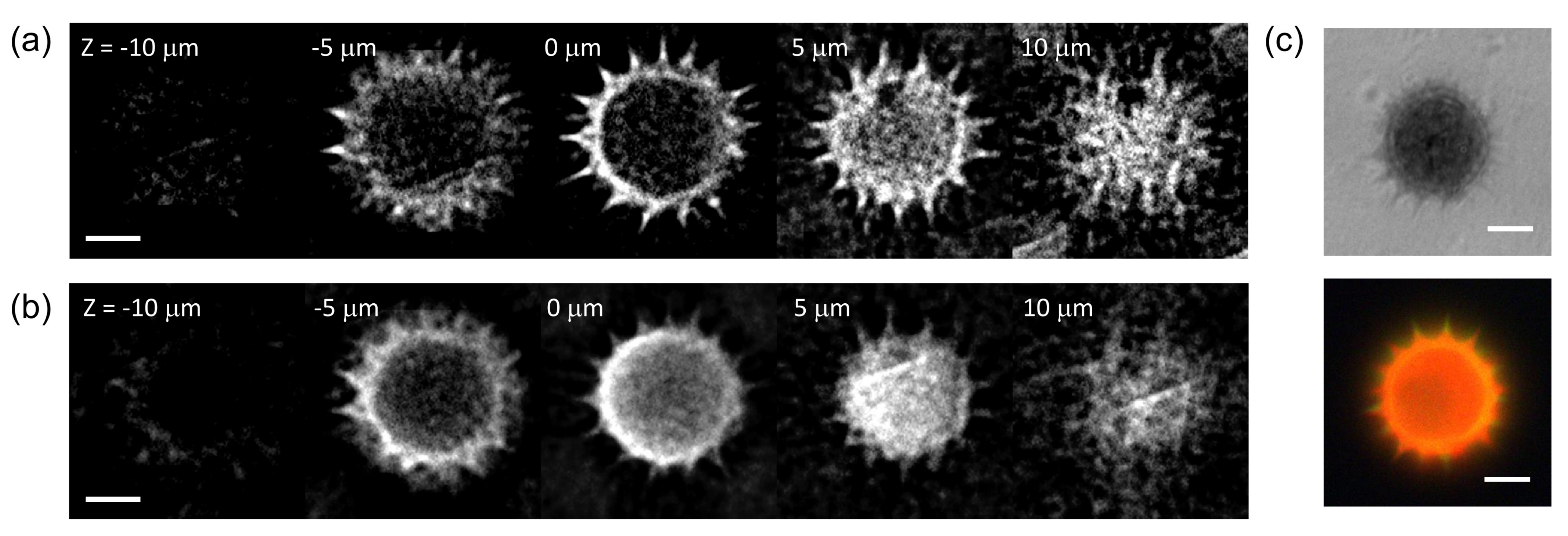

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| FTS | Fourier-transform spectroscopy |
| LFM | Light-field microscopy |
| SPOT | Snapshot projection optical tomography |
| MLA | Micro-lens array |
| OPD | Optical path difference |
| sCMOS | Scientific complementary metal–oxide–semiconductor |
| EMCCD | Electron-multiplying charge-coupled device |
| NUFFT | Non-uniform fast Fourier transform |
| CT | Computed tomography |


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Juntunen, C.; Woller, I.M.; Sung, Y. Hyperspectral Three-Dimensional Fluorescence Imaging Using Snapshot Optical Tomography. Sensors 2021, 21, 3652. https://doi.org/10.3390/s21113652
