In this section, we first state the WSIS problem and then describe the proposed WS-RCNN framework. We next detail its key components, namely the Attention-Guided Pseudo Labeling and the Entropic Open-Set Loss. Finally, we present some remarks.

3.1. Problem Statement

Given a set of classes of interest

and a training set

, where

is an image and

is the corresponding multi-class label vector, the task of weakly supervised instance segmentation (WSIS) in our work can be roughly stated as segmenting, for an input test image, all the object instances belonging to the classes

. Such a problem setting differs intrinsically from general instance segmentation [2, 4] in that no pixel-wise instance annotations but only image-level labels are available for model training, which makes the task very challenging.

Like general instance segmentation, WSIS can also follow a proposal-based paradigm [8, 13, 33], which can be summarized as the three-step pipeline mentioned above. These approaches can be viewed as retrieving true object instances from a pool of proposals according to the assigned scores. Central to them is proposal scoring, i.e., how to appropriately assign classification scores to proposals. One commonly used strategy for proposal scoring is to exploit the well-established localization ability of CNNs [8, 16, 18]. Specifically, the training set with image-level labels is first used to train an image-level CNN classifier, from which a collection of class-specific attention maps is derived to assign classification scores to the proposals. For this purpose, it is desirable that these attention maps preserve object shapes, which is, however, a difficult perceptual grouping task. In addition, the hand-crafted scoring rules adopted by existing methods are also limited. These facts motivate us to propose the WS-RCNN framework.

3.2. The Proposed WS-RCNN Framework

The basic idea of WS-RCNN is to deploy a deep network that learns to score proposals under weak supervision, instead of relying on heuristic proposal scoring strategies. One major obstacle to this goal is the absence of the proposal-level labels required for training. To overcome this challenge, we develop an effective strategy, called Attention-Guided Pseudo Labeling (AGPL), which takes advantage of the attention maps associated with the image-level CNN classifier to infer proposal-level pseudo labels. Furthermore, we introduce an Entropic Open-Set Loss (EOSL) to handle background proposals in training and thereby further improve the robustness of our framework. In the following, we first present an overview of WS-RCNN and then detail the AGPL strategy and the EOSL.

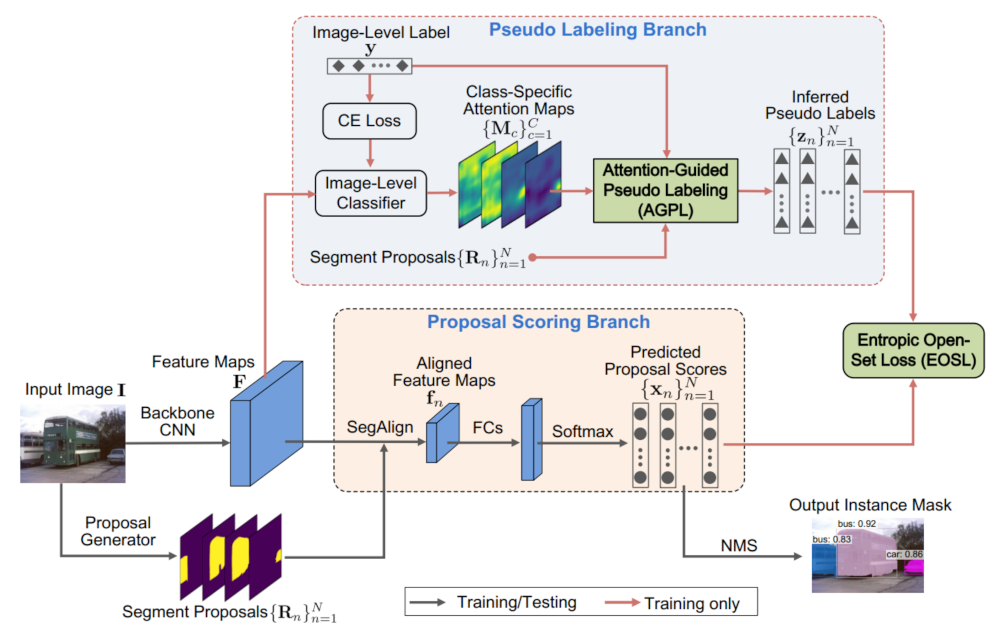

Network Architecture: The overall network architecture of WS-RCNN is shown in Figure 2. Following the notations above, the input image

sized by

is first fed into a proposal generator (using the off-the-shelf method [51, 52] in our implementation) to obtain the segment proposals

, where each

is an

binary mask representing a segment proposal of arbitrary shape (rather than a regular bounding box). The image then goes through a backbone CNN for feature extraction, yielding the feature maps

, where

is the size and

M the number of the feature maps. Afterwards, the network bifurcates into two branches, i.e., the proposal scoring branch and the pseudo labeling branch. Notice that these two branches share the same backbone CNN.

In the proposal scoring branch, the features corresponding to each individual proposal are extracted. A standard operation for this task is RoIAlign [2], widely used in two-stage object detectors; however, it cannot be directly applied to our case since it is designed for bounding-box proposals. Therefore, we modify RoIAlign to handle segment proposals, resulting in the SegAlign operation (see details below). For each proposal

, the corresponding features can be extracted from

and aligned to a canonical grid via SegAlign, denoted by

(we use h = w = 7; M = 512 in this paper), which is followed by three fully-connected layers (FCs, with the node numbers being 4096, 4096 and C respectively) and a softmax layer to get the proposal-level classification score

.

The pseudo labeling branch is executed for training only, where the feature maps

are followed by an image-level classifier. Then, a set of class-specific attention maps, denoted by

, are extracted from this classifier, where each

reflects the spatial probability of occurrence of object instances belonging to the class c. Among possible choices of attention maps, we adopt the Class Peak Responses [8] in our implementation due to their excellent localization ability. These attention maps (as well as the image-level label

) are then utilized to infer the proposal-level pseudo class labels

, where

is a one-hot vector

standing for the background class), by the use of AGPL.

Training Strategy: We adopt a two-phase training strategy to train the WS-RCNN model. In the first phase, we train the image-level classifier in the pseudo labeling branch, which is initialized with a model pre-trained on ImageNet. Proposal-level pseudo labels are then inferred from the trained image-level classifier using AGPL. In the second phase, we train the proposal scoring branch, where the backbone CNN is reinitialized with the model pre-trained on ImageNet. We validate the effectiveness of this two-phase training strategy by comparative experiments in Section 4.3. Note that since there are usually significantly more background proposals than target-class ones after pseudo labeling, we always equalize their numbers by uniformly sampling background proposals.
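The balancing of background and target-class proposals can be illustrated with a minimal sketch. The function name `balance_proposals`, the use of `None` to encode the background pseudo label, and the fixed random seed are assumptions made for illustration only; they are not taken from the original implementation.

```python
import random


def balance_proposals(pseudo_labels, seed=0):
    """Keep all target-class proposals and an equal number of background ones.

    pseudo_labels: one entry per proposal; a class index for a target class,
    or None for background (an encoding assumed for this sketch).
    Returns the sorted indices of the proposals kept for training.
    """
    rng = random.Random(seed)
    target = [i for i, lab in enumerate(pseudo_labels) if lab is not None]
    background = [i for i, lab in enumerate(pseudo_labels) if lab is None]
    # Uniformly sample as many background proposals as there are target ones
    # (or all of them, if fewer background proposals are available).
    k = min(len(target), len(background))
    return sorted(target + rng.sample(background, k))
```

With two target-class proposals and six background proposals, the function returns four indices: the two target-class ones plus two randomly chosen background ones.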

Training Loss: For the training of the image-level classifier (the first-phase training), we use the given image-level labels and the conventional cross-entropy loss function for multi-label classification to establish the training loss.

For the training of the proposal scoring branch (the second-phase training), suppose for the image

labeled with

in the training set

, the proposals obtained are

. For each

, let us denote by

the classification score predicted by the proposal scoring branch, and by

n the pseudo class label inferred by the pseudo labeling branch using AGPL. Given all these, the training loss for the proposal scoring branch can be established by

where

is the proposed Entropic Open-Set Loss (see details in Section 3.4).

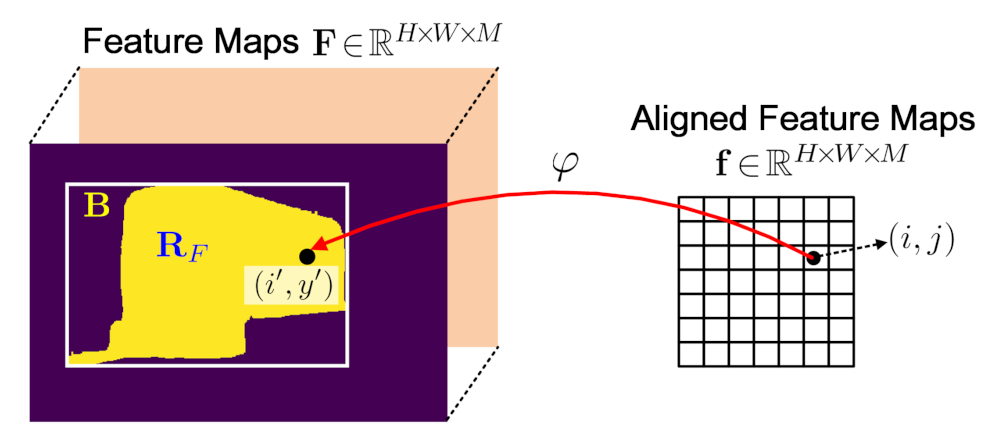

SegAlign: As shown in Figure 3, following the notations above, suppose for a segment mask

in the

image

, the corresponding receptive field mapped to the feature maps

is

. SegAlign extracts from

the features corresponding to

and maps them to canonical feature maps

, which is basically a modified RoIAlign to adapt to segment masks. Concretely, suppose

is bounded by the rectangle

,

is the bilinear transform from the spatial coordinates

to

, i.e.,

, and

g is the bilinear interpolation function over

. The SegAlign operation can then be defined by

Note that we drop the channel dimension of the feature maps above for notational simplicity.
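The following single-channel sketch illustrates one plausible reading of SegAlign and should not be taken as the exact definition. It assumes that the canonical h x w grid is mapped linearly onto the bounding rectangle of the mask, that features are sampled by bilinear interpolation, and that samples falling outside the segment mask are set to zero. The names `bilinear` and `seg_align`, and the nearest-cell test for mask membership, are hypothetical.

```python
def bilinear(fmap, y, x):
    """Bilinearly interpolate the 2D list `fmap` at continuous coords (y, x)."""
    rows, cols = len(fmap), len(fmap[0])
    y = min(max(y, 0.0), rows - 1.0)
    x = min(max(x, 0.0), cols - 1.0)
    y0, x0 = int(y), int(x)
    y1, x1 = min(y0 + 1, rows - 1), min(x0 + 1, cols - 1)
    dy, dx = y - y0, x - x0
    top = fmap[y0][x0] * (1 - dx) + fmap[y0][x1] * dx
    bottom = fmap[y1][x0] * (1 - dx) + fmap[y1][x1] * dx
    return top * (1 - dy) + bottom * dy


def seg_align(fmap, mask, h=7, w=7):
    """Resample the masked region of `fmap` onto an h x w canonical grid.

    fmap: one feature channel as a 2D list.
    mask: non-empty binary mask (2D list of 0/1) at feature-map resolution.
    """
    ys = [r for r, row in enumerate(mask) if any(row)]
    xs = [c for row in mask for c, v in enumerate(row) if v]
    y_min, y_max, x_min, x_max = min(ys), max(ys), min(xs), max(xs)
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            # Map the canonical cell (i, j) into the bounding rectangle.
            y = y_min + (y_max - y_min) * (i / (h - 1) if h > 1 else 0.5)
            x = x_min + (x_max - x_min) * (j / (w - 1) if w > 1 else 0.5)
            # Assumption: keep the sample only if it lands inside the mask.
            if mask[int(round(y))][int(round(x))]:
                out[i][j] = bilinear(fmap, y, x)
    return out
```

Compared with RoIAlign on the bounding rectangle alone, the only difference in this sketch is the mask test, which zeroes out features that belong to the rectangle but not to the segment.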

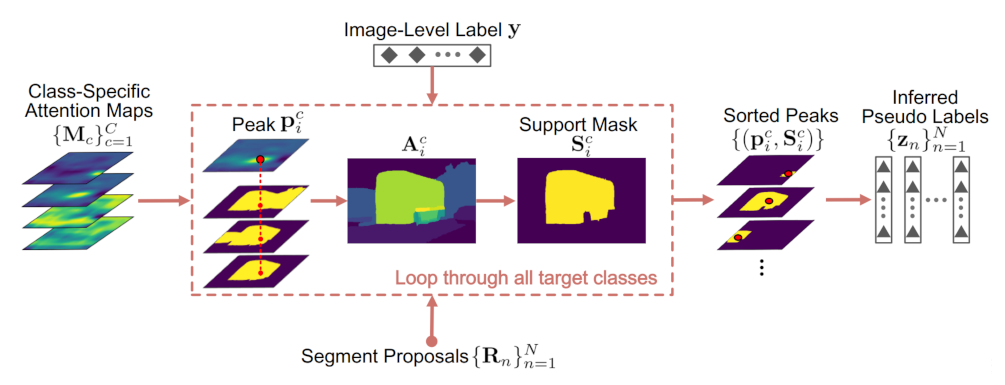

3.3. Attention-Guided Pseudo Labeling

AGPL leverages the localization ability of CNNs and the spatial relationship among proposals to achieve pseudo labeling. As shown in Figure 4, for the image

, given the class label vector

, the segment proposals

and the class-specific attention maps

, AGPL can be outlined as follows:

(1) For each target class

c (with

), all the local maxima (peaks) are identified from

, denoted as

, where

stands for pixel coordinates and

the number of peaks. For each

, we pick all the proposals that spatially contain this point, which are then averaged and thresholded to get a support mask

as follows

where

is the number of picked proposals corresponding to

,

p and

q are pixel indices, and the threshold

is a parameter (we adopt

in our implementation; see Section 4.4 for the parameter study). The resulting peaks

and associated support masks

are then utilized to admit proposals belonging to the class

c.

(2) Sort all the peaks

in the descending order of their values in the attention maps, i.e.,

. Then, for each ordered peak

and the associated

, those proposals which overlap sufficiently with

are labeled as the class

c, i.e.,

if

where IoU stands for the Intersection-over-Union operation. Note that each proposal can be assigned to at most one class during the ordered labeling. For clarity, we summarize the AGPL procedure above in Algorithm 1.
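The two steps above can be sketched in plain Python as follows. This is a simplified illustration under several assumptions: proposals and support masks are represented as sets of (row, col) pixels, a peak is a positive response strictly greater than its eight neighbours, and both the averaging threshold `tau` and the IoU threshold `iou_thr` default to 0.5 as placeholder values. All function and parameter names are hypothetical.

```python
def find_peaks(att):
    """Pixels with a positive response strictly greater than all 8 neighbours."""
    rows, cols = len(att), len(att[0])
    peaks = []
    for r in range(rows):
        for c in range(cols):
            nbrs = [att[rr][cc]
                    for rr in range(max(r - 1, 0), min(r + 2, rows))
                    for cc in range(max(c - 1, 0), min(c + 2, cols))
                    if (rr, cc) != (r, c)]
            if att[r][c] > 0 and all(att[r][c] > v for v in nbrs):
                peaks.append((r, c))
    return peaks


def support_mask(peak, proposals, tau):
    """Average the proposals containing `peak`, then threshold at `tau`."""
    picked = [p for p in proposals if peak in p]
    counts = {}
    for p in picked:
        for px in p:
            counts[px] = counts.get(px, 0) + 1
    return {px for px, k in counts.items() if k / len(picked) >= tau}


def iou(a, b):
    """Intersection-over-Union of two pixel sets."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def agpl(label_vec, proposals, att_maps, tau=0.5, iou_thr=0.5):
    """Return one pseudo label per proposal (None stands for background)."""
    peaks = []  # entries: (peak response, class index, support mask)
    for c, present in enumerate(label_vec):
        if not present:
            continue  # only classes present in the image-level label
        for (r, col) in find_peaks(att_maps[c]):
            mask = support_mask((r, col), proposals, tau)
            peaks.append((att_maps[c][r][col], c, mask))
    # Visit peaks from the strongest response to the weakest.
    peaks.sort(key=lambda t: t[0], reverse=True)
    labels = [None] * len(proposals)
    for _, c, mask in peaks:
        for n, prop in enumerate(proposals):
            # A proposal keeps the label given by the first matching peak.
            if labels[n] is None and iou(prop, mask) > iou_thr:
                labels[n] = c
    return labels
```

Because the peaks are visited in descending order of response and an already labeled proposal is skipped, each proposal ends up with at most one class, and proposals matching no support mask remain background.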

3.4. Entropic Open-Set Loss

Since our WS-RCNN is proposal-based, the proposals after pseudo labeling will unavoidably contain some background proposals, i.e., those labeled as none of the target classes. It is necessary to handle these background proposals in model training; otherwise, a model trained only with samples from target classes will be distracted by the unseen background proposals in testing, degrading its robustness. A natural solution is to add a dummy class to the model to accommodate background proposals. However, since the background is a class of “stuff”, its variance is so large that it is hard to model with any single class.

Algorithm 1 Attention-Guided Pseudo Labeling (AGPL)

- Input: The label vector , the segment proposals and the class-specific attention maps (associated with the image ); the parameter .
- Output: The one-hot pseudo class labels for the proposals.
- 1: Initialize , .
- 2: for all c with (target class) do
- 3: Find the local maxima in ;
- 4: for do
- 5: Calculate the support mask using Equations (3) and (4);
- 6: ;
- 7: end for
- 8: end for
- 9: Sort in the descending order of the values , denoted by the sorted set.
- 10: ;
- 11: for all do
- 12: Find the proposals indexed by satisfying Equation (5);
- 13: Set ;
- 14: ;
- 15: end for

To address this issue, we observe that the task of background handling here is by nature an open-set recognition (OSR) problem, which has been well studied in robust pattern recognition. Hence, we propose to introduce the Entropic Open-Set Loss (EOSL), a representative method for OSR [25], to address our background handling problem. The basic idea of EOSL is to treat the samples from target and background classes separately in establishing the training loss. For target classes, the standard cross-entropy loss is used, while for the background class, an entropic loss is used to encourage uniformly distributed classification scores. Since the C scores sum to 1 (they are output by the softmax layer), encouraging a uniform distribution for background proposals keeps all of these scores small, so that such proposals are suppressed during the Non-Maximum Suppression (NMS) procedure. Formally, suppose the predicted score vector of a proposal is

and the corresponding one-hot pseudo label vector is

, the EOSL is defined by

To our knowledge, this is the first work that addresses the background handling problem in object detection/instance segmentation from the perspective of open-set recognition.
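The loss described in this subsection can be sketched as follows, using the form in which the Entropic Open-Set Loss is commonly stated: cross-entropy for target-class samples and the mean negative log-score over all C classes for background samples. Whether this matches the exact equation used here is an assumption, as are the function name, the use of `None` to mark a background proposal, and the small constant `eps`.

```python
import math


def entropic_open_set_loss(scores, label, eps=1e-12):
    """Per-proposal loss on softmax scores over the C target classes.

    scores: list of C probabilities that sum to 1.
    label: index of the pseudo class, or None for a background proposal.
    eps: small constant guarding against log(0).
    """
    if label is not None:
        # Target-class proposal: standard cross-entropy.
        return -math.log(scores[label] + eps)
    # Background proposal: the mean negative log-score is minimised when
    # all C scores are equal, i.e. when the prediction is uniform.
    return -sum(math.log(s + eps) for s in scores) / len(scores)
```

For a background proposal with C = 4, uniform scores of 0.25 give a loss of log 4, whereas a confident prediction such as (0.97, 0.01, 0.01, 0.01) gives a much larger loss, so training pushes background proposals toward low scores on every class.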