A New Adaptive Method for the Extraction of Steel Design Structures from an Integrated Point Cloud

Abstract

1. Introduction

- The development and presentation of a complete technology for integrating spatial data from two sensor sources: terrestrial laser scanning (TLS) and airborne photogrammetry from UAV flights.

- The comparative analysis of the developed models and the accuracy analysis of the integration process.

- The development and testing of a new adaptive and automatic algorithm for the extraction of the edges of geometric structures from point clouds.

- A new algorithm used to develop a reduced spatial model of a building’s steel structure.

2. Materials and Methods

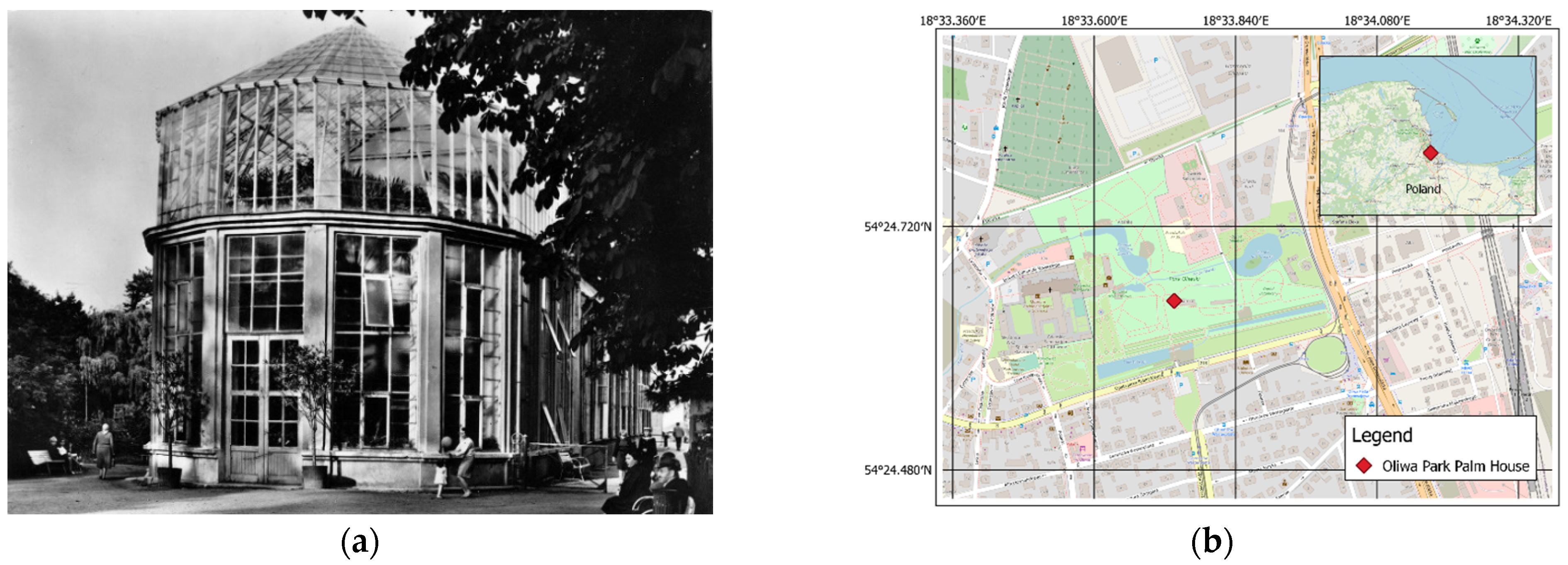

2.1. Object History and Description

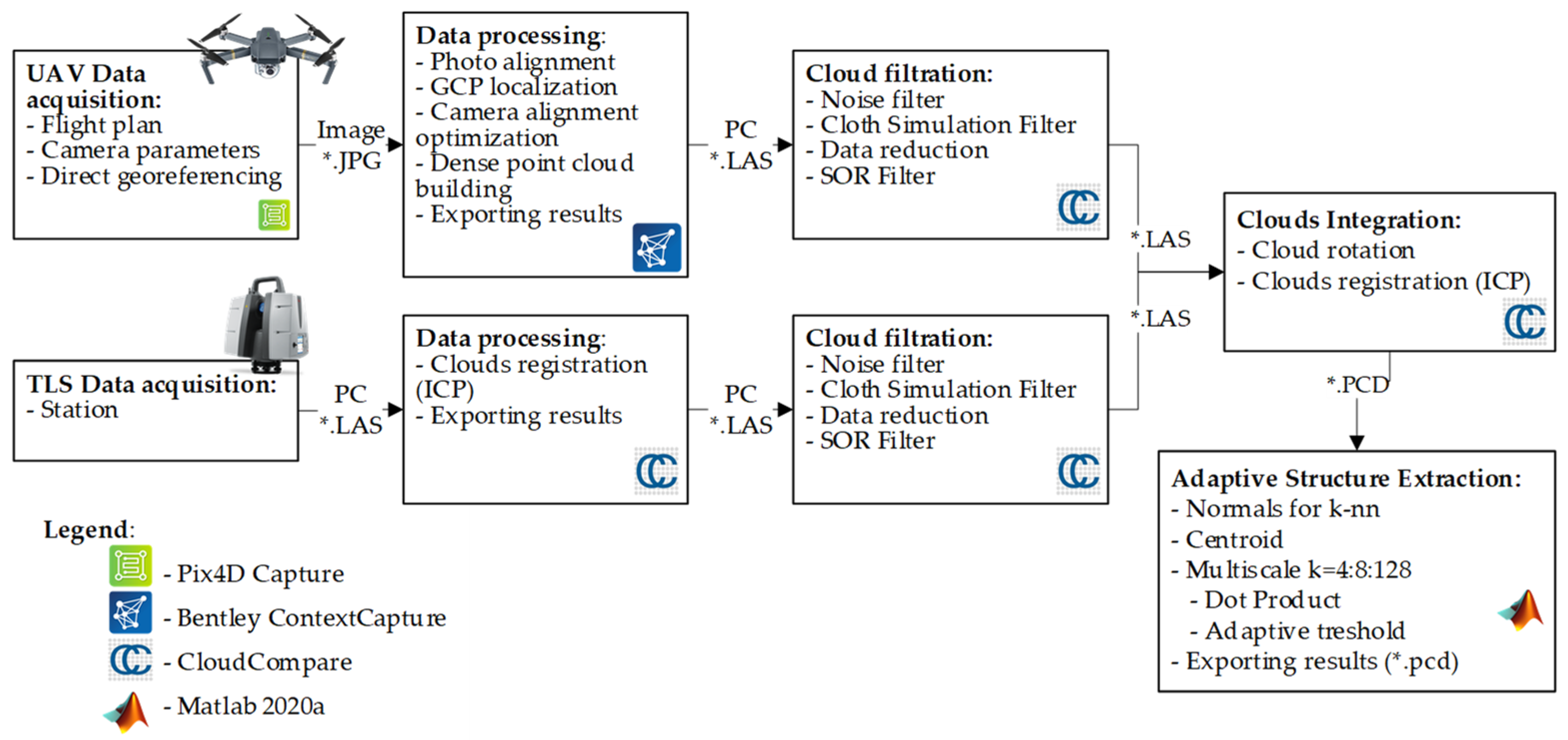

2.2. Process Description

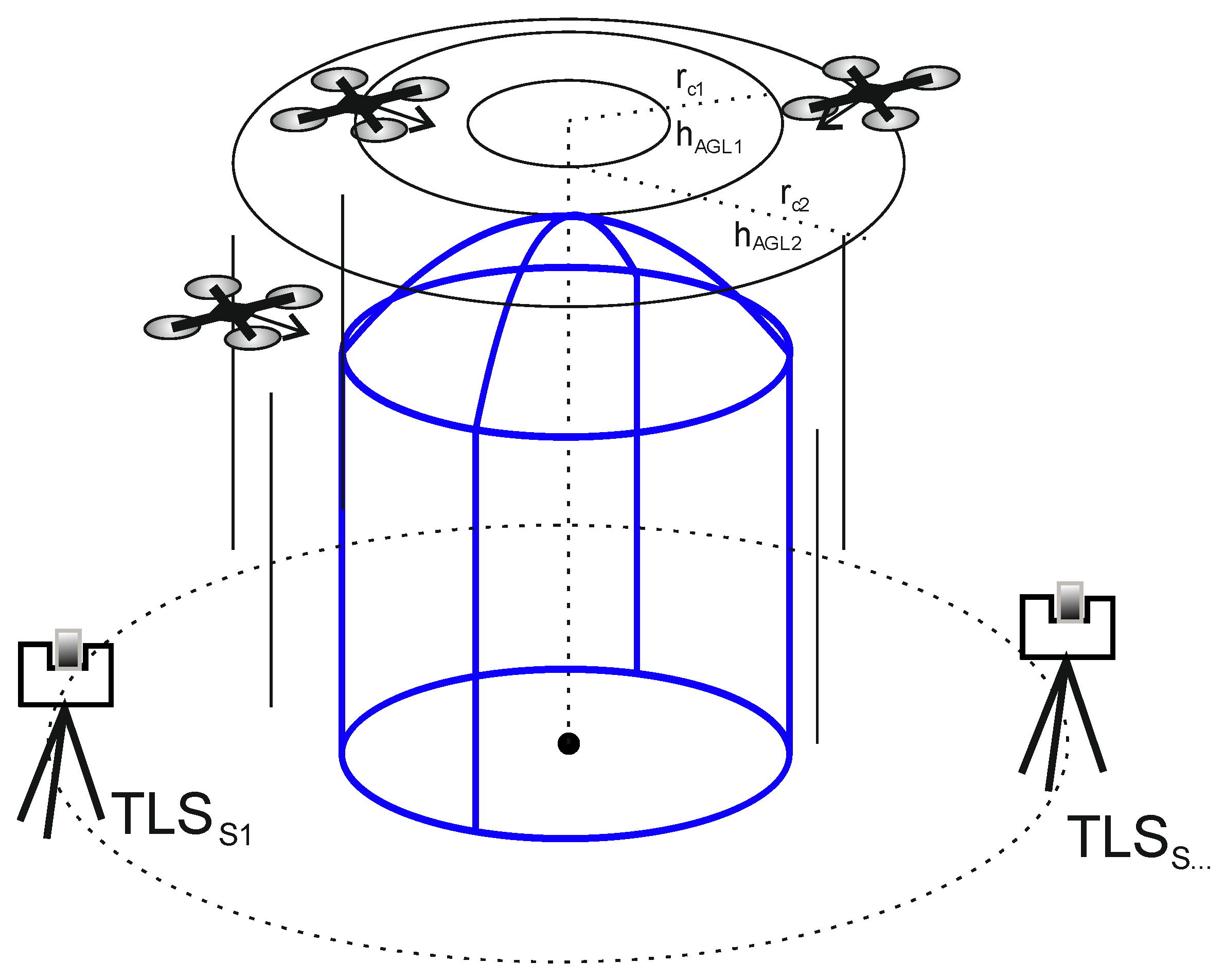

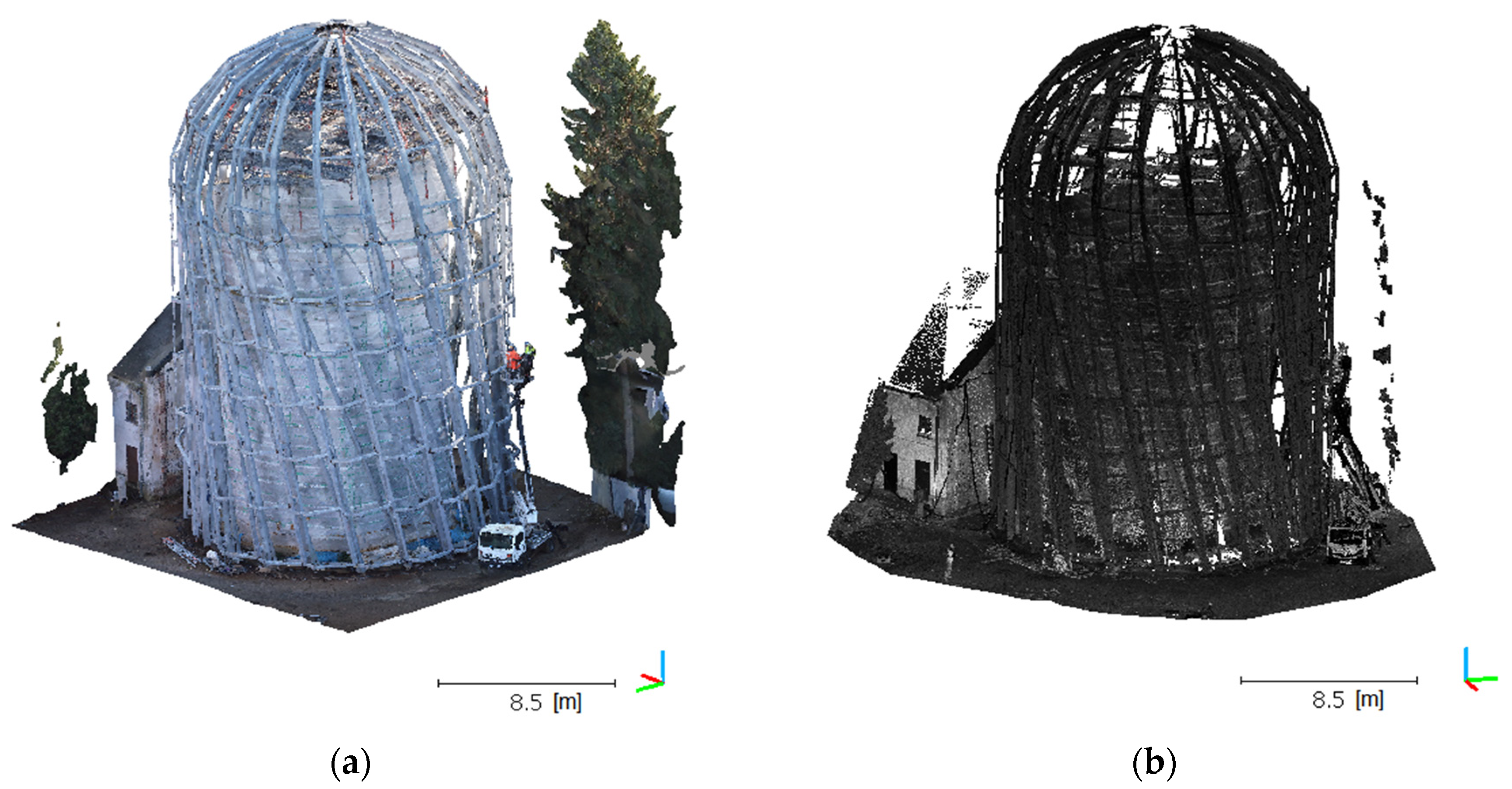

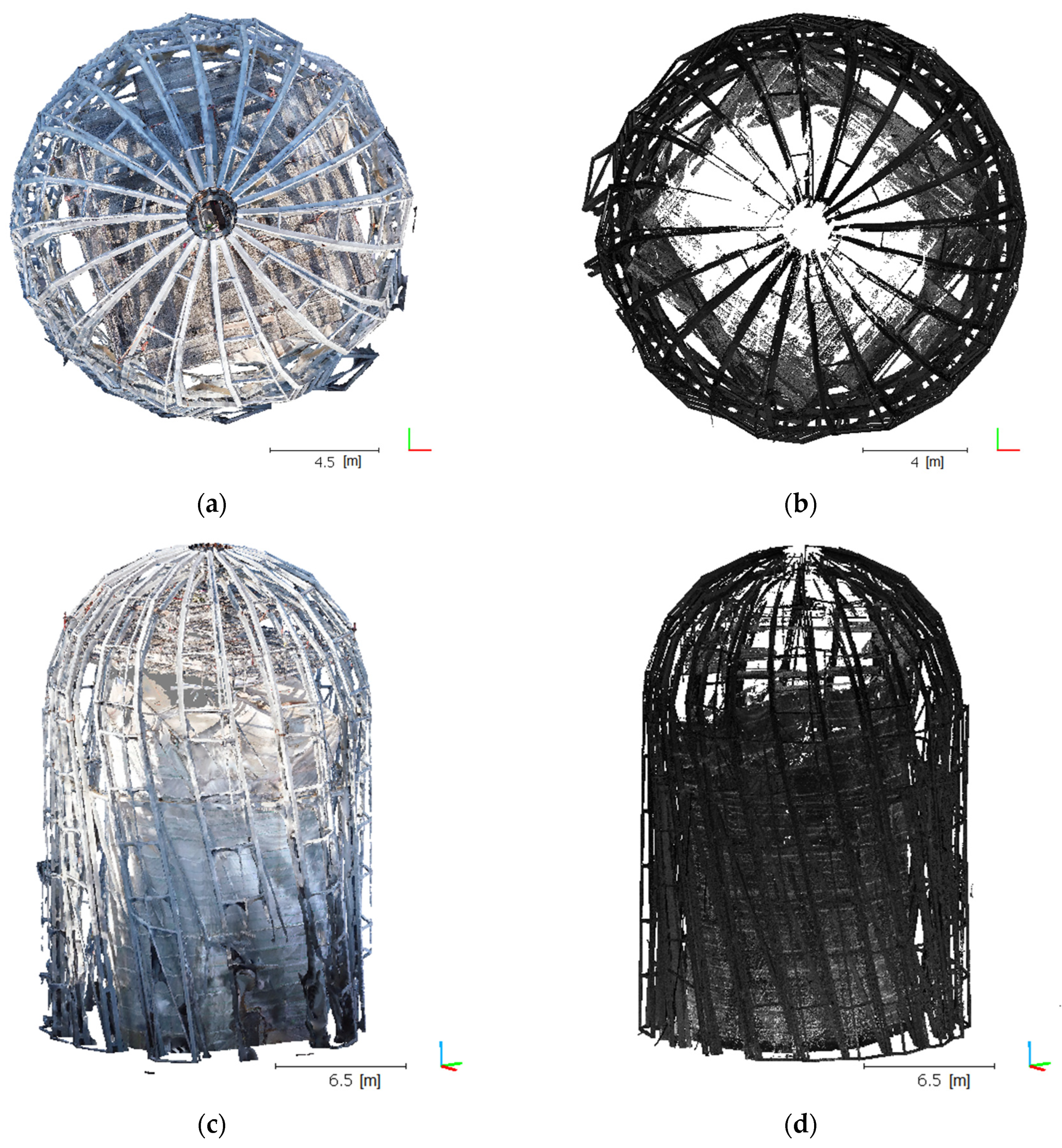

2.3. Data Acquisition

2.3.1. UAV Photogrammetry: Initial Data Processing

2.3.2. TLS Initial Data Processing

2.4. Point Cloud Filtration

2.5. Point Cloud Integration

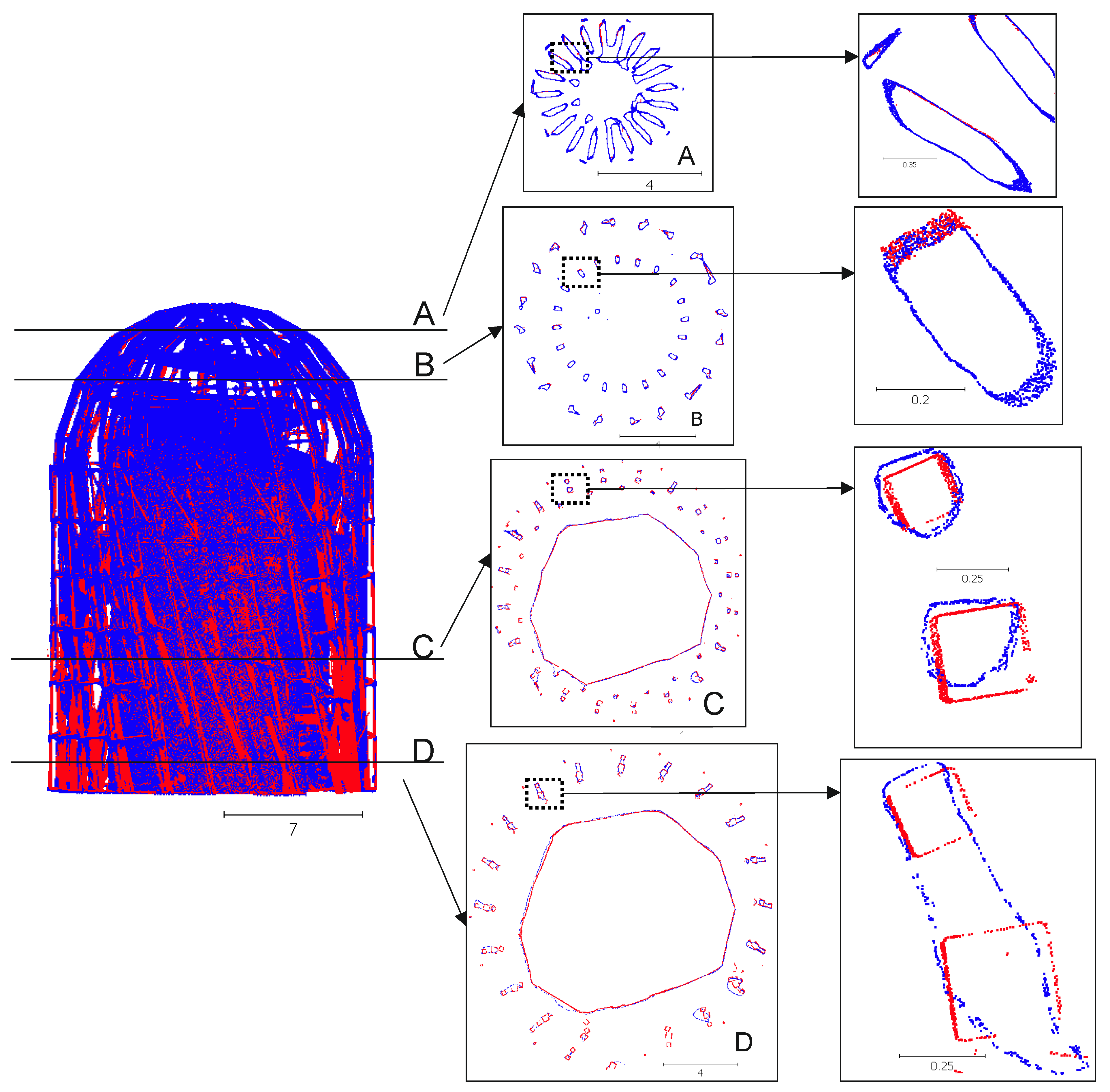

2.6. Adaptive Structure Extraction Algorithm

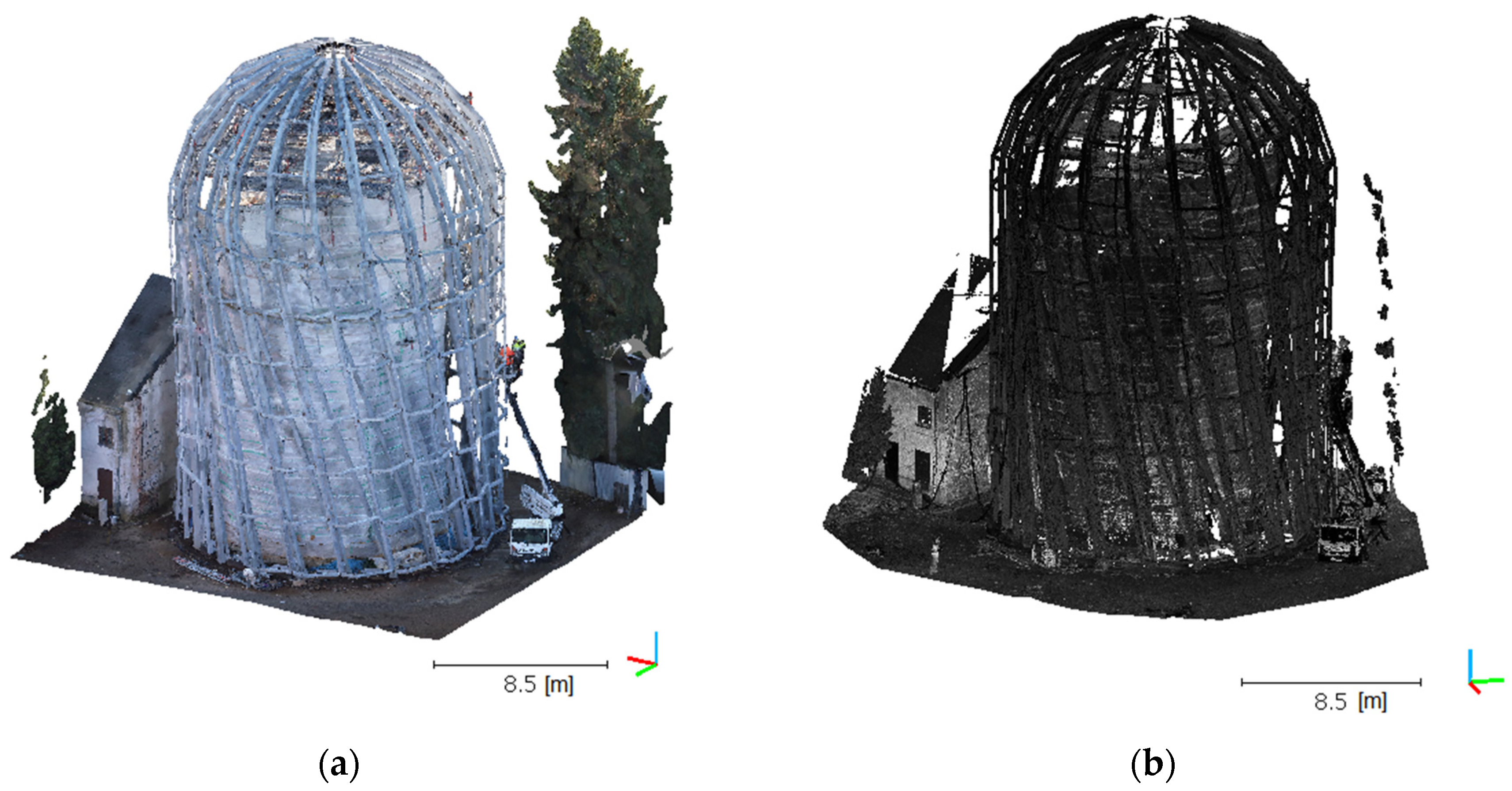

3. Results and Discussion

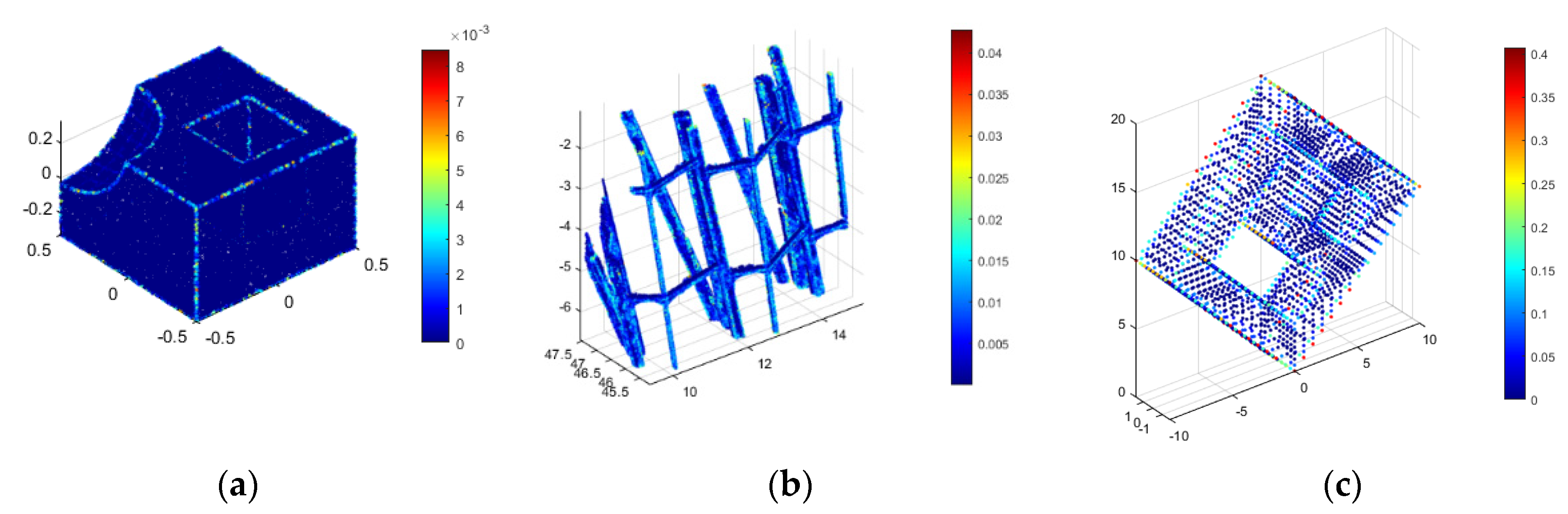

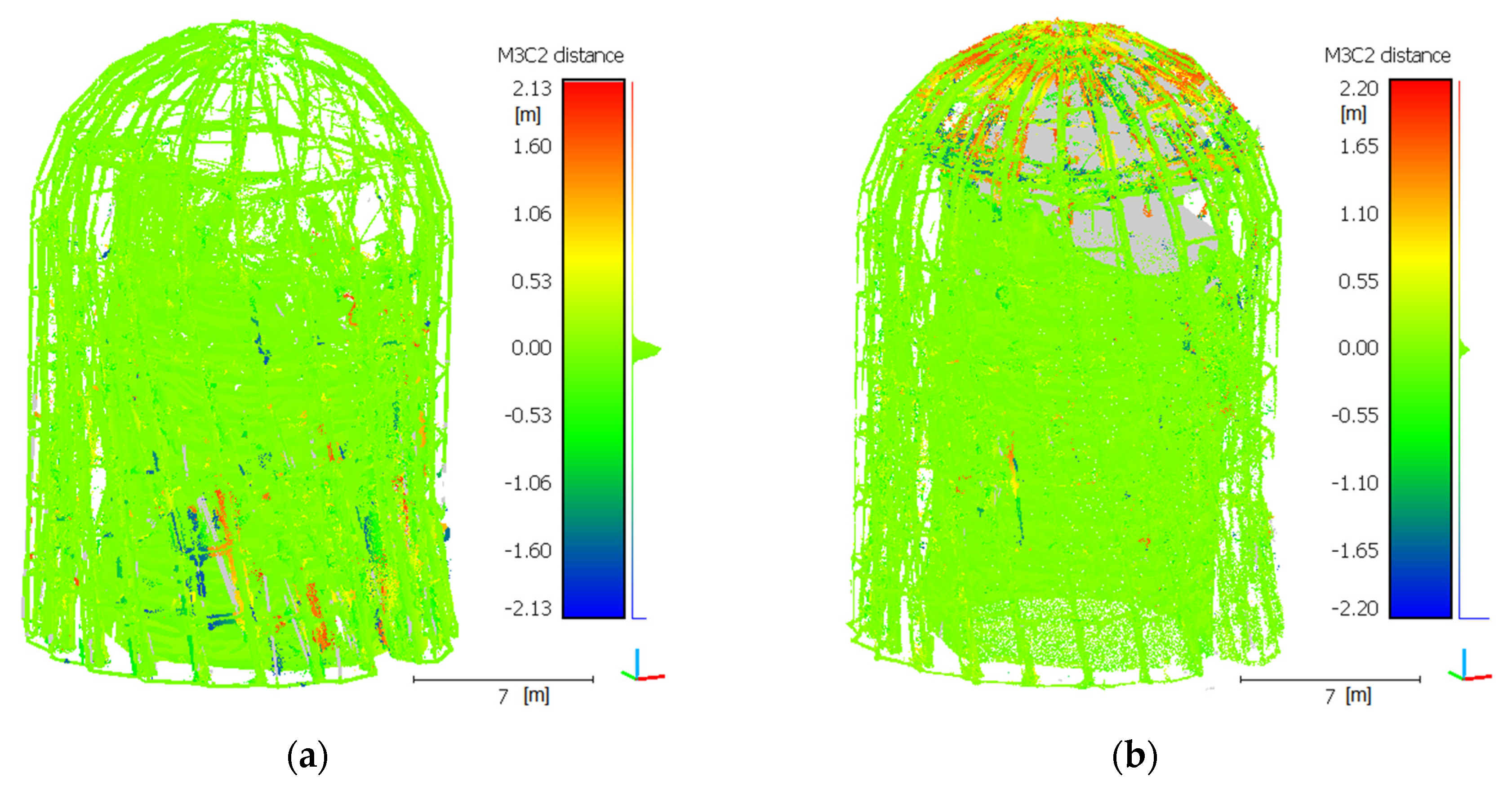

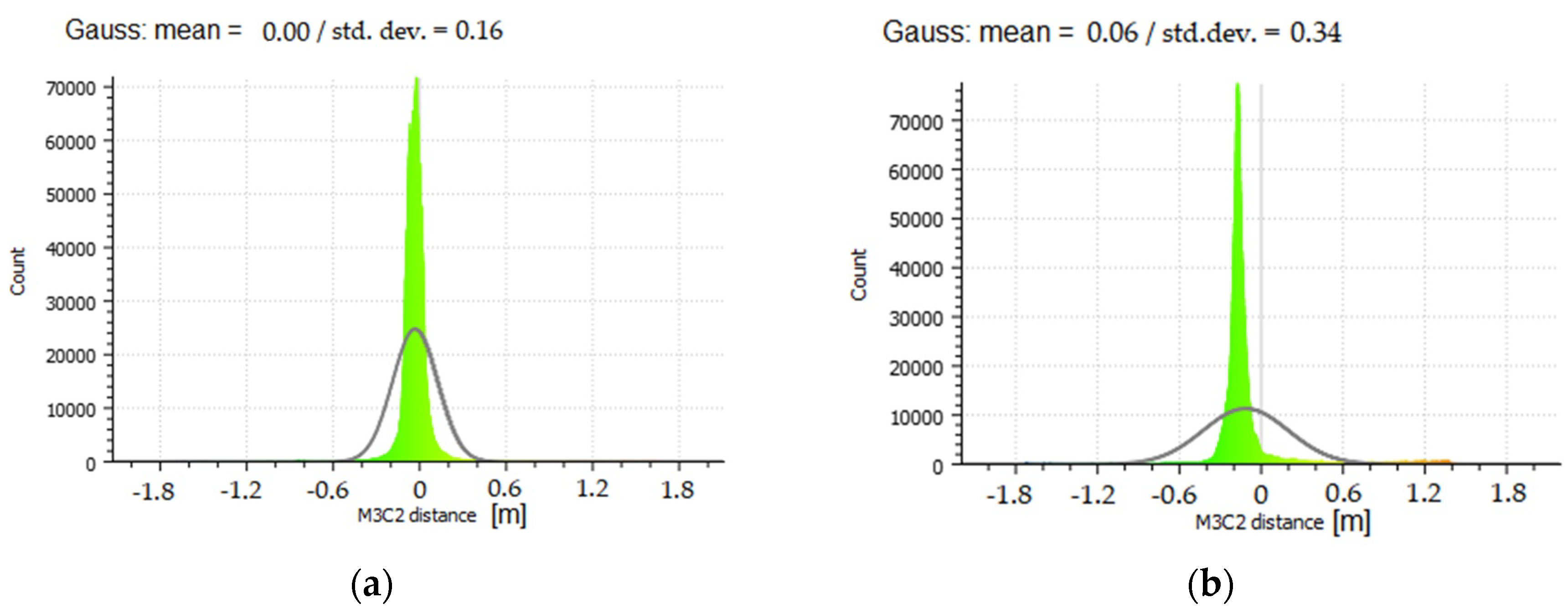

3.1. Integration Quality Assessment

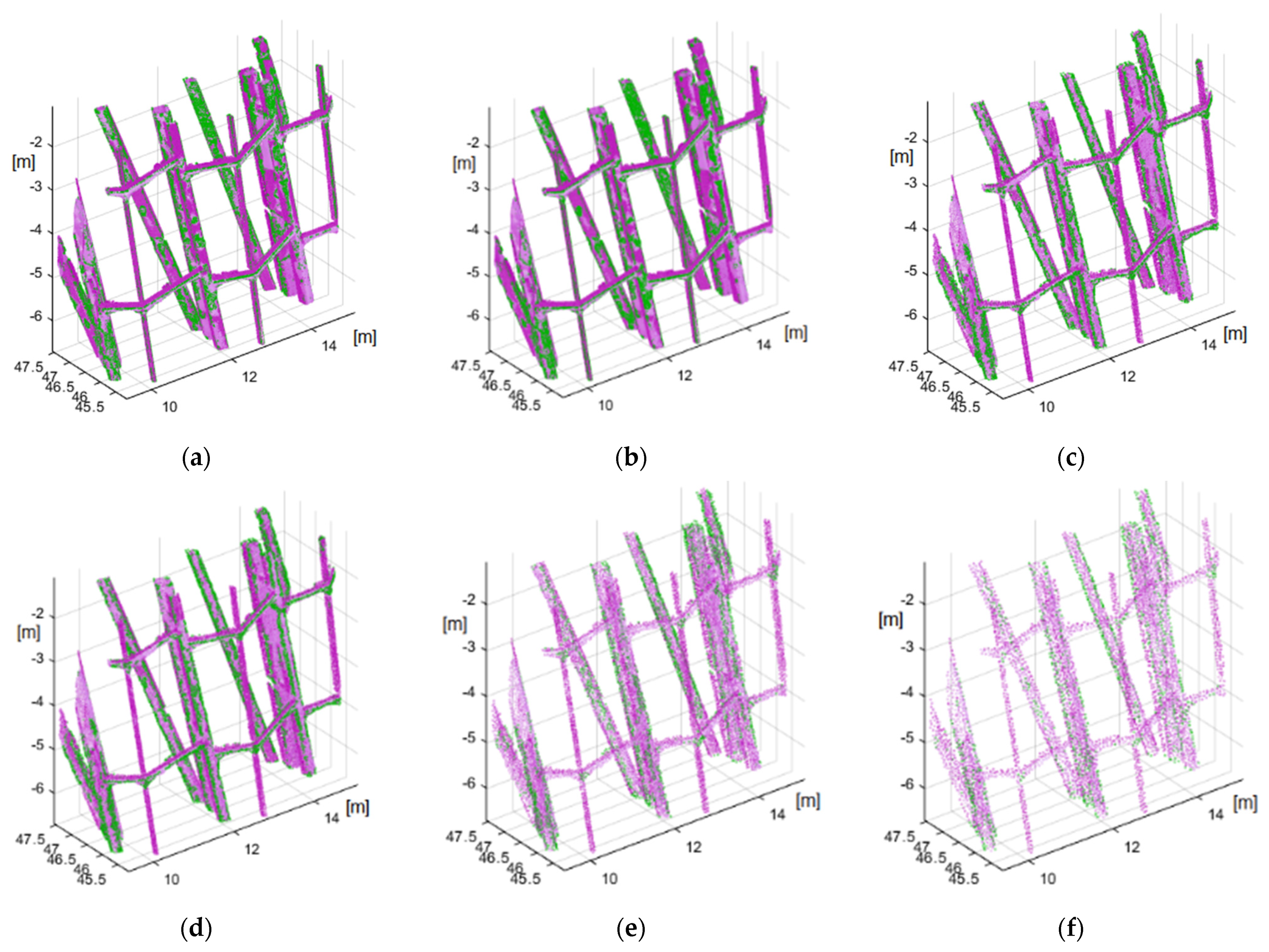

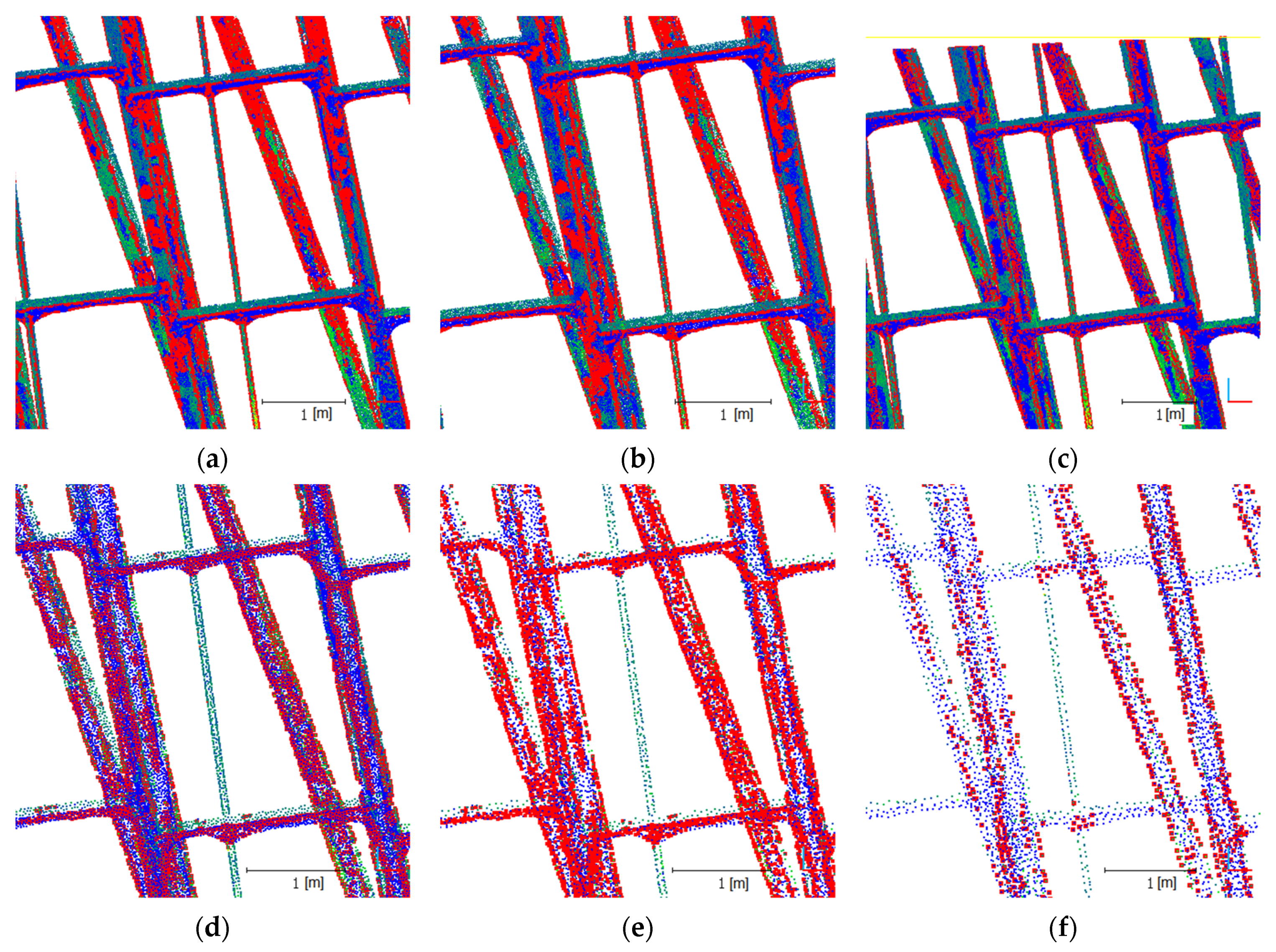

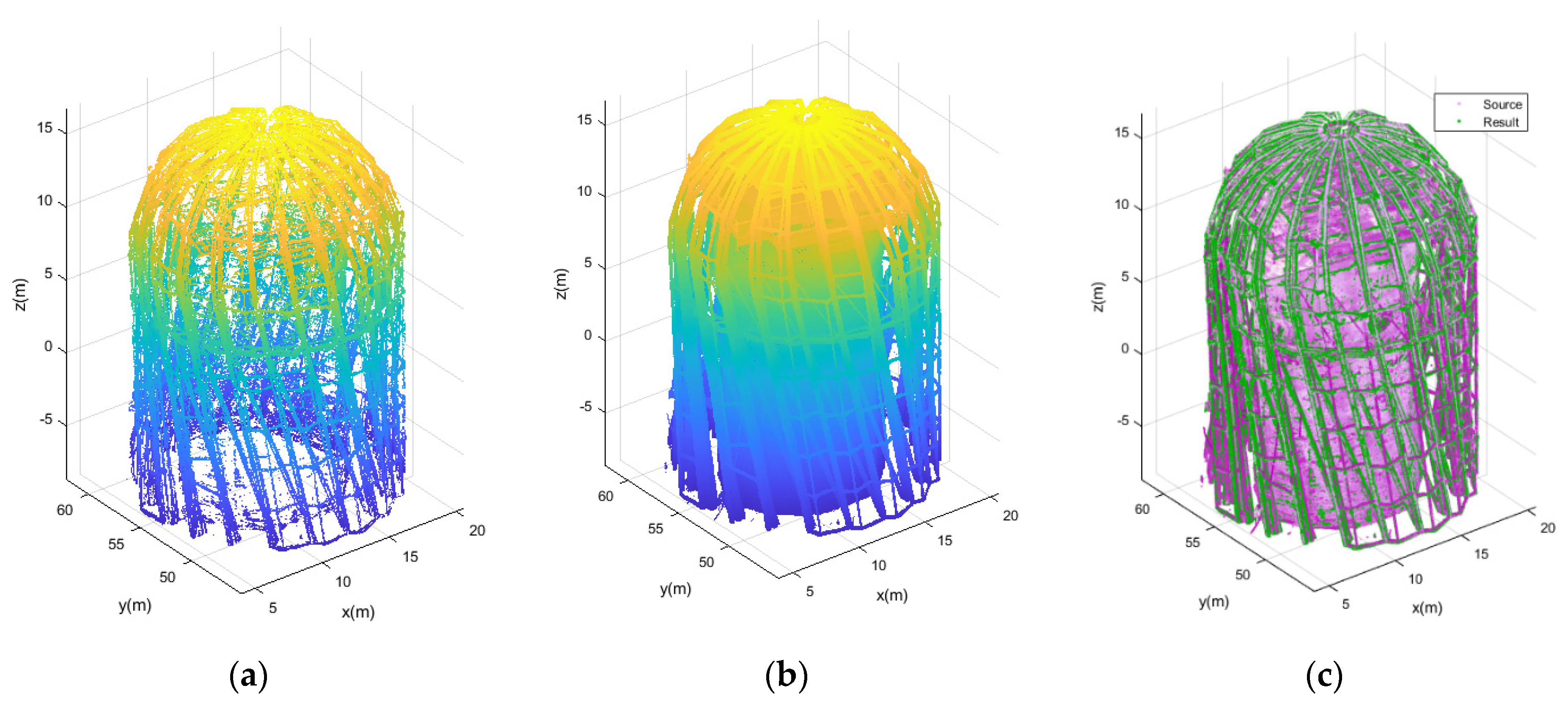

3.2. Structure Extraction

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Series | Distance to Object | Ground Resolution | Reprojection Error |
|---|---|---|---|
| 1 | 1–15 m | 11 mm/pix | 0.71 pix |
| 2 | 1–15 m | 2.4 mm/pix | 0.77 pix |

Camera locations and error estimates (mean)

| Series | X error (m) | Y error (m) | Z error (m) |
|---|---|---|---|
| 1 | 0.00127 | 0.00137 | 0.00128 |
| 2 | 0.00082 | 0.00084 | 0.00092 |

| Technical Data | Leica P 30 |
|---|---|
| Measurement speed | Up to 1 million points per second |
| Range accuracy | 1.2 mm + 10 ppm over the entire range |
| Angular accuracy | 8″ horizontally; 8″ vertically |
| 3D position accuracy | 3 mm at 50 m; 6 mm at 100 m |
| Laser wavelength | 1550 nm (invisible) / 658 nm (visible) |
| Distance noise | 0.4 mm RMS at 10 m; 0.5 mm RMS at 50 m |
| Horizontal field of view | 360° |
| Vertical field of view | 270° |
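
The range accuracy entry in the table combines a fixed term with a distance-proportional term. As a rough worked example, the sketch below evaluates that specification at a few distances, assuming the usual reading of "1.2 mm + 10 ppm" as a constant 1.2 mm plus 10 parts per million of the measured distance. The function name and the chosen distances are illustrative and do not come from the paper.

```python
def range_accuracy_mm(distance_m, fixed_mm=1.2, ppm=10.0):
    """Range accuracy in millimetres for a 'fixed + ppm' specification.

    Assumption: ppm is applied to the measured distance, so
    1 ppm of 1 m equals 0.001 mm.
    """
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    # ppm * 1e-6 (ratio) * distance_m * 1000 (m -> mm) = ppm * distance_m * 1e-3
    return fixed_mm + ppm * distance_m * 1e-3


if __name__ == "__main__":
    # Illustrative distances only; they are not taken from the survey.
    for d in (10, 50, 100):
        print(f"{d:4d} m -> {range_accuracy_mm(d):.2f} mm")
```

Under this reading the distance-dependent part stays well below the fixed part at the short ranges typical of a single building survey.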

| Name | PX | PY | PZ | Roll | Pitch | Yaw | Scale | PKT |
|---|---|---|---|---|---|---|---|---|
| Stan1 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.0 | 188 |
| Stan2 | 0.000 | 0.000 | 0.000 | 0.000 | 0.011 | 0.000 | 0.0 | 293 |
| Stan3 | 0.001 | 0.000 | 0.003 | 0.007 | −0.015 | 0.005 | 0.0 | 364 |
| Stan4 | 0.001 | 0.001 | 0.004 | 0.011 | −0.015 | 0.005 | 0.0 | 428 |
| Stan5 | 0.001 | 0.001 | 0.007 | 0.002 | 0.024 | 0.005 | 0.0 | 330 |
| Stan6 | 0.000 | 0.001 | 0.008 | 0.017 | −0.003 | 0.001 | 0.0 | 359 |
| Stan7 | −0.001 | 0.000 | 0.011 | 0.015 | −0.021 | 0.002 | 0.0 | 306 |
| Stan8 | −0.002 | 0.000 | 0.008 | 0.025 | −0.009 | 0.009 | 0.0 | 238 |
| Stan9 | −0.001 | −0.001 | 0.007 | 0.014 | 0.017 | 0.006 | 0.0 | 304 |
| Stan10 | −0.001 | −0.001 | 0.003 | 0.005 | 0.012 | 0.003 | 0.0 | 228 |
| Stan11 | −0.001 | −0.001 | 0.005 | −0.003 | 0.005 | 0.002 | 0.0 | 245 |
| Stan12 | −0.001 | −0.002 | 0.002 | −0.006 | −0.018 | −0.011 | 0.0 | 142 |
| Stan13 | 0.000 | −0.002 | 0.003 | 0.001 | 0.003 | 0.005 | 0.0 | 138 |
| Stan14 | −0.001 | −0.001 | 0.006 | −0.009 | −0.008 | 0.004 | 0.0 | 311 |
| Stan15 | −0.004 | −0.001 | 0.004 | −0.015 | −0.003 | −0.004 | 0.0 | 32 |
| Stan16 | −0.009 | 0.000 | 0.009 | 0.001 | −0.024 | 0.010 | 0.0 | 536 |
| Stan17 | −0.010 | 0.000 | 0.009 | −0.002 | −0.025 | 0.014 | 0.0 | 539 |

| Filtration Phase | UAV Point Cloud | TLS Point Cloud |
|---|---|---|
| Initial | 182,505,086 | 103,680,397 |
| Noise filter | 92,214,210 | 60,791,121 |
| CSF | 81,005,411 | 37,192,129 |
| Manual cleaning | 69,029,458 | 24,033,077 |
| Reduction | 24,160,311 | 24,033,077 |
| SOR | 18,806,444 | 23,875,659 |
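
To make the effect of each filtration phase easier to read, the point counts in the table can be turned into retained fractions. The sketch below does only that arithmetic; the counts are copied from the table, while the helper names `retained_fraction` and `stage_removal` are illustrative and not part of the paper's processing chain.

```python
# Point counts copied from the filtration table, in phase order.
STAGES = ["Initial", "Noise filter", "CSF", "Manual cleaning", "Reduction", "SOR"]
COUNTS = {
    "UAV": [182_505_086, 92_214_210, 81_005_411, 69_029_458, 24_160_311, 18_806_444],
    "TLS": [103_680_397, 60_791_121, 37_192_129, 24_033_077, 24_033_077, 23_875_659],
}


def retained_fraction(counts):
    """Fraction of the initial cloud that remains after each phase."""
    initial = counts[0]
    return [n / initial for n in counts]


def stage_removal(counts):
    """Fraction of points each phase removes, relative to the previous phase."""
    return [1 - after / before for before, after in zip(counts, counts[1:])]


if __name__ == "__main__":
    for name, counts in COUNTS.items():
        for stage, frac in zip(STAGES, retained_fraction(counts)):
            print(f"{name:3s} {stage:16s} {frac:6.1%}")
```

With these counts, roughly 10% of the initial UAV cloud and 23% of the initial TLS cloud remain after the final SOR phase, and the reduction phase leaves the TLS cloud unchanged.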

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Burdziakowski, P.; Zakrzewska, A. A New Adaptive Method for the Extraction of Steel Design Structures from an Integrated Point Cloud. Sensors 2021, 21, 3416. https://doi.org/10.3390/s21103416
