Abstract

Accurately estimating the current state of local traffic scenes is one of the key problems in the development of software components for automated vehicles. In addition to details on free space and drivability, static and dynamic traffic participants and information on the semantics may also be included in the desired representation. Multi-layer grid maps allow the inclusion of all of this information in a common representation. However, most existing grid mapping approaches only process range sensor measurements such as Lidar and Radar and solely model occupancy without semantic states. In order to add sensor redundancy and diversity, it is desirable to incorporate vision-based sensor setups into a common grid map representation. In this work, we present a semantic evidential grid mapping pipeline, including estimates for eight semantic classes, that is designed for straightforward fusion with range sensor data. Unlike other publications, our representation explicitly models uncertainties in the evidential model. We present results of our grid mapping pipeline based on a monocular vision setup and a stereo vision setup. Our mapping results are accurate and dense due to the incorporation of a disparity- or depth-based ground surface estimation in the inverse perspective mapping. We conclude this paper by providing a detailed quantitative evaluation based on real traffic scenarios in the KITTI odometry benchmark dataset and demonstrating the advantages compared to other semantic grid mapping approaches.

1. Introduction

Environment perception modules in automated driving aim at solving a wide range of tasks. One of these is the robust and accurate detection and state estimation of other traffic participants in areas that are observable by on-board sensors. For risk assessment of the current scene, information about unobservable areas is also important. Furthermore, drivable areas must be perceived in order to navigate the automated vehicle safely. To reduce the computational cost, a common framework for solving all of these tasks is desirable. Additionally, it is preferable to use multiple heterogeneous sensors to increase the robustness of the whole system. In the literature, occupancy grid maps are frequently considered, as they enable the detection of other traffic participants while additionally modeling occlusions due to their dense grid structure. Most of the presented methods only process range sensor measurements such as Lidar and Radar and solely model occupancy without semantic states. Cameras have received less attention in the past couple of years as Lidar sensors have become more and more affordable. However, Lidar sensors are still more expensive than cameras. Furthermore, cameras are superior when it comes to understanding semantic details in the environment. In [1], we presented a semantic evidential fusion approach for multi-layer grid maps by introducing a refined set of hypotheses that allows the joint modeling of occupancy and semantic states in a common representation. In this work, we use the same evidence theoretical framework and present two improved sensor models for stereo vision and monocular vision that can be incorporated in the sensor data fusion presented in [1].

In the remainder of this section, we briefly introduce the terms of the Dempster–Shafer theory (Section 1.1) relevant to this work. We then review past publications on stereo vision-based and monocular vision-based grid mapping, monocular depth estimation and semantic grid mapping in Section 1.2, followed by highlighting our focus for the proposed methods in Section 1.3. In Section 2, we give an overview of our semantic evidential models and the multi-layer grid map representations. We further describe our proposed semantic evidential grid mapping pipelines, depicted in Figure 1, in detail. We evaluate our processing steps based on challenging real traffic scenarios and compare the results of both methods in Section 3. Finally, we conclude this paper and give an outlook on future work in Section 4.

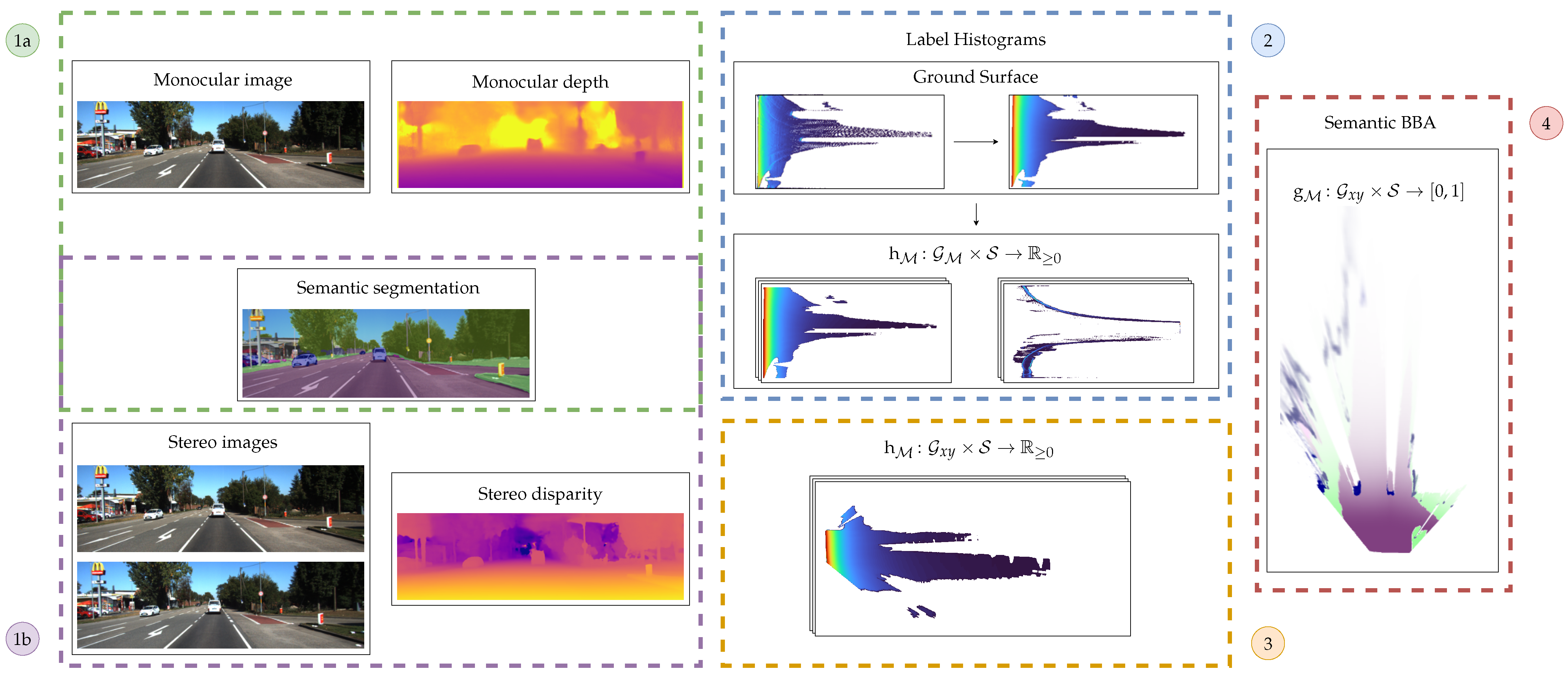

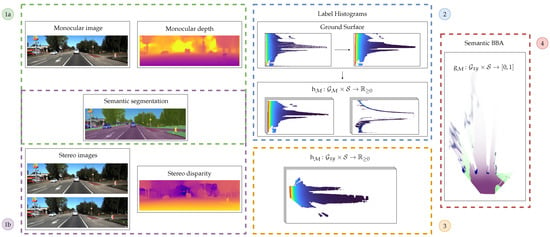

Figure 1.

Overview of the described grid mapping framework. On the front end, either monocular images are processed to obtain depth maps (1a) or stereo images are used to estimate a disparity map (1b). Both are accompanied by a pixelwise semantic segmentation image. The images are used as input for a label histogram calculation in a setup-dependent grid in the second step (2). This label histogram is transformed into a cartesian grid (3) and finally transformed into a semantic evidential grid map (4).

1.1. Dempster–Shafer Theory of Evidence (DST)

The Dempster–Shafer theory of evidence (DST), originally introduced in [2], is an extension of Bayes theory and provides a framework to model uncertainty and combine evidence from different sources. For the hypotheses set of interest $\Omega$, called the frame of discernment, the basic belief assignment (BBA)

$$m: 2^{\Omega} \rightarrow [0, 1], \qquad m(\emptyset) = 0, \qquad \sum_{A \subseteq \Omega} m(A) = 1$$

assigns belief masses to all possible combinations of evidence. In contrast to probability measures, the BBA does not define a measure in the measure theoretical sense as it does not satisfy the additivity property. In consequence, the belief mass assigned to the whole set $\Omega$ models the amount of total ignorance explicitly. Based on a BBA, lower and upper bounds for the probability mass of a set $A \subseteq \Omega$ can be deduced as

$$\mathrm{bel}(A) = \sum_{B \subseteq A} m(B), \qquad \mathrm{pl}(A) = \sum_{B \cap A \neq \emptyset} m(B),$$

where bel(·) and pl(·) are called belief and plausibility, respectively.
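To make the roles of belief and plausibility concrete, the following minimal Python sketch evaluates both for a toy BBA using the standard DST definitions. The two-element frame and the mass values are hypothetical and chosen only for illustration; they are not taken from this work.

```python
def belief(bba, subset):
    """Lower bound: sum of the masses of all focal sets contained in `subset`."""
    subset = frozenset(subset)
    return sum(mass for focal, mass in bba.items() if focal <= subset)


def plausibility(bba, subset):
    """Upper bound: sum of the masses of all focal sets intersecting `subset`."""
    subset = frozenset(subset)
    return sum(mass for focal, mass in bba.items() if focal & subset)


# Hypothetical toy frame of discernment and masses (illustration only).
frame = frozenset({"occupied", "free"})
bba = {
    frozenset({"occupied"}): 0.5,
    frozenset({"free"}): 0.2,
    frame: 0.3,  # mass on the whole frame models total ignorance
}

bel_occ = belief(bba, {"occupied"})
pl_occ = plausibility(bba, {"occupied"})
```

The gap between `bel_occ` and `pl_occ` equals the mass assigned to the whole frame, which is how the evidential model keeps ignorance separate from evidence against a hypothesis.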

1.2. Related Work

Occupancy grid maps, as introduced by Elfes et al. in [3], are often used for dense scene state estimation as they enable explicit modeling of free space and occlusion. While cartesian grid maps are especially suitable for fusing measurements over time as, e.g., presented in [4,5], other coordinate systems are more suitable for modeling sensor characteristics. In [6], Badino et al. compared different tessellations; namely, cartesian, polar and u-disparity grids for modeling free space in stereo-based occupancy grid maps. Perrollaz et al. use a u-disparity grid to estimate a stereo-based occupancy grid map and further considered different measurement models for assigning pixel measurements to grid cells in [7]. Danescu et al. used the grid representation from [8] to estimate a dynamic occupancy grid map with a low-level particle filter in [9]. Yu et al. proposed in [10] to model free space in a v-disparity grid and occupancy in a u-disparity grid before combining both in a stereo-based occupancy grid map using an evidence theoretical framework. As opposed to all previously mentioned works that considered occupancy and free space only, Giovani et al. added one occupancy refinement value denoting the semantic state to their grid map representation in [11]. However, as they did not include the semantics in their evidential hypotheses set, well-established combination rules could not be applied. Recent work on stereo-based grid mapping has been published by Valente et al. in [12] and Thomas et al. in [13]. While Valente et al. only modeled occupancy in a u-disparity grid with a subsequent fusion with Lidar-based occupancy grid maps in the cartesian space, Thomas et al. incorporated semantic hypotheses in an evidential framework. Focusing on estimating a road model, however, the hypotheses set they considered is limited to the static world.

Semantic grid mapping has also been explored based on measurements from monocular cameras. In [14], Erkent et al. estimated semantic grid maps by fusing pixel-wise semantically labeled images with Lidar-based occupancy grid maps in a deep neural network. Lu et al. directly trained a variational encoder–decoder network on monocular RGB images to obtain a semantic top-view representation in [15]. Both networks result in a semantic grid map representation containing one class per grid cell, thus discarding knowledge about the label estimation distribution and uncertainty.

For transforming measurements from the image domain to a top-view representation, a pixelwise depth estimation is needed. In the last few years, tremendous progress in monocular depth estimation has been witnessed, especially after the wide deployment and improvement of deep neural networks. There are three main approaches for monocular depth estimation with deep neural networks: supervised depth prediction from RGB images, self-supervised (unsupervised) depth prediction with monocular videos and self-supervised depth completion.

Nowadays, with the help of convolutional neural networks (CNN), results such as those in [16,17,18] have become superior to previous works in terms of speed and accuracy. However, the resolution of the monocular depth estimation in those papers is relatively low. To overcome this limitation, Alhashim et al. present a convolutional neural network for computing a high-resolution depth map given a single RGB image with the help of transfer learning [19]. All the above methods attempt to directly predict each pixel’s depth in an image using models that have been trained offline on a large training dataset with ground truth depth images. While these methods have enjoyed great success, to date, they have been restricted to scenes where extensive image collections and their corresponding pixel depths are available. For the case where no depth ground truth is available, an unsupervised learning framework is presented in [20] for the task of monocular depth and camera motion estimation from unstructured video sequences. In [21], the authors generate disparity images from monocular images by training the network with an image reconstruction loss on a stereo image training dataset, exploiting epipolar geometry constraints. Finally, Qiao et al. tackle the inverse projection problem in [22] by jointly performing monocular depth estimation and video panoptic segmentation. With their method, they are able to generate 3D point clouds with instance-level semantic estimates for each point.

1.3. Goals of This Work

This work aims to provide two accurate and efficient grid mapping frameworks. One is based on stereo cameras and the other one is based on a monocular camera. In contrast to many past publications on vision-based grid mapping like [6,7,8,10,12], we use a wide range of different semantic classes, which can be provided by vision. Instead of assigning only one semantic label per grid cell as in [11,14,15], we use the hypotheses set introduced in [1] to model uncertainty for eight semantic hypotheses in a consistent evidential framework. In order to achieve a dense and smooth BBA for ground hypotheses, we make use of encapsulated ground surface estimations to approximate the pixel-to-area correspondence in the top-view space. The resulting semantic evidential multi-layer grid map can then be fused with range sensor-based grid maps, as described in [1].

2. Materials and Methods

In this section, we summarize the underlying evidential models, introduce our multi-layer grid map representations in Section 2.1 and introduce all coordinate systems used throughout the mapping pipeline in Section 2.2. We then describe how the input images for the label histogram calculation are obtained in Section 2.3, followed by a detailed description of the label histogram calculation in the u-disparity and u-depth space in Section 2.4. We further explain how the label histogram is transformed to a cartesian grid in Section 2.5. We conclude this section by presenting the calculation of the BBA based on the label histogram in Section 2.6.

2.1. Semantic Evidential Framework

The frame of discernment

consists of the hypotheses car (c), cyclist (), pedestrian (p), other movable object (), non movable object (), street (s), sidewalk () and terrain (t). This hypotheses set can be seen as a refinement of the classical occupancy frame consisting of the two hypotheses occupied and free by considering the hypotheses sets

and

This makes it particularly suitable for the fusion of semantic estimates with range measurements in top-view as outlined in [1]. For the BBA, we consider the hypotheses set consisting of singletons

as all hypotheses combinations are either conflicting by definition or not estimated by the semantic labeling. We define the two-dimensional grid on the rectangular region of interest , where

forms a partition of the interval with equidistant length , origin and size . The BBA on is then represented by the multi-layer grid map

where is the corresponding BBA in the grid cell .

2.2. Coordinate Systems

We use four coordinate systems in our processing chain. The first is the image coordinate system with coordinates rectified according to a pinhole camera model. For mapping stereo vision measurements to the top-view, the u-disparity coordinate system with coordinates is used as an intermediate representation in order to be able to model disparity estimation errors explicitly. When depth is estimated directly as in most of the monocular vision-based methods, a u-depth coordinate system with coordinates is used. For the final grid representation, a cartesian coordinate system with coordinates is used. To indicate the corresponding coordinate system, the considered region of interest is subscripted as , , and , respectively. The same notation is used for the attached grids , , and . Furthermore, we introduce the mappings

for transforming coordinates from one system to another.
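As an illustration of such coordinate mappings, the following Python sketch converts u-disparity and u-depth coordinates to top-view coordinates under a rectified pinhole stereo model. The function names, the axis convention (x forward, y to the left) and the camera parameters are assumptions made for this sketch and are not taken from this work.

```python
def u_disparity_to_cartesian(u, d, focal_px, baseline_m, c_u):
    """Map a (u, d) coordinate to (x, y) in a top-view frame.

    Assumed convention: x points forward (depth), y points to the left.
    """
    if d <= 0:
        raise ValueError("disparity must be positive")
    depth = focal_px * baseline_m / d          # standard stereo relation z = f * b / d
    lateral = -(u - c_u) * depth / focal_px    # back-projection of the image column
    return depth, lateral


def u_depth_to_cartesian(u, z, focal_px, c_u):
    """Map a (u, z) coordinate to (x, y); depth is already given in metres."""
    lateral = -(u - c_u) * z / focal_px
    return z, lateral


# Hypothetical camera parameters, chosen only for illustration.
FOCAL_PX, BASELINE_M, C_U = 700.0, 0.5, 600.0
x, y = u_disparity_to_cartesian(u=670.0, d=35.0, focal_px=FOCAL_PX,
                                baseline_m=BASELINE_M, c_u=C_U)
```

Because depth is inversely proportional to disparity, a fixed disparity error translates into a range error that grows with distance, which is one motivation for modeling the uncertainty in the u-disparity space rather than in the cartesian space.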

2.3. Input Representation

We define a stereo vision measurement

as a tuple of a set of pixels and the three images

which is the pixel-wise semantic labeling image , the disparity image and disparity confidence image .

In the case of measurements stemming from a monocular camera, the disparity image is replaced by the depth image :

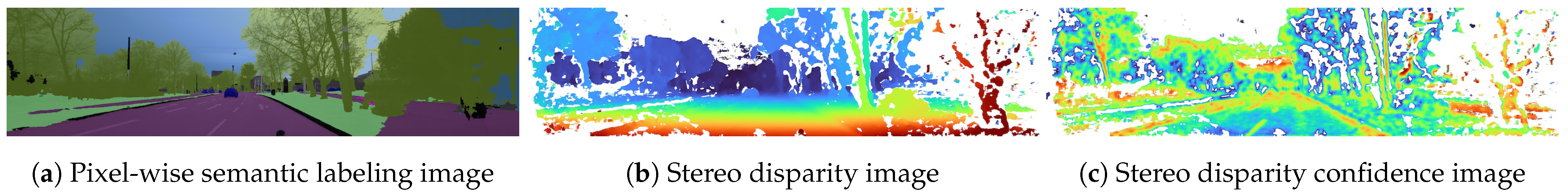

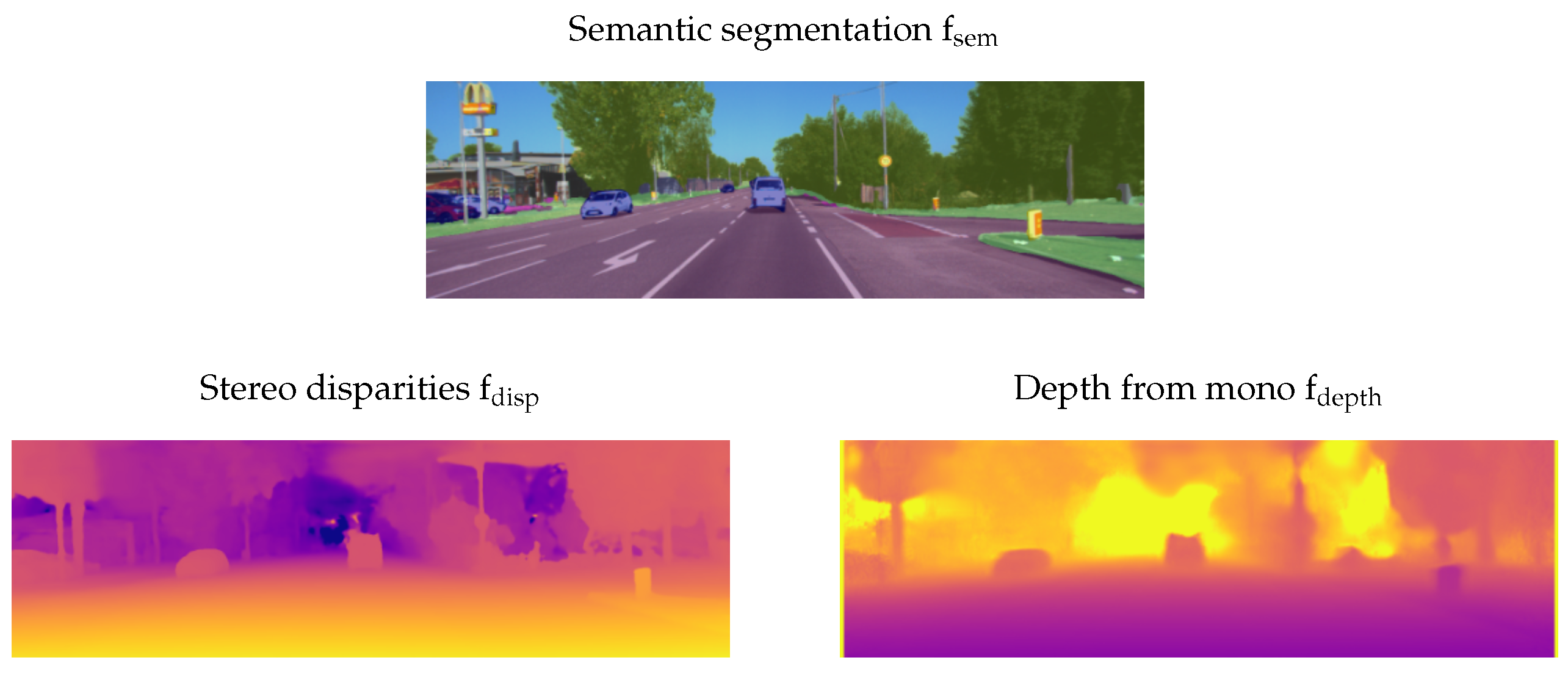

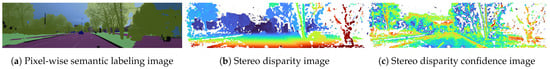

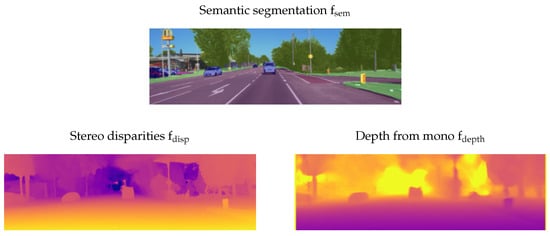

Note that the confidence images may be set to one for all pixels in case the disparity or depth estimation does not provide a confidence. In this case, every pixel is assigned the same weight in the subsequent grid mapping pipeline. Figure 2 shows an example for the stereo vision measurements that were used in [23].

Figure 2.

The three input images to our stereo vision-based grid mapping pipeline used in [23]. © 2021 IEEE. Reprinted, with permission, from Richter, S.; Beck, J.; Wirges, S.; Stiller, C. Semantic Evidential Grid Mapping based on Stereo Vision. In Proceedings of the 2020 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), Karlsruhe, Germany, 14–16 September 2020, pp. 179–184, doi: 10.1109/MFI49285.2020.9235217.

2.4. Label Histogram Calculation

As introduced in [1], we calculate the BBA based on the label histogram, which represents the contribution of accumulated pixels to a class in a given grid cell. We use a u-disparity grid and a u-depth grid to compute the label histogram for stereo vision-based and monocular vision-based grid mapping, respectively. These discretization spaces have the advantage that disparity and depth estimation uncertainty can be modeled explicitly, as, e.g., outlined in [8]. For the sake of simplicity, we subsequently refer to the measurement grid as . The label histogram

of the measurement on the u-disparity grid or the u-depth grid is given by

where is a window function specifying the contribution of the measurement based on the pixel to the cell and

denotes the indicator function. We apply different measurement models depending on the assigned semantic hypothesis. For the object hypotheses , we treat each pixel measurement P as a point measurement that is the center coordinate of the pixel P. We then calculate the window function based on the inverse sensor model to model spatial uncertainty. Here, X denotes the random variable modeling the actual position that the pixel measurement P is based on. The window function further contains the confidence of the corresponding range estimate . Assuming statistical independence between the spatial uncertainty and the uncertainty of the range estimate, the window function for object classes is finally set to

In order to keep the computational complexity at a minimum, we assume X to be uniformly distributed in a rectangle centered around with size d such that

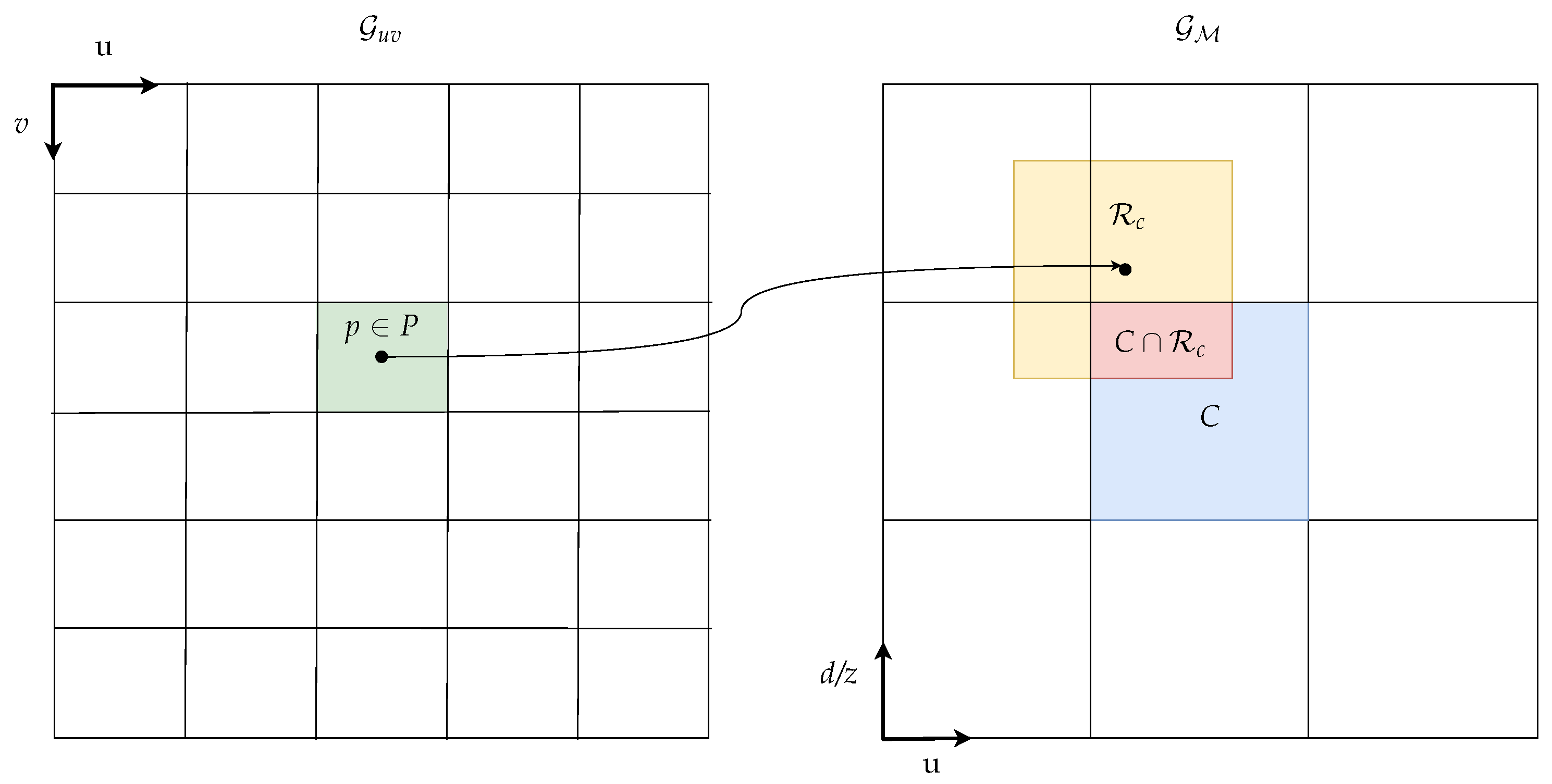

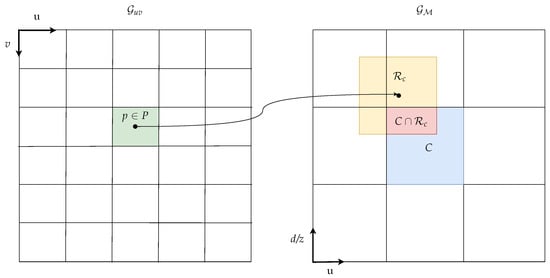

where is the two-dimensional Lebesgue measure. The mapping of pixels with assigned object labels is sketched in Figure 3.

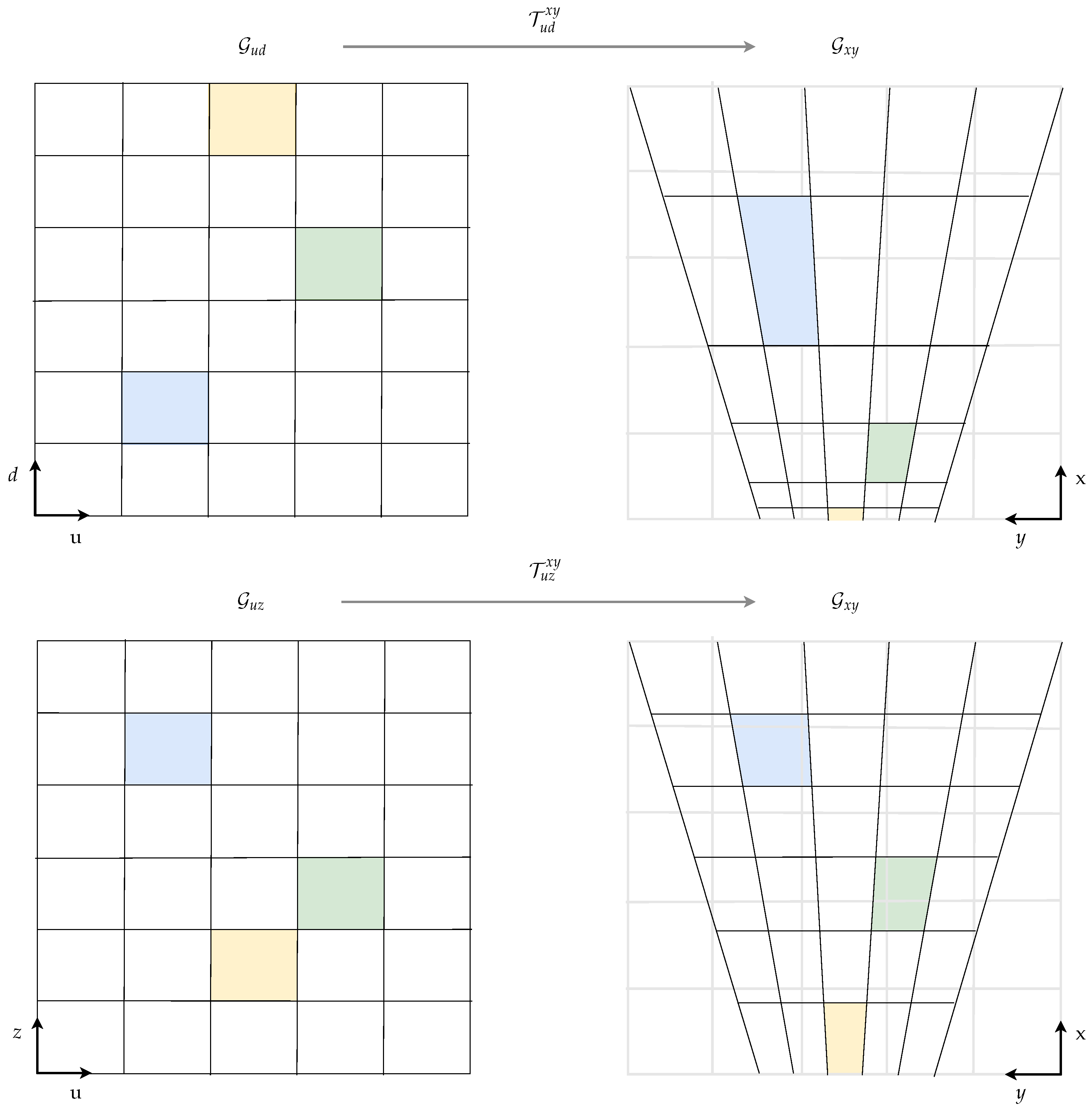

Figure 3.

Mapping of measurements with assigned object labels from image to u-disparity/-depth grid.
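The window function for object labels can be sketched in Python as follows, assuming that the measurement position is uniformly distributed in an axis-aligned rectangle so that the probability of falling into a cell is the overlap area divided by the rectangle area. The function and parameter names are hypothetical and the numbers are illustrative.

```python
def interval_overlap(a_min, a_max, b_min, b_max):
    """Length of the intersection of two 1D intervals (0 if disjoint)."""
    return max(0.0, min(a_max, b_max) - max(a_min, b_min))


def object_window(center, size, cell_min, cell_max, confidence=1.0):
    """Contribution of one object pixel to one grid cell.

    The uncertainty rectangle is centered at `center` with extent `size`;
    the result is the fraction of its area inside the cell, scaled by the
    confidence of the range estimate (independence is assumed).
    """
    (cx, cy), (sx, sy) = center, size
    overlap_x = interval_overlap(cx - sx / 2, cx + sx / 2, cell_min[0], cell_max[0])
    overlap_y = interval_overlap(cy - sy / 2, cy + sy / 2, cell_min[1], cell_max[1])
    return confidence * (overlap_x * overlap_y) / (sx * sy)


# A measurement on the border between two unit cells contributes half to each.
left = object_window((1.0, 0.5), (1.0, 1.0), (0.0, 0.0), (1.0, 1.0))
right = object_window((1.0, 0.5), (1.0, 1.0), (1.0, 0.0), (2.0, 1.0))
weighted = object_window((1.0, 0.5), (1.0, 1.0), (0.0, 0.0), (1.0, 1.0), confidence=0.8)
```

Summing these contributions per class over all pixels of a measurement yields the label histogram entries of the object hypotheses.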

Treating pixels with assigned object labels as points is a simplification based on a lack of knowledge about object surfaces. For pixels labeled as ground, however, the surface can be assumed to be locally planar. We use this prior knowledge and propose an approximating pixel-to-area correspondence to obtain dense mapping results for the ground hypotheses. The label histogram for the ground hypotheses is given by

where is the probability density function of the random variable X modeling the measurement position and

is the area in the grid space corresponding to the measurement pixel P. For pixels classified with ground labels, the shape of this area depends on the ground surface. We approximate the resulting label histogram for ground hypotheses by approximating with rectangles based on an encapsulated ground surface estimation in the three steps: ground estimation, pixel area approximation and area integral calculation.

2.4.1. Ground Surface Estimation

A ground surface estimation is obtained based on the current image measurements in two stages. First, the height is averaged over all pixels that correspond to a given grid cell C with an assigned ground label and disparity or depth value exceeding a given threshold. Here, each pixel is treated as a point measurement leading to sparse mapping results, especially at far distances. Furthermore, the disparity or depth estimate might add further sparsity depending on the method used. The quality of the stereo disparity estimation based on pixel matching, for example, heavily relies on the local contrast of the camera image. This leads to poor disparity estimation results in smooth areas, especially on the ground, so that no height can be computed there. The sparse ground estimation is augmented in the second stage using the inpainting method introduced in [24]. This inpainting algorithm is based on the Navier–Stokes equations for fluid dynamics and matches gradients at inpainting region boundaries. To avoid large errors, the inpainting is only done in a neighborhood of the sparse ground estimation defined by the inpainting mask . This mask is computed as

where and are the distance transforms based on the masks defining the observed and unobserved image regions, respectively, and are thresholds defining the interpolation neighborhood. We justify the application of this data augmentation by the assumption that gradient jumps in the height profile would lead to gradient jumps in the pixel intensity and thus imply well-estimated disparity and depth. Consequently, the inpainted regions are restricted to areas without jumps in the height profile.
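The first stage of the ground surface estimation, the per-cell height averaging, can be sketched in Python as follows. The tuple layout of the pixel list, the label names and the threshold are assumptions for this sketch; the inpainting stage and the mask computation are intentionally left out.

```python
from collections import defaultdict


def sparse_ground_height(pixels, ground_labels, min_range_value):
    """Average the height per grid cell over valid ground pixels.

    `pixels` is an iterable of (cell, label, range_value, height) tuples,
    where `range_value` is the disparity or depth of the pixel. Cells
    without any valid ground pixel are absent from the result, which is
    what makes this first-stage estimate sparse.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for cell, label, range_value, height in pixels:
        if label in ground_labels and range_value > min_range_value:
            sums[cell] += height
            counts[cell] += 1
    return {cell: sums[cell] / counts[cell] for cell in sums}


# Hypothetical measurements (illustration only).
pixels = [
    ((0, 0), "street", 10.0, -1.6),
    ((0, 0), "street", 12.0, -1.8),
    ((0, 0), "car", 11.0, 0.2),         # ignored: not a ground label
    ((1, 0), "sidewalk", 0.5, -1.5),    # ignored: below the threshold
]
heights = sparse_ground_height(pixels, {"street", "sidewalk", "terrain"}, 1.0)
```

The cells missing from `heights` are the ones that the second stage would fill by inpainting within the masked neighborhood.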

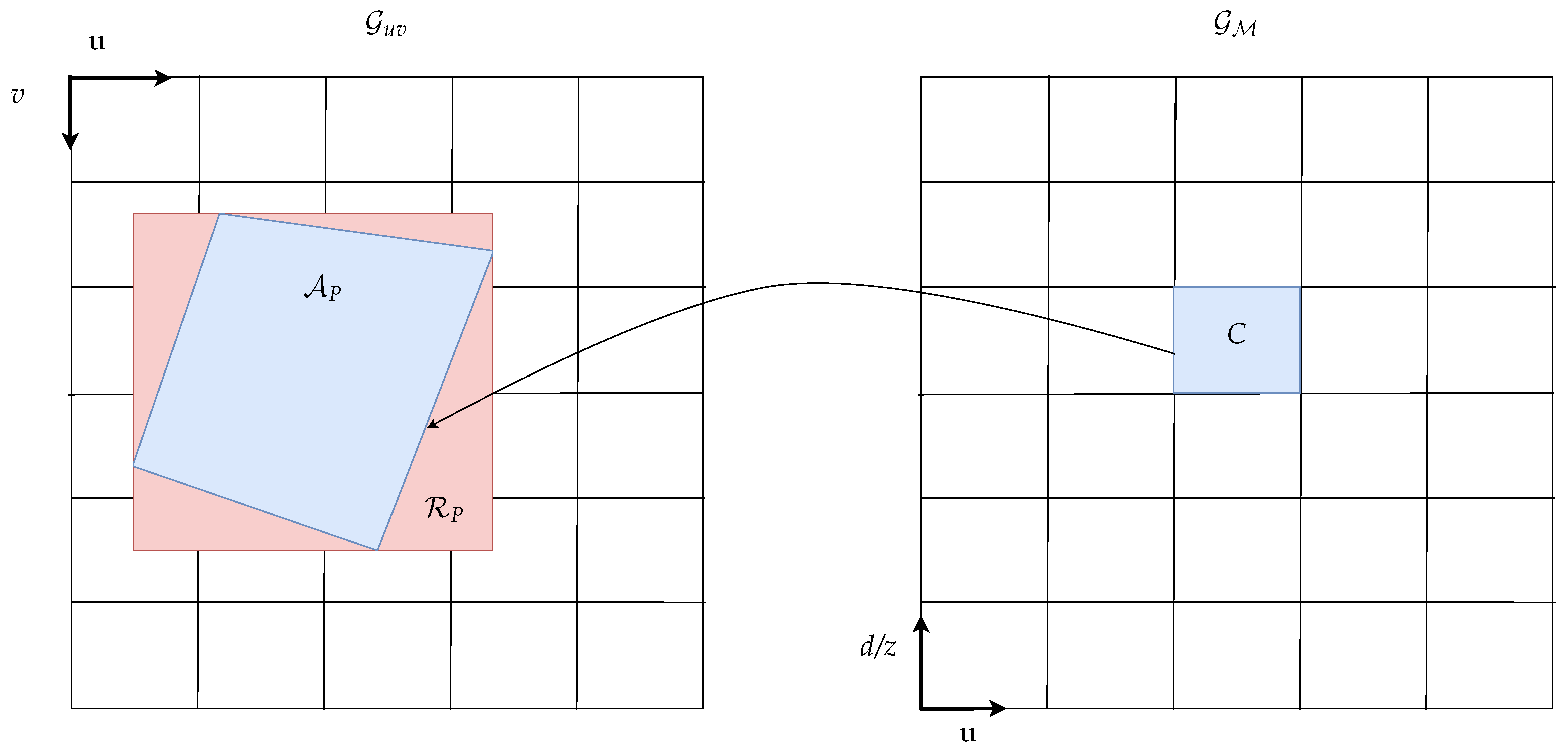

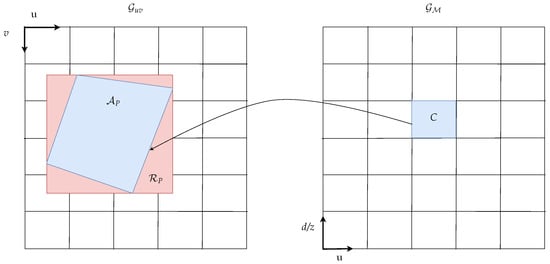

2.4.2. Pixel Area Approximation

Depending on the ground surface relief, a pixel patch may correspond to arbitrarily shaped areas in the grid space. Given a grid cell , the label histogram from Equation (1) for the ground label contains the sum of all pixel portions overlapping with the cell projected into the image domain. To accelerate the mapping process, we approximate the projected cell by a rectangle . Utilizing the estimated ground height, the homogeneous position in cartesian coordinates can be computed based on the lower left cell corner point and the upper right cell corner point in u-disparity space. The lower left sub-pixel and the upper right sub-pixel coordinates are then computed using the perspective mapping as

where K is the pinhole camera matrix. Based on the projected corner points, the projected rectangle is then given by

The grid cell approximation is depicted in Figure 4.

Figure 4.

Mapping of measurements with assigned ground labels from image to u-disparity/-depth grid.

2.4.3. Area Integral Calculation

Finally, the label histogram approximation for the ground labels can efficiently be calculated based on the integral image

as

Note that in the upper equation, and are sub-pixel coordinates and the corresponding integral image is evaluated using bilinear interpolation.
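The following pure-Python sketch shows how a rectangle sum with sub-pixel corners can be read from an integral image using bilinear interpolation. It assumes that the integrated image is a per-class weight image (for example, the confidence where the label matches and zero elsewhere) and that pixel (u, v) covers the unit square starting at (u, v); all names are hypothetical.

```python
def integral_image(img):
    """Summed-area table with a zero first row and column.

    ii[v][u] holds the sum of all img[r][c] with r < v and c < u.
    """
    height, width = len(img), len(img[0])
    ii = [[0.0] * (width + 1) for _ in range(height + 1)]
    for v in range(height):
        row_sum = 0.0
        for u in range(width):
            row_sum += img[v][u]
            ii[v + 1][u + 1] = ii[v][u + 1] + row_sum
    return ii


def bilinear(ii, u, v):
    """Evaluate the table at sub-pixel (u, v), clamped to the image extent."""
    height, width = len(ii) - 1, len(ii[0]) - 1
    u = min(max(u, 0.0), float(width))
    v = min(max(v, 0.0), float(height))
    u0, v0 = min(int(u), width - 1), min(int(v), height - 1)
    du, dv = u - u0, v - v0
    top = (1 - du) * ii[v0][u0] + du * ii[v0][u0 + 1]
    bottom = (1 - du) * ii[v0 + 1][u0] + du * ii[v0 + 1][u0 + 1]
    return (1 - dv) * top + dv * bottom


def rect_sum(ii, u_min, v_min, u_max, v_max):
    """Integral of the image over [u_min, u_max] x [v_min, v_max]."""
    return (bilinear(ii, u_max, v_max) - bilinear(ii, u_min, v_max)
            - bilinear(ii, u_max, v_min) + bilinear(ii, u_min, v_min))


weights = [[1.0, 2.0], [3.0, 4.0]]
table = integral_image(weights)
half_pixels = rect_sum(table, 0.5, 0.0, 1.5, 1.0)  # half of pixel 0 plus half of pixel 1
```

For a piecewise constant image the bilinear evaluation of the table is exact, so each rectangle costs four lookups regardless of its size.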

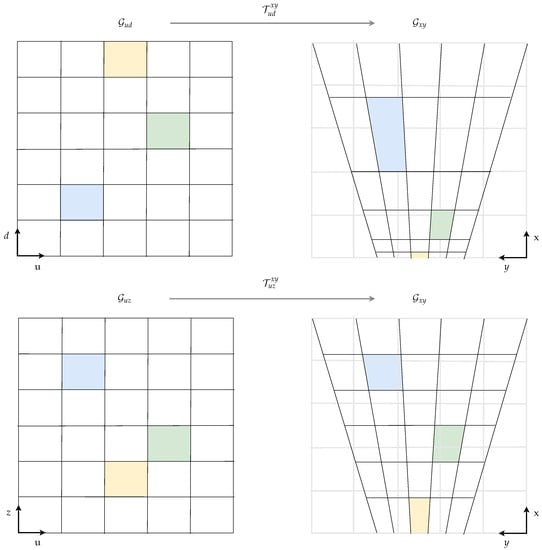

2.5. Grid Transformation to Cartesian Space

The label histogram grid map layers are transformed from u-disparity/-depth space to the cartesian space before the BBA calculation to prevent inconsistencies in the belief assignment due to interpolation artifacts. Note that cartesian grid cells close to the camera, for instance, correspond to many u-disparity grid cells, while one u-disparity grid cell covers several cartesian grid cells at far distances. The relations between the considered tessellations are sketched in Figure 5. Non-regular cell area correspondences occur not only between u-disparity/-depth and cartesian grids. Yguel et al. investigated this effect in detail for the switch from a polar to cartesian coordinate system in [25]. Simple remapping methods lead to the so-called Moiré effect due to undersampling, which is well known in computer graphics. We tackle this issue by applying a well-established upsampling principle in relevant areas. By analyzing the area ratio between a cartesian cell and the corresponding u-disparity/-depth cell, a set of points is chosen lying on an equidistant grid within the cell. The u-disparity/-depth coordinate is calculated for each point, and the label histogram value is computed based on the u-disparity/-depth grid map utilizing bilinear interpolation. The label histogram’s final value for the cartesian cell is a weighted average over all sampled cell points.

Figure 5.

In the cartesian grid on the right-hand side, the grid cells are influenced by the distorted overlaid areas based on the corresponding u-disparity or u-depth grid cell, respectively.
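The resampling step described in this subsection can be sketched in Python as follows. The sketch samples an equidistant n x n point set inside one cartesian cell, maps each point to continuous source-grid indices through a user-supplied callable and averages the bilinearly interpolated values. Uniform weights, the fixed sample count `n` and all names are assumptions of this sketch; choosing `n` from the area ratio is not shown.

```python
def bilinear_lookup(grid, col, row):
    """Bilinear interpolation of a 2D list at continuous indices; 0 outside."""
    rows, cols = len(grid), len(grid[0])
    if not (0 <= col <= cols - 1 and 0 <= row <= rows - 1):
        return 0.0
    c0, r0 = min(int(col), cols - 2), min(int(row), rows - 2)
    dc, dr = col - c0, row - r0
    top = (1 - dc) * grid[r0][c0] + dc * grid[r0][c0 + 1]
    bottom = (1 - dc) * grid[r0 + 1][c0] + dc * grid[r0 + 1][c0 + 1]
    return (1 - dr) * top + dr * bottom


def resample_cell(grid, to_grid_index, x_min, y_min, cell_size, n):
    """Average of n x n equidistant samples inside one cartesian cell.

    `to_grid_index` maps cartesian (x, y) to continuous (col, row) indices
    of the source grid, e.g. a u-disparity or u-depth grid.
    """
    total = 0.0
    for i in range(n):
        for j in range(n):
            x = x_min + (i + 0.5) * cell_size / n
            y = y_min + (j + 0.5) * cell_size / n
            col, row = to_grid_index(x, y)
            total += bilinear_lookup(grid, col, row)
    return total / (n * n)


# Toy source grid whose value equals the column index; identity mapping.
source = [[0.0, 1.0, 2.0], [0.0, 1.0, 2.0], [0.0, 1.0, 2.0]]
value = resample_cell(source, lambda x, y: (x, y), 0.0, 0.0, 1.0, 2)
```

Taking several samples per cell where the source grid is finer than the cartesian grid is what suppresses the aliasing that a single nearest-cell lookup would produce.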

2.6. Basic Belief Assignment

The label histogram is subsequently used to compute a consistent BBA in the measurement grid map . The BBA is computed based on the false-positive probability as

for the relevant hypotheses . Note that the false-positive probability can easily be determined from the confusion matrix obtained on the evaluation data set of the semantic labeling network.

3. Results

We execute our proposed method based on two setups using the KITTI odometry benchmark [26]. In the first case, we calculate stereo disparities based on the two color cameras in the KITTI sensor setup using the guided aggregation net for stereo matching presented by Zhang et al. in [27]. The authors combine a semi-global aggregation layer with a local guided aggregation layer that follows a traditional cost filtering strategy to refine thin structures. In the second setup, we only use the left color camera and compute a depth map using the unsupervised method presented by Godard et al. in [21]. Both neural networks are openly available on GitHub and have been trained or at least refined using the KITTI 2015 stereo vision benchmark. For calculating the pixelwise semantic labeling, the neural network proposed by Zhu et al. in [28] was used. Their network architecture is openly available as well and achieves a mean intersection over union (IoU) of 72.8% in the KITTI semantic segmentation benchmark. Note that all of the above choices were made independently of runtime considerations. In both cases, the pixelwise confidences for depth and disparity, respectively, are set to one as the corresponding networks do not output adequate information. In Figure 6, an example of the three used input images is depicted.

Figure 6.

Results of the three neural networks used to generate the input images for our proposed grid mapping pipeline.

The region of interest of our cartesian grid map is 100 m in x-direction and 50 m in y-direction, where the sensor origin is located at (0 m, 25 m). The cell size is 10 cm in both dimensions.
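As a small sketch of this grid layout, the following Python snippet maps a position in the sensor frame to a cell index. It assumes that the extents are given in metres and the cell size in centimetres, that x points forward and that the sensor sits in the middle of the lateral extent; the constant and function names are hypothetical.

```python
import math

# Assumed layout: 100 m x 50 m region, 10 cm cells, sensor at (0 m, 25 m).
X_EXTENT_M, Y_EXTENT_M = 100, 50
CELLS_PER_M = 10
SENSOR_ORIGIN_M = (0.0, 25.0)
GRID_SHAPE = (X_EXTENT_M * CELLS_PER_M, Y_EXTENT_M * CELLS_PER_M)


def cell_index(x_sensor, y_sensor):
    """Return the (ix, iy) cell of a sensor-frame position, or None if outside."""
    grid_x = x_sensor + SENSOR_ORIGIN_M[0]
    grid_y = y_sensor + SENSOR_ORIGIN_M[1]
    ix = math.floor(grid_x * CELLS_PER_M)
    iy = math.floor(grid_y * CELLS_PER_M)
    if 0 <= ix < GRID_SHAPE[0] and 0 <= iy < GRID_SHAPE[1]:
        return ix, iy
    return None
```

Under these assumptions each layer of the grid map holds 1000 x 500 cells, which gives a sense of the memory and compute cost per semantic hypothesis.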

In the remainder of this section, we first present the ground truth that we used to evaluate our method in Section 3.1. We then present some visual results in Section 3.2. Finally, we present a detailed quantitative evaluation in Section 3.3.

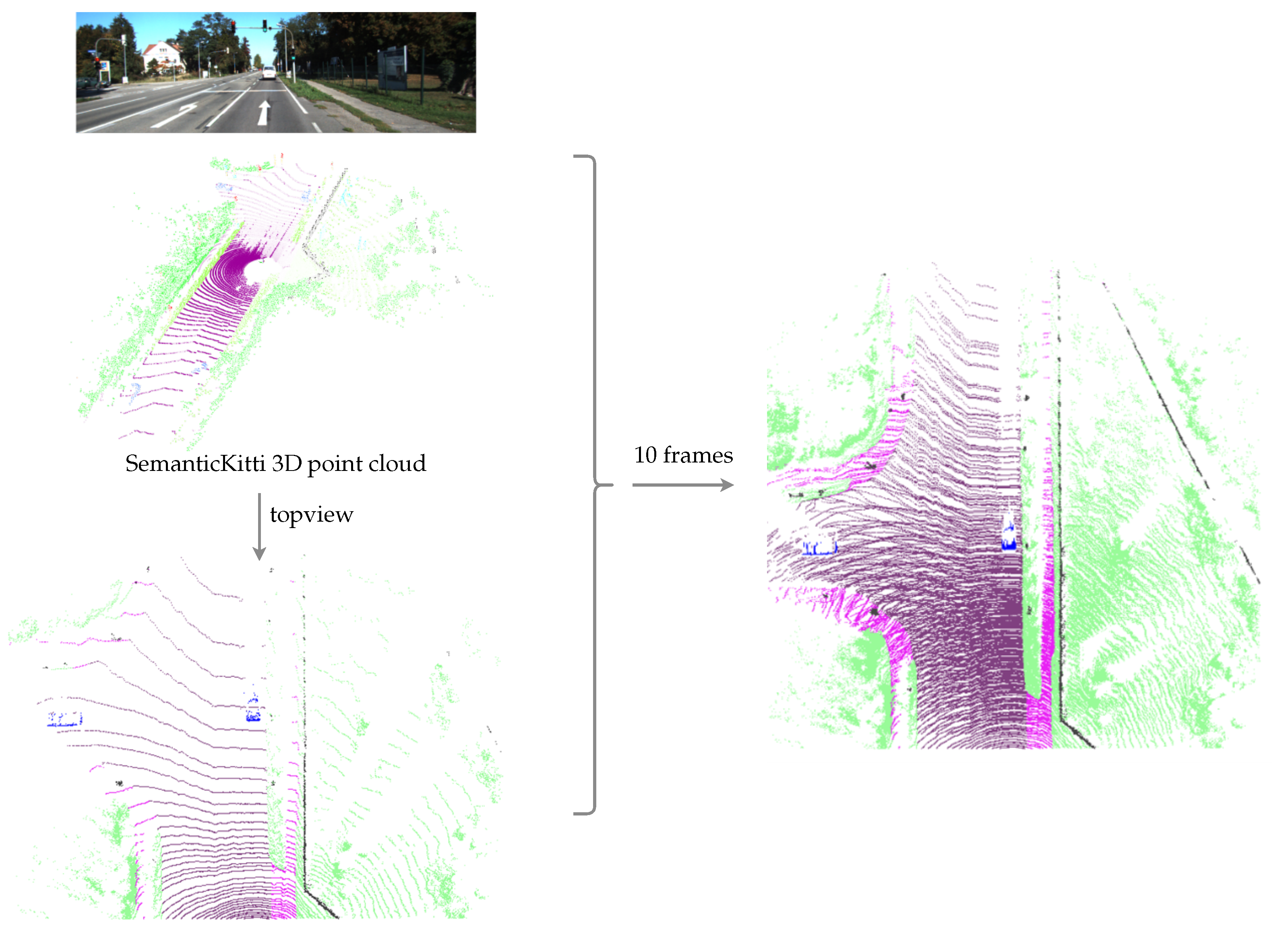

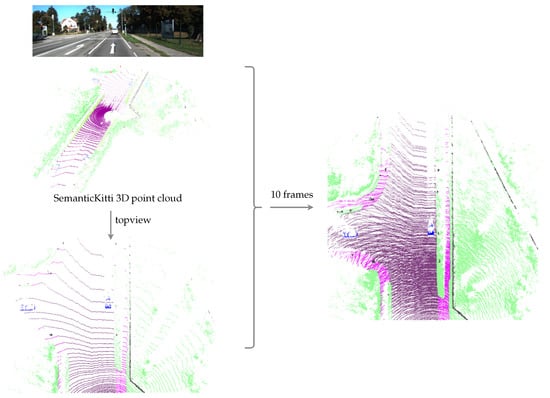

3.1. Ground Truth Generation

We base our quantitative evaluation on the SemanticKITTI dataset presented by Behley et al. in [29]. SemanticKITTI extends the KITTI odometry benchmark by annotating the 360° Lidar scans with semantic labels using a set of 28 classes. Here, we merge those classes to obtain semantic labels corresponding to our singleton hypotheses . Using the labeled poses in the KITTI odometry dataset, the point clouds from ten frames are transformed into the current pose, compensating for ego-motion, and subsequently accumulated. This densifies the semantic point cloud around the ego vehicle. The thus accumulated 3D semantically annotated point cloud is mapped into the same grid that is used for our semantic evidential grid map representation. The generation of our ground truth is illustrated in Figure 7. When using multiple frames to build a denser ground truth, some cells covered by dynamic objects are also covered by road points. In order to remove those conflicts, we use morphological operations to remove the ground labels in those regions, as can be seen in Figure 7 at the locations of the two vehicles that are present in the depicted scene. Subsequently, the grid map containing the ground truth labels is denoted as

assigning either a semantic label or the label “unknown” to each grid cell .

Figure 7.

The generation of the ground truth used for the quantitative evaluation. Three-dimensional semantic point clouds from ten frames are merged and mapped into a top-view grid.

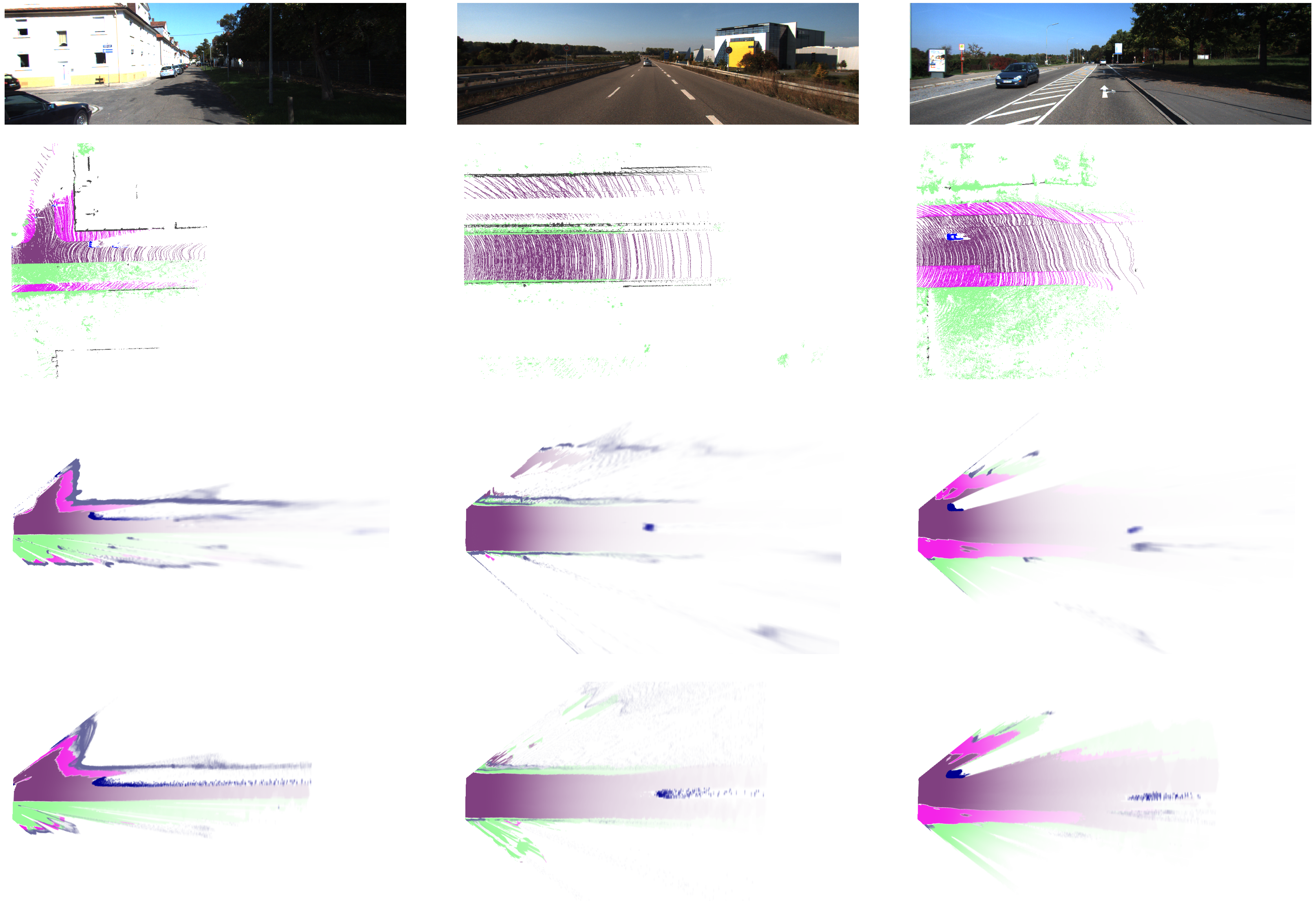

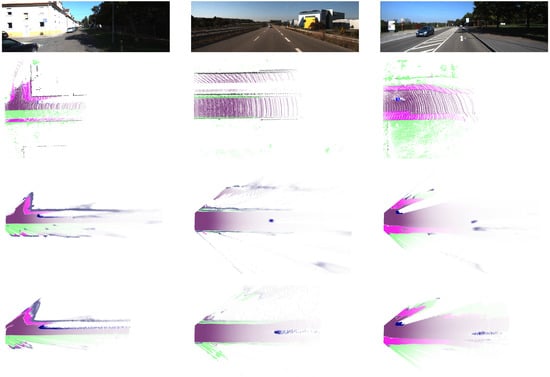

3.2. Visual Evaluation

We process the first 1000 frames for the sequences 00 to 10 in the KITTI odometry dataset. Figure 8 depicts visual impressions of the results for the sequences 00, 01, and 02. The first thing that stands out is that the detection range in both the mono- and stereo-based grid maps surpasses the one in the Lidar-based ground truth. The BBA in our resulting evidential grid maps decreases with the distance to the sensor origin, which aligns with the intuition that the uncertainty is higher at larger distances. The first scenario in the left column was captured in a suburban region in Karlsruhe and contains a series of residences on the left, a T-crossing to the left, and a sidewalk on the right that is separated from the road by terrain. The border of the residences appears to be captured better when using the stereo pipeline. As the sidewalk on the right is covered by shadows leading to low contrast in the corresponding image region, its geometry cannot be captured by either pipeline. The middle column shows a highway scenario with a vehicle in front of the ego vehicle at a distance of about 50 m. The guardrails along both the ego lane and the opposite lane can only be captured using the stereo pipeline. The leading vehicle is detected more precisely using the stereo pipeline as well. In the third column, a scenario in a rural area with a vehicle passing on the opposite lane is depicted. There is a sidewalk on each side of the road with adjacent terrain. The rough geometry of these parts can be captured in both the mono and the stereo pipeline. The passing vehicle is detected better using the stereo pipeline, whereas its shape is slightly distorted using the monocular vision pipeline due to higher inaccuracy in the depth estimation. As a general observation, it can be stated that the errors in both camera-based reconstructions are dominated by flying pixels at object boundaries that result from inconsistencies between the pixelwise semantic estimate and the depth or disparity estimation.

Figure 8.

The resulting BBA for stereo and mono images. Each column corresponds to one frame in the KITTI odometry benchmark depicted in the image in the first row. The second row shows the ground truth, the third row shows the results for stereo vision, and the last row shows the results for monocular vision.

3.3. Quantitative Evaluation

We provide a quantitative evaluation of our method based on the intersection over union per class and the overall ratio of correctly predicted cell states.

3.3.1. Intersection over Union

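The intersection over union (IoU) and the mean intersection over union (mIoU) are defined as

$$\mathrm{IoU}_{\omega} = \frac{\mathrm{TP}_{\omega}}{\mathrm{TP}_{\omega} + \mathrm{FP}_{\omega} + \mathrm{FN}_{\omega}}, \qquad \mathrm{mIoU} = \frac{1}{N} \sum_{\omega} \mathrm{IoU}_{\omega},$$

where $\mathrm{TP}_{\omega}$ denotes the number of true positive cells, $\mathrm{FP}_{\omega}$ the number of false-positive cells, and $\mathrm{FN}_{\omega}$ the number of false-negative cells of the label $\omega$, and $N$ is the number of evaluated classes. In this context, a grid cell is considered as a true positive if the class in the ground truth coincides with the class that has been assigned the highest BBA. Note, hence, that this metric does not consider the measure of uncertainty that is encoded in the BBA. Therefore, we calculate the modified intersection-over-union metrics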

based on the modified rates

Table 1 and Table 2 show the above-defined IoU metrics for the sequences 00 to 10 in the KITTI odometry benchmark. The tables contain the numbers for all considered semantic classes except for other movable objects, as this class barely occurs in the test sequences. The stereo vision pipeline outperforms the monocular vision pipeline for almost all classes. This is expected as the used stereo disparity estimation is more accurate than the monocular depth estimation. In general, the numbers for both setups are in a similar range to the ones presented in the Lidar-based semantic grid map estimation from Bieder et al. in [30]. They reach a 39.8% mean IoU with their best configuration. Our proposed method reaches 37.4% and 41.0% mean IoU in the monocular and stereo pipeline, respectively. The accuracy for small objects such as pedestrians and cyclists is very low as small errors in the range estimations have a high effect compared to the object size. Comparing the plain IoU with its counterpart incorporating the BBA, it stands out that the modified IoU is significantly higher. For the modified IoU, means of 44.7% and 48.7% are reached in the two setups. The reason for this is that higher uncertainties in wrongly classified cells lower the modified false-positive and false-negative rates and thus also the denominator in the calculation of the modified IoU. The results show that wrong classifications are attached with a higher uncertainty and that the BBA can be used as a meaningful measure for uncertainty.
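The plain per-class IoU and the mean IoU can be computed with the following minimal Python sketch. It assumes flattened label grids in which `None` marks cells without ground truth or without a prediction, and it skips such cells; this handling and all names are assumptions of the sketch, and the BBA-based modified metrics are not covered.

```python
def iou_per_class(predicted, ground_truth, classes):
    """Return {class: IoU or None} for two equally long label sequences.

    `predicted` holds the class with the highest BBA per cell. Cells where
    either label is None are skipped (an assumption of this sketch).
    """
    tp = {c: 0 for c in classes}
    fp = {c: 0 for c in classes}
    fn = {c: 0 for c in classes}
    for pred, truth in zip(predicted, ground_truth):
        if pred is None or truth is None:
            continue
        if pred == truth:
            tp[truth] += 1
        else:
            if pred in fp:
                fp[pred] += 1
            if truth in fn:
                fn[truth] += 1
    result = {}
    for c in classes:
        denominator = tp[c] + fp[c] + fn[c]
        result[c] = tp[c] / denominator if denominator else None
    return result


def mean_iou(ious):
    """Mean over all classes that occur; None if no class occurs."""
    values = [v for v in ious.values() if v is not None]
    return sum(values) / len(values) if values else None


# Hypothetical four-cell example (illustration only).
pred = ["car", "street", "street", "car"]
truth = ["car", "street", "car", None]
ious = iou_per_class(pred, truth, ["car", "street", "sidewalk"])
```

A class without any occurrence yields `None`, which corresponds to the dash used in the result tables.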

Table 1.

Class IoUs for the stereo vision pipeline in %. The dash indicates that there are no corresponding objects in the sequence. The column on the right contains the mean IoUs.

Table 2.

Class IoUs for the monocular vision pipeline in %. The dash indicates that there are no corresponding objects in the sequence. The column on the right contains the mean IoUs.

3.3.2. Ratio of Correct Labels

As a second class of metrics, we consider the ratio of correctly classified cells

where equals one if the correct label was assigned the highest BBA greater than zero and is one if the highest BBA greater than zero corresponds to the wrong label. The counterpart incorporating the BBA reads

We have calculated both ratios for sequences 00 to 10. The results are presented in Table 3 for the stereo vision pipeline and in Table 4 for the monocular vision pipeline. The numbers confirm the tendencies observed in the IoU-based evaluation. The modified ratios based on the BBA are higher than the ones that are based solely on one predicted class per cell, and the ratios of the stereo vision pipeline are slightly above the ones of the monocular vision pipeline. Besides the consistency between range estimation and semantic segmentation, the quality of the semantic segmentation itself naturally influences the final results strongly. We found that the majority of the errors in the segmentation occur in the distinction between the road and the sidewalk. Experiments showed that the ratio of correctly classified cells can be improved by up to 10% depending on the sequence when merging the two classes. Besides the Lidar-based semantic top-view maps presented in [30], we can compare our results to the hybrid approach using Lidar and RGB images from Erkent et al. presented in [14]. They achieve a ratio of correctly labeled cells of 81% in their best performing setup, indicating that our approach performs slightly better. However, note that they predict a different set of classes without uncertainty considerations.
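The ratio of correctly classified cells can be sketched in Python as follows. The plain ratio follows the description in this subsection. The mass-weighted variant is only one plausible way of incorporating the BBA and is an assumption of this sketch, not the equation used in this work; all names and numbers are hypothetical.

```python
def ratio_correct(cells):
    """Plain ratio of correct cells.

    `cells` is an iterable of (predicted_label, truth_label, mass), where
    `mass` is the highest BBA in the cell. Cells without ground truth,
    without prediction or with zero mass are skipped.
    """
    correct = wrong = 0
    for pred, truth, mass in cells:
        if pred is None or truth is None or mass <= 0.0:
            continue
        if pred == truth:
            correct += 1
        else:
            wrong += 1
    total = correct + wrong
    return correct / total if total else None


def ratio_correct_weighted(cells):
    """Assumed BBA-weighted variant: each cell counts with its winning mass."""
    correct = wrong = 0.0
    for pred, truth, mass in cells:
        if pred is None or truth is None or mass <= 0.0:
            continue
        if pred == truth:
            correct += mass
        else:
            wrong += mass
    total = correct + wrong
    return correct / total if total else None


# Hypothetical cells: the wrong prediction carries a low mass.
cells = [
    ("car", "car", 0.9),
    ("street", "street", 0.8),
    ("street", "sidewalk", 0.3),
    ("car", None, 0.5),
]
plain = ratio_correct(cells)
weighted = ratio_correct_weighted(cells)
```

In this toy example the weighted ratio exceeds the plain one because the misclassified cell carries little mass, which mirrors the tendency reported for the modified ratios.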

Table 3.

Ratio of correctly labeled grid cells for the stereo vision pipeline.

Table 4.

Ratio of correctly labeled grid cells for the monocular vision pipeline.

4. Conclusions

We presented an accurate and efficient framework for semantic evidential grid mapping based on two camera setups: monocular vision and stereo vision. Our resulting top-view representation contains evidential measures for eight semantic hypotheses, which can be seen as a refinement of the classical occupancy hypotheses free and occupied. We explicitly model uncertainties of the sensor setup-dependent range estimation in an intermediate grid representation. The mapping results are dense and smooth, yet not complete, as no estimates are given in unobserved areas. In our quantitative evaluation, we showed the benefits of our evidential model by obtaining significantly better error metrics when considering the uncertainties. This is one of the main advantages of our method compared to other publications and enables our pipeline to perform comparably well to competing approaches that use more expensive sensors such as Lidar [14,30]. The second advantage is the underlying semantic evidential representation, which makes fusion with other sensor types such as range sensors straightforward, see [1]. The main bottlenecks in our pipeline are the semantic segmentation and the range estimation in the image domain as well as the consistency between the two. The influence of the latter in particular might easily be underestimated, as inconsistencies of a few pixels already imply large distortions at higher distances.
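The sensitivity to pixel-level inconsistencies can be made concrete with a small worked example. The sketch below assumes a flat ground plane and a forward-looking pinhole camera, so that a ground point at distance z appears f * h / z pixel rows below the horizon. The focal length and camera height are illustrative, roughly KITTI-like values and are not taken from our pipeline; the function name is hypothetical.

```python
def range_error(z, pixel_shift, focal_px=721.5, cam_height=1.65):
    """Range error in metres caused by a vertical offset in the image.

    Flat-ground pinhole model (assumption): a ground point at distance z
    lies focal_px * cam_height / z rows below the horizon. Shifting it
    towards the horizon by pixel_shift rows moves it further away.
    """
    rows_below_horizon = focal_px * cam_height / z
    shifted = rows_below_horizon - pixel_shift
    if shifted <= 0:
        raise ValueError("shifted pixel lies on or above the horizon")
    return focal_px * cam_height / shifted - z

# The same offset of three pixels at a near and a far ground point.
err_near = range_error(10.0, 3)
err_far = range_error(50.0, 3)
```

Under these assumptions, an offset of three pixels displaces a ground point at 10 m by a few decimetres, but a ground point at 50 m by several metres.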

In future work, we will focus on developing a refinement method to improve the consistency between range and semantic estimation in the image domain. In this regard, it might also be promising to combine both in a mutual aid network to achieve a higher consistency in the first place. We will then fuse the presented vision-based semantic evidential grid maps with evidential grid maps from range sensors based on the method described in [1]. Furthermore, we will incorporate the fused grid maps into a dynamic grid mapping framework that is able to both accumulate a semantic evidential map and track dynamic traffic participants. Finally, we aim to provide a real-time capable implementation of our framework by utilizing massive parallelization on state-of-the-art GPUs.

Author Contributions

Conceptualization, S.R., J.B. and S.W.; methodology, S.R., J.B.; software, S.R., Y.W.; validation, S.R., J.B., S.W. and Y.W.; formal analysis, S.R. and Y.W.; investigation, S.R. and Y.W.; resources, S.R.; data curation, S.R. and Y.W.; writing—original draft preparation, S.R. and Y.W.; writing—review and editing, S.R.; visualization, S.R. and Y.W.; supervision, C.S.; project administration, C.S.; funding acquisition, S.R. All authors have read and agreed to the published version of the manuscript.

Funding

We acknowledge the support from the KIT-Publication Fund of the Karlsruhe Institute of Technology.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Richter, S.; Wirges, S.; Königshof, H.; Stiller, C. Fusion of range measurements and semantic estimates in an evidential framework/Fusion von Distanzmessungen und semantischen Größen im Rahmen der Evidenztheorie. Tm-Tech. Mess. 2019, 86, 102–106.

2. Shafer, G. A Mathematical Theory of Evidence; Princeton University Press: Princeton, NJ, USA, 1976; Volume 42.

3. Elfes, A. Using Occupancy Grids for Mobile Robot Perception and Navigation. Computer 1989, 22, 46–57.

4. Nuss, D.; Reuter, S.; Thom, M.; Yuan, T.; Krehl, G.; Maile, M.; Gern, A.; Dietmayer, K. A Random Finite Set Approach for Dynamic Occupancy Grid Maps with Real-time Application. Int. J. Robot. Res. 2018, 37, 841–866.

5. Steyer, S.; Tanzmeister, G.; Wollherr, D. Grid-Based Environment Estimation Using Evidential Mapping and Particle Tracking. IEEE Trans. Intell. Veh. 2018, 3, 384–396.

6. Badino, H.; Franke, U. Free Space Computation Using Stochastic Occupancy Grids and Dynamic Programming; Technical Report; Citeseer: University Park, PA, USA, 2007.

7. Perrollaz, M.; Spalanzani, A.; Aubert, D. Probabilistic Representation of the Uncertainty of Stereo-Vision and Application to Obstacle Detection. In Proceedings of the IEEE Intelligent Vehicles Symposium, La Jolla, CA, USA, 21–24 June 2010; pp. 313–318.

8. Pocol, C.; Nedevschi, S.; Meinecke, M.M. Obstacle Detection Based on Dense Stereovision for Urban ACC Systems. In Proceedings of the 5th International Workshop on Intelligent Transportation, Hamburg, Germany, 18–19 March 2008.

9. Danescu, R.; Pantilie, C.; Oniga, F.; Nedevschi, S. Particle Grid Tracking System Stereovision Based Obstacle Perception in Driving Environments. IEEE Intell. Transp. Syst. Mag. 2012, 4, 6–20.

10. Yu, C.; Cherfaoui, V.; Bonnifait, P. Evidential Occupancy Grid Mapping with Stereo-Vision. In Proceedings of the IEEE Intelligent Vehicles Symposium, Seoul, Korea, 28 June–1 July 2015; pp. 712–717.

11. Giovani, B.V.; Victorino, A.C.; Ferreira, J.V. Stereo Vision for Dynamic Urban Environment Perception Using Semantic Context in Evidential Grid. In Proceedings of the IEEE Conference on Intelligent Transportation Systems, Gran Canaria, Spain, 15–18 September 2015; pp. 2471–2476.

12. Valente, M.; Joly, C.; de la Fortelle, A. Fusing Laser Scanner and Stereo Camera in Evidential Grid Maps. In Proceedings of the 2018 15th International Conference on Control, Automation, Robotics and Vision (ICARCV), Singapore, 18–21 November 2018.

13. Thomas, J.; Tatsch, J.; Van Ekeren, W.; Rojas, R.; Knoll, A. Semantic grid-based road model estimation for autonomous driving. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019.

14. Erkent, O.; Wolf, C.; Laugier, C.; Gonzalez, D.S.; Cano, V.R. Semantic Grid Estimation with a Hybrid Bayesian and Deep Neural Network Approach. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 888–895.

15. Lu, C.; van de Molengraft, M.J.G.; Dubbelman, G. Monocular Semantic Occupancy Grid Mapping With Convolutional Variational Encoder–Decoder Networks. IEEE Robot. Autom. Lett. 2019, 4, 445–452.

16. Eigen, D.; Puhrsch, C.; Fergus, R. Depth map prediction from a single image using a multi-scale deep network. Advances in neural information processing systems. arXiv 2014, arXiv:1406.2283.

17. Liu, F.; Shen, C.; Lin, G.; Reid, I. Learning depth from single monocular images using deep convolutional neural fields. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 2024–2039.

18. Fu, H.; Gong, M.; Wang, C.; Batmanghelich, K.; Tao, D. Deep ordinal regression network for monocular depth estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2002–2011.

19. Alhashim, I.; Wonka, P. High quality monocular depth estimation via transfer learning. arXiv 2018, arXiv:1812.11941.

20. Zhou, T.; Brown, M.; Snavely, N.; Lowe, D.G. Unsupervised learning of depth and ego-motion from video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1851–1858.

21. Godard, C.; Mac Aodha, O.; Brostow, G.J. Unsupervised monocular depth estimation with left-right consistency. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 270–279.

22. Qiao, S.; Zhu, Y.; Adam, H.; Yuille, A.; Chen, L.C. ViP-DeepLab: Learning Visual Perception with Depth-aware Video Panoptic Segmentation. arXiv 2020, arXiv:2012.05258.

23. Richter, S.; Beck, J.; Wirges, S.; Stiller, C. Semantic Evidential Grid Mapping based on Stereo Vision. In Proceedings of the 2020 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), Karlsruhe, Germany, 14–16 September 2020; pp. 179–184.

24. Bertalmio, M.; Bertozzi, A.; Sapiro, G. Navier-Stokes, Fluid Dynamics, and Image and Video Inpainting. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2001, Kauai, HI, USA, 8–14 December 2001; Volume 1, pp. 1–355.

25. Yguel, M.; Aycard, O.; Laugier, C. Efficient GPU-based construction of occupancy grids using several laser range-finders. Int. J. Veh. Auton. Syst. 2008, 6, 48–83.

26. Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

27. Zhang, F.; Prisacariu, V.; Yang, R.; Torr, P.H.S. GA-Net: Guided Aggregation Net for End-To-End Stereo Matching. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 185–194.

28. Zhu, Y.; Sapra, K.; Reda, F.A.; Shih, K.J.; Newsam, S.; Tao, A.; Catanzaro, B. Improving semantic segmentation via video propagation and label relaxation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 8856–8865.

29. Behley, J.; Garbade, M.; Milioto, A.; Quenzel, J.; Behnke, S.; Stachniss, C.; Gall, J. SemanticKITTI: A Dataset for Semantic Scene Understanding of LiDAR Sequences. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019.

30. Bieder, F.; Wirges, S.; Janosovits, J.; Richter, S.; Wang, Z.; Stiller, C. Exploiting Multi-Layer Grid Maps for Surround-View Semantic Segmentation of Sparse LiDAR Data. arXiv 2020, arXiv:2005.06667.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).