Evaluating the Accuracy of Virtual Reality Trackers for Computing Spatiotemporal Gait Parameters

Abstract

1. Introduction

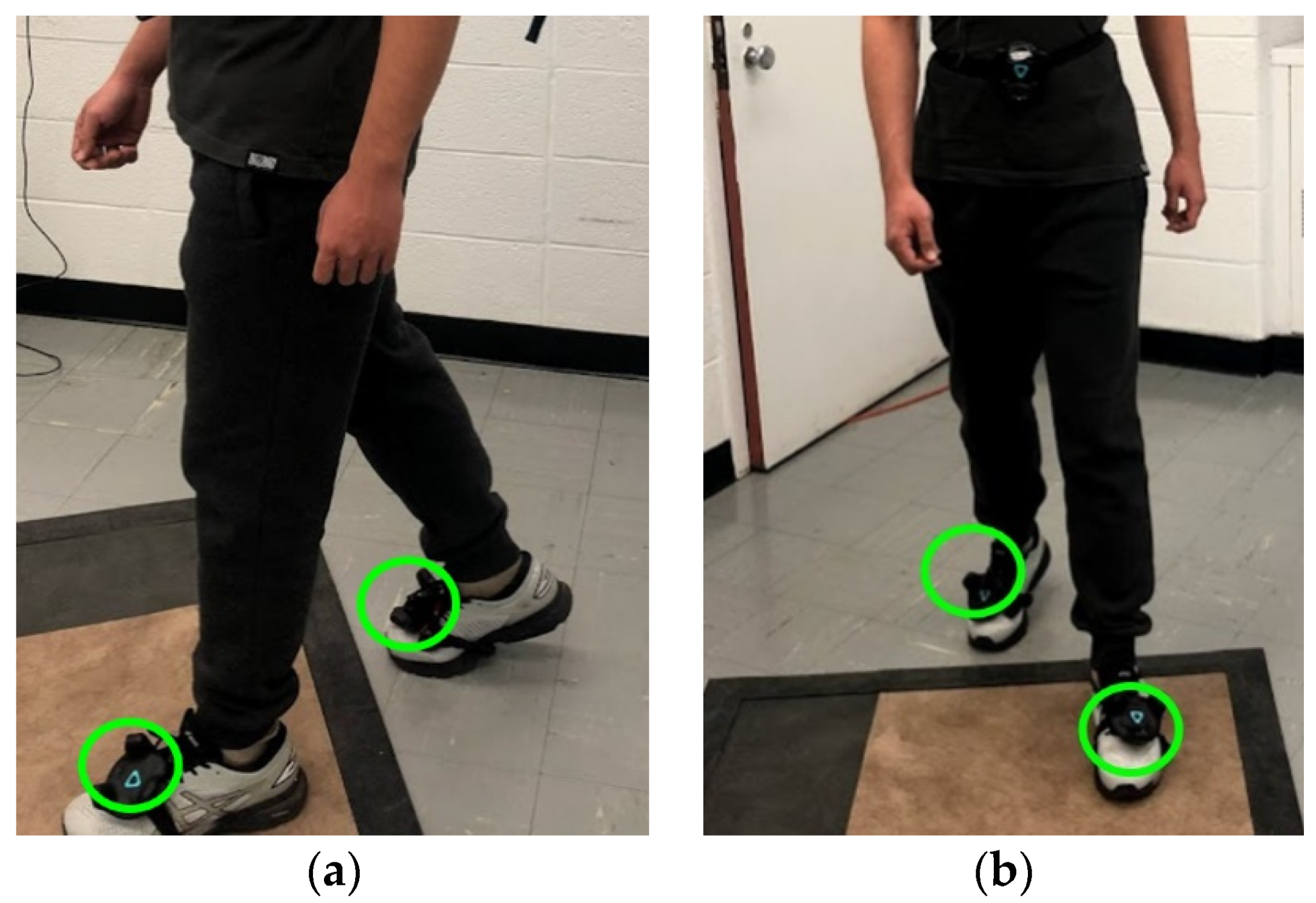

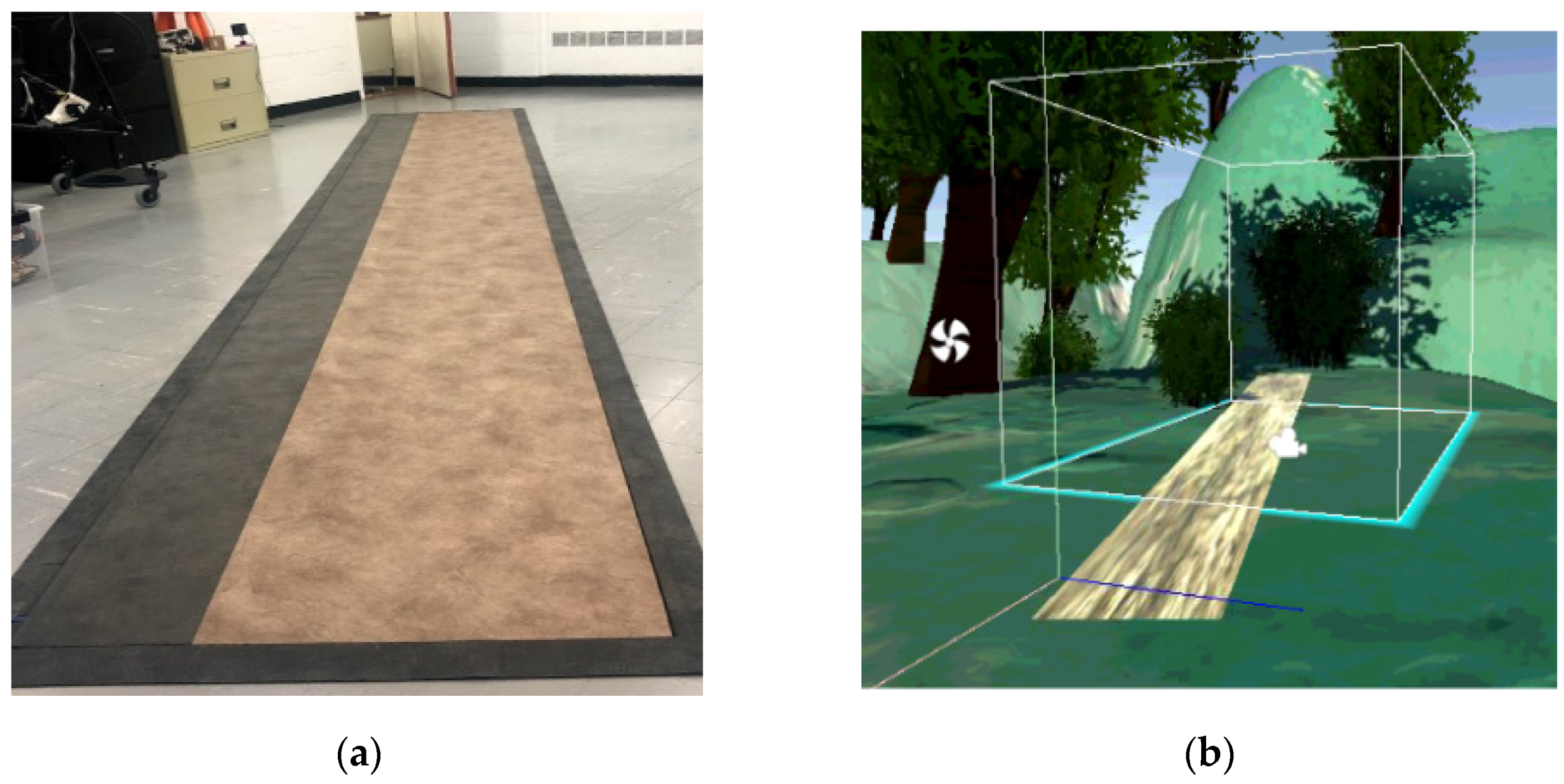

2. Materials and Methods

2.1. Procedure

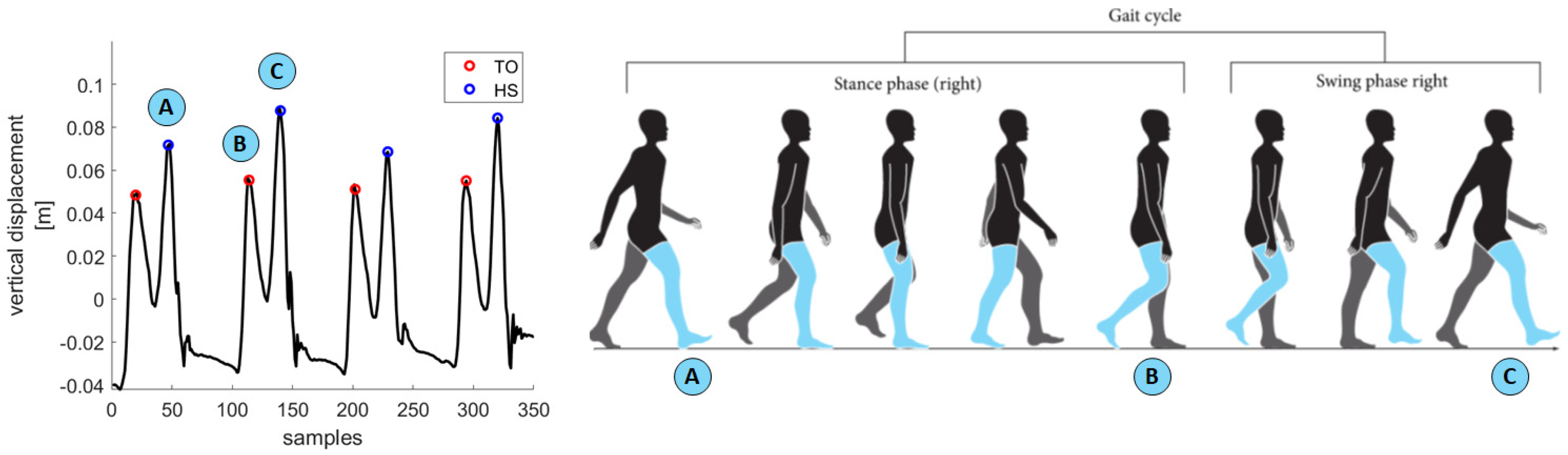

2.2. Event Detection

2.2.1. VR Event Detection

2.2.2. Walkway Event Detection

2.3. Gait Features

2.3.1. VR Gait Features Evaluation

2.3.2. Walkway Gait Features Evaluation

2.4. Validation Procedure

3. Results

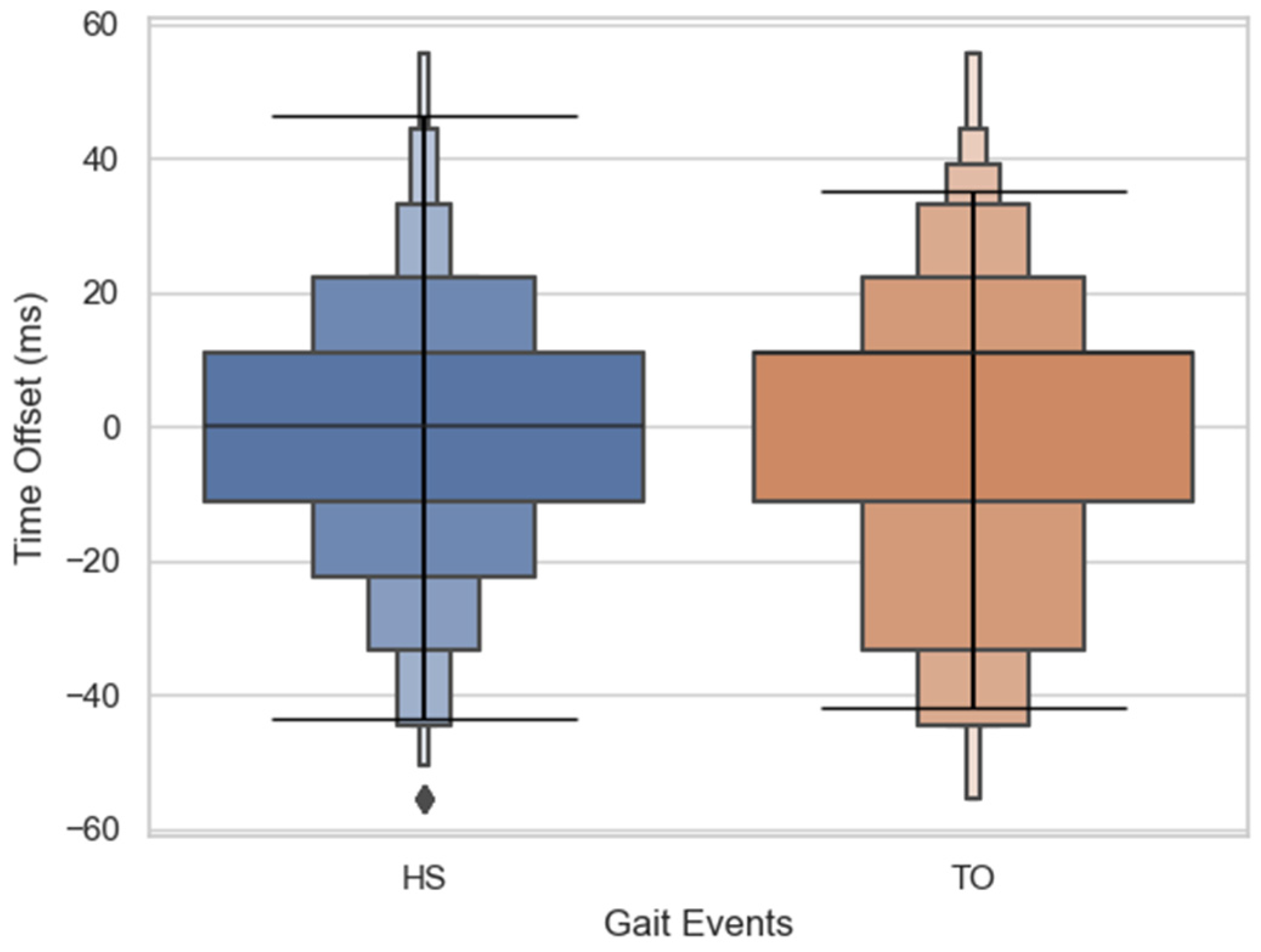

3.1. Validation of Gait Events

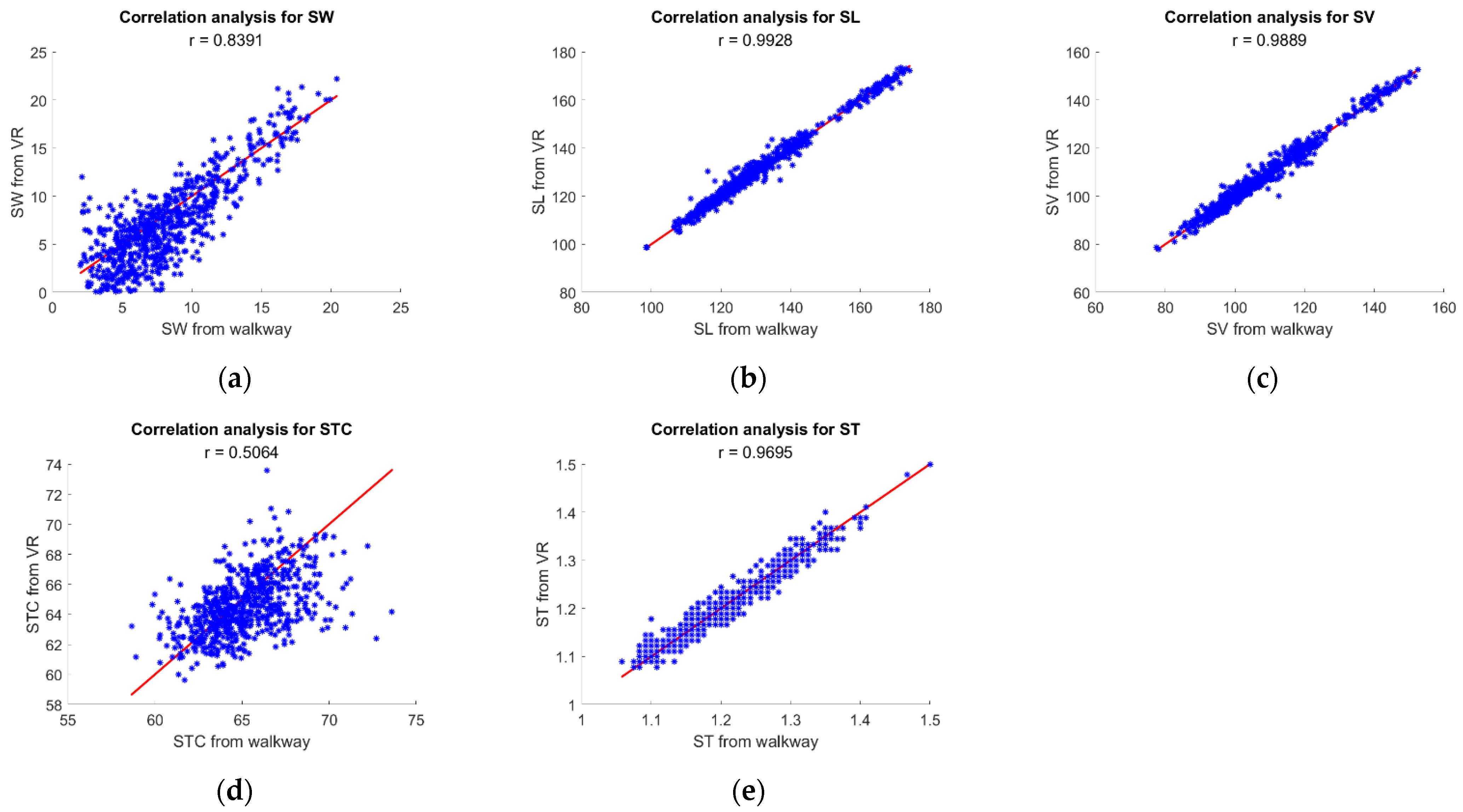

3.2. Validation of Gait Features

4. Discussion

4.1. Gait Events Detection

4.2. Gait Features Evaluation

4.3. Limits

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Shoe Size | US 6 | US 6 | US 9 | US 9 | US 10 | US 10 | US 12 | US 12 |
|---|---|---|---|---|---|---|---|---|
| Shoe Side | L | R | L | R | L | R | L | R |
| X [mm] | 211.04 | 214.13 | 225.16 | 226.76 | 218.76 | 241.97 | 243.43 | 240.32 |
| Y [mm] | 4.21 | 12.31 | 14.31 | −11.10 | 3.00 | −12.94 | 7.69 | 14.64 |
| Z [mm] | −85.98 | −86.63 | −99.76 | −94.29 | −91.85 | −99.65 | 98.95 | −88.98 |
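The table above lists X, Y and Z offsets for each shoe size and side, but in this version of the text it does not say which frame they are expressed in or how they are applied. A minimal sketch, assuming each offset is a fixed vector in the tracker's local frame pointing from the tracker origin to a reference point on the shoe, rotates the offset by the tracker orientation and adds it to the tracker position. The helper names (`quat_to_matrix`, `foot_point`), the (w, x, y, z) quaternion convention and the use of millimetres for tracker positions are illustrative assumptions, not the authors' implementation.

```python
import math

# Offsets [mm] copied as printed in the Appendix A table, keyed by (shoe size, side).
OFFSETS_MM = {
    ("US 6", "L"): (211.04, 4.21, -85.98),
    ("US 6", "R"): (214.13, 12.31, -86.63),
    ("US 9", "L"): (225.16, 14.31, -99.76),
    ("US 9", "R"): (226.76, -11.10, -94.29),
    ("US 10", "L"): (218.76, 3.00, -91.85),
    ("US 10", "R"): (241.97, -12.94, -99.65),
    ("US 12", "L"): (243.43, 7.69, 98.95),
    ("US 12", "R"): (240.32, 14.64, -88.98),
}


def quat_to_matrix(w, x, y, z):
    """3x3 rotation matrix of a unit quaternion (w, x, y, z)."""
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def foot_point(tracker_pos_mm, tracker_quat, size, side):
    """Hypothetical helper: world position of the shoe reference point.

    Assumes the tabulated offset is expressed in the tracker's local frame,
    so p_foot = p_tracker + R(q) @ offset.
    """
    R = quat_to_matrix(*tracker_quat)
    off = OFFSETS_MM[(size, side)]
    return tuple(
        tracker_pos_mm[i] + sum(R[i][j] * off[j] for j in range(3))
        for i in range(3)
    )


if __name__ == "__main__":
    # Identity orientation: the foot point is simply tracker position + offset.
    print(foot_point((0.0, 0.0, 0.0), (1.0, 0.0, 0.0, 0.0), "US 9", "R"))
    # 90 degree rotation about the Z axis: the offset's X and Y components swap roles.
    s = math.sqrt(0.5)
    print(foot_point((100.0, 0.0, 0.0), (s, 0.0, 0.0, s), "US 6", "L"))
```

Keeping the offset in the tracker frame means a single calibration per shoe size stays valid however the tracker is oriented during walking, which is why this form is assumed here.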


| | Camera-Based Systems | IMUs | Walkways | VR |
|---|---|---|---|---|
| Collection space | Dedicated lab | Any environment | Flat surfaces | Any indoor space |
| Setup time | 10 to 20 min depending on marker setup, plus initial configuration | A few minutes for calibration and sensor placement | A few minutes for initial configuration | A few minutes for initial configuration |
| User preparation | Several minutes for marker placement and identification | A few minutes for sensor placement | No preparation | A few minutes for sensor placement |
| Sensors/markers needed to track a single segment | 3 | 1 | - | 1 |
| Portability | No | Wearable | Portable | Portable |
| Cost | Very expensive | Affordable | Expensive | Affordable |
| Type of measurement | Infrared-based | Inertial and magnetic | Pressure-based | Laser-based |
| Gait feature accuracy (foot displacement) | ~0.2 mm | ~50 mm | ~1 mm | To be investigated |
| Post-processing time | Up to one hour, depending on marker setup | Minutes | Minutes | Minutes |
| Covered gait features | Spatiotemporal features; 3D displacement; joint kinematics | Spatiotemporal parameters; joint kinematics | Spatiotemporal features; 2D displacement | Spatiotemporal features; 3D displacement; joint displacement |

| Gait Events | Heel Strike | Toe Off |
|---|---|---|
| Cutoff Time Window [ms] | 33.3 | 33.3 |
| Mean Offset [ms] | −2.6 ± 16.9 | 4.2 ± 17.1 |
| Mean Absolute Offset [ms] | 13.4 ± 10.5 | 13.7 ± 11.0 |
| Sensitivity [%] | 94.2 | 88.3 |
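The event table reports a cutoff window, signed and absolute offsets, and a sensitivity. One plausible way to obtain numbers of this kind, sketched here under assumptions this version of the text does not state, is to pair each walkway (reference) event with the nearest VR-detected event inside a symmetric ±33.3 ms window, take signed offsets as VR minus walkway, and report sensitivity as the percentage of reference events that found a match. The greedy matching rule and the function names `match_events` and `summarize` are hypothetical.

```python
import statistics


def match_events(reference_ms, detected_ms, cutoff_ms=33.3):
    """Greedily pair each reference event with the nearest unused detected
    event within +/- cutoff_ms.

    Returns (signed offsets detected - reference, sensitivity in percent).
    Reference events without a match inside the window count as misses.
    """
    used = set()
    offsets = []
    for ref in sorted(reference_ms):
        best = None
        for i, det in enumerate(detected_ms):
            if i in used or abs(det - ref) > cutoff_ms:
                continue
            if best is None or abs(det - ref) < abs(detected_ms[best] - ref):
                best = i
        if best is not None:
            used.add(best)
            offsets.append(detected_ms[best] - ref)
    sensitivity = 100.0 * len(offsets) / len(reference_ms)
    return offsets, sensitivity


def summarize(offsets):
    """Mean and sample SD of the signed offsets and of their absolute values."""
    abs_off = [abs(o) for o in offsets]
    return (statistics.mean(offsets), statistics.stdev(offsets),
            statistics.mean(abs_off), statistics.stdev(abs_off))


if __name__ == "__main__":
    walkway_hs = [0.0, 1000.0, 2000.0, 3000.0]  # made-up reference heel strikes [ms]
    vr_hs = [10.0, 985.0, 2050.0, 3005.0]       # made-up VR detections [ms]
    offsets, sens = match_events(walkway_hs, vr_hs)
    print(offsets, sens)
    print(summarize(offsets))
```

With these made-up timestamps the 2050 ms detection falls outside the window, so three of the four heel strikes are matched (sensitivity 75.0 %) with offsets of 10.0, −15.0 and 5.0 ms. Reporting the absolute offset alongside the signed one separates systematic bias (the signed mean) from typical timing error (the absolute mean).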

| | SW [cm] | SL [cm] | ST [ms] | SV [cm/s] | Stance-Swing [%] |
|---|---|---|---|---|---|
| Mean Offset | −1.0 ± 2.4 | 0.3 ± 1.9 | 0.4 ± 17.4 | 0.2 ± 2.2 | 0.5 ± 2.1 |
| Mean Absolute Error | 2.0 ± 1.6 | 1.4 ± 1.4 | 13.4 ± 11.1 | 1.6 ± 1.5 | 1.6 ± 1.4 |
| RMS Error | 2.6 | 2.0 | 17.4 | 2.2 | 2.1 |
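The gait-feature table combines a signed mean offset ± SD, a mean absolute error ± SD and an RMS error. The sketch below, assuming per-step errors are defined as VR minus walkway, computes all three from paired measurements. It also uses the identity RMSE² = mean² + population variance to check that the reported RMS values agree with the reported means and SDs. The function names and the `REPORTED` dictionary are illustrative, not part of the original work.

```python
import math
import statistics


def error_metrics(vr, walkway):
    """Per-step errors e = vr - walkway (assumed sign convention).

    Returns (mean offset, SD of offset, mean absolute error, SD of |e|, RMS error).
    """
    e = [v - w for v, w in zip(vr, walkway)]
    abs_e = [abs(x) for x in e]
    rms = math.sqrt(sum(x * x for x in e) / len(e))
    return (statistics.mean(e), statistics.stdev(e),
            statistics.mean(abs_e), statistics.stdev(abs_e), rms)


def rms_from_summary(mean, sd):
    """RMS implied by a mean and an SD: sqrt(mean^2 + sd^2).

    Exact when sd is the population SD; with the sample SD it slightly
    overestimates for small n and converges for large n.
    """
    return math.hypot(mean, sd)


# (mean offset, SD, reported RMS error) copied from the gait-feature table.
REPORTED = {
    "SW [cm]": (-1.0, 2.4, 2.6),
    "SL [cm]": (0.3, 1.9, 2.0),
    "ST [ms]": (0.4, 17.4, 17.4),
    "SV [cm/s]": (0.2, 2.2, 2.2),
    "Stance-Swing [%]": (0.5, 2.1, 2.1),
}

if __name__ == "__main__":
    for name, (mean, sd, rms) in REPORTED.items():
        print(f"{name}: reported {rms}, implied {rms_from_summary(mean, sd):.2f}")
```

For every column, sqrt(mean² + SD²) lies within 0.1 of the reported RMS error (for example, sqrt(1.0² + 2.4²) = 2.6 cm for SW), which is what rounding of the tabulated values allows. Because the mean offsets are small relative to the SDs, the RMS errors are driven almost entirely by step-to-step scatter rather than by a systematic bias.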

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guaitolini, M.; Petros, F.E.; Prado, A.; Sabatini, A.M.; Agrawal, S.K. Evaluating the Accuracy of Virtual Reality Trackers for Computing Spatiotemporal Gait Parameters. Sensors 2021, 21, 3325. https://doi.org/10.3390/s21103325
