Collective Perception: A Safety Perspective †

Abstract

1. Introduction

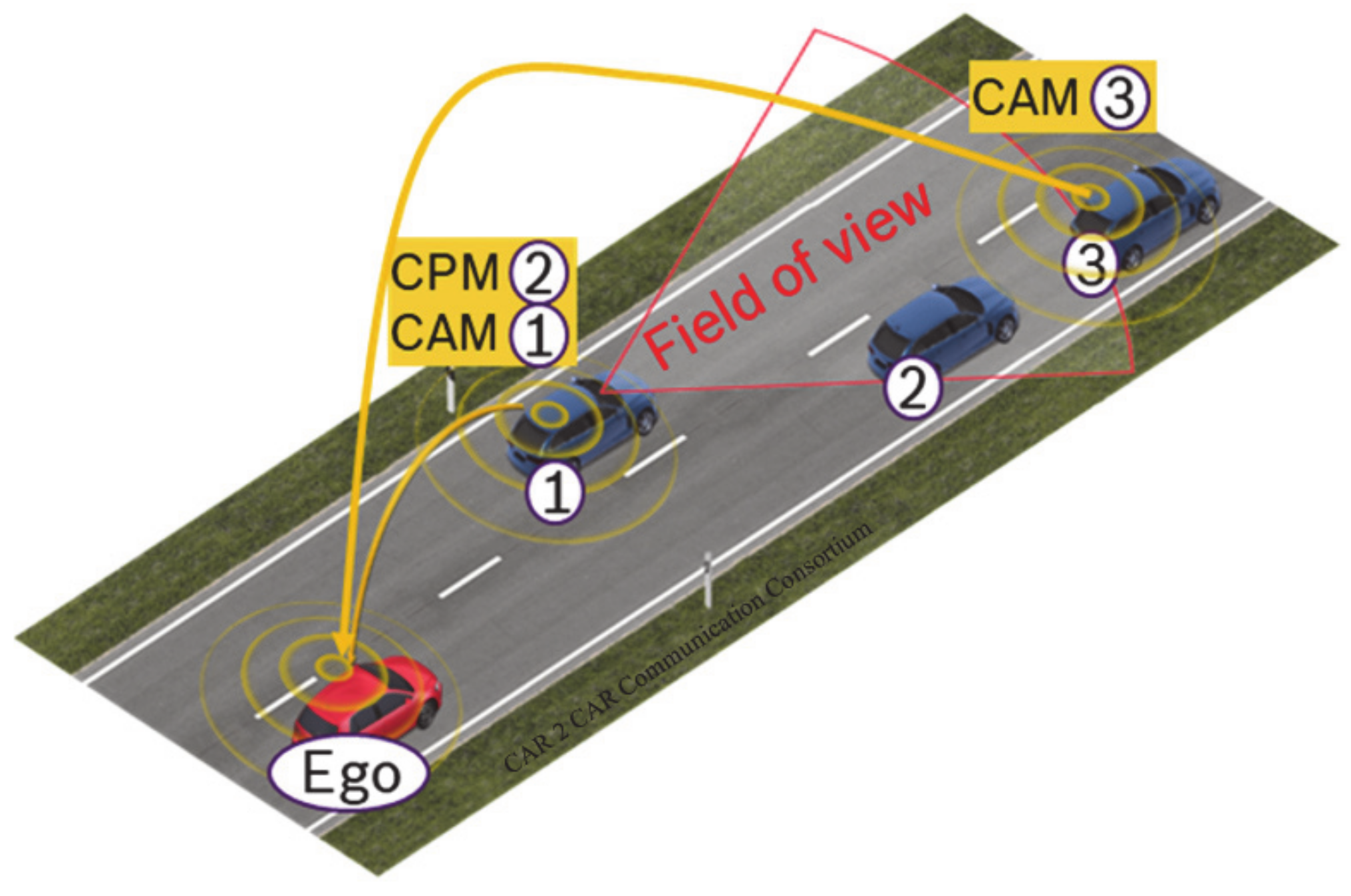

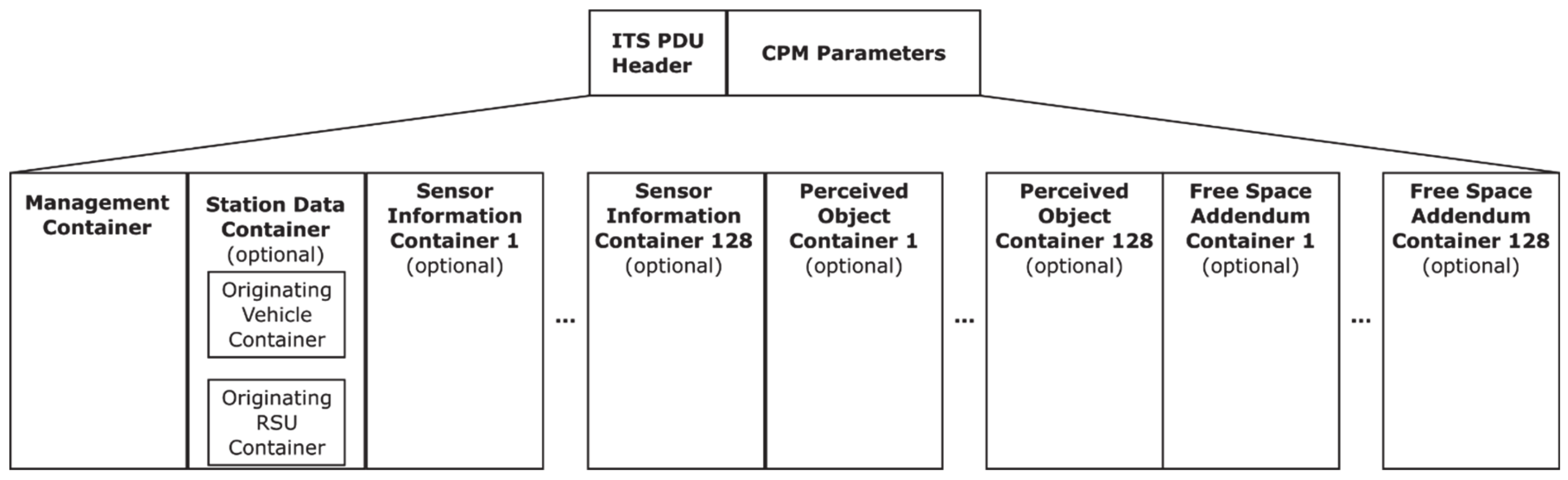

2. Collective Perception

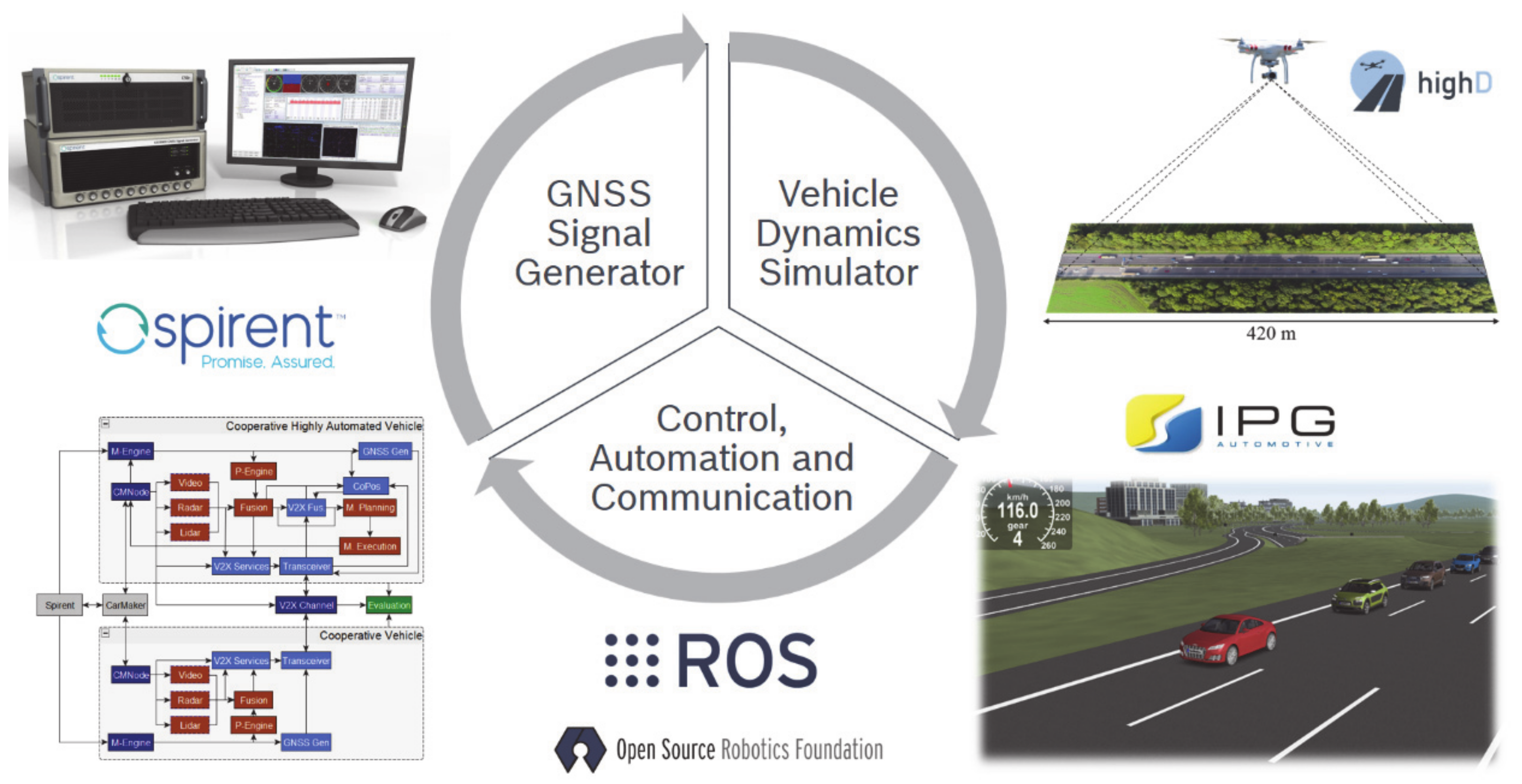

3. Testing Environment

3.1. Traffic and Vehicle Dynamics

3.2. Vehicle Sensors

- Positioning sensors: The main technologies for estimating the ego-position of a traffic participant are satellite navigation, odometry, inertial measurement units (IMUs), and simultaneous localization and mapping (SLAM). While IMUs and odometry are modeled only in a simplified way in TEPLITS, the state-of-the-art absolute positioning sensor, GNSS, is modeled in great detail. For this purpose, the GNSS signal generator SPIRENT GSS7000, developed by Spirent Communications, is used. It allows control of a wide range of parameters, such as tropospheric and ionospheric errors, satellite clock and ephemeris errors, obscuration and multipath, receiver antenna characteristics, and RF interference, for any given point in time and space. It further supports multi-constellation operation, including GPS, GLONASS, BeiDou, and Galileo signals. SLAM has not been implemented in TEPLITS thus far.

- Object tracking sensors: Object tracking sensors, such as radars, LIDARs, and cameras, are implemented in CarMaker, and their data are output via the integrated ROS interfaces. For partially automated vehicles, the human driver must also be considered as an additional, or even the primary, sensor, since it is the driver who releases (i.e., authorizes) driving functions such as merging onto a highway. For this reason, the human driver can also be modeled as a sensor in CarMaker [19]. Note, however, that the driver-sensor data are processed separately and are not merged into the vehicle’s environmental model. All of these object tracking sensors have also been implemented in ROS, based on Bosch-internal sensor models, so that TEPLITS can be operated independently of CarMaker.

- V2X communication: TEPLITS offers two ways of modeling V2X communication: (i) a full-stack implementation, and (ii) analytical models developed by the Universidad Miguel Hernandez in Elche, Spain. The latter is generally preferable when the focus is not an in-depth analysis of communication performance, because the full-stack software implementation, combined with all the other simulation components, tends to slow down the simulation considerably. The analytical models for IEEE 802.11p [24] and the C-V2X autonomous mode (Mode 4) [25] have been validated against state-of-the-art network simulators and are suitable for most applications.
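To illustrate why the analytical option is so much cheaper than a full-stack simulation, the sketch below replaces packet-level simulation of the radio stack with a single Bernoulli draw per link, using a packet delivery ratio (PDR) that depends only on the transmitter-receiver distance. This is a simplified stand-in written under stated assumptions: the PDR curve values are hypothetical placeholders, not outputs of the models in [24] or [25] (which account for effects this sketch ignores, such as channel load), and the names `pdr_at`, `deliver`, and `PDR_CURVE` are illustrative only.

```python
import bisect
import math
import random

# HYPOTHETICAL PDR-vs-distance curve: (distance in m, PDR in [0, 1]).
# Placeholder values for illustration only; they are NOT outputs of the
# analytical 802.11p or C-V2X models cited in the text.
PDR_CURVE = [(0.0, 1.0), (100.0, 0.98), (200.0, 0.93), (300.0, 0.80),
             (400.0, 0.55), (500.0, 0.25), (600.0, 0.0)]
_DISTANCES = [d for d, _ in PDR_CURVE]


def pdr_at(distance_m: float) -> float:
    """Linearly interpolate the PDR at a transmitter-receiver distance."""
    if distance_m <= _DISTANCES[0]:
        return PDR_CURVE[0][1]
    if distance_m >= _DISTANCES[-1]:
        return PDR_CURVE[-1][1]
    i = bisect.bisect_right(_DISTANCES, distance_m)
    (d0, p0), (d1, p1) = PDR_CURVE[i - 1], PDR_CURVE[i]
    return p0 + (p1 - p0) * (distance_m - d0) / (d1 - d0)


def deliver(tx_pos, receivers, rng):
    """Decide which receivers get one broadcast message.

    Instead of simulating the radio stack, each link succeeds with
    probability pdr_at(distance), i.e., one Bernoulli draw per receiver.
    `receivers` maps a receiver id to its (x, y) position in metres.
    """
    received = []
    for rx_id, rx_pos in receivers.items():
        if rng.random() < pdr_at(math.dist(tx_pos, rx_pos)):
            received.append(rx_id)
    return received


if __name__ == "__main__":
    rng = random.Random(42)  # seeded for reproducible runs
    cars = {"car1": (50.0, 3.5), "car2": (350.0, 0.0), "car3": (650.0, 0.0)}
    print(deliver((0.0, 0.0), cars, rng))
```

The per-message cost here is one distance computation and one random draw per receiver, independent of protocol details; changing the access technology would, in this simplified picture, only mean changing the curve.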

3.3. Vehicle Perception and Control

4. Evaluation Metrics

4.1. Network-Related Metrics

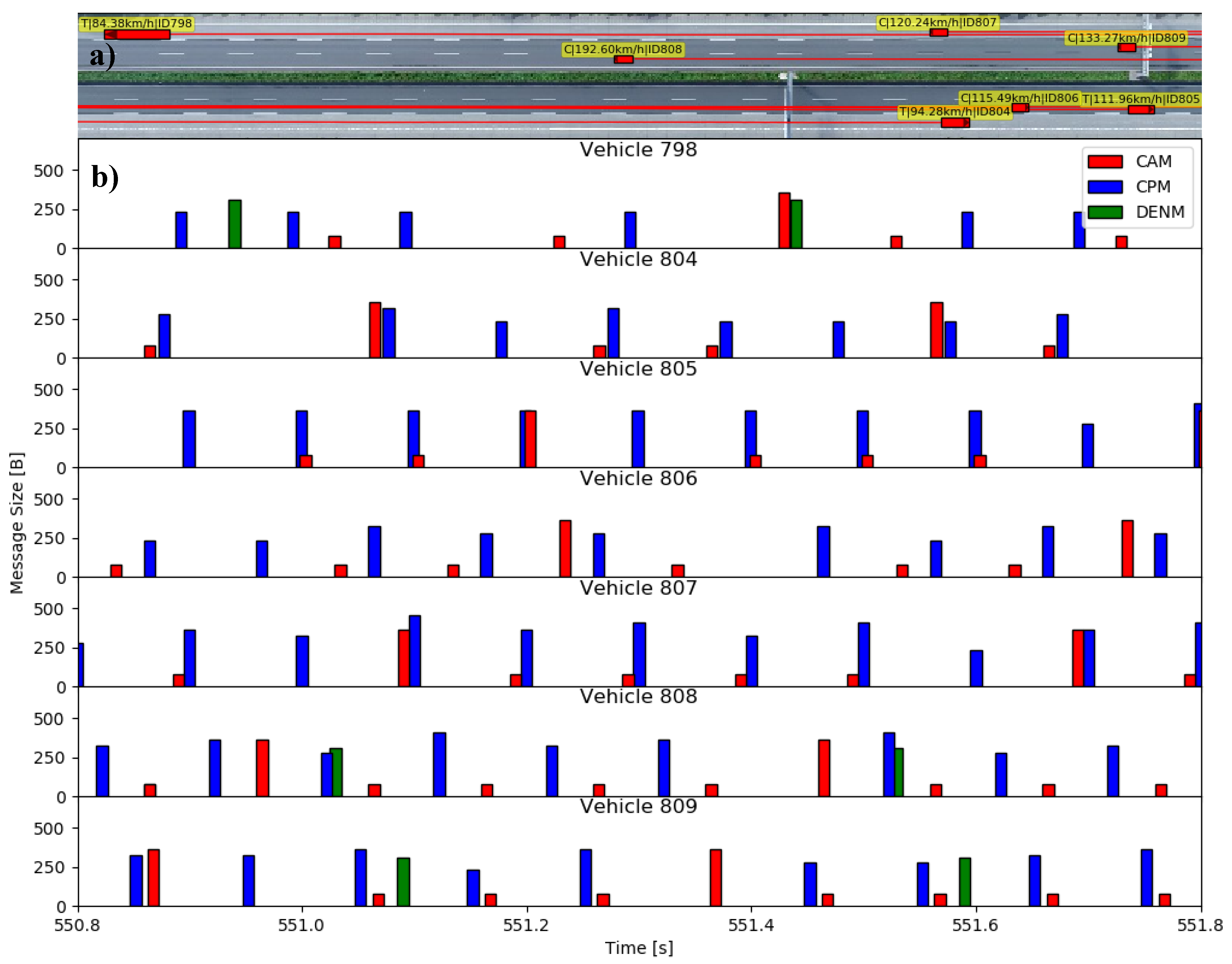

4.1.1. Message Size and Frequency

4.1.2. Channel Busy Ratio (CBR)

4.1.3. Packet Delivery Ratio (PDR)

4.2. Perception-Related Metrics

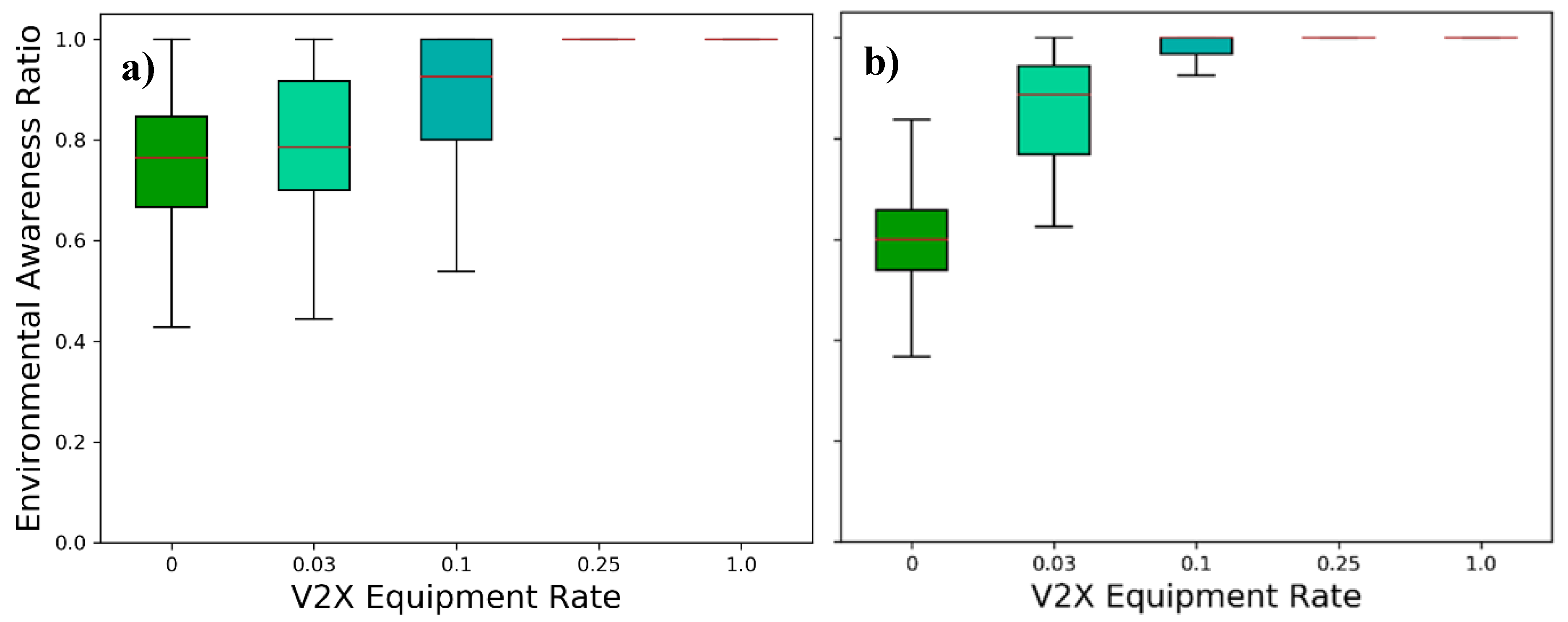

4.2.1. Environmental Awareness Ratio (EAR)

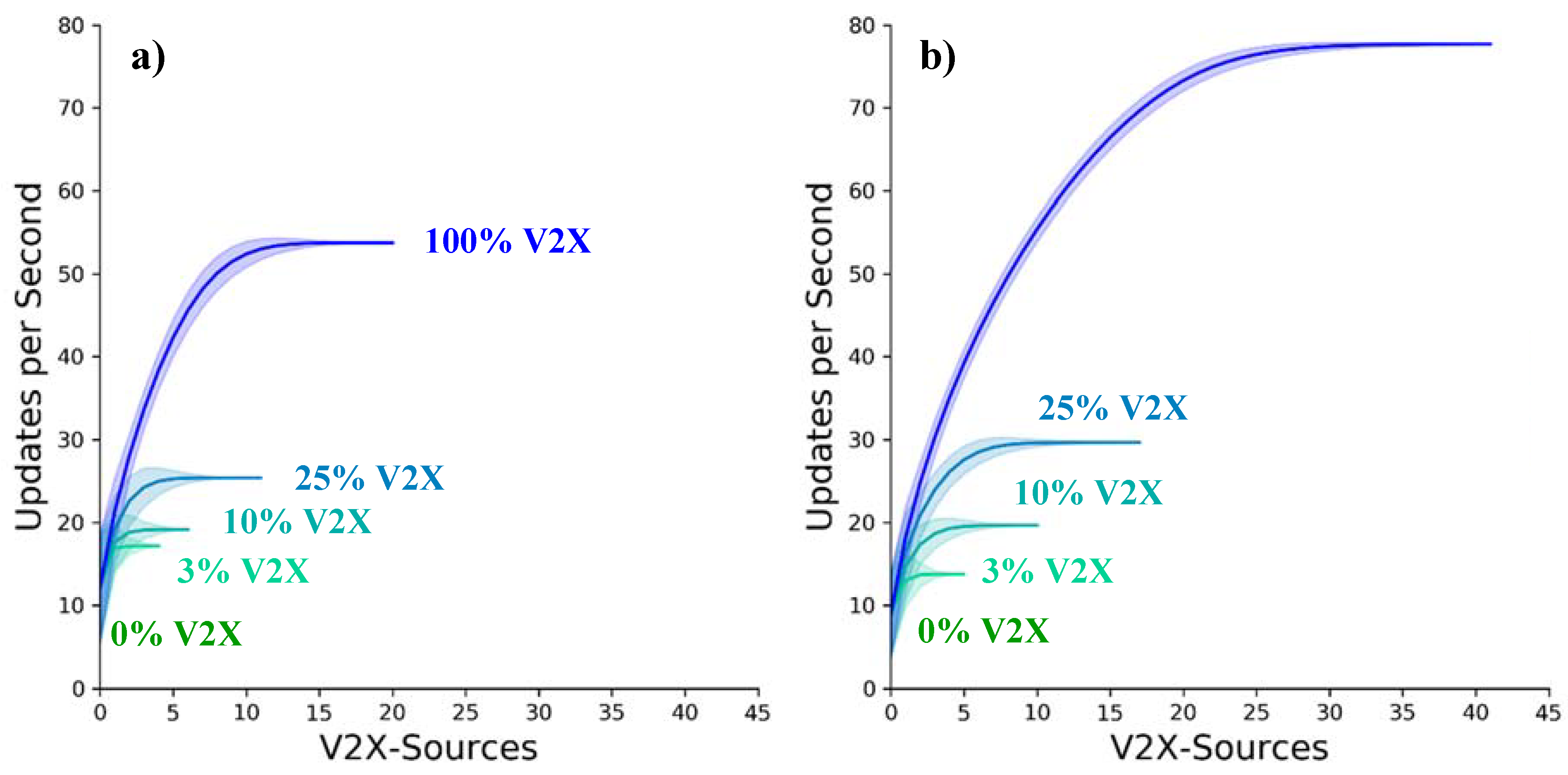

4.2.2. Detected Object Redundancy (DOR), Update Period, and Age of Information (AOI)

4.2.3. Object Tracking Accuracy (OTA)

4.3. Safety, Efficiency, and Comfort Metrics

4.3.1. Environmental Risk Awareness (ERA)

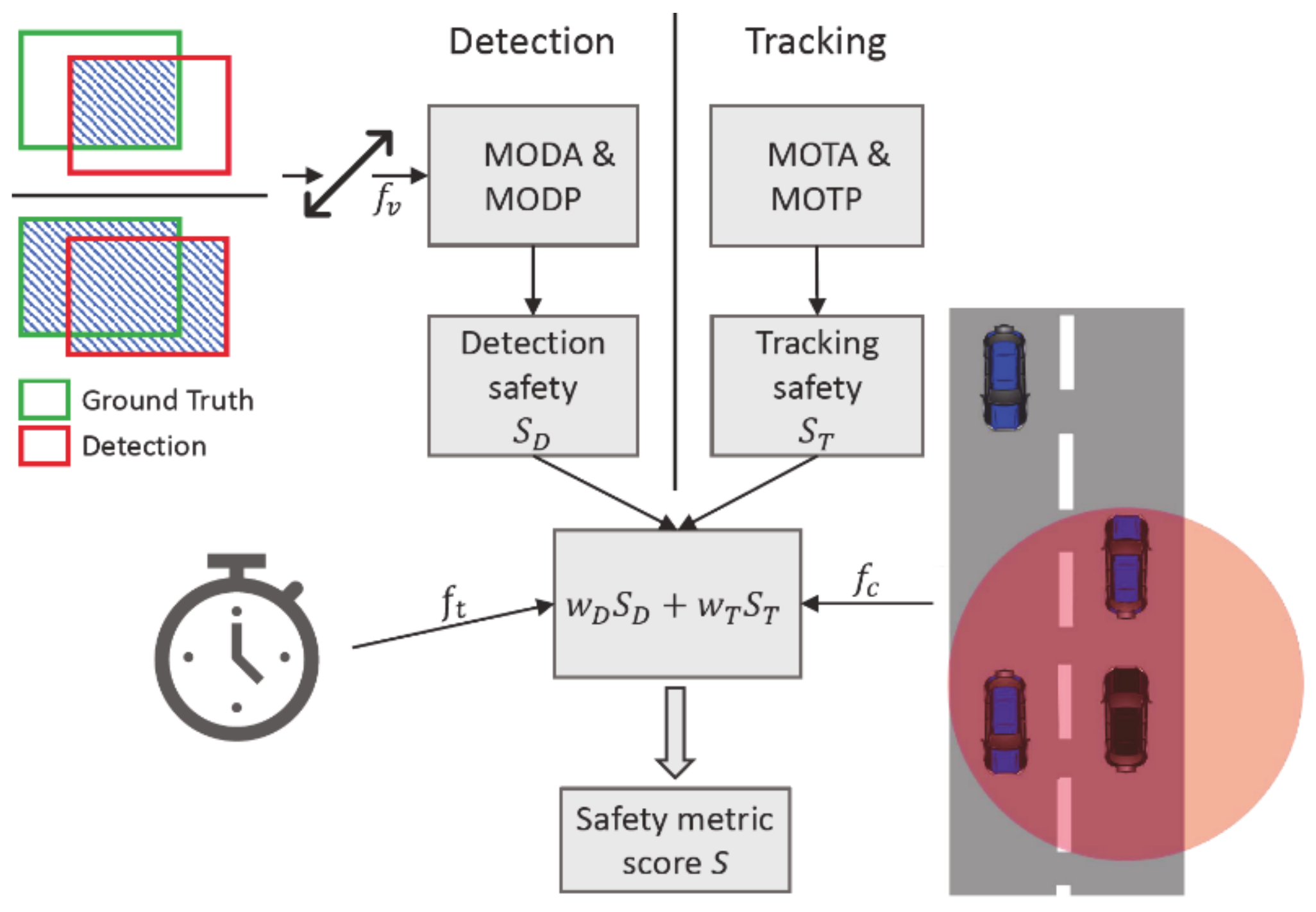

4.3.2. Comprehensive Safety Metric (CSM)

4.3.3. Time to Plan (TTP)

5. Results and Discussion

5.1. Simulation Parameters

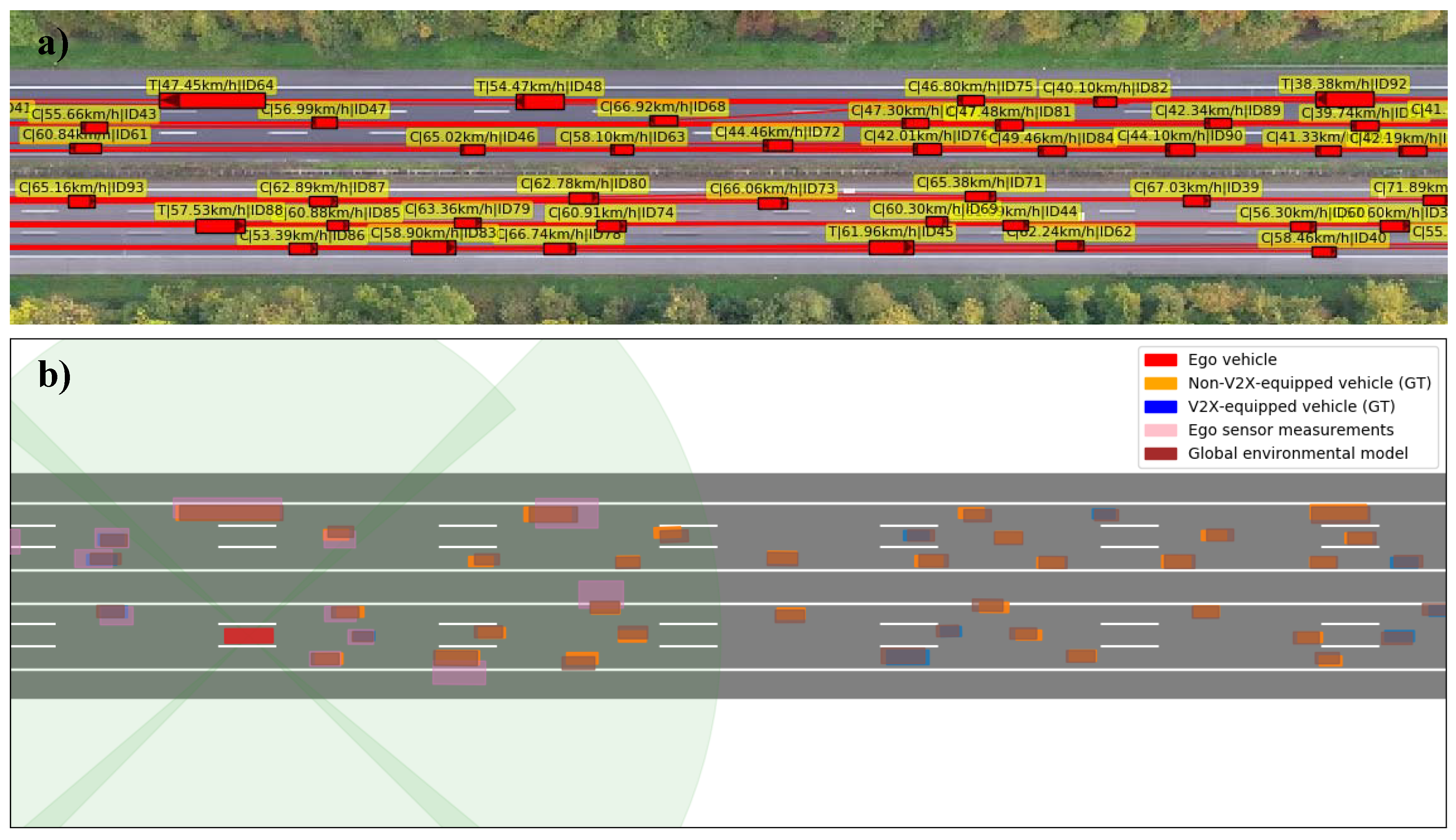

5.1.1. Scenario

5.1.2. Vehicles and Their Perception

5.1.3. V2X Communication

5.2. Effect on the Communication Channel

5.2.1. Message Size and Frequency

5.2.2. Channel Busy Ratio

5.3. Environmental Perception

5.3.1. Environmental Awareness Ratio (EAR)

5.3.2. Detected Object Redundancy (DOR)

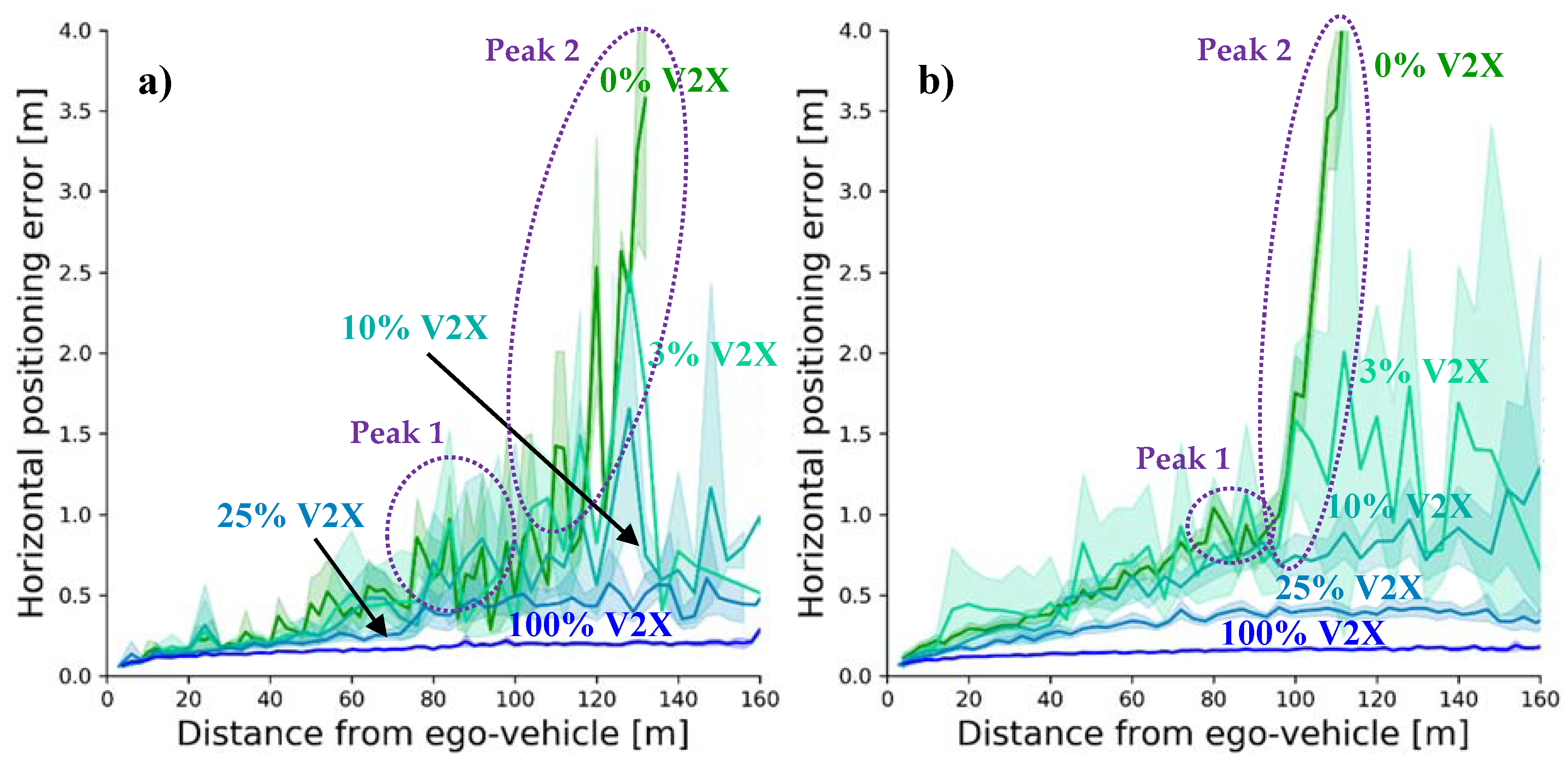

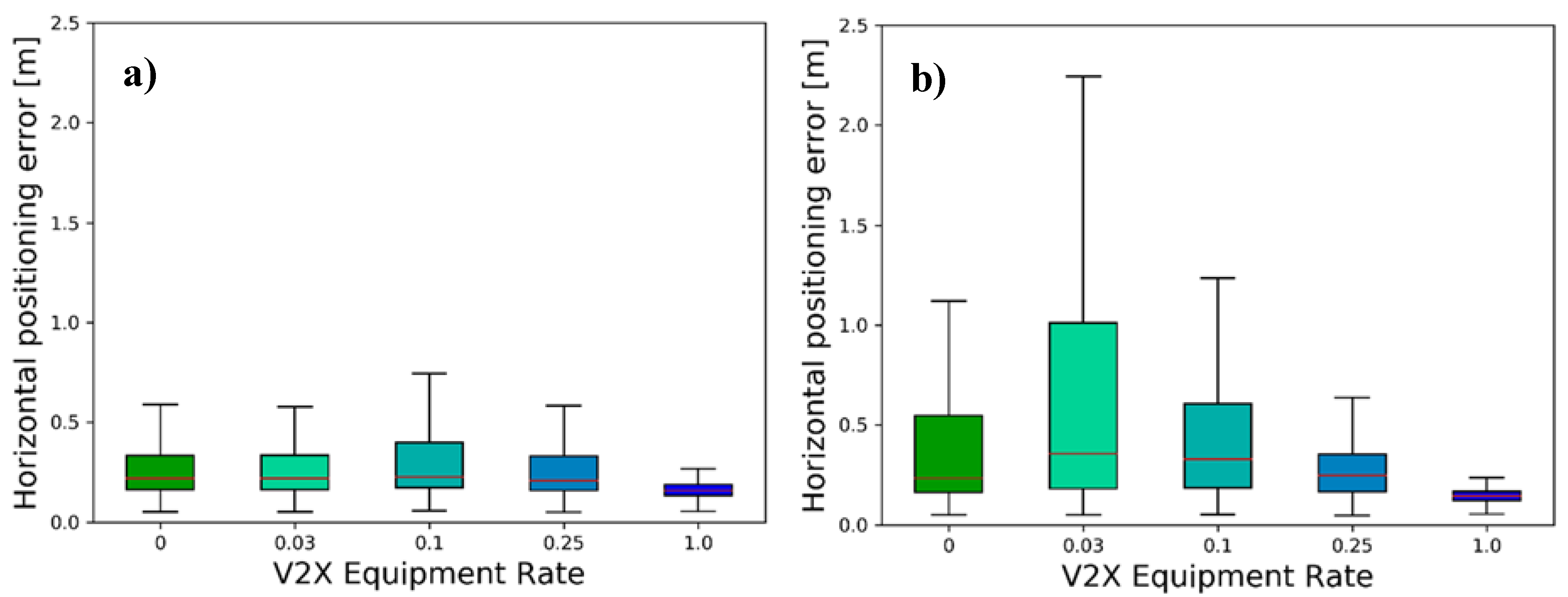

5.3.3. Object Tracking Accuracy (OTA)

5.4. Safety Metrics

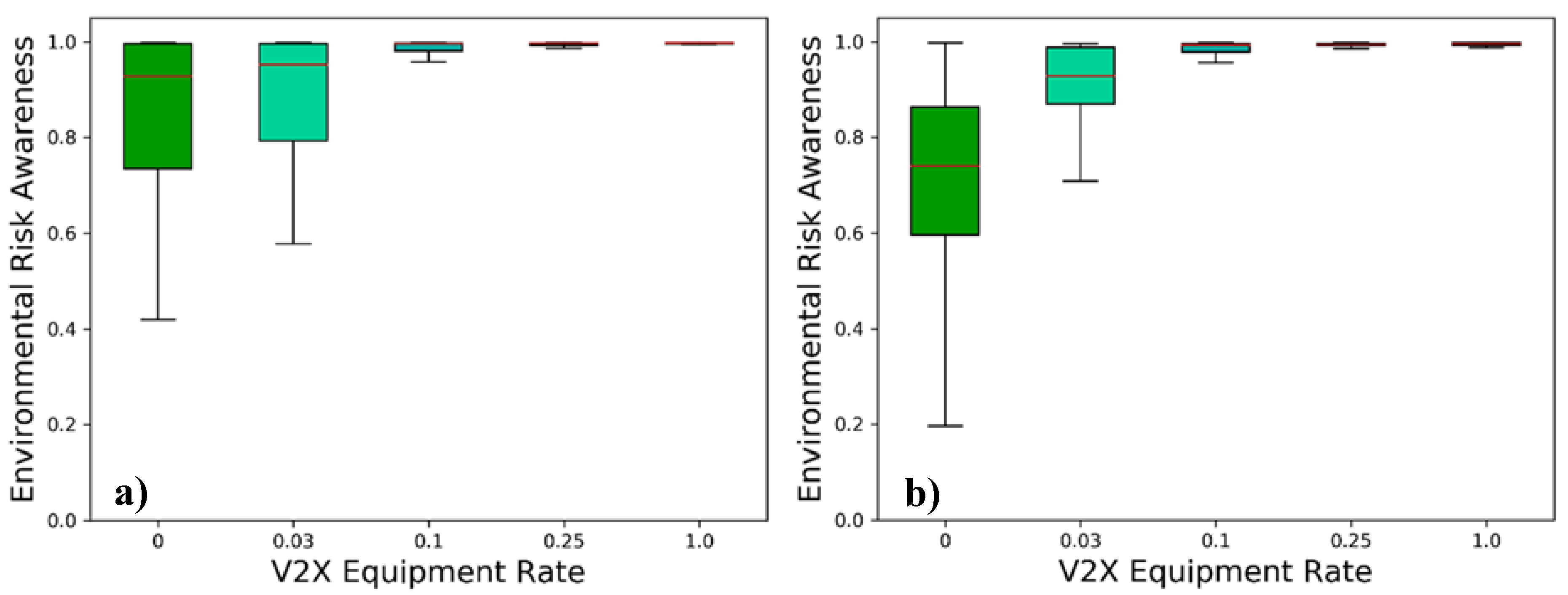

5.4.1. Environmental Risk Awareness (ERA)

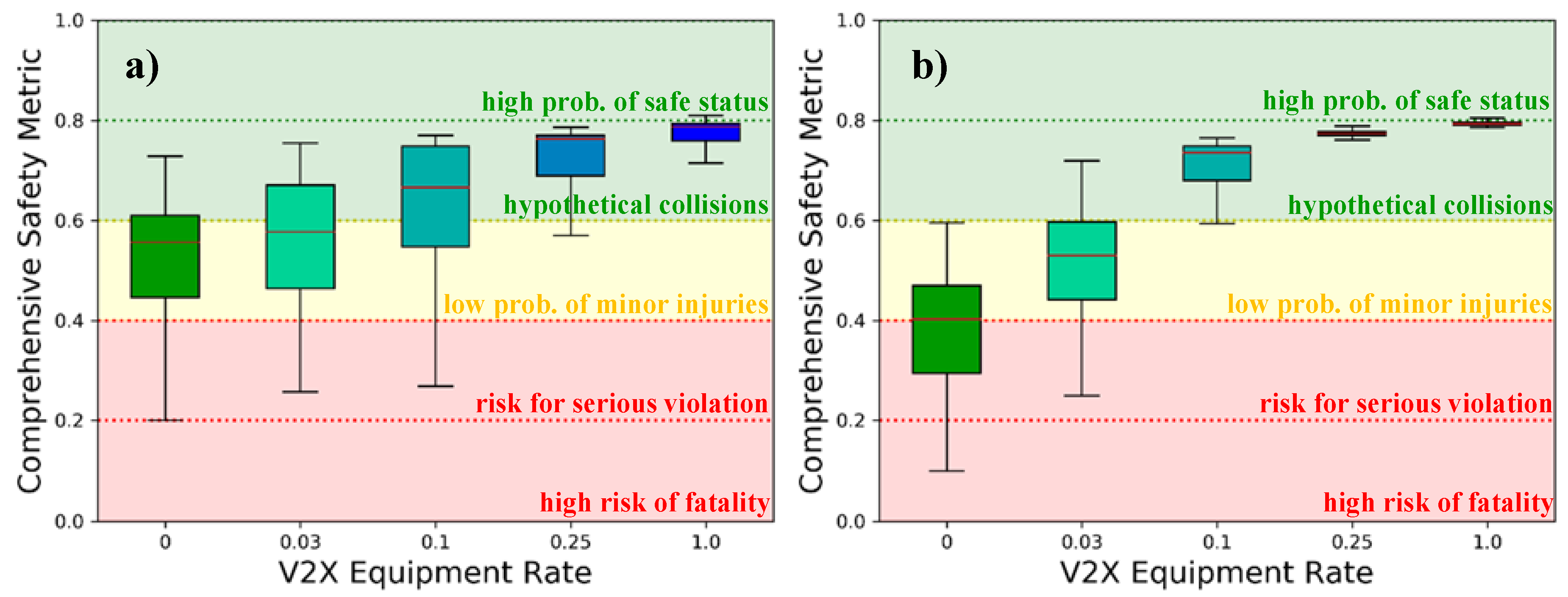

5.4.2. Safety Metric

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Günther, H.J.; Mennenga, B.; Trauer, O.; Riebl, R.; Wolf, L. Realizing collective perception in a vehicle. In Proceedings of the 2016 IEEE Vehicular Networking Conference (VNC), Columbus, OH, USA, 8–10 December 2016.

2. ETSI ITS. Intelligent Transport System (ITS); Vehicular Communications. Basic Set of Applications; Analysis of the Collective Perception Service (CPS); Release 2. Available online: https://www.etsi.org/deliver/etsi_ts/103300_103399/10330002/02.01.01_60/ts_10330002v020101p.pdf (accessed on 23 December 2020).

3. SAE V2X Vehicular Applications Technical Committee. Cooperative Perception System. J2945/8. 18 September 2018. Available online: https://www.sae.org/standards/content/j2945/8/ (accessed on 23 December 2020).

4. Festag, A. Standards for Vehicular Communication–from IEEE 802.11p to 5G. Elektrotechnik Inf. 2015, 132, 409–416.

5. 3rd Generation Partnership Project, Release 14: Technical Specifications Group Services and System Aspects. Available online: https://www.etsi.org/deliver/etsi_tr/121900_121999/121914/14.00.00_60/tr_.pdf (accessed on 23 December 2020).

6. Schiegg, F.A.; Bischoff, D.; Krost, J.R.; Llatser, I. Analytical performance evaluation of the collective perception service in IEEE 802.11p networks. In Proceedings of the 2020 IEEE Wireless Communications and Networking Conference (WCNC), Seoul, Korea, 25–28 May 2020.

7. Schiegg, F.; Brahmi, N.; Llatser, I. Analytical Performance Evaluation of the Collective Perception Service in C-V2X Mode 4 Networks. In Proceedings of the IEEE 22nd Intelligent Transportation Systems Conference (ITSC’19), Auckland, New Zealand, 27–30 October 2019.

8. ETSI ITS. Intelligent Transport System (ITS); Vehicular Communications; Basic Set of Applications, Part 2: Specification of Cooperative Awareness Basic Service. Available online: https://www.etsi.org/deliver/etsi_en/302600_302699/30263702/01.03.02_60/en_30263702v010302p.pdf (accessed on 23 December 2020).

9. SAE DSRC Committee. Dedicated Short Range Communications (DSRC) Message Set Dictionary. SAE Std. J2735. Available online: https://rosap.ntl.bts.gov/view/dot/3413/dot_3413_DS1.pdf (accessed on 23 December 2020).

10. Günther, H.; Riebl, R.; Wolf, L.; Facchi, C. Collective perception and decentralized congestion control in vehicular ad-hoc networks. In Proceedings of the 2016 IEEE Vehicular Networking Conference (VNC), Columbus, OH, USA, 8–10 December 2016.

11. Garlichs, K.; Günther, H.-J.; Wolf, L.-C. Generation Rules for the Collective Perception Service. In Proceedings of the 2019 IEEE Vehicular Networking Conference (VNC), Los Angeles, CA, USA, 4–6 December 2019.

12. Thandavarayan, G.; Sepulcre, M.; Gozalvez, J. Analysis of Message Generation Rules for Collective Perception in Connected and Automated Driving. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019.

13. Garlichs, K.; Wegner, M.; Wolf, L.C. Realizing Collective Perception in the Artery Simulation Framework. In Proceedings of the IEEE Vehicular Networking Conference (VNC), Taipei, Taiwan, 5–7 December 2018.

14. Günther, H.J.; Riebl, R.; Wolf, L.; Facchi, C. The Effect of Decentralized Congestion Control on Collective Perception in Dense Traffic Scenarios. Comput. Commun. 2018, 122, 76–83.

15. Khan, S.; Andert, F.; Wojke, N.; Schindler, J.; Correa, A.; Wijbenga, A. Towards Collaborative Perception for Automated Vehicles in Heterogeneous Traffic. In International Forum on Advanced Microsystems for Automotive Applications; Springer: Cham, Switzerland, 2018; pp. 31–42.

16. Hobert, L.; Festag, A.; Llatser, I.; Altomare, L.; Visintainer, F.; Kovacs, A. Enhancements of V2X communication in support of cooperative autonomous driving. IEEE Commun. Mag. 2015, 53, 64–70.

17. Llatser, I.; Michalke, T.; Dolgov, M.; Wildschütte, F.; Fuchs, H. Cooperative Automated Driving Use Cases for 5G V2X Communication. In Proceedings of the 5G World Forum, Dresden, Germany, 10–12 September 2019.

18. Rondinone, M.; Walter, T.; Blokpoel, R.; Schindler, J. V2X Communications for Infrastructure-Assisted Automated Driving. In Proceedings of the 2018 IEEE 19th International Symposium on “A World of Wireless, Mobile and Multimedia Networks (WoWMoM)”, Chania, Greece, 12–15 June 2018.

19. Schiegg, F.A.; Krost, J.; Jesenski, S.; Frye, J. A Novel Simulation Framework for the Design and Testing of Advanced Driver Assistance Systems. In Proceedings of the 2019 IEEE 90th Vehicular Technology Conference (VTC2019-Fall), Honolulu, HI, USA, 22–25 September 2019.

20. Schiegg, F.A.; Li, S.; Mikhaylov, N. TEPLITS: A comprehensive Test Platform for Intelligent Transportation Systems. In Proceedings of the 2020 IEEE 91st Vehicular Technology Conference (VTC2020-Spring), Antwerp, Belgium, 25 May–31 July 2020.

21. Krajewski, R.; Bock, J.; Kloeker, L.; Eckstein, L. The highD Dataset: A Drone Dataset of Naturalistic Vehicle Trajectories on German Highways for Validation of Highly Automated Driving Systems. In Proceedings of the 2018 IEEE 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018.

22. Strigel, E.; Meissner, D.; Seeliger, F.; Wilking, B.; Dietmayer, K. The ko-per intersection laserscanner and video dataset. In Proceedings of the 17th International IEEE Conference on Intelligent Transportation Systems (ITSC), Qingdao, China, 8–11 October 2014.

23. Jesenski, S.; Stellet, J.E.; Schiegg, F.; Zöllner, J.M. Generation of scenes in intersections for the validation of HAD-functions. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV’19), Paris, France, 9–12 June 2019.

24. Gonzalez-Martín, M.; Sepulcre, M.; Molina-Masegosa, R.; Gozalvez, J. Analytical V2V Performance Model of IEEE 802.11p for Connected Vehicles, Technical Report for Universidad Miguel Hernandez. 2019.

25. Gonzalez-Martín, M.; Sepulcre, M.; Molina-Masegosa, R.; Gozalvez, J. Analytical Models of the Performance of C-V2X Mode 4 Vehicular Communications. IEEE Trans. Veh. Technol. 2019, 68, 1155–1166.

26. Molina-Masegosa, R.; Gozalvez, J.; Sepulcre, M. Comparison of IEEE 802.11p and LTE-V2X: An Evaluation with Periodic and Aperiodic Messages of Constant and Variable Size. IEEE Access 2020, 8, 121526–121548.

27. Bischoff, D.; Schiegg, F.A.; Meuser, T.; Schuller, D.; Dycke, N.; Steinmetz, R. What Cooperation Costs: Quality of Communication and Cooperation Costs for Cooperative Vehicular Maneuvering in Large-scale Scenarios. In Proceedings of the 6th International Conference on Vehicle Technology and Intelligent Transport Systems (VEHITS 2020), Prague, Czechia, 2–4 May 2020.

28. Allig, C.; Wanielik, G. Dynamic Dissemination Method for Collective Perception. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019.

29. Car 2 Car Communication Consortium, “A ‘cookbook’ for data quality in CPM,” C2C-CC. Available online: https://www.car-2-car.org/fileadmin/downloads/PDFs/car-2-car-journal/Journal_19_C2C-CC_Oct_2017.pdf (accessed on 23 December 2020).

30. Thandavarayan, G.; Sepulcre, M.; Gozalvez, J. Redundancy Mitigation in Cooperative Perception for Connected and Automated Vehicles. In Proceedings of the 2020 IEEE 91st Vehicular Technology Conference (VTC2020-Spring), Antwerp, Belgium, 25 May–31 July 2020.

31. Huang, H.; Li, H.; Shao, C.; Sun, T.; Fang, W.; Dang, S. Data Redundancy Mitigation in V2X Based Collective Perceptions. IEEE Access 2020, 8, 13405–13418.

32. Delooz, Q.; Festag, A. Network Load Adaptation for Collective Perception in V2X Communications. In Proceedings of the 2019 IEEE International Conference on Connected Vehicles and Expo (ICCVE), Graz, Austria, 4–8 November 2019.

33. Aoki, S.; Higuchi, T.; Altintas, O. Cooperative Perception with Deep Reinforcement Learning for Connected Vehicles. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV 2020), Las Vegas, NV, USA, 19 October–13 November 2020.

34. Khan, I.; Härri, J. Integration Challenges of Facilities-Layer DCC for Heterogeneous V2X Services. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26 June–1 July 2018.

35. Furukawa, K.; Takai, M.; Ishihara, S. Controlling Sensor Data Dissemination Method for Collective Perception in VANET. In Proceedings of the 2019 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Kyoto, Japan, 11–15 March 2019.

36. Sepulcre, M.; Mira, J.; Thandavarayan, G.; Gozalvez, J. Is Packet Dropping a Suitable Congestion Control Mechanism for Vehicular Networks? In Proceedings of the 2020 IEEE 91st Vehicular Technology Conference (VTC2020-Spring), Antwerp, Belgium, 25 May–31 July 2020.

37. Higuchi, T.; Giordani, M.; Zanella, A.; Zorzi, M.; Altintas, O. Value-Anticipating V2V Communications for Cooperative Perception. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019.

38. Rauch, A.; Klanner, F.; Dietmayer, K. Analysis of V2X communication parameters for the development of a fusion architecture for cooperative perception systems. In Proceedings of the 2011 IEEE Intelligent Vehicles Symposium (IV), Baden-Baden, Germany, 5–9 June 2011.

39. Günther, H.-J.; Trauer, O.; Wolf, L. The potential of collective perception in vehicular ad-hoc networks. In Proceedings of the 2015 14th International Conference on ITS Telecommunications (ITST), Copenhagen, Denmark, 2–4 December 2015.

40. Huang, H.; Fang, W.; Li, H. Performance Modelling of V2V based Collective Perceptions in Connected and Autonomous Vehicles. In Proceedings of the 2019 IEEE 44th Conference on Local Computer Networks (LCN), Osnabrueck, Germany, 14–17 October 2019.

41. Wang, Y.; Veciana, G.D.; Shimizu, T.; Lu, H. Performance and Scaling of Collaborative Sensing and Networking for Automated Driving Applications. In Proceedings of the 2018 IEEE International Conference on Communications Workshops (ICC Workshops), Kansas City, MO, USA, 20–24 May 2018.

42. Schiegg, F.; Llatser, I.; Michalke, T. Object Detection Probability for Highly Automated Vehicles: An Analytical Sensor Model. In Proceedings of the 5th International Conference on Vehicle Technology and Intelligent Transport Systems (VEHITS2019), Heraklion, Greece, 3–5 May 2019.

43. Mikhaylov, N.; Schiegg, F.A.; Li, S.; Jiang, Y.; Liu, Y.; Ollander, S. Collaborative Positioning in Automated Car Driving: Accounting for Communication Channel and Multipath. In Proceedings of the 33rd International Technical Meeting of the Satellite Division of the Institute of Navigation (ION GNSS+ 2020), 20–24 September 2020; pp. 3008–3011.

44. Volk, G.; Gamerdinger, J.; Bernuth, A.V.; Bringmann, O. A Comprehensive Safety Metric to Evaluate Perception in Autonomous Systems. In Proceedings of the 2020 IEEE Intelligent Transportation Systems Conference (ITSC), Rhodes, Greece, 20–23 September 2020.

45. Stiefelhagen, R.; Bernardin, K.; Bowers, R.; Garofolo, J.; Mostefa, D.; Soundararajan, P. The CLEAR 2006 Evaluation. Available online: https://cvhci.anthropomatik.kit.edu/~stiefel/papers/clearOver2006-11-7.pdf (accessed on 23 December 2020).

46. Shalev-Shwartz, S.; Shammah, S.; Shashua, A. On a Formal Model of Safe and Scalable Self-driving Cars. arXiv 2017, arXiv:1708.06374.

47. Bischoff, D.; Schiegg, F.; Meuser, T.; Steinmetz, R. Impact of Imperfect Communication on Cooperative Vehicular Maneuvering at Intersections. In Proceedings of the 2020 IEEE 91st Vehicular Technology Conference (VTC2020-Spring), Antwerp, Belgium, 25 May–31 July 2020.

48. ETSI ITS. Intelligent Transport Systems (ITS); Decentralized Congestion Control Mechanisms for Intelligent Transport Systems Operating in the 5 GHz Range. Available online: https://www.etsi.org/deliver/etsi_ts/102600_102699/102687/01.02.01_60/ts_102687v010201p.pdf (accessed on 23 December 2020).

49. ETSI ITS. Intelligent Transport Systems (ITS); Vehicular Communications; Basic Set of Applications, Part 3. Specifications of Decentralized Environmental Notification Basic Service. Available online: https://www.etsi.org/deliver/etsi_en/302600_302699/30263703/01.02.01_30/en_30263703v010201v.pdf (accessed on 23 December 2020).

| Scenario | A | B |
|---|---|---|
| Speed limit [km/h] | - | 120 |
| Total distance driven [m] | 342,235 | 1,120,346 |
| Total time driven [s] | 10,298 | 80,676 |
| Number of vehicles (trucks) | 856 (168) | 2850 (389) |
| Number of lanes | 3↓↑3 | 3↓↑3 |
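The scenario totals can be combined into a few per-scenario averages, which help put the two scenarios side by side. The sketch below assumes (the table does not state it) that "total distance driven" and "total time driven" are summed over all vehicles, so their ratio is the time-weighted mean speed; `SCENARIOS` and `summarize` are illustrative names, and only the numbers are taken from the table.

```python
# Totals copied from the scenario table. Assumption (not stated in the
# table): distance and time are accumulated over all vehicles.
SCENARIOS = {
    "A": {"distance_m": 342_235, "time_s": 10_298, "vehicles": 856, "trucks": 168},
    "B": {"distance_m": 1_120_346, "time_s": 80_676, "vehicles": 2850, "trucks": 389},
}


def summarize(s: dict) -> dict:
    """Derive simple averages from the scenario totals."""
    # Sum of distances / sum of times = time-weighted mean speed in m/s.
    mean_speed_ms = s["distance_m"] / s["time_s"]
    return {
        "mean_speed_kmh": mean_speed_ms * 3.6,
        "distance_per_vehicle_m": s["distance_m"] / s["vehicles"],
        "time_per_vehicle_s": s["time_s"] / s["vehicles"],
        "truck_share": s["trucks"] / s["vehicles"],
    }


if __name__ == "__main__":
    for name, totals in SCENARIOS.items():
        stats = summarize(totals)
        print(name, {k: round(v, 2) for k, v in stats.items()})
```

Under that assumption, scenario A averages about 119.6 km/h and scenario B about 50.0 km/h, while the average distance per vehicle is similar in both (about 400 m and 393 m, respectively).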

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Schiegg, F.A.; Llatser, I.; Bischoff, D.; Volk, G. Collective Perception: A Safety Perspective. Sensors 2021, 21, 159. https://doi.org/10.3390/s21010159
