Position and Attitude Estimation Method Integrating Visual Odometer and GPS

Abstract

1. Introduction

2. The Position and Attitude Estimation Method of GVO

2.1. The Principle of GVO

2.2. The Position and Attitude Estimation

2.3. The Optimization of GVO

| Algorithm 1 Optimization of GVO |
|---|
| Require: Positioning results of GPS and poses of the camera. |
| 1: Optimize and by minimizing the error between the GPS measurements and the positions estimated by VO, with initial values obtained by (10) and (11). |
| 2: Optimize the poses of the camera by minimizing the error between the GPS measurements and the positions estimated by VO. |
| 3: Use BA (Bundle Adjustment) to optimize the camera poses again by minimizing the image reprojection error between the matched 3D points in the ENU frame and their keypoints in the image. |
| 4: if the optimization converges then |
| 5: return the optimized , and . |
| 6: else |
| 7: repeat step 1 to step 6 |
| 8: end if |
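Step 1 of Algorithm 1 fits VO positions to GPS measurements. A minimal sketch of one common way to get such a fit follows, under a loud assumption: the quantities being estimated are taken to be a similarity transform (scale `s`, rotation `R`, translation `t`) from the VO frame to the ENU frame. The symbols themselves are not recoverable from the text here, so this may not match the authors' formulation. The closed-form Umeyama alignment is swapped in for the iterative minimization. The function name `align_similarity` and the synthetic data are hypothetical.

```python
import numpy as np


def align_similarity(vo_xyz, gps_xyz):
    """Closed-form least-squares similarity alignment (Umeyama, 1991).

    Returns s, R, t minimizing sum_i ||gps_i - (s * R @ vo_i + t)||^2.
    Assumes both inputs are (N, 3) arrays of matched positions.
    """
    vo = np.asarray(vo_xyz, dtype=float)
    gps = np.asarray(gps_xyz, dtype=float)
    n = vo.shape[0]

    # Center both point sets on their means.
    mu_vo, mu_gps = vo.mean(axis=0), gps.mean(axis=0)
    vo_c, gps_c = vo - mu_vo, gps - mu_gps

    # Cross-covariance between target (GPS) and source (VO).
    cov = gps_c.T @ vo_c / n
    U, D, Vt = np.linalg.svd(cov)

    # Sign correction so that R is a proper rotation (det = +1).
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[2, 2] = -1.0

    R = U @ S @ Vt
    var_vo = (vo_c ** 2).sum() / n          # variance of the source points
    s = np.trace(np.diag(D) @ S) / var_vo   # metric scale of the VO track
    t = mu_gps - s * R @ mu_vo
    return s, R, t


# Synthetic check: build a GPS track from a known transform of a VO track.
rng = np.random.default_rng(0)
vo_track = rng.normal(size=(20, 3))
yaw = 0.3
R_true = np.array([[np.cos(yaw), -np.sin(yaw), 0.0],
                   [np.sin(yaw),  np.cos(yaw), 0.0],
                   [0.0,          0.0,         1.0]])
gps_track = 2.5 * vo_track @ R_true.T + np.array([1.0, 2.0, 3.0])

s_est, R_est, t_est = align_similarity(vo_track, gps_track)
residual = gps_track - (s_est * vo_track @ R_est.T + t_est)
print("scale:", s_est, "max residual:", np.abs(residual).max())
```

With noisy GPS measurements a closed-form fit of this kind would typically serve only as a starting point; the refinement of camera poses and the bundle adjustment in steps 2 and 3 are not reproduced here.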

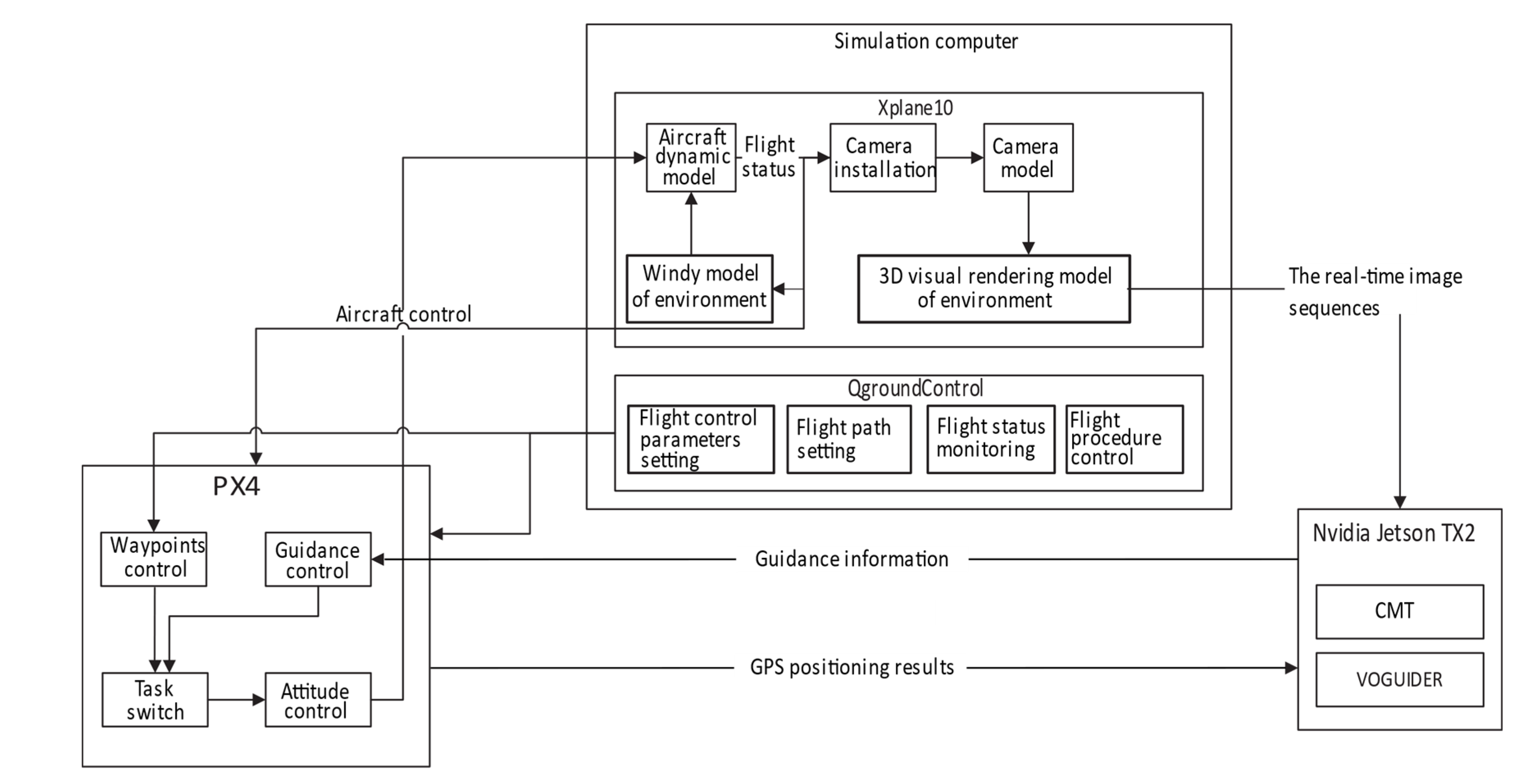

3. Hardware-in-the-Loop Simulation

3.1. The Experimental Environment

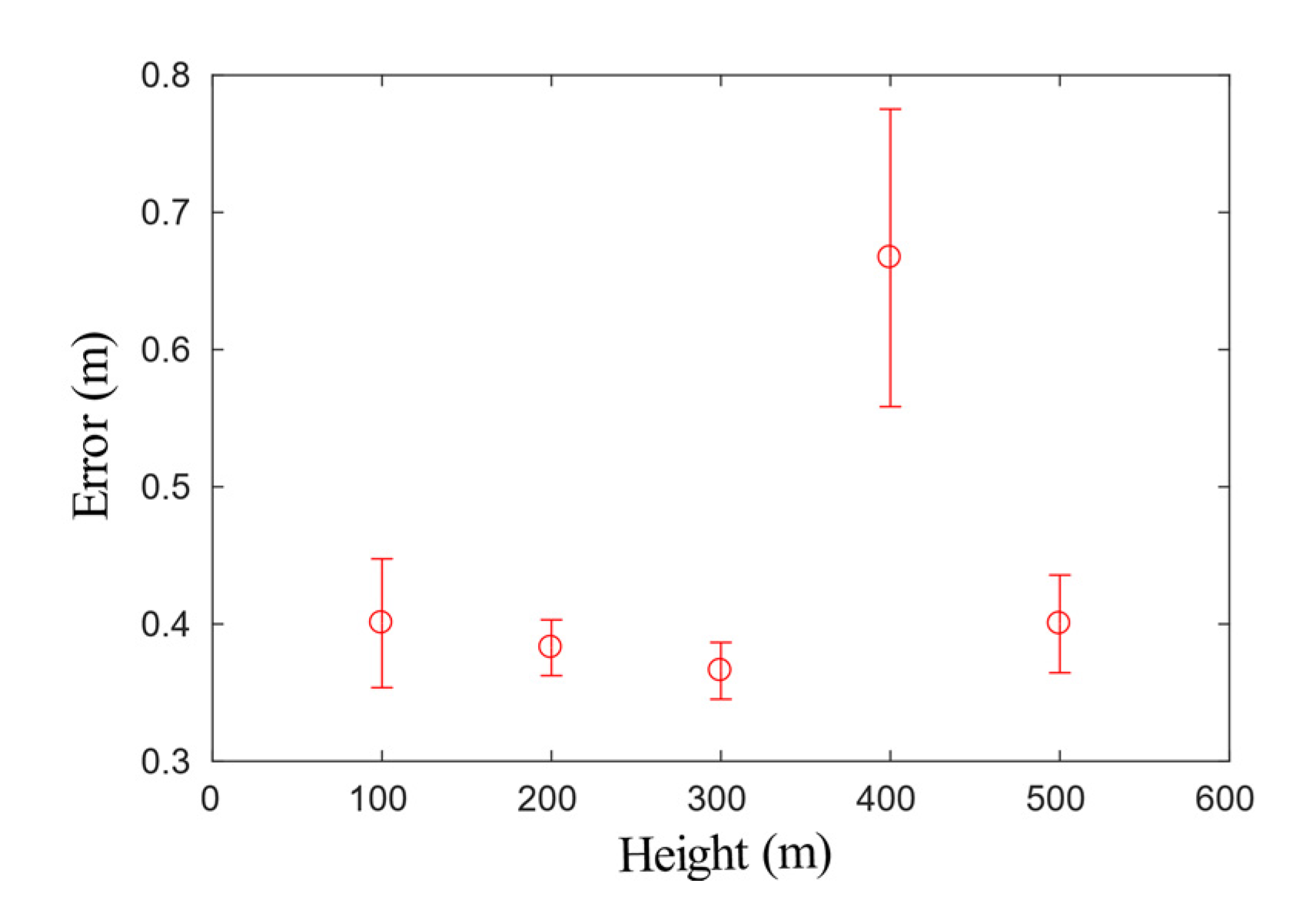

3.2. Positioning Results of GVO

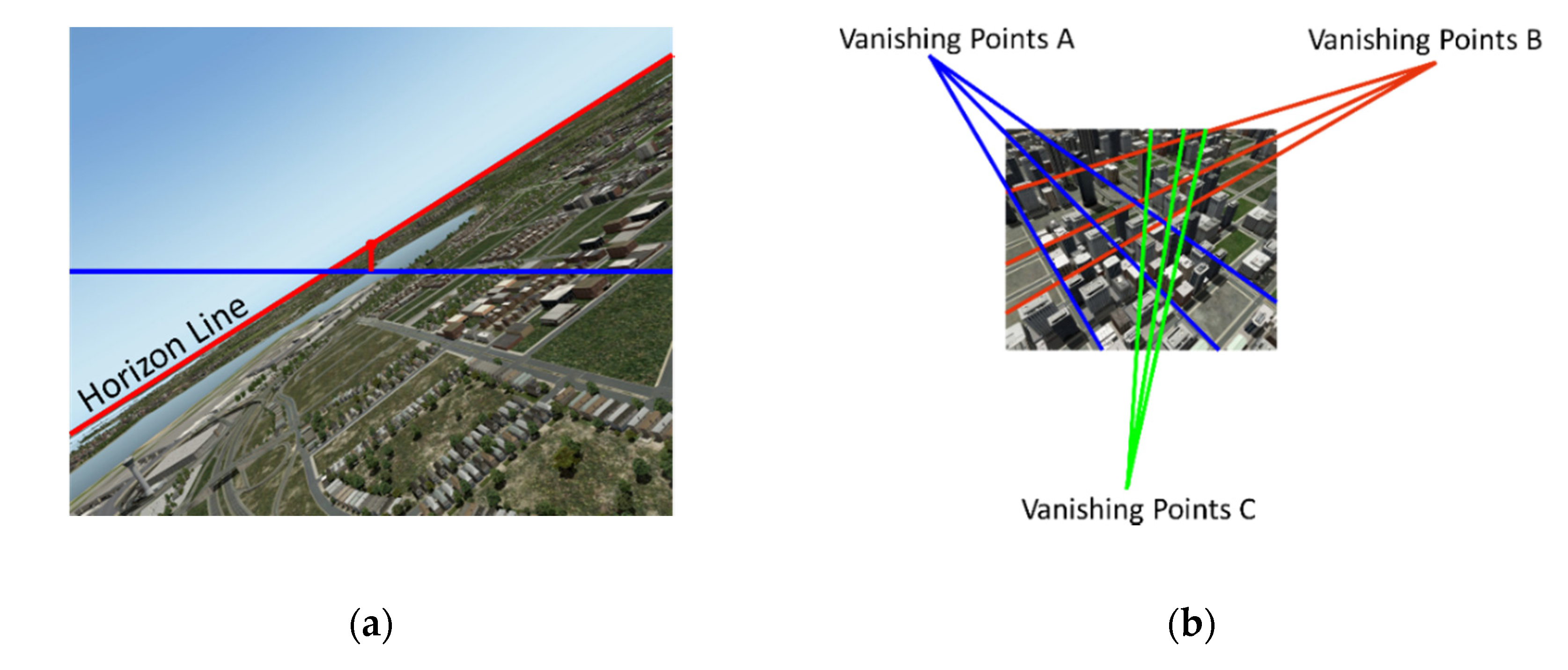

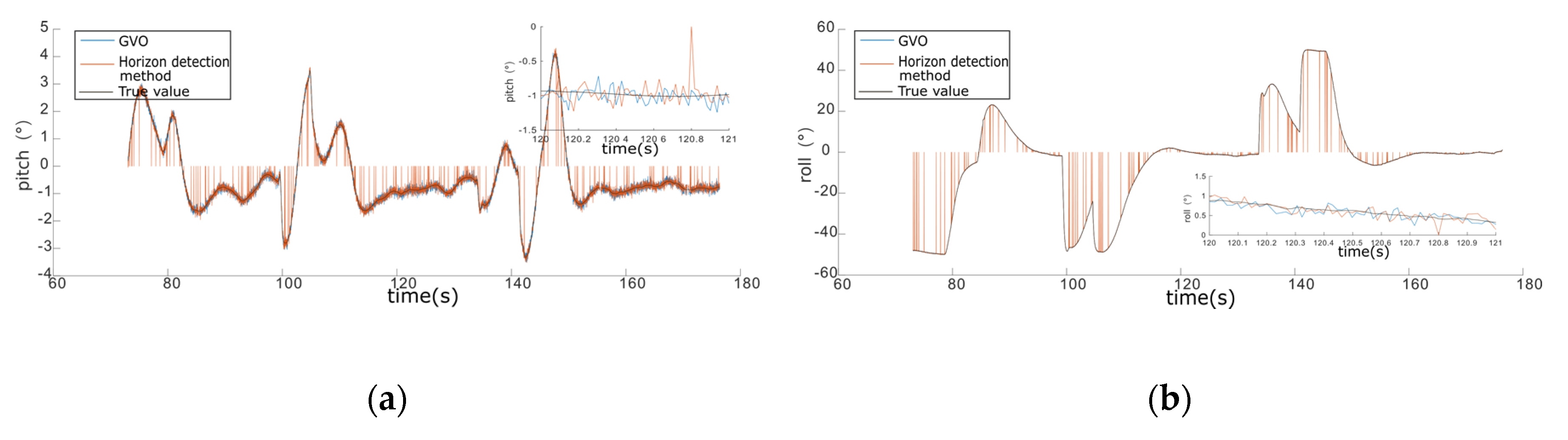

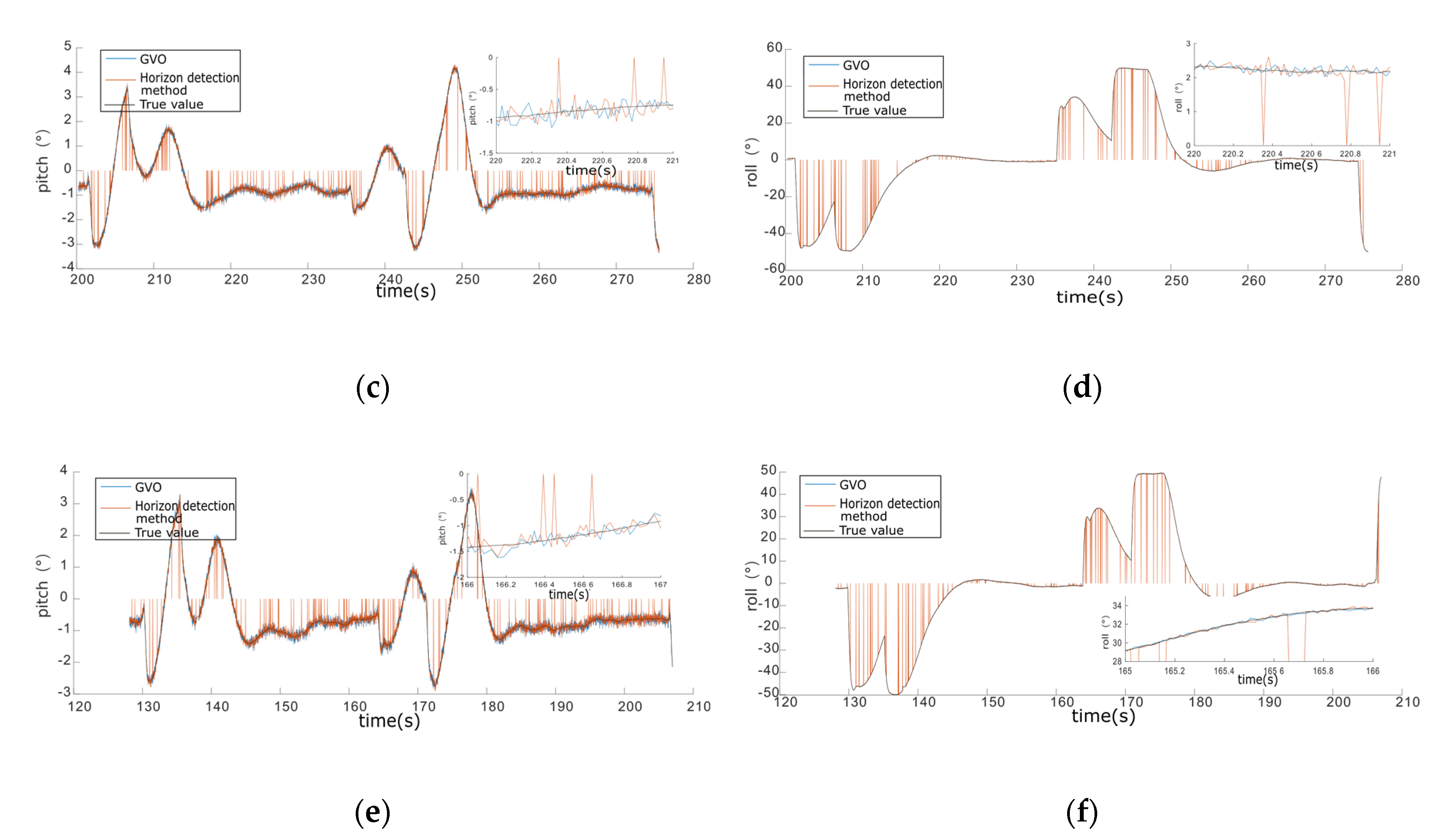

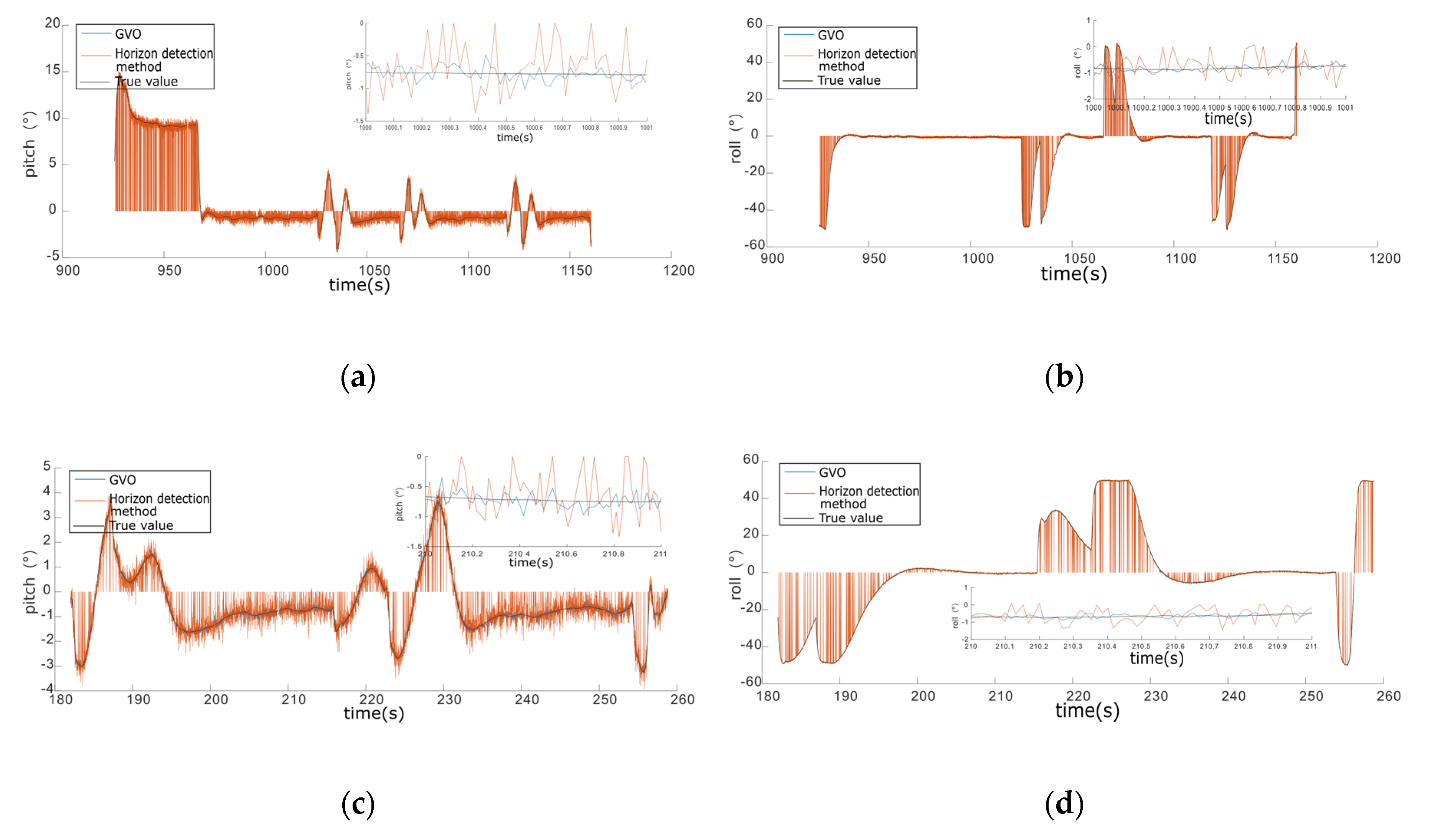

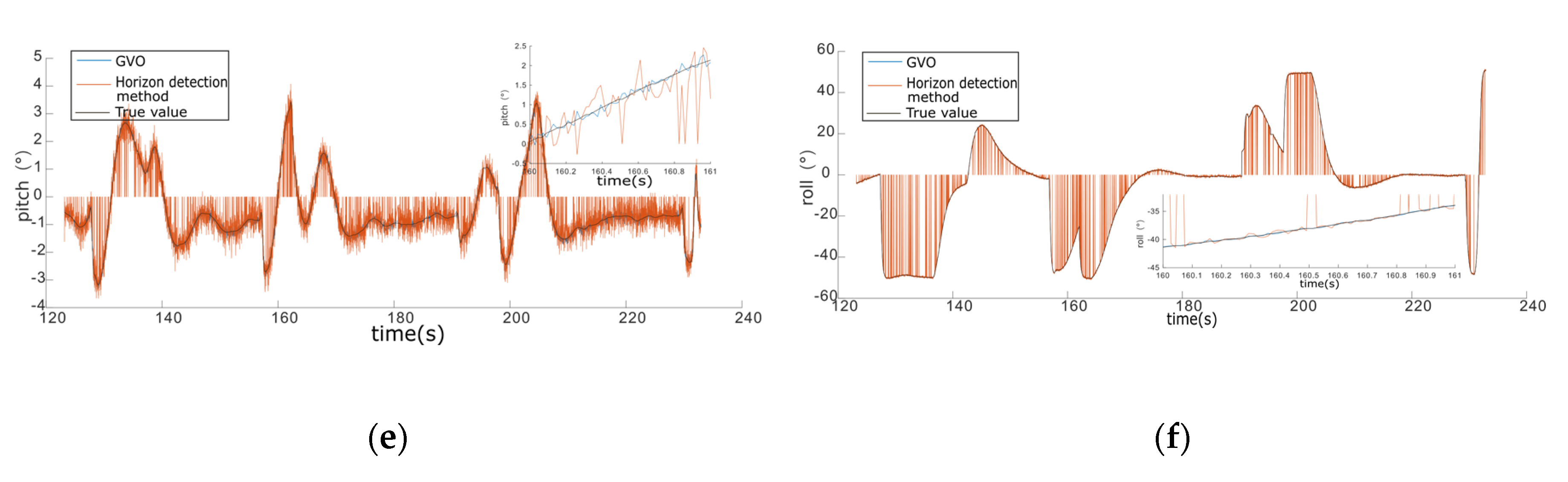

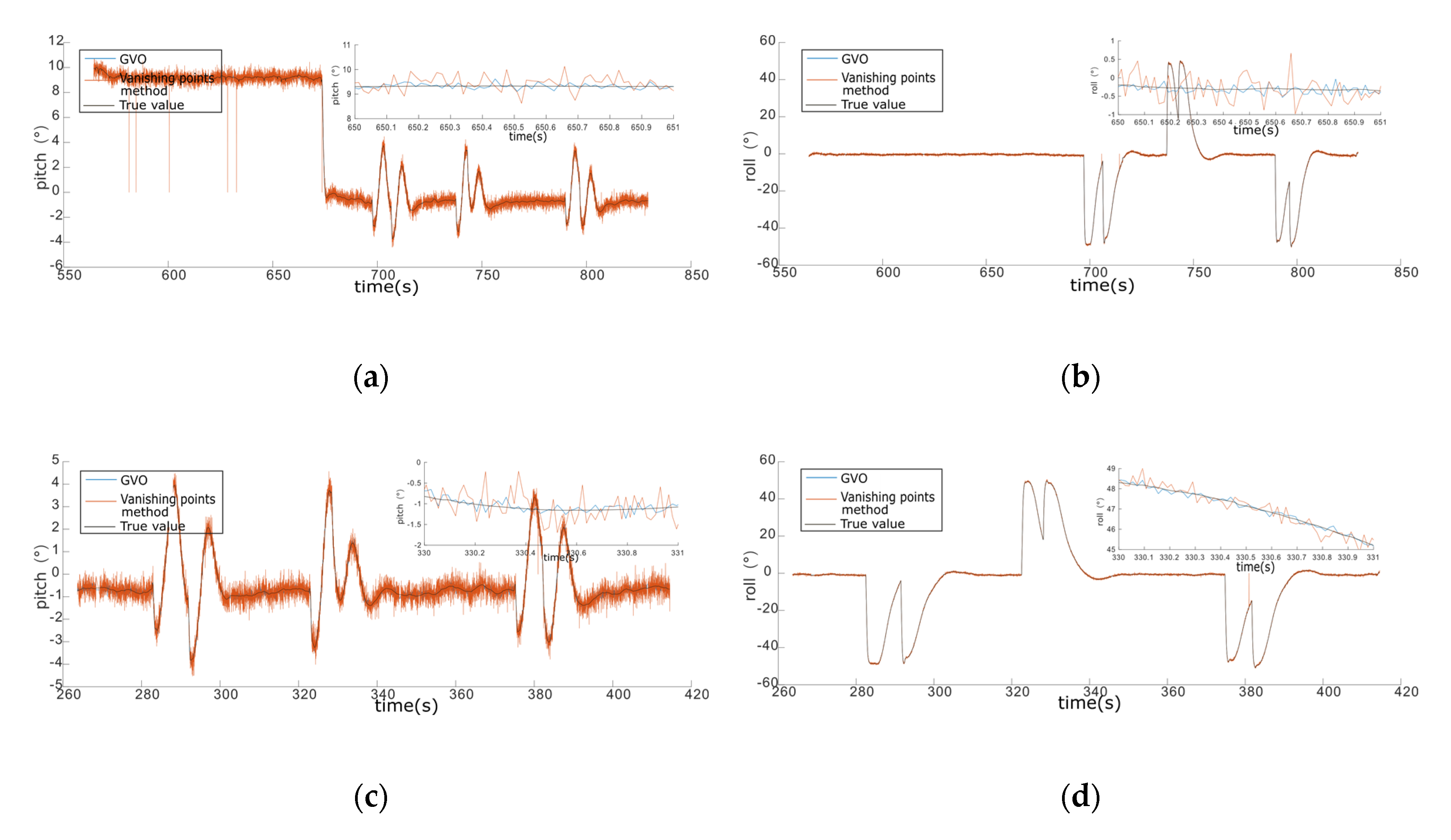

3.3. Attitude Estimation Results

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Number | Flying Height | Flying Speed | Field Angle of Camera | Resolution of Camera |
|---|---|---|---|---|
| 1 | 100 m | 30 m/s | 45° × 45° | 1024 × 1024 |
| 2 | 200 m | 30 m/s | 45° × 45° | 1024 × 1024 |
| 3 | 300 m | 30 m/s | 45° × 45° | 1024 × 1024 |
| 4 | 400 m | 30 m/s | 45° × 45° | 1024 × 1024 |
| 5 | 500 m | 30 m/s | 45° × 45° | 1024 × 1024 |

| Height/m | Mean Deviation/m | Deviation Variance/m² |
|---|---|---|
| 100 | 0.41 | 0.13 |
| 200 | 0.37 | 0.08 |
| 300 | 0.36 | 0.08 |
| 400 | 0.67 | 0.17 |
| 500 | 0.41 | 0.11 |

| Number | Area | Height/m | Algorithms to Compare |
|---|---|---|---|
| 1 | Plain | 100 | Horizon detection method |
| 2 | Plain | 200 | Horizon detection method |
| 3 | Plain | 300 | Horizon detection method |
| 4 | Mountain | 100 | Horizon detection method |
| 5 | Mountain | 200 | Horizon detection method |
| 6 | Mountain | 300 | Horizon detection method |
| 7 | City | 100 | Vanishing points method |
| 8 | City | 200 | Vanishing points method |

| Algorithm | Area | Height/m | Success Rate | Average Error/° |
|---|---|---|---|---|
| GVO | Plain | 100 | 100% | 1.2 |
| Horizon detection | Plain | 100 | 97% | 2.0 |
| GVO | Plain | 200 | 100% | 1.1 |
| Horizon detection | Plain | 200 | 98% | 2.1 |
| GVO | Plain | 300 | 100% | 1.3 |
| Horizon detection | Plain | 300 | 97% | 2.0 |
| GVO | Mountain | 100 | 100% | 1.2 |
| Horizon detection | Mountain | 100 | 83% | 2.5 |
| GVO | Mountain | 200 | 100% | 1.1 |
| Horizon detection | Mountain | 200 | 85% | 2.3 |
| GVO | Mountain | 300 | 100% | 1.1 |
| Horizon detection | Mountain | 300 | 91% | 2.2 |

| Algorithm | Height/m | Success Rate | Average Error/° |
|---|---|---|---|
| GVO | 100 | 100% | 1.3 |
| Vanishing points | 100 | 93% | 3.1 |
| GVO | 200 | 100% | 1.1 |
| Vanishing points | 200 | 82% | 2.7 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yang, Y.; Shen, Q.; Li, J.; Deng, Z.; Wang, H.; Gao, X. Position and Attitude Estimation Method Integrating Visual Odometer and GPS. Sensors 2020, 20, 2121. https://doi.org/10.3390/s20072121
