Integrated Circuit Angular Displacement Sensor with On-chip Pinhole Aperture

Abstract

1. Introduction

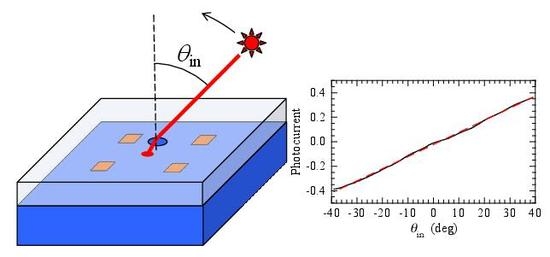

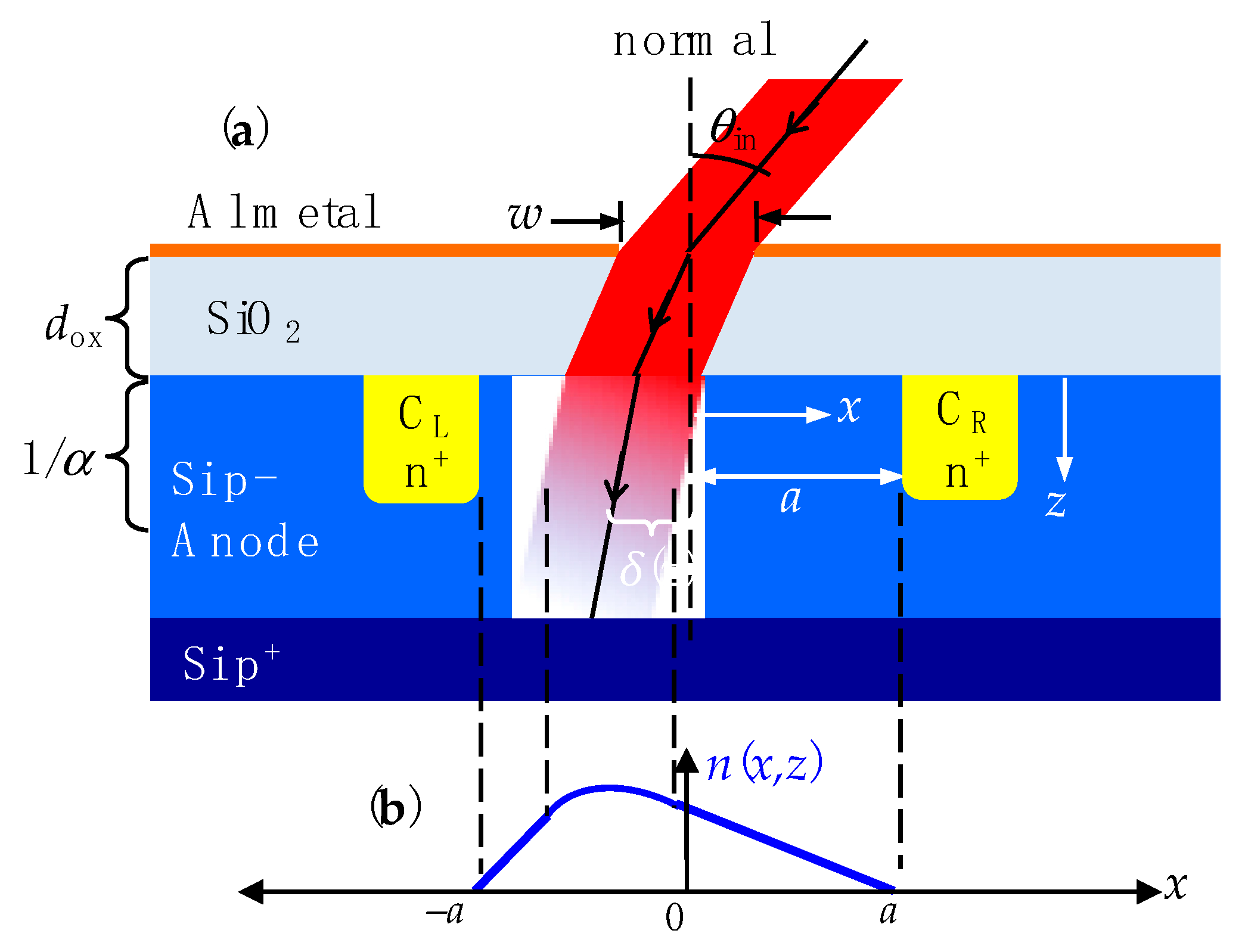

2. IC Astrolabe Concept and Angular Transduction Model

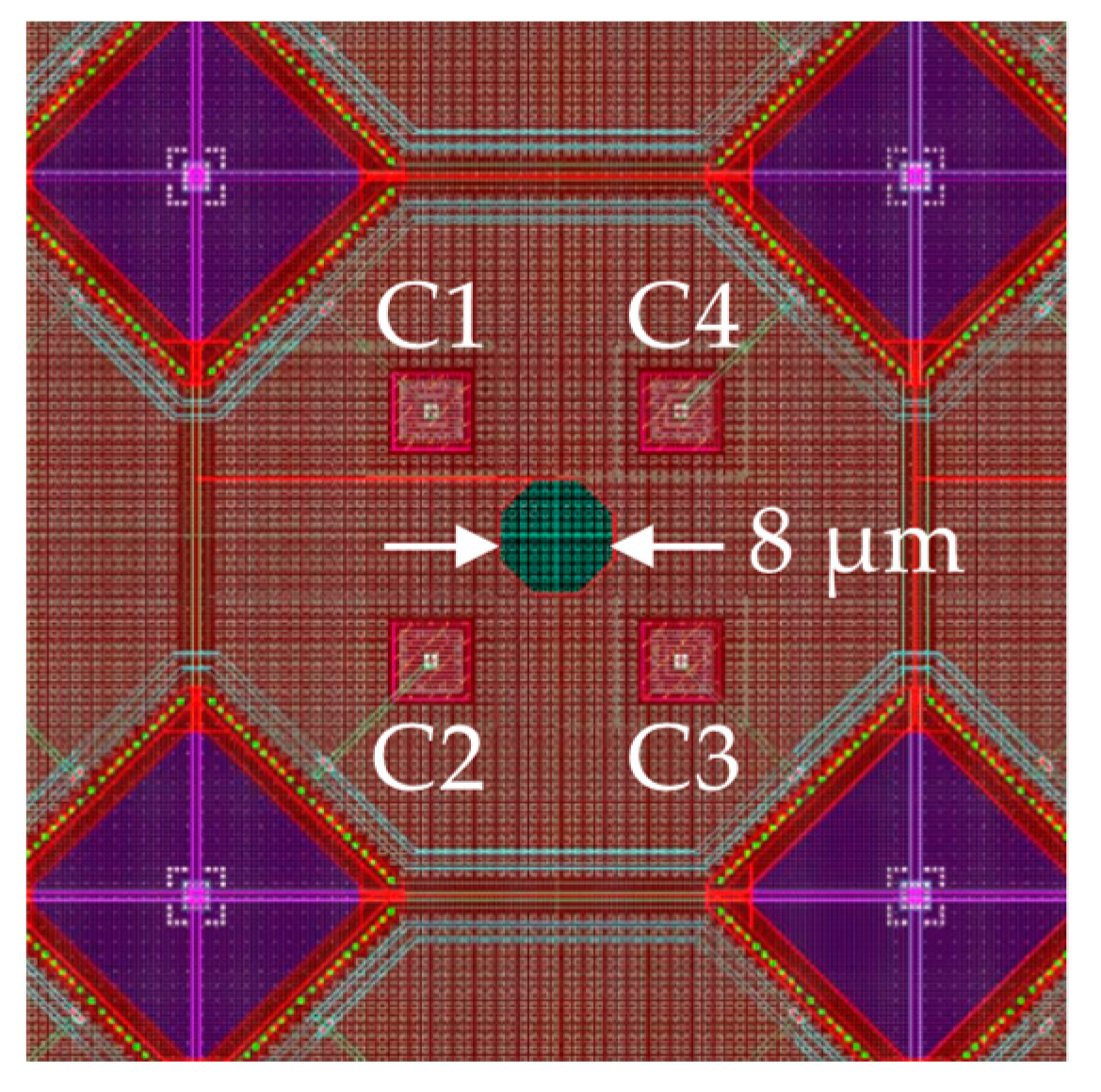

3. Prototype IC Astrolabe Layout and Fabrication

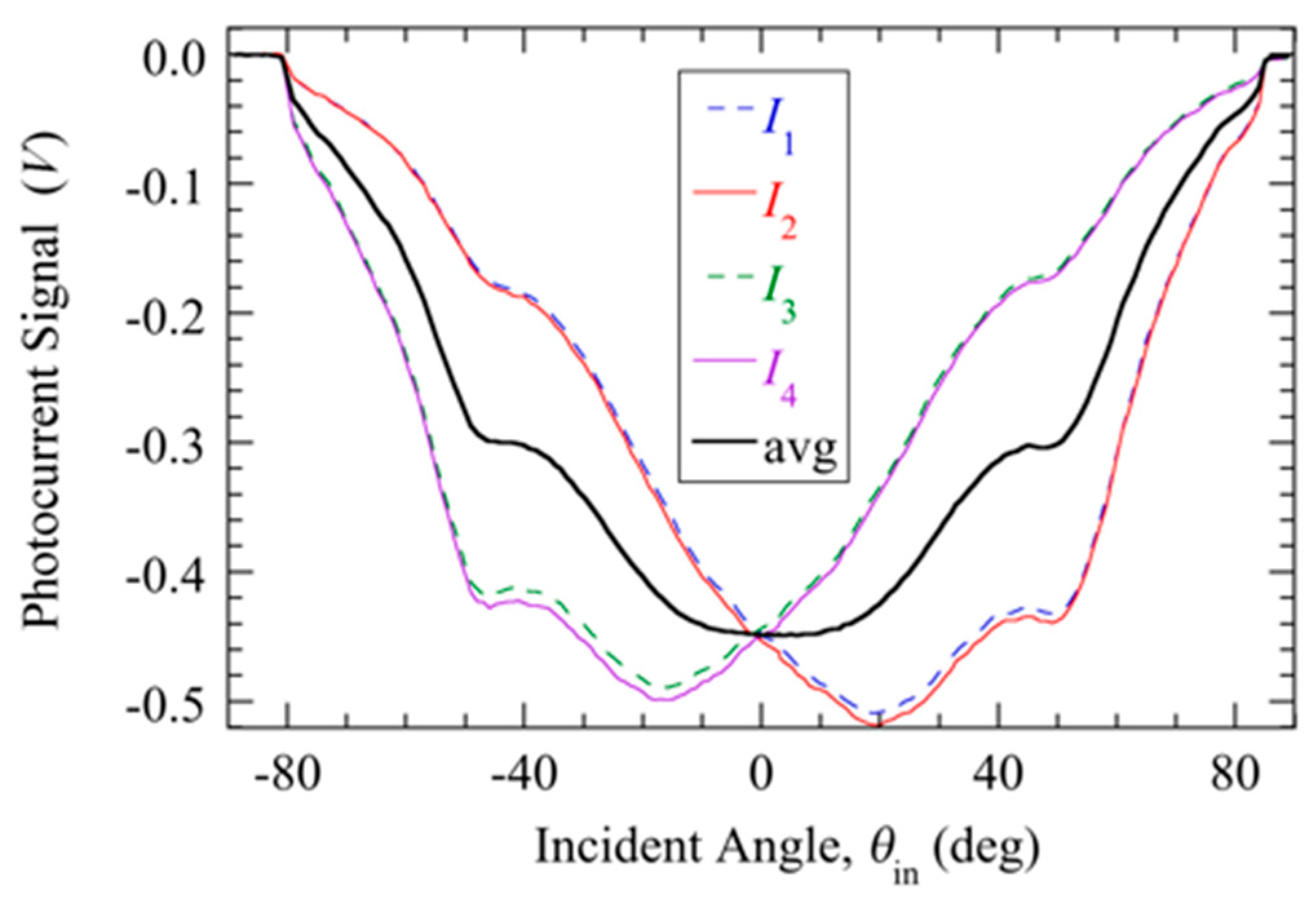

4. Basic Performance Characteristics of IC Astrolabe Prototype

4.1. Measurement Methods

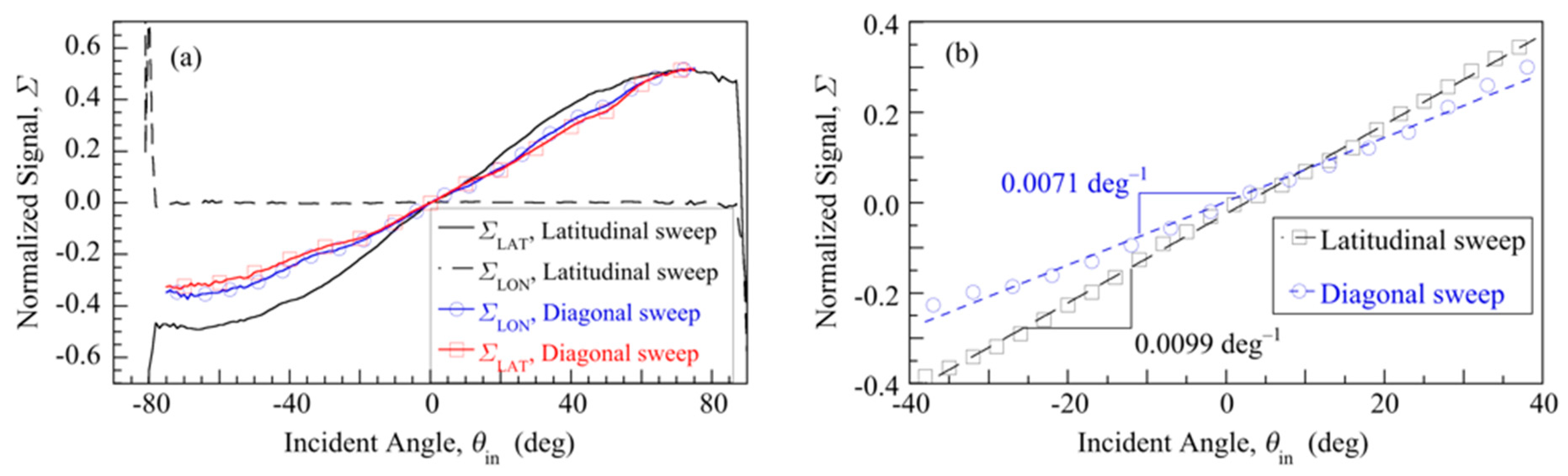

4.2. Horizon, Field-of-View, and Angular Sensitivity

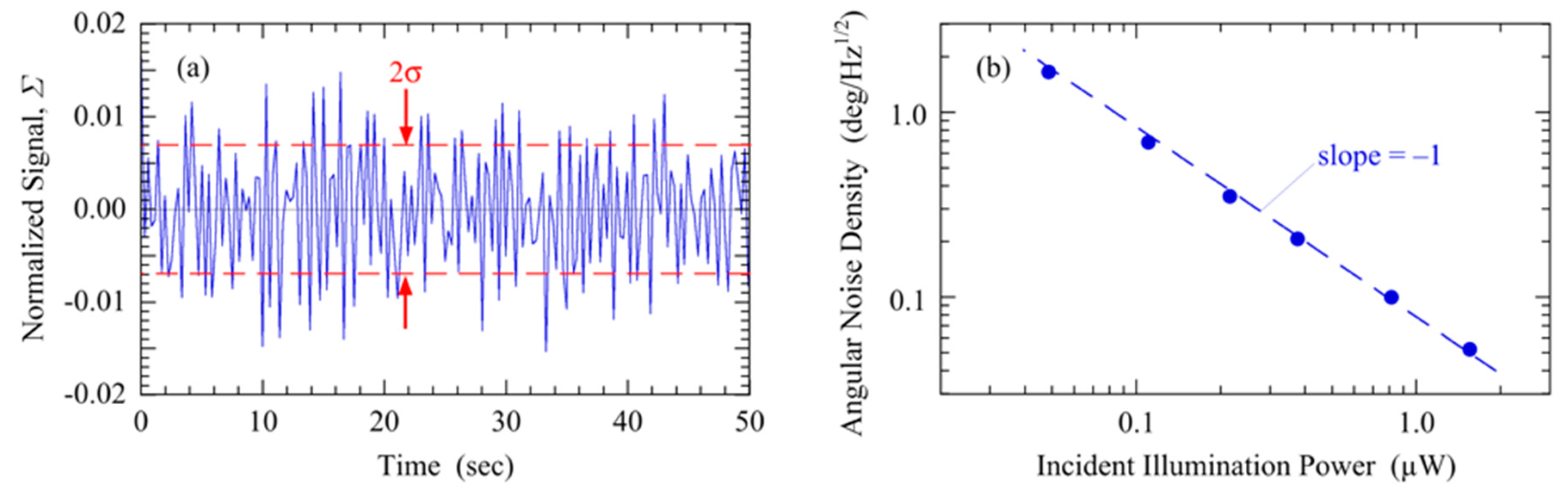

4.3. Basic Noise Characteristics

4.4. Light Tag Wavelength Dependence

4.5. Potential Future Improvements to the Prototype IC Astrolabe

5. Summary

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| LED Wavelength (nm) | S_LAT (deg⁻¹) | ηP_in (deg·µW/Hz^(1/2)) |
|---|---|---|
| 525 | 0.0094 | 0.257 |
| 660 | 0.0099 | 0.207 |
| 830 | 0.0069 | 0.263 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wijesinghe, U.; Dey, A.N.; Marshall, A.; Krenik, W.; Duan, C.; Edwards, H.; Lee, M. Integrated Circuit Angular Displacement Sensor with On-chip Pinhole Aperture. Sensors 2020, 20, 1794. https://doi.org/10.3390/s20061794
