Deep Learning Classification for Diabetic Foot Thermograms

Abstract

1. Introduction

2. Related Work

2.1. Segmentation and Feature Extraction

2.2. Classification with Computational Intelligence Methods

3. Methodology

3.1. Dataset and Data Augmentation

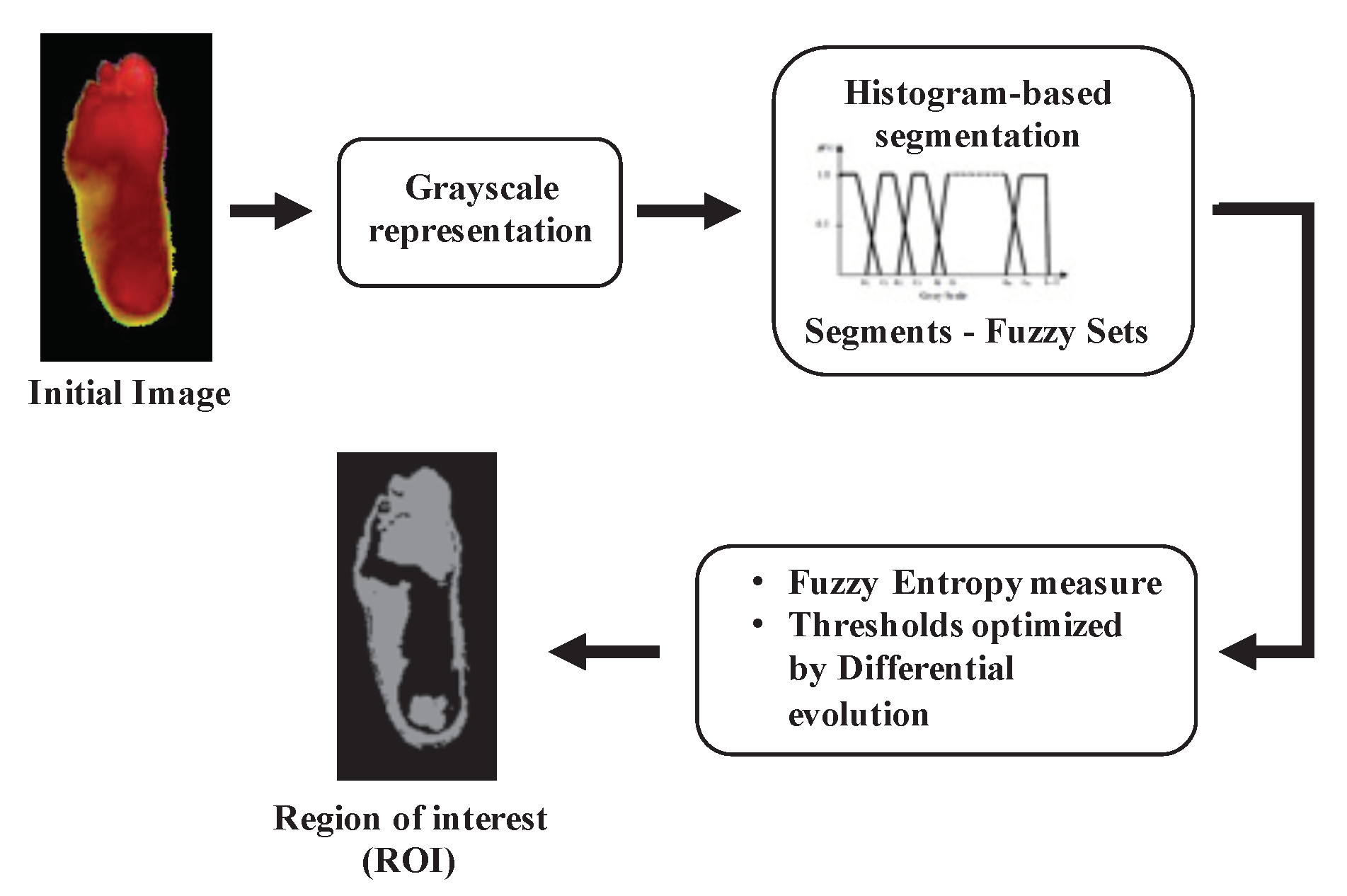

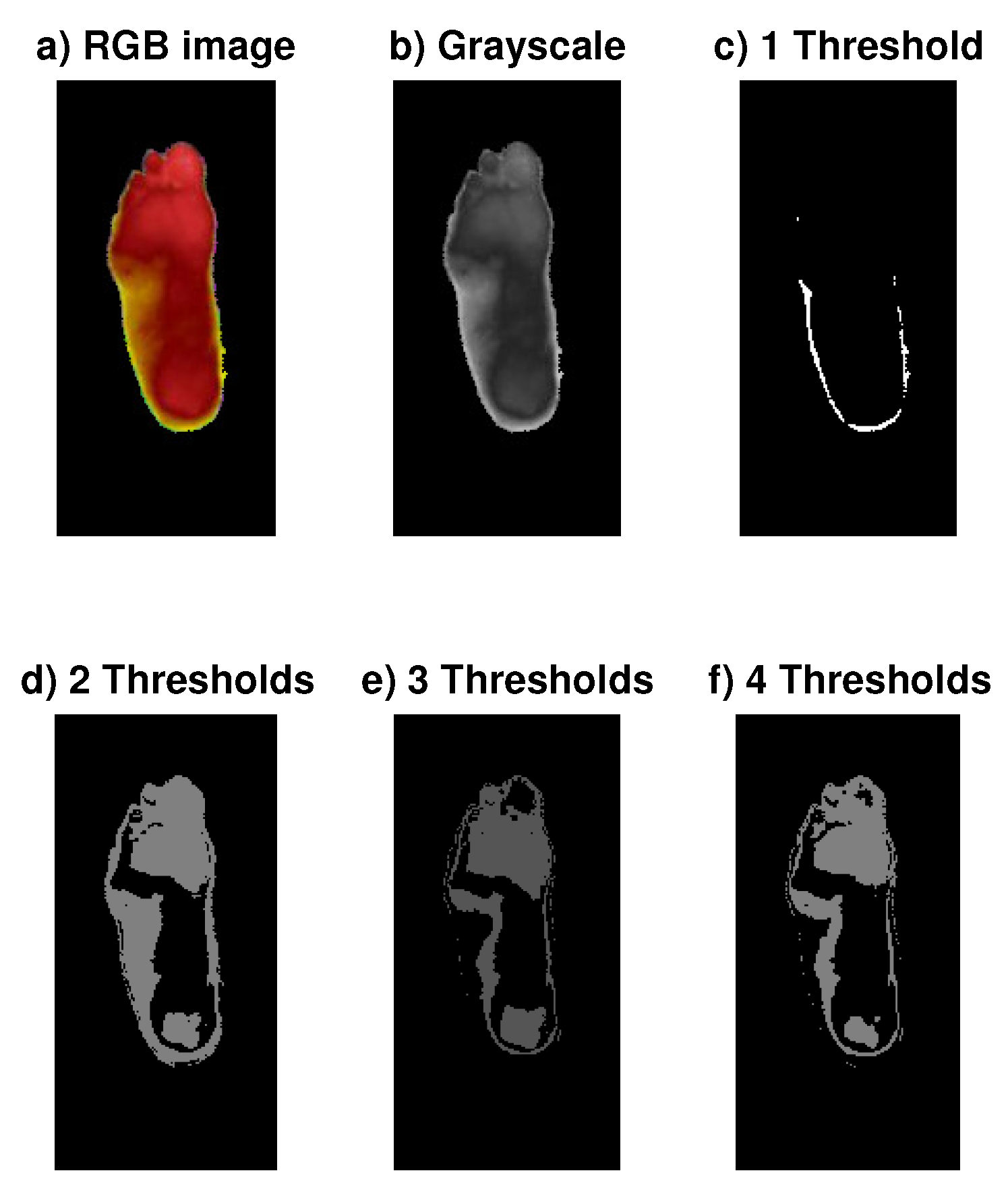

3.2. Automatic Segmentation

3.3. Machine Learning Classifiers

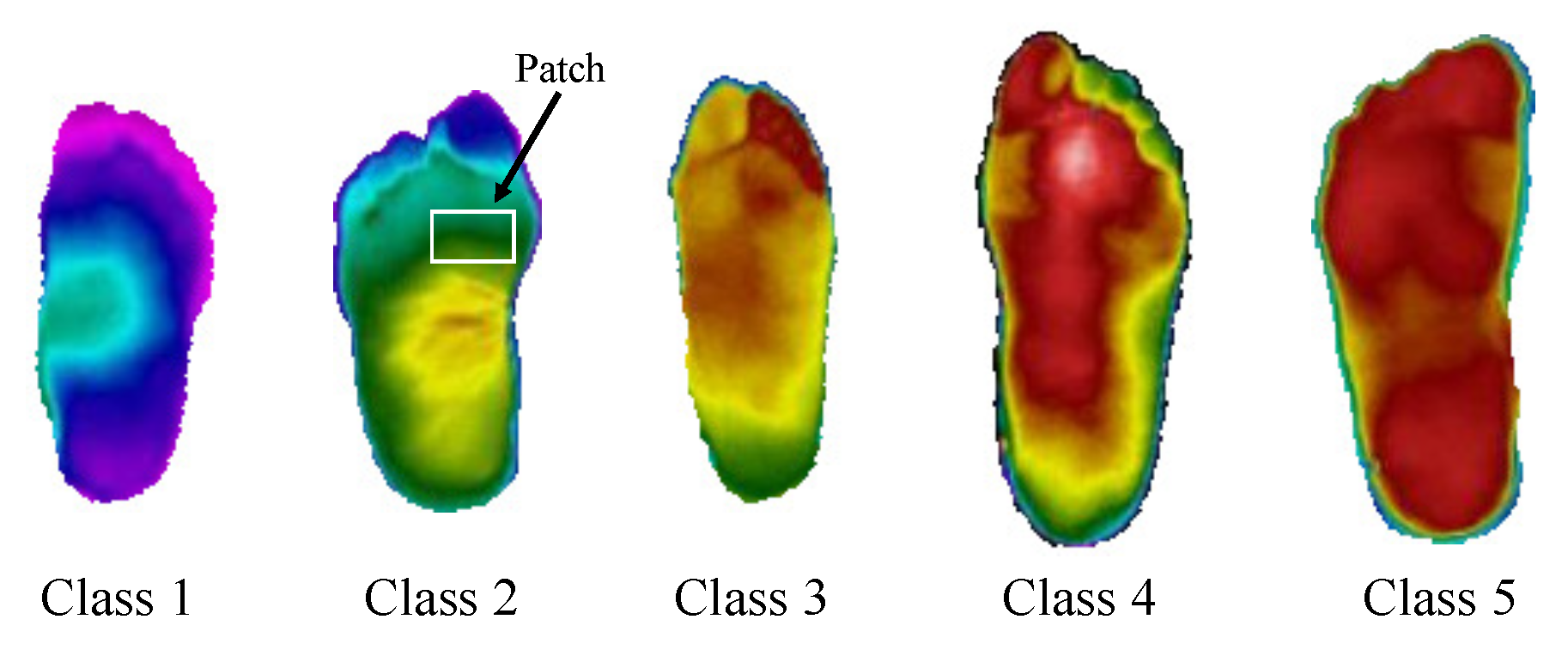

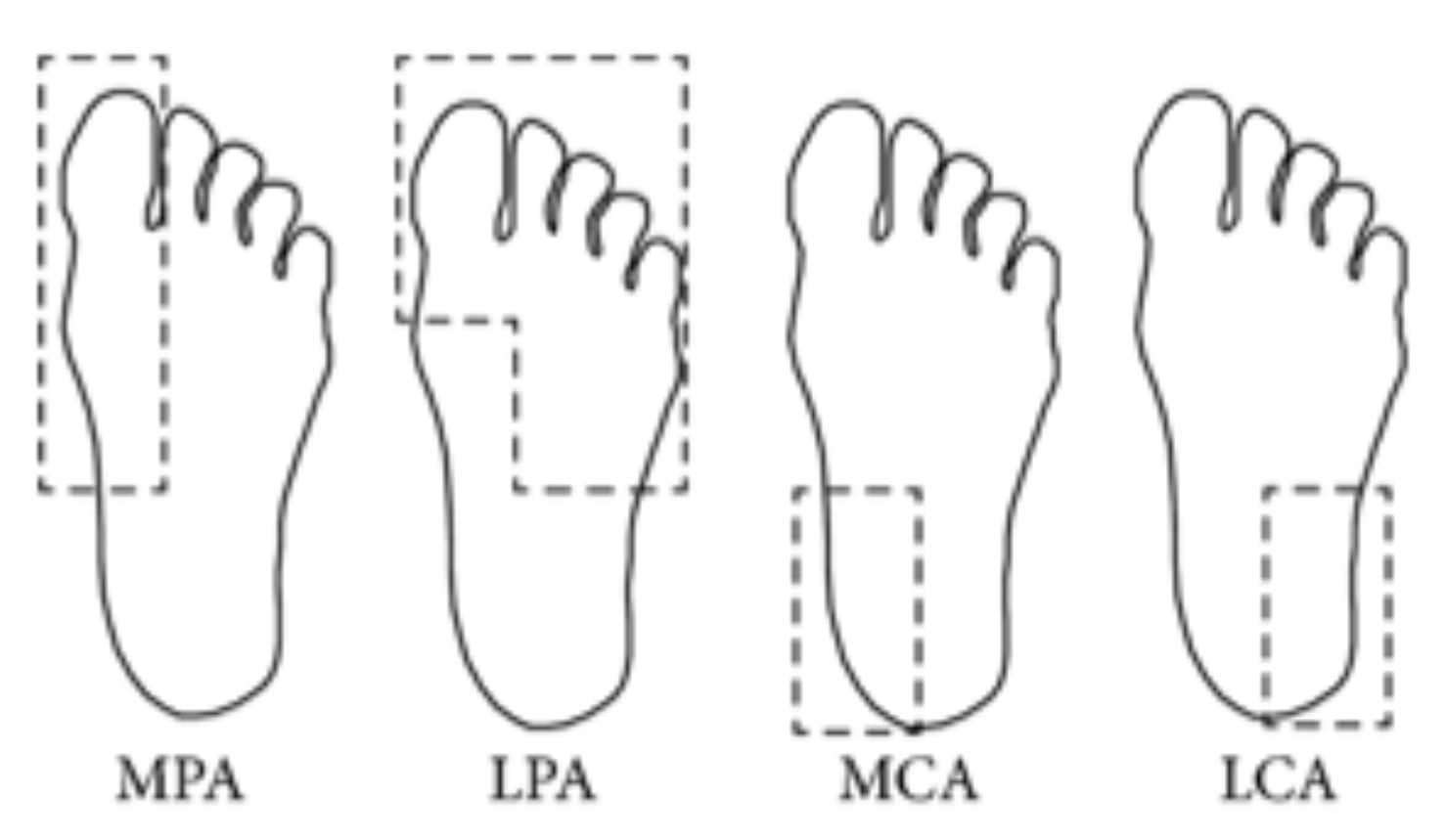

3.4. Multiple Classes

3.5. Performance Evaluation and Classification Scheme

3.6. Proposed Deep Learning Network

4. Experimental Results

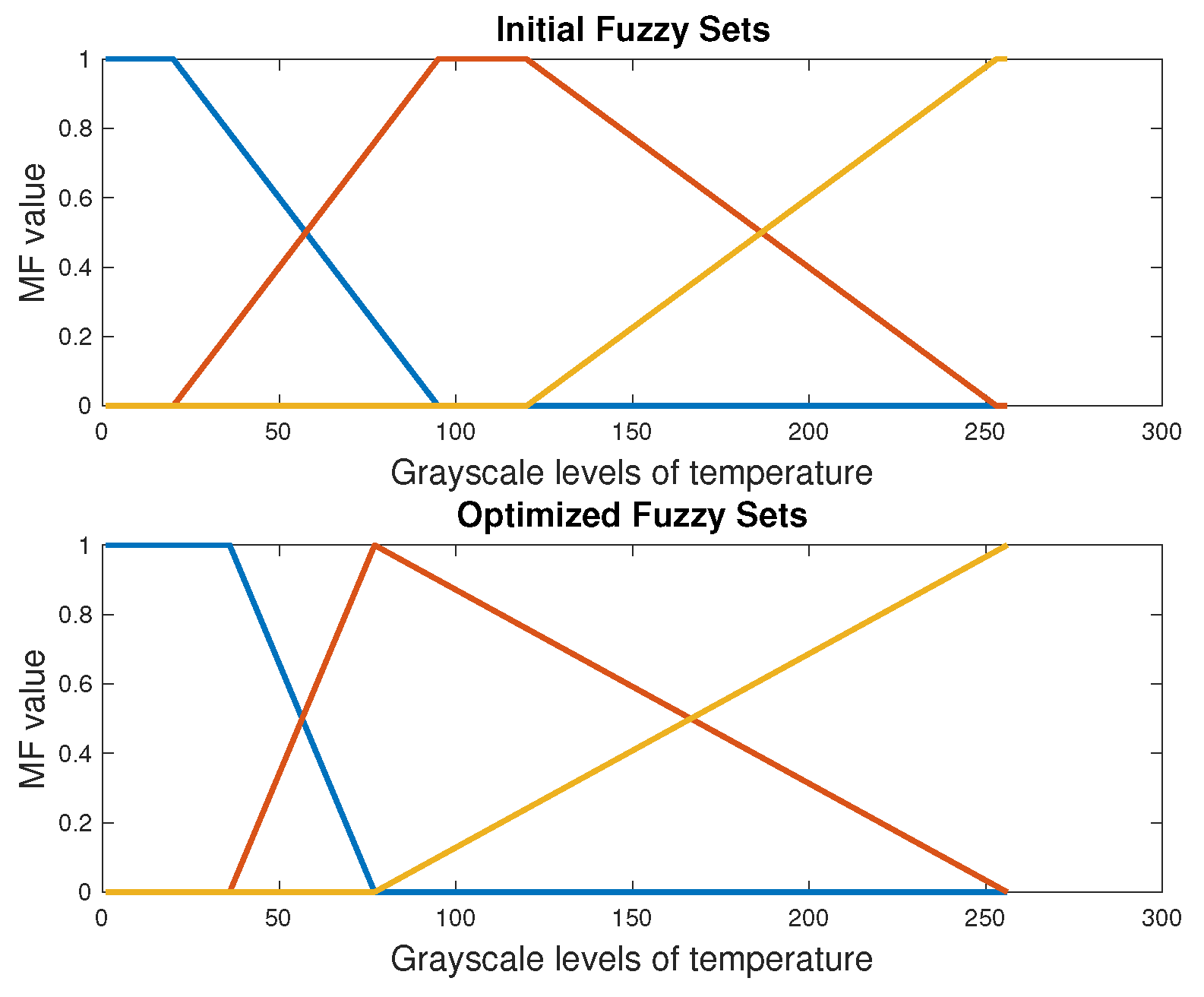

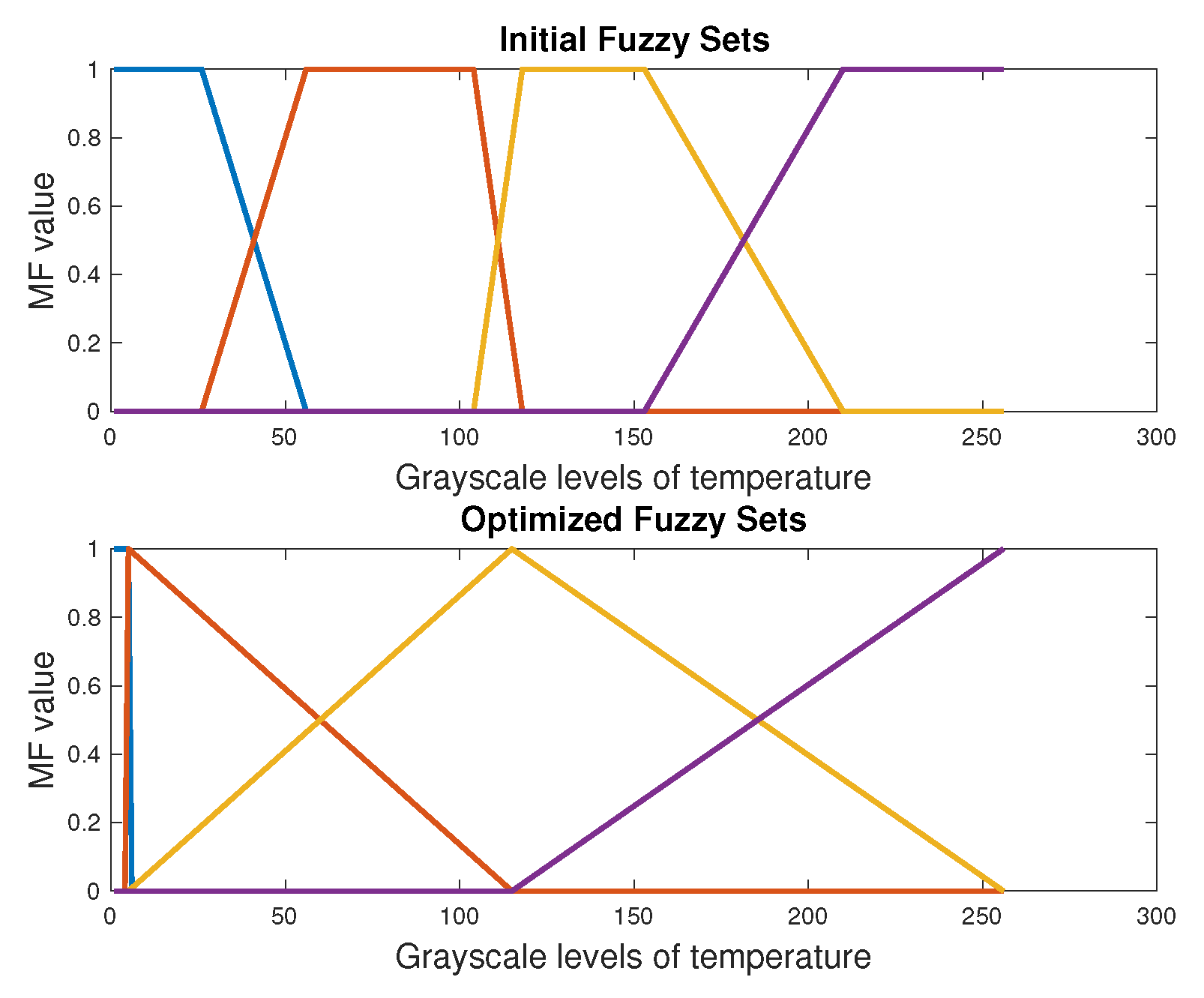

4.1. Automatic Segmentation with DE

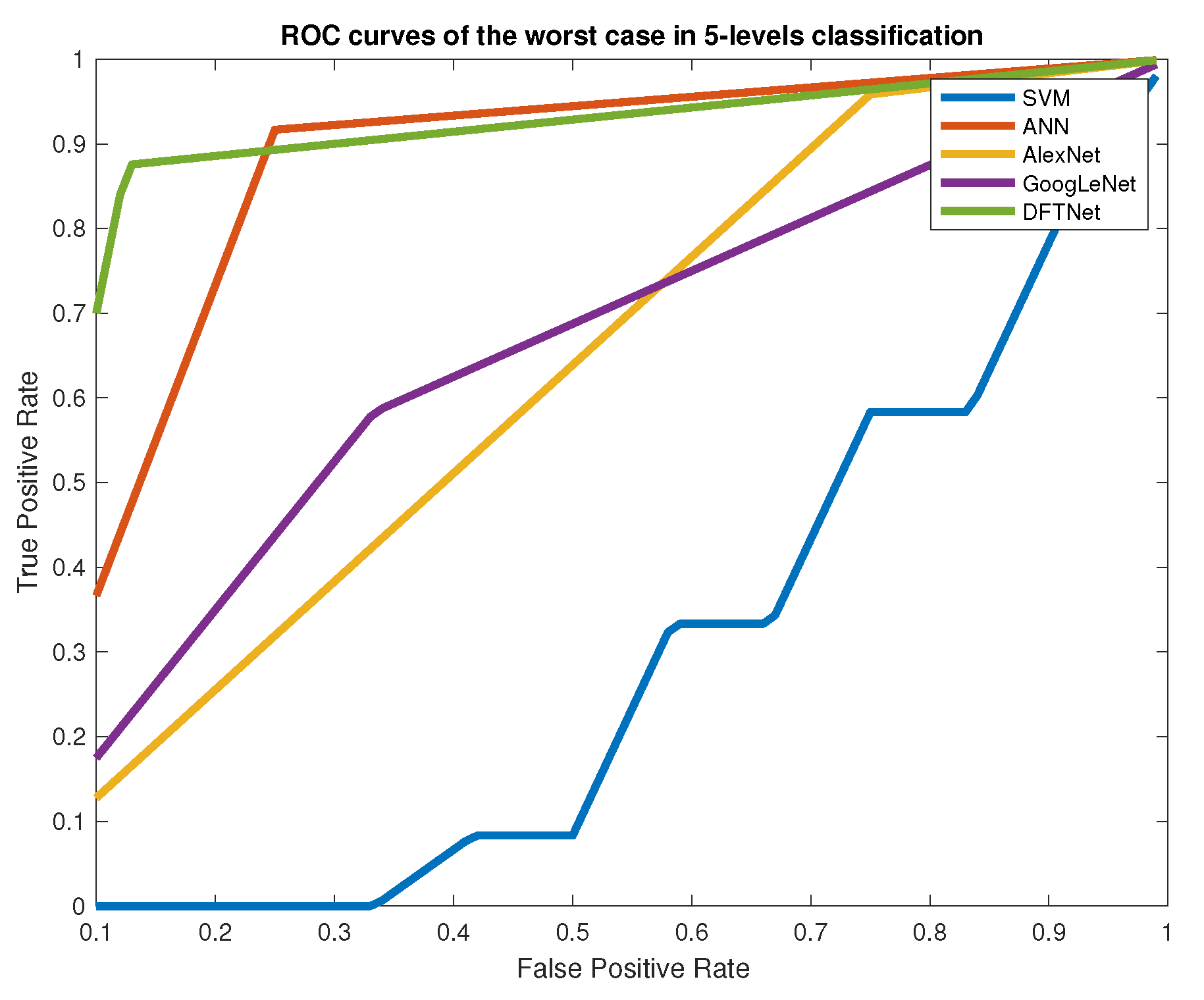

4.2. Classification of Multiple Classes

5. Discussion

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

Appendix A. Segmentation: Multilevel Shannon Entropy and Fuzzy Entropy

Appendix B. Differential Evolution

Appendix B.1. Artificial Neural Networks

Appendix B.2. Support Vector Machines

Appendix B.3. Deep Learning


| Layer No. | Layer Type | Filter Size | Stride | No. of Filters | FC Units |
|---|---|---|---|---|---|
| Layer 1 | Conv. | 7 × 7 | 1 × 1 | 32 | - |
| Layer 2 | Max-Pool | 3 × 3 | 2 × 2 | - | - |
| Layer 3 | Conv. | 1 × 1 | 1 × 1 | 64 | - |
| Layer 4 | Conv. | 3 × 3 | 1 × 1 | 64 | - |
| Layer 5 | Max-Pool | 3 × 3 | 2 × 2 | - | - |
| Layer 6 | Conv. | 3 × 3 | 1 × 1 | 32 | - |
| Layer 7 | Max-Pool | 2 × 2 | 2 × 2 | - | - |
| Layer 8 | Conv. | 3 × 3 | 1 × 1 | 32 | - |
| Layer 9 | Fully Connected | - | - | - | No. of classes |
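The layer table fixes kernel sizes, strides and filter counts, but not the input resolution, the number of input channels, or the padding. As a rough sanity check of the layer stack, the Python sketch below traces the output shape of each layer and counts trainable parameters under assumptions that are ours, not the authors': a hypothetical 128 × 128 single-channel thermogram, "same" zero padding for the convolutions, unpadded pooling, one bias per filter, and a fully connected layer applied to the flattened output of Layer 8. The names `Layer`, `ARCHITECTURE` and `trace` are illustrative only.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Layer:
    kind: str         # "conv", "pool" or "fc"
    kernel: int = 0   # square filter size k (k x k)
    stride: int = 1
    filters: int = 0  # output channels (conv layers only)


# Layers 1-9 transcribed from the table; the FC width is the number of classes.
ARCHITECTURE = [
    Layer("conv", 7, 1, 32),
    Layer("pool", 3, 2),
    Layer("conv", 1, 1, 64),
    Layer("conv", 3, 1, 64),
    Layer("pool", 3, 2),
    Layer("conv", 3, 1, 32),
    Layer("pool", 2, 2),
    Layer("conv", 3, 1, 32),
    Layer("fc"),
]


def out_size(n: int, k: int, s: int, p: int) -> int:
    # Standard output-size rule for convolution/pooling: floor((n + 2p - k) / s) + 1
    return (n + 2 * p - k) // s + 1


def trace(height: int, width: int, channels: int,
          num_classes: int) -> Tuple[List[Tuple[int, int, int]], int]:
    """Return per-layer output shapes (H, W, C) and the trainable parameter count.

    Assumptions (not given in the table): 'same' padding for convolutions,
    no padding for pooling, one bias per conv filter and per FC unit.
    """
    shapes, params = [], 0
    for layer in ARCHITECTURE:
        if layer.kind == "conv":
            pad = (layer.kernel - 1) // 2  # 'same' padding for odd kernels, stride 1
            params += layer.kernel * layer.kernel * channels * layer.filters + layer.filters
            height = out_size(height, layer.kernel, layer.stride, pad)
            width = out_size(width, layer.kernel, layer.stride, pad)
            channels = layer.filters
        elif layer.kind == "pool":
            # Max pooling has no trainable parameters
            height = out_size(height, layer.kernel, layer.stride, 0)
            width = out_size(width, layer.kernel, layer.stride, 0)
        else:
            # Fully connected layer on the flattened feature map
            flat = height * width * channels
            params += flat * num_classes + num_classes
            height, width, channels = 1, 1, num_classes
        shapes.append((height, width, channels))
    return shapes, params


if __name__ == "__main__":
    layer_shapes, n_params = trace(128, 128, 1, num_classes=2)
    for i, shape in enumerate(layer_shapes, start=1):
        print(f"Layer {i}: {shape}")
    print("trainable parameters:", n_params)
```

Under these assumptions a two-class model ends Layer 8 with a 15 × 15 × 32 feature map and has roughly 83 thousand parameters, most of them in Layer 4 and the fully connected layer; a different input size or padding scheme changes both figures.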

| Case | 1st Class | 2nd Class | Sensitivity | Specificity | Precision | Accuracy | F-measure | AUC |
|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 5 | 1.0000–0.9167 | 1.0000–0.9167 | 1.0000–0.9167 | 1.0000–0.9167 | 1.0000–0.9167 | 1.0000–0.9167 |
| 2 | 2 | 5 | 1.0000–0.9167 | 1.0000–0.8333 | 1.0000–0.8462 | 1.0000–0.8750 | 1.0000–0.8800 | 1.0000–0.8750 |
| 3 | 1 | 4 | 0.9167–0.6667 | 1.0000–1.0000 | 1.0000–1.0000 | 0.9583–0.8333 | 0.9565–0.8000 | 0.9167–0.8333 |
| 4 | 3 | 5 | 1.0000–1.0000 | 0.9167–0.5833 | 0.9231–0.7059 | 0.9583–0.7917 | 0.9600–0.8276 | 0.9826–0.7917 |
| 5 | 2 | 4 | 0.9167–0.6667 | 0.5000–0.9167 | 0.6471–0.8889 | 0.7083–0.7917 | 0.7586–0.7619 | 0.9167–0.7917 |
| 6 | 1 | 3 | 1.0000–0.5000 | 1.0000–1.0000 | 1.0000–1.0000 | 1.0000–0.7500 | 1.0000–0.6667 | 1.0000–0.7500 |
| 7 | 4 | 5 | 1.0000–1.0000 | 0.9167–0.4167 | 0.9231–0.6316 | 0.9583–0.7083 | 0.9600–0.7742 | 1.0000–0.7083 |
| 8 | 3 | 4 | 0.2500–0.6667 | 0.5833–1.0000 | 0.3750–1.0000 | 0.4167–0.8333 | 0.3000–0.8000 | 0.3194–0.8333 |
| 9 | 2 | 3 | 1.0000–0.8333 | 1.0000–1.0000 | 1.0000–1.0000 | 1.0000–0.9167 | 1.0000–0.9091 | 1.0000–0.9167 |
| 10 | 1 | 2 | 0.8333–0.5000 | 0.5833–1.0000 | 0.6667–1.0000 | 0.7083–0.7500 | 0.7407–0.6667 | 0.7639–0.7500 |
| Average | | | 0.8917–0.7667 | 0.8500–0.8667 | 0.8535–0.8989 | 0.8708–0.8167 | 0.8676–0.8003 | 0.8899–0.8167 |
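Each cell above reports two values separated by an en dash, and the table itself does not label which method each side belongs to. The Average row is consistent with a plain column-wise mean over the ten pairwise cases, taken separately for the left and the right values. The short Python sketch below checks this for the Sensitivity column, using the cell values copied verbatim; the helper names `split_cell` and `column_average` are illustrative only.

```python
from statistics import mean
from typing import List, Tuple

# Sensitivity column of the table above (cases 1-10), copied verbatim.
SENSITIVITY_CELLS = [
    "1.0000–0.9167", "1.0000–0.9167", "0.9167–0.6667", "1.0000–1.0000",
    "0.9167–0.6667", "1.0000–0.5000", "1.0000–1.0000", "0.2500–0.6667",
    "1.0000–0.8333", "0.8333–0.5000",
]


def split_cell(cell: str) -> Tuple[float, float]:
    """Split a 'left–right' cell on the en dash into two floats."""
    left, right = cell.split("–")
    return float(left), float(right)


def column_average(cells: List[str]) -> Tuple[float, float]:
    """Mean of the left values and of the right values, rounded to 4 decimals."""
    pairs = [split_cell(cell) for cell in cells]
    left_mean = mean(left for left, _ in pairs)
    right_mean = mean(right for _, right in pairs)
    return round(left_mean, 4), round(right_mean, 4)


if __name__ == "__main__":
    # Compare with the Average row of the Sensitivity column
    print(column_average(SENSITIVITY_CELLS))
```

The same unweighted mean reproduces the other columns of the Average row (for example 0.8500–0.8667 for Specificity), so each case contributes equally regardless of how many images its two classes contain.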

| Case | 1st Class | 2nd Class | Sensitivity | Specificity | Precision | Accuracy | F-measure | AUC |
|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 5 | 0.9545–0.9091 | 1.0000–1.0000 | 1.0000–1.0000 | 0.9783–0.9565 | 0.9767–0.9524 | 0.9773–0.9545 |
| 2 | 2 | 5 | 1.0000–0.9583 | 0.9583–0.9583 | 0.9600–0.9583 | 0.9792–0.9583 | 0.9796–0.9583 | 0.9792–0.9583 |
| 3 | 1 | 4 | 0.7727–0.7727 | 1.0000–0.9583 | 1.0000–0.9444 | 0.8913–0.8696 | 0.8718–0.8500 | 0.8864–0.8655 |
| 4 | 3 | 5 | 0.9583–0.9167 | 0.7917–0.7917 | 0.8214–0.8148 | 0.8750–0.8542 | 0.8846–0.8627 | 0.8750–0.8542 |
| 5 | 2 | 4 | 0.9167–0.7917 | 0.8333–0.9167 | 0.8462–0.9048 | 0.8750–0.8542 | 0.8800–0.8444 | 0.8750–0.8542 |
| 6 | 1 | 3 | 0.9545–0.9091 | 0.5417–1.0000 | 0.6563–1.0000 | 0.7391–0.9565 | 0.7778–0.9524 | 0.7481–0.9545 |
| 7 | 4 | 5 | 0.7500–0.8333 | 0.8750–0.7500 | 0.8571–0.7692 | 0.8125–0.7917 | 0.8000–0.8000 | 0.8125–0.7917 |
| 8 | 3 | 4 | 0.5000–0.5833 | 0.5417–0.6250 | 0.5217–0.6087 | 0.5208–0.6042 | 0.5106–0.5957 | 0.5208–0.6042 |
| 9 | 2 | 3 | 0.8333–0.4583 | 0.6250–0.9583 | 0.6897–0.9167 | 0.7292–0.7083 | 0.7547–0.6111 | 0.7292–0.7083 |
| 10 | 1 | 2 | 0.8182–0.3182 | 0.6250–0.9167 | 0.6667–0.7778 | 0.7174–0.6304 | 0.7347–0.4516 | 0.7216–0.6174 |
| Average | | | 0.8458–0.7451 | 0.7792–0.8875 | 0.8019–0.8695 | 0.8118–0.8184 | 0.8171–0.7879 | 0.8125–0.8163 |

| Case | 1st Class | 2nd Class | Sensitivity | Specificity | Precision | Accuracy | F-measure | AUC |
|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 5 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |
| 2 | 2 | 5 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |
| 3 | 1 | 4 | 1.0000 | 0.9583 | 0.9565 | 0.9783 | 0.9778 | 0.9792 |
| 4 | 3 | 5 | 0.8333 | 0.9583 | 0.9524 | 0.8958 | 0.8889 | 0.8958 |
| 5 | 2 | 4 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |
| 6 | 1 | 3 | 0.9545 | 0.9583 | 0.9545 | 0.9565 | 0.9545 | 0.9564 |
| 7 | 4 | 5 | 0.9583 | 0.9583 | 0.9583 | 0.9583 | 0.9583 | 0.9583 |
| 8 | 3 | 4 | 0.9167 | 0.7500 | 0.7857 | 0.8333 | 0.8462 | 0.8533 |
| 9 | 2 | 3 | 0.9167 | 0.8750 | 0.8800 | 0.8958 | 0.8980 | 0.8958 |
| 10 | 1 | 2 | 0.9545 | 0.9167 | 0.9130 | 0.9348 | 0.9333 | 0.9356 |
| Average | | | 0.9534 | 0.9375 | 0.9401 | 0.9453 | 0.9457 | 0.9455 |
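The six metric columns are standard binary measures computed from a 2 × 2 confusion matrix for each pair of classes. The Python sketch below assumes that the 1st Class of a case is the positive class and uses hypothetical confusion-matrix counts, chosen only because they reproduce the reported fractions (24 test images per class for Case 4; 22 and 24 for Case 3). The actual test-set sizes are not stated alongside the table. The AUC line uses the single-threshold shortcut (sensitivity + specificity) / 2, which matches Cases 3 and 4 but not every row (Case 8, for example), so the published AUC values may instead have been computed from continuous classifier scores. The function name `binary_metrics` is illustrative.

```python
from typing import Dict


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Binary classification metrics from confusion-matrix counts.

    Assumption: the '1st Class' of a case is treated as the positive class.
    """
    sensitivity = tp / (tp + fn)                # true positive rate (recall)
    specificity = tn / (tn + fp)                # true negative rate
    precision = tp / (tp + fp)                  # positive predictive value
    accuracy = (tp + tn) / (tp + fp + tn + fn)  # fraction of correct predictions
    # F-measure: harmonic mean of precision and sensitivity
    f_measure = 2 * precision * sensitivity / (precision + sensitivity)
    # Area under the ROC polyline (0,0) -> (1 - spec, sens) -> (1,1);
    # only meaningful when the classifier outputs hard labels.
    auc_hard = (sensitivity + specificity) / 2
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "accuracy": accuracy,
        "f_measure": f_measure,
        "auc": auc_hard,
    }


if __name__ == "__main__":
    # Hypothetical counts reproducing Case 4 (classes 3 vs. 5), 24 images per class
    case4 = binary_metrics(tp=20, fp=1, tn=23, fn=4)
    print({name: round(value, 4) for name, value in case4.items()})
```

Rounded to four decimals, these counts give 0.8333, 0.9583, 0.9524, 0.8958, 0.8889 and 0.8958, matching the Case 4 row above.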

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Cruz-Vega, I.; Hernandez-Contreras, D.; Peregrina-Barreto, H.; Rangel-Magdaleno, J.d.J.; Ramirez-Cortes, J.M. Deep Learning Classification for Diabetic Foot Thermograms. Sensors 2020, 20, 1762. https://doi.org/10.3390/s20061762
