Smart Containers Schedulers for Microservices Provision in Cloud-Fog-IoT Networks. Challenges and Opportunities

Abstract

1. Introduction

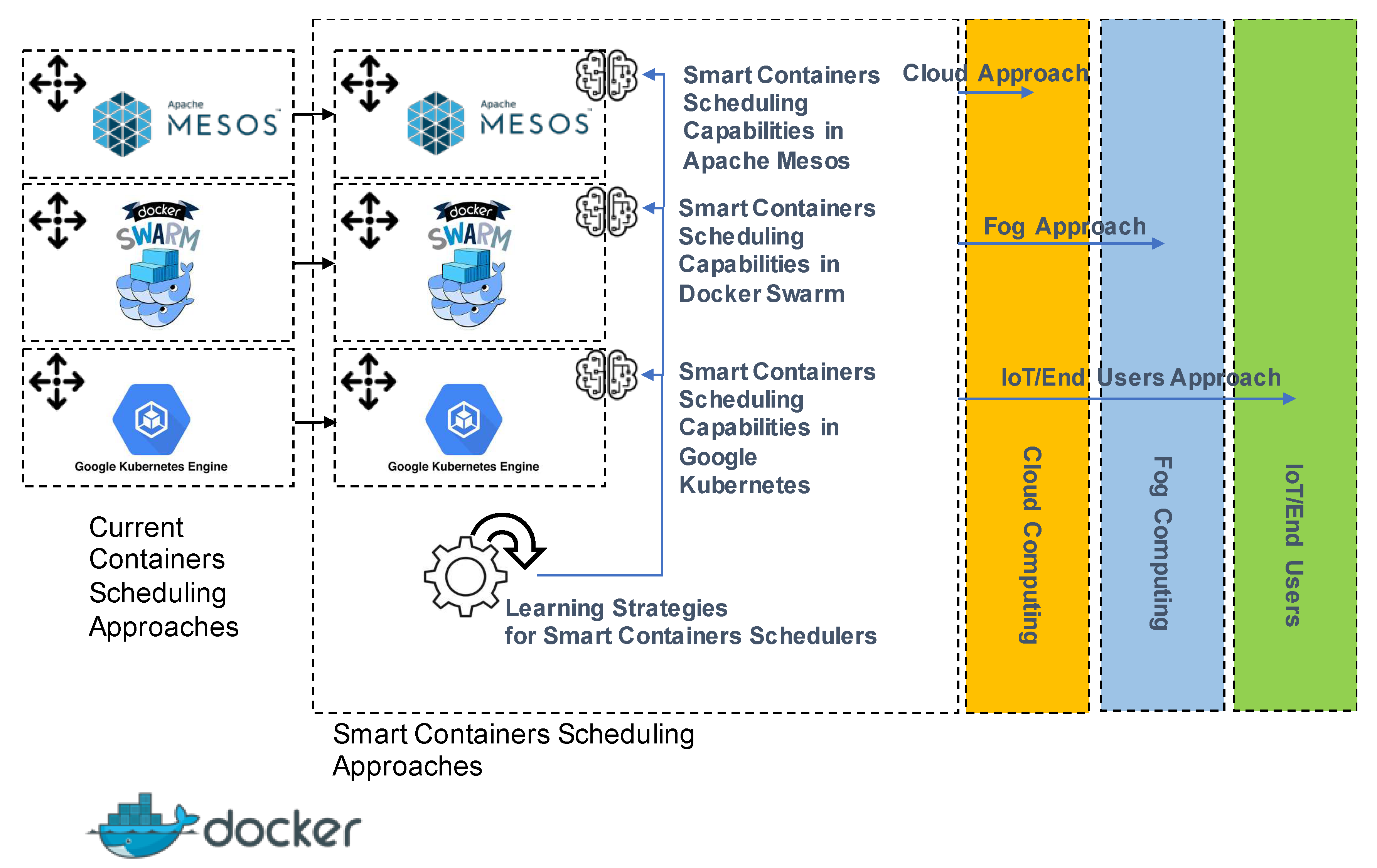

- First, the absence of intelligent container scheduling strategies in today's major Docker containers’ management tools, namely the open-source solutions Docker Swarm, Apache Mesos and Google Kubernetes, is studied. Specifically, it is discussed how incorporating soft-computing strategies, such as fuzzy logic (FL), evolutionary computation (EC) and bio-inspired computation, as well as diverse machine learning (ML) strategies, such as neural networks (NNs) and the derived deep learning (DL), represents an open issue with major potential advantages [12,13]. In particular, it could allow the millions of users and administrators of these containers’ management tools to achieve more efficient and personalized scheduling of their microservices, according to their specific objectives and applications in fog, cloud or IoT. Although these techniques have been widely proven effective for scheduling tasks and virtual machines (VMs) in recent years [14,15,16,17,18], adapting and adopting them for container scheduling poses multiple challenges as well as opportunities. At the time of writing, these challenges and opportunities have scarcely been explored or analyzed, which motivates this work [19,20].

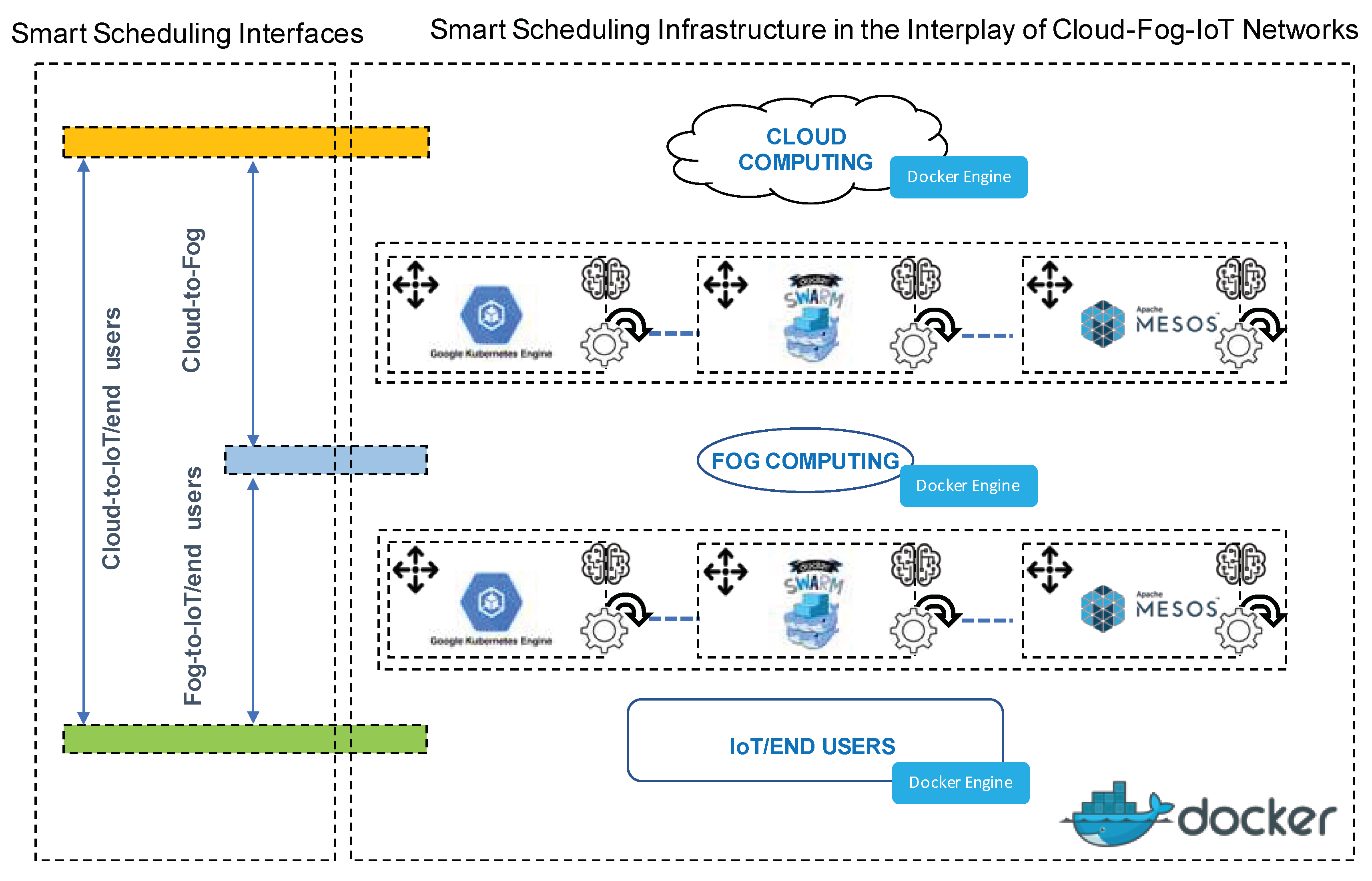

- Secondly, the potential benefits of specific smart containers’ schedulers for Docker Swarm, Apache Mesos and Google Kubernetes are analyzed for the different interfaces in cloud-fog-IoT networks and the different types of microservices. Specifically, this work argues, on the one hand, for optimizing runtime in the fog-to-cloud and IoT-to-cloud interfaces and, on the other hand, for optimizing latency, load balance and energy in the fog-to-IoT interface. Hence, interface-based scheduling solutions are proposed for objectives that arise very frequently in the execution of microservices.

- Thirdly, the scientific-technical and market impact of the proposed challenges and opportunities is discussed to show the significance of this research line.
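To make the idea of a soft-computing-based container scheduler more concrete, the following Python sketch scores candidate nodes with a small zero-order Takagi-Sugeno fuzzy rule base and places a container on the best-scoring node, analogous in spirit to the priority (scoring) functions of the Kubernetes scheduler [49]. It is a minimal illustration under stated assumptions, not the authors' method or any platform's API: the `Node` fields (`cpu_free`, `latency_ms`), the 100 ms normalization bound, the membership functions and the rule consequents are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Node:
    name: str
    cpu_free: float    # hypothetical metric: fraction of CPU currently free, in [0, 1]
    latency_ms: float  # hypothetical metric: latency to the requesting client, in ms


LATENCY_MAX_MS = 100.0  # hypothetical normalization bound for latency


def clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


def memberships(node: Node) -> Dict[str, float]:
    """Complementary linear membership functions (an assumption, for brevity)."""
    cpu = clamp01(node.cpu_free)
    lat_low = clamp01((LATENCY_MAX_MS - node.latency_ms) / LATENCY_MAX_MS)
    return {"cpu_low": 1.0 - cpu, "cpu_high": cpu,
            "lat_low": lat_low, "lat_high": 1.0 - lat_low}


# Hypothetical rule base: IF cpu IS a AND latency IS b THEN score = z
RULES = [
    ("cpu_high", "lat_low", 1.0),
    ("cpu_high", "lat_high", 0.5),
    ("cpu_low", "lat_low", 0.4),
    ("cpu_low", "lat_high", 0.0),
]


def fuzzy_score(node: Node) -> float:
    """Zero-order Takagi-Sugeno inference: min for AND, weighted-average defuzzification."""
    mu = memberships(node)
    num = den = 0.0
    for a, b, z in RULES:
        w = min(mu[a], mu[b])  # firing strength of the rule
        num += w * z
        den += w
    return num / den if den > 0.0 else 0.0


def schedule(nodes: List[Node]) -> Node:
    """Place the container on the node with the highest fuzzy score."""
    return max(nodes, key=fuzzy_score)


if __name__ == "__main__":
    nodes = [Node("fog-1", 0.8, 10.0), Node("cloud-1", 0.95, 80.0), Node("iot-gw", 0.2, 5.0)]
    for n in nodes:
        print(n.name, round(fuzzy_score(n), 3))
    print("selected:", schedule(nodes).name)
```

Here the nearby fog node with ample free CPU wins over both the distant cloud node and the busy IoT gateway. In practice, the rule base would not be hand-written: it could be tuned or learned with evolutionary or swarm-based methods, as in the genetic fuzzy systems of [12].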

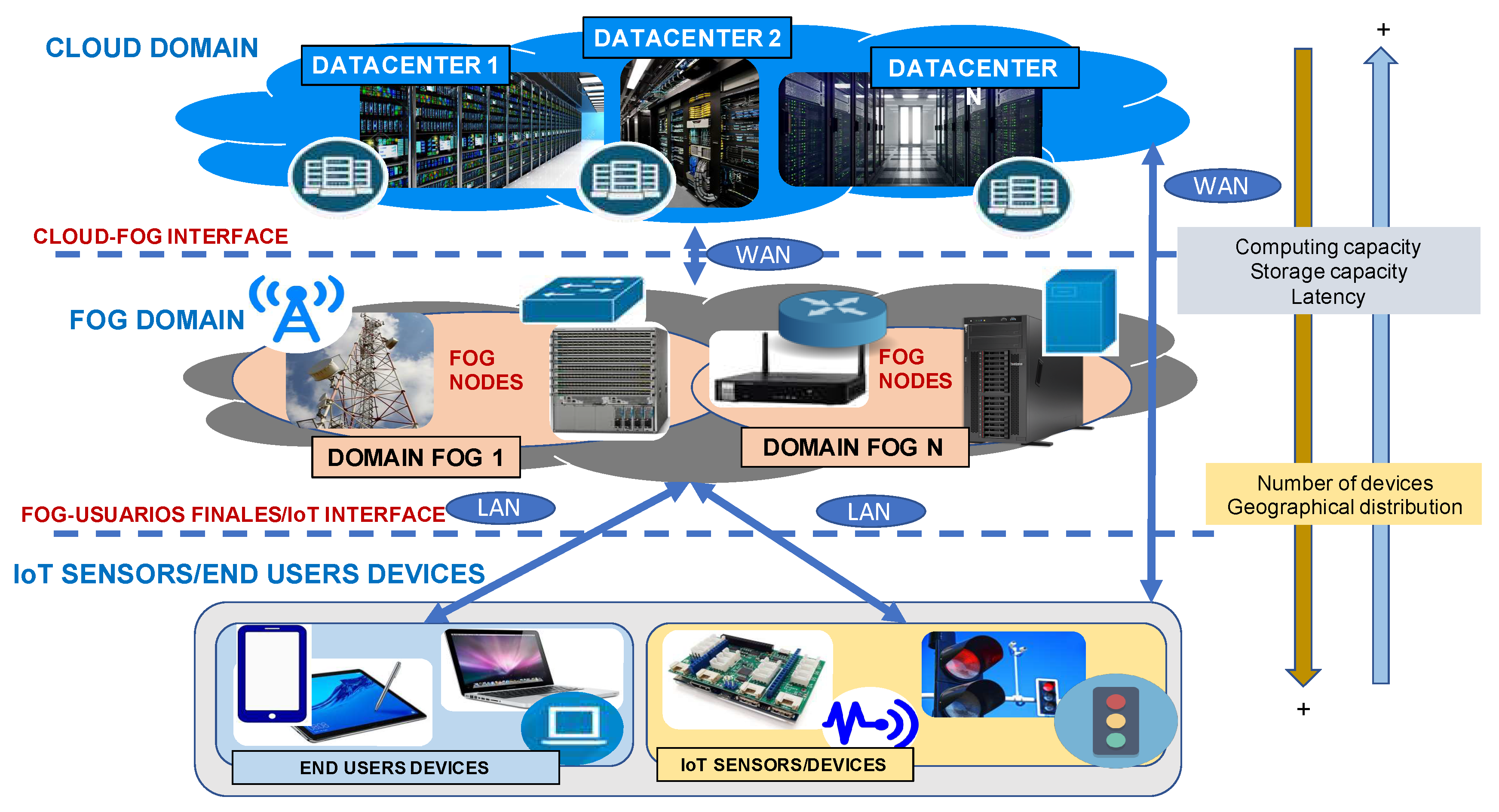

2. Fundamentals of Cloud-Fog-IoT Networks

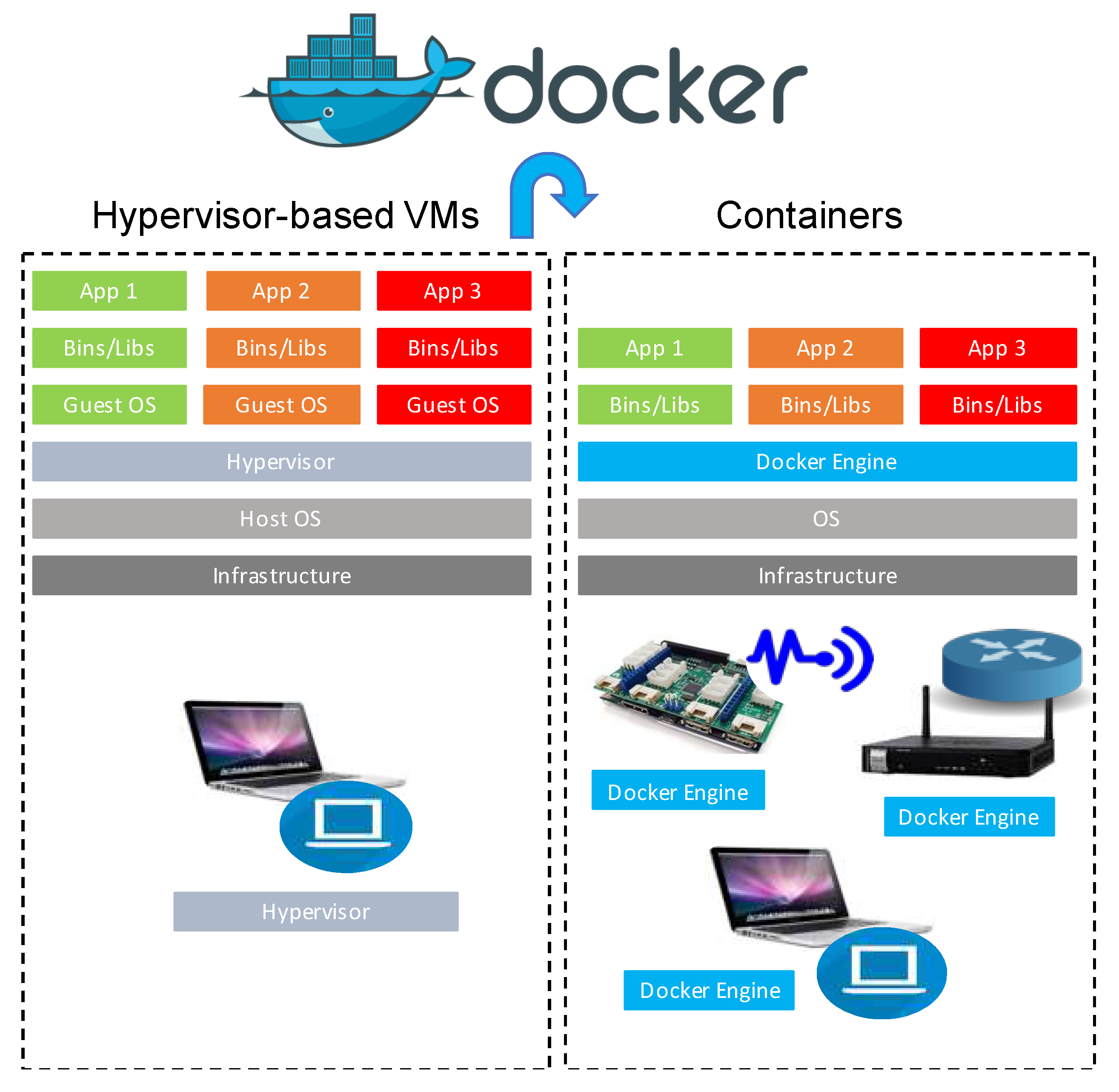

3. Fundamentals of Containers

3.1. The Concept of Container

3.2. Docker Containers

4. Previous Works in Containers’ Management and Smart Scheduling

4.1. Containers’ Management Platforms in the Market

4.2. Docker Containers’ Management Platforms in the Market: Scheduling Strategies

4.3. Smart Containers’ Scheduling Strategies in Scientific Literature

5. Challenges and Opportunities. Smart Scheduling in the Dominant Containers’ Management Systems

5.1. Challenge Statement

5.2. Suitable Techniques

5.3. Scheduling General Framework

5.4. Expected Results

6. Challenges and Opportunities. Smart Containers’ Scheduling in the Cloud-Fog-IoT Interfaces

6.1. Challenge Statement

6.2. Interfaces in Cloud-Fog-IoT Networks

- Cloud-to-fog

- Fog-to-IoT/end users

- Cloud-to-IoT/end users
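The interface-based proposal stated in the Introduction (runtime for the fog-cloud and IoT-cloud interfaces; latency, load balance and energy for the fog-IoT interface) can be sketched as a per-interface weighted-sum cost. This is a minimal Python illustration assuming that each candidate node exposes normalized metrics in [0, 1], where lower is better; the `Interface` values mirror the list above, while the metric names and weight values are hypothetical.

```python
from enum import Enum
from typing import Dict


class Interface(Enum):
    CLOUD_TO_FOG = "cloud-to-fog"
    FOG_TO_IOT = "fog-to-IoT/end users"
    CLOUD_TO_IOT = "cloud-to-IoT/end users"


# Objectives per interface follow the text; the numeric weights are hypothetical.
OBJECTIVE_WEIGHTS: Dict[Interface, Dict[str, float]] = {
    Interface.CLOUD_TO_FOG: {"runtime": 1.0},
    Interface.CLOUD_TO_IOT: {"runtime": 1.0},
    Interface.FOG_TO_IOT: {"latency": 0.4, "load": 0.3, "energy": 0.3},
}


def weighted_cost(metrics: Dict[str, float], interface: Interface) -> float:
    """Weighted sum of the normalized metrics relevant to this interface (lower is better)."""
    weights = OBJECTIVE_WEIGHTS[interface]
    return sum(w * metrics[objective] for objective, w in weights.items())


def place(candidates: Dict[str, Dict[str, float]], interface: Interface) -> str:
    """Return the name of the candidate node with the lowest interface-specific cost."""
    return min(candidates, key=lambda name: weighted_cost(candidates[name], interface))


if __name__ == "__main__":
    candidates = {
        "fast-far": {"runtime": 0.2, "latency": 0.9, "load": 0.5, "energy": 0.6},
        "slow-near": {"runtime": 0.7, "latency": 0.1, "load": 0.3, "energy": 0.2},
    }
    for itf in Interface:
        print(itf.value, "->", place(candidates, itf))
```

With these example numbers, the runtime-oriented interfaces prefer the faster node, while the fog-to-IoT interface prefers the nearby, lightly loaded one. A weighted sum is only one option; Pareto-based multi-objective methods are a common alternative when the weights are hard to fix in advance.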

6.3. Scheduling General Framework

6.4. Expected Results

7. Discussion on the Scientific-Technical Impact of the Raised Challenges and Opportunities

8. Future Directions

- Kubernetes, Apache Mesos and Docker Swarm are containers’ management systems that allocate Docker containers with fine-grained resource granularity. However, other open-source platforms, such as YARN, schedule Docker containers with coarse-grained resource granularity. In these coarse-grained platforms, smart state-aware scheduling could also improve QoS in cloud-fog-IoT applications in terms of execution time, latency, flow time and power consumption, among other metrics.

- Considering the state of resources and microservices in smart scheduling can help achieve higher QoS. Beyond this purpose, however, it could be beneficial to consider factors from the administration perspective, such as resource costs, prices and service heterogeneity. Involving these factors in scheduling would allow a cloud-fog-IoT infrastructure to assess the cost of executing microservices and to adapt automatically over time to budgets, restrictions or peaks in QoS requirements.

- Failure management is a key procedure in any containers’ management strategy. Considering the characteristics and state of nearby cloud-fog-IoT nodes during scheduling could support container migration in the event of a failure. Hence, smart containers’ schedulers could integrate failure-focused parameters to make migration-aware decisions.

- It could be relevant to analyze the possibility of incorporating resource autoscaling capabilities alongside containers’ scheduling. In this way, based on the current state of the resources and on the type of service or budget, an intelligent system could automatically scale resources and thereby change the maximum QoS achievable by the scheduling strategy.
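The cost-aware and failure-aware directions in the bullets above can be combined in a simple filter-then-score step that selects a migration target when a node fails. This Python sketch is purely illustrative: the `Candidate` fields, the estimated failure probability, the per-hour prices and the `risk_weight` parameter are hypothetical, and a weighted sum is just one possible way to trade risk against cost.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    name: str
    free_cpu: float       # hypothetical: free CPU cores on the node
    failure_prob: float   # hypothetical: estimated failure probability for the next period
    cost_per_hour: float  # hypothetical: price of running the container on this node


def pick_migration_target(candidates: List[Candidate], cpu_request: float,
                          budget_per_hour: float, risk_weight: float = 0.5) -> Optional[Candidate]:
    """Filter nodes by capacity and budget, then minimize a risk/cost trade-off."""
    feasible = [c for c in candidates
                if c.free_cpu >= cpu_request and c.cost_per_hour <= budget_per_hour]
    if not feasible:
        return None  # no node satisfies the constraints (e.g., trigger autoscaling instead)

    def score(c: Candidate) -> float:
        # Cost is normalized by the budget, so both terms lie in [0, 1] for feasible nodes.
        return risk_weight * c.failure_prob + (1.0 - risk_weight) * c.cost_per_hour / budget_per_hour

    return min(feasible, key=score)


if __name__ == "__main__":
    nodes = [Candidate("edge-a", 1.0, 0.20, 0.05),
             Candidate("edge-b", 2.0, 0.02, 0.08),
             Candidate("cloud-c", 8.0, 0.01, 0.30)]
    target = pick_migration_target(nodes, cpu_request=1.5, budget_per_hour=0.10)
    print(target.name if target else "no feasible node")
```

Returning `None` when nothing is feasible keeps the decision explicit, so a surrounding system can fall back to another action, such as scaling resources, rather than silently violating the budget.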
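For the autoscaling direction, a well-known baseline is the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric), with no change when the ratio is within a tolerance (0.1 by default). The Python sketch below implements that rule and, as a hypothetical extension in line with the text, caps the replica count by a budget; the budget cap and the parameter names are assumptions, not part of any platform's API.

```python
import math


def budget_cap(budget_per_hour: float, cost_per_replica_hour: float) -> int:
    """Hypothetical: the maximum number of replicas the budget can pay for (at least 1)."""
    return max(1, math.floor(budget_per_hour / cost_per_replica_hour))


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule in the style of the Kubernetes Horizontal Pod Autoscaler."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: do not scale
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 replicas at 90% average CPU against a 60% target -> scale out.
    print(desired_replicas(3, 90.0, 60.0))
    # Same situation, but the budget only pays for 4 replicas.
    print(desired_replicas(3, 90.0, 60.0, max_replicas=budget_cap(1.0, 0.25)))
```

A smart scheduler could go beyond this fixed rule, for example by predicting load or by choosing the target metric per service type, but the proportional rule is a useful reference point for comparison.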

9. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Morabito, R.; Kjallman, J.; Komu, M. Hypervisors vs. lightweight virtualization: A performance comparison. In Proceedings of the 2015 IEEE International Conference on Cloud Engineering, Tempe, AZ, USA, 9–13 March 2015; pp. 386–393.

- Microsoft Inc. Microsoft Azure. Available online: https://azure.microsoft.com/es-es/ (accessed on 2 December 2019).

- Amazon Inc. Amazon Web Services. Available online: https://aws.amazon.com/es/ (accessed on 2 December 2019).

- Google Inc. Google Cloud Platform. Google Compute Engine. Available online: https://cloud.google.com/compute/ (accessed on 2 December 2019).

- Bonomi, F.; Milito, R.; Zhu, J.; Addepalli, S. Fog computing and its role in the internet of things. In Proceedings of the First Edition of the MCC Workshop on Mobile Cloud Computing, MCC ’12, Helsinki, Finland, 7 August 2012; ACM: New York, NY, USA, 2012; pp. 13–16.

- Morabito, R.; Farris, I.; Iera, A.; Taleb, T. Evaluating performance of containerized IoT services for clustered devices at the network edge. IEEE Internet Things J. 2017, 4, 1019–1030.

- Hentschel, K.; Jacob, D.; Singer, J.; Chalmers, M. Supersensors: Raspberry Pi devices for smart campus infrastructure. In Proceedings of the 2016 IEEE 4th International Conference on Future Internet of Things and Cloud (FiCloud), Vienna, Austria, 22–24 August 2016; pp. 58–62.

- Morabito, R. A performance evaluation of container technologies on internet of things devices. In Proceedings of the 2016 IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), San Francisco, CA, USA, 10–14 April 2016; pp. 999–1000.

- Docker, Docker Swarm. Available online: https://www.docker.com/products/docker-swarm (accessed on 9 December 2019).

- Apache, Apache Mesos. Available online: http://mesos.apache.org/ (accessed on 9 December 2019).

- Kubernetes, Kubernetes. Available online: http://kubernetes.io/ (accessed on 9 December 2019).

- Cordón, O.; Herrera, F.; Hoffmann, F.; Magdalena, L. Genetic Fuzzy Systems: Evolutionary Tuning and Learning of Fuzzy Knowledge Bases; World Scientific Pub Co Inc.: London, UK, 2001.

- Zadeh, L.A. Fuzzy Sets. Inf. Control 1965, 8, 338–353.

- Farid, M.; Latip, R.; Hussin, M.; Hamid, N.A.W.A. Scheduling Scientific Workflow using Multi-objective Algorithm with Fuzzy Resource Utilization in Multi-cloud Environment. IEEE Access 2020, 8, 24309–24322.

- Mallikarjuna, B. Feedback-Based Fuzzy Resource Management in IoT-Based-Cloud. Int. J. Fog Comput. (IJFC) 2020, 3, 1–21.

- Shooli, R.G.; Javidi, M.M. Using gravitational search algorithm enhanced by fuzzy for resource allocation in cloud computing environments. SN Appl. Sci. 2020, 2, 195.

- Rjoub, G.; Bentahar, J.; Wahab, O.A.; Bataineh, A. Deep Smart Scheduling: A Deep Learning Approach for Automated Big Data Scheduling Over the Cloud. In Proceedings of the 2019 7th International Conference on Future Internet of Things and Cloud (FiCloud), Istanbul, Turkey, 26–28 August 2019; pp. 189–196.

- García-Galán, S.; Prado, R.P.; Muñoz-Expósito, J.E. Rules Discovery in Fuzzy Classifier Systems with PSO for Scheduling in Grid Computational Infrastructures. Appl. Soft Comput. 2015, 29, 424–435.

- Joseph, C.T.; Martin, J.P.; Chandrasekaran, K.; Kandasamy, A. Fuzzy Reinforcement Learning based Microservice Allocation in Cloud Computing Environments. In Proceedings of the TENCON 2019-2019 IEEE Region 10 Conference (TENCON), Kochi, India, 17–20 October 2019; pp. 1559–1563.

- Liu, B.; Li, J.; Lin, W.; Bai, W.; Li, P.; Gao, Q. K-PSO: An improved PSO-based container scheduling algorithm for big data applications. Int. J. Netw. Manag. 2020, e2092.

- Mouradian, C.; Naboulsi, D.; Yangui, S.; Glitho, R.H.; Morrow, M.J.; Polakos, P.A. A comprehensive survey on fog computing: State-of-the-art and research challenges. IEEE Commun. Surv. Tutor. 2018, 20, 416–464.

- Vaquero, L.M.; Rodero-Merino, L.; Caceres, J.; Lindner, M. A break in the clouds: Towards a cloud definition. SIGCOMM Comput. Commun. Rev. 2008, 39, 50–55.

- Zhang, Q.; Cheng, L.; Boutaba, R. Cloud computing: State-of-the-art and research challenges. J. Internet Serv. Appl. 2010, 1, 7–18.

- Jiao, L.; Friedman, R.; Fu, X.; Secci, S.; Smoreda, Z.; Tschofenig, H. Cloud-based computation offloading for mobile devices: State of the art, challenges and opportunities. In Proceedings of the 2013 Future Network & Mobile Summit, Lisboa, Portugal, 3–5 July 2013; pp. 1–11.

- Stojmenovic, I. Fog computing: A cloud to the ground support for smart things and machine-to-machine networks. In Proceedings of the 2014 Australasian Telecommunication Networks and Applications Conference (ATNAC), Southbank, VIC, Australia, 26–28 November 2014; pp. 117–122.

- Yangui, S.; Ravindran, P.; Bibani, O.; Glitho, R.H.; Ben Hadj-Alouane, N.; Morrow, M.J.; Polakos, P.A. A platform as-a-service for hybrid cloud/fog environments. In Proceedings of the 2016 IEEE International Symposium on Local and Metropolitan Area Networks (LANMAN), Rome, Italy, 13–15 June 2016; pp. 1–7.

- Zhu, X.; Chan, D.S.; Hu, H.; Prabhu, M.S.; Ganesan, E.; Bonomi, F. Improving video performance with edge servers in the fog computing architecture. Intel Technol. J. 2015, 19, 202–224.

- Yangui, S.; Tata, S. The spd approach to deploy service-based applications in the cloud. Concurr. Comput. Pract. Exper. 2015, 27, 3943–3960.

- Pop, D.; Iuhasz, G.; Craciun, C.; Panica, S. Support services for applications execution in multiclouds environments. In Proceedings of the 2016 IEEE International Conference on Autonomic Computing (ICAC), Wurzburg, Germany, 17–22 July 2016; pp. 343–348.

- Di Martino, B. Applications portability and services interoperability among multiple clouds. IEEE Cloud Comput. 2014, 1, 74–77.

- OpenFog Consortium. OpenFog Reference Architecture for Fog Computing; OpenFog Consortium: Needham Heights, MA, USA, 2017.

- Bonomi, F.; Milito, R.A.; Natarajan, P.; Zhu, J. Fog computing: A platform for internet of things and analytics. In Big Data and Internet of Things: A Roadmap for Smart Environments; Springer: Berlin/Heidelberg, Germany, 2014.

- Al-Turjman, F.; Ever, E.; Bin-Zikria, Y.; Kim, S.W.; Elmahgoubi, A. SAHCI: Scheduling Approach for Heterogeneous Content-Centric IoT Applications. IEEE Access 2019, 7, 80342–80349.

- Meng, Y.; Naeem, M.A.; Ali, R.; Zikria, Y.B.; Kim, S.W. DCS: Distributed Caching Strategy at the Edge of Vehicular Sensor Networks in Information-Centric Networking. Sensors 2019, 19, 4407.

- Nauman, A.; Qadri, Y.A.; Amjad, M.; Zikria, Y.B.; Afzal, M.K.; Kim, S.W. Multimedia Internet of Things: A Comprehensive Survey. IEEE Access 2020, 8, 8202–8250.

- Yi, S.; Hao, Z.; Qin, Z.; Li, Q. Fog computing: Platform and applications. In Proceedings of the 2015 Third IEEE Workshop on Hot Topics in Web Systems and Technologies (HotWeb), Washington, DC, USA, 12–13 November 2015; pp. 73–78.

- Yi, S.; Li, C.; Li, Q. A survey of fog computing: Concepts, applications and issues. In Proceedings of the 2015 Workshop on Mobile Big Data, Mobidata ’15; ACM: New York, NY, USA, 2015; pp. 37–42.

- Dastjerdi, A.V.; Buyya, R. Fog computing: Helping the internet of things realize its potential. Computer 2016, 49, 112–116.

- Cao, Y.; Chen, S.; Hou, P.; Brown, D. FAST: A fog computing assisted distributed analytics system to monitor fall for stroke mitigation. In Proceedings of the 2015 IEEE International Conference on Networking, Architecture and Storage (NAS), Boston, MA, USA, 6–7 August 2015; pp. 2–11.

- Ha, K.; Chen, Z.; Hu, W.; Richter, W.; Pillai, P.; Satyanarayanan, M. Towards wearable cognitive assistance. In Proceedings of the 12th Annual International Conference on Mobile Systems, Applications, and Services, MobiSys ’14; ACM: New York, NY, USA, 2014; pp. 68–81.

- Levis, P.; Culler, D. Maté: A tiny virtual machine for sensor networks. SIGARCH Comput. Archit. News 2002, 30, 85–95.

- Aslam, F.; Fennell, L.; Schindelhauer, C.; Thiemann, P.; Ernst, G.; Haussmann, E.; Rührup, S.; Uzmi, Z.A. Optimized java binary and virtual machine for tiny motes. In International Conference on Distributed Computing in Sensor Systems; Springer: Berlin/Heidelberg, Germany, 2010; pp. 15–30.

- Alessandrelli, D.; Petracca, M.; Pagano, P. T-res: Enabling reconfigurable in-network processing in iot-based wsns. In Proceedings of the 2013 IEEE International Conference on Distributed Computing in Sensor Systems, Cambridge, MA, USA, 20–23 May 2013; pp. 337–344.

- Docker, Docker containers. Available online: https://www.docker.com/ (accessed on 4 December 2019).

- Linux-Containers, LXC Containers. Available online: https://linuxcontainers.org (accessed on 11 June 2019).

- Vavilapalli, V.K. Apache Hadoop YARN: Yet Another Resource Negotiator. 2013. Available online: http://www.socc2013.org/home/program/a5-vavilapalli.pdf (accessed on 5 December 2019).

- Rodriguez, M.A.; Buyya, R. Container-based Cluster Orchestration Systems: A taxonomy and Future Directions. J. Softw. Pract. Exp. 2018, 49, 698–719.

- Linux-Foundation, Open Containers Initiative. Available online: https://www.opencontainers.org/ (accessed on 11 December 2019).

- Google, Kubernetes Priorities. Available online: https://github.com/kubernetes/kubernetes/blob/release-1.1/plugin/pkg/scheduler/algorithm/priorities/priorities.go (accessed on 4 December 2019).

- Docker, Docker Hub. Available online: https://hub.docker.com/explore/ (accessed on 11 December 2019).

- Docker, Docker Pricing. Available online: https://www.docker.com/pricing (accessed on 11 December 2019).

- Google, Kubernetes Pods. Available online: http://kubernetes.io/v1.1/docs/user-guide/pods.html (accessed on 14 December 2019).

- Santos, J.; Wauters, T.; Volckaert, B.; De Turck, F. Resource provisioning in Fog computing: From theory to practice. Sensors 2019, 19, 2238.

- Santos, J.; Wauters, T.; Volckaert, B.; De Turck, F. Towards Network-Aware Resource Provisioning in Kubernetes for Fog Computing Applications. In Proceedings of the 2019 IEEE Conference on Network Softwarization (NetSoft), Paris, France, 24–28 June 2019; pp. 351–359.

- Babu, G.C.; Hanuman, A.S.; Kiran, J.S.; Babu, B.S. Locality-Aware Scheduling for Containers in Cloud Computing. In Inventive Communication and Computational Technologies; Springer: Berlin/Heidelberg, Germany, 2020; pp. 177–185.

- Hong, C.; Lee, K.; Kang, M.; Yoo, C. qCon: QoS-Aware Network Resource Management for Fog Computing. Sensors 2018, 18, 3444.

- Santoro, D.; Zozin, D.; Pizzolli, D.; De Pellegrini, F.; Cretti, S. Foggy: A platform for workload orchestration in a Fog Computing environment. In Proceedings of the 2017 IEEE International Conference on Cloud Computing Technology and Science (CloudCom), Hong Kong, China, 11–14 December 2017; pp. 231–234.

- Xhafa, F.; Abraham, A. Meta-heuristics for grid scheduling problems. In Metaheuristics for Scheduling: Distributed Computing Environments; Springer: Berlin/Heidelberg, Germany, 2008; pp. 1–37.

- Wang, J.; Li, X.; Ruiz, R.; Yang, J.; Chu, D. Energy Utilization Task Scheduling for MapReduce in Heterogeneous Clusters. IEEE Trans. Serv. Comput. 2020.

- Ragmani, A.; Elomri, A.; Abghour, N.; Moussaid, K.; Rida, M. FACO: A hybrid fuzzy ant colony optimization algorithm for virtual machine scheduling in high-performance cloud computing. J. Ambient Intell. Humaniz. Comput. 2019.

- Zhou, J.; Yu, K.; Chou, C.; Yang, L.; Luo, Z. A Dynamic Resource Broker and Fuzzy Logic Based Scheduling Algorithm in Grid Environment. Lect. Notes Comput. Sci. 2007, 4431, 604–613.

- Yu, K.; Luo, Z.; Chou, C.; Chen, C.; Zhou, J. A fuzzy neural network-based scheduling algorithm for job assignment on computational grids. Lect. Notes Comput. Sci. 2007, 4658, 533–542.

- Hao, X.; Dai, Y.; Zhang, B.; Chen, T.; Yang, L. QoS-Driven Grid Resource Selection Based on Novel Neural Networks. Lect. Notes Comput. Sci. 2006, 3947, 456–465.

- Priyaa, A.R.; Tonia, E.R.; Manikandan, N. Resource Scheduling Using Modified FCM and PSO Algorithm in Cloud Environment. In International Conference on Computer Networks and Inventive Communication Technologies; Springer: Berlin/Heidelberg, Germany, 2019; pp. 697–704.

- Liu, H.; Abraham, A.; Hassanien, A.E. Scheduling jobs on computational grids using a fuzzy particle swarm optimization algorithm. Future Gener. Comput. Syst. 2010, 26, 1336–1343.

- Strumberger, I.; Tuba, E.; Bacanin, N.; Tuba, M. Hybrid Elephant Herding Optimization Approach for Cloud Computing Load Scheduling. In Swarm, Evolutionary, and Memetic Computing and Fuzzy and Neural Computing; Springer: Berlin/Heidelberg, Germany, 2019; pp. 201–212.

- Gazori, P.; Rahbari, D.; Nickray, M. Saving time and cost on the scheduling of fog-based IoT applications using deep reinforcement learning approach. In Future Generation Computer Systems; Elsevier: Amsterdam, The Netherlands, 2019.

- Alcalá, R.; Casillas, J.; Cordón, O.; González, A.; Herrera, F. A genetic rule weighting and selection process for fuzzy control of heating, ventilating and air conditioning systems. Eng. Appl. Artif. Intell. 2005, 18, 279–296.

- Munoz-Exposito, J.; García-Galan, S.; Ruiz-Reyes, N.; Vera-Candeas, P. Audio coding improvement using evolutionary speech/music discrimination. In Proceedings of the IEEE International Conference on Fuzzy Systems Conference, London, UK, 23–26 July 2007; pp. 1–6.

- Prado, R.P.; García-Galán, S.; Yuste, A.J.; Muñoz-Expósito, J.E. A fuzzy rule-based meta-scheduler with evolutionary learning for grid computing. Eng. Appl. Artif. Intell. 2010, 23, 1072–1082.

- Franke, C.; Lepping, J.; Schwiegelshohn, U. On advantages of scheduling using genetic fuzzy systems. Lect. Notes Comput. Sci. 2007, 68–93.

- Franke, C.; Hoffmann, F.; Lepping, J.; Schwiegelshohn, U. Development of scheduling strategies with genetic fuzzy systems. Appl. Soft Comput. 2008, 8, 706–721.

- Prado, R.P.; García-Galán, S.; Muñoz-Expósito, J.E.; Yuste Delgado, A.J. Knowledge Acquisition in Fuzzy Rule Based Systems with Particle Swarm Optimization. IEEE Trans. Fuzzy Syst. 2010, 18, 1083–1097.

- García-Galán, S.; Prado, R.P.; Muñoz-Expósito, J.E. Swarm Fuzzy Systems: Knowledge Acquisition in Fuzzy Systems and its Applications in Grid Computing. IEEE Trans. Knowl. Data Eng. 2014, 26, 1791–1804.

- García-Galán, S.; Prado, R.P.; Muñoz-Expósito, J.E. Fuzzy Scheduling with Swarm Intelligence-Based Knowledge Acquisition for Grid Computing. Eng. Appl. Artif. Intell. 2012, 25, 359–375.

- Prado, R.P.; Hoffmann, F.; García-Galán, S.; Muñoz-Expósito, J.E.; Bertram, T. On Providing Quality of Service in Grid Computing through Multi-Objective Swarm-Based Knowledge Acquisition in Fuzzy Schedulers. Int. J. Approx. Reason. 2012, 53, 228–247.

- Seddiki, M.; Prado, R.P.; Muñoz-Expósito, J.E.; García-Galán, S. Rule-Based Systems for Optimizing Power Consumption in Data Centers. Adv. Intell. Syst. Comput. 2014, 233, 301–308.

- Prado, R.P.; García-Galán, S.; Muñoz Expósito, J.E.; Yuste, A.J.; Bruque, S. Learning of fuzzy rule based meta-schedulers for grid computing with differential evolution. Commun. Comput. Inf. Sci. 2010, 80, 751–760.

- Prado, R.P.; Muñoz-Expósito, J.E.; García-Galán, S. Improving Expert Meta-Schedulers for Grid Computing through Weighted Rules Evolution. Fuzzy Logic Appl. Lect. Notes Comput. Sci. 2011, 6857, 204–211.

- Prado, R.P.; Muñoz Expósito, J.E.; García-Galán, S. Flexible Fuzzy Rule Bases Evolution with Swarm Intelligence for Meta-Scheduling in Grid Computing. Comput. Inform. 2014, 3, 810–830.

- Mell, P.; Grance, T. The NIST Definition of Cloud Computing; National Institute of Standards and Technology, U.S. Department of Commerce: Washington, DC, USA, 2011.

Main Containers’ Management Tools: Compared Features

| Tool | Original Organization | Sharing | Containers Type | Resource Granularity | IP Per Container | Workload |
|---|---|---|---|---|---|---|
| Apollo | Microsoft | Not open-source | N/S | Fine-grained | N/S | Batch jobs |
| Aurora | Twitter | Open-source | Mesos, Docker | Fine-grained | Yes | Long running and cron jobs |
| Borg | Google | Not open-source | Linux cgroups-based | Fine-grained | No | All |
| Fuxi | Alibaba | Not open-source | Linux cgroups-based | Bundle | N/S | Batch jobs |
| Kubernetes | Google | Open-source | Docker, rkt, CRI API implementations, OCI-compliant runtimes | Fine-grained | Yes | All |
| Mesos/Marathon | UC Berkeley/Mesosphere | Open-source | Mesos, Docker | Fine-grained | Yes | All/Long running jobs |
| Omega | Google | Not open-source | N/S | Fine-grained | N/S | All |
| Swarm | Docker | Open-source | Docker | Fine-grained | Yes | Long running jobs |
| YARN | Apache | Open-source | Linux cgroups-based, Docker | Coarse-grained | No | Batch jobs |

N/S: not specified.

Previous Works in Advanced Containers’ Scheduling in Cloud-Fog-IoT Networks

| Work | Soft-Computing-Based | Cloud | Fog | IoT | Cloud-Fog Interface | Fog-IoT Interface | Cloud-IoT Interface |
|---|---|---|---|---|---|---|---|
| Joseph et al., 2019 [19] | Yes | Yes | No | No | No | No | No |
| Liu et al., 2020 [20] | Yes | Yes | No | No | No | No | No |
| Santos et al., 2019 [53] | No | No | Yes | No | No | No | No |
| Santos et al., 2019 [54] | No | No | Yes | No | No | No | No |
| Babu et al., 2020 [55] | No | Yes | No | No | No | No | No |
| Hong et al., 2018 [56] | No | No | Yes | No | No | No | No |
| Santoro et al., 2017 [57] | No | No | Yes | Yes | No | No | No |

Fog Computing Market: Amount Per Year and Activity (Millions of Dollars)

| Sector within Fog Computing | 2019 | 2022 |
|---|---|---|
| Wearables | 158 | 778 |
| Smart Homes | 99 | 413 |
| Smart Cities | 128 | 629 |
| Smart Buildings | 160 | 693 |
| Agriculture | 362 | 2118 |
| Utilities | 851 | 3840 |
| Retail Sales | 178 | 509 |
| Data Centers | 162 | 856 |
| Healthcare | 503 | 2737 |
| Industry | 524 | 2305 |
| Transport | 582 | 3296 |
| Total Activity | 3707 | 18,174 |
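The growth implied by the market table can be checked with the compound annual growth rate, CAGR = (V_2022 / V_2019)^(1/3) - 1, over the three years between the two columns. The short Python sketch below recomputes the totals from the sector rows and ranks the sectors by CAGR; it uses only the figures listed in the table, and because CAGR is a ratio, the result does not depend on the monetary unit.

```python
# Figures copied from the table: sector -> (2019, 2022)
MARKET = {
    "Wearables": (158, 778),
    "Smart Homes": (99, 413),
    "Smart Cities": (128, 629),
    "Smart Buildings": (160, 693),
    "Agriculture": (362, 2118),
    "Utilities": (851, 3840),
    "Retail Sales": (178, 509),
    "Data Centers": (162, 856),
    "Healthcare": (503, 2737),
    "Industry": (524, 2305),
    "Transport": (582, 3296),
}
YEARS = 2022 - 2019


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1.0 / years) - 1.0


total_2019 = sum(v[0] for v in MARKET.values())
total_2022 = sum(v[1] for v in MARKET.values())

if __name__ == "__main__":
    print("totals:", total_2019, total_2022)
    print(f"overall CAGR: {cagr(total_2019, total_2022, YEARS):.1%}")
    # Sectors sorted from fastest to slowest growth.
    for sector, (v19, v22) in sorted(MARKET.items(), key=lambda kv: -cagr(*kv[1], YEARS)):
        print(f"{sector:16s} {cagr(v19, v22, YEARS):6.1%}")
```

The recomputed totals match the Total Activity row (3707 and 18,174), the overall growth is roughly 70% per year, and Agriculture shows the steepest relative growth among the listed sectors.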

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Pérez de Prado, R.; García-Galán, S.; Muñoz-Expósito, J.E.; Marchewka, A.; Ruiz-Reyes, N. Smart Containers Schedulers for Microservices Provision in Cloud-Fog-IoT Networks. Challenges and Opportunities. Sensors 2020, 20, 1714. https://doi.org/10.3390/s20061714
