Automated Indoor Image Localization to Support a Post-Event Building Assessment

Abstract

1. Introduction

2. Literature Review of Path Reconstruction Techniques

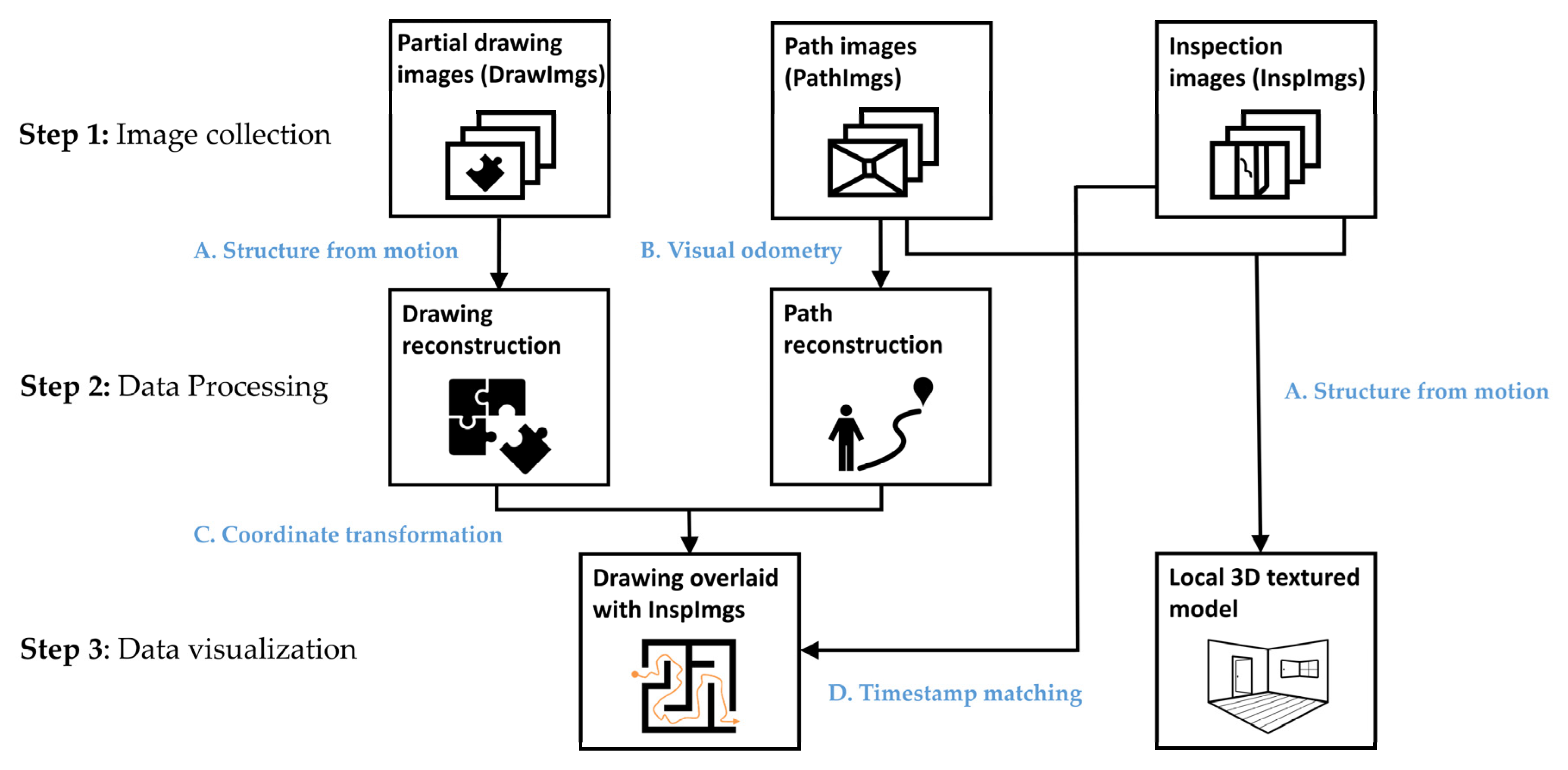

3. Technical Approach

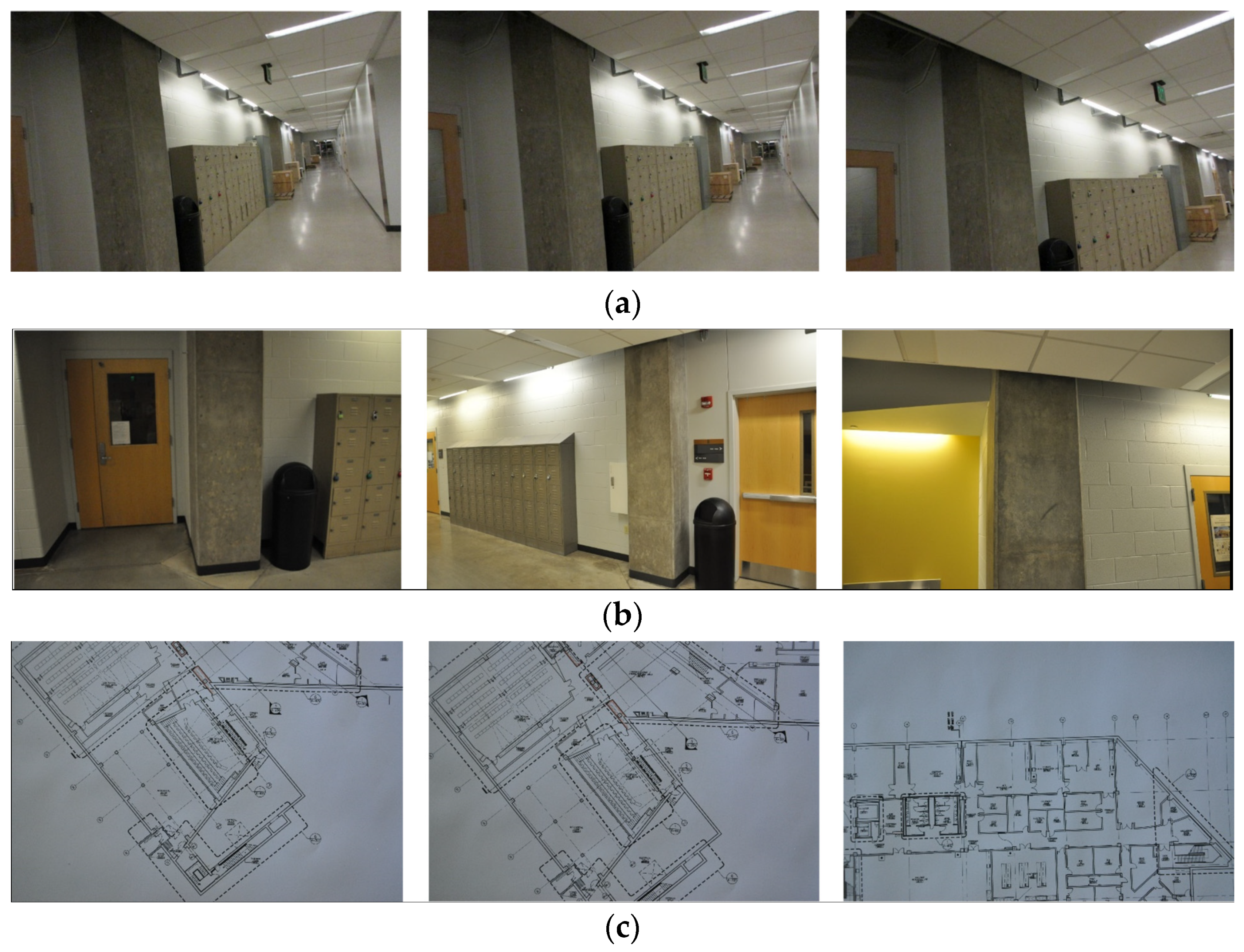

3.1. Reconnaissance Image Collection

3.1.1. Collecting InspImgs and DrawImgs

3.1.2. Collecting PathImgs

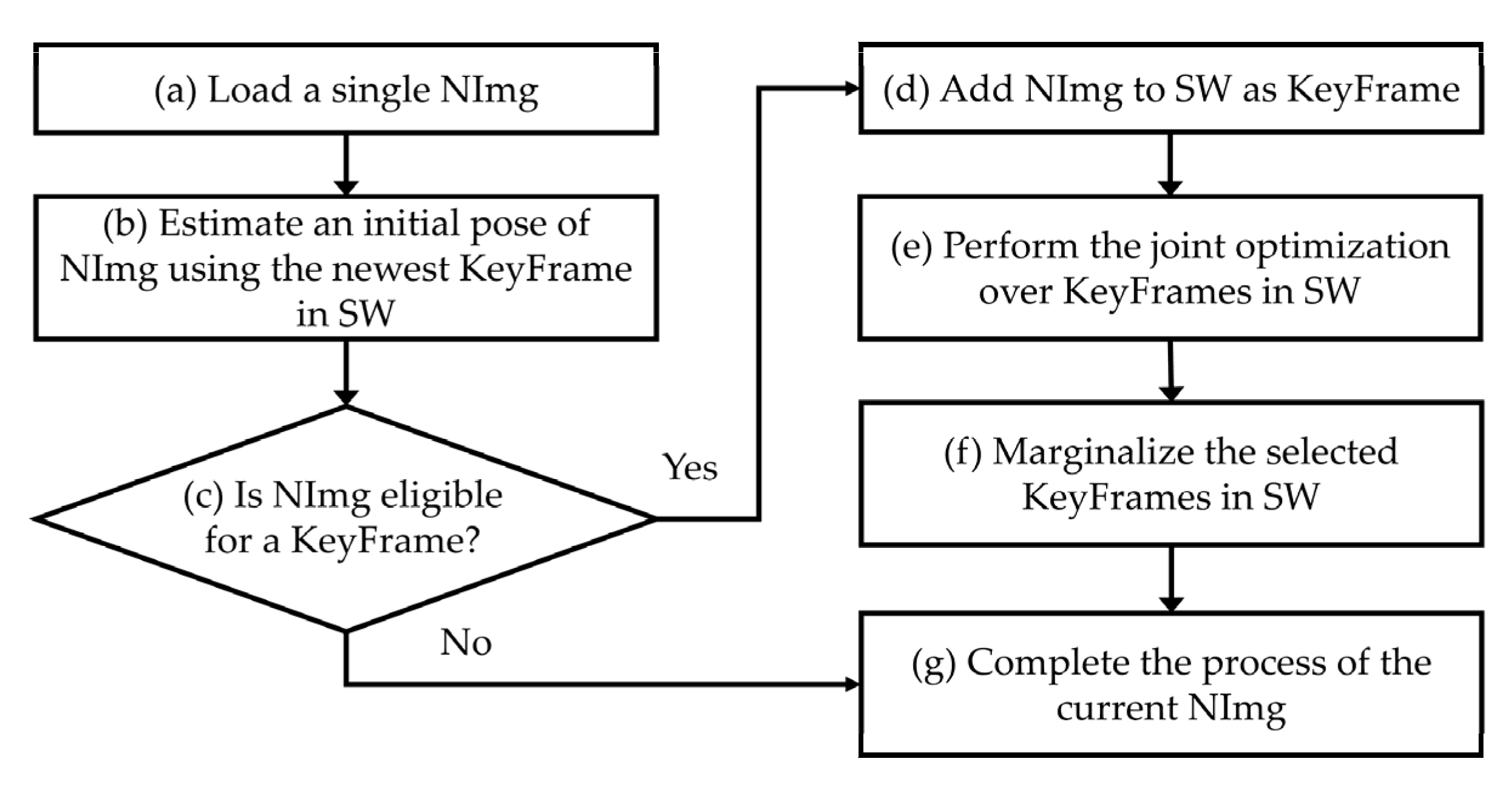

3.2. Path Reconstruction

3.3. Drawing Reconstruction

3.4. Overlaying the Path with the Drawing

4. Experimental Verification

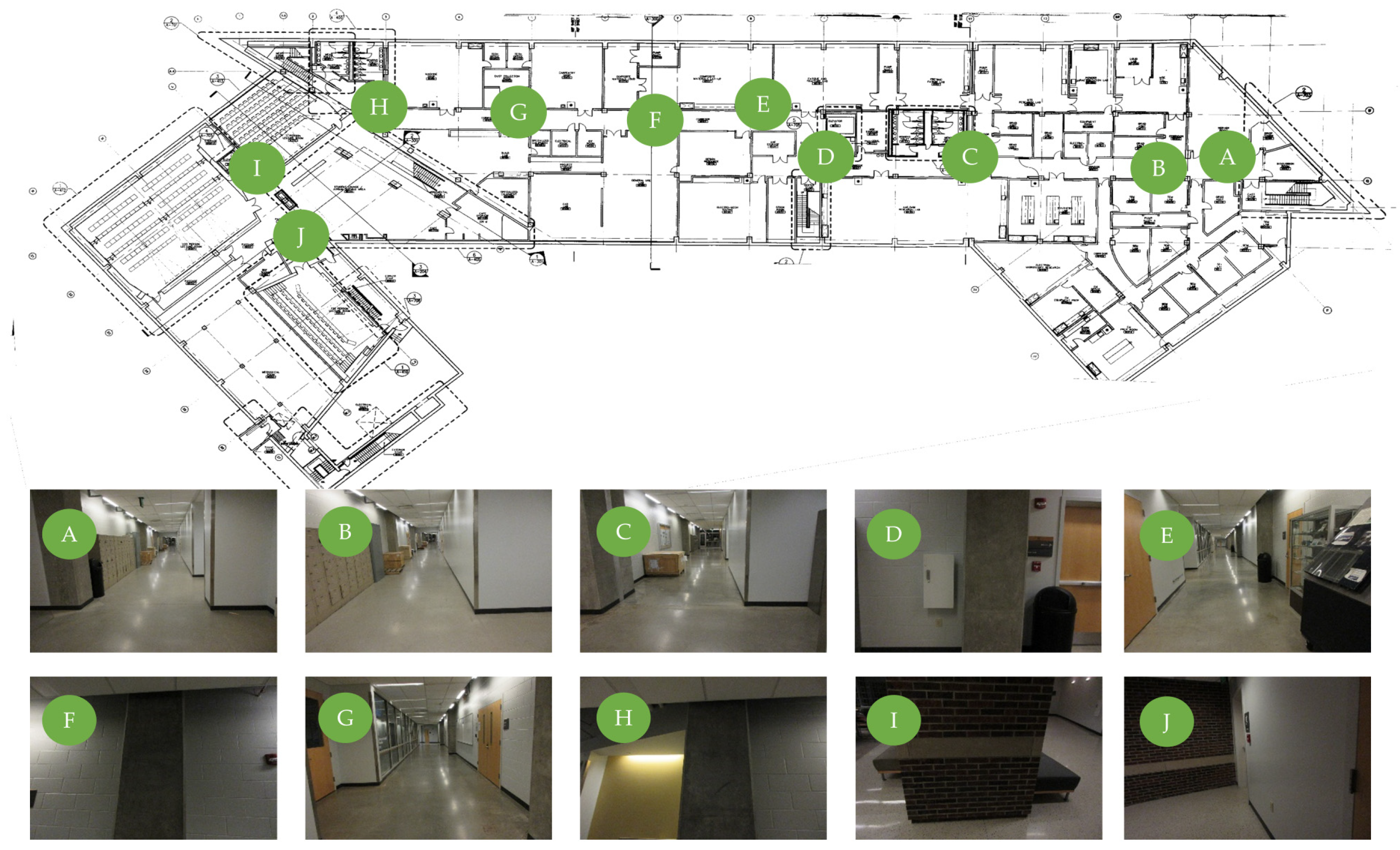

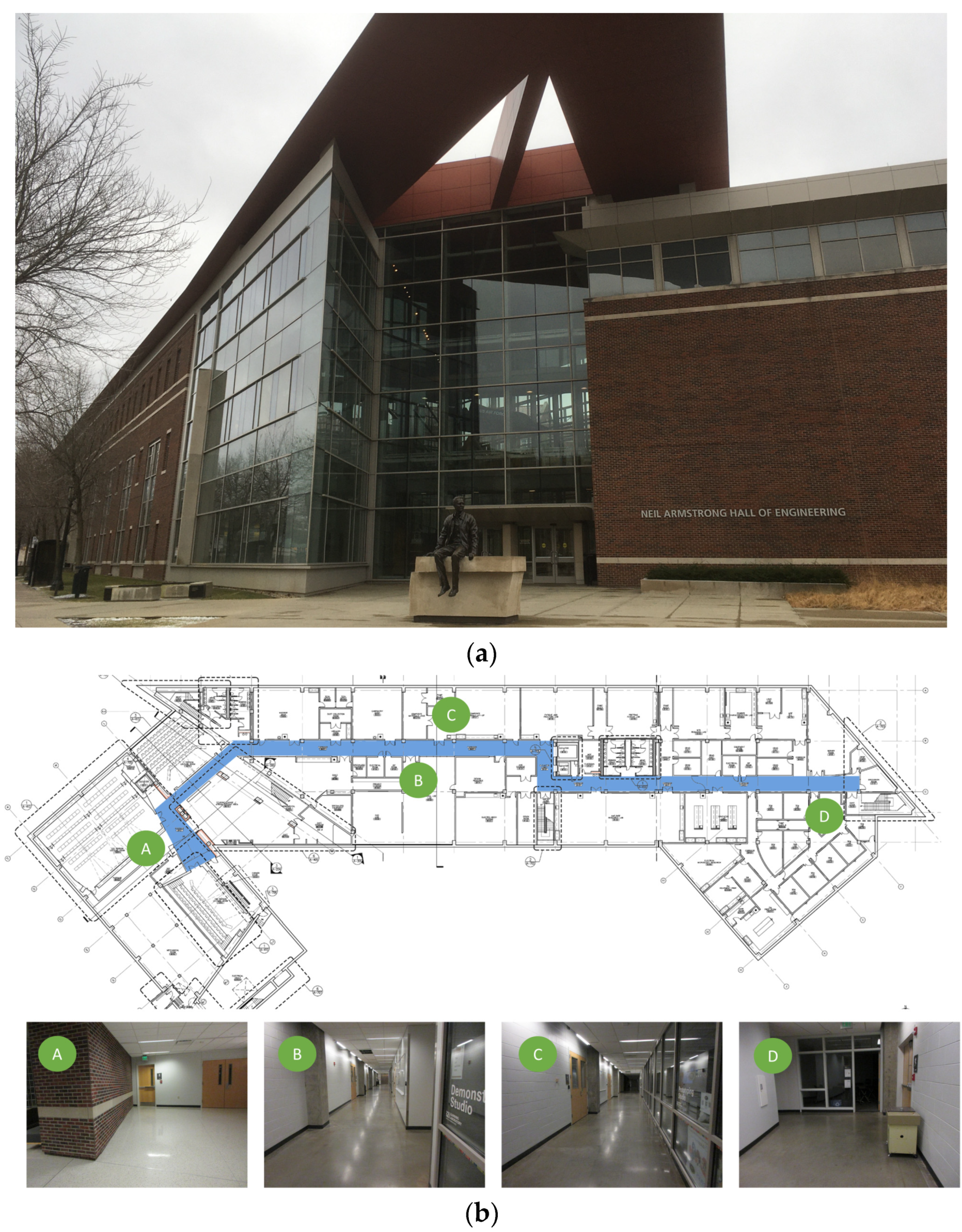

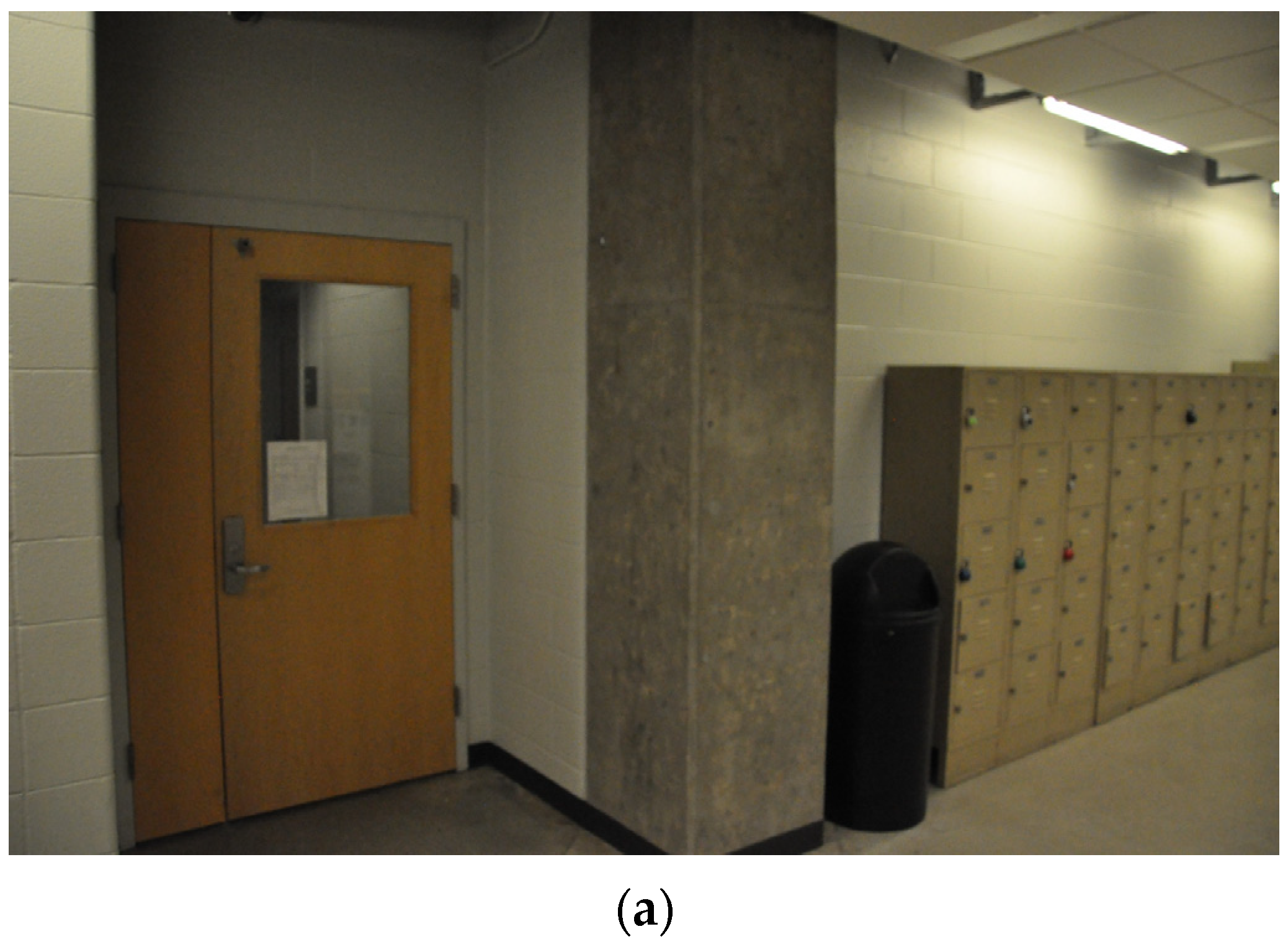

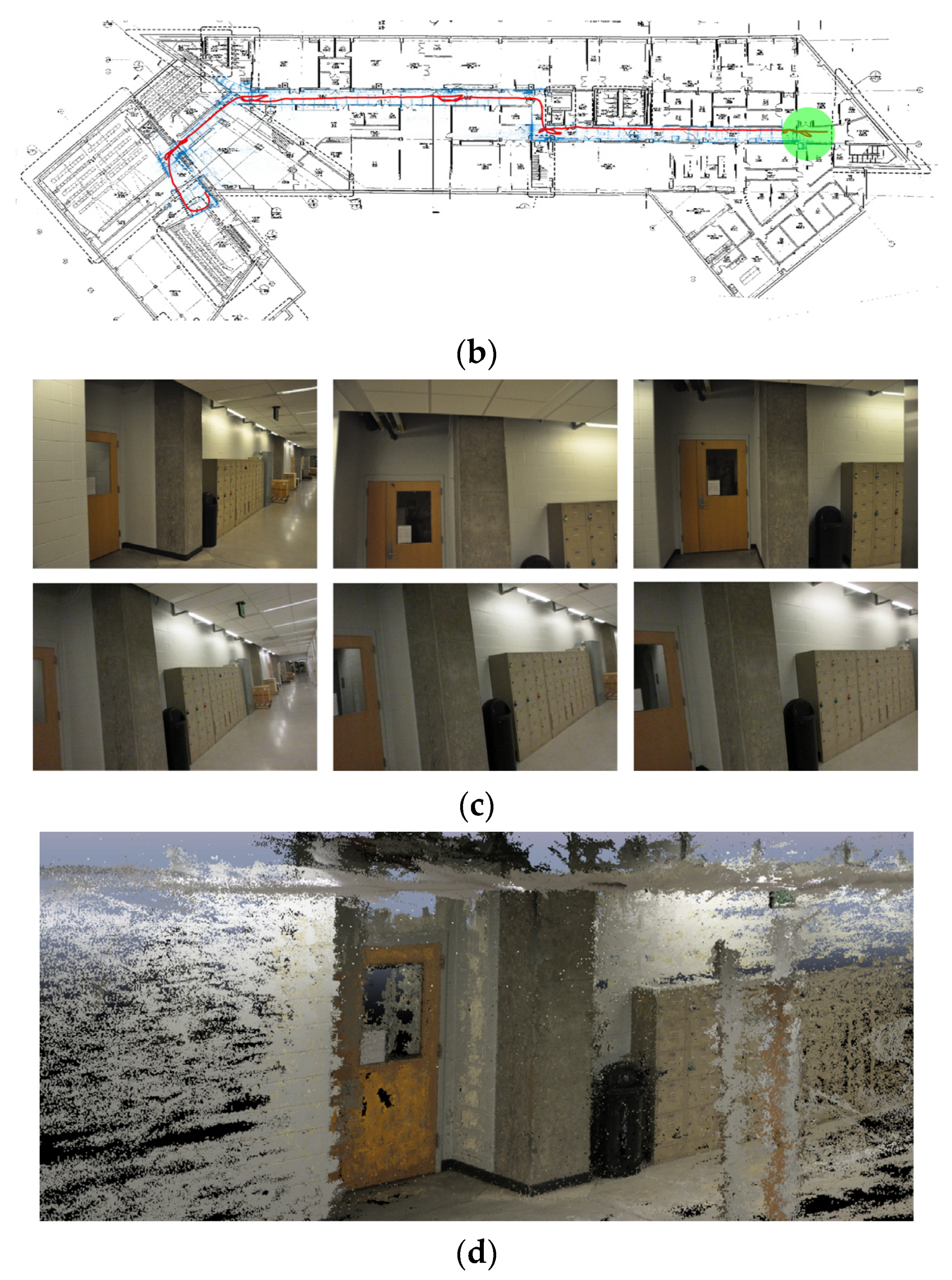

4.1. Description of the Test Site

4.2. Collection of the Image Data

4.3. Results

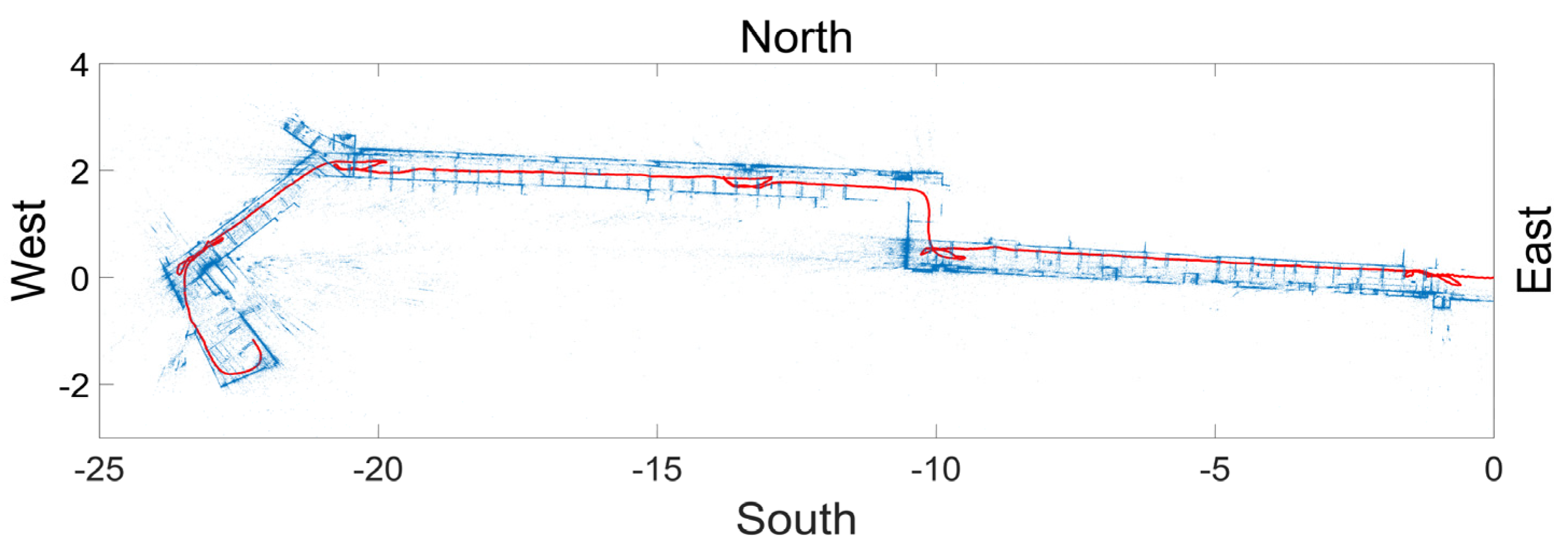

4.3.1. Path Reconstruction

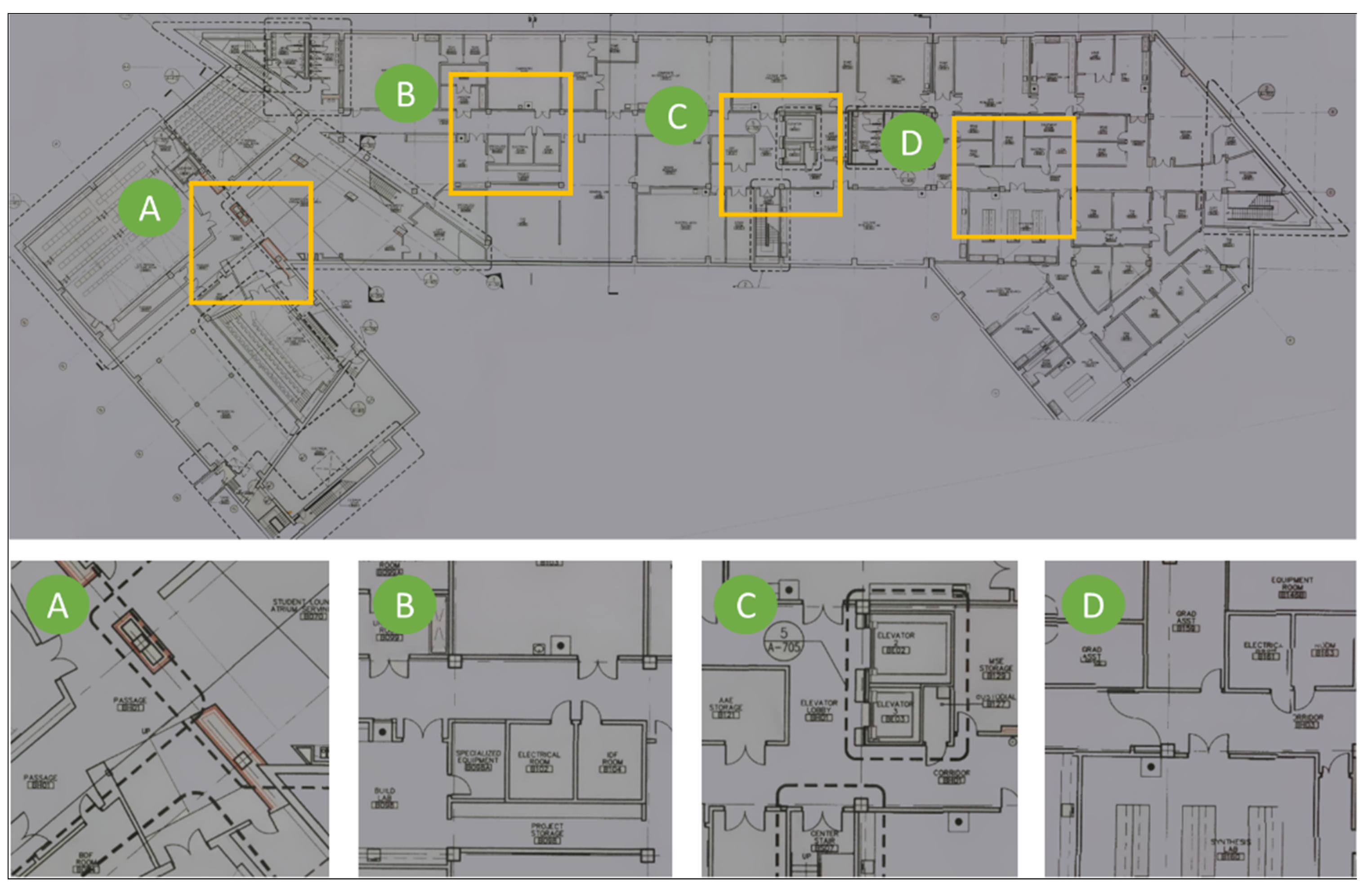

4.3.2. Drawing Reconstruction

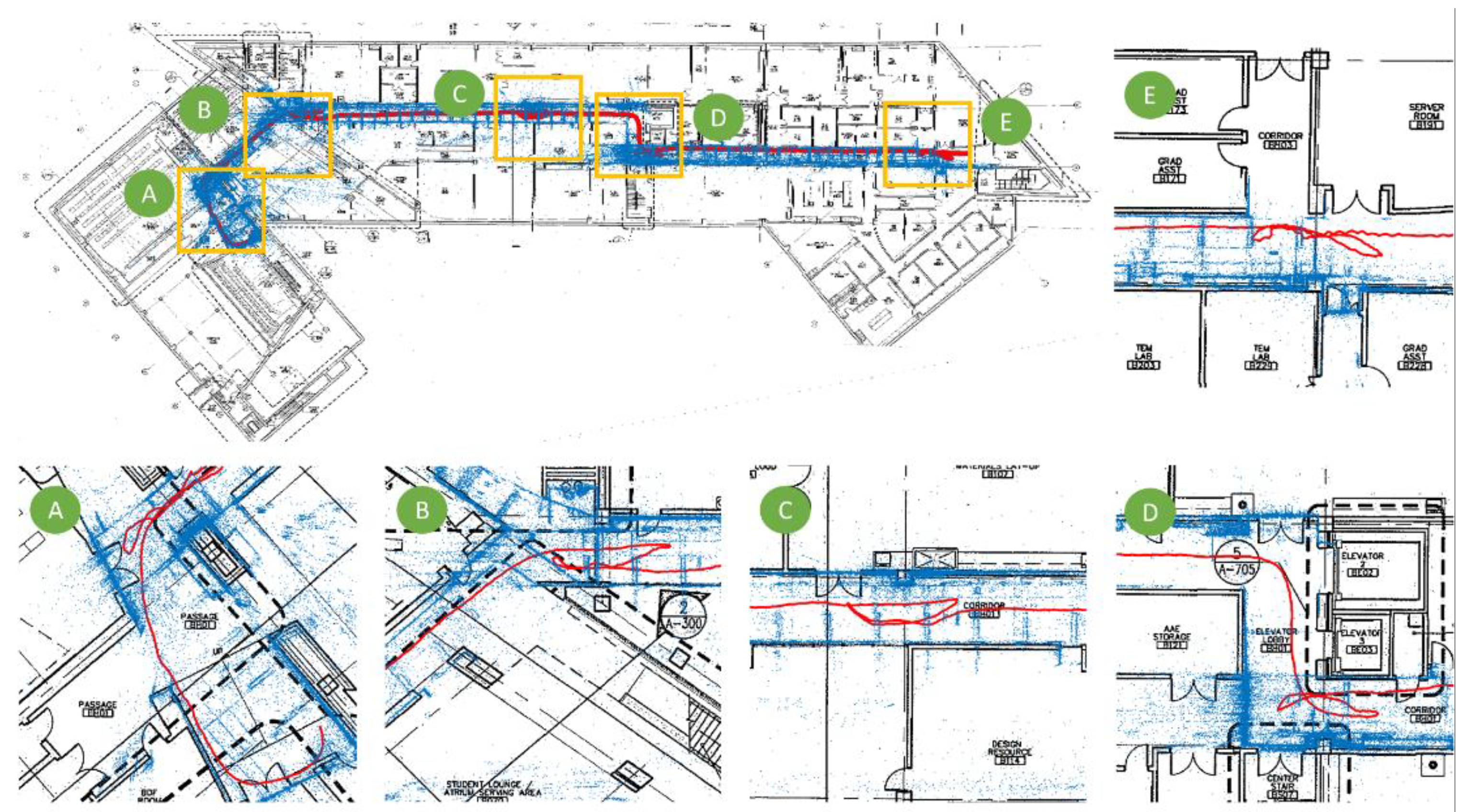

4.3.3. Path Overlay

4.4. Image Localization and Local 3D Textured Model Reconstruction

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, X.; Dyke, S.J.; Yeum, C.M.; Bilionis, I.; Lenjani, A.; Choi, J. Automated Indoor Image Localization to Support a Post-Event Building Assessment. Sensors 2020, 20, 1610. https://doi.org/10.3390/s20061610