Color-Guided Depth Map Super-Resolution Using a Dual-Branch Multi-Scale Residual Network with Channel Interaction

Abstract

1. Introduction

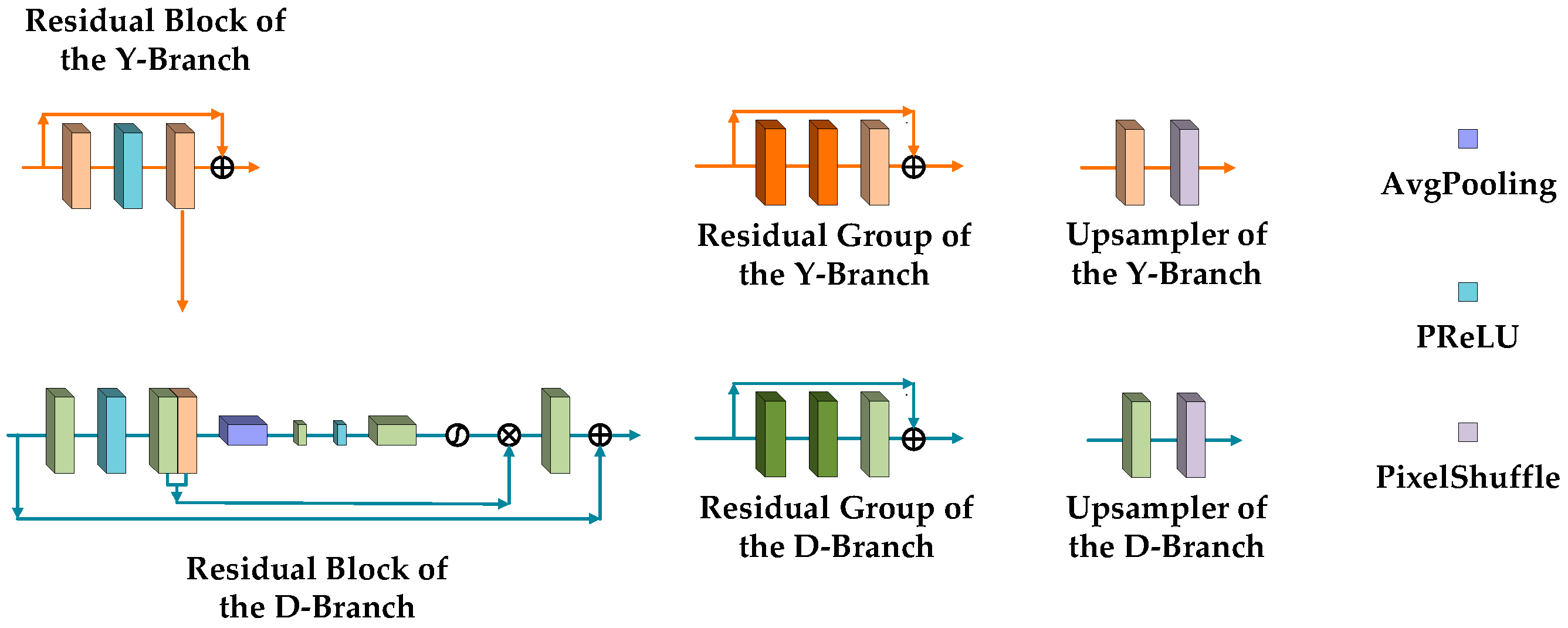

- We designed a dual-branch, multi-scale residual network that super-resolves a low-resolution (LR) depth map end to end under the guidance of a high-resolution (HR) color image.

- We applied a channel attention mechanism [1] to learn features from the depth map and the RGB image and to fuse them through learned weights, with the aim of keeping the RGB guidance effective while avoiding artifacts copied from the color image into the depth map.

- We describe in detail the steps needed to realize image-wise upsampling and end-to-end training of this dual-branch, multi-scale residual network.
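The weighted fusion named in the second contribution can be illustrated with a minimal sketch of channel attention in the general style of [1]: each channel is averaged to a scalar, passed through a small bottleneck, and turned into a gate in (0, 1) that rescales the channel. This is not the authors' channel interaction module, whose details are not given here. The function names, the toy 2x2 feature maps, and the fixed weights are all hypothetical; in a trained network the weights would be learned.

```python
import math

def global_avg_pool(feat):
    """Squeeze: average each channel's H x W map down to one scalar."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feat]

def dense(vec, weights, bias):
    """Fully connected layer; `weights` is a list of rows (out x in)."""
    return [sum(w * v for w, v in zip(row, vec)) + b
            for row, b in zip(weights, bias)]

def channel_attention(feat, w_down, b_down, w_up, b_up):
    """Excitation: pooled statistics -> ReLU bottleneck -> sigmoid gates."""
    pooled = global_avg_pool(feat)
    hidden = [max(0.0, h) for h in dense(pooled, w_down, b_down)]
    return [1.0 / (1.0 + math.exp(-g)) for g in dense(hidden, w_up, b_up)]

def fuse(depth_feat, rgb_feat, params):
    """Concatenate depth and RGB channels, then rescale each by its gate."""
    stacked = depth_feat + rgb_feat  # channel-wise concatenation
    gates = channel_attention(stacked, *params)
    scaled = [[[g * v for v in row] for row in ch]
              for g, ch in zip(gates, stacked)]
    return scaled, gates

if __name__ == "__main__":
    # Hypothetical toy features: 2 depth channels and 2 RGB channels, 2 x 2 each.
    depth_feat = [[[1.0, 2.0], [3.0, 4.0]], [[0.5, 0.5], [0.5, 0.5]]]
    rgb_feat = [[[0.0, 2.0], [2.0, 0.0]], [[1.0, 1.0], [1.0, 1.0]]]
    # Hypothetical fixed weights (4 channels -> 2 hidden units -> 4 gates).
    params = (
        [[0.1, -0.2, 0.3, 0.0], [0.0, 0.4, -0.1, 0.2]], [0.0, 0.1],
        [[0.5, -0.5], [0.2, 0.3], [-0.4, 0.1], [0.0, 0.6]], [0.0, 0.0, 0.0, 0.0],
    )
    fused, gates = fuse(depth_feat, rgb_feat, params)
    print("per-channel gates:", [round(g, 3) for g in gates])
```

A gate close to 0 suppresses a channel and a gate close to 1 passes it through, which is one way a network can down-weight RGB channels whose texture does not correspond to depth structure.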

2. Related Works

3. Proposed Dual-Branch Multi-Scale Residual Network with Channel Interaction

3.1. RGB Image Network Branch

3.2. Depth Map Network Branch

3.3. Channel Interaction

4. Evaluation

4.1. Network Training

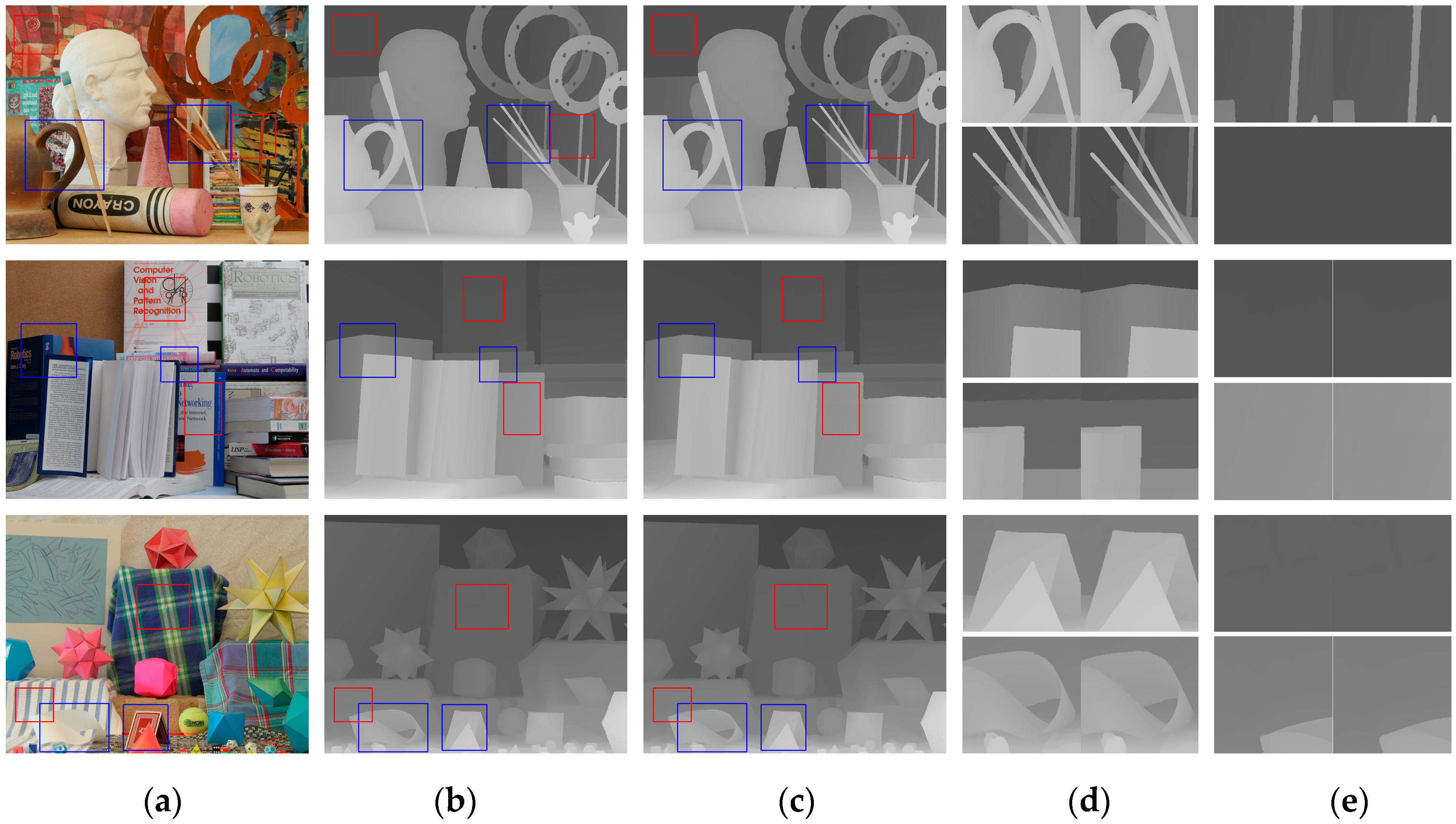

4.2. Evaluation on the Middlebury Dataset

4.3. Evaluation of Generalization

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image Super-Resolution Using Very Deep Residual Channel Attention Networks. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018.

2. Narayanan, B.N.; Hardie, R.C.; Balster, E. Multiframe Adaptive Wiener Filter Super-Resolution with JPEG2000-Compressed Images. EURASIP J. Adv. Signal Process. 2014, 55, 1–18.

3. Lu, J.; Forsyth, D. Sparse Depth Super Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2245–2253.

4. Kwon, H.; Tai, Y.W.; Lin, S. Data-Driven Depth Map Refinement via Multi-Scale Sparse Representation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 159–167.

5. Xie, J.; Feris, R.S.; Sun, M. Edge-Guided Single Depth Image Super Resolution. IEEE Trans. Image Process. 2016, 25, 428–438.

6. Dong, C.; Loy, C.; He, K.; Tang, X. Image Super-Resolution Using Deep Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 295–307.

7. Kim, J.; Lee, J.K.; Lee, K.M. Accurate Image Super-Resolution Using Very Deep Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1646–1654.

8. Lai, W.; Huang, J.; Ahuja, N.; Yang, M. Deep Laplacian Pyramid Networks for Fast and Accurate Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 624–632.

9. Odena, A.; Dumoulin, V.; Olah, C. Deconvolution and Checkerboard Artifacts. Distill 2016, 1, e3.

10. He, K.; Sun, J.; Tang, X. Guided Image Filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 6, 1397–1409.

11. Barron, J.T.; Poole, B. The Fast Bilateral Solver. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 617–632.

12. Diebel, J.; Thrun, S. An Application of Markov Random Fields to Range Sensing. In Proceedings of the 19th Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 5–8 December 2005.

13. Park, J.; Kim, H.; Tai, Y.W.; Brown, M.; Kweon, I. High Quality Depth Map Upsampling for 3D-TOF Cameras. In Proceedings of the International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 1623–1630.

14. Ferstl, D.; Reinbacher, C.; Ranftl, R.; Rüther, M.; Bischof, H. Image Guided Depth Upsampling Using Anisotropic Total Generalized Variation. In Proceedings of the International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 993–1000.

15. Zuo, Y.; Wu, Q.; Zhang, J.; An, P. Explicit Edge Inconsistency Evaluation Model for Color-Guided Depth Map Enhancement. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 439–453.

16. Zuo, Y.; Wu, Q.; Zhang, J.; An, P. Minimum Spanning Forest with Embedded Edge Inconsistency Measurement Model for Guided Depth Map Enhancement. IEEE Trans. Image Process. 2018, 27, 4145–4149.

17. Yang, J.; Ye, X.; Li, K.; Hou, C.; Wang, Y. Color-Guided Depth Recovery from RGB-D Data Using an Adaptive Autoregressive Model. IEEE Trans. Image Process. 2014, 23, 3962–3969.

18. Kiechle, M.; Hawe, S.; Kleinsteuber, M. A Joint Intensity and Depth Co-Sparse Analysis Model for Depth Map Super-Resolution. In Proceedings of the International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 1545–1552.

19. Riegler, G.; Rüther, M.; Bischof, H. ATGV-Net: Accurate Depth Super-Resolution. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 268–284.

20. Zhou, W.; Li, X.; Reynolds, D. Guided Deep Network for Depth Map Super-Resolution: How Much Can Color Help? In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, New Orleans, LA, USA, 5–9 March 2017; pp. 1457–1461.

21. Yang, J.; Lan, H.; Song, X.; Li, K. Depth Super-Resolution via Fully Edge-Augmented Guidance. In Proceedings of the IEEE Visual Communications and Image Processing, St. Petersburg, FL, USA, 10–13 December 2017; pp. 1–4.

22. Ye, X.; Duan, X.; Li, H. Depth Super-Resolution with Deep Edge-Inference Network and Edge-Guided Depth Filling. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Seoul, South Korea, 22–27 April 2018; pp. 1398–1402.

23. Hui, T.-W.; Loy, C.C.; Tang, X. Depth Map Super-Resolution by Deep Multi-Scale Guidance. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 353–369.

24. Zuo, Y.; Wu, Q.; Fang, Y.; An, P.; Huang, L.; Chen, Z. Multi-Scale Frequency Reconstruction for Guided Depth Map Super-Resolution via Deep Residual Network. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 297–306.

25. Zuo, Y.; Fang, Y.; Yang, Y.; Shang, X.; Wang, B. Residual Dense Network for Intensity-Guided Depth Map Enhancement. Inf. Sci. 2019, 495, 52–64.

26. Voynov, O.; Artemov, A.; Egiazarian, V.; Notchenko, A.; Bobrovskikh, G.; Burnaev, E. Perceptual Deep Depth Super-Resolution. In Proceedings of the International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 5653–5663.

27. Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M. Enhanced Deep Residual Networks for Single Image Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1132–1140.

28. Zhang, Y.; Tian, Y.; Kong, Y.; Zhong, B.; Fu, Y. Residual Dense Network for Image Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2472–2481.

29. Liu, D.; Wen, B.; Fan, Y.; Loy, C.C.; Huang, T.S. Non-Local Recurrent Network for Image Restoration. In Proceedings of the Neural Information Processing Systems, Montreal, QC, Canada, 2–7 December 2018; pp. 1673–1682.

30. Qiu, Y.; Wang, R.; Tao, D.; Cheng, J. Embedded Block Residual Network: A Recursive Restoration Model for Single-Image Super-Resolution. In Proceedings of the International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 4180–4189.

31. Hu, Y.; Li, J.; Huang, Y.; Gao, X. Channel-wise and Spatial Feature Modulation Network for Single Image Super-Resolution. IEEE Trans. Circuits Syst. Video Technol. 2019.

32. Jing, P.; Guan, W.; Bai, X.; Guo, H.; Su, Y. Single Image Super-Resolution via Low-Rank Tensor Representation and Hierarchical Dictionary Learning. Multimed. Tools Appl. 2020.

33. Haris, M.; Shakhnarovich, G.; Ukita, N. Recurrent Back-Projection Network for Video Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–21 June 2019; pp. 3897–3906.

34. Molini, A.B.; Valsesia, D.; Fracastoro, G.; Magli, E. DeepSUM: Deep Neural Network for Super-Resolution of Unregistered Multitemporal Images. IEEE Trans. Geosci. Remote Sens. 2020.

35. Molini, A.B.; Valsesia, D.; Fracastoro, G.; Magli, E. DeepSUM++: Non-local Deep Neural Network for Super-Resolution of Unregistered Multitemporal Images. arXiv 2020, arXiv:2001.06342.

36. Shi, W.; Caballero, J.; Huszar, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-Time Single Image and Video Super-Resolution Using an Efficient Sub-Pixel Convolutional Neural Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1874–1883.

37. Silberman, N.; Kohli, P.; Hoiem, D.; Fergus, R. Indoor Segmentation and Support Inference from RGBD Images. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012.

38. Handa, A.; Whelan, T.; McDonald, J.; Davison, A.J. A Benchmark for RGB-D Visual Odometry, 3D Reconstruction and SLAM. In Proceedings of the IEEE Conference on Robotics and Automation, Hong Kong, China, 31 May–5 June 2014; pp. 1524–1531.

39. Riegler, G.; Ferstl, D.; Rüther, M.; Bischof, H. A Deep Primal-Dual Network for Guided Depth Super-Resolution. In Proceedings of the British Machine Vision Conference, York, UK, 19–22 September 2016.

40. Haefner, B.; Quéau, Y.; Möllenhoff, T.; Cremers, D. Fight Ill-Posedness with Ill-Posedness: Single-shot Variational Depth Super-Resolution from Shading. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 164–174.

41. Gu, S.; Zuo, W.; Guo, S.; Chen, Y.; Chen, C.; Zhang, L. Learning Dynamic Guidance for Depth Image Enhancement. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 712–721.

42. Guo, C.; Li, C.; Guo, J.; Cong, R.; Fu, H.; Han, P. Hierarchical Features Driven Residual Learning for Depth Map Super-Resolution. IEEE Trans. Image Process. 2019, 28, 2545–2557.

| Method Used | Art 2x | Art 4x | Art 8x | Art 16x | Books 2x | Books 4x | Books 8x | Books 16x | Moebius 2x | Moebius 4x | Moebius 8x | Moebius 16x |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bilinear | 2.83 | 4.15 | 6.00 | 8.93 | 1.12 | 1.67 | 2.39 | 3.53 | 1.02 | 1.50 | 2.20 | 3.18 |
| Narayanan [2] | 2.76 | 3.10 | 3.51 | – | 1.17 | 1.24 | 1.82 | – | 0.99 | 1.03 | 1.76 | – |
| MRFs [12] | 3.12 | 3.79 | 5.50 | 8.66 | 1.21 | 1.55 | 2.21 | 3.40 | 1.19 | 1.44 | 2.05 | 3.08 |
| Park et al. [13] | 2.83 | 3.50 | 4.17 | 6.26 | 1.09 | 1.53 | 1.99 | 2.76 | 1.06 | 1.35 | 1.80 | 2.38 |
| Guided [10] | 2.93 | 3.79 | 4.97 | 7.88 | 1.16 | 1.57 | 2.10 | 3.19 | 1.10 | 1.43 | 1.88 | 2.85 |
| Kiechle et al. [18] | 1.25 | 2.01 | 3.23 | 5.77 | 0.65 | 0.92 | 1.27 | 1.93 | 0.64 | 0.89 | 1.27 | 2.13 |
| Ferstl et al. [14] | 3.03 | 3.79 | 4.79 | 7.10 | 1.29 | 1.60 | 1.99 | 2.94 | 1.13 | 1.46 | 1.91 | 2.63 |
| Lu et al. [3] | – | – | 5.80 | 7.65 | – | – | 2.73 | 3.55 | – | – | 2.42 | 3.12 |
| SRCNN [6] | 1.13 | 2.02 | 3.83 | 7.27 | 0.52 | 0.94 | 1.73 | 3.10 | 0.54 | 0.91 | 1.58 | 2.69 |
| MSF [16] | 3.01 | 3.70 | 4.66 | 6.68 | 1.25 | 1.63 | 2.02 | 2.84 | 1.13 | 1.51 | 2.06 | 2.93 |
| Hui et al. [23] | 0.66 | 1.47 | 2.46 | 4.57 | 0.37 | 0.67 | 1.03 | 1.60 | 0.36 | 0.66 | 1.02 | 1.63 |
| MFR-SR [24] | 0.71 | 1.54 | 2.71 | 4.35 | 0.42 | 0.63 | 1.05 | 1.78 | 0.42 | 0.72 | 1.10 | 1.73 |
| RDN-GDE [25] | 0.56 | 1.47 | 2.60 | 4.16 | 0.36 | 0.62 | 1.00 | 1.68 | 0.38 | 0.69 | 1.05 | 1.65 |
| Ours | 0.44 | 1.17 | 1.96 | 3.24 | 0.35 | 0.60 | 0.96 | 1.24 | 0.32 | 0.58 | 0.89 | 1.18 |
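
To read the gap between two rows of the table above, one option is to express it as a relative reduction. The sketch below does this for the Art columns of the RDN-GDE [25] and Ours rows. It assumes the entries are error values for which lower is better; the extracted table does not name the metric, and the helper `relative_reduction` is a hypothetical name introduced only for illustration.

```python
def relative_reduction(baseline, ours):
    """Fractional drop of `ours` relative to `baseline` (positive means lower)."""
    return (baseline - ours) / baseline

# Art values at 2x, 4x, 8x and 16x, copied from the table above.
scales = ("2x", "4x", "8x", "16x")
rdn_gde = [0.56, 1.47, 2.60, 4.16]
ours = [0.44, 1.17, 1.96, 3.24]

for scale, base, val in zip(scales, rdn_gde, ours):
    print(f"Art {scale}: {100 * relative_reduction(base, val):.1f}% lower than RDN-GDE")
```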

| Method Used | Dolls 2x | Dolls 4x | Dolls 8x | Dolls 16x | Laundry 2x | Laundry 4x | Laundry 8x | Laundry 16x | Reindeer 2x | Reindeer 4x | Reindeer 8x | Reindeer 16x |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bicubic | 0.91 | 1.31 | 1.86 | 2.63 | 1.61 | 2.41 | 3.45 | 5.10 | 1.94 | 2.81 | 3.99 | 5.82 |
| Narayanan [2] | 0.84 | 1.25 | 1.69 | – | 1.34 | 1.87 | 2.65 | – | 1.79 | 2.02 | 2.40 | – |
| Park et al. [13] | 0.96 | 1.30 | 1.75 | 2.41 | 1.55 | 2.13 | 2.77 | 4.16 | 1.83 | 2.41 | 2.99 | 4.29 |
| Ferstl et al. [14] | 1.12 | 1.36 | 1.86 | 3.57 | 1.99 | 2.51 | 3.76 | 6.41 | 2.41 | 2.71 | 3.79 | 7.27 |
| Kiechle et al. [18] | 0.70 | 0.92 | 1.26 | 1.74 | 0.75 | 1.21 | 2.08 | 3.62 | 0.92 | 1.56 | 2.58 | 4.64 |
| AP [17] | 1.15 | 1.35 | 1.65 | 2.32 | 1.72 | 2.26 | 2.85 | 4.66 | 1.80 | 2.43 | 2.95 | 4.09 |
| SRCNN [6] | 0.58 | 0.95 | 1.52 | 2.45 | 0.64 | 1.18 | 2.43 | 4.58 | 0.77 | 1.50 | 2.86 | 5.25 |
| MSF [16] | 1.15 | 1.43 | 1.80 | 2.49 | 1.93 | 2.37 | 3.18 | 4.58 | 2.36 | 2.76 | 3.53 | 4.74 |
| Hui et al. [23] | 0.35 | 0.69 | 1.05 | 1.60 | 0.37 | 0.79 | 1.51 | 2.63 | 0.42 | 0.98 | 1.76 | 2.92 |
| MFR-SR [24] | 0.60 | 0.89 | 1.22 | 1.74 | 0.61 | 1.11 | 1.75 | 3.01 | 0.65 | 1.23 | 2.06 | 3.74 |
| RDN-GDE [25] | 0.56 | 0.88 | 1.21 | 1.71 | 0.48 | 0.96 | 1.63 | 2.86 | 0.51 | 1.17 | 2.05 | 3.58 |
| Ours | 0.27 | 0.64 | 0.99 | 1.34 | 0.34 | 0.64 | 1.06 | 1.50 | 0.33 | 0.78 | 1.31 | 2.04 |

| Method Used | Tsukuba 2x | Tsukuba 4x | Tsukuba 8x | Venus 2x | Venus 4x | Venus 8x | Teddy 2x | Teddy 4x | Teddy 8x | Cones 2x | Cones 4x | Cones 8x |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Kiechle et al. [18] | 3.65 | 6.21 | 10.08 | 0.61 | 0.82 | 1.17 | 1.20 | 1.82 | 2.37 | 1.47 | 2.97 | 4.52 |
| Ferstl et al. [14] | 5.25 | 7.35 | – | 1.11 | 1.74 | – | 1.69 | 2.60 | – | 2.19 | 3.50 | – |
| Lu et al. [3] | – | 10.29 | 13.77 | – | 1.73 | 2.13 | – | 2.72 | 3.47 | – | 3.99 | 5.34 |
| SRCNN [6] | 3.28 | 7.94 | 11.28 | 0.46 | 0.79 | 1.71 | 1.17 | 1.99 | 3.25 | 1.48 | 3.59 | 5.18 |
| Hui et al. [23] | 1.85 | 4.29 | 8.43 | 0.14 | 0.35 | 1.04 | 0.71 | 1.49 | 2.76 | 0.91 | 2.60 | 4.23 |
| Ours | 0.91 | 2.75 | 6.18 | 0.21 | 0.42 | 0.95 | 0.55 | 1.34 | 2.16 | 0.51 | 2.09 | 3.33 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chen, R.; Gao, W. Color-Guided Depth Map Super-Resolution Using a Dual-Branch Multi-Scale Residual Network with Channel Interaction. Sensors 2020, 20, 1560. https://doi.org/10.3390/s20061560