2.1. General Formulation of Data Assimilation

Let

be a model describing the dynamics of a given system, represented by its state vector

. For example,

might be a vector of temperatures over a grid (discretized area of interest).

where

is the initial value of the state vector.

Data assimilation aims at providing an analysis which will be used to compute optimal forecasts of the system’s evolution.

Such an analysis is produced using various sources of information about the system: observations (measurements), previous forecasts, past or a priori information, statistics on the data and/or model errors, and so on.

In this paper, we assume that these ingredients are available:

the numerical model ,

a priori information about , denoted and called background state vector,

partial and imperfect observations of the system, denoted and called observation vector,

the observation operator , mapping the state space into the observation space,

statistical modelling of the background and observation errors (assumed unbiased), by means of their covariance matrices B and R.

Data assimilation provides the theoretical framework to produce an optimal (under some restrictive hypotheses) analysis

using all the aforementioned ingredients. In this work, we will focus on how to make the most of the observation error statistics information and we will not consider the background error information. Regarding the observation information, typically, most approaches can be formulated as providing the best (in some sense) vector in order to minimize the following quantity, measuring the misfit to the available information:

where the notation $\|\cdot\|_{K}$ stands for the Mahalanobis distance associated with a covariance matrix $K$; namely $\|v\|_{K}^{2} = v^{T} K^{-1} v$. Some information about algorithms and methods will be given in the following paragraphs. For an extensive description we refer the reader to the recent book [8].
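As a minimal numerical sketch of such a misfit term, the squared Mahalanobis norm $v^{T} K^{-1} v$ can be evaluated with a linear solve rather than an explicit inverse. The function names and the toy vectors below are illustrative assumptions and are not taken from the original formulation.

```python
import numpy as np

def mahalanobis_sq(v, K):
    """Return v^T K^{-1} v, solving K z = v instead of forming K^{-1}."""
    v = np.asarray(v, dtype=float)
    return float(v @ np.linalg.solve(K, v))

def observation_misfit(y, hx, R):
    """Squared Mahalanobis distance between observations y and their model equivalent hx."""
    diff = np.asarray(y, dtype=float) - np.asarray(hx, dtype=float)
    return mahalanobis_sq(diff, R)

# Toy example (hypothetical values): three observation points, uncorrelated errors.
y = np.array([1.0, 2.0, 3.0])
hx = np.array([0.5, 2.5, 2.0])
R_diag = np.diag([0.25, 0.25, 1.0])

# Each component contributes (misfit^2 / variance) = 1, so the total is 3 up to rounding.
print(observation_misfit(y, hx, R_diag))
```

With a non-diagonal covariance matrix the same function applies unchanged; only the cost of the linear solve grows.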

2.2. Spatial Error Covariance Modelling Using Wavelets

Being able to accurately describe the covariance matrices B and R is a crucial issue in data assimilation, as they are main ingredients of the numerical computation. The modelling of the B matrix has been largely investigated (see e.g., [9,10]). DA works actually using non-diagonal R matrices are quite recent (e.g., [2,7,11]). Evidence shows that observation errors are indeed correlated [12] and that ignoring this can be detrimental [13,14].

In [2] the authors introduced a linear change of variable

to account for correlated observation errors, while still using a diagonal matrix in the algorithm core. For the sake of clarity, we summarize the approach in the next few lines. If we assume that the observation error

is such that

, with

,

being the true vector (without any error) and

denoting the normal distribution with zero mean and covariance matrix

. The change of variables then reads

and

. Then we carefully choose

so that the transformed matrix is almost diagonal:

. Indeed, we then have the following property:

After this change of variable, the covariance matrix that will be used in the data assimilation algorithm is therefore , it is diagonal. At the same time, the covariance information still has some interesting features, if the change of variable is carefully chosen.

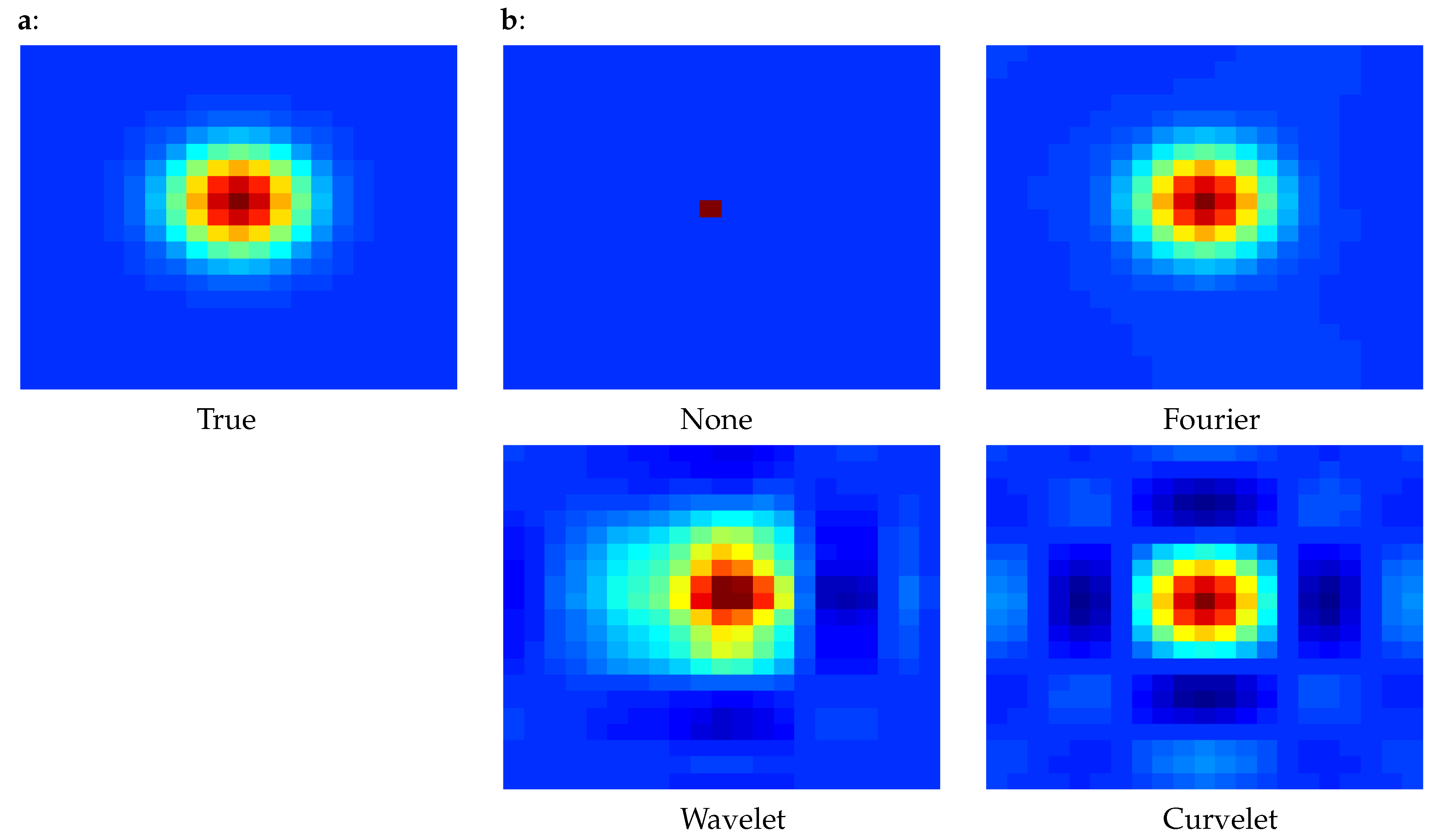

As an illustration, Figure 1 presents the correlations of the central point with respect to its neighbors for diagonal covariance matrices using various changes of variables: none, change into wavelet space, change into Fourier space, change into curvelet space. This figure was produced using a diagonal correlation matrix

, then applying the chosen change of variable to obtain

, then plotting the correlation described by

. We can see in the figure that interesting correlations can be produced with an adequate change of variable. Indeed, all these changes of variables have the following in common: they perform a change of basis such that the new basis vectors have supports distributed over multiple neighboring points (contrary to the classical Euclidean basis vectors, which are zero except at one point). This explains why

is now non-diagonal.
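The mechanism can be checked on a small example. The sketch below assumes an orthonormal Haar transform written as a matrix W (one possible wavelet choice) and hypothetical scale-dependent variances; a covariance that is diagonal in the transformed space then corresponds to W^T D W in the original space, which has non-zero off-diagonal terms.

```python
import numpy as np

def haar_matrix(n):
    """Orthonormal Haar analysis matrix of size n x n (n must be a power of two)."""
    if n == 1:
        return np.array([[1.0]])
    half = haar_matrix(n // 2)
    approx = np.kron(half, [1.0, 1.0])              # coarser rows, spread over pairs of points
    detail = np.kron(np.eye(n // 2), [1.0, -1.0])   # finest-scale differences
    return np.vstack([approx, detail]) / np.sqrt(2.0)

n = 8
W = haar_matrix(n)

# Hypothetical variances: large for coarse coefficients, small for fine details.
# Ordering: approximation, coarsest detail, 2 mid-scale details, 4 finest details.
d = np.array([4.0, 2.0, 1.0, 1.0, 0.25, 0.25, 0.25, 0.25])

# Covariance implied in the original (pixel) space by a diagonal D in wavelet space.
R = W.T @ np.diag(d) @ W
corr = R / np.sqrt(np.outer(np.diag(R), np.diag(R)))

# Correlation of the central point with every other point.
print(np.round(corr[n // 2], 2))
```

Replacing W by the identity gives back a diagonal R, which is the "no change of variable" case.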

Let us explain briefly the Fourier, wavelet and curvelet change of variables. For Fourier, the image is decomposed in the Fourier basis:

where

represents the Fourier basis (e.g., sinusoidal functions) and the index j describes the scale of the jth basis vector (think of j as a frequency). The change of variables consists of describing

by its coefficients

on the basis

:

.

Similarly, for the wavelets, the decomposition reads

where

represents the wavelet basis (e.g., Haar or Daubechies), where the index j describes the scale of the jth basis vector and k is its position in space (think of wavelets as localised Fourier functions). The change of variables (

, denoted

for the wavelets) into wavelets space consists of describing

by its coefficients

on the basis

:

. In other words,

is the vector of coefficients

.

This is also similar for the curvelets:

where the index l describes the orientation of the basis vector.

Using these changes of variables then allows various observation error modelling:

Fourier: when the errors change with the scale only

Wavelets: when the errors change with the scale as well as the position (e.g., for a geostationary satellite whose incidence angle impacts the errors, so that the errors vary depending on the position in the picture)

Curvelets: when the errors change with the scale, the position and the orientation (e.g., when errors are highly non linear and depend on the flow, so that they are more correlated in one direction than another).

In this work, we focus on the wavelet basis, which has several advantages: fast wavelet transform algorithms exist (as for Fourier), so the computational cost remains reasonable. Also, contrary to Fourier, wavelets are localised in space and allow error correlations that are inhomogeneous in space, which is more realistic for satellite data, as well as for data with missing zones.

To be more specific about wavelet transform, let’s assume the observation space is represented by a subset of

, where each number represents a given observation point location (in 1D). Wavelet decomposition consists of computing, at each given scale, a coarse approximation at that scale, and finer details. Both are decomposed on a multiscale basis and are therefore represented by their coefficients on the bases. Approximation and details coefficients are given by a convolution formula:

where

represents the approximation coefficient at scale j at point

,

represents the detail coefficient at scale j at point

,

h and g are functions depending on the chosen wavelet basis, each of them being equal to zero outside of its support. Moreover, the wavelet g has k vanishing moments. A wavelet is said to have k vanishing moments if g is orthogonal to every polynomial of degree up to $k-1$. As an example, a wavelet with 1 vanishing moment is represented by a filter g such that $\sum_{n} g[n] = 0$. This property is very important. Indeed, if the correlation is smooth enough (i.e., can be well approximated by polynomials of degree smaller than k), then the detail coefficients have a very small variance.
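To make the vanishing-moment property concrete, here is a minimal sketch using the Haar filters, which have exactly one vanishing moment. The filter normalisation, the pairing convention (non-overlapping pairs of points) and the test signal are assumptions chosen for illustration.

```python
import numpy as np

h = np.array([1.0, 1.0]) / np.sqrt(2.0)    # Haar low-pass (approximation) filter
g = np.array([1.0, -1.0]) / np.sqrt(2.0)   # Haar high-pass (detail) filter, sums to zero

def haar_step(c):
    """One decomposition level: (approximation, details), each of half the input length."""
    pairs = np.asarray(c, dtype=float).reshape(-1, 2)
    return pairs @ h, pairs @ g

def haar_decompose(signal, levels):
    """Return the coarsest approximation and the list of details, finest scale first."""
    approx, details = np.asarray(signal, dtype=float), []
    for _ in range(levels):
        approx, d = haar_step(approx)
        details.append(d)
    return approx, details

# A smooth (nearly constant) signal: its detail coefficients are tiny
# compared with its approximation coefficients.
x = np.linspace(0.0, 1.0, 64)
smooth = 1.0 + 0.1 * x
approx, details = haar_decompose(smooth, 3)
print(np.abs(approx).max(), [np.abs(d).max() for d in details])
```

For a constant signal the details vanish exactly, which is the one-vanishing-moment case; wavelets with more vanishing moments also cancel higher-degree polynomials.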

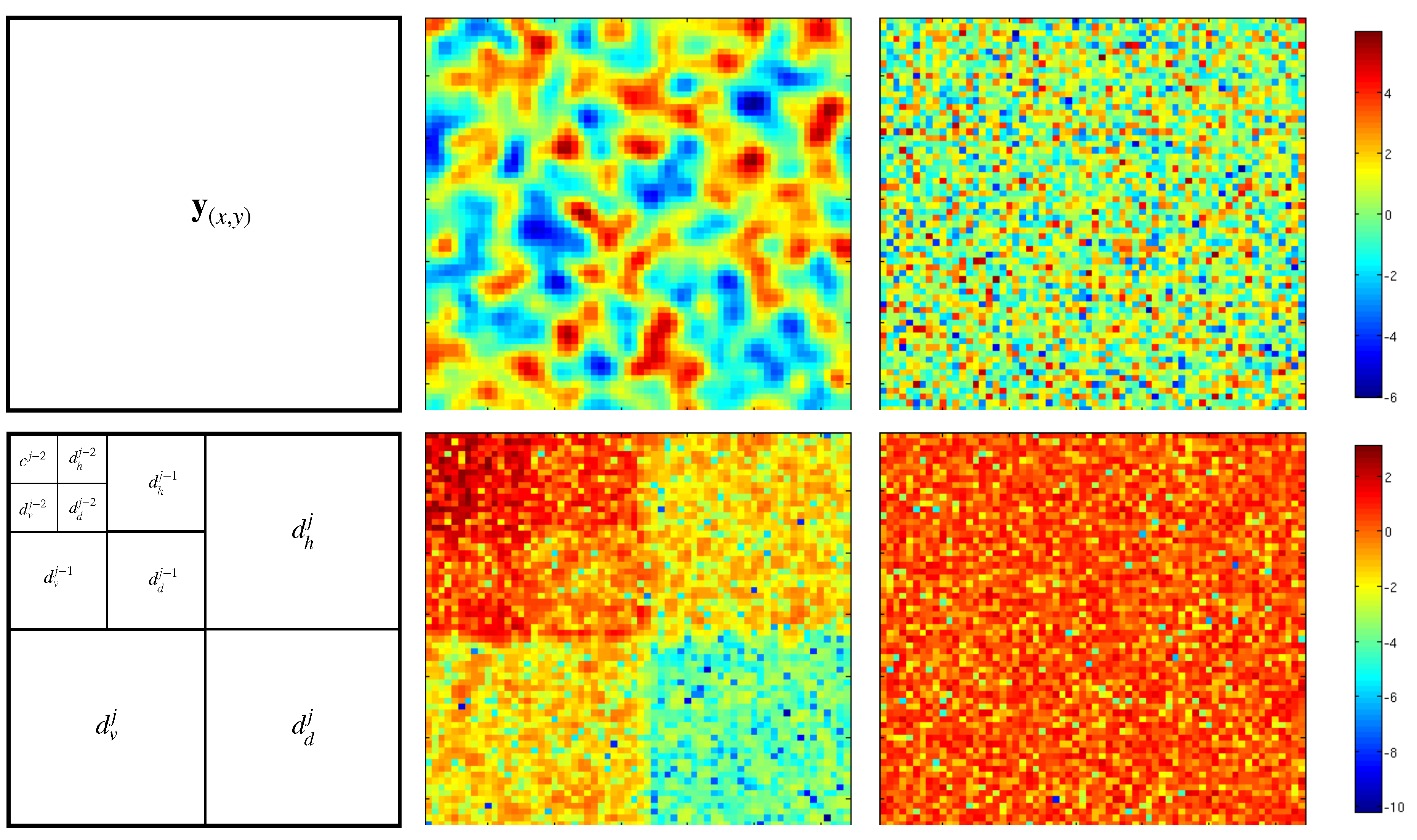

This can be extended in 2D (or more), where details coefficients at scale

j will be separated into 3 components: vertical (

), horizontal (

) and diagonal (

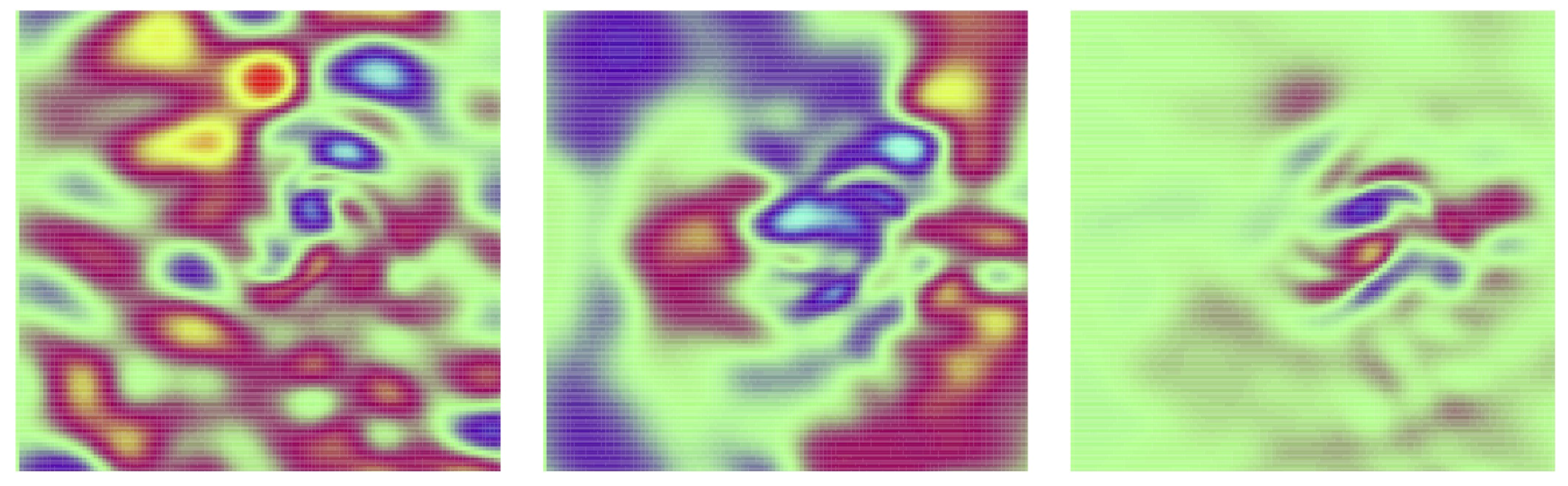

). Bottom-left panel of

Figure 2 shows the classical representation of coefficients on the wavelet space of a 2D signal. Finer details coefficient being stored in the three larger submatrices. The coarse approximation at finer scale is stored in the top-left submatrix and is itself decomposed into details and a coarser approximation. In this example the signal is decomposed into three scales.

The top row of

Figure 2 shows examples of both correlated (middle panel) and uncorrelated (right panel) noise. The bottom row shows their respective coefficient (in log-scale) in wavelet space using the representation depicted above. While uncorrelated noise affect all scales indiscriminately, the effect of correlated noise is significantly different from one scale to another (up to a factor 100 in this example). One can observe that approximation coefficients are very large compare to small scale details coefficients. This means that correlated noise (or smooth noise) affect more approximation coefficients than small scale details coefficients. This is due to the “vanishing moment” property of the wavelet

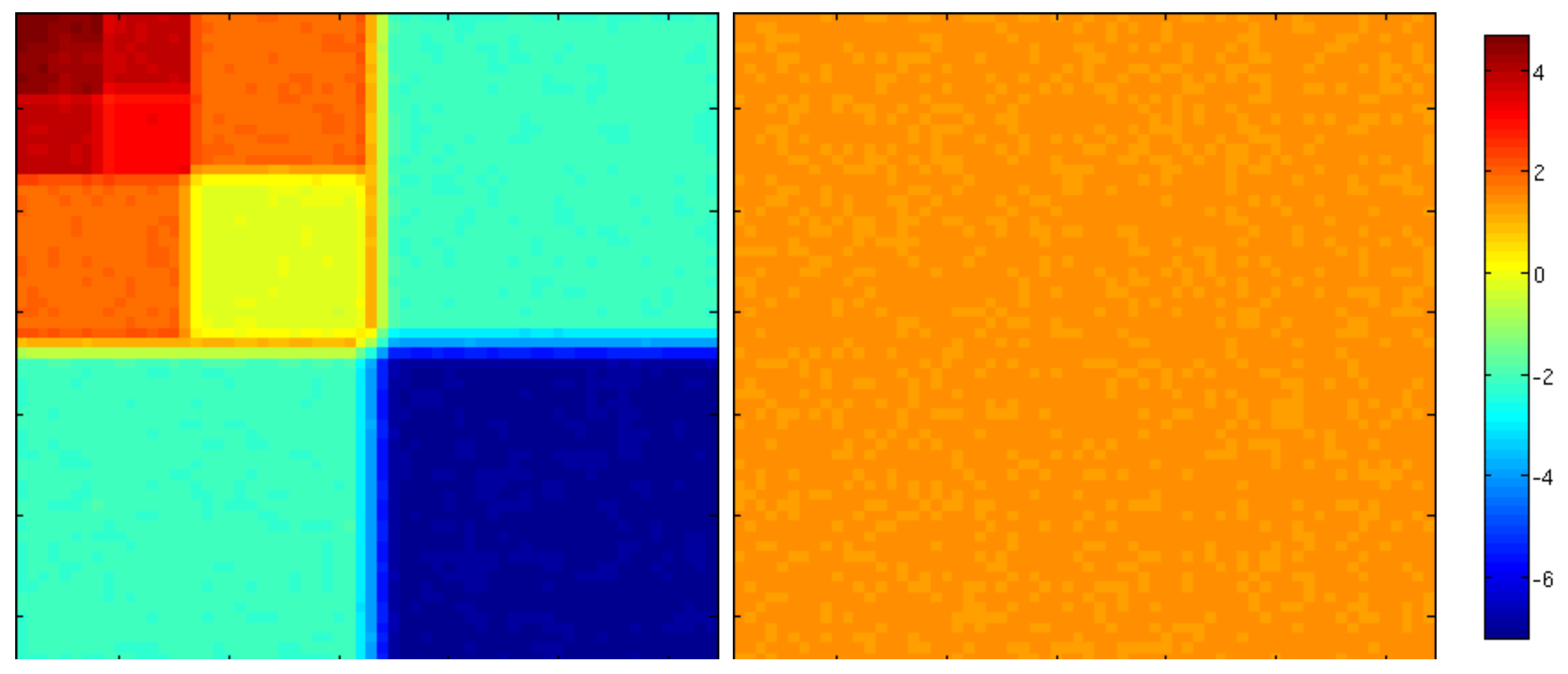

g. Additionnaly the effect of a correlated noise resemble a (different) uncorrelated noise on each scale, meaning the diagonal approximation of the error covariance matrix will be a good one, as long as the sub-diagonals corresponding to each scales are different. This is represented in

Figure 3 that shows the variances (log-scale) in the wavelet space of both correlated and uncorrelated noise from

Figure 2.
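The scale dependence of the variances can be reproduced on a small 1D example. The sketch below is not the computation behind the figures: it assumes a Haar transform, a Gaussian-shaped correlation with a hypothetical length scale of 5 grid points, and computes the wavelet-space variances exactly as the diagonal of W R W^T.

```python
import numpy as np

def haar_matrix(n):
    """Orthonormal Haar analysis matrix of size n x n (n must be a power of two)."""
    if n == 1:
        return np.array([[1.0]])
    half = haar_matrix(n // 2)
    approx = np.kron(half, [1.0, 1.0])
    detail = np.kron(np.eye(n // 2), [1.0, -1.0])
    return np.vstack([approx, detail]) / np.sqrt(2.0)

def variance_per_scale(R):
    """Mean variance of the Haar coefficients of a noise with covariance R, grouped by scale.

    Entry 0 is the coarsest approximation; the following entries go from the
    coarsest to the finest details.
    """
    n = R.shape[0]
    W = haar_matrix(n)
    var = np.diag(W @ R @ W.T)
    blocks, start, size = [var[0]], 1, 1
    while start < n:
        blocks.append(var[start:start + size].mean())
        start, size = start + size, 2 * size
    return np.array(blocks)

n = 64
idx = np.arange(n)
R_white = np.eye(n)                                             # uncorrelated noise
R_corr = np.exp(-((idx[:, None] - idx[None, :]) / 5.0) ** 2)    # correlated noise (assumed shape)

print(np.round(variance_per_scale(R_white), 3))   # identical on every scale
print(np.round(variance_per_scale(R_corr), 3))    # large at coarse scales, small at fine scales
```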

In the next paragraphs we will describe how this transformation can be used in the two classical frameworks of Data Assimilation: variational methods and filtering methods.

2.3. Implementation in Variational Assimilation

In the framework of variational assimilation, the analysis is set to be the minimizer of the following cost function J, which diagnoses the misfit between the observations and a priori information and their model equivalent, as in (2):

where

is the solution of Equation (1), when the initial state is

. In practice

stores time-distributed observations, so that it can be written as

where

is the observation operator at time i,

is the observation vector at this time, and

is the observation error covariance matrix.

Using the wavelets change of variables

, we choose a diagonal matrix

(possibly varying with the observation time i, but we omit the index for the sake of simplicity), and we set

so that the observation error covariance matrix that is actually defined is:

Meanwhile, the algorithm steps are:

1. Compute the model trajectory and deduce the misfits to the observations for all i.
2. Apply the change of variable (wavelet decomposition).
3. Compute the contribution to the gradient for all i.
4. Perform a descent step and update, following the minimization process.

In this algorithm, there is no need to form or invert

, the optimization module only sees the diagonal covariance matrix

, so that the minimization can be approached with classical methods such as conjugate gradient or quasi-Newton. Therefore, the only modification consists of coding the wavelet change of variable and its adjoint. As wavelet transforms are usually implemented using optimized and efficient libraries, the added cost is reasonable [2].
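The observation-space part of these steps can be sketched as follows. This is a minimal illustration, assuming an orthonormal Haar transform as the wavelet change of variable and a quadratic observation term of the form 1/2 (W r)^T D^{-1} (W r), where r is the misfit; the function names are hypothetical, and the model and observation-operator adjoints needed to bring the gradient back to the initial state are left out.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def haar_forward(v):
    """Orthonormal multi-level Haar transform (length must be a power of two)."""
    out = np.asarray(v, dtype=float).copy()
    n = out.size
    while n > 1:
        pairs = out[:n].reshape(-1, 2)
        a = (pairs[:, 0] + pairs[:, 1]) / SQRT2
        d = (pairs[:, 0] - pairs[:, 1]) / SQRT2
        out[: n // 2], out[n // 2 : n] = a, d
        n //= 2
    return out

def haar_adjoint(c):
    """Adjoint of haar_forward (equal to its inverse, since the transform is orthonormal)."""
    out = np.asarray(c, dtype=float).copy()
    n = 2
    while n <= out.size:
        a, d = out[: n // 2].copy(), out[n // 2 : n].copy()
        out[0:n:2] = (a + d) / SQRT2
        out[1:n:2] = (a - d) / SQRT2
        n *= 2
    return out

def obs_cost_and_grad(hx, y, d_var):
    """Observation cost and its gradient with respect to the model equivalent hx.

    d_var holds the diagonal of D (variances in wavelet space); no full
    covariance matrix is ever formed or inverted.
    """
    r = haar_forward(np.asarray(hx, dtype=float) - np.asarray(y, dtype=float))
    weighted = r / d_var                       # D^{-1} applied elementwise
    return 0.5 * float(r @ weighted), haar_adjoint(weighted)

# Hypothetical data: 8 observation points, variances decreasing with scale.
hx = np.array([1.0, 1.2, 0.9, 1.1, 1.4, 1.3, 1.0, 0.8])
y = np.ones(8)
d_var = np.array([4.0, 2.0, 1.0, 1.0, 0.25, 0.25, 0.25, 0.25])
cost, grad = obs_cost_and_grad(hx, y, d_var)
print(cost, np.round(grad, 3))
```

The pair (cost, gradient) is what a conjugate gradient or quasi-Newton routine would consume; only the transform and its adjoint differ from the diagonal "pixel" case.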

2.5. Toward Realistic Applications

This approach works well with idealized academic test cases. To move toward realistic applications, several issues need to be sorted out. In this section we address two of them. The first one is quite general: the ability to deal with incomplete observations, where part of the signal is missing, due either to sensor failure or to external perturbation or obstruction. The second one is more specific to variational data assimilation, where the conditioning of the minimisation, and hence its efficiency, can be severely affected by complex correlation structures in the observation error covariance matrix. This is likely to also affect the Kalman Filter, in particular the matrix inversion in observation space that it requires (e.g., in Equation (5)), but this is yet to be demonstrated.

2.5.1. Accounting for Missing Observations

When dealing with remote sensing, the reasons for missing observations are numerous, ranging from a glitch in the acquisition process to an obstacle temporarily blocking part of the view. This may be quite detrimental to our proposed approach since it violates the multi-scale decomposition hypotheses. However, contrary to Fourier, wavelets (and many, if not all, x-lets) have local support, which may be exploited to handle this issue. Please note that the same kind of issue can arise with complex geometries. For instance, if one observes sea surface temperatures, land is present in the observation while not being part of the signal. It can then be treated as missing values.

One possibility would be to use inpainting techniques to fill in the missing values. However, this would make the associated error very difficult to describe. Indeed, it would require estimating the errors associated with introducing ’new’ data in the missing zones, which are likely to be of a different nature from those of the original observations.

The idea is therefore to adapt the

matrix to the available data. Without any change of variable, the adaptation would be straightforward, as we would just have to apply a projection operator

to both the data and the

matrix:

where the projector

maps the full observation space into the subset of the observed points, and

represents the full observation vector (with 0 where there is no available data).
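In the case without change of variable, this restriction amounts to selecting the observed entries of the data and the corresponding rows and columns of the covariance matrix. The sketch below assumes the usual forms P y and P R P^T for the projected quantities; the function name and the toy values are hypothetical.

```python
import numpy as np

def restrict_to_observed(y_full, R, mask):
    """Apply the projector P onto the observed points: returns (P y, P R P^T).

    mask is True (or 1) where a point is observed; P is never formed explicitly.
    """
    observed = np.flatnonzero(mask)
    return np.asarray(y_full)[observed], np.asarray(R)[np.ix_(observed, observed)]

# Hypothetical example: 4 observation points, the second one is missing.
y_full = np.array([2.0, 0.0, 1.5, 3.0])          # 0 where there is no available data
mask = np.array([True, False, True, True])
lag = np.abs(np.subtract.outer(np.arange(4), np.arange(4)))
R = 0.5 ** lag                                   # correlation decaying with distance (assumed)

y_obs, R_obs = restrict_to_observed(y_full, R, mask)
print(y_obs)
print(R_obs)
```

The correlation between the first and third points is kept, which is exactly what becomes difficult once the covariance is only known through its diagonal in wavelet space.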

When using a change of variable into wavelet space, this is trickier, as a given observation point is used to compute many wavelet coefficients. Conversely, a given wavelet coefficient is based on several image observation points. As a consequence, if some observation points are missing and others are available, this may result in “partially observed” wavelet coefficients, as schematized in Figure 4.

Our choice is to still take into account these coefficients (and not discard them, because it would result in discarding too much information, as a single missing observation point affects numerous wavelet coefficients), but to carefully tune the diagonal coefficient of the diagonal matrix .

Missing observation points have two opposite effects:

To account for both effects, we propose a heuristic to adjust the variance

(in other words, the coefficients of the diagonal matrix

) corresponding to coefficients whose support is partially masked as follows:

where:

is the original error variance (e.g., given by the data provider);

is multiplied by the variance of the wavelet coefficient without any correlation: it amounts to inflating the variance due to missing information (loss of the error averaging effect);

stands for information content, and models the deflation effect. It takes into account the impact of missing observation points on the considered wavelet coefficient.

We now explain how and I can be tuned. For the sake of simplicity, let us assume that our observation lives in a one dimensional space.

Computation of I

The deflation percentage I for each coefficient is also computed using a wavelet transform, where h and g are replaced by constant functions with the same support:

These functions extract the percentage of observation points present on the wavelet support. We proceed as follows. First we set the mask corresponding to the missing observations: it is an observation vector m equal to 1 where the observation point is observed and equal to 0 where it is missing. The wavelet transform of the mask keeps track of the impact of any missing observation point on any given wavelet coefficient. The percentage I is computed for each coefficient by induction:

At the finest scale

:
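The idea of transforming the mask with constant filters can be illustrated in the simplest setting. The sketch below is an assumption-laden simplification, not the formula used in this work: it takes Haar-length supports (two points), so that the constant filters reduce to a plain average over non-overlapping pairs, and it applies the same averaging by induction to reach coarser scales.

```python
import numpy as np

def information_content(mask, levels):
    """Fraction of observed points on the support of each coefficient, per scale.

    Assumes Haar-length supports, so each coefficient at a given scale depends
    on two coefficients of the previous scale. Returned list: finest scale first.
    """
    frac = np.asarray(mask, dtype=float)
    per_scale = []
    for _ in range(levels):
        frac = frac.reshape(-1, 2).mean(axis=1)   # induction step: average the previous scale
        per_scale.append(frac)
    return per_scale

# Mask m: 1 where the point is observed, 0 where it is missing (hypothetical example).
m = np.array([1, 1, 1, 0, 1, 1, 1, 1])
I = information_content(m, 3)
for scale, values in enumerate(I, start=1):
    print(scale, values)
```

A single missing point lowers the percentage of one coefficient at every scale, with an effect that is halved each time the support doubles.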

Computation of

Let us now explain how to compute the inflation coefficient

. As explained previously, as g has k vanishing moments, small-scale coefficients have small variances. However, with a masked signal, this property is lost. In other words, missing data damage the smoothness of the signal (and of the noise), which in turn damages the efficiency of the wavelet representation. The coefficient

reflects the loss of the first vanishing moment: in the following formula we can see that

is zero if the first vanishing moment is preserved, and non-zero if not, in order to inflate the variance of small scales. For the finest scale,

is given by:

Indeed, means that wavelet still has a 0-th order null moment, even with missing coefficients, and in that case .

Coarser scales coefficients are computed by induction, as:

Finally, the variance model is modified as follows for every detail coefficient whose data is partially missing:

For approximation coefficients, only the deflation factor is used:

Indeed, when the error is correlated, the variance of the approximation coefficient

is much greater than

. This is the case in Figure 3, where

while

. Moreover,

h can be seen as a local smoothing operator (

) and therefore correlated errors do not compensate each other. Consequently, there is no need for inflation. Conversely, for finer details, in our example

so

has a significant impact on those scales.

These modifications therefore give a new diagonal matrix

which takes into account the occurrence of missing information.

Section 3 will present numerical results.

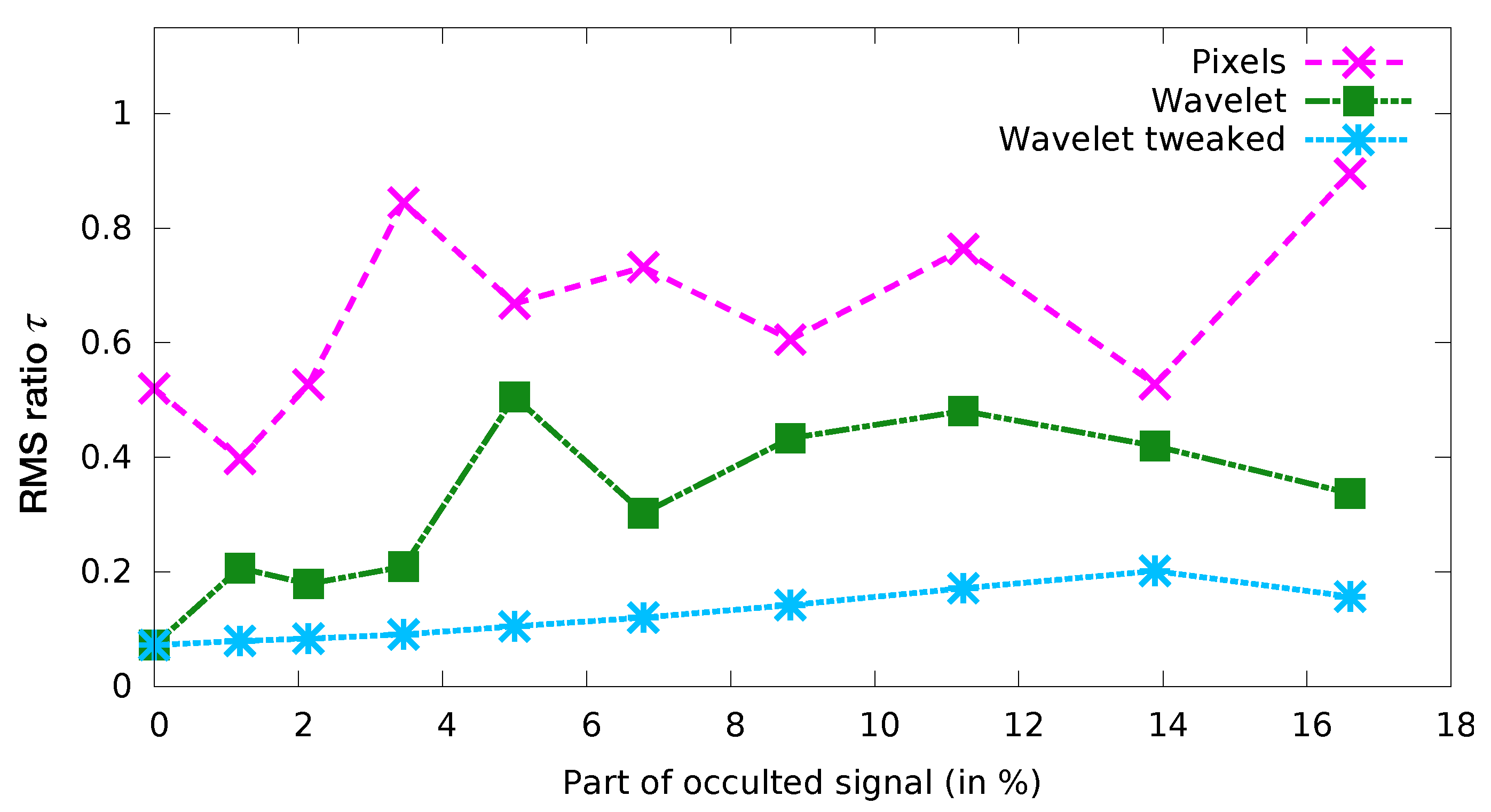

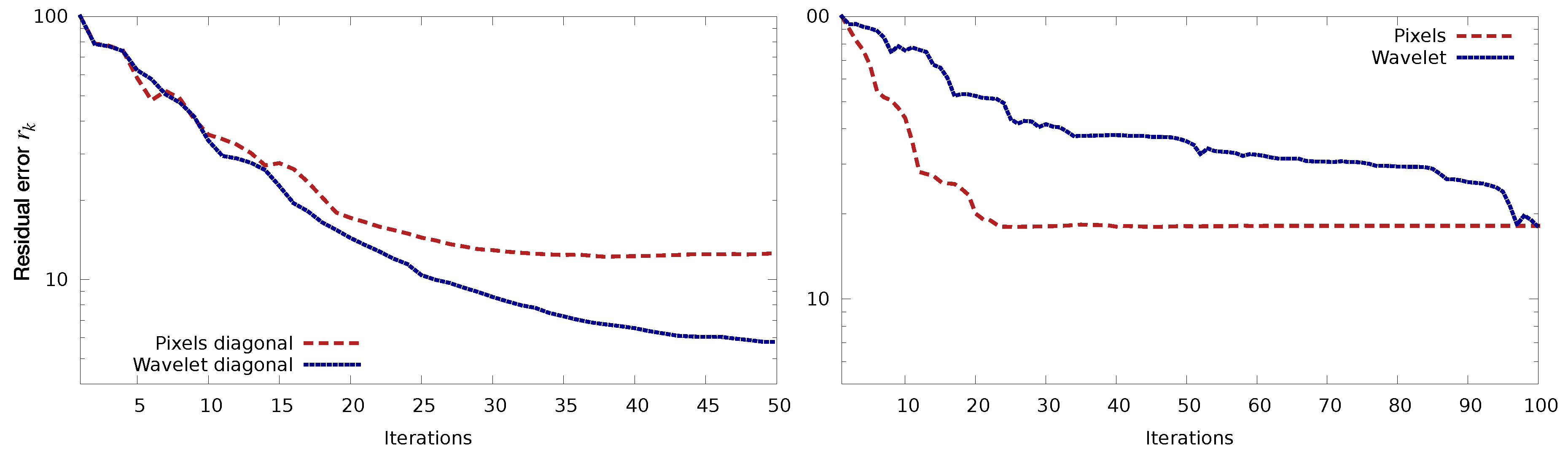

2.5.2. Gradual Assimilation of the Smallest Scales

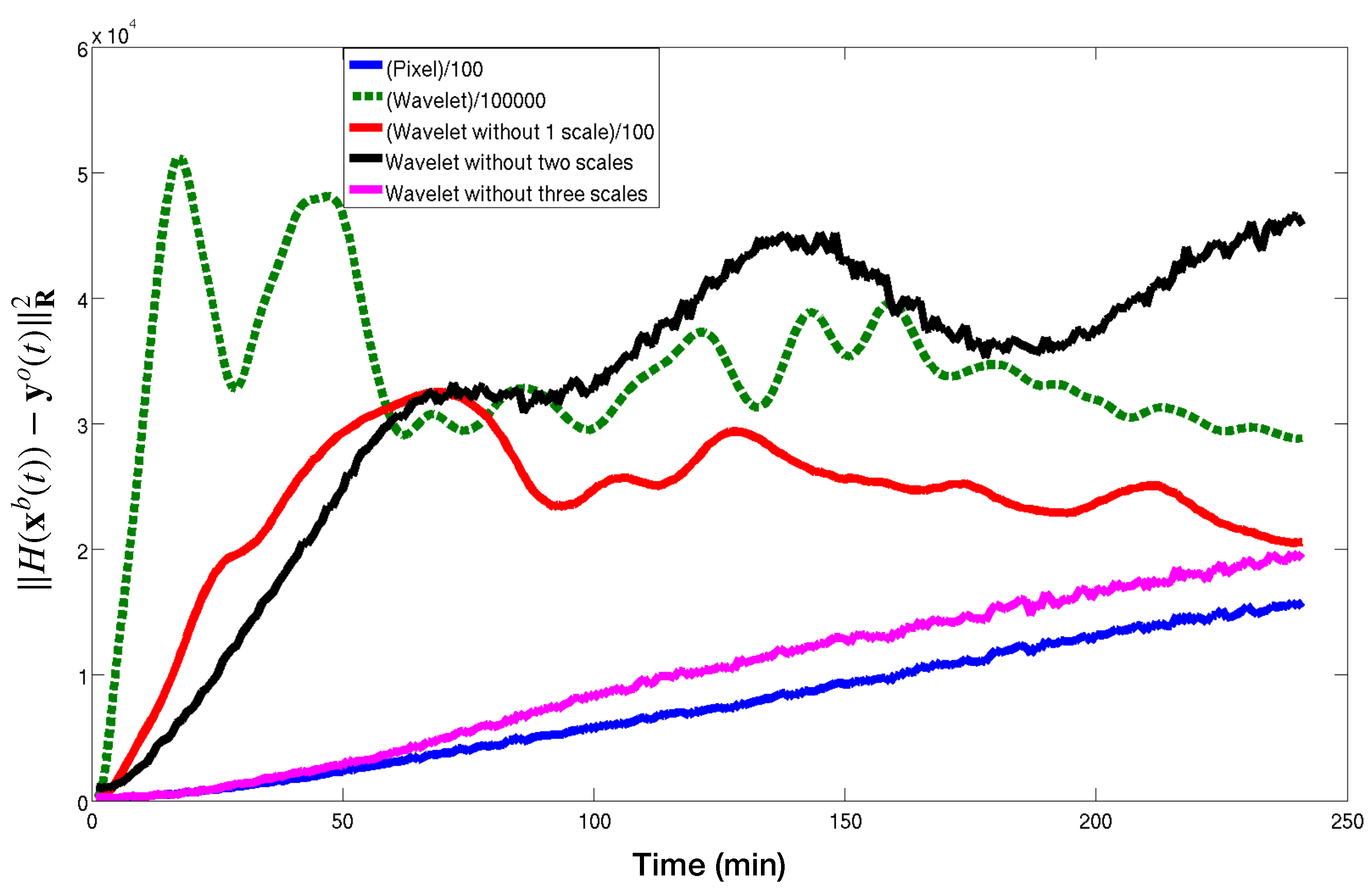

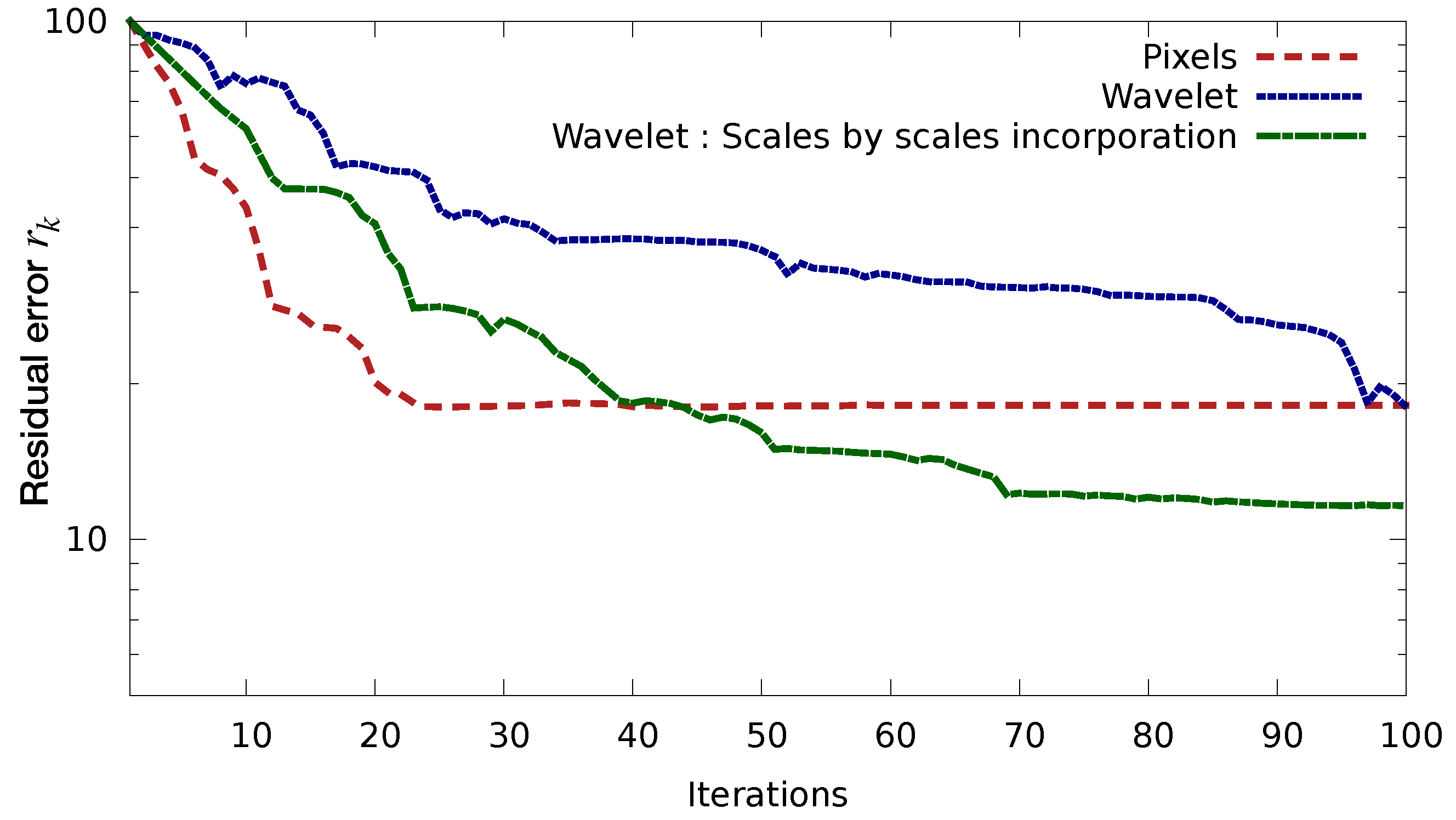

As will be shown in the numerical results (Section 3 below), another issue can occur with real data: convergence issues due to the nature of the observation errors. Indeed, our experiments highlight that our test case behaves well when the represented error correlations are Gaussian and homogeneous in space. For correlated Gaussian errors whose correlations are inhomogeneous in space, convergence issues occur to the point that they destroy the advantage of using wavelets: the results are worse than with the classical diagonal matrix without correlation. Please note that, in the general case, even accounting for homogeneous noise may degrade the conditioning of the minimization [4]. The wavelet transform does not change the conditioning of the problem, but its multi-scale nature can help to circumvent this problem.

Numerical investigation of the results shows that some sort of aliasing occurs for small wavelet scales. Indeed, the smallest scales are the least affected by the correlated noise, so they are not well constrained by the assimilation and they tend to cause a divergence when the large scales are not well known either, which is the case at the beginning of the assimilation iteration process. Removing the smaller scales altogether is not a suitable solution, as they contain valuable information that we still want to use. The proposed solution is therefore to first assimilate the data without the small scales and then add smaller scales gradually. Please note that this is not a scale selection method per se, as all scales will eventually be included. It can be related to the quasi-static approach [17], which gradually includes observations over time.

Description of the Gradual Scale Assimilation Method

Let us rewrite the observation cost function given by Equation (3):

where

, for

, (resp.

) represent the wavelet coefficients at scale s of the signal

(resp.

) and the

are the associated error variances (corresponding to the diagonal coefficients of the matrix

).

Let us denote by

the total cost corresponding to scale s and observation time i:

We then decide that the information at a given scale is usable only if the cost remains small, e.g., smaller than a given threshold

. We therefore define the thresholded cost

by:

The new observation cost function is then:

As mentioned before, the same issue could arise when using Kalman Filter type techniques, during the matrix inversion needed to compute the gain matrix. Similar iterative, multi-resolution approaches could be used to address it.

2.6. Experimental Framework

Numerical experiments have been performed to study and illustrate the two issues that were previously highlighted: how to account for covariances with missing observations, and how to improve the algorithm convergence while still accounting for smaller scale information. This paragraph describes the numerical setup which has been used.

We wish to avoid adding difficulty to these already complex issues, therefore we chose a so-called twin experiment framework. In this approach, synthetic observations are created from a given state of the system (which we call the “true state”, which will serve as reference) and then used in assimilation.

The experimental model represents the drift of a vortex on the experimental turntable CORIOLIS (Grenoble, France), which simulates vortices in the atmosphere: the rotation of the table provides an experimental environment which emulates the effect of the Coriolis force on a thin layer of water. A complete rotation of the tank takes 60 seconds, which corresponds to one Earth rotation.

2.6.1. Numerical Model

A numerical model represents the experiment, using the shallow-water equations on the water elevation

and the horizontal velocity of the fluid

, where u and v are the zonal and meridional components of the velocity. The time variable t is defined on an interval

, while the space variable

lives in

a rectangle in the plane

. The equations read:

The relative vorticity is denoted by and the Bernoulli potential by , where g is the gravity constant. The Coriolis parameter on the -plane is given by , is the diffusion coefficient and r the bottom friction coefficient. The following numerical values were used for the experiments: , , , and . The model is discretized using a finite differences scheme over a grid and a 4th-order Runge-Kutta scheme in time, with a time step of 2.5s. Please note that this means the model fields can be decomposed in up to 7 different scales using wavelet transform ().

Additional equations represent the evolution of the tracer concentration (fluorescein):

where

is the initial concentration of the tracer (assumed to be known),

is the tracer diffusion coefficient and

the fluid velocity computed above.

2.6.2. Synthetic Observations for Twin Experiments

In the twin experiment framework, observations are computed using the model. A known “true state” is used to produce a sequence of images which constitutes the observations. Therefore, the observation operator

is given by:

where

comes from (6).

Then assimilation experiments are performed starting from another system state, using synthetic observations. The results of the analysis can then be compared to the synthetic truth.

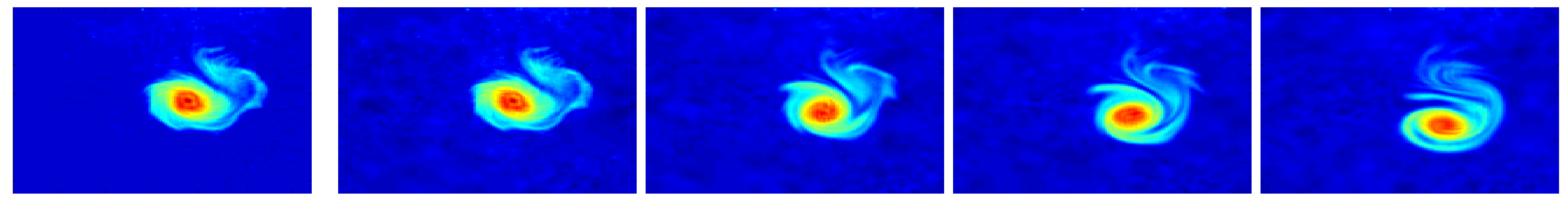

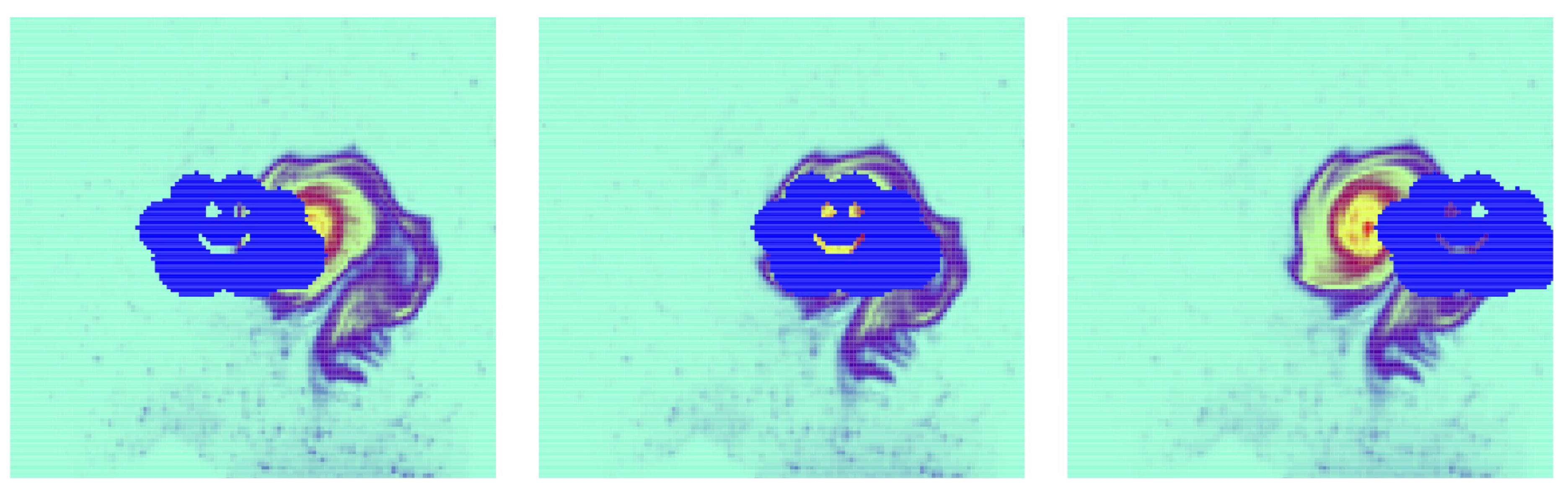

Unless otherwise stated, the assimilation period is 144 min, with one snapshot of passive tracer concentration every 6 min (24 snapshots in total). A selection of such snapshots is shown in Figure 5.

The observations are then obtained by adding an observation error , with and a suitably chosen matrix.
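One common way to generate such correlated synthetic errors, given here as a hedged sketch rather than as the exact procedure of these experiments, is to draw a standard normal vector and multiply it by a square root of the chosen covariance matrix. The Cholesky factor is used below as that square root, and the exponential correlation model is an illustrative assumption.

```python
import numpy as np

def sample_observation_errors(R, n_samples, rng):
    """Draw n_samples error vectors with zero mean and covariance R.

    Uses eps = L beta with R = L L^T (Cholesky factor) and beta ~ N(0, I);
    R must be symmetric positive definite.
    """
    L = np.linalg.cholesky(R)
    beta = rng.standard_normal((n_samples, R.shape[0]))
    return beta @ L.T          # each row is one realisation of L @ beta

# Hypothetical covariance: exponential correlation over 4 observation points.
idx = np.arange(4)
R = np.exp(-np.abs(idx[:, None] - idx[None, :]) / 2.0)

rng = np.random.default_rng(0)
errors = sample_observation_errors(R, 20000, rng)

# The empirical covariance of the samples approaches R as n_samples grows.
print(np.round(np.cov(errors, rowvar=False), 2))
```

Adding one such realisation to each synthetic image gives noisy observations whose error statistics are known by construction, which is what makes the twin experiment a controlled test.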

Our experiments will focus on three different formulations of the observation error covariance matrix. We will refer to as “Pixels” the experiments for which there is no change of variable and the observation error covariance matrix is equal to

. “Wavelet” will represent the experiments with the wavelet change of variable

and the observation error covariance matrix

. Finally, the last set of experiments will proceed as for the wavelets but will adjust the observation error covariance matrix according to the computations presented in Section 2.5.1 and Section 2.5.2. Table 1 summarises these configurations.