1. Introduction

Aging in Japan has been progressing rapidly not only because of an increasing number of elderly people and their longevity, but also a decreasing number of young people caused by a declining birthrate. Although the demand for nursing-care services has been growing along with the continuously developing aging society, the supply is insufficient because of changing demographics [

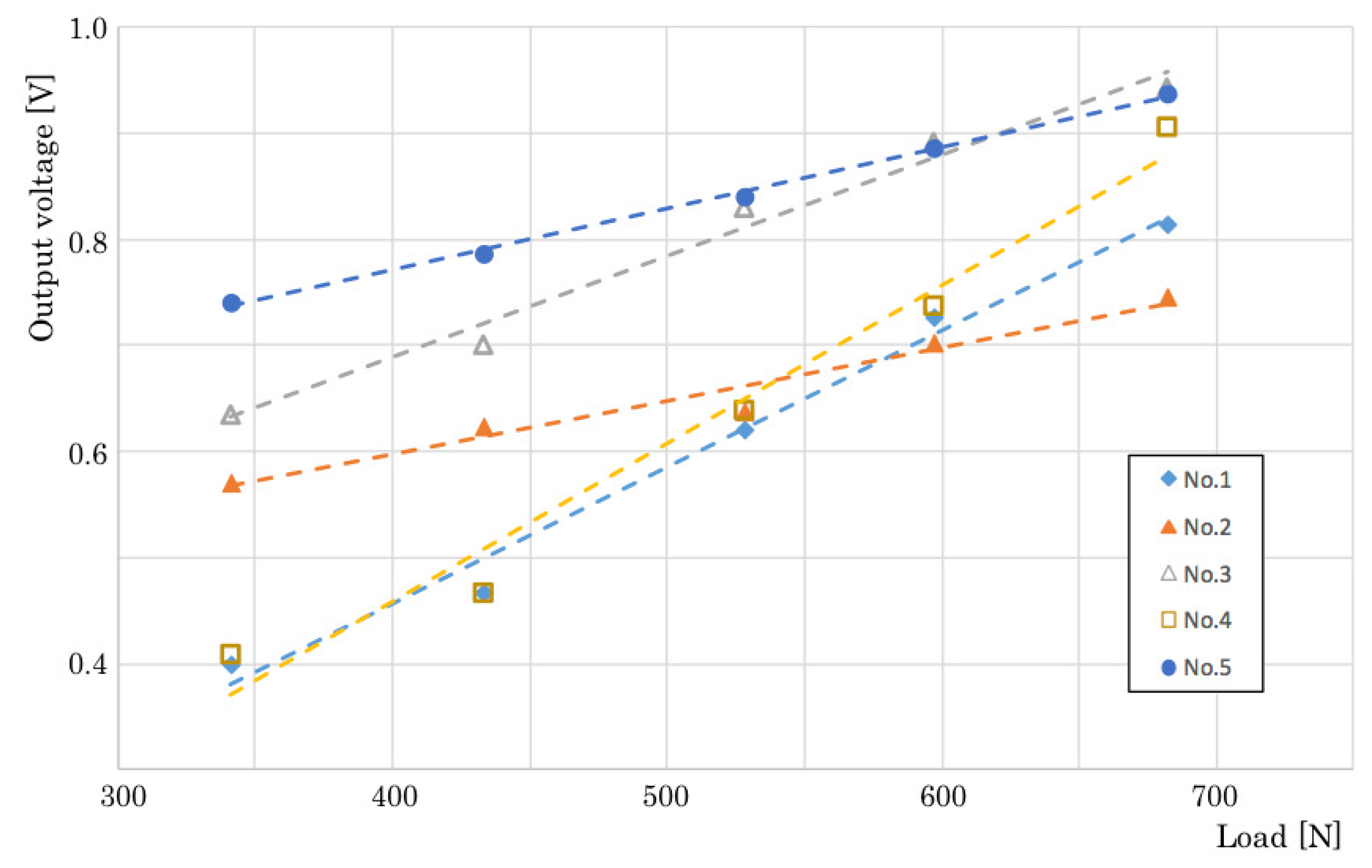

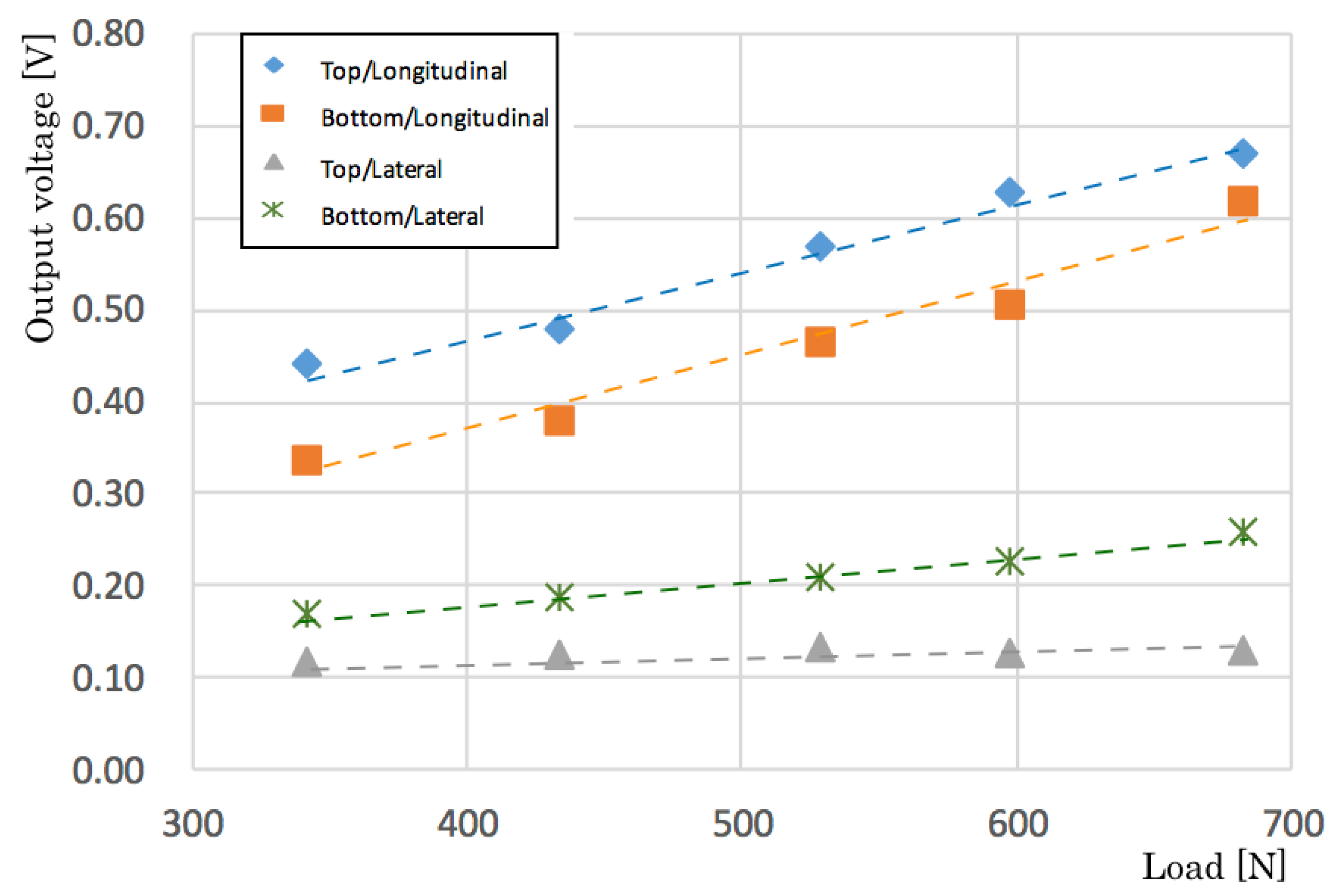

1]. As an occupational characteristic of caregivers, the occupation and turnover rates are both high compared to those of other industries [

2]. Caregivers must work not only to provide various care services physically and mentally, but also for shifting during nighttime for providing 24-hour nursing services and support. Especially for nighttime, a severe caregiver shortage leads to insufficient nursing-care, which involves a risk of inducing accidents related to the daily life for care recipients.

Mitadera et al. [3] reported that falls of elderly people accounted for more than 50% of all accidents at nursing-care facilities. The situational details reveal that most accidents occurred when elderly people were leaving their beds or were near them. Moreover, 85.5% of fall-related accidents occurred without assistance or supervision. Because facility administrators bear management responsibility if an accident occurs, preventive measures using bed-leaving sensors that detect bed-leaving behavior at an early stage are indispensable.

Various bed-leaving sensors are now commercially available. For example, clip sensors, mat sensors, and infrared (IR) sensors are widely used at hospitals and nursing-care facilities. Clip sensors are easy to use because they are the least expensive option, but they restrain care recipients because their wires are attached directly to the patient’s nightwear. Moreover, a sensor wire might wrap around the neck of a care recipient. For these reasons, the use of clip sensors has recently been discouraged. Mat sensors, which are also inexpensive, are widely used at clinical sites because they impose no restraint. One shortcoming of mat sensors is slow detection: they respond only after a care recipient sitting at the edge of the bed puts their feet on the mat. Another shortcoming is excessive reaction, because they also trigger when a caregiver or family member steps on the mat while passing by. Furthermore, because a mat sensor is visible, a care recipient might deliberately avoid stepping on it when leaving the bed. IR sensors have shortcomings similar to those of mat sensors. Moreover, caregivers must check that these sensors remain properly installed because care recipients occasionally touch them.

To prevent fall accidents, sensor systems that predict bed-leaving behavior at an early stage have been studied. Asano et al. [4] proposed a detection system using a depth camera. They employed support vector machines (SVMs) to recognize bed-leaving behavior patterns after optimizing parameters and motion features combined with the body size, position, and orientation of each subject. Their experiments achieved 92.65% recall in 68 iterations. However, the precision was insufficient for practical application because false detections occurred in 24 iterations. Moreover, although individuals are difficult to identify from depth images alone, the discomfort felt by patients who are monitored by a camera remains a challenging problem.

Kawamura et al. [5] proposed a wearable sensor system using a three-axis accelerometer. Their system achieves unrestrained measurement using a lightweight 13 g sensor module. With clinical applications in mind, they identified metaparameters that contributed to recognition and also reported experimental results for bed-up and wheelchair locomotion. However, they reported neither recognition accuracy nor detailed sensor characteristics. Existing bed-leaving sensors entail persistent difficulties related to quality of life (QoL), detection speed, convenience, and cost, and no sensor that satisfies all of these requirements has been put to practical use. Because the declining birthrate and aging population are progressing rapidly in modern society, we regard the development of sensor systems that overcome these problems as an urgent task.

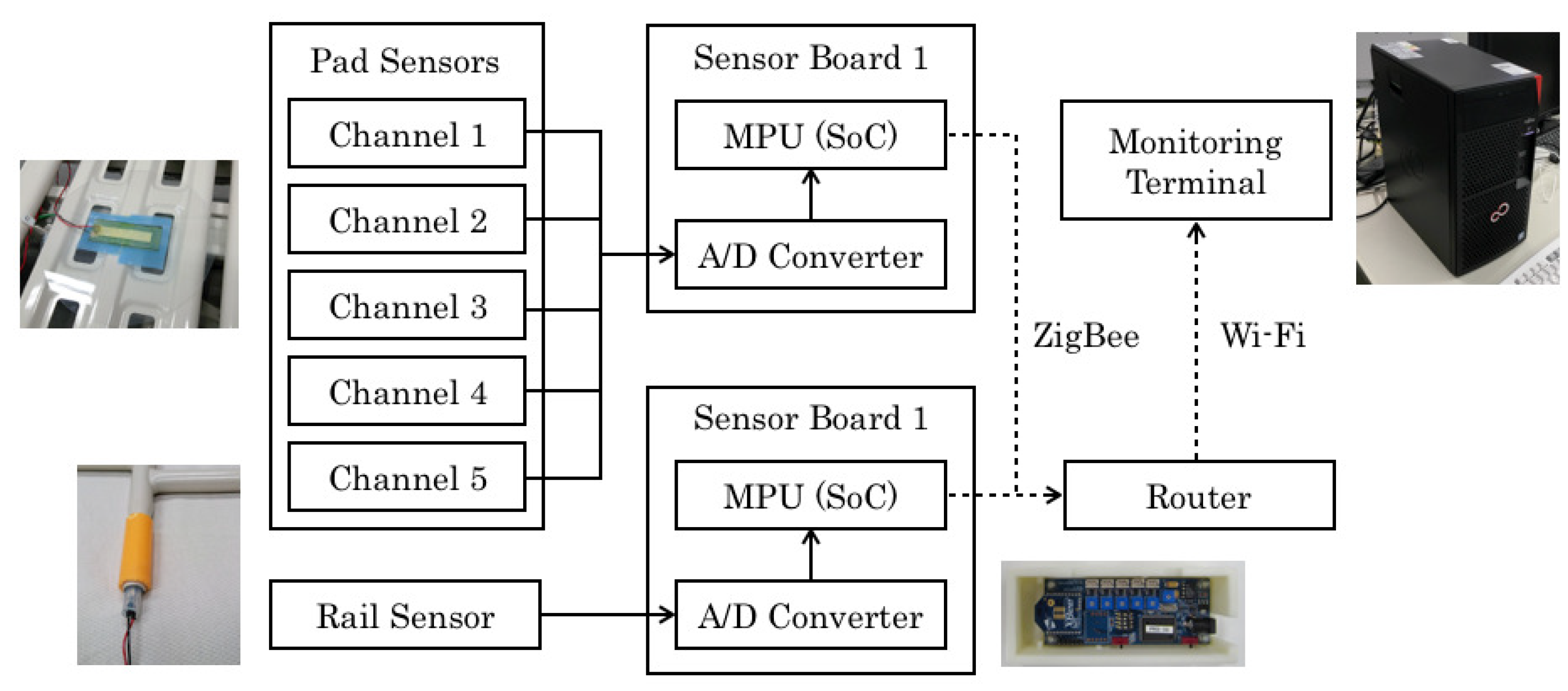

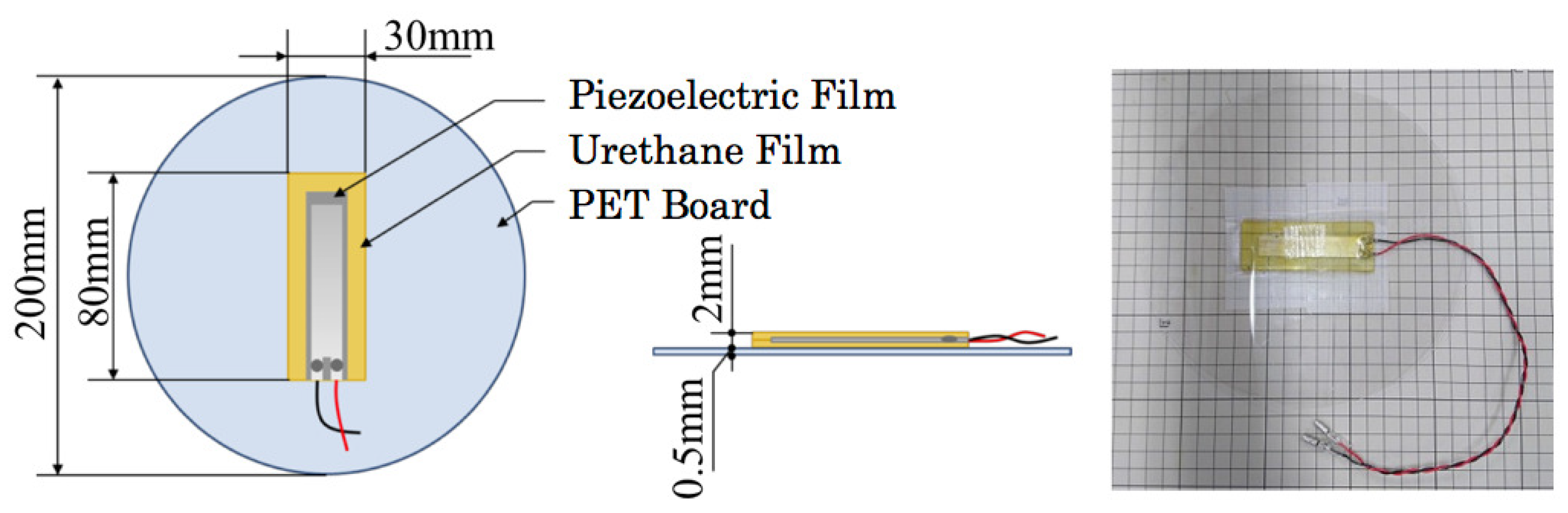

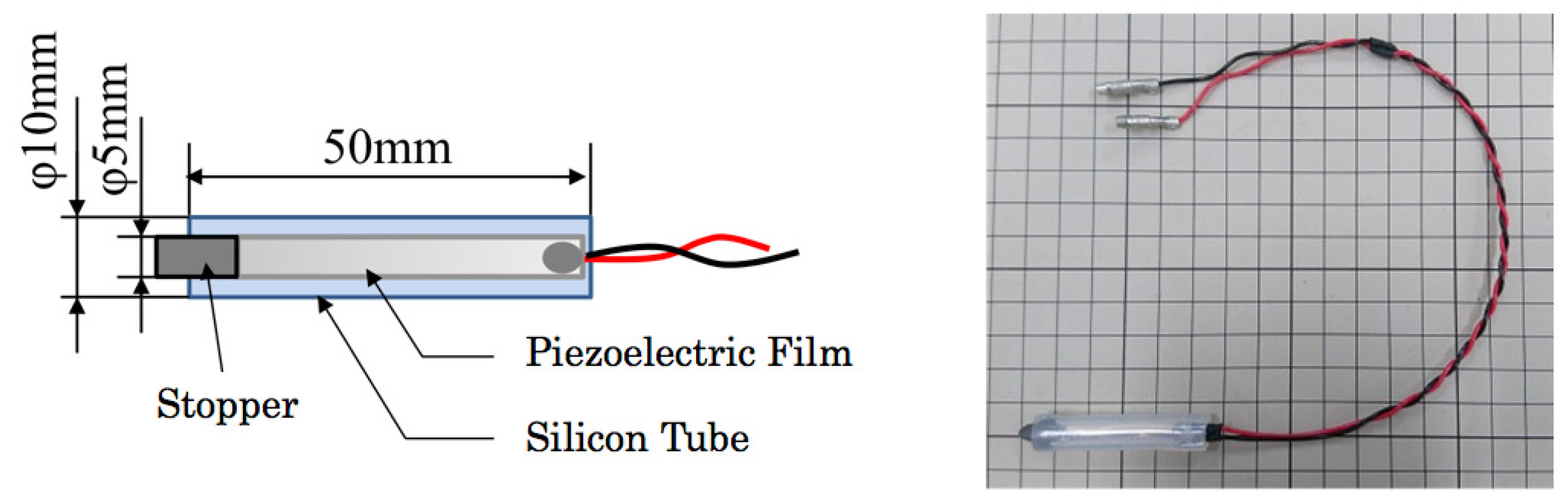

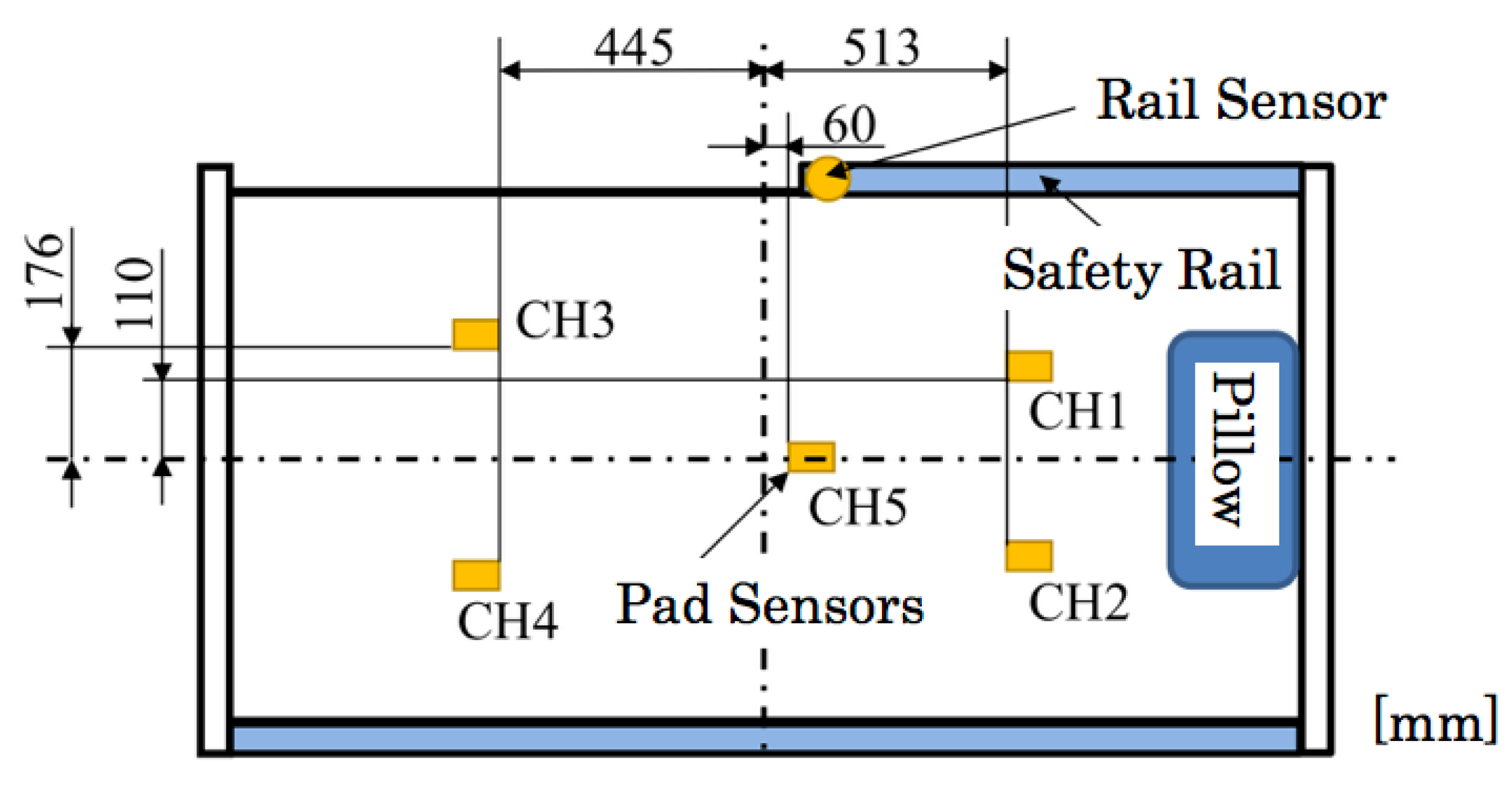

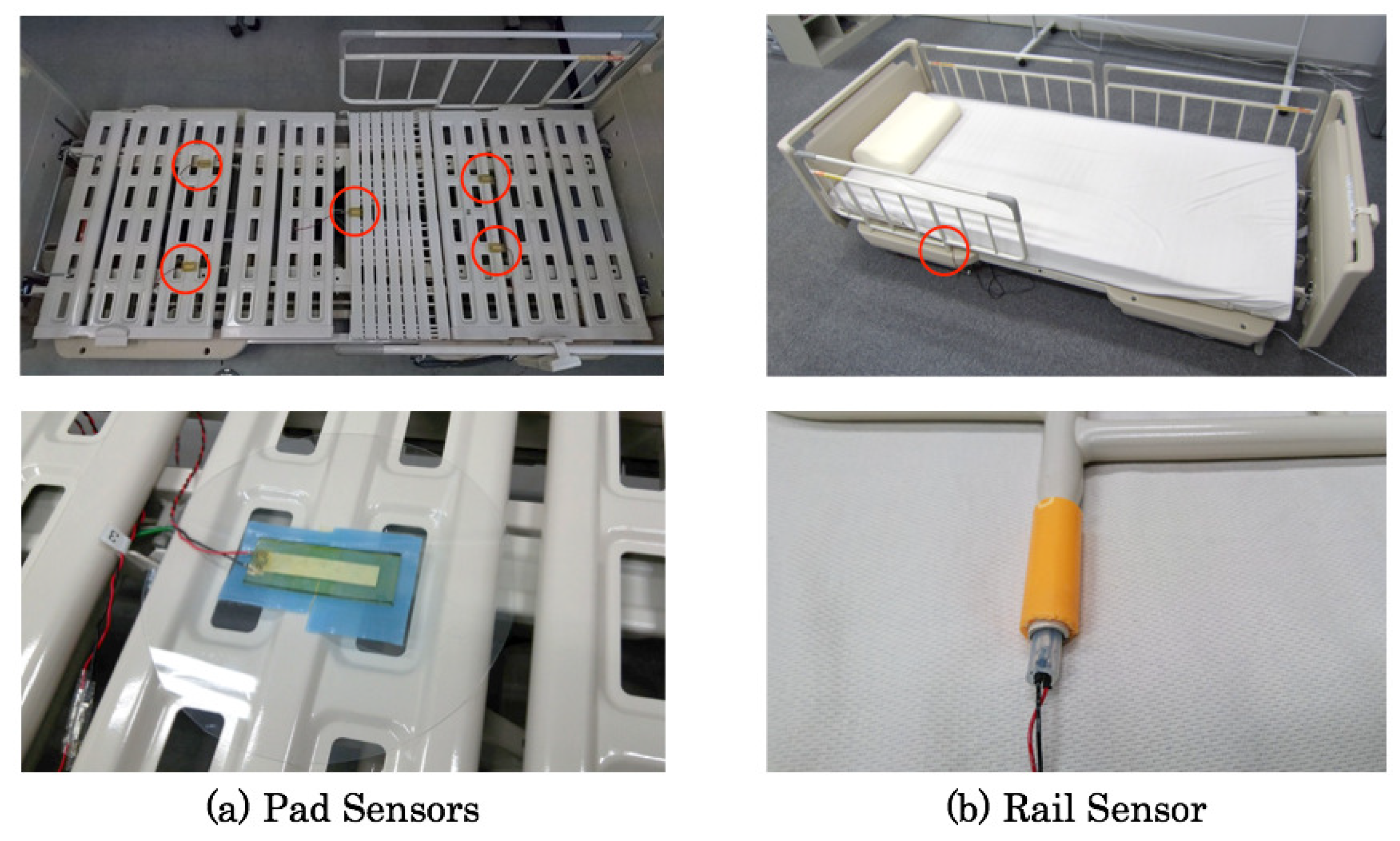

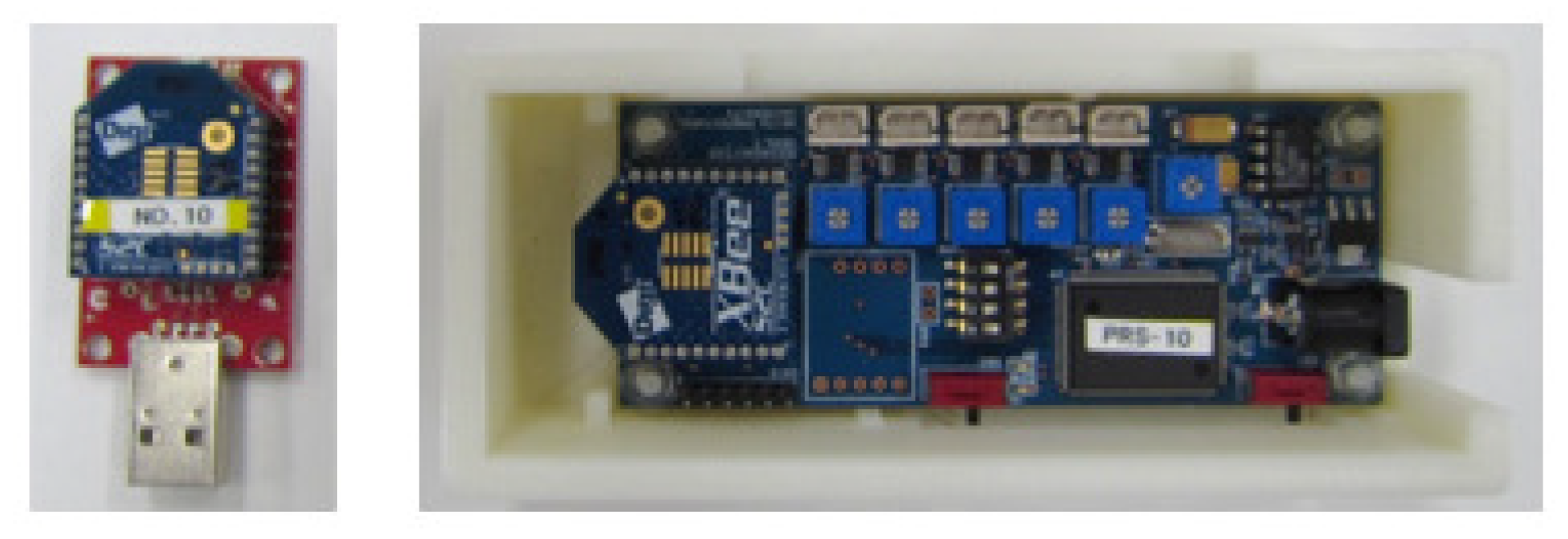

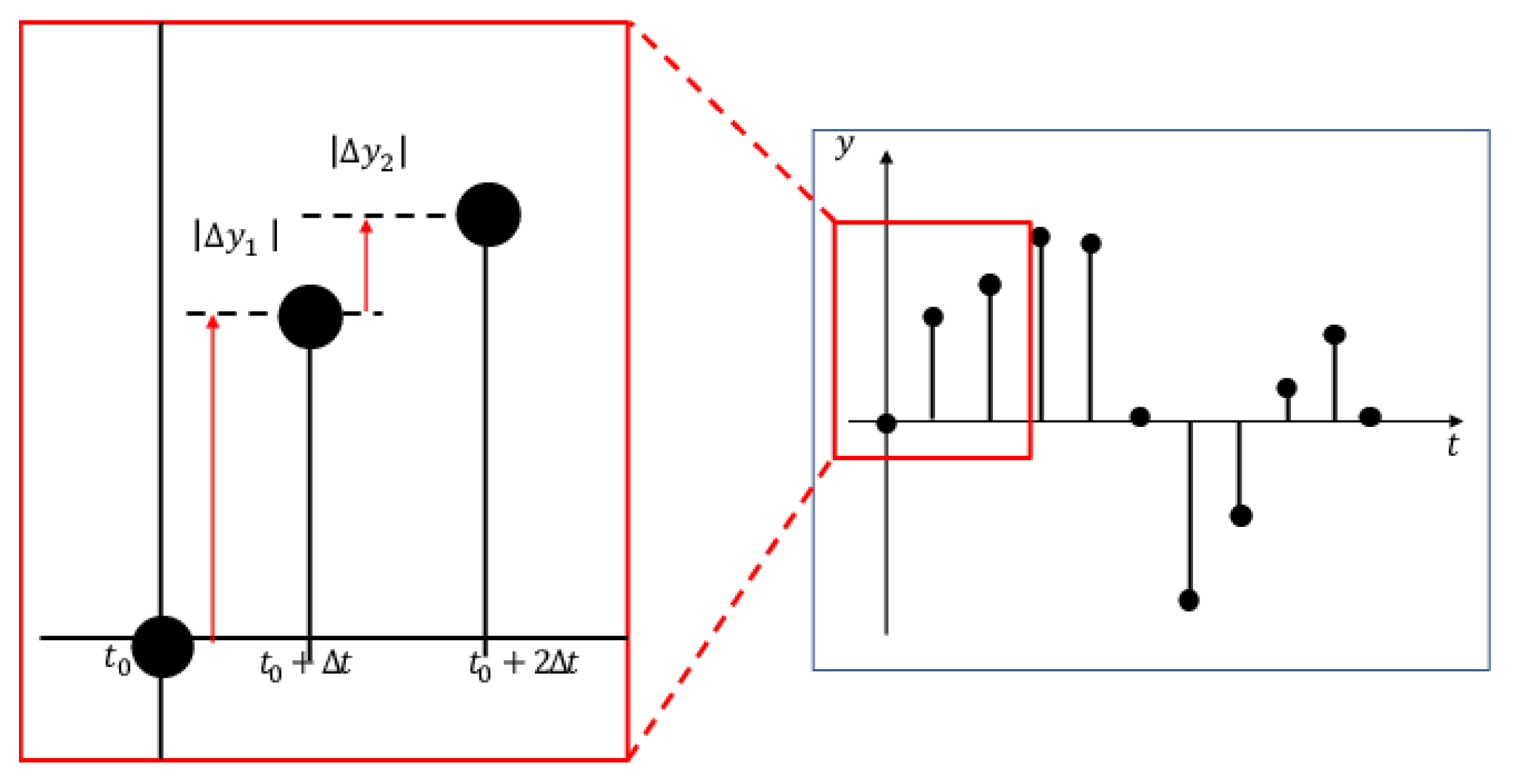

This study was conducted to develop a bed-leaving recognition sensor system that is inexpensive, convenient, and maintainable while improving QoL for care recipients. To improve recognition accuracy and reliability compared with our earlier bed-leaving sensor system [6], our novel sensor prototype comprises pad sensors installed on a bed frame and a rail sensor installed on a bedside safety rail. To evaluate our sensor system, we obtained original benchmark datasets of two types from 10 subjects: continuous datasets, with behavior transitions from sleeping to bed leaving at predefined intervals, and discontinuous datasets, with free and random movements. We compared machine learning algorithms of five types for recognizing five behavior patterns. Our earlier study [6] used a separate classifier for each subject because bed-leaving behavior patterns have subject-specific characteristics. However, with this learning strategy, the recognition accuracy was insufficient to ensure reliability for discontinuous datasets. Therefore, we developed a single classifier using the continuous datasets of all subjects. The experimental results revealed that the proposed sensor system improved recognition accuracy for both datasets.

The rest of the paper is structured as follows. Section 2 presents our originally developed sensors of two types and their measurement system. Section 3 presents our proposed method based on machine learning algorithms of five types, and Section 4 presents our original datasets obtained from ten subjects. Subsequently, Section 5 presents evaluation results, including recognition accuracies and confusion matrices for each dataset. Finally, Section 6 concludes the paper and highlights future work. We proposed this basic method with our originally developed sensors of two types in conference proceedings [7] and presented the basic characteristics of the pad sensors in [8]. In this paper, we describe detailed results and discussion in Section 5.

4. Datasets

4.1. Target Behavior Patterns

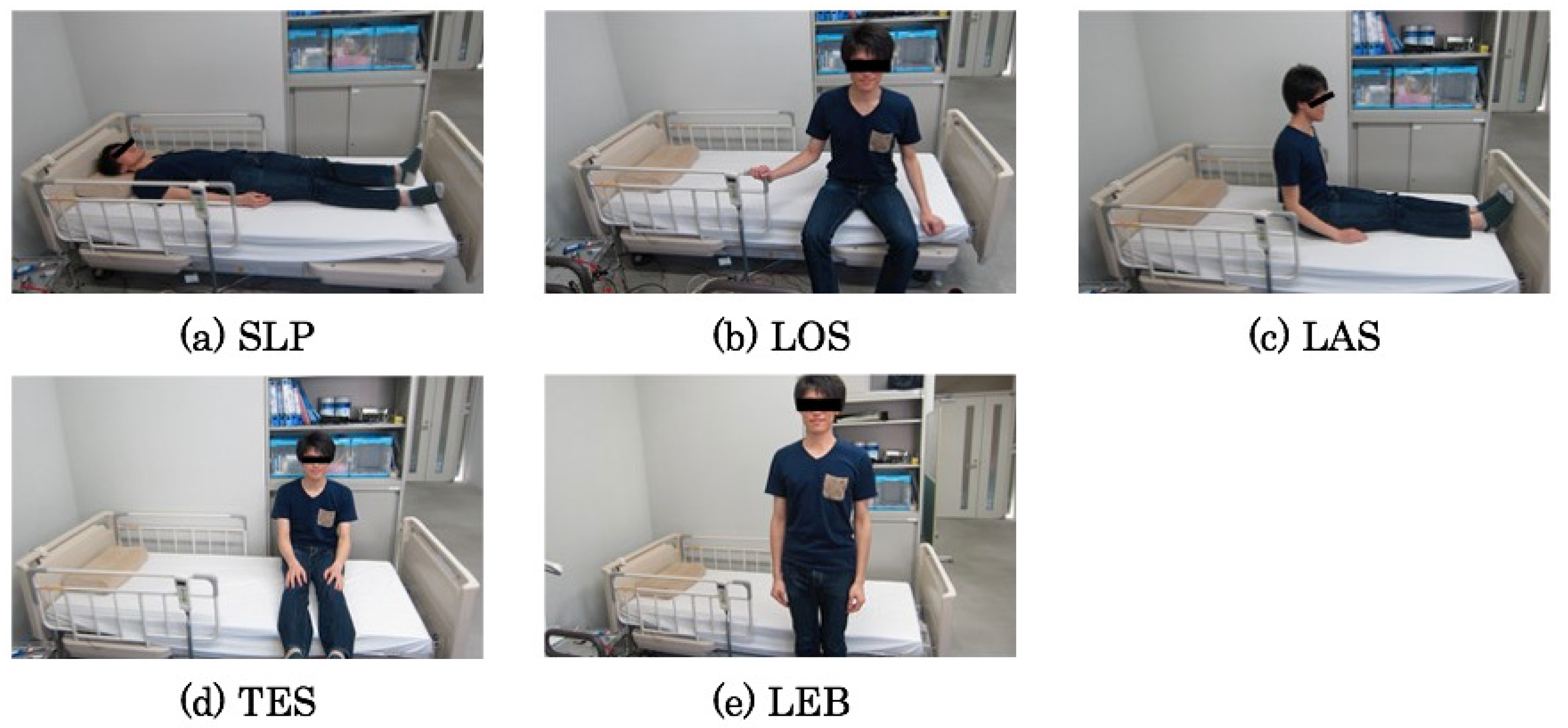

Figure 12 depicts photographs of each pose for the target behavior patterns. The features and expected sensor responses of the respective patterns are as follows.

- SLP (sleeping): the subject is sleeping normally on the bed.

- LOS (longitudinal sitting): the subject is sitting longitudinally on the bed after rising.

- LAS (lateral sitting): the subject is sitting laterally on the bed after turning the body from longitudinal sitting.

- TES (terminal sitting): the subject is sitting at the edge (terminal position) of the bed, trying to leave it. Rapid and correct detection is necessary because this is the final state before the subject leaves the bed.

- LEB (left the bed): the subject has left the bed. Sensor responses disappear in this state, and they would also disappear if a subject lost consciousness or faced a life-threatening crisis. Such circumstances require monitoring devices such as electrocardiographs and are beyond our recognition targets.

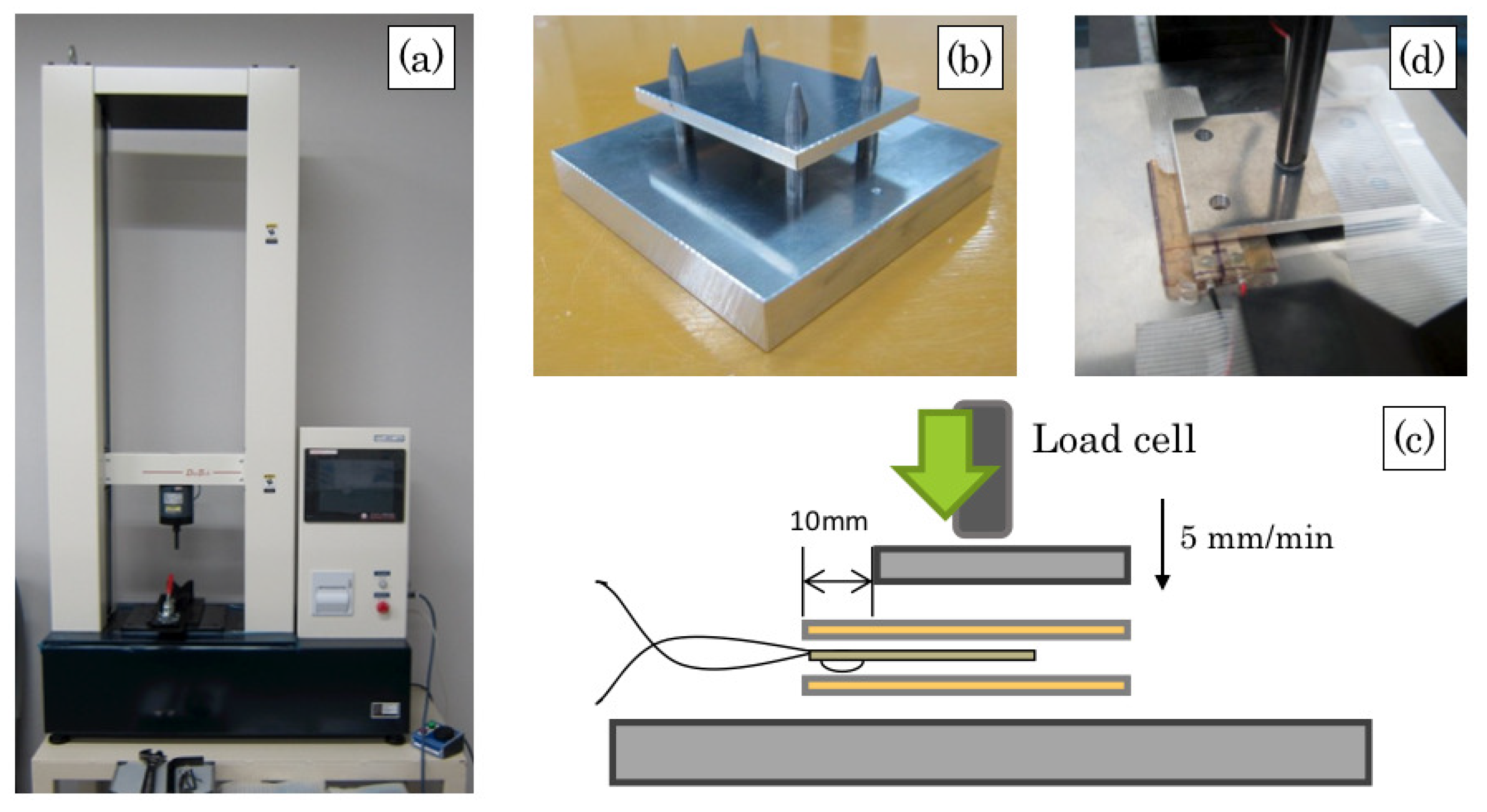

4.2. Datasets Obtained for Conditions

We obtained bed-leaving behavior pattern datasets in an experimental room that simulated a clinical site. We used an electrically actuated bed (KA-36121R; Paramount Bed Co., Ltd.) equipped with three actuators for reclining the back and foot sections and for adjusting its height. We did not use the back-reclining function during data acquisition so as to avoid changing the load pattern on the bed. Two safety rails were attached, restricting the subject's route for leaving the bed to one side.

The subjects were 10 persons: nine men and one woman. Table 1 presents the profiles of all subjects. We set two protocols to obtain datasets with different characteristics. The first protocol comprises the same procedures as those of our earlier study [6]: each subject switched among the five behavior patterns at 20 s intervals. In this study, we call these continuous datasets (CDS). We obtained 10 sets of CDS from each subject. The data sampling rate was set to 50 Hz.
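At 50 Hz, one 20 s interval corresponds to 20 × 50 = 1000 samples. The minimal sketch below builds per-sample ground-truth labels for one CDS recording. It assumes (this is not stated in the text) that each of the five patterns is held exactly once for one 20 s interval in the order SLP, LOS, LAS, TES, LEB; the function name `cds_labels` is hypothetical.

```python
# Hypothetical sketch: per-sample GT labels for one continuous dataset (CDS) recording.
# Assumption: each pattern is held once for exactly one 20 s interval, in this order.
PATTERNS = ["SLP", "LOS", "LAS", "TES", "LEB"]
SAMPLING_RATE_HZ = 50  # data sampling rate stated in the text
INTERVAL_S = 20        # switching interval stated in the text


def cds_labels(patterns=PATTERNS, interval_s=INTERVAL_S, fs=SAMPLING_RATE_HZ):
    """Return one label per sample for a fixed-schedule recording."""
    samples_per_interval = interval_s * fs  # 20 s x 50 Hz = 1000 samples
    labels = []
    for name in patterns:
        labels.extend([name] * samples_per_interval)
    return labels


labels = cds_labels()
print(len(labels))          # 5 patterns x 1000 samples = 5000
print(labels.count("TES"))  # 1000
```

Under this assumption, one CDS recording lasts 100 s, and the label changes at every multiple of 1000 samples. If a recording actually holds a pattern for longer, only the schedule list needs to change.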

The second protocol yields behavior patterns as discontinuous datasets. In practice, the order and duration of bed-leaving behavior patterns vary with each subject's body parameters and health condition. For example, transitions from LOS to SLP without passing through LAS occurred frequently. We therefore expect that deploying our sensor system at a clinical site would yield dramatically lower recognition accuracy. As a first step toward practical application, we obtained datasets in which subjects transitioned among the basic behavior patterns without fixed sequences or time intervals. We designate these as discontinuous datasets (DDS). The data acquisition period was set to 15 min per person.

Calculating recognition accuracy for DDS requires ground-truth (GT) labels. However, assigning GT labels is laborious because each subject moved freely for 15 min. We therefore recorded video images with a depth camera for annotation and assigned GT labels to DDS manually by observing the video.
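Manual video annotation naturally produces time intervals rather than per-sample labels; a 15 min DDS at 50 Hz contains 900 × 50 = 45,000 samples. The sketch below shows one way, assumed here rather than taken from the text, to convert annotated intervals `(start_s, end_s, label)` into per-sample GT labels. The interval format and the function name `intervals_to_labels` are hypothetical; unannotated samples are left as `None`, and overlapping annotations with different labels are rejected.

```python
# Hypothetical sketch: converting manual video annotations into per-sample GT labels.
from typing import List, Optional, Tuple

Annotation = Tuple[float, float, str]  # (start_s, end_s, label); end is exclusive


def intervals_to_labels(annotations: List[Annotation], duration_s: float,
                        fs: int = 50) -> List[Optional[str]]:
    n = int(round(duration_s * fs))
    labels: List[Optional[str]] = [None] * n  # None marks unannotated samples
    for start_s, end_s, label in annotations:
        if not 0 <= start_s < end_s <= duration_s:
            raise ValueError(f"invalid interval: {(start_s, end_s, label)}")
        first = int(round(start_s * fs))
        last = int(round(end_s * fs))
        for i in range(first, last):
            # Two annotators (or two passes) disagreeing on a sample is an error.
            if labels[i] is not None and labels[i] != label:
                raise ValueError(f"overlapping annotations at sample {i}")
            labels[i] = label
    return labels


ann = [(0.0, 42.5, "SLP"), (42.5, 50.0, "LOS"), (50.0, 61.2, "SLP")]
labels = intervals_to_labels(ann, duration_s=61.2)
print(len(labels), labels[2124], labels[2125])  # 3060 SLP LOS
```

Keeping gaps as `None` makes it easy to exclude unannotated samples from the accuracy calculation instead of silently assigning them a class.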

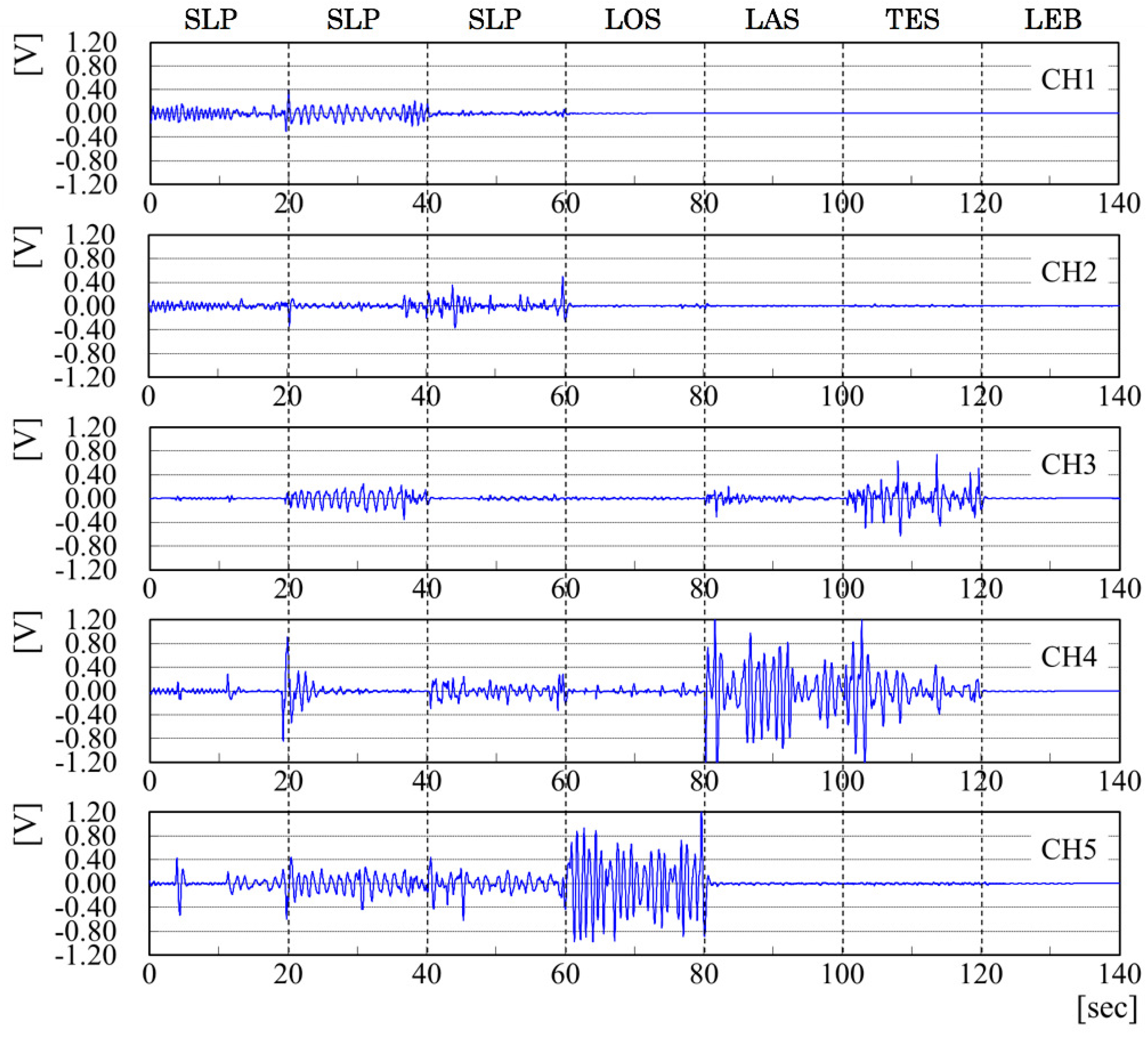

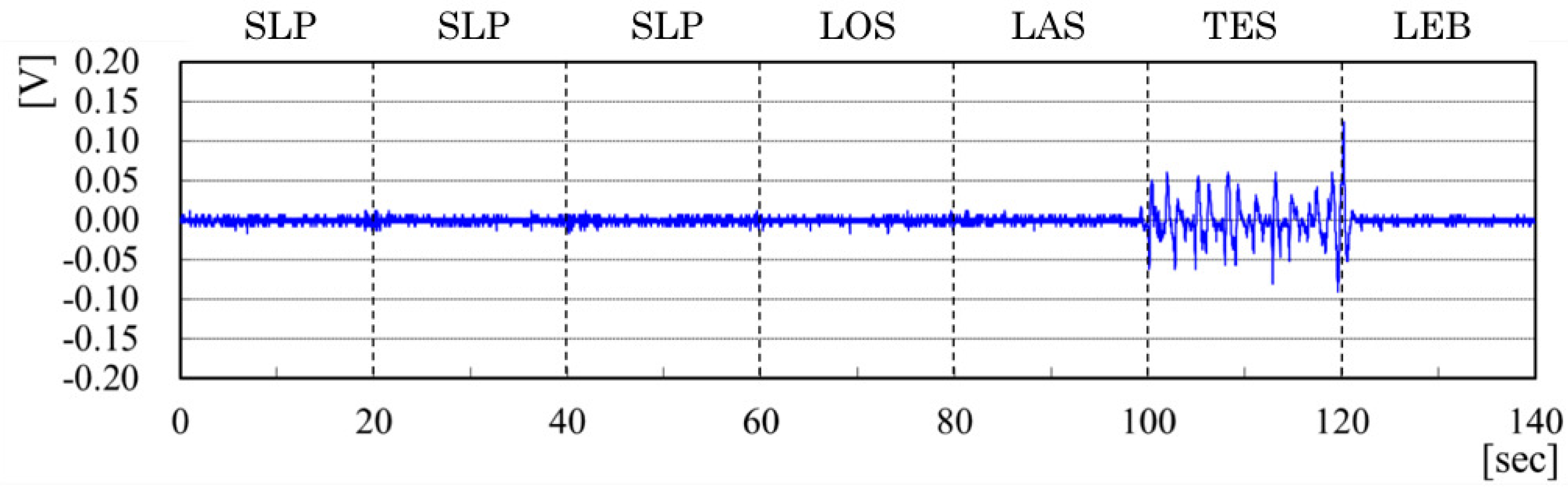

4.3. Sensor Output Signals

Figure 13 depicts output signals from the pad sensors. The vertical and horizontal axes respectively represent the output voltage and the elapsed time in seconds. The voltage range is up to

V. As time progresses, the subject changes behavior patterns in the order depicted in Figure 12. During the first 60 s, the subject was sleeping on the bed while turning over, and the output signals of each channel changed only slightly.

The output signals from CH5, which corresponds to the bed center, become high in LOS, indicating that the upper-body weight is concentrated on this channel. The output signals from CH4 are salient in LAS because the subject turns toward the lateral direction of the bed in preparation for LEB. In TES, the salient output shifts from CH4 to CH3. In LEB, the output signals disappear completely.
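The channel shifts described above (CH5 in LOS, CH4 in LAS, CH3 in TES, and silence in LEB) can be summarized by a simple descriptive quantity: which channel carries the most signal energy in each time window. The sketch below computes per-window root-mean-square (RMS) values and reports the dominant channel, or `None` when every channel is below a silence threshold. The window length, the threshold, and the function names are hypothetical; this illustrates the trend only and is not the feature set used by the classifiers in this study.

```python
# Hypothetical sketch: dominant pad channel per non-overlapping window, by RMS.
import math
from typing import Dict, List, Optional, Sequence


def window_rms(signal: Sequence[float], win: int) -> List[float]:
    """RMS of consecutive non-overlapping windows; a trailing partial window is dropped."""
    out = []
    for start in range(0, len(signal) - win + 1, win):
        chunk = signal[start:start + win]
        out.append(math.sqrt(sum(v * v for v in chunk) / win))
    return out


def dominant_channel(channels: Dict[str, Sequence[float]], win: int,
                     silence: float = 0.0) -> List[Optional[str]]:
    """Name of the highest-RMS channel per window, or None if all channels are silent."""
    rms = {name: window_rms(sig, win) for name, sig in channels.items()}
    n_windows = min(len(v) for v in rms.values())
    result: List[Optional[str]] = []
    for w in range(n_windows):
        best = max(rms, key=lambda name: rms[name][w])
        result.append(best if rms[best][w] > silence else None)
    return result


# Synthetic example: CH4 dominates, then CH3, then all channels go silent.
ch = {
    "CH3": [0.01] * 50 + [0.5] * 50 + [0.0] * 50,
    "CH4": [0.4] * 50 + [0.02] * 50 + [0.0] * 50,
    "CH5": [0.05] * 100 + [0.0] * 50,
}
print(dominant_channel(ch, win=50, silence=0.01))  # ['CH4', 'CH3', None]
```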

Figure 14 depicts output signals from the rail sensor. No salient signals appear in SLP, LOS, or LAS. The output signals are noticeable in TES, and no output signals appear after the boundary between TES and LEB. This tendency indicates that the rail sensor output is selective for TES compared with the other behavior patterns.

5. Evaluation Experiment

5.1. Evaluation Criteria

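Let $N_{\mathrm{t}}$ and $N_{\mathrm{c}}$ respectively be the total number of test signals and the number of test signals whose recognition results match their GT labels. As the evaluation criterion, the recognition accuracy $R$ for a test dataset is defined as

$$R = \frac{N_{\mathrm{c}}}{N_{\mathrm{t}}} \times 100.$$

Herein, we denote the mean of $R$ as $\bar{R}$. Moreover, we denote $R$ for SLP, LOS, LAS, TES, and LEB as $R_{\mathrm{SLP}}$, $R_{\mathrm{LOS}}$, $R_{\mathrm{LAS}}$, $R_{\mathrm{TES}}$, and $R_{\mathrm{LEB}}$, respectively.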
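The accuracy criterion is the percentage of test signals whose recognized label equals the GT label, computed either over all test signals or over only those signals whose GT label is a given behavior pattern. The sketch below implements both. Taking the mean over the five behavior patterns (`mean_over_patterns`) is an assumption made for illustration, because the text does not state over what the mean is taken; all function names are hypothetical.

```python
# Hypothetical sketch: overall and per-pattern recognition accuracy (in %).
from typing import Dict, Sequence

PATTERNS = ("SLP", "LOS", "LAS", "TES", "LEB")


def recognition_accuracy(pred: Sequence[str], gt: Sequence[str]) -> float:
    """Percentage of test signals whose recognized label equals the GT label."""
    if len(pred) != len(gt) or not gt:
        raise ValueError("pred and gt must be non-empty and of equal length")
    correct = sum(p == g for p, g in zip(pred, gt))
    return 100.0 * correct / len(gt)


def per_pattern_accuracy(pred: Sequence[str], gt: Sequence[str],
                         patterns=PATTERNS) -> Dict[str, float]:
    """Accuracy restricted to the test signals whose GT label is each pattern."""
    result = {}
    for name in patterns:
        idx = [i for i, g in enumerate(gt) if g == name]
        if idx:  # patterns absent from this test set are skipped
            result[name] = recognition_accuracy([pred[i] for i in idx],
                                                [gt[i] for i in idx])
    return result


def mean_over_patterns(per_pattern: Dict[str, float]) -> float:
    """Assumed definition of the mean: unweighted average over patterns."""
    return sum(per_pattern.values()) / len(per_pattern)


gt = ["SLP", "SLP", "LOS", "LOS", "TES", "TES", "LEB", "LEB"]
pred = ["SLP", "SLP", "LOS", "LAS", "TES", "LEB", "LEB", "LEB"]
print(recognition_accuracy(pred, gt))  # 75.0
print(per_pattern_accuracy(pred, gt))  # {'SLP': 100.0, 'LOS': 50.0, 'TES': 50.0, 'LEB': 100.0}
```

Note that the overall accuracy and the unweighted per-pattern mean coincide here only because every pattern has the same number of test signals; with imbalanced patterns they generally differ.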

We used K-fold cross-validation to evaluate the results of both the machine-learning and evolutionary-learning approaches. We set K based on the results of earlier studies [20,21].
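Because the value of K is not given in this section, the sketch below takes it as a parameter. It is a plain-Python K-fold splitter, written here as an illustration rather than as the authors' implementation: each item lands in exactly one test fold, and the fold sizes differ by at most one. Whether the folds were formed over individual signals, recordings, or subjects is not stated; the sketch simply splits item indices.

```python
# Hypothetical sketch: K-fold train/test index splits in plain Python.
import random
from typing import Iterator, List, Tuple


def k_fold_indices(n: int, k: int, shuffle: bool = True,
                   seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    if not 2 <= k <= n:
        raise ValueError("k must satisfy 2 <= k <= n")
    order = list(range(n))
    if shuffle:
        random.Random(seed).shuffle(order)  # reproducible shuffle
    # The first n % k folds receive one extra item.
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = sorted(order[start:start + size])
        test_set = set(test)
        train = [i for i in range(n) if i not in test_set]
        yield train, test
        start += size


for train, test in k_fold_indices(10, 3):
    print(len(train), len(test))  # 6 4, then 7 3, then 7 3
```

When the items are overlapping or adjacent windows from the same recording, splitting by recording (passing recording indices as the items) keeps near-identical neighboring windows from appearing in both training and test folds.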

We conducted four evaluation experiments using behavior pattern datasets of two types. Table 2 summarizes the experimental details.

5.2. Comparison Results of Learning Algorithms

We evaluated the recognition accuracy of machine-learning algorithms using CDS. Table 3 presents the comparison results. We used CPNs as the discriminator in our earlier study [6]. The mean recognition accuracy using CPNs was 75.4%, the second lowest of the six algorithms. In contrast, the mean recognition accuracy using RF was 91.1%, the highest. For all behavior patterns except LEB, the recognition accuracies using RF were higher than those of the other algorithms. For LEB, LSVM provided the highest recognition accuracy.

As a tendency common to all algorithms, achieved the highest accuracy. In contrast, , , and are below 90.0%. Because our system is intended to predict bed-leaving behavior, the recognition accuracies for these three behavior patterns must be high. Therefore, we examine measures to improve them from the viewpoints of datasets and discriminators.

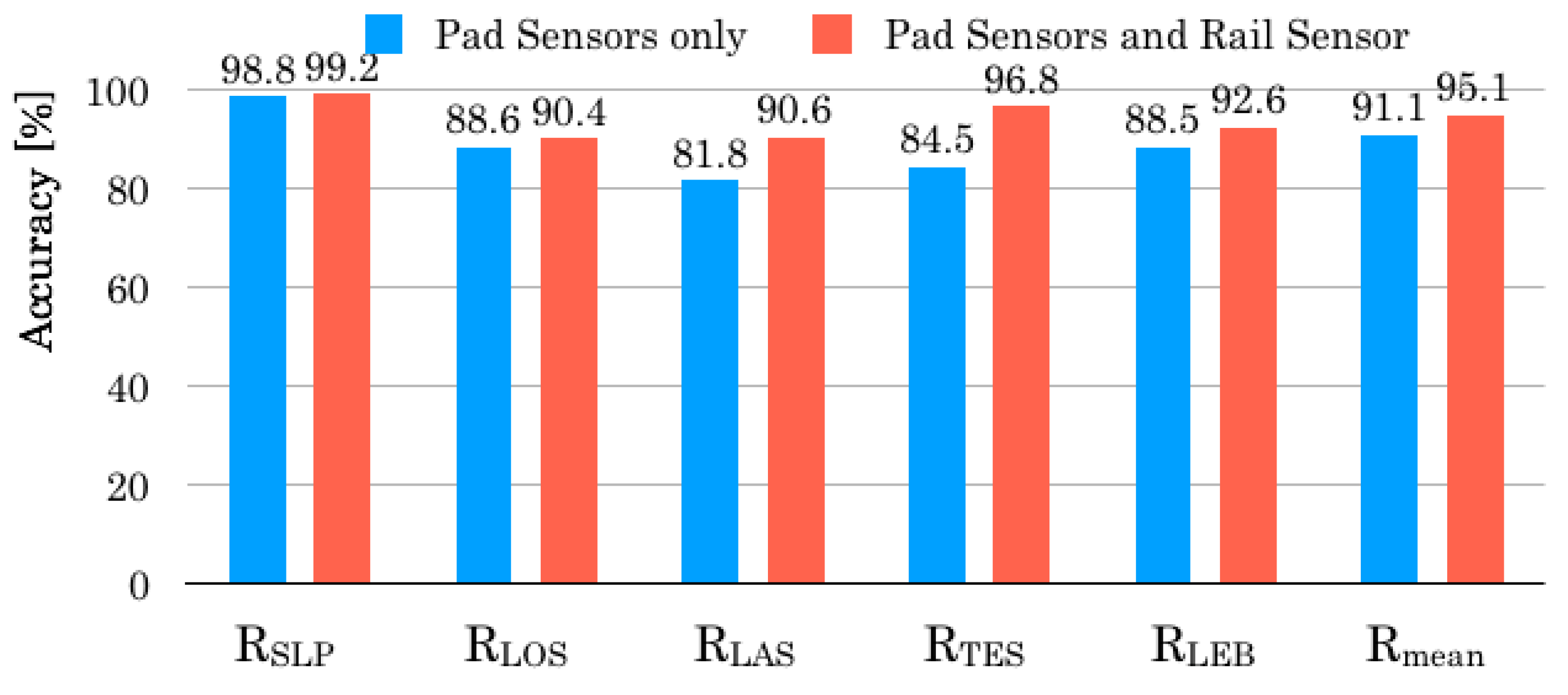

5.3. Experimental Results for CDS

Using CDS, we evaluated the capabilities of our originally developed sensors of two types. Figure 15 compares the recognition accuracies obtained using the pad sensors alone and using the combined pad and rail sensors. The mean recognition accuracy of the combined sensors is 4.0 percentage points higher than that of the pad sensors alone. The recognition accuracies of the individual behavior patterns improved by 0.4 to 12.3 percentage points, and TES exhibits the largest improvement. This result demonstrates that the rail sensor, which specifically detects the grasping of a safety rail in TES, contributes to improving the overall recognition accuracy.

As shown in Figure 14, the output voltage from the piezoelectric film in the rail sensor occasionally exceeded 0.1 V when a subject was in TES. We infer that the rail sensor alone could provide sufficient recognition accuracy if TES were the only target. However, we consider that false recognition occurs between TES and LEB, especially for an immediate transition from TES to LEB. Although sensor signals should be approximately 0 V in LEB, sharp signals are output when a subject leaves the bed while holding or shaking a safety rail, which amplifies vibration. Therefore, we infer that combining the rail sensor with the pad sensors is best for practical use because it avoids this problem of a rail sensor used alone. Moreover, false recognition as LEB can be avoided because the combined sensors yielded relatively large improvements in recognition accuracy for LAS, TES, and LEB.
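The argument above can be illustrated with two hand-written rules. A rail-only rule flags TES whenever the rail output exceeds 0.1 V, so a rail shaken while the subject is already leaving also triggers it. A combined rule additionally requires pad activity, which is absent in LEB. Both rules, the pad threshold `PAD_ACTIVITY_V`, and the function names are hypothetical; they are not the classifier used in this study, which learns from both sensor types.

```python
# Hypothetical sketch: why pad activity helps reject rail spikes that occur during LEB.
from typing import Sequence

RAIL_THRESHOLD_V = 0.1  # rail output occasionally exceeded 0.1 V in TES (from the text)
PAD_ACTIVITY_V = 0.05   # hypothetical: pad peak indicating someone is still on the bed


def peak_abs(window: Sequence[float]) -> float:
    return max((abs(v) for v in window), default=0.0)


def rail_only_tes(rail: Sequence[float]) -> bool:
    """Rail-only rule: flag TES whenever the rail output exceeds the threshold."""
    return peak_abs(rail) > RAIL_THRESHOLD_V


def combined_tes(rail: Sequence[float], pads: Sequence[Sequence[float]]) -> bool:
    """Combined rule: also require pad activity, so a rail spike in LEB is not flagged."""
    on_bed = any(peak_abs(ch) > PAD_ACTIVITY_V for ch in pads)
    return rail_only_tes(rail) and on_bed


rail_spike = [0.0, 0.15, -0.12, 0.0]
silent_pads = [[0.0, 0.01, 0.0, 0.0]] * 3                   # subject already off the bed
active_pads = [[0.0, 0.2, 0.1, 0.0], [0.0] * 4, [0.0] * 4]  # subject still sitting
print(rail_only_tes(rail_spike))                 # True
print(combined_tes(rail_spike, silent_pads))     # False
print(combined_tes(rail_spike, active_pads))     # True
```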

We examine the detailed recognition results using confusion matrices. Table 4 and Table 5 respectively present the confusion matrices for the pad sensors and the combined sensors. For TES, which had the highest recognition accuracy, the number of false recognition instances decreased for all behavior patterns after the rail sensor was added. In particular, the number of false recognitions as LEB decreased from 30 signals to 4 signals. We infer that correct discrimination between TES and LEB led to the improved recognition accuracy.
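Counts such as "false recognitions of TES as LEB" are single off-diagonal cells of a confusion matrix. The sketch below builds such a matrix from GT and recognized labels and derives per-pattern accuracy from each row. It assumes that rows correspond to GT labels and columns to recognized labels; the text does not state the orientation of Tables 4 and 5, and the function names are hypothetical.

```python
# Hypothetical sketch: confusion matrix (rows = GT, columns = recognized) and row accuracy.
from typing import List, Sequence

PATTERNS = ("SLP", "LOS", "LAS", "TES", "LEB")


def confusion_matrix(gt: Sequence[str], pred: Sequence[str],
                     patterns=PATTERNS) -> List[List[int]]:
    index = {name: i for i, name in enumerate(patterns)}
    m = [[0] * len(patterns) for _ in patterns]
    for g, p in zip(gt, pred):
        m[index[g]][index[p]] += 1
    return m


def row_accuracy(m: List[List[int]]) -> List[float]:
    """Per-pattern accuracy (%): diagonal count divided by the row total (NaN if empty)."""
    return [100.0 * row[i] / sum(row) if sum(row) else float("nan")
            for i, row in enumerate(m)]


gt = ["TES"] * 5 + ["LEB"] * 3
pred = ["TES", "TES", "TES", "LEB", "LAS", "LEB", "LEB", "SLP"]
m = confusion_matrix(gt, pred)
tes, leb = PATTERNS.index("TES"), PATTERNS.index("LEB")
print(m[tes])          # [0, 0, 1, 3, 1]
print(m[tes][leb])     # 1 signal of TES recognized as LEB
print(row_accuracy(m)[tes])  # 60.0
```

The same row-wise view applies to a single subject: a row in which only 2 of 179 LEB signals lie on the diagonal gives a per-pattern accuracy of about 1.1%.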

Although the rail sensor was intended to improve the recognition accuracy for TES, the recognition accuracy for LAS also improved from 81.8% to 90.6% as a secondary effect. False recognition instances decreased for all behavior patterns except SLP; in particular, false recognitions as TES decreased to 26. In other words, the improved recognition of TES after the rail sensor was added reduced false recognitions of LAS, and the resulting increase in correct recognitions improved the recognition accuracy for LAS. We demonstrated that adding a sensor that reliably recognizes a single posture also improves the recognition of other postures. The combined sensors therefore have clear benefits for bed-leaving behavior recognition compared with the pad sensors alone.

5.4. Experimental Results for DDS

Table 6 lists the recognition accuracy of each subject for DDS. Compared with the CDS results depicted in Figure 15, recognition accuracies were lower for all five behavior patterns. In particular,

was drastically lower. This result reveals that the recognition accuracy, except for

, which was the highest among the five behavior patterns, was strongly affected by the randomness of DDS.

The recognition accuracies of individual subjects ranged from 43.3% to 95.3%. Seven subjects were above the mean accuracy of 75.2%, and the remaining three were below it. Thus, markedly lower accuracy for specific subjects decreased the overall recognition accuracy. We infer that this tendency is influenced by individual differences in behavior patterns. Each subject performed predetermined behavior patterns in CDS but free behavior patterns in DDS. We consider that the recognition accuracy was significantly lower because behavior patterns varied among the subjects in DDS.

Table 7 presents the confusion matrix for all subjects. Table 8 presents the confusion matrix for Subject C, who had the lowest recognition accuracy. Numerous signals were falsely recognized as SLP. In particular, only 2 of 179 LEB signals were recognized correctly, and the others were falsely recognized as SLP. This trend indicates that SLP and the other behavior patterns were not distinguished. In DDS, the recognition accuracy was dramatically lower for particular subjects. We attribute this to differences in subject behavior between the learning datasets and the test datasets. Therefore, we consider that the recognition accuracy would improve if the learning datasets contained more diversity.

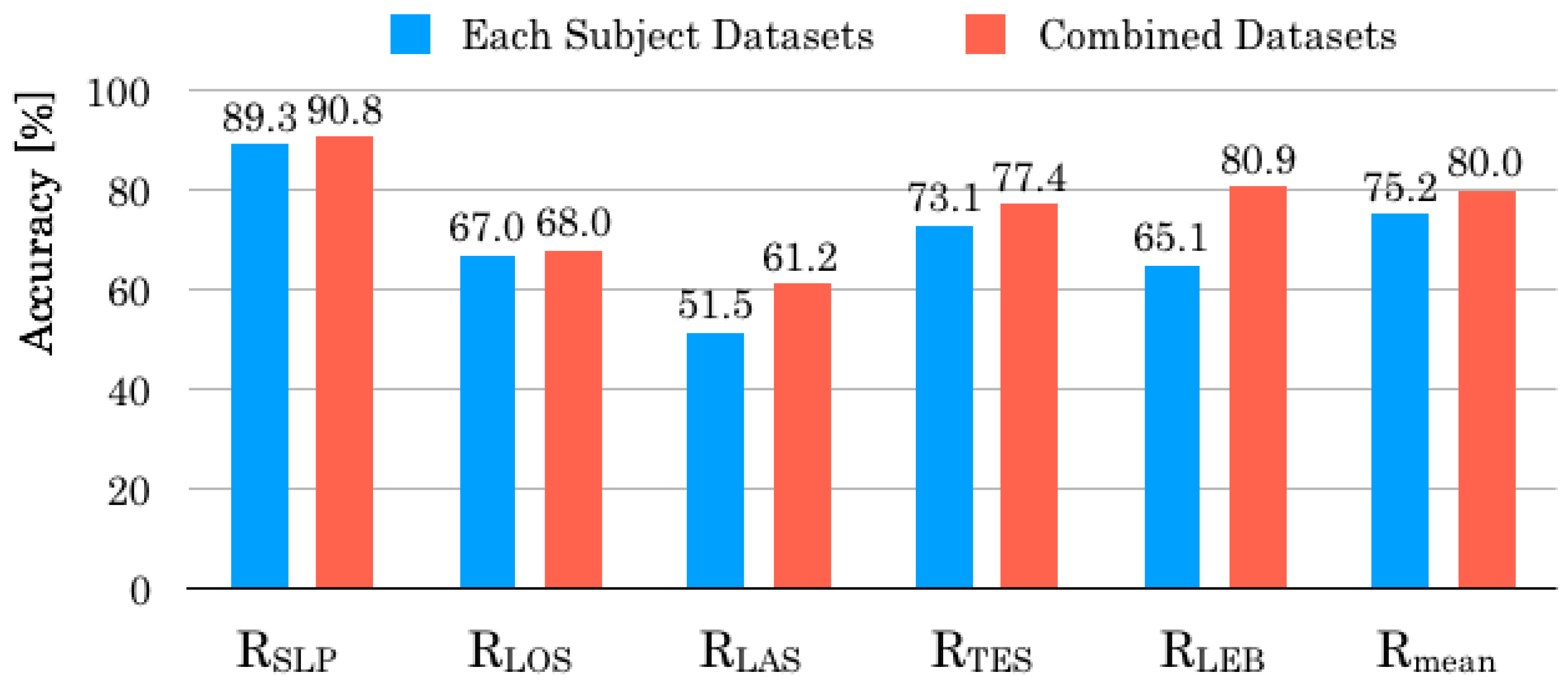

5.5. Integration of Learning Datasets

When applying our system at a clinical site, preparing learning datasets for each subject might be troublesome and time-consuming. In addition, because generalization dropped in DDS, preserving accuracy has become a problem of system reliability. Therefore, we attempt to construct a generic classifier by combining the learning datasets of all subjects listed in Table 1. For this experiment, we used CDS for learning and DDS for validation.
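The difference between the two learning strategies (one classifier per subject versus one generic classifier trained on every subject's CDS, both validated on each subject's DDS) can be sketched as follows. A nearest-centroid classifier is swapped in as a simple stand-in for RF so that the block runs without external libraries; the data structures, the toy feature vectors, and the function names are all hypothetical.

```python
# Hypothetical sketch: per-subject vs. pooled (generic) training, evaluated per subject on DDS.
# A nearest-centroid classifier stands in for RF purely for illustration.
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]  # (feature vector, label)


def fit_centroids(samples: Sequence[Sample]) -> Dict[str, List[float]]:
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = defaultdict(int)
    for x, y in samples:
        if y not in sums:
            sums[y] = [0.0] * len(x)
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict(centroids: Dict[str, List[float]], x: Sequence[float]) -> str:
    return min(centroids, key=lambda y: math.dist(centroids[y], x))


def accuracy(centroids, samples: Sequence[Sample]) -> float:
    return 100.0 * sum(predict(centroids, x) == y for x, y in samples) / len(samples)


def evaluate(cds: Dict[str, List[Sample]], dds: Dict[str, List[Sample]],
             pooled: bool) -> Dict[str, float]:
    """Per-subject DDS accuracy; pooled=True trains one model on every subject's CDS."""
    generic = fit_centroids([s for samples in cds.values() for s in samples]) if pooled else None
    scores = {}
    for subj, test_samples in dds.items():
        model = generic if pooled else fit_centroids(cds[subj])
        scores[subj] = accuracy(model, test_samples)
    return scores


# Toy data: subject B's CDS lacks LEB, so only the pooled model can recognize B's LEB.
cds = {"A": [([0.0, 0.0], "SLP"), ([10.0, 10.0], "LEB")], "B": [([1.0, 1.0], "SLP")]}
dds = {"A": [([0.5, 0.5], "SLP"), ([9.0, 9.0], "LEB")],
       "B": [([1.0, 0.5], "SLP"), ([9.5, 9.5], "LEB")]}
print(evaluate(cds, dds, pooled=False))  # {'A': 100.0, 'B': 50.0}
print(evaluate(cds, dds, pooled=True))   # {'A': 100.0, 'B': 100.0}
```

The toy data mirrors the reasoning in the text: a learning set that lacks the diversity seen at test time cannot recognize unseen behavior, and pooling subjects supplies it. It does not reproduce the reported results.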

Table 9 presents the recognition accuracies obtained before and after the integration of learning datasets. Recognition accuracies improved for six of the ten subjects, notably for Subjects C and G. Although the recognition accuracies of four subjects dropped, the drops for three of them were at most 2.0 percentage points. However, for Subject E, the mean recognition accuracy decreased by 5.6 percentage points, with particularly steep drops in

and

.

Figure 16 compares the recognition accuracy for each behavior pattern. All recognition accuracies improved with the integrated datasets. In particular,

improved by 15.8 percentage points.

Table 10 presents the confusion matrix for all subjects after the integration of learning datasets. Compared with the results in Table 7, the numbers of false recognition instances were lower for all behavior patterns. In particular, the numerous false recognitions involving SLP in Table 7 were reduced in Table 10. Moreover, except for LAS, false recognitions occurred mostly in behavior patterns adjacent to the GT labels. We consider that recognizing intermediate states, in which the body is moving between two neighboring behavior patterns, is challenging. To reduce false recognitions, we infer that a method is necessary that maintains the previous state until the recognition becomes stable whenever the present recognition result differs from the previous one. In contrast, false recognitions of LAS were distributed evenly among the other four behavior patterns. This tendency suggests that LAS is difficult to distinguish from the other behavior patterns.
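The stabilization idea described above (keep the previous state until a different recognition result persists) can be sketched as a simple hold rule: the output changes only after the same new label has been recognized `min_run` times in a row. The parameter `min_run` and the function name are hypothetical, and this is one possible realization rather than the authors' method.

```python
# Hypothetical sketch: hold the previous state until a new label is stable for min_run steps.
from typing import Iterable, List, Optional


def hold_until_stable(raw: Iterable[str], min_run: int = 3) -> List[str]:
    out: List[str] = []
    current: Optional[str] = None
    candidate: Optional[str] = None
    run = 0
    for label in raw:
        if current is None:
            current = label              # the first recognition is accepted directly
        elif label == current:
            candidate, run = None, 0     # a return to the current state cancels the candidate
        else:
            if label == candidate:
                run += 1
            else:
                candidate, run = label, 1
            if run >= min_run:           # the new label has persisted long enough
                current, candidate, run = label, None, 0
        out.append(current)
    return out


raw = ["SLP", "SLP", "LOS", "SLP", "LOS", "LOS", "LOS", "LOS"]
print(hold_until_stable(raw, min_run=3))
# ['SLP', 'SLP', 'SLP', 'SLP', 'SLP', 'SLP', 'LOS', 'LOS']
```

The isolated LOS at the third step is suppressed, but a genuine transition is reported `min_run - 1` steps late. Because TES requires rapid detection, `min_run` would need to be kept small, or set per transition, in a practical system.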

By integrating the learning datasets of all subjects, we not only maintained generalization performance for DDS but also substantially reduced false recognition. Although recognition accuracy improved overall, several subjects still showed low recognition accuracy. We conclude that this learning strategy does not guarantee improved recognition accuracy for every subject. To improve this system, we consider the following prerequisites important: collecting datasets from many more subjects to improve generalization capability, switching learning datasets according to subject profiles such as height or weight, performing incremental learning without stopping the system until sufficient accuracy is obtained, and temporarily constructing learning datasets specialized to each subject.

5.6. Discussion

In this study, we developed a sensor system that is inexpensive, convenient, and maintainable while improving QoL for care recipients. As described in the Introduction, a camera used as a bed-monitoring sensor can provide a low-cost system that obtains much information from subjects. However, predicting behavior patterns from images remains challenging, even with state-of-the-art computer vision technologies. For example, the deep-learning-based OpenPose [22] does not handle sleeping or lying positions. Therefore, medical staff members must observe the images directly. Moreover, aspects of human rights and QoL must be considered. In particular, a few operators cannot monitor numerous subjects simultaneously, and bed-leaving behavior patterns cannot be recognized from sensor responses alone, even with detailed analyses, because behavior patterns differ among people. Furthermore, camera monitoring imposes a mental load on patients because they feel as though they are under surveillance day and night. However, we have not yet evaluated this sensor system at hospitals or care facilities. We would like to conduct subjective and objective evaluations to validate our sensor system in a clinical environment without the use of cameras.

6. Conclusions

This paper presented a bed-leaving behavior recognition system that comprises pad sensors installed on a bed, a rail sensor inserted in a safety rail, and a behavior pattern recognizer based on machine learning algorithms. We obtained benchmark datasets of continuous and discontinuous behavior patterns from 10 subjects. The experimental results revealed that RF achieved the highest recognition accuracy on our benchmark datasets. Compared with the results obtained using CPNs in our earlier study, the recognition accuracies improved by 20.7% for LOS and 21.9% for TES. After the rail sensor was added to the pad sensors, the mean recognition accuracy improved by 4.0 percentage points, including a 12.3 percentage point improvement for TES. Because of differences in behavior pattern transitions, the mean recognition accuracy decreased by 22.9 percentage points for the discontinuous datasets. To improve the generalization of our system, we combined the datasets of all subjects for learning. The mean recognition accuracy improved by 4.8 percentage points, with considerable improvements for two subjects.

In future work, we aim to apply our proposed sensor system at clinical sites such as care facilities or the homes of seniors living alone, providing security and safety monitoring while maintaining QoL and privacy. We will increase the number of subjects to achieve stable detection and to expand the application range of our method. Additionally, we must demonstrate the reliability of the system through long-term monitoring.