1. Introduction

Human–machine interactions rely on human behavior [1]. Behavioral biometrics can prove effective in situations where a person’s mood, emotions, or intent are to be identified. While the majority of biometric research focuses on behavioral biometrics such as voice and gait [2], as well as the enhancement of accuracy through information fusion [3,4], this article presents the most comprehensive study to date on the use of aesthetic-based human traits expressed through human–machine interaction for biometric identification. In the domain of behavioral biometrics, social-behavioral biometrics utilizes a person’s interpersonal interactions, dispositions, and attitudes expressed through online media and communications as features [5,6]. Social network users exhibit many unique features through daily communications [7]. One such feature is an individual’s visual aesthetic preference, which can be described as the principles or criteria that represent one’s judgment of visual beauty [8]. Research on the identification of individuals based on their visual aesthetics, essentially their visual preferences, emerged very recently [9,10,11]. With knowledge of an individual’s visual preference from a selection of images, corresponding features can be retrieved, which form the person’s specific visual aesthetic authentication template. This is the basis of visual aesthetic identification. The present research is a significantly extended version of a conference paper [12].

As a relatively new domain of research, visual aesthetic identification shows very high potential. Human–machine interaction often includes not only work-related tasks but also online social activities, interactions with family members, recreation, and more. Among them, sharing photographs, discussing art, and expressing the liking of posted images are common expressions of human behavior. However, understanding these behaviors has been notoriously difficult due to the numerous visual features that are present in the images. During the creation of user authentication templates, it is important to use the most discriminatory features for classification. In pattern recognition, a feature is a characteristic that represents some data of an observation. Although what is considered a good feature depends heavily on the problem domain, many common techniques, such as normalization, selection, extraction, and expansion, aim to improve the efficacy of feature data. Highly correlated or otherwise non-discriminatory features can decrease the accuracy and speed of the classifier, with the severity depending on the model. The most recent work [11] used 861 original features, reduced to 700 Principal Components (PC) using Principal Component Analysis (PCA). Although the accuracy obtained was higher than in the previous works, a further increase in accuracy and a reduction of template generation time were outlined as future challenges. A reduced feature set, typically obtained through feature selection or extraction techniques [13], can lower these times.

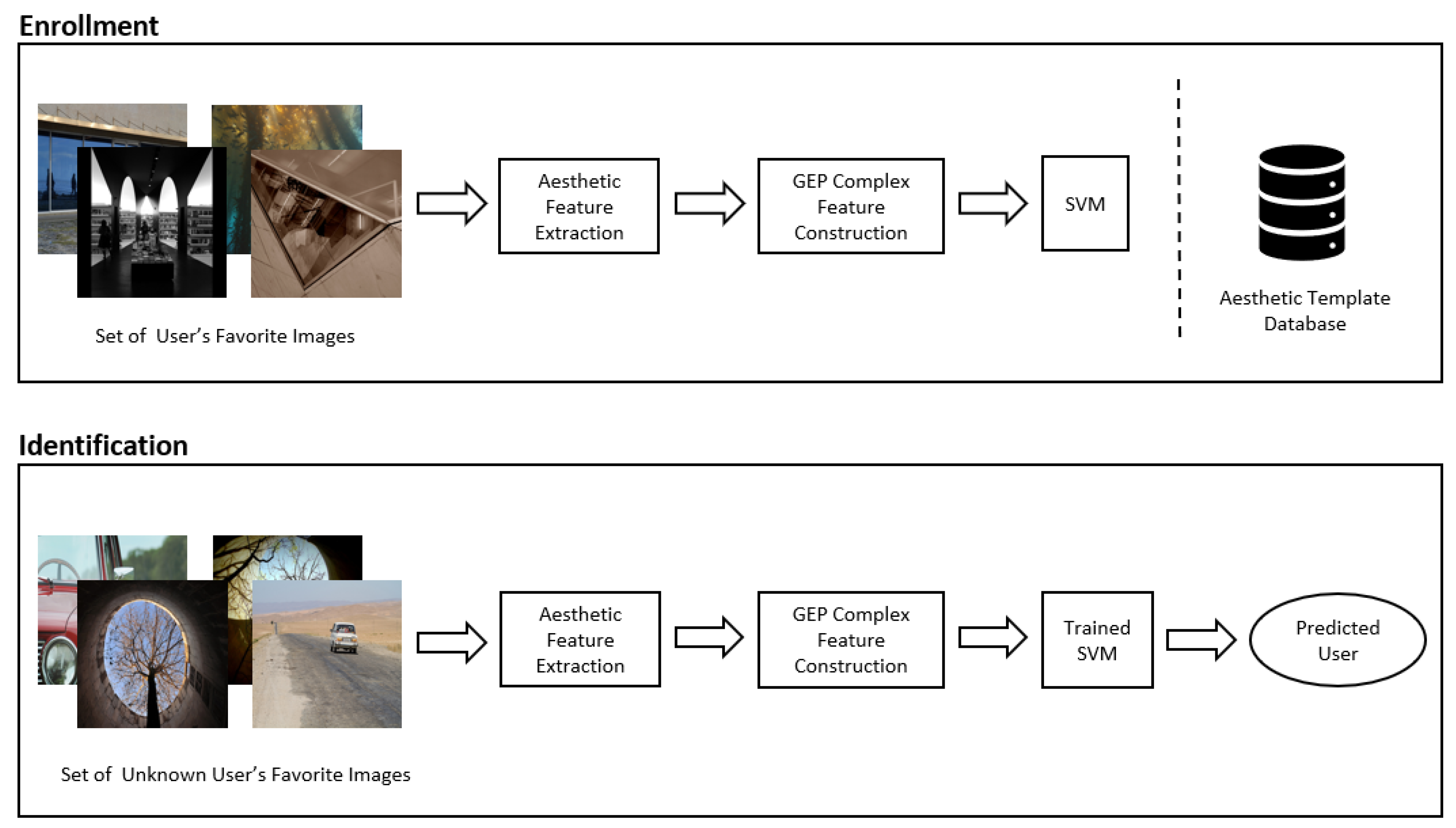

In this paper, we apply Gene Expression Programming (GEP) to reduce feature dimensionality and increase classifier accuracy in a new identification model for visual aesthetic identification. Gene expression programming is a stochastic, meta-heuristic approach that utilizes structured gene trees to represent generated candidate solutions. The general structure of a GEP model follows other genetic approaches that use the fundamental principles of evolution: selection, mutation, and crossover (also called recombination). Through the use of program generations, unique candidates are generated based on random mutation and crossover with the previous generation to maintain a population of programs [7,14]. The motivation to investigate the benefits of a GEP method for biometric identification originates from the recent successful applications of this method in medicine [15] and physics [16].
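The selection, mutation, and crossover cycle described above can be illustrated with a minimal generational loop. This is only a sketch under stated assumptions: the bit-string genome, tournament selection, one-point crossover, elitism, and the `evolve` function with all of its parameter values are hypothetical choices made for illustration, not the configuration used in this paper. In the proposed model, the fitness would be classifier accuracy rather than the toy objective used here.

```python
import random

def evolve(fitness, genome_len, pop_size=20, generations=30,
           mutation_rate=0.05, seed=0):
    """Toy generational loop: selection, crossover, mutation, elitism.

    All names and parameter values are illustrative assumptions.
    """
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(genome_len)]
           for _ in range(pop_size)]
    history = []  # best fitness seen at the start of each generation
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        history.append(fitness(pop[0]))
        next_pop = [pop[0][:]]  # elitism: the best candidate survives unchanged
        while len(next_pop) < pop_size:
            # Selection: two parents, each the winner of a 3-way tournament.
            p1 = max(rng.sample(pop, 3), key=fitness)
            p2 = max(rng.sample(pop, 3), key=fitness)
            # Crossover: one-point recombination of the two parents.
            cut = rng.randrange(1, genome_len)
            child = p1[:cut] + p2[cut:]
            # Mutation: flip each bit with a small probability.
            child = [1 - g if rng.random() < mutation_rate else g
                     for g in child]
            next_pop.append(child)
        pop = next_pop
    pop.sort(key=fitness, reverse=True)
    history.append(fitness(pop[0]))
    return pop[0], history

# Toy objective: maximize the number of ones in a 16-bit genome.
best, history = evolve(sum, genome_len=16)
```

Because the best candidate is always carried over, the recorded best fitness never decreases from one generation to the next, which mirrors the monotone accuracy curves typically reported for elitist evolutionary methods.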

A modified GEP-based approach to feature extraction is proposed in this paper to improve aesthetic-based person identification accuracy and reduce enrollment times. This approach transforms the original feature set into a smaller set of complex features through structured program evolution. We establish that these complex features can increase accuracy due to the reduction of noise introduced into the classifier system. A smaller feature vector with higher discriminatory ability also results in fewer computations during classification and less memory required to store an authentication template. The proposed approach can be applied to other domains and integrated into more complex systems. In proposing such a model, the paper aims to improve on the current state of the art in visual aesthetic identification and to test the efficacy of a GEP approach given a large feature set in this domain. The results surpass the state-of-the-art methods for visual aesthetic identification.

Very preliminary research on this topic has been published as a conference paper [12]. In the current work, extensive additional experiments on model hyperparameters and further analysis are provided. An adaptive mutation behavior was implemented, which increases the robustness and growth of the overall accuracy of the system. Model performance comparisons between different machine learning algorithms have also been added. Thus, this paper makes the following contributions:

A novel visual aesthetic-based identification model is introduced that achieves higher accuracy over the most recently reported results.

The investigation of utilizing gene expression programming to construct complex features is conducted for the first time in the aesthetic research domain.

The proposed model reduces the dimensionality of the feature data required for identification, which achieves an improvement in both computation speed and system robustness of the visual aesthetic-based identification system.

A comparison between the proposed model and previous works is performed on the benchmark dataset.

2. Previous Work

The research on visual aesthetics presented in this paper is introduced in the context of social biometrics [5,6], an area of biometrics that understands human behavior and personality traits based on human social network activities. This emerging direction of research was preceded by the development of a new generation of biometric systems based on fundamental modeling principles [17], with a focus on the simulation of biometric information based on real-life models [18]. The majority of research on visual aesthetic identification is very recent, with the initial attempt at identification using visual aesthetics conducted by Lovato et al. [9]. This work used a total of 62 image features from a collected database of 40,000 Flickr images, chosen by 200 users (200 images per user). A lasso-regression model was used to construct the visual aesthetic template to identify users, achieving an accuracy of 14% at rank 1 and 51% at rank 5. Although the accuracy was very low, the work served as a proof of concept and feasibility on the discriminatory usage of basic visual aesthetic features.

A more sophisticated approach was developed by Segalin et al., which utilized a multi-resolution counting grid to categorize similar images [10]. Features were separated into two categories: perceptual features and content features. The experiments resulted in above 70% accuracy at rank 1 on the benchmark dataset collected by the authors of [9].

The more recent work performed by Azam and Gavrilova presented a larger feature set of 861 features that consisted of local perceptual features, global perceptual features, histogram of gradients (HOG) features, and content features [11]. The methodology used Principal Component Analysis for feature reduction, which produced 700 principal components from 861 original features. These principal components are complex features generated through linear combinations of the original feature set, sorted by total variance. Using the same database of 40,000 liked Flickr images from 200 users (200 images per user), the system was able to identify users with a rank 1 accuracy of 84.50% and a rank 5 accuracy of 98%.

In solving this identification problem, the classification techniques used in the previous works consisted of a mixture of traditional machine learning and feature engineering. As a general trend, more features are extracted to improve identification accuracy, with 861 features introduced in the most recent work [11] compared to 62 in the initial work [9]. More sophisticated machine learning techniques have also been shown to improve the system’s ability to capture a person’s aesthetic judgment. However, the introduction of a high number of features can result in a classifier’s diminished performance [19]. Non-discriminative features can be interpreted as noise to a machine learning algorithm that detracts from useful data. This non-discriminative data can also encourage unnecessary computations and storage requirements in a real-world system. Thus, in this work, we propose to utilize gene expression programming for complex feature extraction in order to further improve accuracy and reduce computation time.

The recently introduced GEP methodology has proven highly beneficial in a variety of applications [20]. Like other techniques such as genetic algorithms (GA) or genetic programming (GP), GEP operates using the fundamental principles of genetic evolution. Generally, GEP has been applied to five categories of problems: symbolic regression, classification, automatic model design, combinatorial optimization, and real parameter optimization [21]. GEP leverages the strengths of genetic-based algorithms while retaining simple genetic operations and faster convergence than GP approaches in complex optimization problems [22]. Thus, we hypothesize that GEP will be highly suitable for the aesthetic identification problem. The benefits of GP and GEP for feature construction are numerous, with solution trees used for both single- and multi-dimensional feature construction [23]. Single-dimensional feature construction using GP compresses many features into one complex feature encoded by a single GP tree [24]. Though performance is favorable in certain scenarios, one complex feature may not be enough to accurately discriminate between classes in a problem with high complexity. Thus, multi-dimensional approaches have been proposed that use many evolutionary generations to implement a unique function, which designates a new feature, in each subtree [25]. Although the application of GEP to feature sets of large dimensionality has been explored, it has never been considered for the behavioral biometric classification problem.

4. Experimentation and Results

The classification system was implemented in Python 3.5, using the open-source PyGEP 3.5 port library for the base GEP framework. All experiments were run on a Windows machine with an Intel Core i7-8700 CPU @ 3.20 GHz (six cores, 12 logical processors), 16 GB of RAM, and an Nvidia GeForce GTX 1080 GPU.

The system is tested on a benchmark dataset of 40,000 Flickr images, consisting of 200 users each with 200 labeled favorite images [9]. The overall rate of repeated images chosen by different users is 0.05%. A large feature vector was extracted and precomputed from the local, perceptual, content, and HOG features. The images are split into a 50–50 distribution of testing and training sets, with different combinations of these images organized for 5-fold cross-validation. Each image set is composed of 100 images. The 5-fold cross-validation is applied at the image set level, where each fold shuffles the choice of the images used within the 100-image sets. This ensures that each test and training set is different between folds.
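One possible reading of this splitting protocol is sketched below for a single user: the 200 images are reshuffled for every fold and then cut into a 100-image training set and a 100-image testing set. The function name `split_user_images`, the integer image identifiers, and the fixed seed are assumptions made for illustration; the exact shuffling procedure used in the experiments is not specified beyond the description above.

```python
import random

def split_user_images(image_ids, n_folds=5, seed=0):
    """Yield (train, test) halves of one user's images, reshuffled per fold.

    Hypothetical helper: each fold draws a fresh 50-50 partition.
    """
    rng = random.Random(seed)
    half = len(image_ids) // 2
    for _ in range(n_folds):
        shuffled = image_ids[:]   # copy so the caller's list is untouched
        rng.shuffle(shuffled)     # new random choice of images for this fold
        yield shuffled[:half], shuffled[half:]

# One user with 200 favorite images, identified here by integers 0..199.
user_images = list(range(200))
folds = list(split_user_images(user_images))
```

Repeating the same procedure for each of the 200 users would give training and testing sets of 20,000 images each per fold.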

To evaluate the strength of the computed complex features, the GEP approach was used with a gene count (that is, a complex feature count) of 150 on the same classification problem. A termination condition of 300 generations was used, as the improvement of the produced solution was found to become stable after this generation count.

The experiments were designed to verify the system’s ability to perform highly accurate identification reliably and efficiently. The experiments begin with the testing of the optimal generation and gene count. This configuration is then used to compare the performance of different classifiers, where SVM produces the best result. Performance metrics for the system, including the cumulative matching characteristic, receiver operating characteristic, and the false positive/negative graphs, are shown. These results are compared to the current state-of-the-art methods, with the proposed system outperforming the most recent published model in [11] and its reimplemented result. Lastly, a final comparison shows that the GEP model outperforms other common dimensionality reduction techniques for the visual aesthetic-based identification problem.

Figure 6 shows a graph of the performance of the system using the SVM classifier over a period of 400 generations. The initial best candidate has a rank 1 identification accuracy of 76%, which increases to 93% through steady improvement observed from generation 0 to 200. The intervals of improvement grow smaller between generation 200 and 300, reaching an accuracy of 95%. Beyond generation 288, no further improvement to the system accuracy can be observed. This is indicative of the GEP module being unable to find a better solution within a heavily restricted solution space. Even after 100 generations of no improvement, the system with a high variable mutation rate remains unable to find a better solution in the less restricted solution space. A maximum has been reached, and the probability of escaping it and finding a better solution is low. Therefore, a baseline generation count of 300 was used.

The choice of gene count was determined empirically, with the results of the experiments shown in Figure 7. The rank 1 accuracy comparison with the different gene counts is available in Table 2. Since each gene subtree encodes a complex feature, the gene count parameter determines the number of complex features in the resulting feature vector. A higher feature count allows for an expanded solution space with more trainable parameters but tends to require a higher generation count and makes convergence more difficult. A lower feature count is a primary objective of feature dimensionality reduction, though a feature count that is too low may fail to capture sufficient discriminatory information between classes. From these tests, a gene count of 150 proved to result in a system with the highest accuracy at generation 300.
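To make the relation between gene count and feature count concrete, the sketch below decodes a GEP gene written as a K-expression (symbols read left to right and placed into the expression tree level by level) and evaluates it on an original feature vector. A chromosome with G genes then yields G complex features. The function set, the head length of 3, the three-element input vector, and the names `evaluate_gene` and `complex_features` are illustrative assumptions, not the operators or dimensions used in the experiments.

```python
# Assumed function set: symbol -> (arity, implementation).
FUNCTIONS = {
    "+": (2, lambda a, b: a + b),
    "-": (2, lambda a, b: a - b),
    "*": (2, lambda a, b: a * b),
}

def evaluate_gene(gene, x):
    """Evaluate one K-expression gene on the feature vector x.

    `gene` is a list whose entries are either function symbols or
    integer indices into x (terminals).
    """
    # Breadth-first decoding: node i takes the next unused symbols as children.
    children = []
    next_free = 1
    i = 0
    while i < next_free:  # visit only symbols that belong to the tree
        sym = gene[i]
        arity = FUNCTIONS[sym][0] if sym in FUNCTIONS else 0
        children.append(list(range(next_free, next_free + arity)))
        next_free += arity
        i += 1
    # Children always have larger indices than parents, so evaluate in reverse.
    values = [None] * len(children)
    for i in reversed(range(len(children))):
        sym = gene[i]
        if sym in FUNCTIONS:
            func = FUNCTIONS[sym][1]
            values[i] = func(*(values[c] for c in children[i]))
        else:
            values[i] = x[sym]
    return values[0]

def complex_features(chromosome, x):
    """One complex feature per gene: gene count equals output dimensionality."""
    return [evaluate_gene(gene, x) for gene in chromosome]

# Two genes with head length 3 and tail length 4 (binary functions only).
chromosome = [
    ["+", "*", 2, 0, 1, 0, 0],   # decodes to (x0 * x1) + x2
    ["-", 1, "+", 2, 0, 1, 0],   # decodes to x1 - (x2 + x0)
]
features = complex_features(chromosome, [2.0, 3.0, 4.0])
```

With 150 genes, the same procedure would map each original feature vector to a 150-dimensional complex feature vector, and unused tail symbols (such as the last two entries of each gene here) simply do not appear in the decoded tree.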

A comparison of the different classifiers is shown in Figure 8. The rank 1 accuracy comparison with the different classifiers is available in Table 3. K-Nearest Neighbor (KNN), Naive Bayes, Stochastic Gradient Descent (SGD) Classifier, and Support Vector Machine (SVM) classifiers are compared, with SVM showing the highest rank 1 identification accuracy at 95.1%. The tolerances for the SGD and SVM classifiers were both set to 0.001, and the SVM used a linear kernel with a regularization parameter of 0.25. Each classifier’s decision boundaries can influence the gene expression programming module with every evaluation, with an effect on both accuracy and model runtime. SVM was found to be the best performing classifier.

The Cumulative Matching Characteristic (CMC) curve is shown in Figure 9, which displays the proposed classification system’s accuracy across rank 1 to rank 5 recognition rates. A CMC curve is a common indication of classifier accuracy, as it displays the correctness at each rank of prediction. A rank N recognition rate is the probability that the correct prediction is chosen among the top N matches. The normalized Area-Under-the-Curve (nAUC) of a CMC curve is a performance metric that gauges accuracy over all ranks for a specific classifier system, where a value of 1 is ideal accuracy. The system achieves a normalized AUC of 0.9987 among all 200 classes, with a rank 1 recognition of 95.1% and a rank 5 recognition of 98.9%.

Given the prediction probabilities for the test samples, a CMC curve is constructed by calculating the rank N recognition rate for each rank. As the system identifies individuals, there are 200 ranks. This is depicted in the following formula:
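Under the definition given above, the rank N recognition rate over a test set $T$ can be written, in notation assumed here for illustration, as

$$\mathrm{CMC}(N) = \frac{1}{|T|} \sum_{t \in T} \mathbb{1}\left[\operatorname{rank}(t) \le N\right],$$

where $\operatorname{rank}(t)$ is the position of the true class of sample $t$ when the classes are sorted by decreasing prediction probability. A minimal Python sketch of this computation follows. The function names and toy scores are hypothetical, ties are broken in favor of the true class, and the nAUC is taken as the mean recognition rate over all ranks, which is one common convention and may differ from the exact normalization used in the experiments.

```python
def cmc_curve(scores, true_labels):
    """Return the recognition rate at ranks 1..C.

    scores[i][c] is the predicted score of class c for test sample i.
    """
    n_classes = len(scores[0])
    hits = [0] * n_classes  # hits[r - 1] counts samples whose true class has rank r
    for row, truth in zip(scores, true_labels):
        # Rank = 1 + number of classes scored strictly higher than the true one.
        rank = 1 + sum(1 for s in row if s > row[truth])
        hits[rank - 1] += 1
    curve, running = [], 0
    for h in hits:
        running += h                      # cumulative count up to this rank
        curve.append(running / len(scores))
    return curve

def normalized_auc(curve):
    """Mean recognition rate over all ranks (assumed nAUC convention)."""
    return sum(curve) / len(curve)

# Toy example: 4 test samples, 3 classes.
scores = [
    [0.7, 0.2, 0.1],   # true class 0 -> rank 1
    [0.1, 0.3, 0.6],   # true class 1 -> rank 2
    [0.2, 0.5, 0.3],   # true class 1 -> rank 1
    [0.5, 0.4, 0.1],   # true class 2 -> rank 3
]
curve = cmc_curve(scores, [0, 1, 1, 2])
nauc = normalized_auc(curve)
```

For the 200-class identification problem, `curve` would contain 200 entries, with the first and fifth corresponding to the rank 1 and rank 5 recognition rates.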

The Receiver Operating Characteristic (ROC) curve in Figure 10 shows the relation between a system’s true positive rate and its false positive rate. A high true positive rate and area-under-the-curve are indicative of a more robust model with less verification error. The false positive/negative graph in Figure 11 shows the false positive rate (Type I error) over false negative rate (Type II error), along with the equal error rate (EER). A false positive corresponds to the incorrect acceptance of an unknown user, and a false negative corresponds to the incorrect rejection of an enrolled user. A lower equal error rate is desired for a biometric system, as it is less prone to making both types of verification error. The model exhibits a ROC area-under-the-curve of 0.9964, with an equal error rate of 0.0303.

The equal error rate is the point at which the false positive rate and the false negative rate intersect. In the context of this multiclass problem, a micro-mean approach is taken, where the ROC and EER metrics were averaged over all binary class scenarios using the one-versus-rest strategy. The relationship between the ROC and EER metrics is depicted in the following formula:
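Since the true positive rate is the complement of the false negative rate, one standard way to express this relationship, with the threshold symbol $\tau^{*}$ assumed here for illustration, is

$$\mathrm{EER} = \mathrm{FPR}(\tau^{*}) = \mathrm{FNR}(\tau^{*}) = 1 - \mathrm{TPR}(\tau^{*}),$$

where $\tau^{*}$ is the decision threshold at which the two error rates coincide. The sketch below estimates the EER for a single binary (one-versus-rest) scenario by scanning candidate thresholds; the function names and toy scores are hypothetical. For the multiclass setting described above, the same computation would be repeated per class and the results averaged.

```python
def error_rates(genuine, impostor, threshold):
    """Return (FPR, FNR) when scores >= threshold are accepted."""
    fpr = sum(s >= threshold for s in impostor) / len(impostor)  # wrongly accepted
    fnr = sum(s < threshold for s in genuine) / len(genuine)     # wrongly rejected
    return fpr, fnr

def equal_error_rate(genuine, impostor):
    """Estimate the EER as the point where |FPR - FNR| is smallest.

    Returns (eer, threshold). With finitely many scores the two rates may
    not cross exactly, so their average at the closest point is reported.
    """
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        fpr, fnr = error_rates(genuine, impostor, t)
        gap = abs(fpr - fnr)
        if best is None or gap < best[0]:
            best = (gap, (fpr + fnr) / 2, t)
    return best[1], best[2]

# Toy scores: enrolled-user (genuine) and unknown-user (impostor) comparisons.
genuine = [0.9, 0.8, 0.7, 0.4]
impostor = [0.6, 0.3, 0.2, 0.1]
eer, threshold = equal_error_rate(genuine, impostor)
```

In this toy example, one impostor score is accepted and one genuine score is rejected at the selected threshold, so both error rates equal 0.25.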

Analysis

Three of the referenced models are compared to the proposed model, with data shown in Table 4. The experiments were conducted on the same image dataset initially collected by Lovato et al. [9]. The overall classification accuracy of the system increases as classification accuracy is used to optimize an initially random complex feature set, surpassing the original accuracy seen in [11] while using only 150 computed complex features generated over 300 generations. The 700 principal components used in [11] are also far more numerous than the computed features in the proposed model. An improvement of 81.1 percentage points was achieved over the original 2012 work [9], 22.1 percentage points over the 2014 work [10], and 11.1 percentage points over the most recent work [11] at rank 1. Rank 5 results were also improved. In addition, the proposed model effectively used 550 fewer features in the resultant feature vector than the most recent work. The GEP implementation offers high accuracy in these test scenarios by constructing highly discriminative features that are not bound by only linear combinations of the original feature set. Using an original feature vector of similar size to that of [11], the usage of GEP and the novel customization of its genetic operators have heavily reduced feature dimensionality while increasing the discriminative ability of the model. The proposed model also surpasses the rank 1 and rank 5 recognition rates of other state-of-the-art works operating on the same dataset. The dataset used is the largest benchmark dataset for person identification using visual aesthetics.

As more improvements are made throughout the generations, the growth in classifier accuracy decreases as it becomes more difficult to generate further discriminating features without overlap. More generations are required to fine-tune smaller changes to the feature trees due to the random nature of the evolutionary operations. It is also important to consider 300 generations as a low count for typical genetic-based algorithms. The largest expense to the model is the evaluation of every individual complex feature vector, which takes on average under 10 s. Each generation requires at most n evaluations, along with evaluations required for refitting the classifier. Further tests with higher generation count, higher population size, or additional complex features can potentially increase accuracy rates further.

In addition, the state-of-the-art approach presented in [11] has been fully reimplemented in MATLAB and compared against the proposed model on the same machine, with results shown in Table 5. The method in [11] uses PCA to generate 700 principal component features and lasso-regression for classification.

The proposed model is shown in Table 6 to have much higher rank 1 and rank 5 recognition rates when compared to the reimplementation, with an improvement of 14.5% and 1.6%, respectively. This is indicative of a more accurate classification system, which is a product of the complex features constructed by the GEP module along with the linear SVM classifier.

In addition, the proposed model is compared with the most recent state-of-the-art methods in Table 5. The amount of time required to generate and test the aesthetic template of all 200 users given the feature vector is significantly lower with the proposed model at 6.71 s. This difference is likely due to the efficiencies of the linear SVM in classifying this distribution of observations and the smaller dimensions of the complex feature vector compared to the PCA feature vector. The smaller complex feature vector, when stored, requires only 150 megabytes (MB), which is much less than the 511 MB required for the reimplemented approach. The proposed model has a rank 1 accuracy that is 1% higher than the recent conference results, with similar time and memory requirements.

Additional tests are shown in Table 7 that display the performance of the classification system with different types of dimensionality reduction techniques. All techniques were set to output a reduced dimensionality of 150 from the original 924-feature vector for accurate comparison. Independent component analysis discovers statistically independent components of the feature set through maximizing rotated component non-Gaussianity. Factor analysis searches for underlying latent variables that describe observed variables by considering joint variations or factors. Lastly, principal component analysis extracts linear combinations of the feature vector that explain the most variance. It is shown that the proposed GEP module reduces the number of components while also increasing the accuracy obtained from the original feature set compared to other existing dimensionality reduction techniques. When applied to the 924-feature set, the GEP module reduced dimensionality to 150 components while having a 16.2% increase in rank 1 accuracy over using PCA.

Summarizing the performed experiments, both the rationale and the performance of the system have been validated. First, it was found that a gene count of 150 performed the best among a set range of different gene counts. This gene count translates to 150 complex features, as each gene subtree encodes one complex feature. Using 150 complex features, the GEP model outperformed common dimensionality reduction techniques such as Independent Component Analysis, Factor Analysis, and Principal Component Analysis when applied to the same feature space. Different classifier configurations were tested with the model, with Support Vector Machine-based classification outperforming K-Nearest Neighbor, Naive Bayes, and Stochastic Gradient Descent. The Cumulative Matching Characteristic curve establishes the system’s consistently accurate performance across ranks, with a normalized Area-Under-the-Curve of 0.9987. A Receiver Operating Characteristic Area-Under-the-Curve of 0.9964 and an Equal Error Rate of 0.0303 indicate the system’s robustness and low chance of misidentification. The proposed system achieves a rank 1 accuracy of 95.1% and a rank 5 accuracy of 98.9%. The time and memory required for this system are much lower than for the reimplementation of the most recent state-of-the-art method. The results show that the proposed model outperforms the state-of-the-art visual aesthetic identification methods in accuracy, speed, and storage required.

5. Conclusions and Future Works

This article presents the most comprehensive study to date on the use of aesthetic-based human traits expressed through human–machine interaction for biometric identification. It answers the research question of whether a new algorithm can be developed based on gene expression programming that can significantly outperform previous approaches for aesthetic-based human identification. The research demonstrated on a large dataset through extensive experimentation that GEP is an effective method for feature extraction in visual aesthetic identification and that the proposed model reduces the number of features required for identification while also increasing the overall recognition rate. The method reduced the dimensionality of the large original feature set while achieving rank 1 and rank 5 accuracies of 95.1% and 98.9%, respectively. The time and memory requirements have also been shown to be lower than in the previous methods.

This study opens new avenues in harvesting rich information provided during human–machine interaction, where human aesthetics plays a vital role in selecting favorite images. A more thorough investigation into the model implementation may allow the opportunity for further optimizations in system performance through the use of extensive parallelism. In addition to system complexity, the selection, mutation, and crossover strategies can be further developed to increase the robustness and the accuracy of the converged solution. On a broader level, the exploration of alternative ways of human–machine interaction based on other aesthetics, such as music and touch, can be performed for the development of more comprehensive human–machine interfaces as well as more versatile security systems.