Grasping Force Control of Multi-Fingered Robotic Hands through Tactile Sensing for Object Stabilization

Abstract

1. Introduction

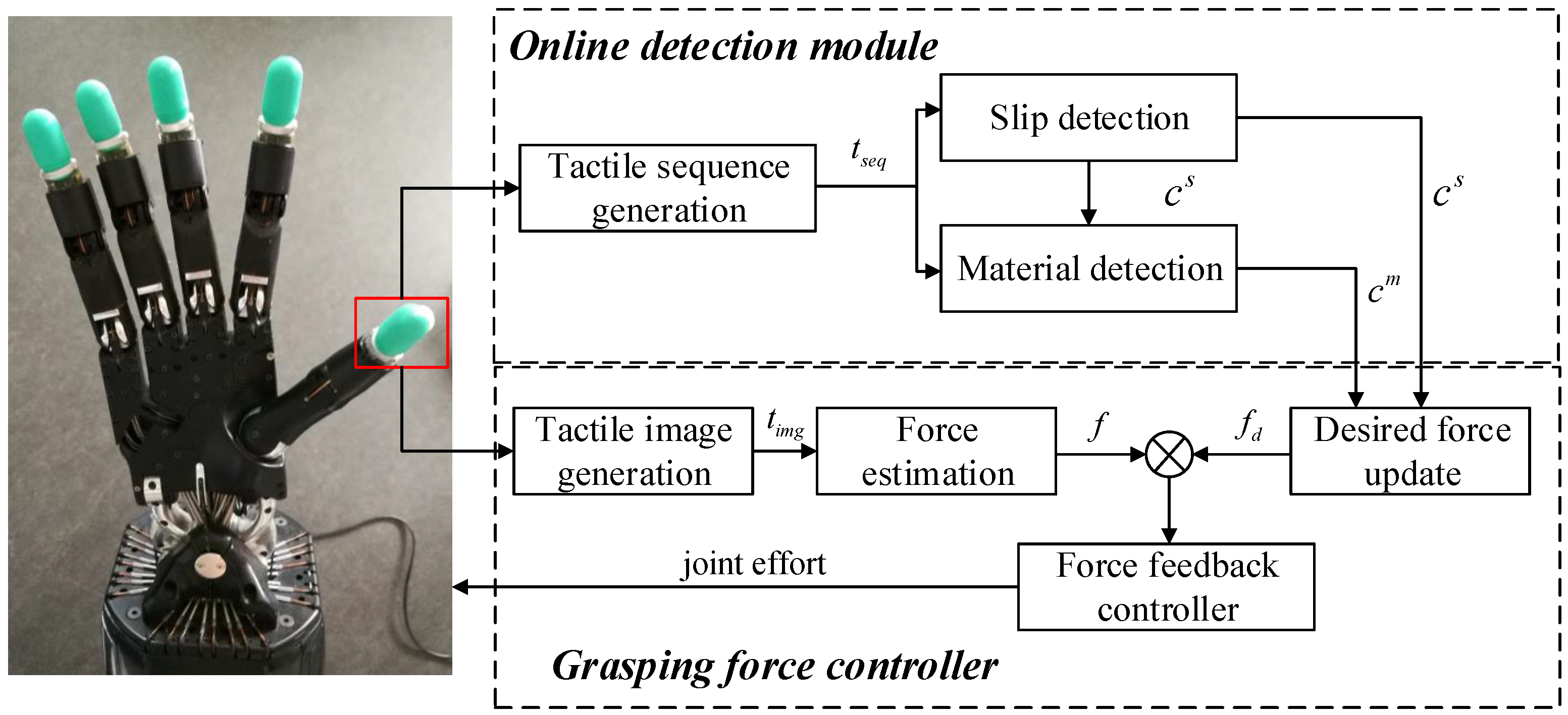

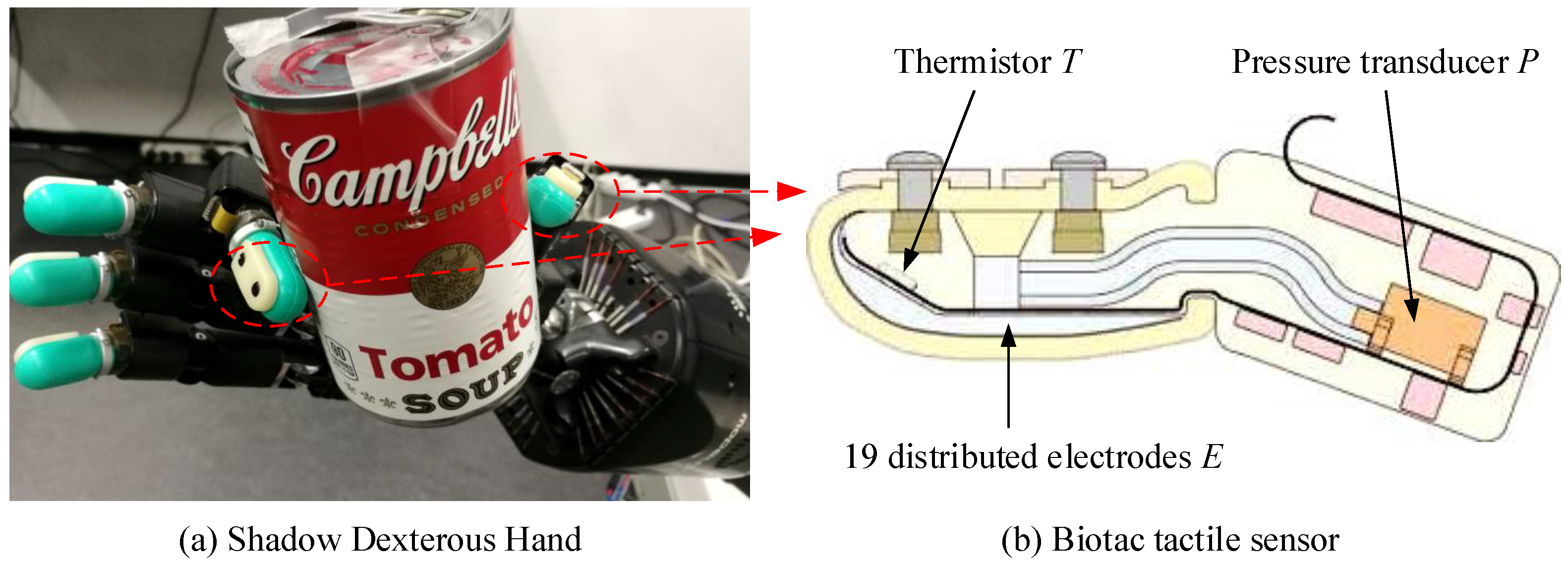

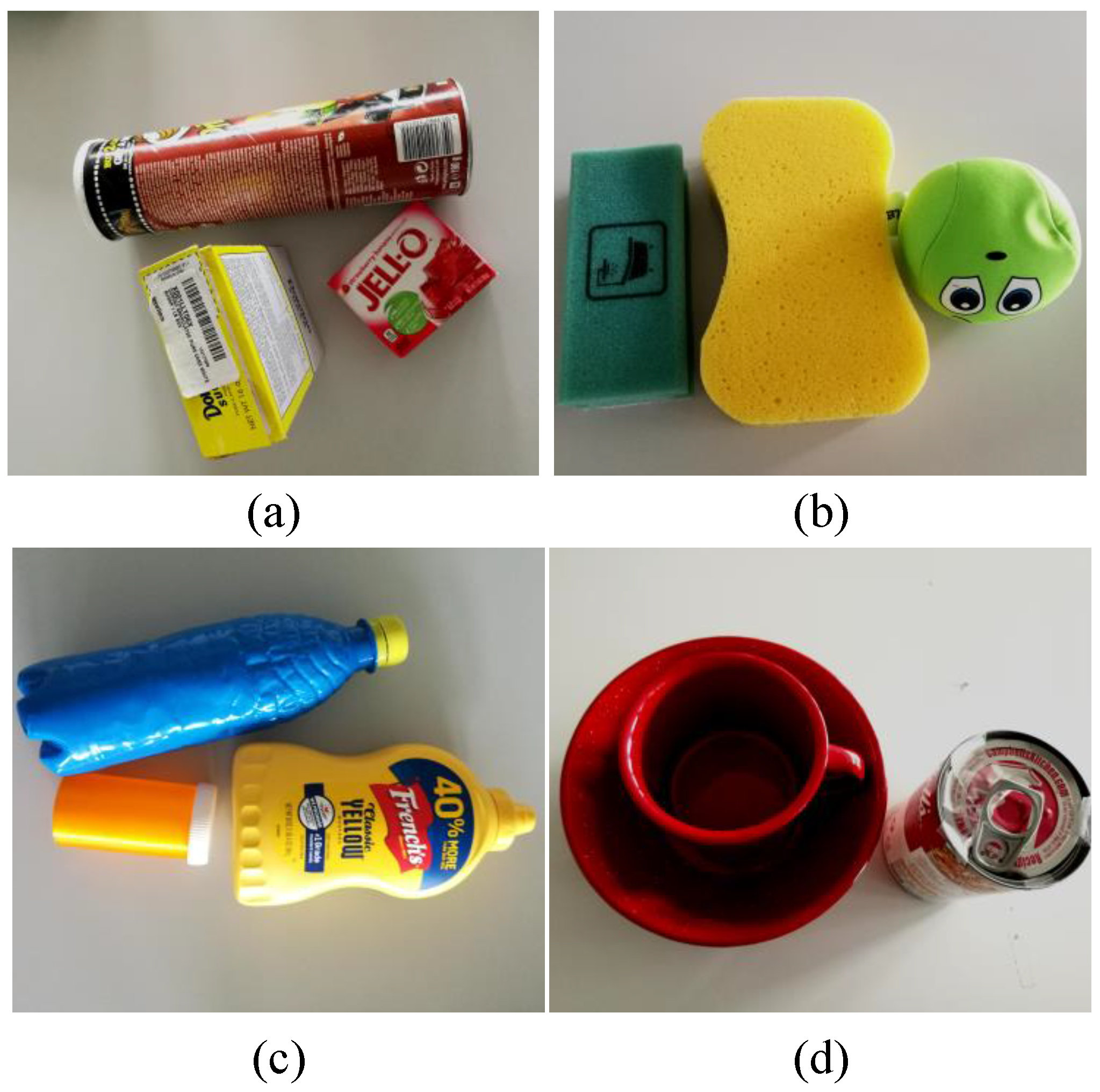

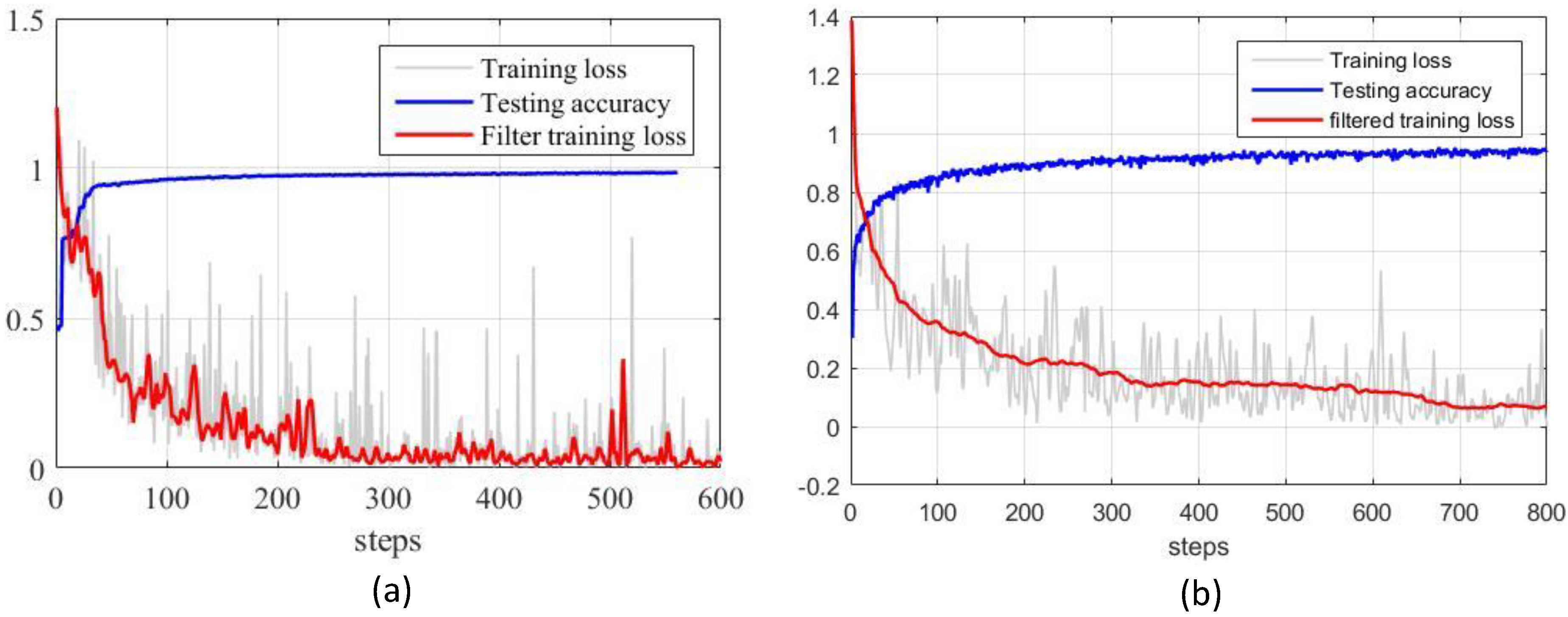

- An online detection module is introduced, in which a Deep Neural Network (DNN) is trained to detect contact events and object materials simultaneously from tactile data. A tactile dataset containing tactile data, contact-event labels, and material information is collected for slip and material detection.

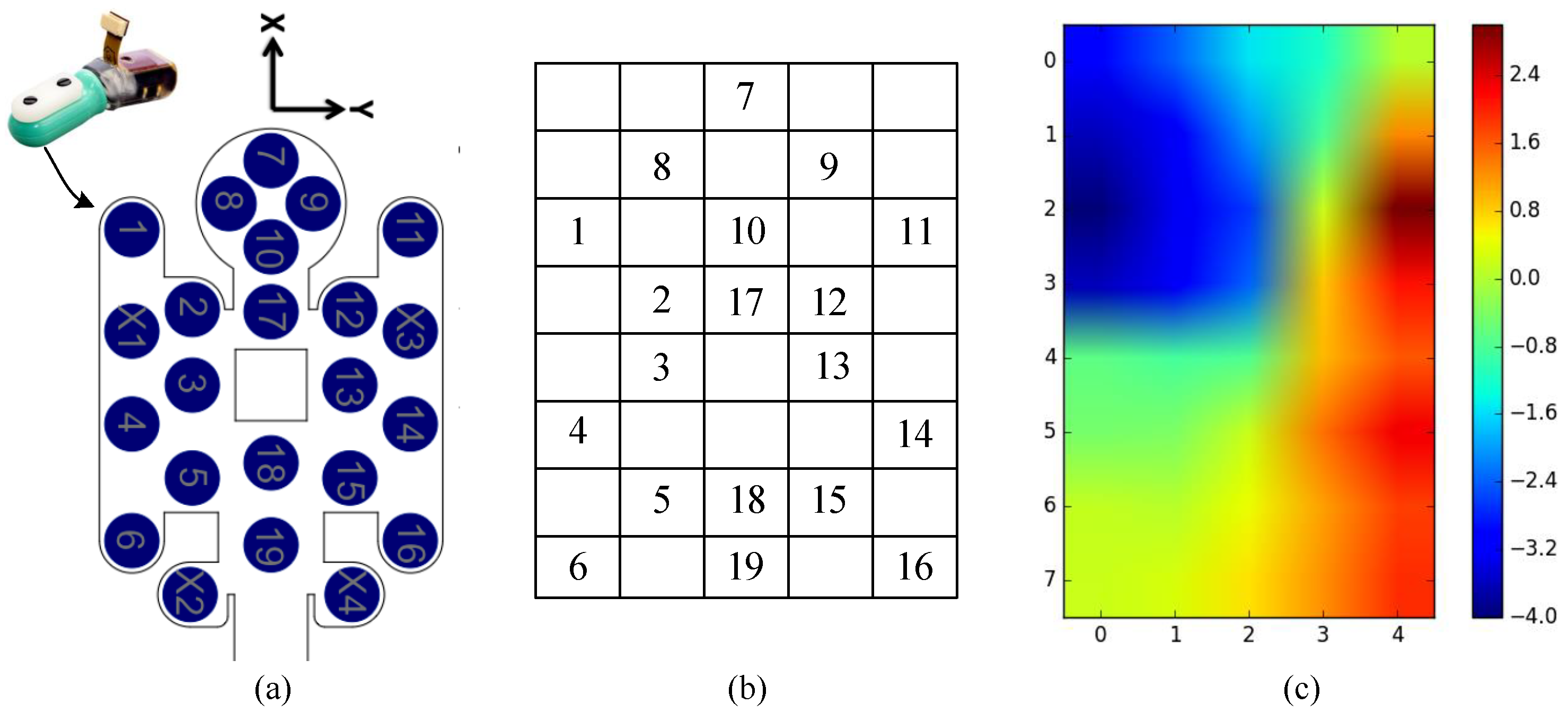

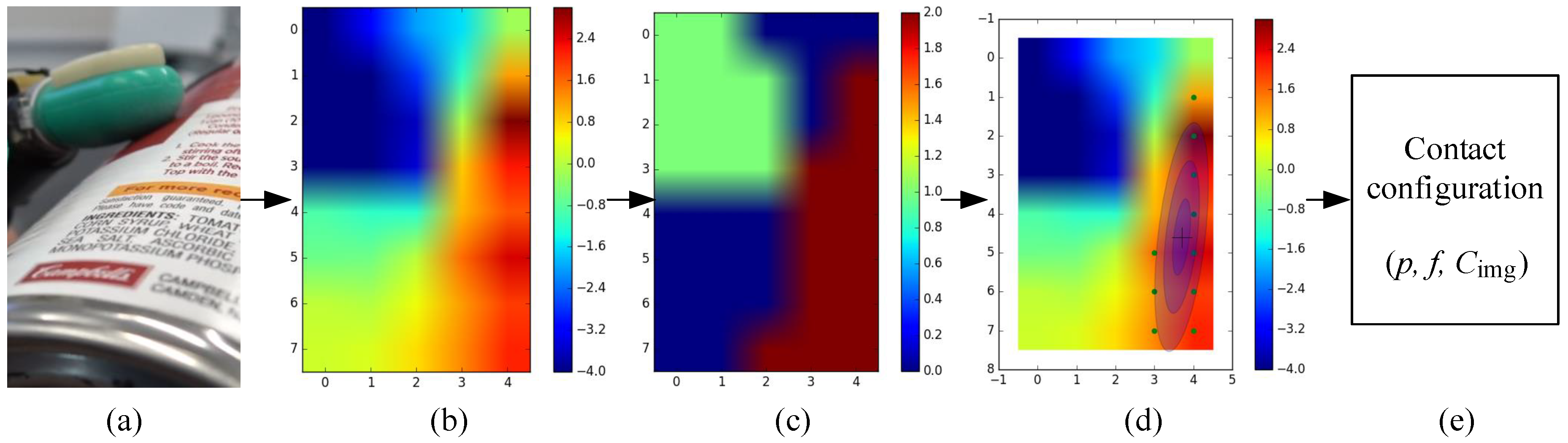

- A force estimation method based on a Gaussian Mixture Model (GMM) is proposed to calculate the contact information (i.e., contact force and contact location) from tactile data. The method includes computing the contact region on the surface of the tactile sensor.

- A grasping force controller is proposed for a multi-fingered robotic hand to stabilize the grasped object. It integrates tactile sensing with a force feedback controller to adjust the grasping force between the robotic hand and the object online.
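To make the notion of contact information concrete, the following minimal sketch computes a contact force and a contact location from a grid of taxel readings. It is not the GMM-based method proposed here: it swaps in a simpler threshold-and-centroid computation over the contact region, purely for illustration. The function name `estimate_contact`, the grid layout, the threshold, and the units are all assumptions.

```python
from typing import List, Optional, Tuple


def estimate_contact(
    pressures: List[List[float]],
    threshold: float = 0.05,
) -> Optional[Tuple[float, Tuple[float, float]]]:
    """Return (total_force, (row, col)) for the contact region, or None.

    pressures: 2-D grid of taxel readings (hypothetical layout and units).
    threshold: readings at or below this value are treated as no contact.
    """
    total = 0.0
    row_moment = 0.0
    col_moment = 0.0
    for r, row in enumerate(pressures):
        for c, value in enumerate(row):
            if value > threshold:  # taxel belongs to the contact region
                total += value
                row_moment += r * value
                col_moment += c * value
    if total == 0.0:
        return None  # no taxel exceeded the threshold
    # Pressure-weighted centroid of the contact region.
    return total, (row_moment / total, col_moment / total)


tactile_image = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.5, 0.5, 0.0],
    [0.0, 0.5, 0.5, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
force, location = estimate_contact(tactile_image)
print(force, location)  # 2.0 (1.5, 1.5)
```

A mixture model would additionally describe the shape and spread of the contact region, and could separate several contacts on one sensor, which a single centroid cannot do.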

2. Related Work

3. Methodology

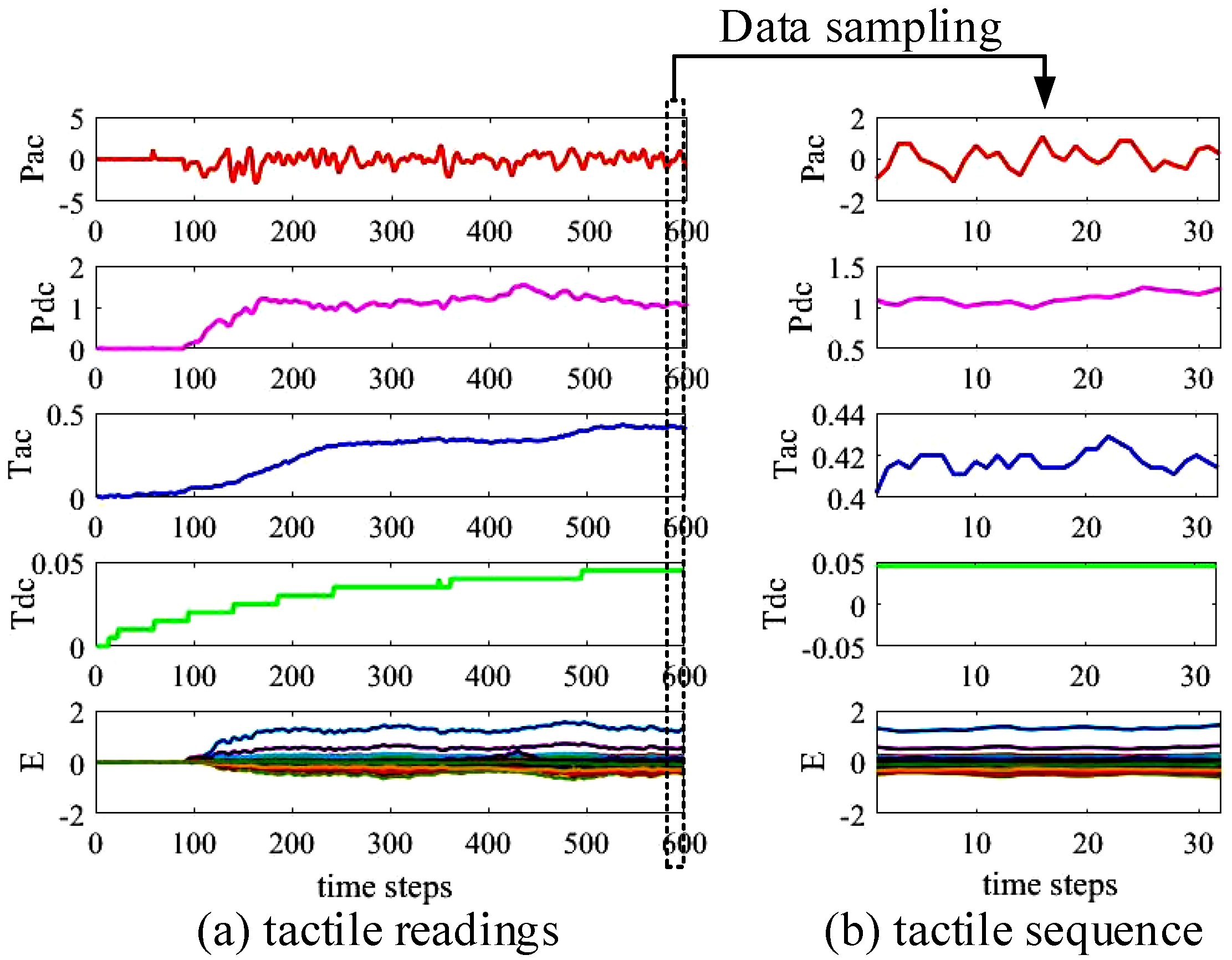

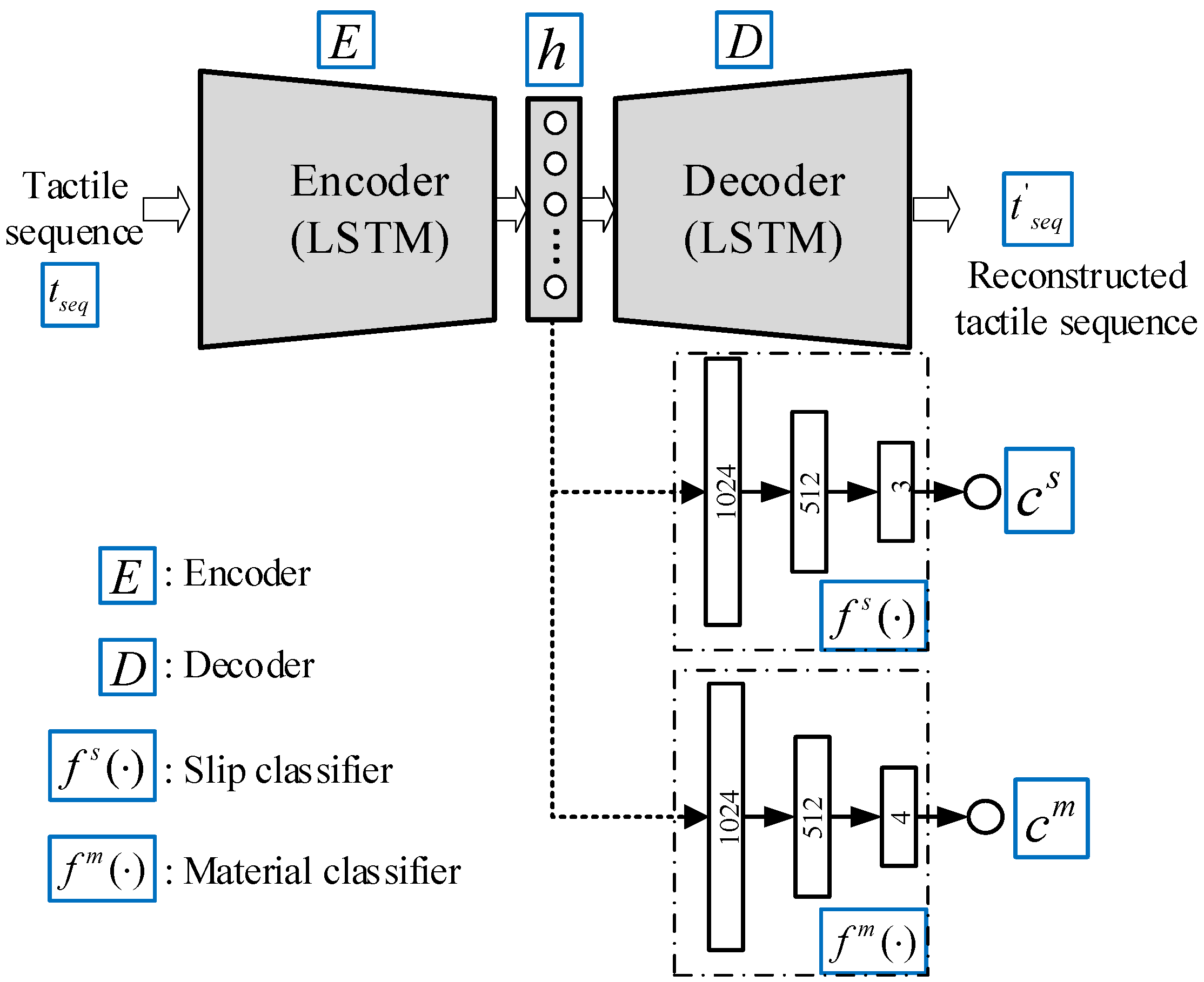

3.1. Online Detection Module for Slip and Material Detection

3.2. Force Estimation from Tactile Information

3.3. Grasping Force Controller

- An initial grasping force is manually set for each finger of the robotic hand in the initial phase.

- The initial grasping force is then updated according to the result of the material detection, as shown in Equation (8). The choice of initial grasping force depends on the object material [8], so we use the detected material to update it. An object made of foam is usually lightweight and has a large friction coefficient, so we use a smaller initial force for it. An object made of metal is relatively heavy and has a low friction coefficient, so we use a larger initial force for it. Objects of different materials thus require different grasping forces to ensure grasp stability.

- Once slippage is detected during the grasping process, we increase the desired grasping force by a fixed increment, which is set to 0.2 in this work. Otherwise, no increment is added, as shown in Equation (9).

- The desired grasping force is finally obtained by clipping this value to a safety region bounded by a minimum and a maximum value. During the grasping process, the desired grasping force is updated continually, and the force feedback controller drives the robotic fingers to track it.
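The update steps listed above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the per-material forces and the safety bounds are hypothetical placeholders, and only the increment of 0.2 comes from the text. The sketch also assumes that, when no slip is detected, the desired force keeps its previous value.

```python
# Hypothetical initial forces per material. The text gives only the ordering
# (smaller for foam, larger for metal), not these numbers.
INITIAL_FORCE_BY_MATERIAL = {"foam": 0.5, "metal": 1.5}
SLIP_INCREMENT = 0.2     # fixed increment stated in the text (units not given)
F_MIN, F_MAX = 0.1, 3.0  # hypothetical safety bounds


def material_initial_force(material: str, manual_force: float) -> float:
    """Replace the manually set force when the detected material is known."""
    return INITIAL_FORCE_BY_MATERIAL.get(material, manual_force)


def update_desired_force(force: float, slip_detected: bool) -> float:
    """Add the increment on slip, then clip to the safety region."""
    if slip_detected:
        force += SLIP_INCREMENT
    return max(F_MIN, min(F_MAX, force))


force = material_initial_force("metal", manual_force=1.0)
for slip in [False, True, True, False]:
    force = update_desired_force(force, slip)
    print(f"slip={slip} desired_force={force:.2f}")
```

Clipping after every update means that repeated slip detections cannot push the commanded force past the upper bound, which is the purpose of the safety region.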

| Algorithm 1: Grasping force control through tactile sensing. |
| --- |
| Require: a trained online detection module, tactile readings, and an initial grasping force |
| Repeat: |
| Generate a tactile sequence and a tactile image from the tactile readings |
| Perform online detection on the tactile data and output the contact event and object material |
| Perform force estimation on the tactile data to calculate the current contact information |
| If no contact is detected then |
| Run the position controller to drive the joints of the robotic hand to the desired joint position |
| Else |
| Update the desired grasping force based on the detected contact event and object material |
| Run force feedback control to track the desired grasping force |
| End if |
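The branching in Algorithm 1 can be illustrated with a small simulation that replays a sequence of contact events. This is a sketch under several assumptions: the event labels `"no_contact"`, `"contact"`, and `"slip"` are hypothetical, the branch condition is taken to be "no contact detected", the safety bounds are placeholders, and a proportional law acting on a toy plant stands in for the force feedback controller, whose form is not specified in this section.

```python
from typing import List, Tuple

SLIP_INCREMENT = 0.2     # fixed increment stated in the text (units not given)
F_MIN, F_MAX = 0.1, 3.0  # hypothetical safety bounds


def run_loop(
    events: List[str], initial_force: float, kp: float = 0.5
) -> List[Tuple[str, float, float]]:
    """Replay contact events; return (mode, desired, measured) for each step."""
    desired = initial_force
    measured = 0.0  # simulated fingertip force
    log = []
    for event in events:
        if event == "no_contact":
            # Position control branch: the fingers keep closing toward the
            # desired joint position and no force is tracked yet.
            log.append(("position", desired, measured))
            continue
        if event == "slip":
            desired += SLIP_INCREMENT
        desired = max(F_MIN, min(F_MAX, desired))
        # Proportional force feedback on a toy plant: the measured force
        # moves a fraction kp of the way toward the desired force.
        measured += kp * (desired - measured)
        log.append(("force", desired, measured))
    return log


events = ["no_contact", "contact", "contact", "slip", "contact"]
for step in run_loop(events, initial_force=1.0):
    print(step)
```

On a real hand the measured force would come from the force estimation step, and the command would go to the finger joints rather than to a simulated plant.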

4. Experiments

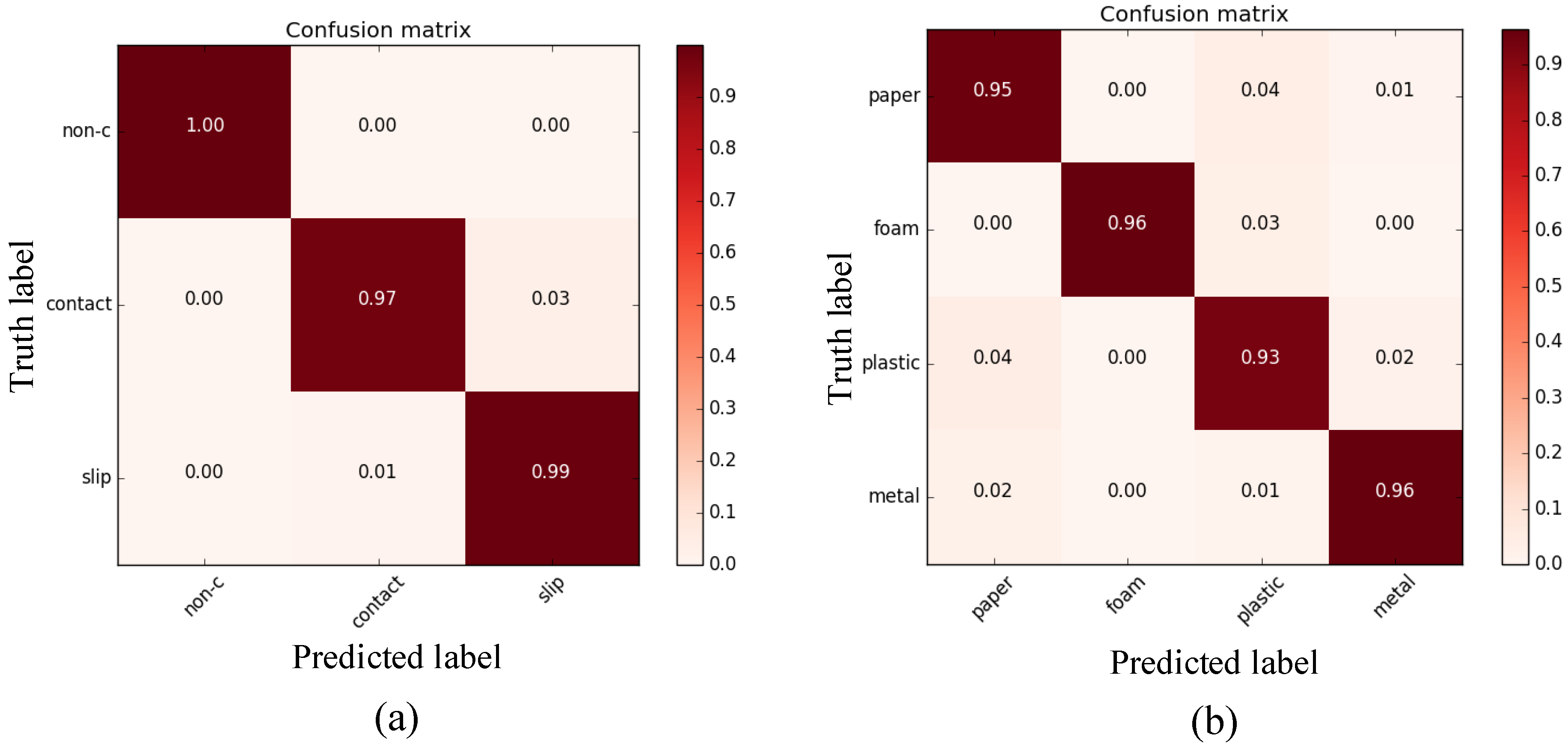

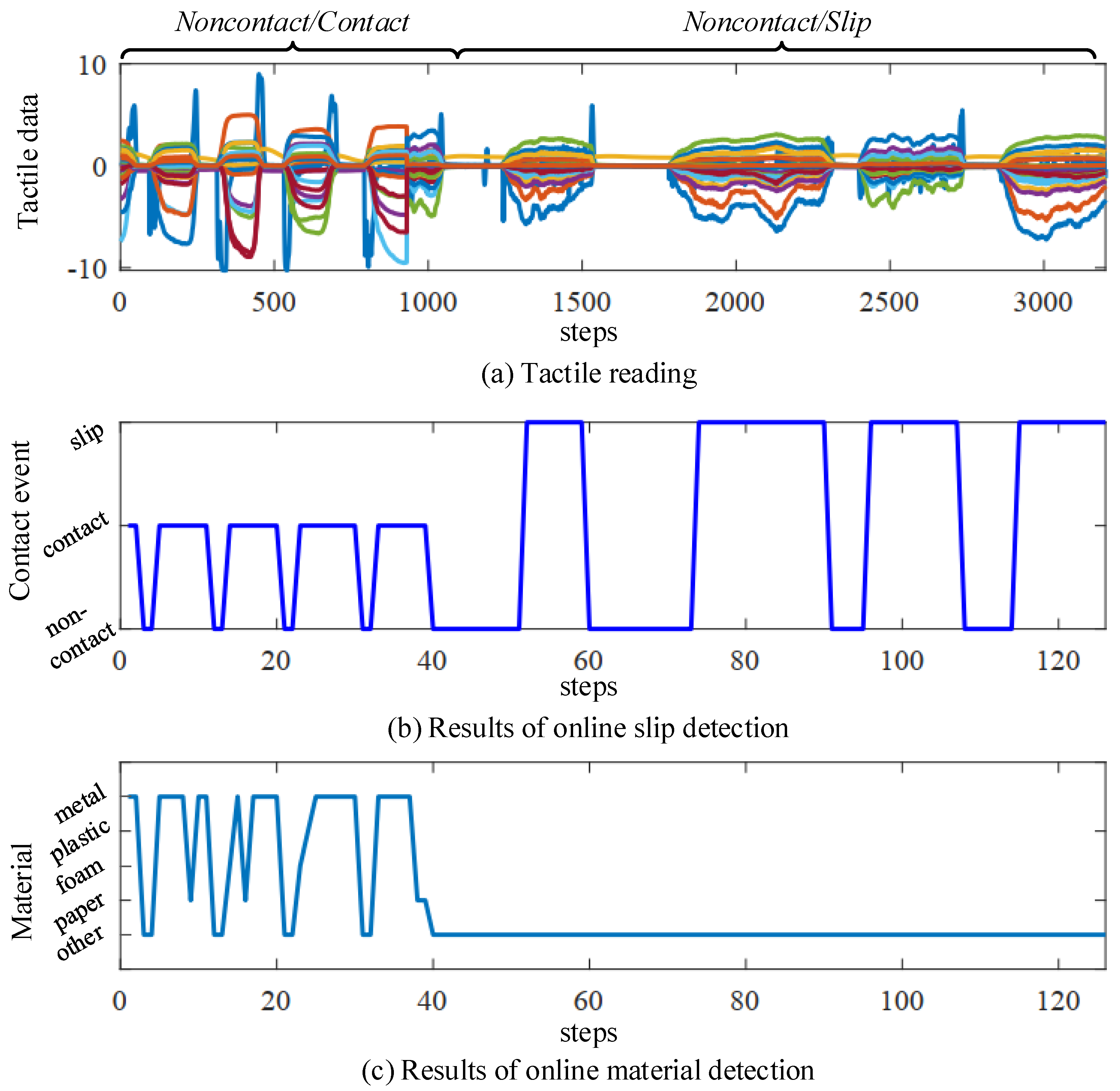

4.1. Evaluation of Online Detection Module

4.1.1. Dataset and Implementation

4.1.2. Experimental Results

4.2. Evaluation of Force Estimation

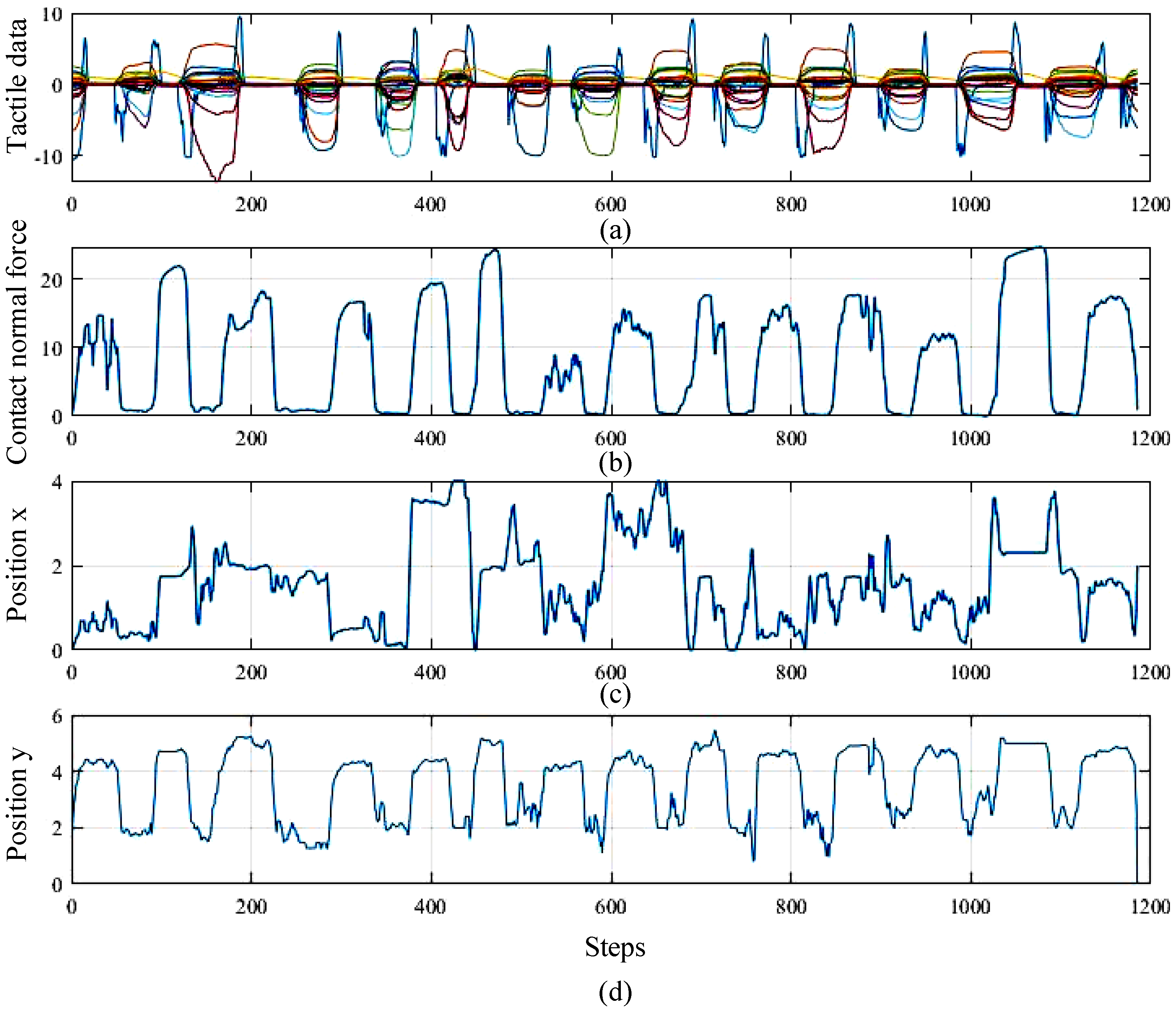

4.3. Real-World Grasping Force Control Experiment

4.4. Discussion

5. Conclusions and Future Work

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

1. Johansson, R.S.; Flanagan, J.R. Coding and use of tactile signals from the fingertips in object manipulation tasks. Nat. Rev. Neurosci. 2009, 10, 345.

2. Chen, W.; Khamis, H.; Birznieks, I.; Lepora, N.F.; Redmond, S.J. Tactile sensors for friction estimation and incipient slip detection—toward dexterous robotic manipulation: A review. IEEE Sens. J. 2018, 18, 9049–9064.

3. Chebotar, Y.; Hausman, K.; Su, Z.; Sukhatme, G.S.; Schaal, S. Self-supervised regrasping using spatio-temporal tactile features and reinforcement learning. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 1960–1966.

4. Veiga, F.; Van Hoof, H.; Peters, J.; Hermans, T. Stabilizing novel objects by learning to predict tactile slip. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 5065–5072.

5. Van Wyk, K.; Falco, J. Calibration and analysis of tactile sensors as slip detectors. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 2744–2751.

6. Zapata-Impata, B.S.; Gil, P.; Torres, F. Learning Spatio Temporal Tactile Features with a ConvLSTM for the Direction Of Slip Detection. Sensors 2019, 19, 523.

7. Meier, M.; Patzelt, F.; Haschke, R.; Ritter, H.J. Tactile convolutional networks for online slip and rotation detection. In Proceedings of the International Conference on Artificial Neural Networks, Barcelona, Spain, 6–9 September 2016; pp. 12–19.

8. Tiest, W.M.B.; Kappers, A.M.L. The influence of visual and haptic material information on early grasping force. R. Soc. Open Sci. 2019, 6, 181563.

9. Chu, V.; McMahon, I.; Riano, L.; McDonald, C.G.; He, Q.; Perez-Tejada, J.M.; Arrigo, M.; Darrell, T.; Kuchenbecker, K.J. Robotic learning of haptic adjectives through physical interaction. Robot. Auton. Syst. 2015, 63, 279–292.

10. Gao, Y.; Hendricks, L.A.; Kuchenbecker, K.J.; Darrell, T. Deep learning for tactile understanding from visual and haptic data. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation, Stockholm, Sweden, 16–21 May 2016; pp. 536–543.

11. Han, D.; Nie, H.; Chen, J.; Chen, M.; Deng, Z.; Zhang, J. Multi-modal haptic image recognition based on deep learning. Sens. Rev. 2018, 38, 486–493.

12. Ozawa, R.; Tahara, K. Grasp and dexterous manipulation of multi-fingered robotic hands: A review from a control view point. Adv. Robot. 2017, 31, 1030–1050.

13. Sundaralingam, B.; Lambert, A.S.; Handa, A.; Boots, B.; Hermans, T.; Birchfield, S.; Ratliff, N.; Fox, D. Robust learning of tactile force estimation through robot interaction. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 9035–9042.

14. Su, Z.; Hausman, K.; Chebotar, Y.; Molchanov, A.; Loeb, G.E.; Sukhatme, G.S.; Schaal, S. Force estimation and slip detection/classification for grip control using a biomimetic tactile sensor. In Proceedings of the 2015 IEEE-RAS 15th International Conference on Humanoid Robots (Humanoids), Seoul, Korea, 3–5 November 2015; pp. 297–303.

15. Delgado, A.; Corrales, J.A.; Mezouar, Y.; Lequievre, L.; Jara, C.; Torres, F. Tactile control based on Gaussian images and its application in bi-manual manipulation of deformable objects. Robot. Auton. Syst. 2017, 94, 148–161.

16. Romano, J.M.; Hsiao, K.; Niemeyer, G.; Chitta, S.; Kuchenbecker, K.J. Human-inspired robotic grasp control with tactile sensing. IEEE Trans. Robot. 2011, 27, 1067–1079.

17. Li, M.; Bekiroglu, Y.; Kragic, D.; Billard, A. Learning of grasp adaptation through experience and tactile sensing. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 3339–3346.

18. Srivastava, N.; Mansimov, E.; Salakhutdinov, R. Unsupervised learning of video representations using LSTMs. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 843–852.

19. Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3D convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4489–4497.

20. Yuan, W.; Li, R.; Srinivasan, M.A.; Adelson, E.H. Measurement of shear and slip with a GelSight tactile sensor. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 304–311.

21. Donlon, E.; Dong, S.; Liu, M.; Li, J.; Adelson, E.H.; Rodriguez, A. GelSlim: A High-Resolution, Compact, Robust, and Calibrated Tactile-sensing Finger. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1927–1934.

22. Zhang, Y.; Kan, Z.; Tse, Y.A.; Yang, Y.; Wang, M.Y. FingerVision Tactile Sensor Design and Slip Detection Using Convolutional LSTM Network. arXiv 2018, arXiv:1810.02653.

23. Luo, S.; Bimbo, J.; Dahiya, R.; Liu, H. Robotic tactile perception of object properties: A review. Mechatronics 2017, 48, 54–67.

24. Liu, H.; Yu, Y.; Sun, F.; Gu, J. Visual–tactile fusion for object recognition. IEEE Trans. Autom. Sci. Eng. 2016, 14, 996–1008.

25. Schneider, A.; Sturm, J.; Stachniss, C.; Reisert, M.; Burkhardt, H.; Burgard, W. Object identification with tactile sensors using bag-of-features. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 10–15 October 2009; pp. 243–248.

26. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Twenty-sixth Annual Conference on Neural Information Processing Systems, Stateline, NV, USA, 3–8 December 2012; pp. 1097–1105.

27. Veiga, F.; Peters, J.; Hermans, T. Grip stabilization of novel objects using slip prediction. IEEE Trans. Haptics 2018, 11, 531–542.

28. Bekiroglu, Y.; Laaksonen, J.; Jorgensen, J.A.; Kyrki, V.; Kragic, D. Assessing grasp stability based on learning and haptic data. IEEE Trans. Robot. 2011, 27, 616–629.

29. Krug, R.; Lilienthal, A.J.; Kragic, D.; Bekiroglu, Y. Analytic grasp success prediction with tactile feedback. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 165–171.

30. Roa, M.A.; Suárez, R. Grasp quality measures: Review and performance. Auton. Robots 2015, 38, 65–88.

31. Dang, H.; Allen, P.K. Grasp adjustment on novel objects using tactile experience from similar local geometry. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 4007–4012.

32. Dang, H.; Allen, P.K. Stable grasping under pose uncertainty using tactile feedback. Auton. Robots 2014, 36, 309–330.

33. Hogan, F.R.; Bauza, M.; Canal, O.; Donlon, E.; Rodriguez, A. Tactile regrasp: Grasp adjustments via simulated tactile transformations. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 2963–2970.

34. Willis, M. Proportional-Integral-Derivative Control; Dept. of Chemical and Process Engineering, University of Newcastle: Callaghan, Australia, 1999.

(a) Slip Detection

| Window size | Sampling rate 50 | Sampling rate 33.3 | Sampling rate 25 |
| --- | --- | --- | --- |
| 16 | | | |
| 32 | | | |
| 48 | | | |

(b) Material Detection

| Window size | Sampling rate 50 | Sampling rate 33.3 | Sampling rate 25 |
| --- | --- | --- | --- |
| 16 | | | |
| 32 | | | |
| 48 | | | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Deng, Z.; Jonetzko, Y.; Zhang, L.; Zhang, J. Grasping Force Control of Multi-Fingered Robotic Hands through Tactile Sensing for Object Stabilization. Sensors 2020, 20, 1050. https://doi.org/10.3390/s20041050
