Face Recognition at a Distance for a Stand-Alone Access Control System

Abstract

1. Introduction

2. Related Work

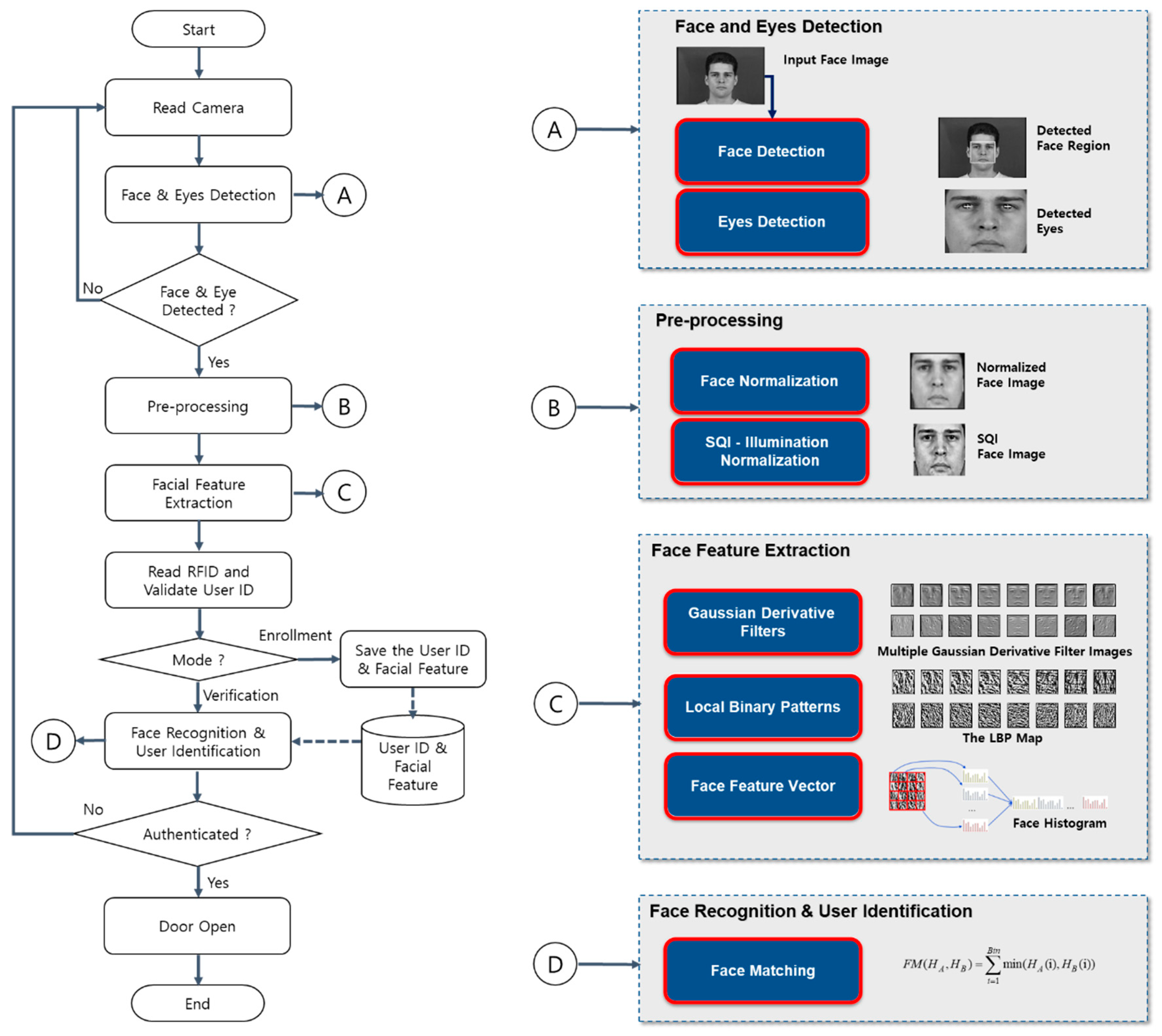

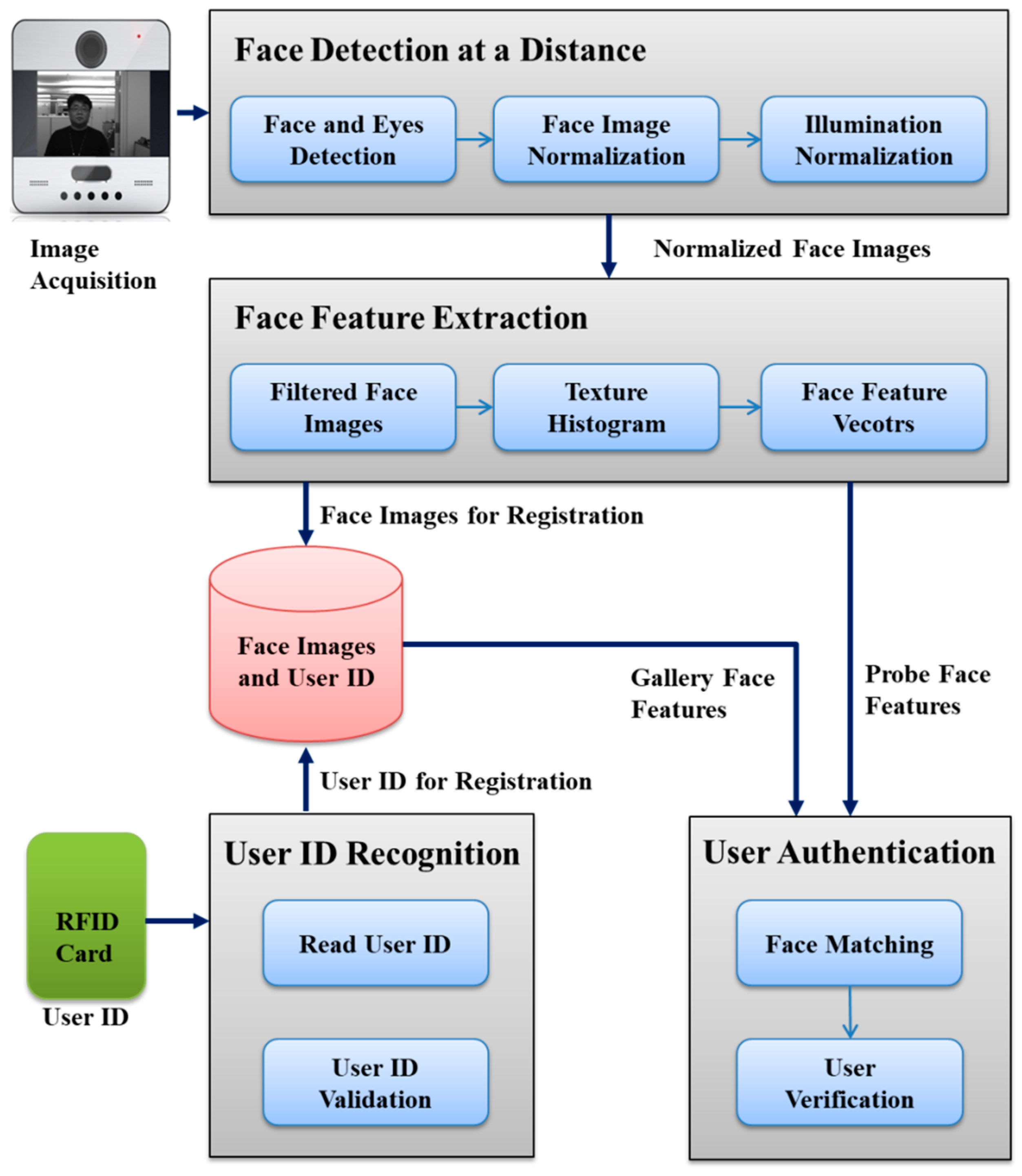

3. User-Friendly Face Recognition

3.1. Face Recognition Methods for Access Control

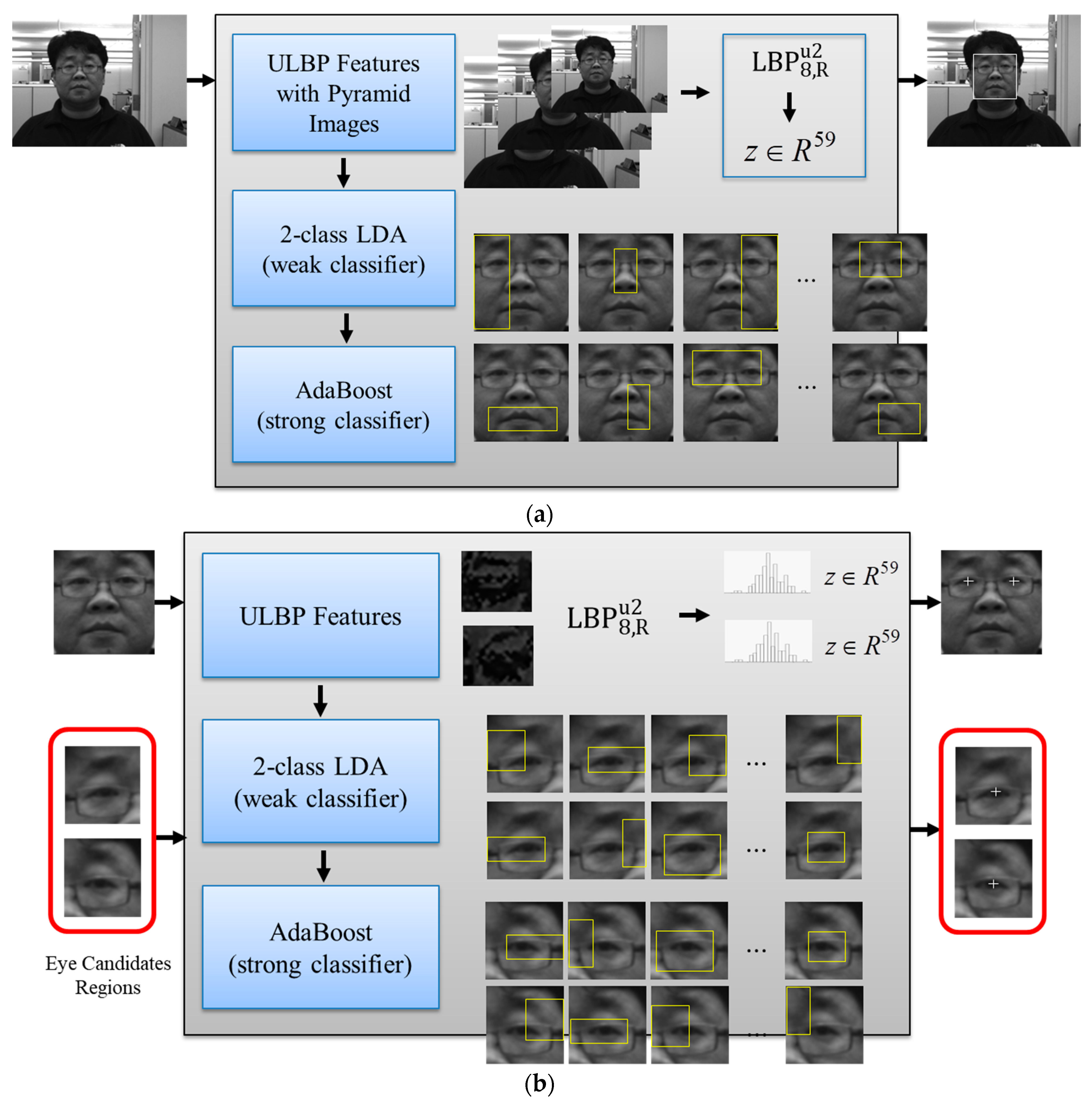

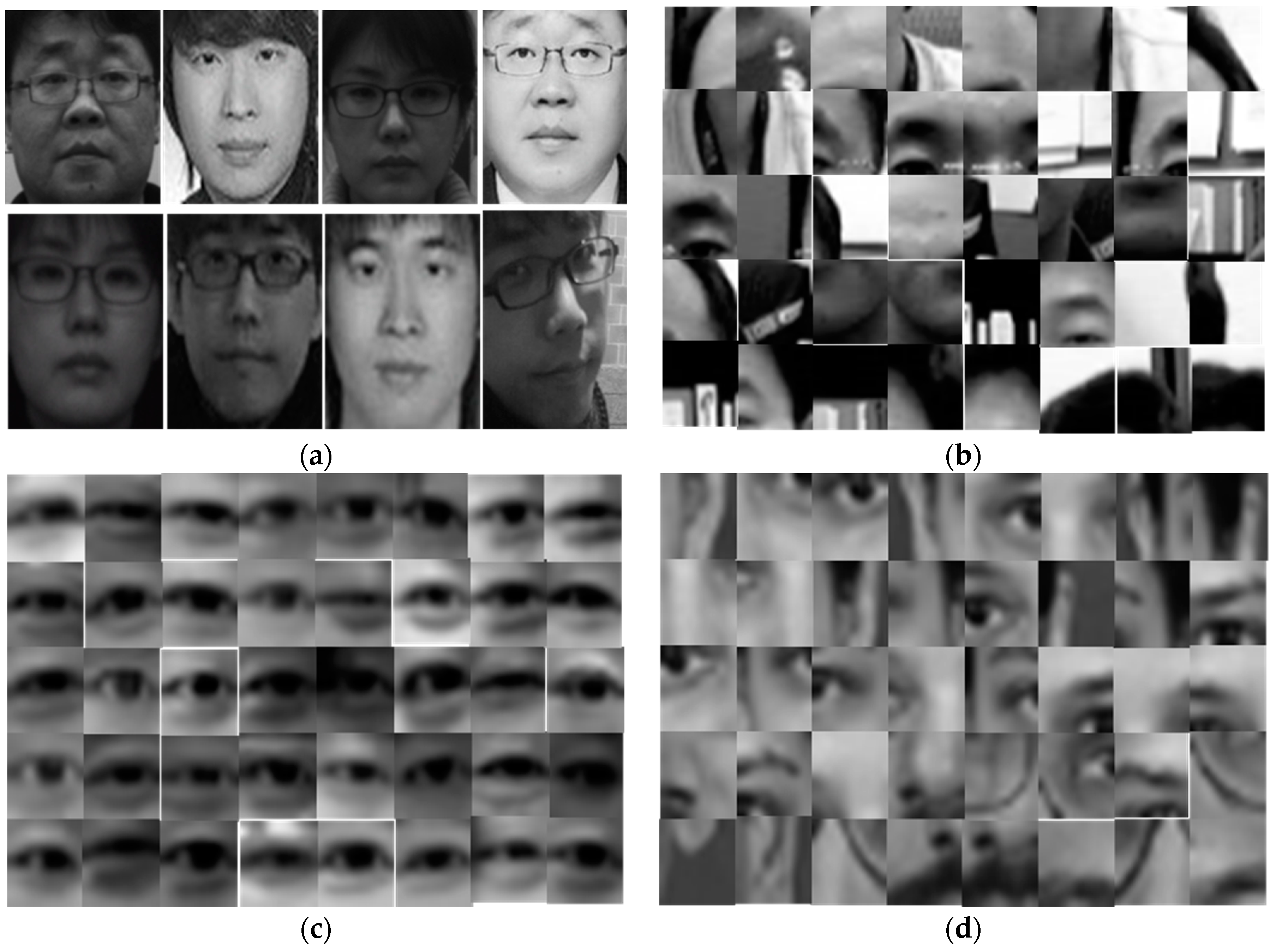

3.2. Face Detection and Eye Localization

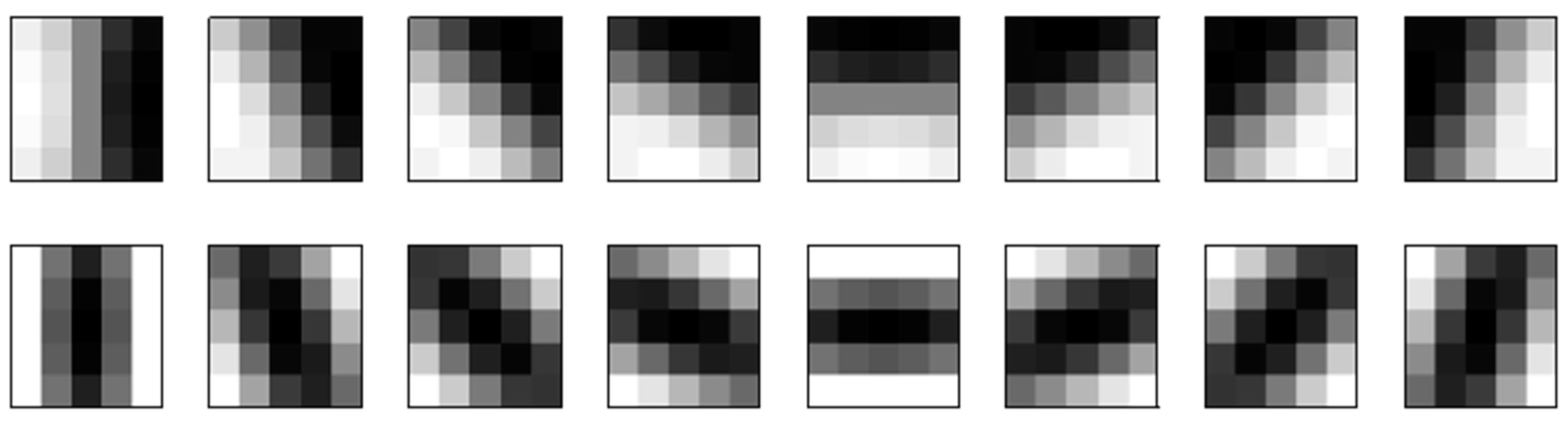

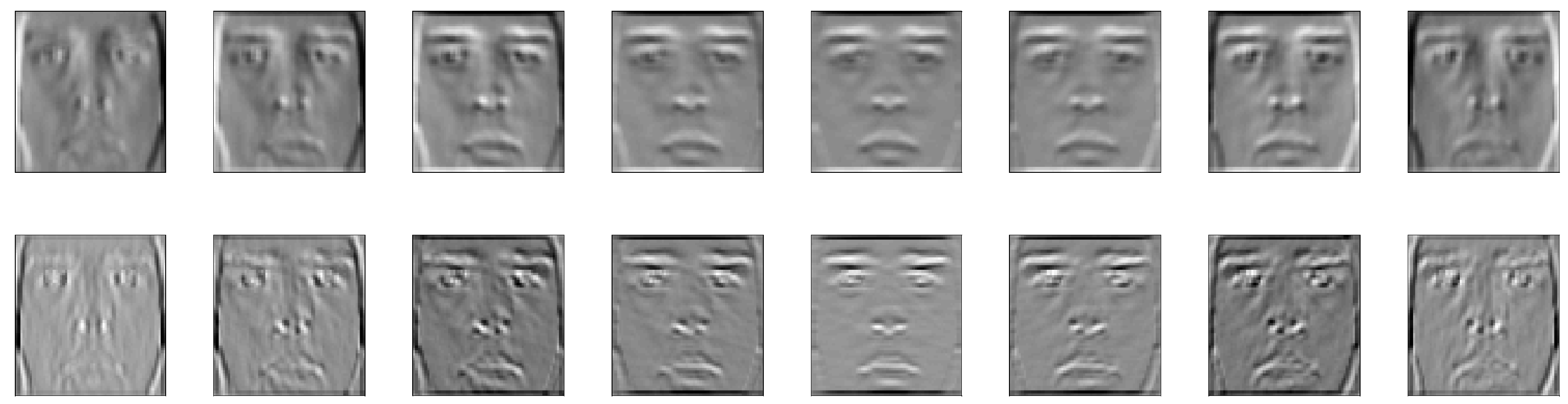

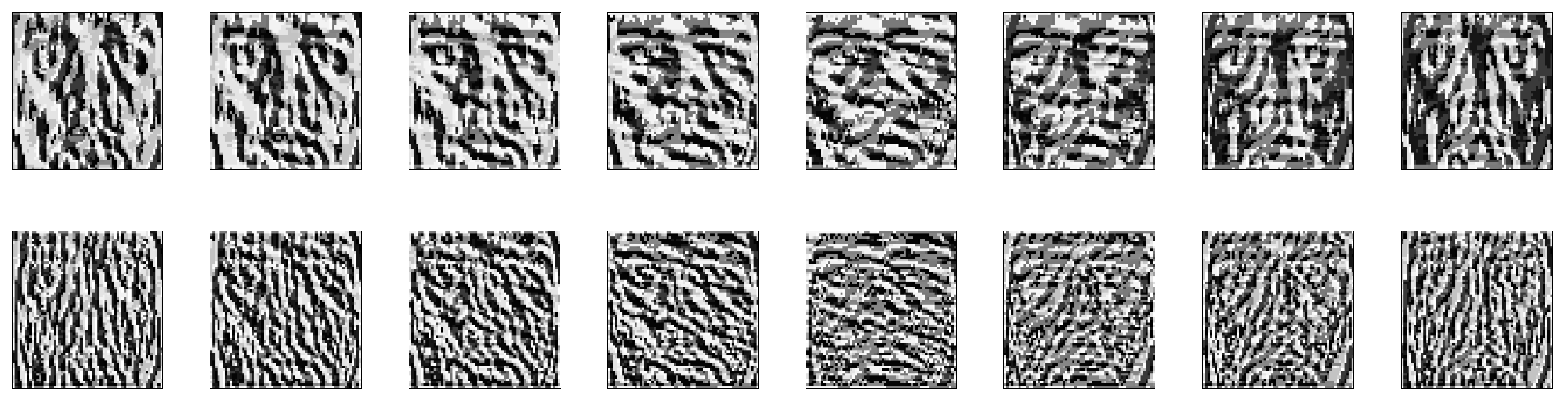

3.3. Face Recognition Based on a Gaussian Derivative Filter-LBP Histogram

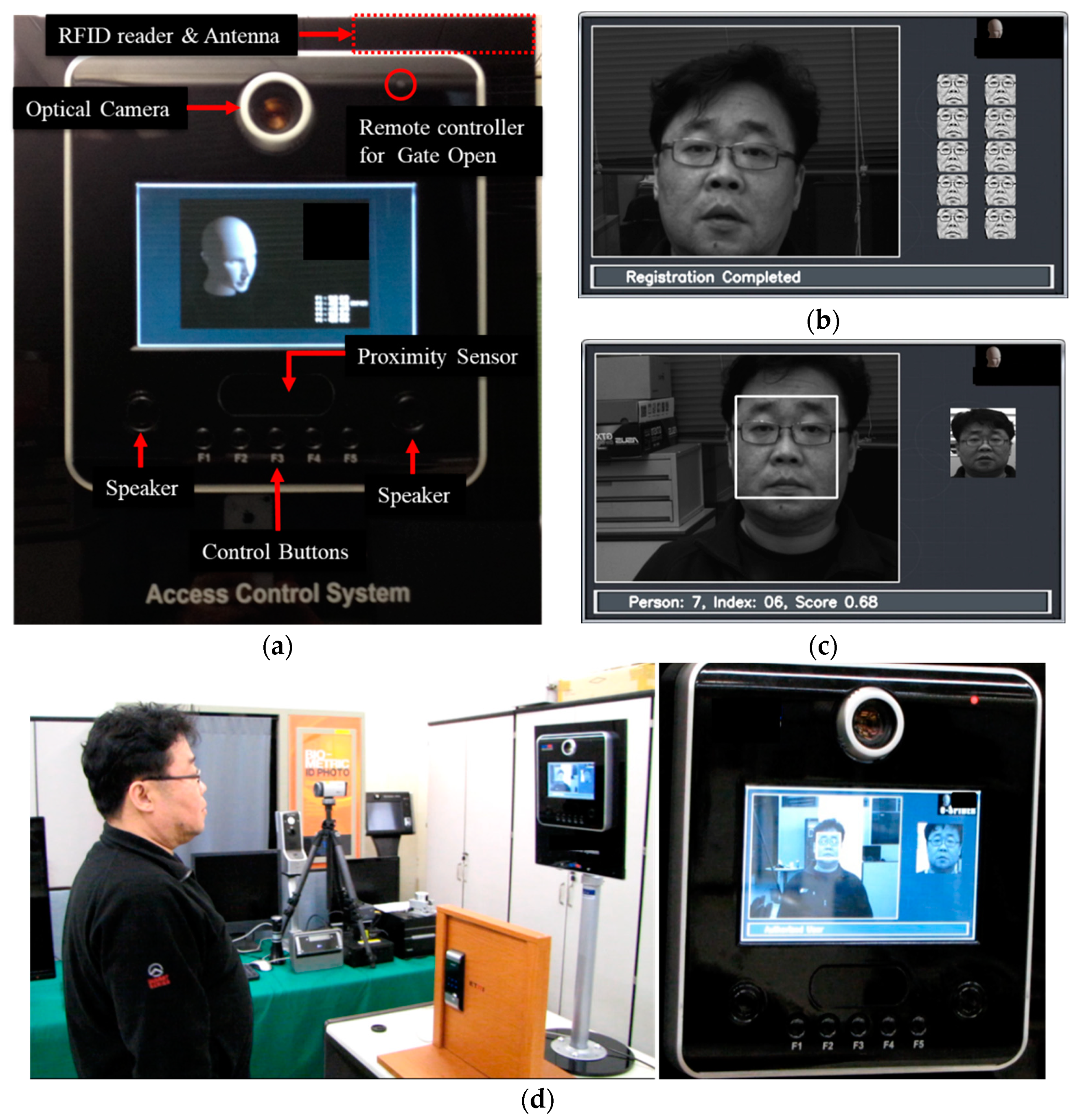

3.4. User-Friendly Access Control System

4. Experimental Results

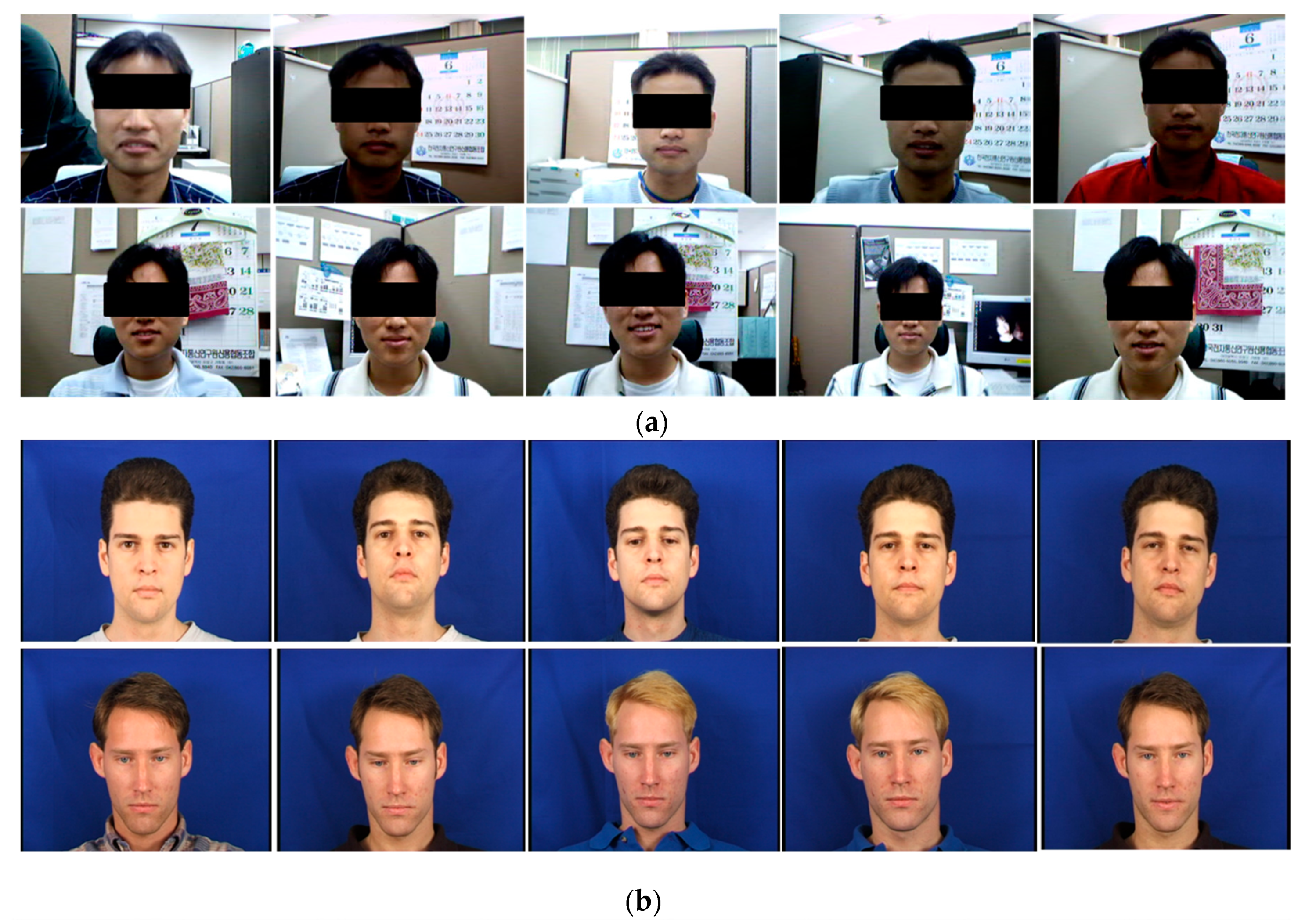

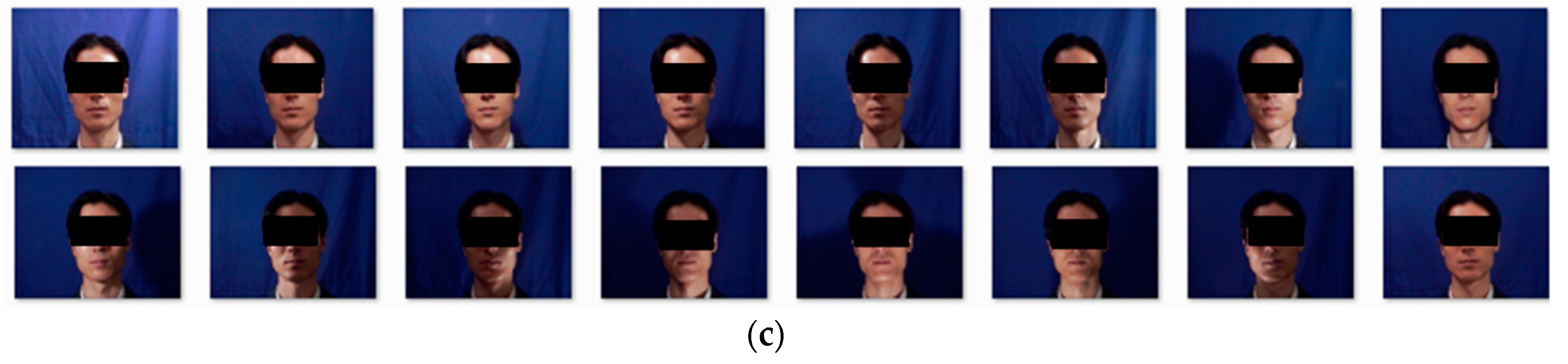

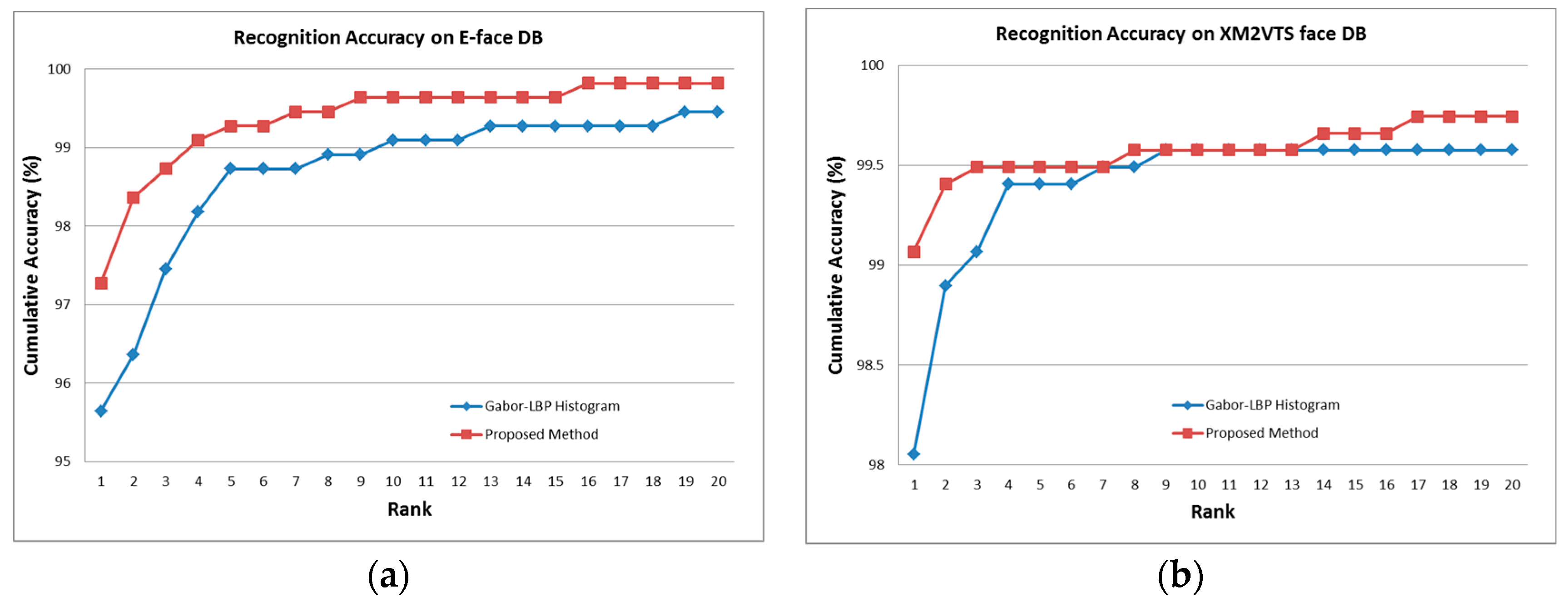

4.1. Experimental Setup

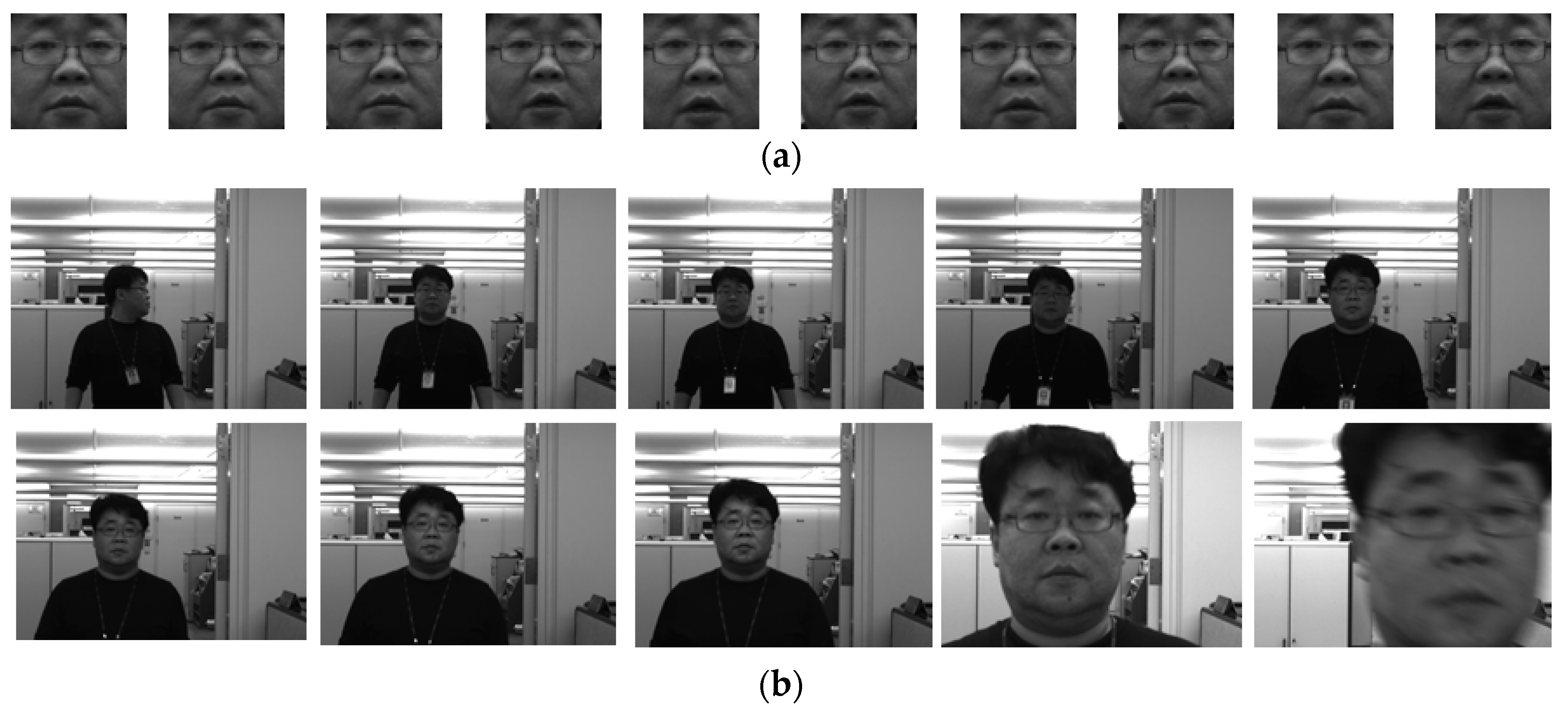

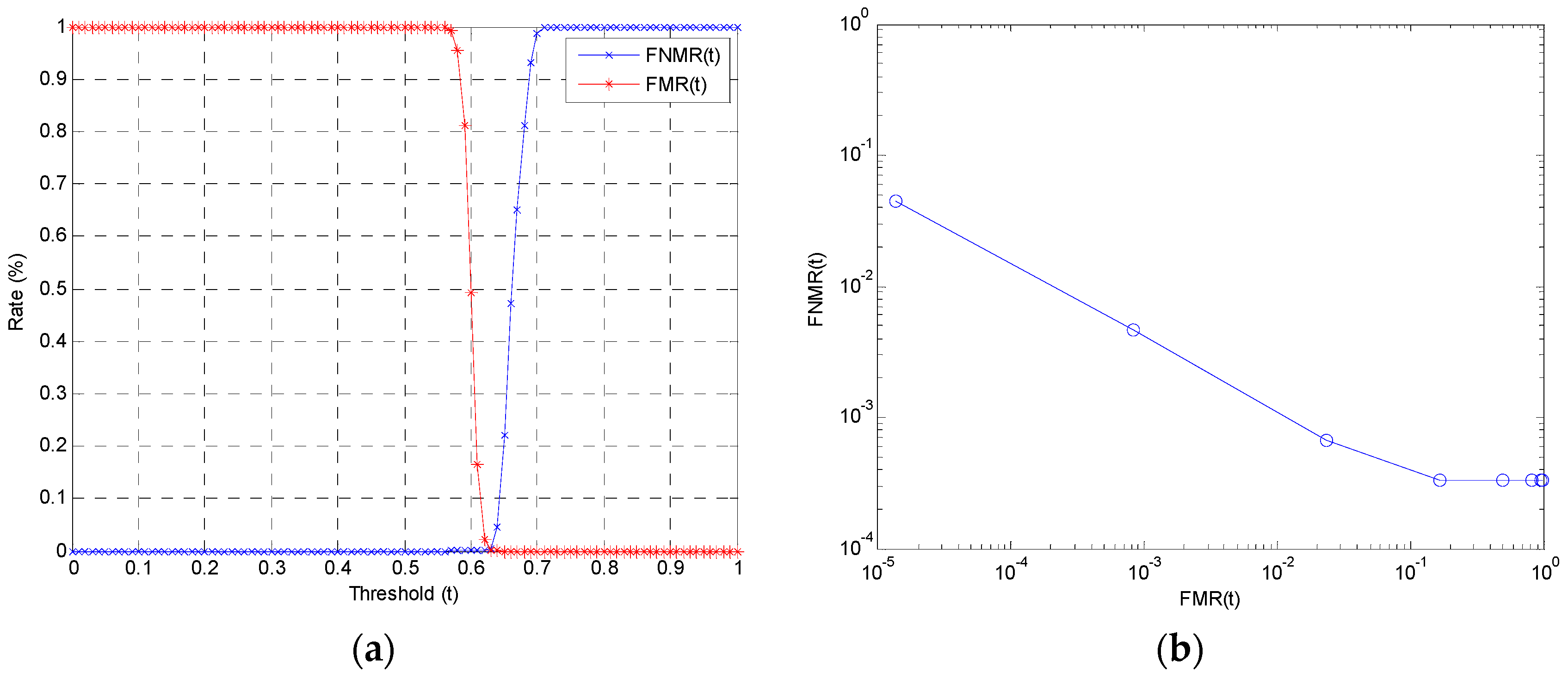

4.2. Experimental Evaluations

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Bryliuk, D.; Starovoitov, V. Access control by face recognition using neural networks and negative examples. In Proceedings of the 2nd International Conference on Artificial Intelligence, Crimea, Ukraine, 16–20 September 2002; pp. 428–436.

2. Lee, H.; Chung, Y.; Kim, J.; Park, D. Face image retrieval using sparse representation classifier with Gabor-LBP histogram. In Proceedings of the International Workshop on Information Security Applications (WISA 2010), Jeju Island, Korea, 24–26 August 2010; pp. 273–280.

3. Salvador, E.; Foresti, G.L. Interactive reception desk with face recognition-based access control. In Proceedings of the 2007 First ACM/IEEE International Conference on Distributed Smart Cameras, Vienna, Austria, 25–28 September 2007; pp. 140–146.

4. Wang, X.; Du, X. Study on algorithm of access control system based on face recognition. In Proceedings of the 2009 ISECS International Colloquium on Computing, Communication, Control, and Management, Sanya, China, 8–9 August 2009; pp. 336–338.

5. Hadid, A.; Heikkilä, M.; Ahonen, T.; Pietikäinen, M. A novel Approach to Access Control based on Face Recognition. In Proceedings of the Workshop on Processing Sensory Information for Proactive Systems (PSIPS), Oulu, Finland, 14–15 June 2004; pp. 68–74.

6. Xiang, P. Research and implementation of access control system based on RFID and FNN-face recognition. In Proceedings of the ISDEA, Sanya, China, 6–7 January 2012; pp. 716–719.

7. Wu, D.L.; Ng, W.W.; Chan, P.P.; Ding, H.L.; Jing, B.Z.; Yeung, D.S. Access control by RFID and face recognition based on neural network. In Proceedings of the ICMLC, Qingdao, China, 11–14 July 2010; pp. 675–680.

8. Tistarelli, M.; Li, S.Z.; Chellappa, R. Handbook of Remote Biometrics for Surveillance and Security, 1st ed.; Springer: London, UK, 2009; pp. 155–168.

9. Zhou, S.; Xiao, S. 3D face recognition: a survey. Hum. Cent. Comput. Info. Sci. 2018, 8, 35.

10. Soltanpour, S.; Boufama, B.; Wu, Q.J. A survey of local feature methods for 3D face recognition. Pattern Recognit. 2017, 72, 391–406.

11. Vezzetti, E.; Marcolin, F. 3D Landmarking in Multiexpression Face Analysis: A Preliminary Study on Eyebrows and Mouth. Aesthetic Plast. Surg. 2014, 38, 796–811.

12. Vezzetti, E.; Calignano, F.; Moos, S. Computer-aided morphological analysis for maxillo-facial diagnostic: A preliminary study. J. Plast. Reconstr. Aes. Surg. 2010, 63, 218–226.

13. Tan, X.; Triggs, B. Enhanced local texture feature sets for face recognition under difficult lighting conditions. In Proceedings of the International Workshop on Analysis and Modeling of Faces and Gestures, Rio de Janeiro, Brazil, 20 October 2007; pp. 168–182.

14. Wang, S.; Deng, H. Face recognition using principal component analysis-fuzzy linear discriminant analysis and dynamic fuzzy neural network. In Proceedings of the 2011 International Conference on Future Wireless Networks and Information Systems, Macao, China, 30 November–1 December 2011; pp. 577–586.

15. Serrano, Á.; de Diego, I.M.; Conde, C.; Cabello, E. Recent advances in face biometrics with Gabor wavelets: a review. Pattern Recognit. Lett. 2010, 31, 372–381.

16. Lee, H.; Chung, Y.; Yoo, J.H.; Won, C. Face recognition based on sparse representation classifier with Gabor-edge components histogram. In Proceedings of the 2012 Eighth International Conference on Signal Image Technology and Internet Based Systems, Naples, Italy, 25–29 November 2012; pp. 105–109.

17. Huang, D.; Shan, C.; Ardabilian, M.; Wang, Y.; Chen, L. Local binary patterns and its application to facial image analysis: a survey. IEEE Trans. Syst. Man Cybern. Part C 2011, 41, 765–781.

18. Gao, T.; He, M. A novel face description by local multi-channel Gabor histogram sequence binary pattern. In Proceedings of the 2008 International Conference on Audio, Language and Image Processing, Shanghai, China, 7–9 July 2008; pp. 1240–1244.

19. Zhang, W.; Shan, S.; Gao, W.; Chen, X.; Zhang, H. Local Gabor binary pattern histogram sequence (LGBPHS): a novel non-statistical model for face representation and recognition. In Proceedings of the Tenth IEEE International Conference on Computer Vision (ICCV’05), Beijing, China, 17–21 October 2005; pp. 786–791.

20. Lee, Y.; Lee, H.; Chung, Y. Face identification with lazy learning. In Proceedings of the FCV, Kawasaki, Japan, 2–4 February 2012.

21. Xie, S.; Shan, S.; Chen, X.; Chen, J. Fusing local patterns of Gabor magnitude and phase for face recognition. IEEE Trans. Image Process. 2010, 19, 1349–1361.

22. Yoo, J.H.; Park, S.H.; Lee, Y.J. Real-Time Age and Gender Estimation from Face Images. In Proceedings of the 1st International Conference on Machine Learning and Data Engineering (iCMLDE2017), Sydney, Australia, 20–22 November 2017; pp. 15–20.

23. Zuo, F.; de With, P.H.N. Real-time Embedded Face Recognition for Smart Home. IEEE Trans. Consum. Electron. 2005, 51, 183–190.

24. Kremic, E.; Subasi, A.; Hajdarevic, K. Face Recognition Implementation for Client-Server Mobile Application using PCA. In Proceedings of the ITI 2012 34th International Conference on Information Technology Interfaces, Cavtat, Croatia, 25–28 June 2012; pp. 435–440.

25. Saraf, T.; Shukla, K.; Balkhande, H.; Deshmukh, A. Automated Door Access Control System Using Face Recognition. IRJET 2018, 5, 3036–3040.

26. Boka, A.; Morris, B. Person Recognition for Access Logging. In Proceedings of the 2019 IEEE 9th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 7–9 January 2019.

27. Bakshi, N.; Prabhu, V. Recognition System for Access Control using Principal Component Analysis. In Proceedings of the 2017 International Conference on Intelligent Communication and Computational Techniques (ICCT), Jaipur, India, 22–23 December 2017.

28. Sagar, D.; Narasimha, M.K. Development and Simulation Analysis of a Robust Face Recognition based Smart Locking System. In Innovations in Electronics and Communication Engineering; Springer: Singapore, 2019; pp. 3–14.

29. Sajjad, M.; Nasir, M.; Muhammad, K.; Khan, S.; Jan, Z.; Sangaiah, A.K.; Elhoseny, M.; Baik, S.W. Pi Assisted Face Recognition Framework for Enhanced Law-enforcement Services in Smart Cities. Future Gener. Comput. Syst. 2017.

30. Heinly, J.; Dunn, E.; Frahm, J.-M. Comparative Evaluation of Binary Features. In Proceedings of the 12th European Conference on Computer Vision (ECCV2012), Firenze, Italy, 7–13 October 2012.

31. Park, S.; Yoo, J. Robust Eye Localization using Correlation Filter. In Proceedings of the IIISC & ICCCS, Chiang Mai, Thailand, 20–23 December 2012; pp. 137–140.

32. An, K.H.; Park, S.H.; Chung, Y.S.; Moon, K.Y.; Chung, M.J. Learning discriminative multi-scale and multi-position LBP features for face detection based on Ada-LDA. In Proceedings of the 2009 IEEE International Conference on Robotics and Biomimetics (ROBIO), Guilin, China, 19–23 December 2009; pp. 1117–1122.

33. Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution Gray-scale and Rotation Invariant Texture Classification with Local Binary Patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

34. Shan, C.; Gritti, T. Learning Discriminative LBP-histogram Bins for Facial Expression Recognition. In Proceedings of the British Machine Vision Conference, Leeds, UK, 1–4 September 2008.

35. Zhang, K.; Zhang, Z.; Li, Z.; Qiao, Y. Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks. IEEE Signal Process. Lett. 2016, 23, 1499–1503.

36. Schroff, F.; Kalenichenko, D.; Philbin, J. FaceNet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

37. Chen, S.; Liu, Y.; Gao, X.; Han, Z. MobileFaceNets: Efficient CNNs for Accurate Real-Time Face Verification on Mobile Devices. In Proceedings of the Chinese Conference on Biometric Recognition (CCBR 2018), Urumqi, China, 11–12 August 2018.

38. Paris, S.; Glotin, H.; Zhao, Z.Q. Real-time face detection using integral histogram of multi-scale local binary patterns. In Proceedings of the ICIC, Zhengzhou, China, 11–14 August 2011; pp. 276–281.

39. Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the CVPR, Kauai, HI, USA, 8–14 December 2001; pp. 511–518.

40. Wang, H.; Li, S.Z.; Wang, Y. Face recognition under varying lighting conditions using self-quotient image. In Proceedings of the Sixth IEEE International Conference on Automatic Face and Gesture Recognition (FGR), Seoul, Korea, 17–19 May 2004; pp. 819–824.

41. Wahl, E.; Hillenbrand, U.; Hirzinger, G. Surflet-pair-relation histograms: a statistical 3D-shape representation for rapid classification. In Proceedings of the Fourth International Conference on 3-D Digital Imaging and Modeling, Banff, AB, Canada, 6–10 October 2003; pp. 474–481.

42. Kim, D.J.; Chung, K.W.; Hong, K.S. Person authentication using face, teeth, and voice modalities for mobile device security. IEEE Trans. Consum. Electron. 2010, 56, 2678–2685.

43. Grother, P.J.; Phillips, P.J.; Quinn, G.W. Report on the Evaluation of 2D Still-Image Face Recognition Algorithms; NIST Interagency/Internal Report (NISTIR)-7709; NIST: Gaithersburg, MD, USA, 2011.

44. Grother, P.; Quinn, G.; Ngan, M. Face Recognition Vendor Test (FRVT) 2012; NIST: Gaithersburg, MD, USA, 2012.

45. Kim, J.H.; Kim, B.G.; Roy, P.P.; Jeong, D.M. Efficient Facial Expression Recognition Algorithm Based on Hierarchical Deep Neural Network Structure. IEEE Access 2019, 7, 41273–41285.

46. Kim, J.H.; Hong, G.S.; Kim, B.G.; Dogra, D.P. deepGesture: Deep learning-based gesture recognition scheme using motion sensors. Displays 2018, 55, 38–45.

47. Messer, K.; Matas, J.; Kittler, J.; Luettin, J.; Maitre, G. XM2VTSDB: the extended M2VTS database. In Proceedings of the Second International Conference on Audio and Video-Based Biometric Person Authentication (AVBPA), Washington, DC, USA, 22–23 March 1999; pp. 72–77.

48. Lee, H.S.; Park, S.; Kang, B.N.; Shin, J.; Lee, J.Y.; Je, H.; Jun, B.; Kim, D. The POSTECH face database (PF07) and performance evaluation. In Proceedings of the 2008 8th IEEE International Conference on Automatic Face & Gesture Recognition, Amsterdam, The Netherlands, 17–19 September 2008; pp. 1–6.

49. Lee, S.; Woo, H.; Shin, Y. Study on Personal Information Leak Detection Based on Machine Learning. Adv. Sci. Lett. 2017, 23, 12818–12821.

50. Kim, S. Apply Blockchain to Overcome Wi-Fi Vulnerabilities. J. Multimed. Inf. Syst. 2019, 6, 139–146.

51. In, K.; Kim, S.; Kim, U. DSPI: An Efficient Index for Processing Range Queries on Wireless Broadcast Stream. In Mobile, Ubiquitous, and Intelligent Computing; Lecture Notes in Electrical Engineering; Springer: Berlin/Heidelberg, Germany, 2014; Volume 274, pp. 39–46.

52. Seo, Y.; Huh, J. Automatic emotion-based music classification for supporting intelligent IoT applications. Electronics 2019, 8, 164.

| Face Database (DB) | Gabor-LBP Histogram | Proposed Method |
|---|---|---|
| E-face Database | 95.64% | 97.27% |
| XM2VTS Face Database | 98.05% | 99.06% |

| Performance Criterion | TAR | FRR | FAR | EER |
|---|---|---|---|---|
| Performance | 99.53% | 0.47% | 0.08% | 0.28% |

| Performance Criterion | TAR | FRR | FAR | EER |
|---|---|---|---|---|
| Performance | 95.00% | 5.00% | 0.00% | 2.33% |
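In each of the two performance tables above, TAR and FRR sum to 100%, which matches the usual definition FRR = 1 − TAR at a fixed decision threshold. The following is a minimal sketch of how these four criteria are commonly computed from similarity scores. It assumes the standard definitions, not necessarily the exact protocol used by the authors. The function names and the score lists are hypothetical, chosen only for illustration.

```python
import numpy as np

def verification_rates(genuine, impostor, threshold):
    """Return (TAR, FRR, FAR) as fractions for similarity scores at one threshold."""
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    tar = float(np.mean(genuine >= threshold))   # genuine pairs accepted
    frr = 1.0 - tar                              # genuine pairs rejected
    far = float(np.mean(impostor >= threshold))  # impostor pairs accepted
    return tar, frr, far

def equal_error_rate(genuine, impostor):
    """Approximate the EER by trying every observed score as the threshold."""
    thresholds = np.unique(np.concatenate([genuine, impostor]))
    # Pick the threshold where FRR and FAR are closest to each other.
    best = min(
        (verification_rates(genuine, impostor, t) for t in thresholds),
        key=lambda rates: abs(rates[1] - rates[2]),
    )
    return (best[1] + best[2]) / 2.0

# Hypothetical scores, for illustration only (not data from the paper).
genuine_scores = [0.91, 0.85, 0.78, 0.66, 0.95]
impostor_scores = [0.30, 0.42, 0.55, 0.70, 0.25]

tar, frr, far = verification_rates(genuine_scores, impostor_scores, threshold=0.6)
eer = equal_error_rate(genuine_scores, impostor_scores)
```

Raising the threshold lowers FAR at the cost of a higher FRR. The EER is the operating point where the two error rates meet, which is why it is reported as a single threshold-independent summary.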

| Process | Average Execution Time |
|---|---|
| Face and Eyes Detection | 102 ms |
| Face Verification | 53 ms |
| Total Execution Time | 190 ms |

| Criteria | Saraf et al. [25] | Boka et al. [26] | Bakshi et al. [27] | Sagar et al. [28] | Sajjad et al. [29] | Proposed Method |
|---|---|---|---|---|---|---|
| Embedded H/W | Raspberry Pi | Raspberry Pi with the Intel Movidius Neural Compute Stick | Arduino Uno | Renesas board (RL78 G13) | Raspberry Pi 3 Model B, ARM Cortex-A53, 1.2 GHz processor, VideoCore IV GPU | Cortex dual core CPU, 2.50 DMIPS/MHz per core |
| Camera | Single camera | Two cameras | Single camera | Single camera | Raspberry Pi camera | Single camera |
| Face detection | Haar cascade method | Haar-feature based approach | N/A | PCA, LDA, histogram equalization | Viola-Jones method | AdaBoost with LBP |
| Facial feature | LBP histogram | FaceNet | Global feature with principal component analysis | PCA, LDA, histogram equalization | Bag of words with oriented FAST and rotated BRIEF | Gaussian derivative filter-LBP histogram |
| Face identification | N/A | k-NN | Euclidean distance | N/A | Support vector machine | Histogram intersection |
| Execution time | N/A | N/A | N/A | 10 s for authentication | 9 s for first 10 matches | 190 ms per image |
| Overall accuracy | N/A | 99.60% accuracy on LFW dataset | N/A | 90.00% accuracy on own dataset | 91.33% average accuracy on Face-95 dataset | 95.00% true accept rate on own video stream dataset |
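The comparison table lists histogram intersection as the matching measure for the proposed method. The following is a minimal sketch of that measure for two feature histograms, assuming both are first normalized to unit sum. The normalization step, the function name, and the example bin counts are assumptions made for illustration and are not taken from the paper.

```python
import numpy as np

def histogram_intersection(h1, h2):
    """Similarity in [0, 1]: sum of bin-wise minima of two unit-sum histograms."""
    h1 = np.asarray(h1, dtype=float)
    h2 = np.asarray(h2, dtype=float)
    if h1.shape != h2.shape:
        raise ValueError("histograms must have the same number of bins")
    # Normalize so that the score does not depend on the total bin count.
    h1 = h1 / h1.sum()
    h2 = h2 / h2.sum()
    return float(np.minimum(h1, h2).sum())

# Hypothetical 4-bin histograms, for illustration only.
probe = [1, 3, 0, 4]
gallery = [2, 2, 2, 2]
score = histogram_intersection(probe, gallery)
```

Under this normalization, identical histograms score 1 and histograms with no overlapping bins score 0, so a verification decision reduces to comparing the score with a threshold.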

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, H.; Park, S.-H.; Yoo, J.-H.; Jung, S.-H.; Huh, J.-H. Face Recognition at a Distance for a Stand-Alone Access Control System. Sensors 2020, 20, 785. https://doi.org/10.3390/s20030785
