HKF-SVR Optimized by Krill Herd Algorithm for Coaxial Bearings Performance Degradation Prediction

Abstract

1. Introduction

2. Theoretical Background

2.1. KH Algorithm

2.2. HKF–SVR

- (1)

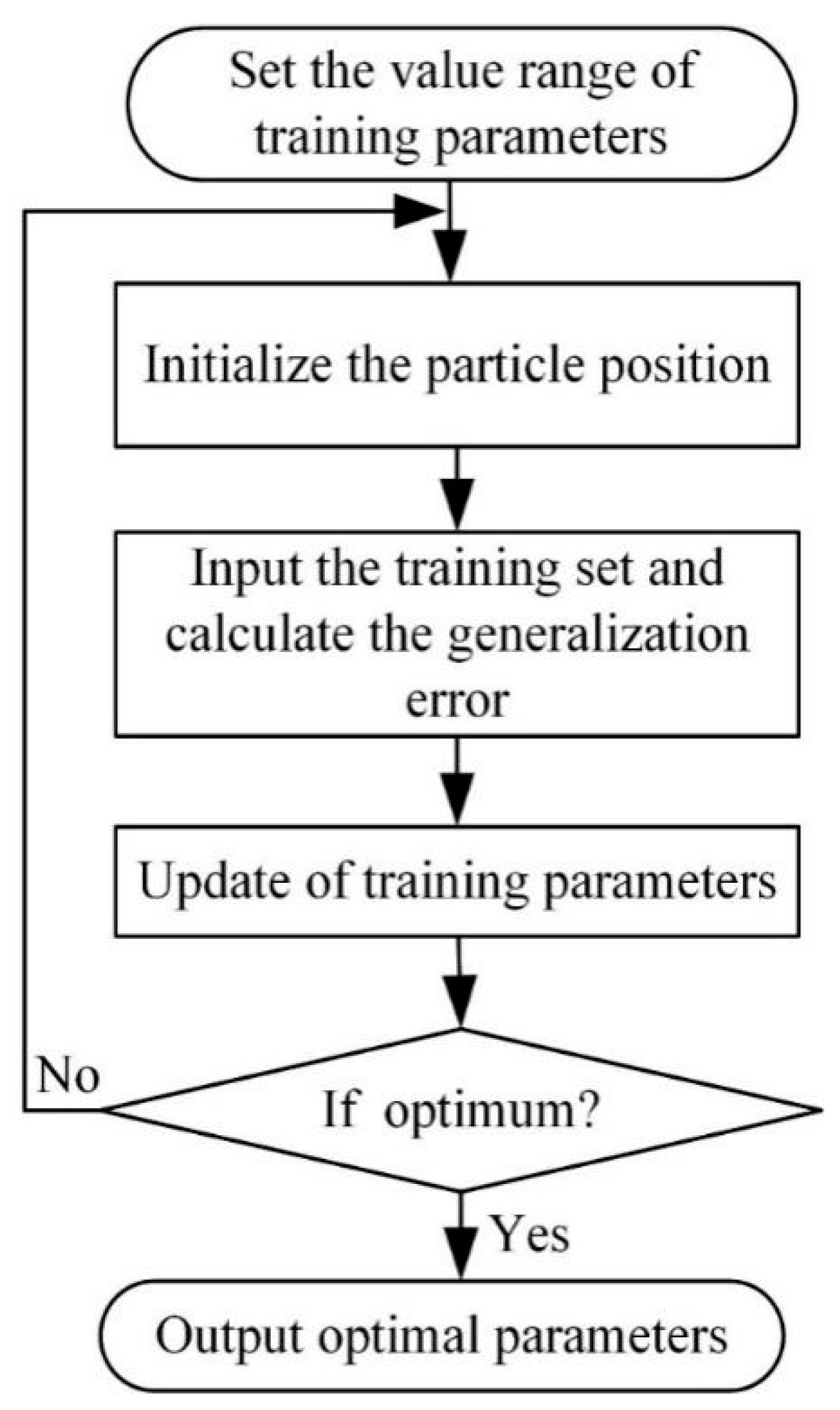

- The number of iterations t, the number of krill and the maximum number of cycles are initialized.

- (2)

- The value range of parameters is set and the set of parameters is randomly generated as the initial position.

- (3)

- Particle motion and generalization error calculation are conducted.

- (4)

- If the error at a given moment meets the requirements or reaches the number of iterations, Step 7 is performed.

- (5)

- The number of iterations t = t + 1.

- (6)

- The current particle position and velocity are updated on the basis of Equations (6) and (7), new training parameters are found and then Step 3 is repeated.

- (7)

- The optimal parameters are obtained.
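The seven steps above can be sketched as a generic population-based search loop. This is only an illustrative sketch: the function name `optimize_parameters`, the argument `error_fn`, and the default settings are hypothetical, and the motion rule in Step 6 is a simple placeholder (attraction toward the best position plus a random diffusion term), not the krill herd update of Equations (6) and (7), which are not reproduced in this text.

```python
import random


def optimize_parameters(error_fn, bounds, n_krill=20, max_iter=100,
                        tol=1e-6, seed=0):
    """Search the box `bounds` for parameters that minimise `error_fn`.

    Follows the control flow of Steps 1-7. The motion rule is a placeholder,
    NOT the krill herd update of Equations (6) and (7).
    """
    rng = random.Random(seed)
    # Step 1: initialise the iteration counter (population size and
    # iteration budget are passed in as arguments).
    t = 0
    # Step 2: random initial positions inside the parameter ranges.
    krill = [[rng.uniform(lo, hi) for lo, hi in bounds]
             for _ in range(n_krill)]
    # Step 3: evaluate the generalization error of every candidate.
    errors = [error_fn(p) for p in krill]
    i = min(range(n_krill), key=errors.__getitem__)
    best_pos, best_err = list(krill[i]), errors[i]
    # Step 4: stop once the error is small enough or the budget is used up.
    while best_err > tol and t < max_iter:
        t += 1  # Step 5
        # Step 6 (placeholder): move toward the best position and diffuse.
        for k in range(n_krill):
            moved = []
            for x, b, (lo, hi) in zip(krill[k], best_pos, bounds):
                step = rng.random() * (b - x)
                step += 0.05 * (hi - lo) * rng.uniform(-1.0, 1.0)
                moved.append(min(hi, max(lo, x + step)))  # stay in range
            krill[k] = moved
        # Back to Step 3: re-evaluate and remember the best candidate so far.
        errors = [error_fn(p) for p in krill]
        i = min(range(n_krill), key=errors.__getitem__)
        if errors[i] < best_err:
            best_pos, best_err = list(krill[i]), errors[i]
    # Step 7: return the best parameters found.
    return best_pos, best_err, t
```

In the setting of this paper, `error_fn` would train an HKF–SVR with the candidate parameters and return its validation error; here any callable that maps a parameter list to a number can be used.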

3. HKF–SVR Method for Bearing Performance Degradation Prediction

- (1) Multi-domain feature extraction. The performance degradation process of bearings is non-linear, so a single feature can rarely reflect it accurately. To reflect the bearing state comprehensively, eight time-domain features and eight frequency-domain features, F1–F16, shown in Table 2 and Table 3, are extracted from the mixed bearing vibration signal. F1–F8 stand for the mean, root mean square value, square root amplitude, absolute mean, skewness, waveform indicator, pulse indicator and margin index, respectively. F9–F12 stand for the mean frequency, standard deviation of frequency, center frequency and frequency RMS, and F13–F16 describe the degree of dispersion or concentration of the spectrum and the change of the dominant frequency band. Here $s_i$ is the spectrum for i = 1, 2, …, N (N is the number of spectrum lines) and $f_i$ is the frequency value of the i-th spectrum line. Assume that the sample of the healthy state is X and the current monitoring data sample is Y. Both samples are divided into $n_i$ segments $\{X_1, X_2, \ldots, X_{n_i}\}$ and $\{Y_1, Y_2, \ldots, Y_{n_i}\}$, and the 16 features of each segment are calculated as the original feature vectors $\{F_x\} = \{F_{x1,1}, F_{x1,2}, \ldots, F_{x1,n_i};\ F_{x2,1}, F_{x2,2}, \ldots, F_{x2,n_i};\ \ldots;\ F_{x16,1}, F_{x16,2}, \ldots, F_{x16,n_i}\}_{16 \times n_i}$ and $\{F_y\} = \{F_{y1,1}, F_{y1,2}, \ldots, F_{y1,n_i};\ F_{y2,1}, F_{y2,2}, \ldots, F_{y2,n_i};\ \ldots;\ F_{y16,1}, F_{y16,2}, \ldots, F_{y16,n_i}\}_{16 \times n_i}$.

- (2) Feature fusion using KJADE. In this step, kernel joint approximate diagonalization of eigen-matrices (KJADE) is used to extract, from the features $\{F_x\}$ and $\{F_y\}$ obtained in the previous step, latent sensitive source features that can accurately reflect the performance degradation of the monitored bearing. To facilitate visualization of the results, the dimension of the latent sensitive source feature vector is set to 3. After this step, the feature vectors therefore become $\{F_x\} = \{F_{x1,1}, F_{x1,2}, \ldots, F_{x1,n_i};\ F_{x2,1}, F_{x2,2}, \ldots, F_{x2,n_i};\ F_{x3,1}, F_{x3,2}, \ldots, F_{x3,n_i}\}_{3 \times n_i}$ and $\{F_y\} = \{F_{y1,1}, F_{y1,2}, \ldots, F_{y1,n_i};\ F_{y2,1}, F_{y2,2}, \ldots, F_{y2,n_i};\ F_{y3,1}, F_{y3,2}, \ldots, F_{y3,n_i}\}_{3 \times n_i}$.

- (3) Performance degradation index calculation. The integration evaluation factor SS between the $\{F_x\}$ and $\{F_y\}$ obtained in the previous step is calculated as the comprehensive performance degradation index. First, the between-class scatter matrix is calculated. Then, the inter-class scatter matrix is calculated, where C is the number of categories, $m_i$ is the feature mean in category i and m is the mean of the entire feature sample. Finally, the SS is calculated from these two matrices. After this step, the SS, which stands for the performance degradation index of the currently monitored data sample, is obtained.

- (4) Prediction model construction through HKF-SVR. In practical engineering applications, continuous monitoring over a period of time yields a continuous record of vibration data. One SS value for each monitoring moment can be obtained by using Steps 1–3, and the performance degradation prediction model can then be constructed by using HKF-SVR.

- (5) Performance degradation prediction using the constructed model. The performance degradation at the next moment is predicted with the model constructed in the previous step. Meanwhile, the model is updated in real time with the current and historical data.
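As an illustration of Step 1, the sketch below computes the eight time-domain features F1–F8 for each segment of a signal. Table 2 is not reproduced in this text, so the formulas used here are the commonly used textbook definitions and are an assumption; the function names `time_domain_features` and `segment_features` are hypothetical. The frequency-domain features F9–F16 are omitted.

```python
import numpy as np


def time_domain_features(x):
    """Return F1-F8 for one segment (common definitions, assumed here)."""
    x = np.asarray(x, dtype=float)
    abs_x = np.abs(x)
    mean = x.mean()                          # F1: mean
    rms = np.sqrt(np.mean(x ** 2))           # F2: root mean square value
    sra = np.mean(np.sqrt(abs_x)) ** 2       # F3: square root amplitude
    abs_mean = abs_x.mean()                  # F4: absolute mean
    # F5: skewness (undefined for a constant segment, where std is zero)
    skewness = np.mean((x - mean) ** 3) / x.std() ** 3
    peak = abs_x.max()
    waveform = rms / abs_mean                # F6: waveform indicator
    pulse = peak / abs_mean                  # F7: pulse indicator
    margin = peak / sra                      # F8: margin index
    return np.array([mean, rms, sra, abs_mean,
                     skewness, waveform, pulse, margin])


def segment_features(signal, n_seg):
    """Split `signal` into `n_seg` segments; return an 8 x n_seg matrix."""
    segments = np.array_split(np.asarray(signal, dtype=float), n_seg)
    return np.column_stack([time_domain_features(s) for s in segments])
```

Applying `segment_features` to the healthy sample X and to the current sample Y gives the time-domain rows of the two feature matrices; the remaining eight rows would come from the frequency-domain features.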

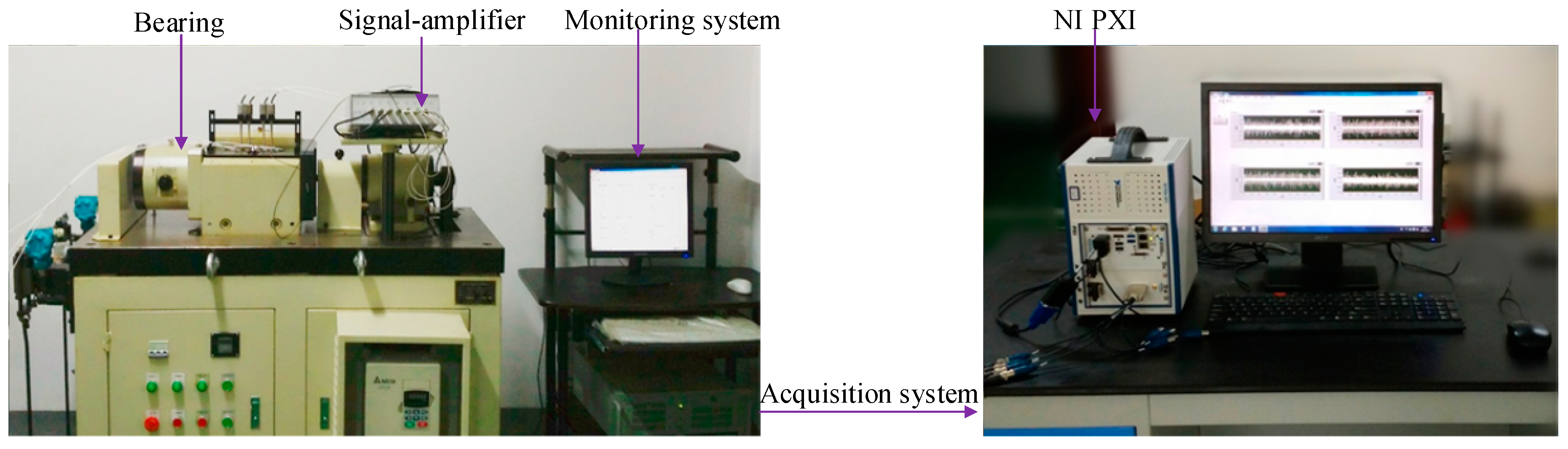
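The scatter-matrix equations of Step 3 are not reproduced in this text, so the following sketch rests on an assumption: it takes the two matrices to be the standard within-class and between-class scatter matrices of the two categories (healthy and current), and takes SS to be the ratio of their traces. The function name `scatter_index` is hypothetical, and the exact form of SS used by the authors may differ.

```python
import numpy as np


def scatter_index(fx, fy):
    """Separability of two feature sets, each of shape (d, n).

    ASSUMED form: SS = trace(S_b) / trace(S_w), with
      S_w = sum_i sum_{x in class i} (x - m_i)(x - m_i)^T
      S_b = sum_i n_i (m_i - m)(m_i - m)^T
    """
    classes = [np.asarray(fx, dtype=float), np.asarray(fy, dtype=float)]
    m = np.hstack(classes).mean(axis=1, keepdims=True)   # overall mean
    d = classes[0].shape[0]
    s_w = np.zeros((d, d))
    s_b = np.zeros((d, d))
    for f in classes:
        m_i = f.mean(axis=1, keepdims=True)              # class mean
        centered = f - m_i
        s_w += centered @ centered.T
        diff = m_i - m
        s_b += f.shape[1] * (diff @ diff.T)
    return np.trace(s_b) / np.trace(s_w)
```

Under this assumed definition, the index grows as the current features drift away from the healthy ones, which matches its role as a degradation index: identical class means give 0, and a larger shift between the class means gives a larger value.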

4. Case Studies

4.1. CASE I

4.2. CASE II

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Description | Notation |
|---|---|
| Polynomial kernel function parameter | g1 |
| Polynomial kernel function parameter | coef0 |
| Polynomial kernel function parameter | d |
| Gaussian kernel function parameter | g2 |
| SVR penalty coefficient | c |
| Kernel function hybrid coefficient | λ |
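To show how the parameters in this table could fit together, the sketch below evaluates a hybrid kernel as a weighted sum of a polynomial kernel and a Gaussian kernel. The kernel equations are not reproduced in this text, so the exact form is an assumption: the polynomial part is taken as (g1·xᵀy + coef0)^d, the Gaussian part as exp(−g2·‖x − y‖²), and λ is assumed to weight the polynomial part with 1 − λ on the Gaussian part. The function name `hybrid_kernel` is hypothetical.

```python
import numpy as np


def hybrid_kernel(a, b, lam, g1, coef0, d, g2):
    """Gram matrix of an ASSUMED hybrid kernel between rows of a and b.

    K = lam * (g1 * <x, y> + coef0) ** d
        + (1 - lam) * exp(-g2 * ||x - y|| ** 2)
    """
    a = np.atleast_2d(np.asarray(a, dtype=float))
    b = np.atleast_2d(np.asarray(b, dtype=float))
    inner = a @ b.T
    poly = (g1 * inner + coef0) ** d
    # Squared Euclidean distances; clip tiny negatives caused by round-off.
    sq_dist = (a ** 2).sum(axis=1)[:, None] + (b ** 2).sum(axis=1)[None, :]
    sq_dist = np.maximum(sq_dist - 2.0 * inner, 0.0)
    rbf = np.exp(-g2 * sq_dist)
    return lam * poly + (1.0 - lam) * rbf
```

The penalty coefficient c does not appear in the kernel; it belongs to the SVR itself. One way to use such a function is to fix the kernel parameters with `functools.partial` and pass the resulting callable as the `kernel` argument of scikit-learn's `SVR`, which accepts a callable that returns the Gram matrix.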

| Method | Bearing 1 | Bearing 4 | Bearing 3 |
|---|---|---|---|
| SVR | 0.104 | 0.082 | 0.062 |
| KH–SVR | 0.079 | 0.069 | 0.047 |
| HKF–SVR | 0.026 | 0.027 | 0.022 |

| Method | Bearing 1 | Bearing 4 | Bearing 3 |
|---|---|---|---|
| BPNN | 0.051 | 0.04 | 0.047 |
| ELM | 0.042 | 0.055 | 0.05 |
| HKF-SVR | 0.026 | 0.027 | 0.022 |

| Method | Bearing 1 | Bearing 4 | Bearing 3 |
|---|---|---|---|
| GA-HKF-SVR | 0.078 | 0.052 | 0.054 |
| KH-HKF-SVR | 0.026 | 0.027 | 0.022 |

| Metric | SVR | KH–SVR | HKF–SVR |
|---|---|---|---|
| RMSE | 0.225 | 0.077 | 0.035 |

| Metric | BPNN | ELM | HKF-SVR |
|---|---|---|---|
| RMSE | 0.067 | 0.065 | 0.035 |

| Metric | GA-HKF-SVR | KH-HKF-SVR |
|---|---|---|
| RMSE | 0.163 | 0.035 |
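The tables above compare the methods by root mean square error (RMSE). For reference, a minimal sketch of the standard RMSE between a measured and a predicted degradation series is given below; the function name `rmse` is hypothetical, and the numbers in the usage comment are made up for illustration and are not taken from the experiments.

```python
import math


def rmse(actual, predicted):
    """Root mean square error between two equal-length sequences."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


# Illustrative values only: a prediction that is off by 1 at every point.
# rmse([0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 1.0, 1.0]) gives 1.0
```

A lower RMSE means that the predicted degradation index follows the measured one more closely.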

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, F.; Li, L.; Liu, Y.; Cao, Z.; Yang, H.; Lu, S. HKF-SVR Optimized by Krill Herd Algorithm for Coaxial Bearings Performance Degradation Prediction. Sensors 2020, 20, 660. https://doi.org/10.3390/s20030660
