Robust Building Extraction for High Spatial Resolution Remote Sensing Images with Self-Attention Network

Abstract

1. Introduction and Related Work

2. Methods

2.1. Motivation

2.2. PISANet

2.2.1. Backbone Network

2.2.2. Modifications Based on Backbone

2.2.3. Self-Attention

2.2.4. Pyramid Self-Attention

2.3. Preprocessing

2.4. Training Strategy

2.5. Advantages and Disadvantages

3. Dataset and Evaluation Indicators

3.1. Dataset

3.2. Evaluation Indicators

4. Experiment and Discussion

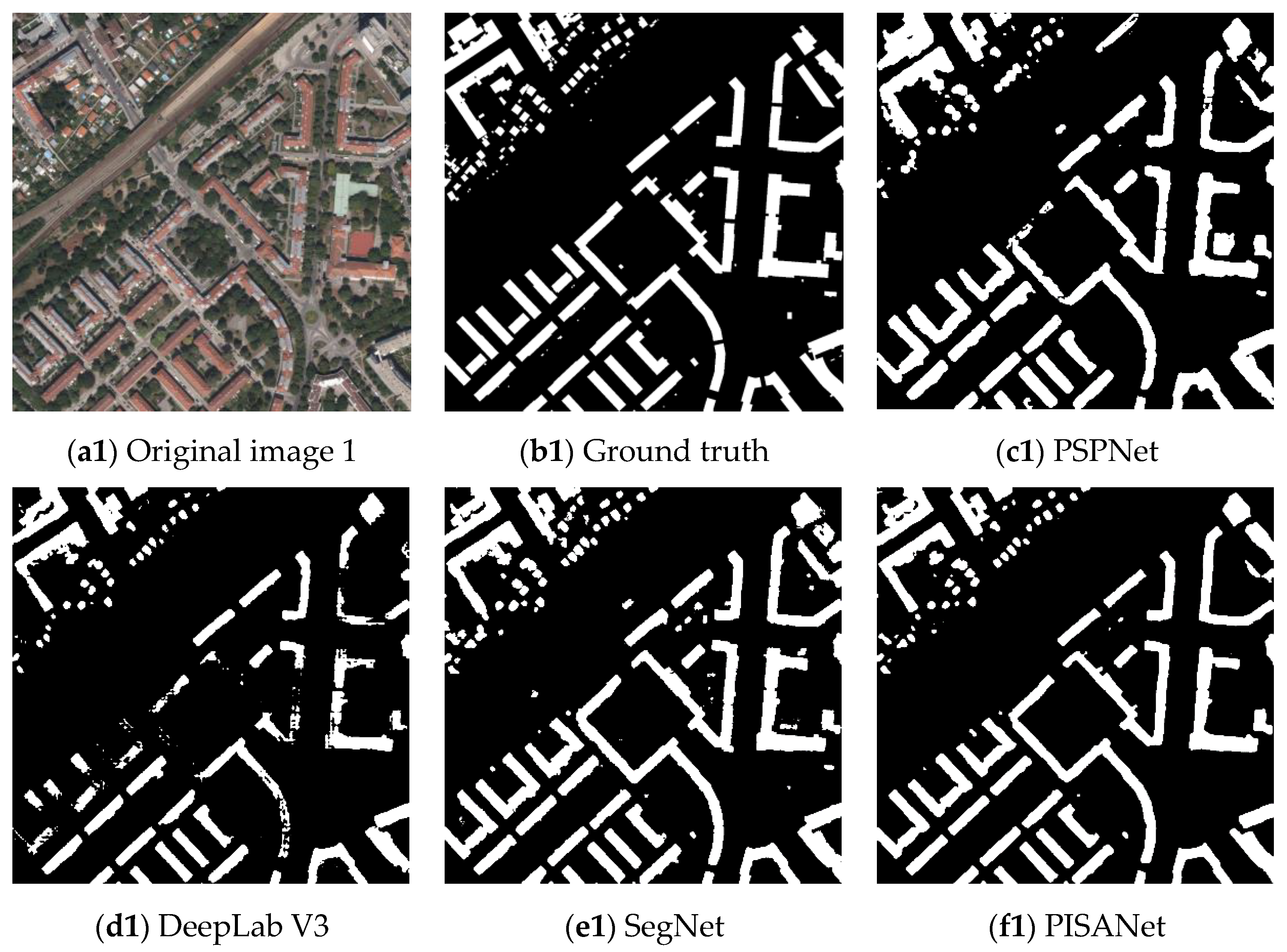

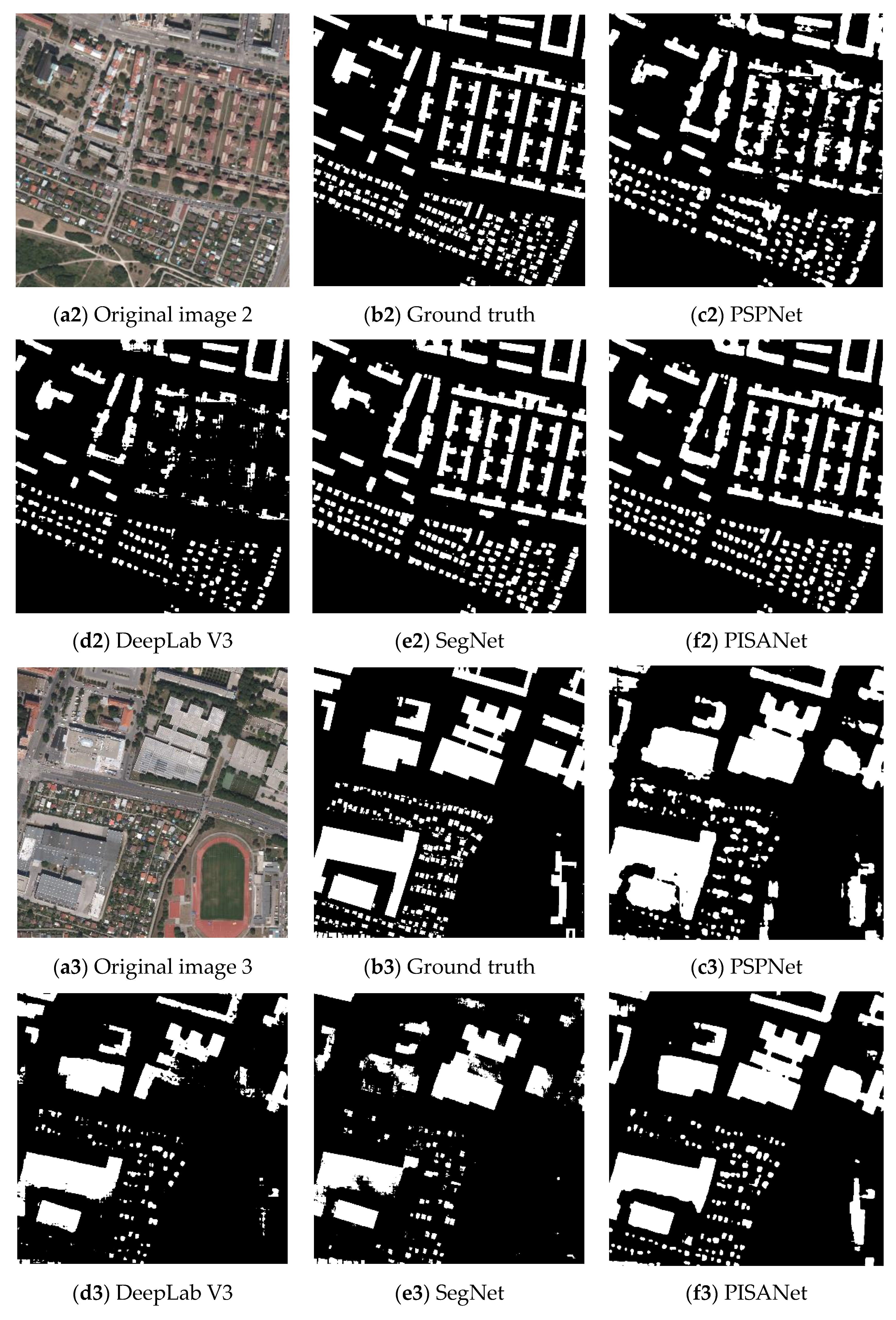

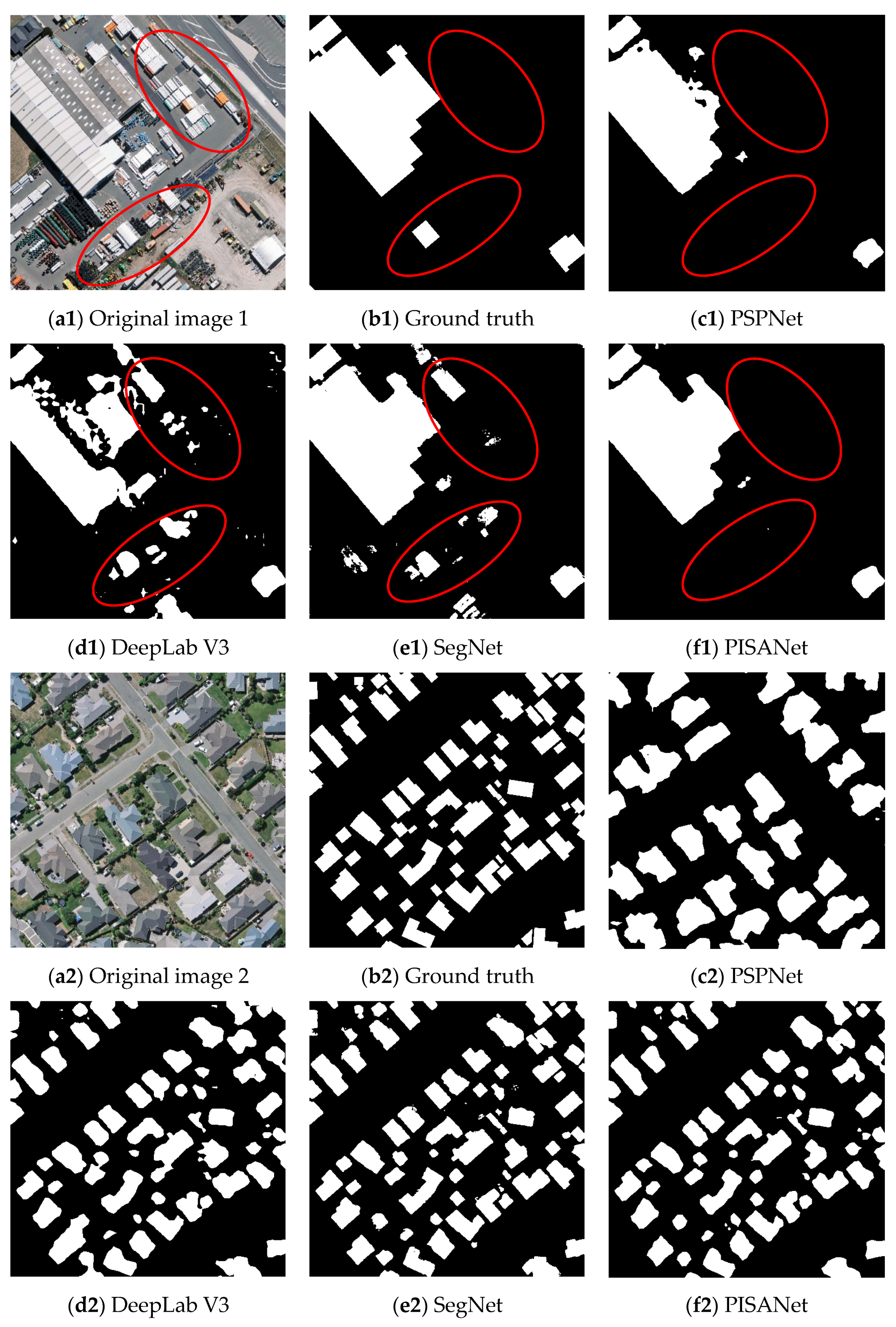

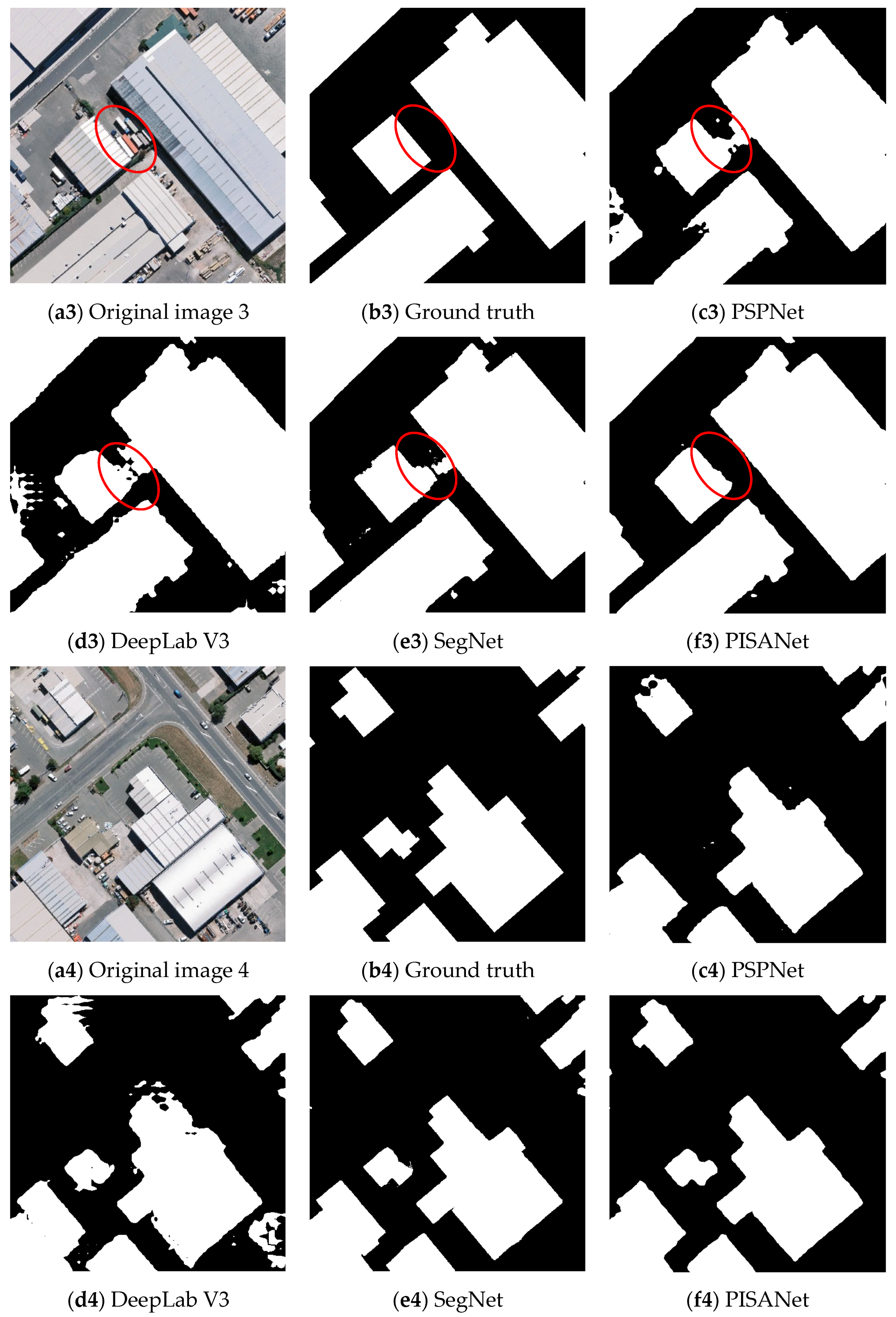

4.1. Inria Aerial Image Labeling Dataset Experiment

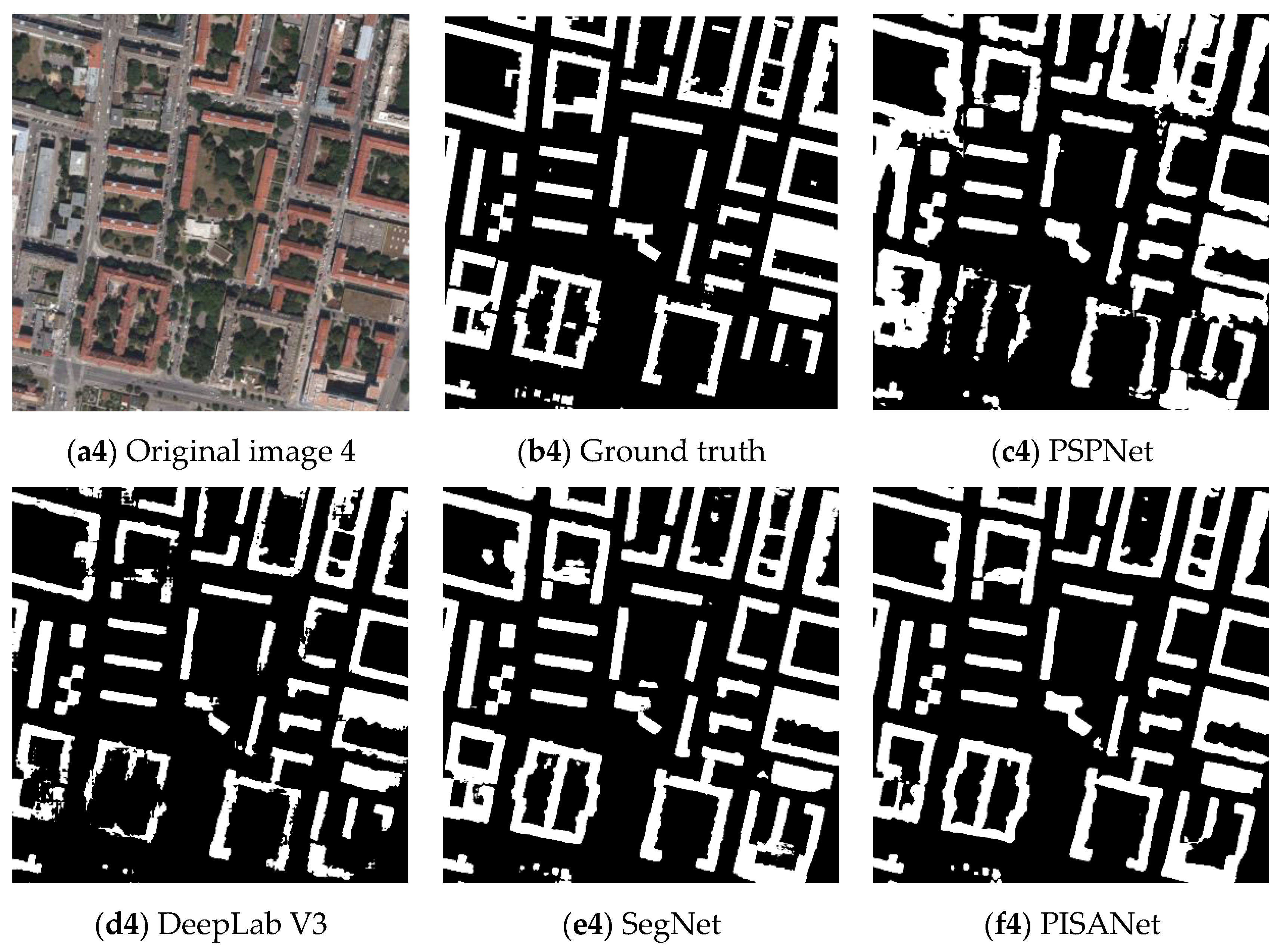

4.2. WHU Building Dataset Experiment

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Dataset | Resolution | Image Size (pixels) | Spectral Bands | Image Count | File Size | Cities | Area |
|---|---|---|---|---|---|---|---|
| Inria Aerial Image Labeling Dataset | 0.3 m | 5000 × 5000 | Red/Green/Blue | 360 | 12.7 GB | Austin, Bellingham, Bloomington, Chicago, Innsbruck, Kitsap, San Francisco, Western and Eastern Tyrol, Vienna | 810 km² |
| WHU Building Dataset | 0.075–0.3 m | 512 × 512 | Red/Green/Blue | 8188 | 6.1 GB | Christchurch, New Zealand | 450 km² |

| Model | OA (%) | IoU (%) | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|---|---|
| PSPNet | 90.11 | 61.47 | 76.73 | 77.72 | 75.85 |
| DeepLab V3 | 91.18 | 60.30 | 92.37 | 63.60 | 74.84 |
| SegNet | 94.24 | 76.71 | 85.04 | 88.71 | 86.80 |
| PISANet | 94.50 | 77.45 | 85.92 | 88.68 | 87.27 |

| Model | OA (%) | IoU (%) | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|---|---|
| PSPNet | 92.59 | 71.75 | 91.20 | 76.80 | 81.16 |
| DeepLab V3 | 92.21 | 79.19 | 84.46 | 92.48 | 88.16 |
| SegNet | 95.67 | 87.20 | 93.29 | 93.05 | 93.05 |
| PISANet | 96.15 | 87.97 | 94.20 | 92.94 | 93.55 |
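
The five indicators reported in the tables above (OA, IoU, precision, recall, F1) are commonly computed from the pixel-wise confusion counts of a binary building mask. The following is a minimal sketch under that assumption, not the authors' evaluation code. The function name `binary_segmentation_metrics` is hypothetical, and this section of the source does not state whether scores are pooled over all pixels or averaged per image, so the sketch simply scores one pair of masks.

```python
import numpy as np


def binary_segmentation_metrics(pred, truth):
    """Return OA, IoU, precision, recall and F1 (in percent) for two binary masks.

    Assumption: 1/True marks building pixels, 0/False marks background.
    """
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    if pred.shape != truth.shape:
        raise ValueError("pred and truth must have the same shape")

    # Pixel-wise confusion counts.
    tp = int(np.sum(pred & truth))    # building predicted, building in label
    fp = int(np.sum(pred & ~truth))   # building predicted, background in label
    fn = int(np.sum(~pred & truth))   # background predicted, building in label
    tn = int(np.sum(~pred & ~truth))  # background predicted, background in label

    def percent(numerator, denominator):
        # Return 0.0 instead of dividing by zero (e.g. a tile with no buildings).
        return 100.0 * numerator / denominator if denominator else 0.0

    return {
        "OA": percent(tp + tn, tp + fp + fn + tn),
        "IoU": percent(tp, tp + fp + fn),
        "Precision": percent(tp, tp + fp),
        "Recall": percent(tp, tp + fn),
        # F1 = 2PR / (P + R), written directly in terms of the counts.
        "F1": percent(2 * tp, 2 * tp + fp + fn),
    }


if __name__ == "__main__":
    prediction = [[1, 1, 0], [0, 1, 0]]
    label = [[1, 0, 0], [0, 1, 1]]
    for name, value in binary_segmentation_metrics(prediction, label).items():
        print(f"{name}: {value:.2f}")
```

Because F1 computed this way is the harmonic mean of precision and recall on the same pixels, a reported F1 that differs from that harmonic mean usually indicates that the scores were averaged over images rather than pooled.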

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhou, D.; Wang, G.; He, G.; Long, T.; Yin, R.; Zhang, Z.; Chen, S.; Luo, B. Robust Building Extraction for High Spatial Resolution Remote Sensing Images with Self-Attention Network. Sensors 2020, 20, 7241. https://doi.org/10.3390/s20247241
