A Real-Time Trajectory Prediction Method of Small-Scale Quadrotors Based on GPS Data and Neural Network

Abstract

1. Introduction

2. Related Work

3. Data Preparation

4. Methodology

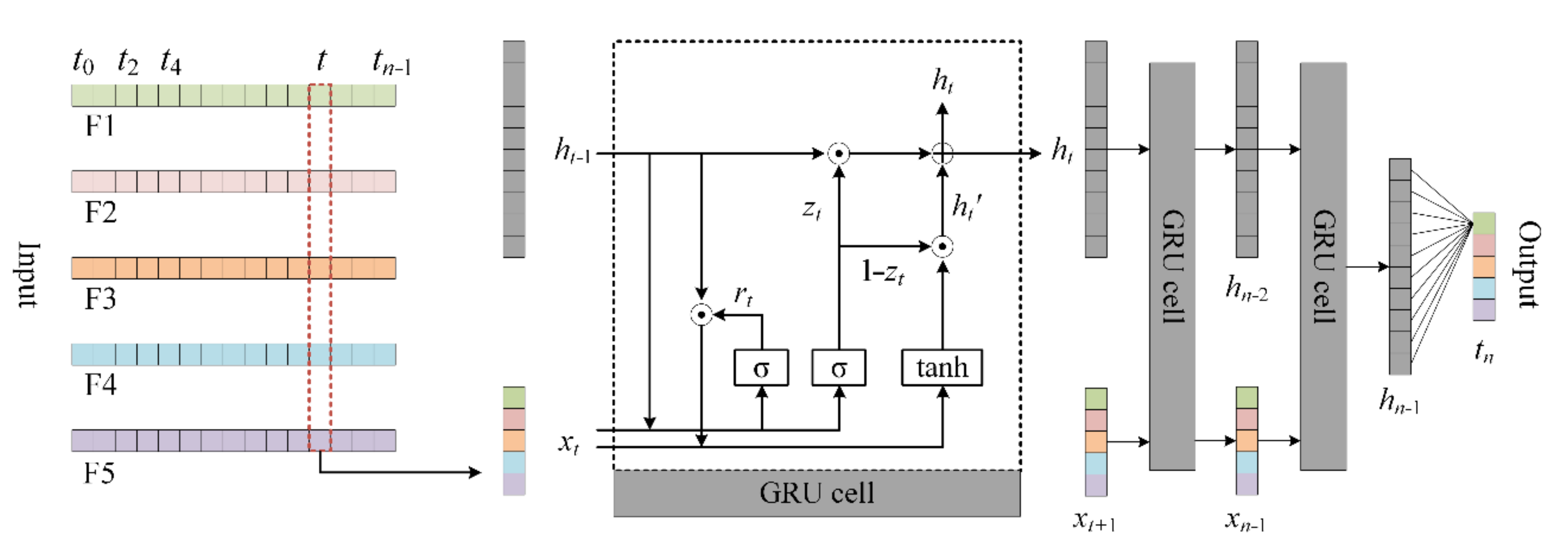

4.1. The D-GRU Network

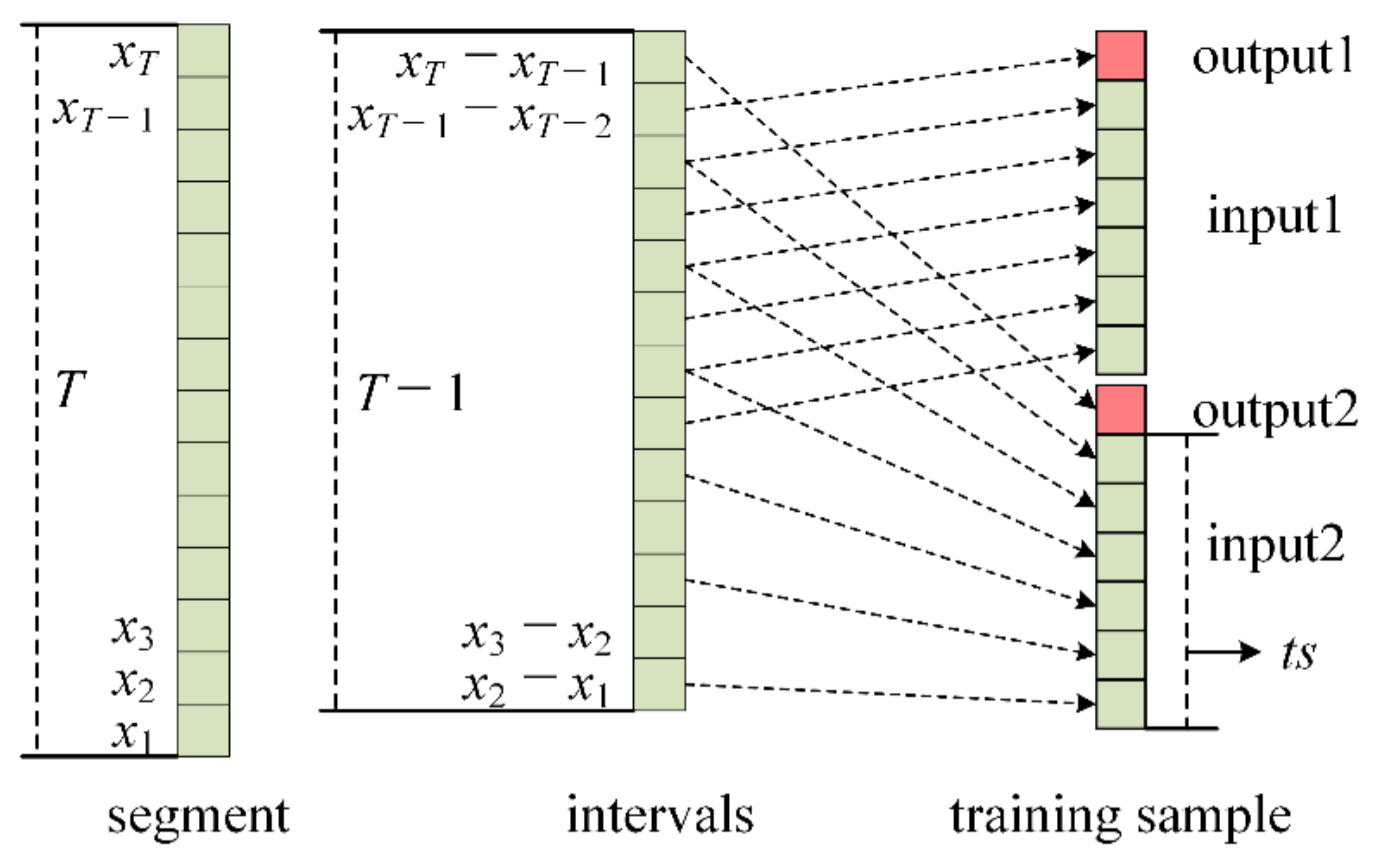

- Step 1: Obtain each segment through data processing, where $x_n$ represents the data at time $n$.

- Step 2: According to the input length $t_s$ required by the neural network, generate two types of time series data, with intervals of one and two time steps, respectively.

- Step 3: Feed the two types of time series data into the neural network.

- Step 4: Build the neural network layers from GRU cells.

- Step 5: Obtain the outputs of the fully connected layers. In Figure 8, $h^{t}_{n-1}$ and $h^{d}_{n-1}$ represent the hidden states of the GRU layers; $t'_n$ and $d'_n$ represent the outputs of the fully connected layers; and $t_n$ is the output of the model.

- Step 6: Obtain the predicted value, where $x'_n$ represents the predicted location.
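The six steps above can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the authors' implementation: `make_windows`, `GRUBranch`, and `DGRU` are hypothetical names; the double-interval window is assumed to take every second point over the preceding $2t_s$ samples; the weights are random and untrained; and the fusion of the branch outputs $t'_n$ and $d'_n$ into one prediction is assumed to be a fixed weighted sum controlled by `alpha`. The recurrent update uses the standard GRU equations (update gate, reset gate, candidate state).

```python
import math
import random
from typing import List, Sequence, Tuple


def make_windows(segment: Sequence[float], ts: int) -> List[Tuple[List[float], List[float], float]]:
    """Hypothetical helper: for each target x[n], return a single-interval window
    x[n-ts], ..., x[n-1] and a double-interval window x[n-2ts], x[n-2ts+2], ..., x[n-2]."""
    samples = []
    for n in range(2 * ts, len(segment)):
        single = list(segment[n - ts:n])
        double = list(segment[n - 2 * ts:n:2])
        samples.append((single, double, segment[n]))
    return samples


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def matvec(m: List[List[float]], v: List[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class GRUBranch:
    """One GRU layer over a scalar sequence plus a fully connected read-out.
    Weights are random and untrained; this only illustrates the data flow."""

    def __init__(self, hidden: int, rng: random.Random) -> None:
        def w() -> float:
            return rng.uniform(-0.5, 0.5)

        self.hidden = hidden
        # Input weights W, recurrent weights U and biases b for the update gate (z),
        # the reset gate (r) and the candidate state (c).
        self.W = {g: [w() for _ in range(hidden)] for g in "zrc"}
        self.U = {g: [[w() for _ in range(hidden)] for _ in range(hidden)] for g in "zrc"}
        self.b = {g: [0.0] * hidden for g in "zrc"}
        self.fc_w = [w() for _ in range(hidden)]
        self.fc_b = 0.0

    def step(self, x: float, h: List[float]) -> List[float]:
        uz, ur = matvec(self.U["z"], h), matvec(self.U["r"], h)
        z = [sigmoid(self.W["z"][i] * x + uz[i] + self.b["z"][i]) for i in range(self.hidden)]
        r = [sigmoid(self.W["r"][i] * x + ur[i] + self.b["r"][i]) for i in range(self.hidden)]
        uc = matvec(self.U["c"], [r[i] * h[i] for i in range(self.hidden)])
        c = [math.tanh(self.W["c"][i] * x + uc[i] + self.b["c"][i]) for i in range(self.hidden)]
        # Interpolate between the previous hidden state and the candidate state.
        return [(1.0 - z[i]) * h[i] + z[i] * c[i] for i in range(self.hidden)]

    def forward(self, seq: Sequence[float]) -> float:
        h = [0.0] * self.hidden
        for x in seq:
            h = self.step(x, h)
        # Fully connected read-out of the last hidden state (plays the role of t'_n or d'_n).
        return sum(a * b for a, b in zip(self.fc_w, h)) + self.fc_b


class DGRU:
    """Single-interval branch (t) and double-interval branch (d). The fusion
    alpha * t'_n + (1 - alpha) * d'_n is an assumption, not the paper's rule."""

    def __init__(self, hidden: int = 8, alpha: float = 0.5, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.t_branch = GRUBranch(hidden, rng)
        self.d_branch = GRUBranch(hidden, rng)
        self.alpha = alpha

    def predict(self, single: Sequence[float], double: Sequence[float]) -> float:
        t_out = self.t_branch.forward(single)
        d_out = self.d_branch.forward(double)
        return self.alpha * t_out + (1.0 - self.alpha) * d_out


if __name__ == "__main__":
    segment = [0.1 * i for i in range(12)]
    single, double, target = make_windows(segment, ts=4)[0]
    print(DGRU(hidden=4, seed=42).predict(single, double), target)
```

In practice both branches would be trained jointly in a deep learning framework rather than hand-written; the plain-Python form only makes the data flow of Steps 2 to 6 explicit.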

4.2. Loss Function

4.3. Evaluation Metrics
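The result tables report MAE, RMSE, and MAPE. The sketch below gives the standard textbook definitions of these three metrics. How latitude and longitude errors are converted to metres, and which reference value MAPE is normalized against, is not stated in this text, so `mape` here is an assumption rather than the authors' exact formula.

```python
import math
from typing import Sequence


def _check(y_true: Sequence[float], y_pred: Sequence[float]) -> None:
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error."""
    _check(y_true, y_pred)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error; penalizes large errors more heavily than MAE."""
    _check(y_true, y_pred)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent; undefined if a true value is zero."""
    _check(y_true, y_pred)
    if any(t == 0 for t in y_true):
        raise ValueError("MAPE is undefined when a true value is zero")
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)
```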

5. Test and Results

- Training Sample 2-1: the segments of all types of quadrotors in Sample 2;

- Training Sample 2-2: the segments of the Mavic Air, Mavic 2, Mavic Pro, and Spark in Sample 2, all of which weigh less than 1000 g;

- Training Sample 2-3: only the segments of the Mavic 2 in Sample 2;

- Training Sample 2-4: a randomly selected half of Sample 2-1.

- Training Sample 3-1: the segments of all types of quadrotors in Sample 3;

- Training Sample 3-2: the segments of the Mavic Air, Mavic 2, Mavic Pro, and Spark in Sample 3, all of which weigh less than 1000 g;

- Training Sample 3-3: only the segments of the Mavic 2 in Sample 3;

- Training Sample 3-4: a randomly selected half of Sample 3-1.
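A minimal sketch of how the four training subsets could be assembled from one sample, assuming the segments are grouped by quadrotor type in a dict. `build_subsets` and the subset keys are hypothetical; the weights are those listed in the drone specification table of this paper; and the random half is assumed to be drawn without replacement using a fixed seed.

```python
import random
from typing import Dict, List

# Weights (g) from the drone specification table in this paper.
WEIGHTS_G: Dict[str, int] = {
    "Mavic Air": 430, "Mavic 2": 905, "Mavic Pro": 743, "Spark": 300,
    "Phantom 3": 1216, "Phantom 4": 1380, "Phantom 4 Ad": 1368,
    "Phantom 4 Pro": 1388, "Inspire 1": 3060, "Inspire 2": 3290,
}


def build_subsets(segments: Dict[str, List[object]], seed: int = 0) -> Dict[str, List[object]]:
    """Hypothetical helper: `segments` maps a quadrotor type to its trajectory segments."""
    all_segs = [s for segs in segments.values() for s in segs]                 # subset X-1
    # Types missing from WEIGHTS_G are treated as heavy and excluded from X-2.
    light = [s for t, segs in segments.items()
             if WEIGHTS_G.get(t, float("inf")) < 1000 for s in segs]          # subset X-2
    mavic2 = list(segments.get("Mavic 2", []))                                 # subset X-3
    half = random.Random(seed).sample(all_segs, len(all_segs) // 2)            # subset X-4
    return {"all": all_segs, "under_1000g": light, "mavic2": mavic2, "random_half": half}
```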

- The proposed D-GRU model produces better prediction results than the GRU model for nearly all subsets and all three evaluation metrics. For the subsets giving the best D-GRU results, the MAEs of latitude, longitude, and altitude are reduced by 11.85, 6.35, and 5.66% in Sample 2, and by 17.55, 9.18, and 4.26% in Sample 3, respectively. Compared with the GRU model, which uses only the single-interval sequence, the D-GRU model extracts more information from a trajectory segment of fixed length T: the double-interval sequence helps suppress irregularities in the pattern learned from the single-interval data.

- The prediction accuracies from Samples “2-1” and “3-1” were better than those from Samples “2-4” and “3-4”, indicating that a larger training dataset may help to improve model performance. The prediction results from Samples “2-2” and “2-3” and from Samples “3-2” and “3-3” were clearly better than those from Samples “2-1” and “3-1”. Compared with Sample “2-1”, the MAEs of the optimal predictions from Samples “2-2” and “2-3” for latitude, longitude, and altitude were reduced by 17.29, 15.25, and 23.86%, respectively; compared with Sample “3-1”, those from Samples “3-2” and “3-3” were reduced by 18.64, 16.44, and 21.97%, respectively. Training on historical trajectories of quadrotors whose weight or volume is close to that of the target quadrotor therefore improves the prediction model, suggesting that the weights and biases learned from such samples are better suited to predicting the target's trajectory.

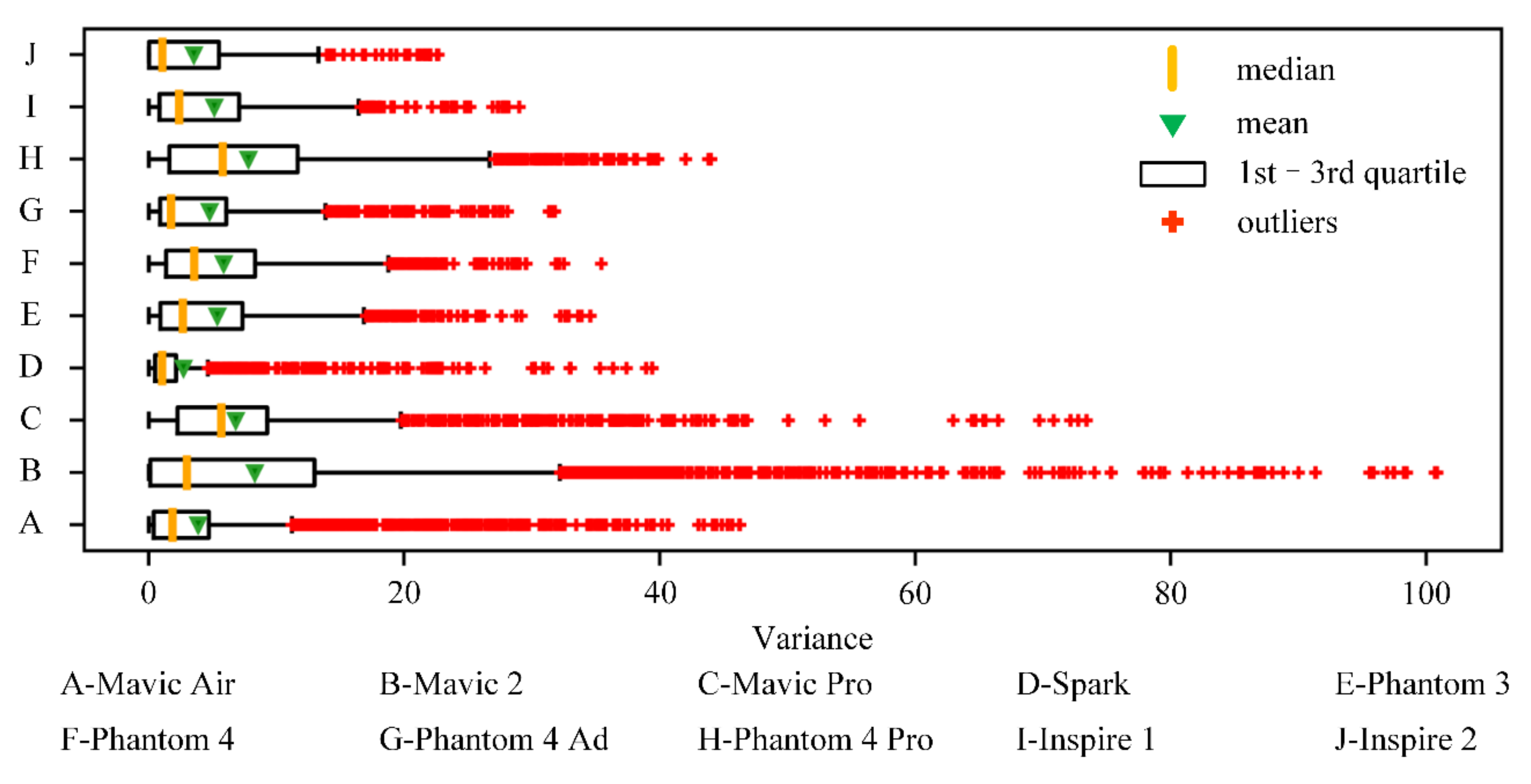

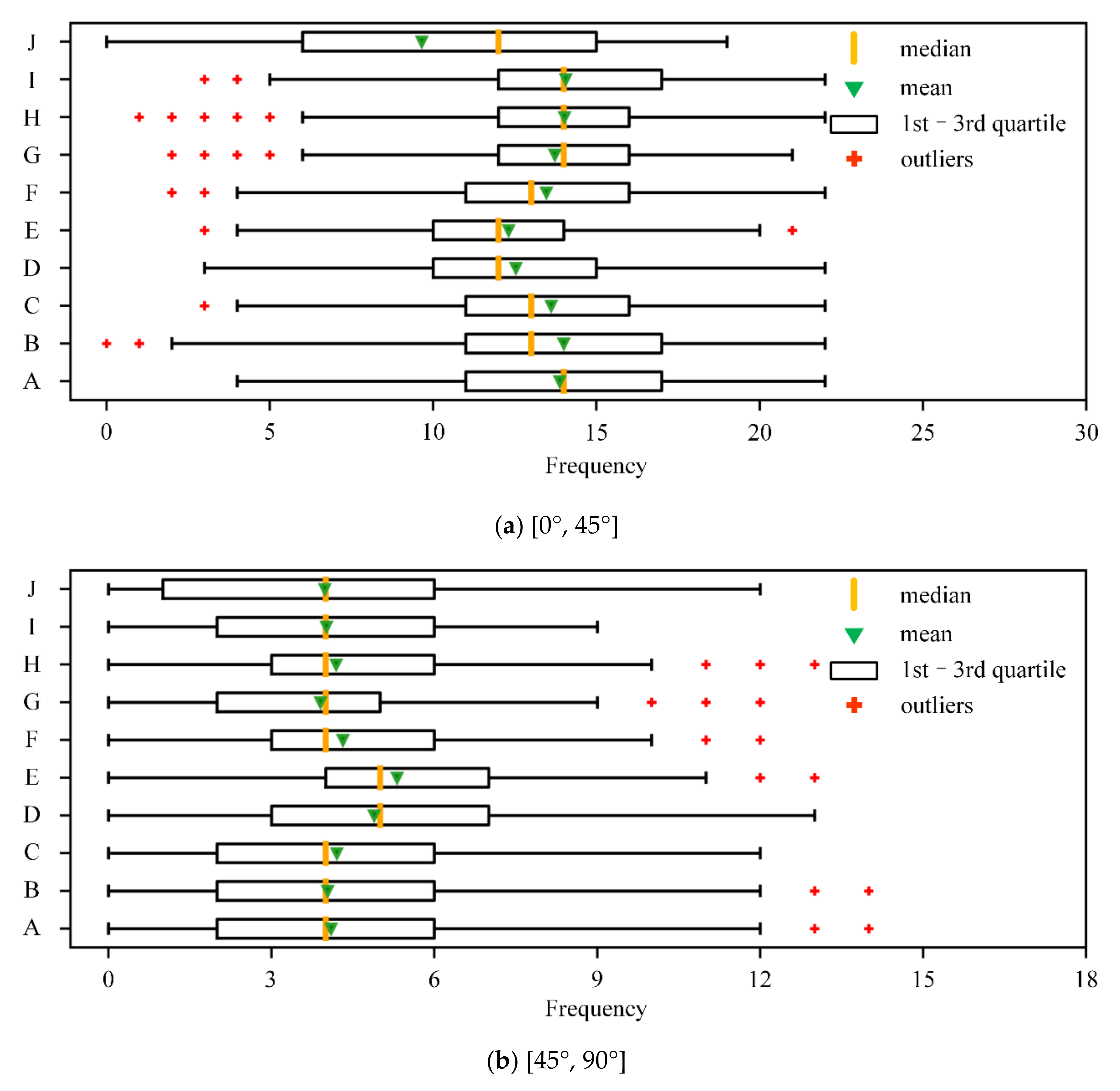

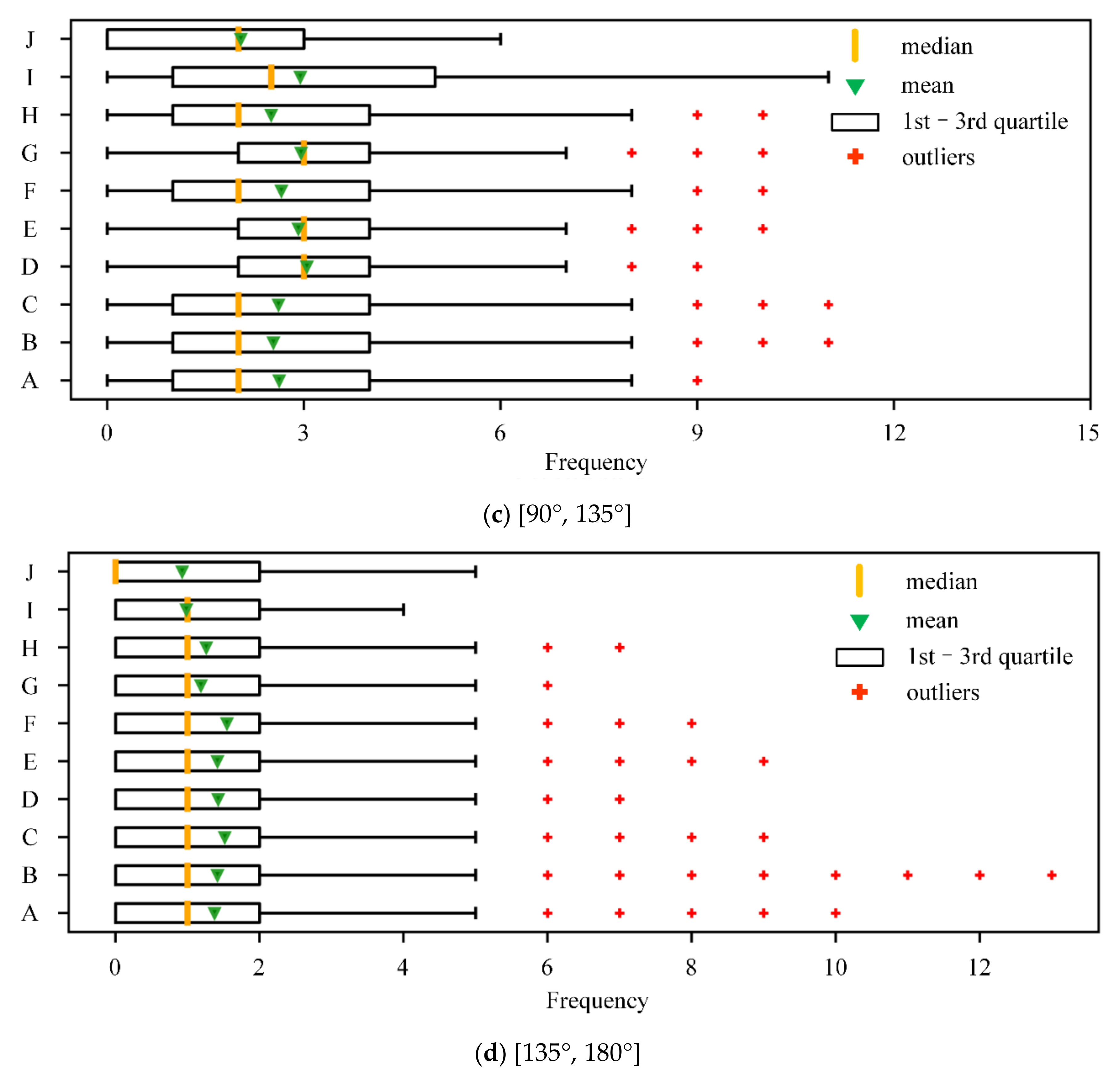

- Compared with Sample 2, Sample 3 yields much better prediction results: the MAEs of latitude, longitude, and altitude of the optimal predictions from Sample 3 were reduced by 29.56, 29.21, and 10.00%, respectively, relative to Sample 2. This is because the constraints on the Var and Freq of the trajectory segments make the training segments in Sample 3 more stable in latitude and longitude than those in Sample 2. Removing unstable trajectory samples, such as noisy segments, through this kind of training-sample filtering can therefore further improve the performance of the trajectory prediction model.
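The percentages in the bullets above are relative MAE reductions, (baseline - improved) / baseline × 100. Assuming the Sample 3 figures compare the best D-GRU subset (Sample 3-3) with the GRU result on the same subset, and with the D-GRU result on Sample 3-1, the MAE values in the Sample 3 results table reproduce the quoted numbers. `percent_reduction` is a hypothetical helper name.

```python
def percent_reduction(baseline: float, improved: float) -> float:
    """Relative reduction of an error metric, in percent."""
    return 100.0 * (baseline - improved) / baseline


# (baseline MAE, improved MAE) pairs copied from the Sample 3 results table.
comparisons = {
    "D-GRU vs GRU, Sample 3-3, latitude": (3.76, 3.10),         # 17.55%
    "D-GRU vs GRU, Sample 3-3, longitude": (4.03, 3.66),        # 9.18%
    "D-GRU vs GRU, Sample 3-3, altitude": (1.41, 1.35),         # 4.26%
    "D-GRU, Sample 3-3 vs Sample 3-1, latitude": (3.81, 3.10),  # 18.64%
    "D-GRU, Sample 3-3 vs Sample 3-1, longitude": (4.38, 3.66), # 16.44%
    "D-GRU, Sample 3-3 vs Sample 3-1, altitude": (1.73, 1.35),  # 21.97%
}

for name, (base, new) in comparisons.items():
    print(f"{name}: {percent_reduction(base, new):.2f}%")
```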

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Drone Type | Weight (g) | Max Speed (m/s) | Max WS (m/s) | Max CR (m/s) | Max DR (m/s) |
|---|---|---|---|---|---|
| Mavic Air | 430 | 19.0 | 10.7 | 3.0 | 2.0 |
| Mavic 2 | 905 | 20.0 | 10.7 | 5.0 | 3.0 |
| Mavic Pro | 743 | 18.0 | 10.7 | 5.0 | 3.0 |
| Spark | 300 | 13.9 | 8.0 | 3.0 | 3.0 |
| Phantom 3 | 1216 | 16.0 | 10.7 | 5.0 | 3.0 |
| Phantom 4 | 1380 | 20.0 | 10.7 | 6.0 | 4.0 |
| Phantom 4 Ad | 1368 | 20.0 | 10.7 | 6.0 | 4.0 |
| Phantom 4 Pro | 1388 | 20.0 | 10.7 | 6.0 | 4.0 |
| Inspire 1 | 3060 | 20.0 | 10.7 | 5.0 | 4.0 |
| Inspire 2 | 3290 | 26.0 | 10.7 | 6.0 | 4.0 |

| Type of Quadrotor | Trajectories | Sample 1 | Sample 2 | Sample 3 | Percent |
|---|---|---|---|---|---|
| Mavic Air | 140 | 5517 | 5513 | 2657 | 51.80% |
| Mavic 2 | 300 | 14,437 | 14,384 | 4311 | 70.03% |
| Mavic Pro | 185 | 7725 | 7581 | 3471 | 54.21% |
| Spark | 51 | 2075 | 2069 | 878 | 57.56% |
| Phantom 3 | 64 | 1966 | 1792 | 804 | 55.13% |
| Phantom 4 | 65 | 2683 | 2480 | 999 | 59.72% |
| Phantom 4 Ad | 18 | 1241 | 1172 | 519 | 55.72% |
| Phantom 4 Pro | 63 | 4146 | 3681 | 1632 | 55.66% |
| Inspire 1 | 26 | 838 | 824 | 824 | 57.89% |
| Inspire 2 | 12 | 484 | 403 | 274 | 32.01% |
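The Percent column in the table above is not defined in the surviving text. For nine of the ten rows it matches the share of Sample 2 segments removed when building Sample 3, for example (5513 - 2657) / 5513 ≈ 51.80% for the Mavic Air; this reading is an inference from the numbers, not a statement by the authors. The check below (with hypothetical helper names) flags the one row, Inspire 1, where the listed Sample 3 count does not fit that reading.

```python
from typing import List

# (Sample 2 count, Sample 3 count, listed Percent) per quadrotor type, copied from the table.
ROWS = {
    "Mavic Air": (5513, 2657, 51.80),
    "Mavic 2": (14384, 4311, 70.03),
    "Mavic Pro": (7581, 3471, 54.21),
    "Spark": (2069, 878, 57.56),
    "Phantom 3": (1792, 804, 55.13),
    "Phantom 4": (2480, 999, 59.72),
    "Phantom 4 Ad": (1172, 519, 55.72),
    "Phantom 4 Pro": (3681, 1632, 55.66),
    "Inspire 1": (824, 824, 57.89),
    "Inspire 2": (403, 274, 32.01),
}


def removed_share(sample2: int, sample3: int) -> float:
    """Percentage of Sample 2 segments that are not kept in Sample 3."""
    return 100.0 * (sample2 - sample3) / sample2


def mismatches(tol: float = 0.01) -> List[str]:
    """Rows whose listed Percent differs from removed_share by more than tol."""
    return [name for name, (s2, s3, pct) in ROWS.items()
            if abs(removed_share(s2, s3) - pct) > tol]


print(mismatches())  # ['Inspire 1']
```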

| Target | Subset | MAE D-GRU (m) | MAE GRU (m) | RMSE D-GRU (m) | RMSE GRU (m) | MAPE D-GRU (%) | MAPE GRU (%) |
|---|---|---|---|---|---|---|---|
| Latitude | Sample 2-1 | 5.20 | 5.32 | 8.76 | 10.25 | 11.13 | 15.33 |
| | Sample 2-2 | 4.40 | 4.98 | 7.49 | 8.90 | 9.64 | 10.21 |
| | Sample 2-3 | 4.55 | 4.74 | 7.37 | 7.81 | 8.45 | 11.28 |
| | Sample 2-4 | 5.37 | 5.45 | 9.76 | 10.98 | 11.16 | 15.42 |
| Longitude | Sample 2-1 | 6.10 | 6.88 | 10.86 | 12.20 | 10.75 | 17.59 |
| | Sample 2-2 | 5.75 | 6.15 | 10.72 | 10.98 | 8.95 | 10.71 |
| | Sample 2-3 | 5.17 | 5.52 | 9.02 | 9.26 | 9.48 | 10.86 |
| | Sample 2-4 | 6.15 | 7.24 | 11.78 | 12.79 | 11.34 | 18.26 |
| Altitude | Sample 2-1 | 1.97 | 2.46 | 3.27 | 3.73 | 18.09 | 25.74 |
| | Sample 2-2 | 1.59 | 1.59 | 2.80 | 3.07 | 13.54 | 14.03 |
| | Sample 2-3 | 1.50 | 1.59 | 2.77 | 2.77 | 9.52 | 10.17 |
| | Sample 2-4 | 2.03 | 2.49 | 3.33 | 4.01 | 18.55 | 26.74 |

| Target | Subset | MAE D-GRU (m) | MAE GRU (m) | RMSE D-GRU (m) | RMSE GRU (m) | MAPE D-GRU (%) | MAPE GRU (%) |
|---|---|---|---|---|---|---|---|
| Latitude | Sample 3-1 | 3.81 | 4.08 | 6.76 | 6.80 | 8.76 | 10.21 |
| | Sample 3-2 | 3.59 | 4.04 | 6.88 | 7.00 | 7.15 | 9.83 |
| | Sample 3-3 | 3.10 | 3.76 | 5.88 | 6.70 | 5.81 | 9.48 |
| | Sample 3-4 | 4.03 | 4.40 | 7.01 | 7.37 | 9.68 | 12.59 |
| Longitude | Sample 3-1 | 4.38 | 4.34 | 8.53 | 8.25 | 7.57 | 7.99 |
| | Sample 3-2 | 3.87 | 4.52 | 7.90 | 8.80 | 5.89 | 7.30 |
| | Sample 3-3 | 3.66 | 4.03 | 7.35 | 8.27 | 5.70 | 6.31 |
| | Sample 3-4 | 4.57 | 4.73 | 9.01 | 9.24 | 8.19 | 8.31 |
| Altitude | Sample 3-1 | 1.73 | 1.86 | 3.13 | 3.41 | 12.16 | 12.89 |
| | Sample 3-2 | 1.46 | 1.70 | 2.86 | 3.39 | 8.22 | 8.95 |
| | Sample 3-3 | 1.35 | 1.41 | 2.67 | 2.87 | 8.11 | 8.26 |
| | Sample 3-4 | 1.91 | 1.97 | 3.44 | 3.38 | 14.24 | 13.07 |

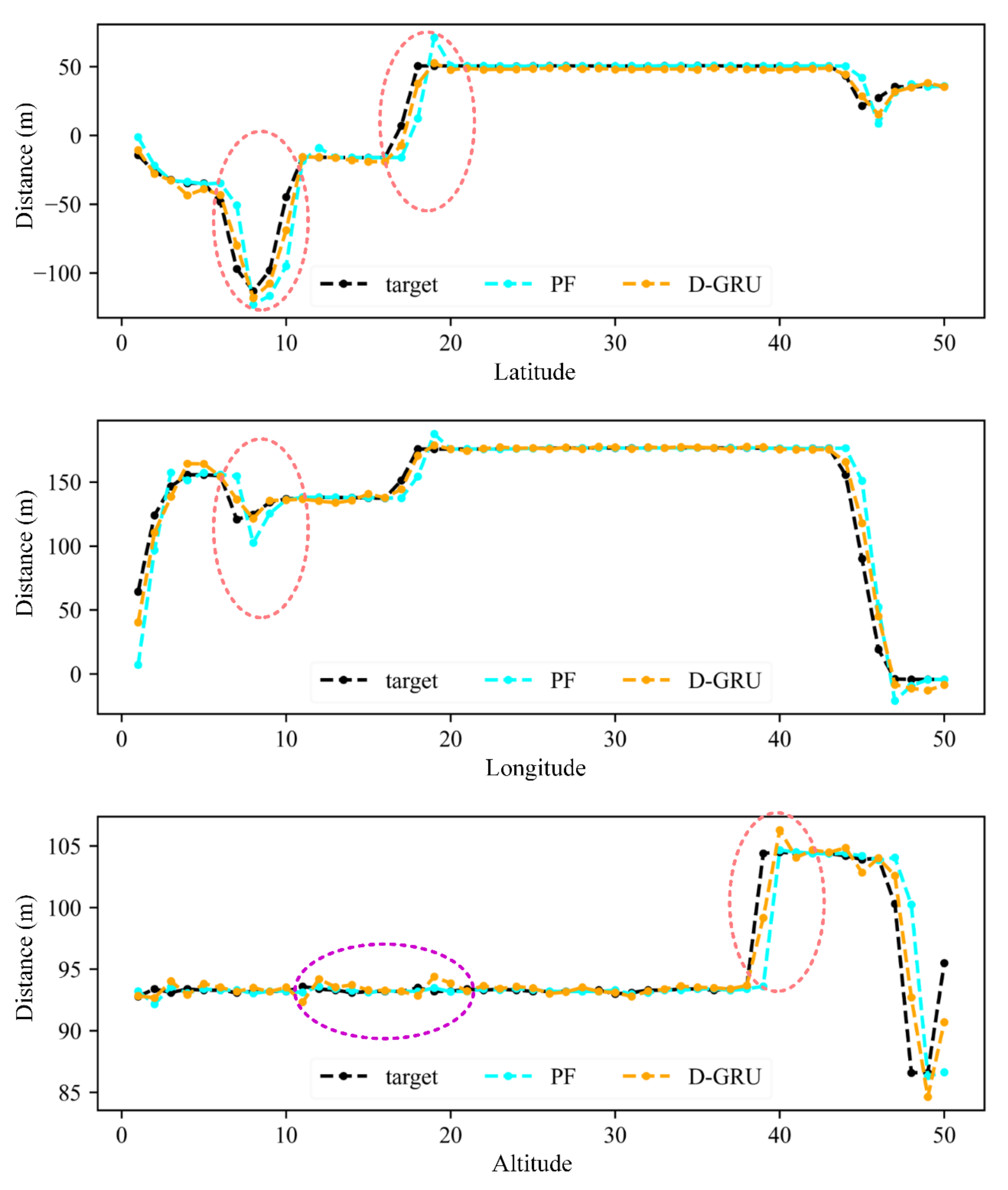

| Target | MAE D-GRU (m) | MAE PF (m) | RMSE D-GRU (m) | RMSE PF (m) | MAPE D-GRU (%) | MAPE PF (%) |
|---|---|---|---|---|---|---|
| Altitude | 1.35 | 1.78 | 2.67 | 3.99 | 8.11 | 10.32 |
| Latitude | 3.10 | 5.26 | 5.88 | 9.62 | 5.81 | 10.07 |
| Longitude | 3.66 | 5.35 | 7.35 | 10.14 | 5.70 | 8.61 |


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yang, Z.; Tang, R.; Bao, J.; Lu, J.; Zhang, Z. A Real-Time Trajectory Prediction Method of Small-Scale Quadrotors Based on GPS Data and Neural Network. Sensors 2020, 20, 7061. https://doi.org/10.3390/s20247061
