Deep Feature Extraction and Classification of Android Malware Images

Abstract

1. Introduction

- We propose a novel system called SARVOTAM (Summing of neurAl aRchitecture and VisualizatiOn Technology for Android Malware classification).

- It works on raw bytes and eliminates the need for decryption, disassembly, reverse engineering, and code execution for malware identification. The system converts non-intuitive malware features into fingerprint images from which informative features can be extracted.

- By seeing through the malware binary, the proposed system can discover and extract the insights necessary for malware analysis, paving the way for the development of effective malware classification systems.

- A CNN was fine-tuned to automatically extract rich features from the visualized malware, thus eliminating the cost of feature engineering and domain expertise.

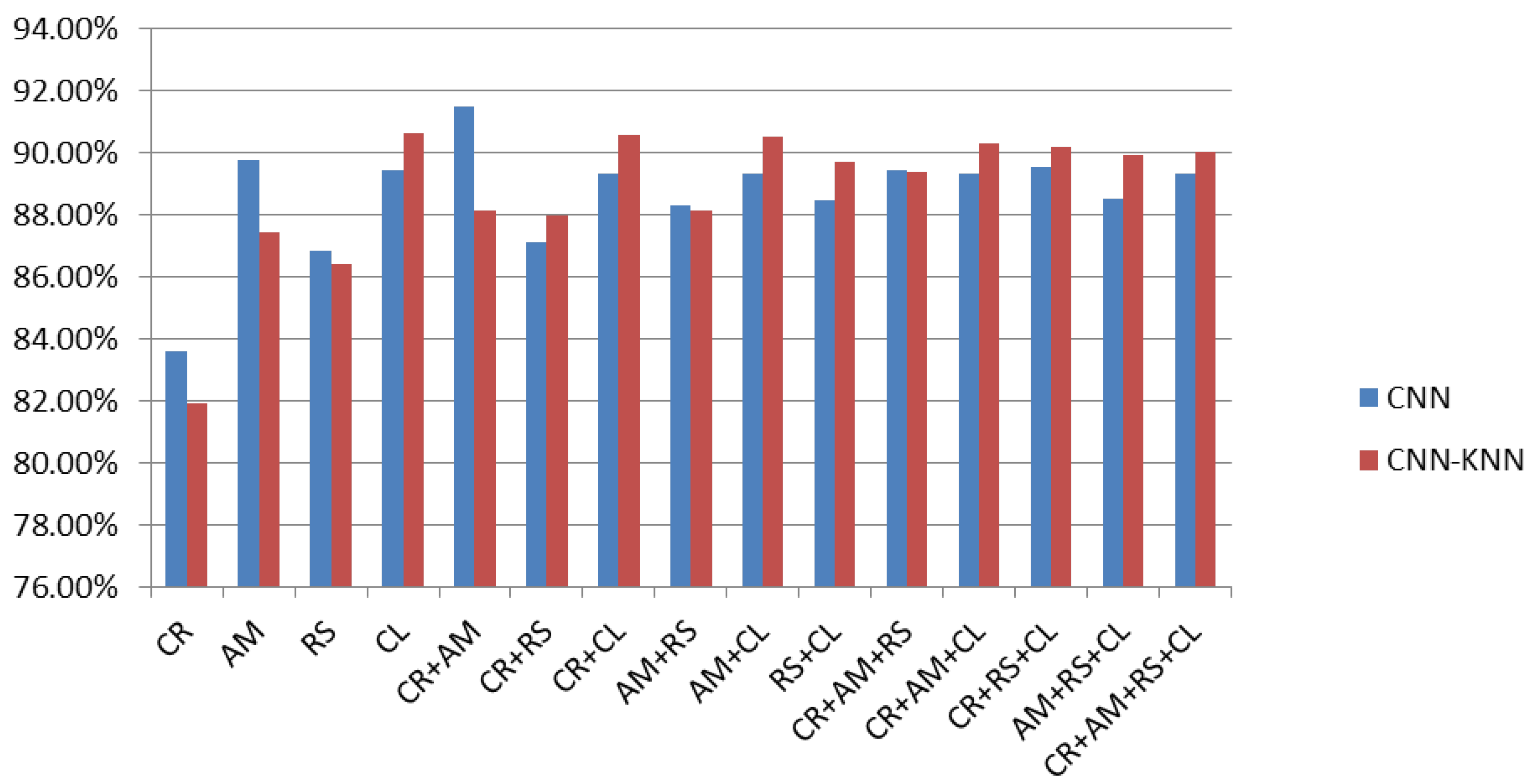

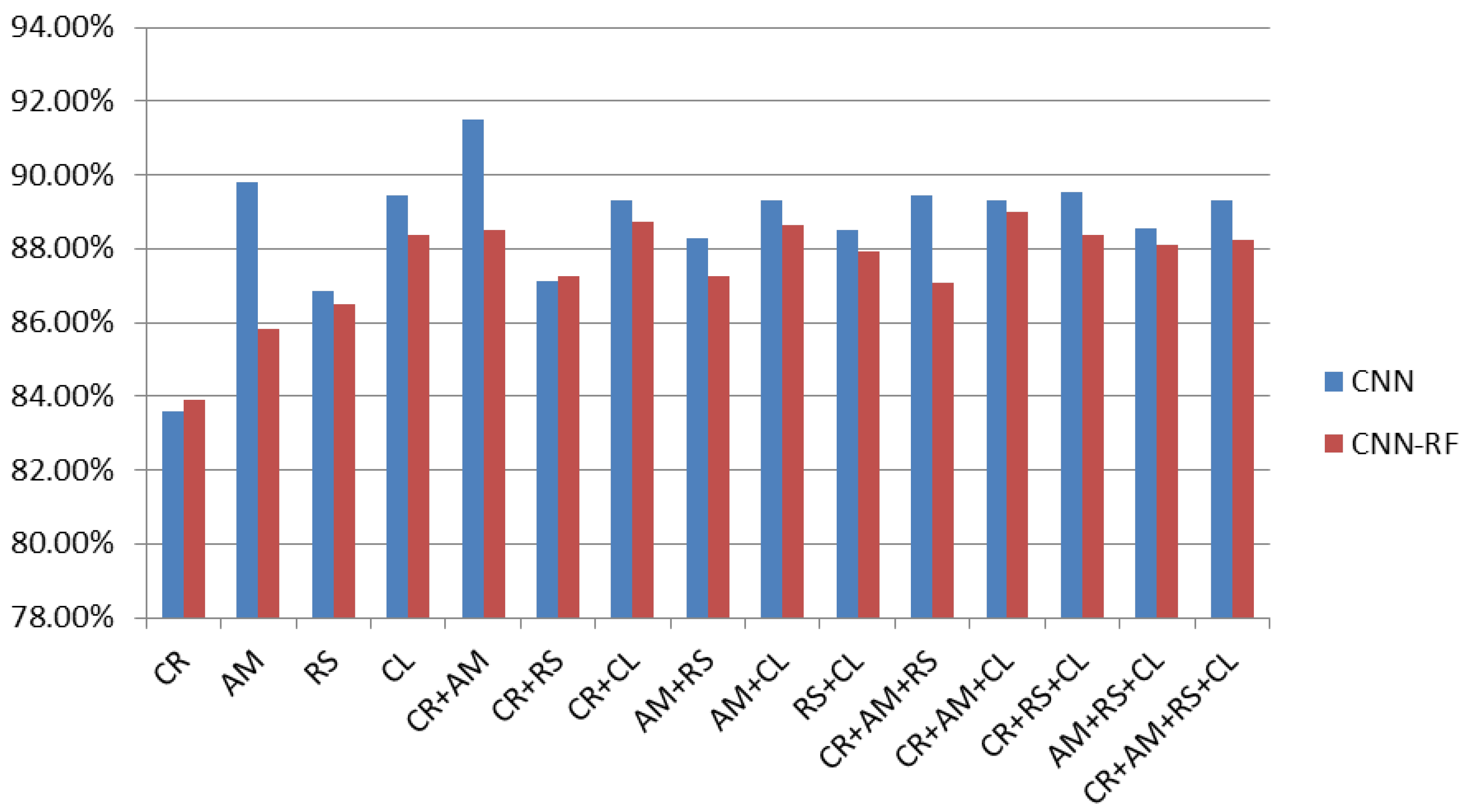

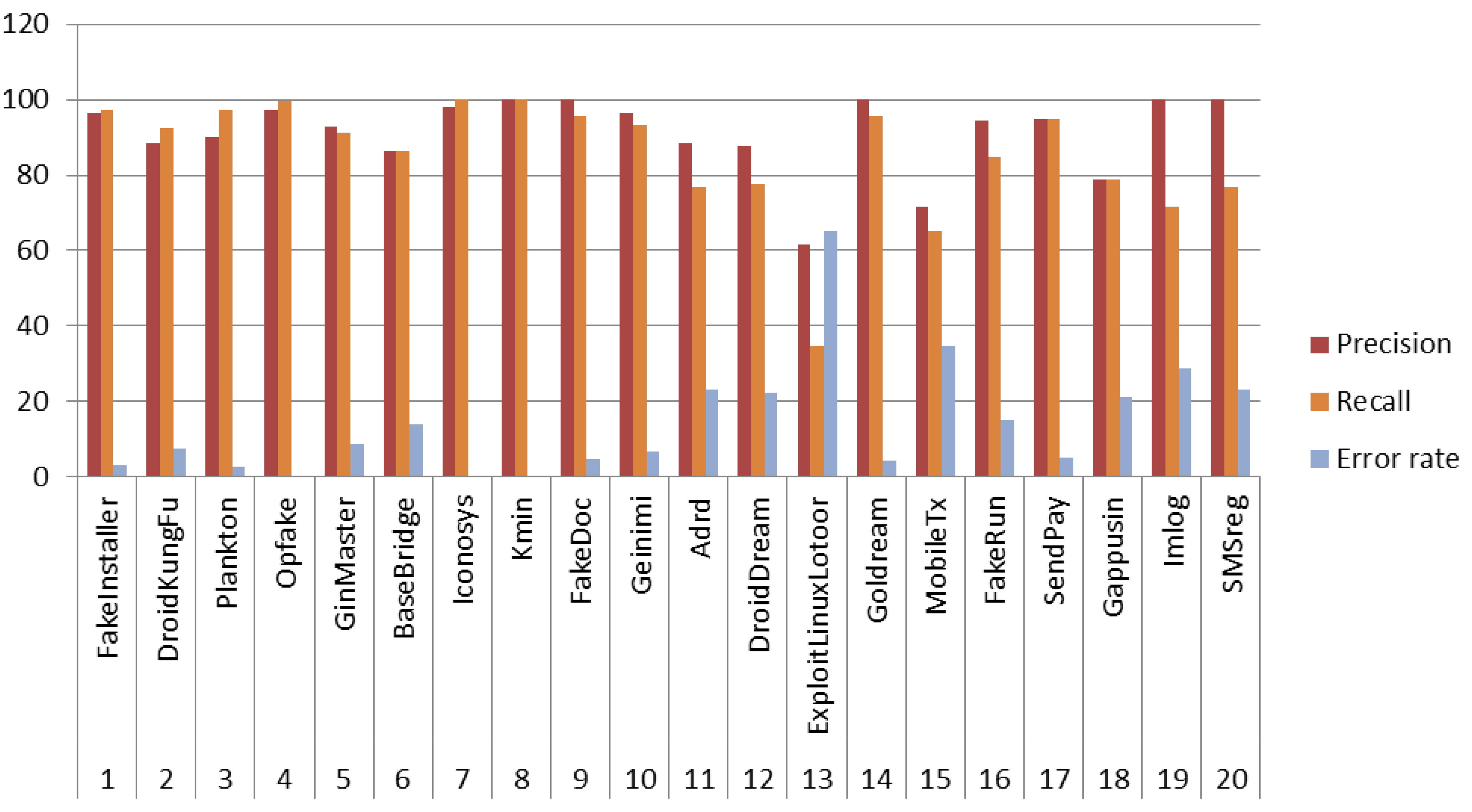

- SARVOTAM was augmented with traditional classifiers, namely K-Nearest Neighbour (KNN), Support Vector Machine (SVM), and Random Forest (RF), to recommend prominent Android file structure features for malware identification and classification. The CNN-SVM model outperformed the original CNN as well as CNN-KNN and CNN-RF.

- To the best of our knowledge, the generation and classification of malware images using fifteen unique combinations of Android malware file structure are explored here for the first time.

- Malware images formed from the Certificate and Android Manifest files (CR+AM) were observed to offer a lightweight and more precise option for malware identification. It is therefore not necessary to inspect every file in the APK for malware identification and classification.

- The proposed system was evaluated on the DREBIN dataset [47], which consists of 5560 applications from 179 different malware families.
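The fifteen combinations mentioned above are every non-empty subset of four APK components. As a minimal sketch, assuming the abbreviations CR (certificate), AM (Android manifest), RS (resources), and CL (classes.dex), and a hypothetical helper name `file_combinations`, they can be enumerated as follows:

```python
from itertools import combinations

# Assumed abbreviations: CR = certificate, AM = Android manifest,
# RS = resources, CL = classes.dex.
FILE_TYPES = ["CR", "AM", "RS", "CL"]


def file_combinations(file_types):
    """Return every non-empty subset of file_types, joined with '+'."""
    combos = []
    for size in range(1, len(file_types) + 1):
        for subset in combinations(file_types, size):
            combos.append("+".join(subset))
    return combos


combi_list = file_combinations(FILE_TYPES)
print(len(combi_list))  # 15
print(combi_list)
```

Four components give 2^4 - 1 = 15 non-empty subsets, which is where the count of fifteen comes from.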

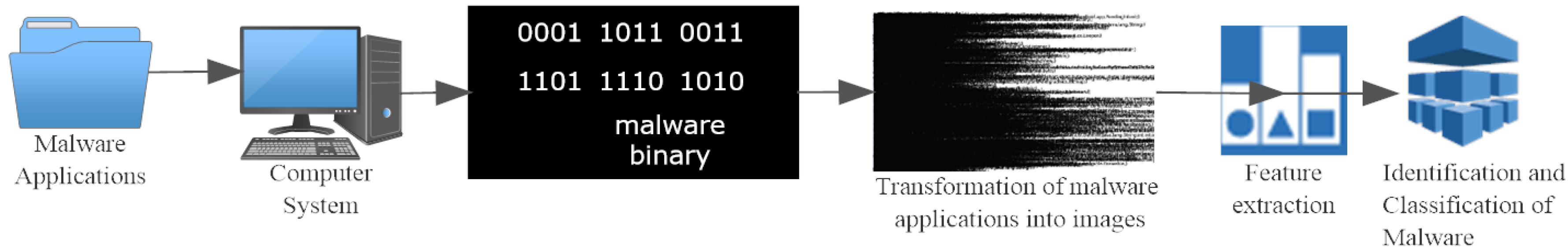

- Malware applications: Malicious applications from the DREBIN dataset were used to evaluate the efficiency of the proposed methodology. This dataset contains 179 Android malware families and is widely used in the research community.

- Computer system: A machine with an Intel Core i5 processor, 8 GB of RAM, and a 2.7 GHz clock speed was used for the experiments.

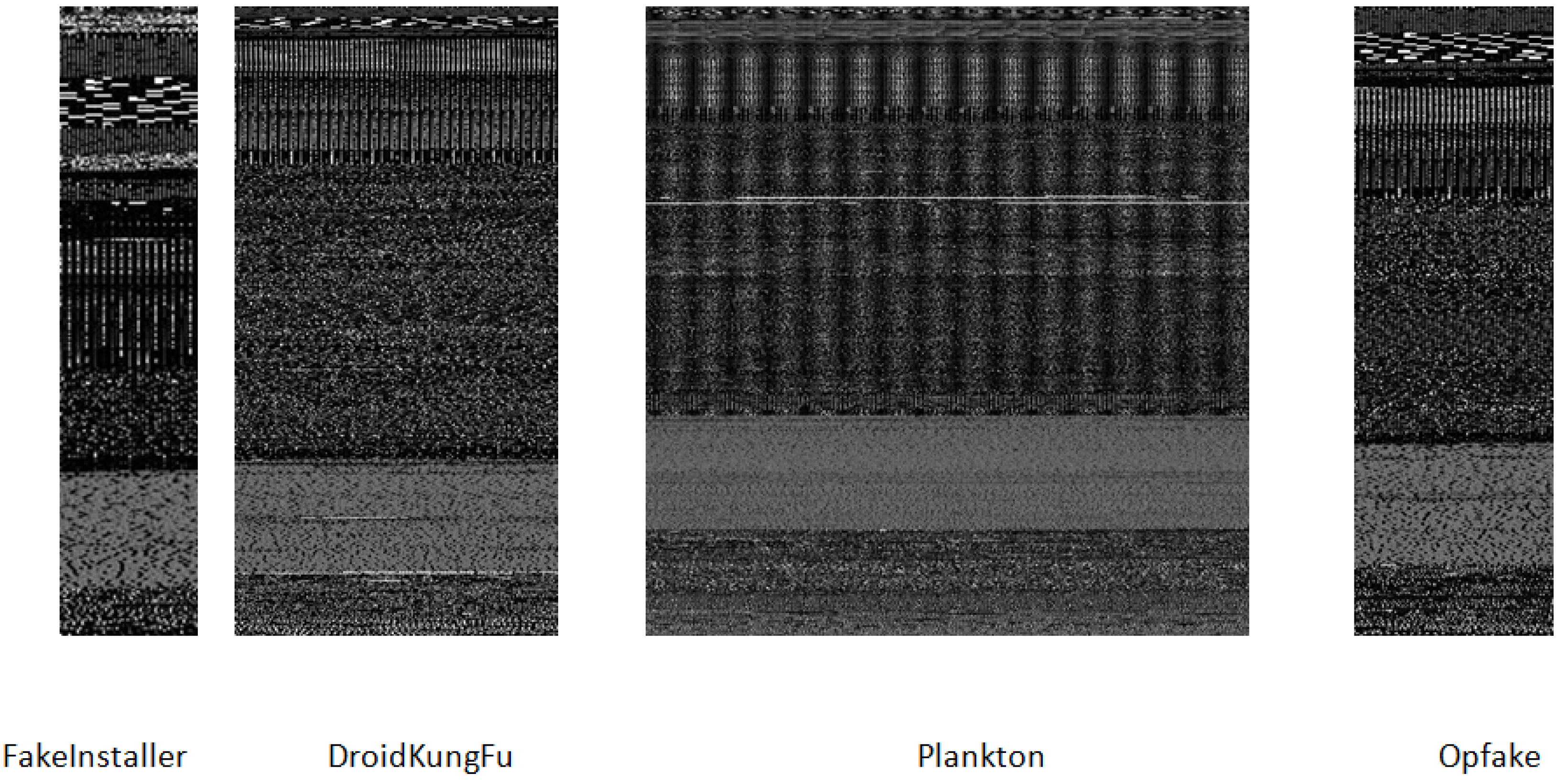

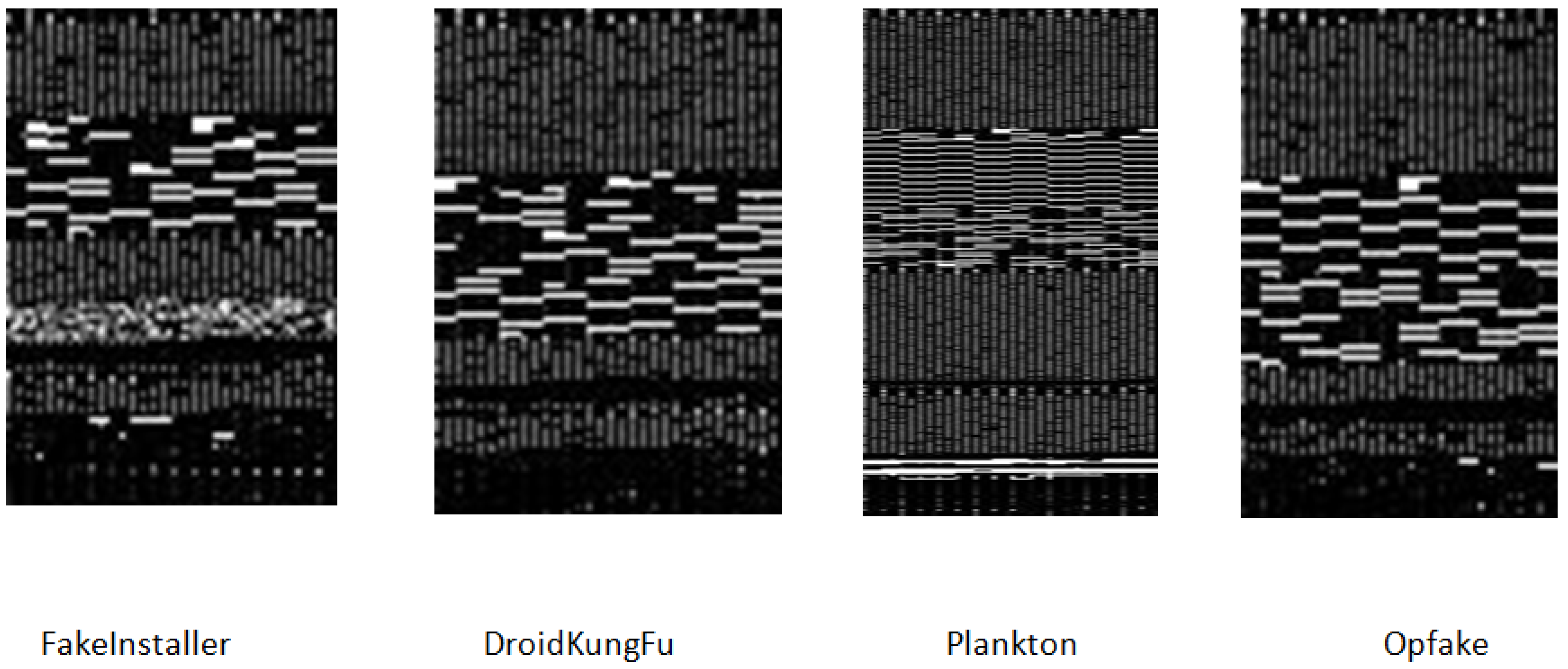

- Transformation of malware applications into images: The proposed SARVOTAM system sees through the malware binary to discover and extract the insights necessary for malware analysis by converting the binary into grayscale images. Fifteen unique malware images were created from different files of the APK for every malware family sample. Section 3.1 discusses in detail the methodology adopted to transform malware applications into images.

- Feature extraction: Accurate feature engineering is an important task for any classification model. In this study, a fine-tuned CNN was used to automatically extract rich features from the visualized malware images, thus eliminating the cost of feature engineering and domain expertise. Section 3.2.1 discusses the CNN architecture used in the experiments.

- Identification and classification of malware: Machine learning algorithms, namely SVM, KNN, and RF, were used for classification. More detail is presented in Section 3.2.2 and Section 4.

2. Related Work

3. Materials and Methods

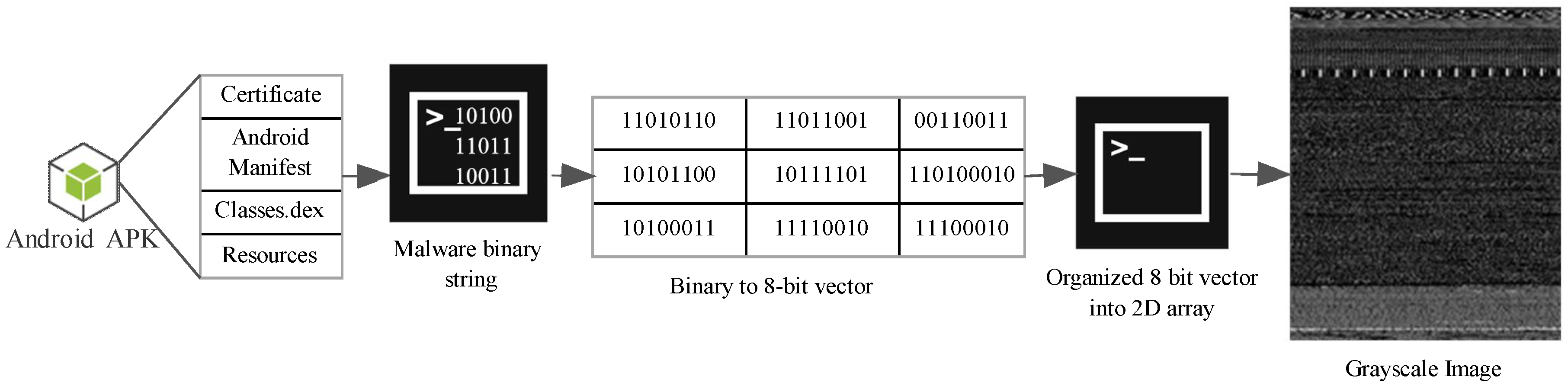

3.1. Transforming Malware APK into Images

3.2. Experiment Design

3.2.1. CNN Architectures

- (a) Convolutional Layer: This is the first layer of a CNN. At this layer, the image or data is convolved with filters (kernels), small units that are applied through a sliding window. The depth of a filter is the same as that of the input. For instance, a colour image has RGB channels, so its depth is three and a filter of depth 3 is applied to it. The convolution operation takes the element-wise product of the filter and the image patch under it and sums those values at every sliding position. The output of the convolution of a 3D filter with a colour image is therefore a 2D matrix. Convolution is not limited to images; it can also be applied to one-dimensional time-series data. In this experiment, the first, second, and third convolutional layers have 32, 128, and 256 filters of size 7 × 7, 5 × 5, and 3 × 3, respectively.

- (b) Activation Function Layer: An activation function activates the neurons and sends the signals further within the model. Weights and activation functions together determine how signals are transferred through the neurons. The Rectified Linear Unit (ReLU) activation function mitigates the vanishing gradient problem. It is also fast to compute, with little overhead, because it involves no exponentials or divisions. ReLU is used here to remove all negative values from the output matrix of the convolution layer. It activates a node only if the input is above a threshold of zero: when the input is below zero the output is zero, and when the input rises above zero the output is linear in the input. The output of the ReLU activation function is fed to the pooling layer.

- (c) Pooling Layer: Pooling downsamples the features to reduce the number of parameters during training. Typically, the pooling layer introduces two hyperparameters. The first is the spatial extent, defined as the value of N for which an N × N region of the feature representation is mapped to a single value. The second is the stride, which is the number of positions the sliding window moves along the width and height of the malware image at each step. In this experiment, the pooling layers that follow the first, second, and third convolutional layers use max filters of size 3 × 3, 3 × 3, and 2 × 2, respectively. Each filter is moved across the entire matrix produced by the ReLU layer, and the maximum pixel value is taken from each window to shrink the malware image. These layers were stacked by adding further convolution, ReLU, and pooling layers.

- (d) Batch Normalization Layer: Batch normalization is used for stable learning in deep neural networks. Stable convergence is a significant problem in deep networks. It is caused by the vanishing and exploding gradient problems [64,65] and by the differing scales of activations across layers. The varying scale of different parameters causes the gradient descent to bounce. In forward propagation, the output depends multiplicatively on each weight and activation function evaluation. The key point is that in backward propagation, the partial derivative is multiplied by the weights and the activation function derivatives. Unless the product of the weight and the activation function derivative is exactly one, the gradients will tend either to increase or to decrease from layer to layer. This is partially caused by the fact that the activations in different layers have different variances. In addition, the distribution of the input to each layer changes over training. Batch normalization addresses this issue by adding a normalization layer between the layers of the neural network, which ensures that the variances of the outputs of each layer are similar. It normalizes not only the input features but also the features in each layer. Carrying the principle of input normalization through to all layers gives more stable behaviour and faster convergence of the underlying algorithm.

- (e) Dropout Layer: Multilayer neural networks often suffer from overfitting, also known as the high-variance problem. The dropout layer is used to reduce overfitting. During training, dropout randomly selects neurons and deactivates them, so that only a subset of the features from the preceding layer is passed on. Deactivated neurons do not participate in that step of the learning process. A dropout ratio of 0.5 was selected for every dropout layer.

- (f) Flatten Layer: Flatten is an operation that converts a multi-dimensional feature map into a one-dimensional vector. The flatten layer takes the output of the previous layer and flattens it into a one-dimensional tensor. In other words, it takes the shrunk malware feature maps and puts them into a single list or vector.

- (g) Fully Connected/Dense Layer: The output of the convolutional layers represents high-level features in the data. Essentially, the convolutional layers provide a meaningful, low-dimensional, and somewhat invariant feature space, whereas the fully connected layers learn a possibly nonlinear function in that space. The output of a pooling layer has to be converted into a suitable input for the fully connected layers: the pooling output is a 3D feature map (a 3D volume of features), whereas the input to a simple fully connected feed-forward network is a one-dimensional feature vector. The feature maps are usually very deep at this point because of the increasing number of kernels introduced at every convolutional layer; convolution, activation, and pooling layers can occur many times before the fully connected layers, which is the reason for the increased depth. The 3D feature map is converted by flattening it into a 1D vector. For classification problems, hidden dense layers are then introduced and a softmax activation is applied to the output layer. In this paper, hidden dense layers D1, D2, and D3 with 50, 100, and 200 neurons, respectively, have been added to the CNN architecture. Finally, one more dense layer, D4, with 20 neurons is used as the output layer. It classifies the malware images with respect to their families, using softmax as its activation function.
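The filter and pooling sizes listed above determine how quickly the feature maps shrink. The following is a rough sketch of that arithmetic, assuming the 108 × 108 input and unpadded ('valid') convolutions given in Algorithm 3, and assuming that each pooling window moves by its own size (the Keras default when no stride is specified). The helper names `conv_out` and `trace_shapes` are illustrative only.

```python
def conv_out(size, kernel, stride=1):
    """Output width/height of an unpadded ('valid') convolution or pooling."""
    return (size - kernel) // stride + 1


def trace_shapes(input_size=108):
    """Trace feature-map sizes through the three conv/pool blocks."""
    # (number of filters, convolution kernel size, pooling size) per block
    blocks = [(32, 7, 3), (128, 5, 3), (256, 3, 2)]
    size = input_size
    shapes = []
    for filters, kernel, pool in blocks:
        size = conv_out(size, kernel)              # stride-1 convolution
        shapes.append(("conv", size, size, filters))
        size = conv_out(size, pool, stride=pool)   # non-overlapping max pooling
        shapes.append(("pool", size, size, filters))
    flat = size * size * blocks[-1][0]             # length after flattening
    return shapes, flat


shapes, flat = trace_shapes()
for shape in shapes:
    print(shape)
print(flat)  # 4096
```

Under these assumptions the spatial size goes 108 → 102 → 34 → 30 → 10 → 8 → 4, so the flatten layer hands a 4 × 4 × 256 = 4096-element vector to the first dense layer.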

3.2.2. Machine Learning Algorithms

- (a) KNN (K-Nearest Neighbors): KNN is a supervised classification algorithm. Given data points that are separated into several classes, it predicts the class label of a new sample. It is a well-known method for classifying data objects based on the closest training samples in a feature space. K refers to the number of nearest neighbors that the classifier uses to make its prediction: an unknown data point is classified by a majority vote among its K nearest neighbors. KNN finds the nearest neighbors using a distance measure such as the Euclidean or Manhattan distance. The Euclidean distance was used in this study.

- (b) SVM (Support Vector Machine): SVM is a supervised machine learning algorithm. A supervised model learns from past input data and makes predictions for future inputs. SVM is primarily used for classification, though it can also solve regression problems. In the SVM algorithm, the support vectors are the data points of each class that lie closest to the separating hyperplane. The distance between the hyperplane and the support vectors should be as large as possible, so the optimal hyperplane is the one with the maximum distance to the support vectors of any class. The distance between the support vectors of different classes is defined as the distance margin. It is calculated as the sum of D− and D+, where D− is the shortest distance from the hyperplane to the closest negative point and D+ is the shortest distance from the hyperplane to the closest positive point. SVM aims to find the largest distance margin, which yields the optimal hyperplane and, in turn, good classification results. For non-linear data, or where the hyperplane has a low or no margin, there is a high chance of misclassifying data points. In such scenarios, kernel functions are used: they take the low-dimensional feature space as input and map it into a higher-dimensional feature space in which the data are easier to separate and classify. Support vector machines are commonly applied to face detection, text and hypertext categorization, image classification, and bioinformatics.

- (c) Random Forests: The random forests algorithm is one of the most popular and powerful supervised machine learning algorithms and can perform both regression and classification tasks. Random forests combine the simplicity of decision trees with flexibility, resulting in a large improvement in accuracy. In general, the more trees in the forest, the more robust the prediction. The use of multiple trees reduces the risk of overfitting. The algorithm runs efficiently and produces highly accurate predictions on large datasets. Random forests can maintain accuracy even when a large proportion of the data is missing. To classify a new object based on its attributes, each tree gives a classification result according to its own rules; in effect, each tree casts a vote. The forest chooses the class that receives the most votes across all of its trees.
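To make the voting idea concrete, here is a small standard-library sketch of K-nearest-neighbour classification with the Euclidean distance. It is an illustration of the technique only, not the implementation used in the experiments; the two-dimensional points and the class names "A" and "B" are invented for the example.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(train_points, train_labels, query, k=3):
    """Label `query` by majority vote among its k nearest training points."""
    ranked = sorted(
        zip(train_points, train_labels),
        key=lambda pair: euclidean(pair[0], query),
    )
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Toy data: three points of class "A" near the origin, two of class "B" near (1, 1).
train_points = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (1.0, 1.0), (0.9, 1.1)]
train_labels = ["A", "A", "A", "B", "B"]

print(knn_predict(train_points, train_labels, (0.15, 0.15)))  # A
print(knn_predict(train_points, train_labels, (0.95, 1.0)))   # B
```

The same majority-vote step is what a random forest applies across its trees, with each tree's prediction taking the place of a neighbour's label.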

4. Results

| Algorithm 1: Classification of Android malware families |
| --- |
| Input: Malicious applications from the DREBIN dataset |
| Result: Classification of Android malware families |
| Step 1. Import all the necessary libraries. |
| Step 2. Create an empty list for storing the training data. |
| train = [] |
| Step 3. Create the list of 15 unique combinations. |
| combi_list=['CR','AM','RS','CL','CR+AM','CR+RS','CR+CL','AM+RS','AM+CL', |
|     'RS+CL','CR+AM+RS','CR+AM+CL','CR+RS+CL','AM+RS+CL','CR+AM+RS+CL'] |
| Step 4. Open the pickle file from the local drive location in binary mode. |
| fw = open('local/content/drive/My Drive/allfiles.pckl','rb') |
| Step 5. Load the object from the file for further processing. |
| obj=pickle.load(fw) |
| Step 6. For every unique combination comb listed in Step 3: |
| [alldata,label,flist]=Fimg(obj,comb) |
| TRAINDATA=numpy.array(alldata) |
| train_L=numpy.array(label) |
| model_cnn,train_all,test_label,pred_prob=cnn_model(TRAINDATA,train_L) |
| Step 7. Split the testing and training data and set up the features and labels. |
| [X_train, X_test, train_label, test_label] = train_test_split(train_all, |
|     train_L, test_size=0.33, random_state=31,stratify=train_L) |
| feat_layer= K.function([model_cnn.layers[0].input], |
|     [model_cnn.layers[12].output]) |
| for i in range(0,len(X_train)): |
|     feat=feat_layer([X_train[i:i+1,:,:,:]])[0] |
|     if(i==0): |
|         cnn_train=feat |
|     else: |
|         cnn_train=numpy.concatenate((cnn_train,feat),axis=0) |
| end for |
| for i in range(0,len(X_test)): |
|     feat=feat_layer([X_test[i:i+1,:,:,:]])[0] |
|     if(i==0): |
|         cnn_test=feat |
|     else: |
|         cnn_test=numpy.concatenate((cnn_test,feat),axis=0) |
| end for |
| Step 8. Print the results. |
| Metrics=numpy.zeros((20,4)) |
| CONFUSION=[] |
| AUC=[] |
| print('CNN results') |
| Metrics[0:4,:],conf,auc_value=eval_classi(cnn_train,cnn_test, |
|     train_label,test_label,'ORG_CNN_') |
| CONFUSION.append(conf) |
| AUC.append(auc_value) |
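Algorithm 1 collects a confusion matrix for each classifier but does not show how `eval_classi` computes it. As a hedged illustration of what such a matrix contains, and not a reconstruction of `eval_classi`, the sketch below builds one from integer labels and derives the accuracy from it; the label values are invented.

```python
def confusion_matrix(true_labels, pred_labels, num_classes):
    """Rows index the true class, columns the predicted class."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for true, pred in zip(true_labels, pred_labels):
        matrix[true][pred] += 1
    return matrix


def accuracy(matrix):
    """Fraction of samples on the diagonal (correct predictions)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


# Toy example with three classes and six samples.
true_labels = [0, 0, 1, 1, 2, 2]
pred_labels = [0, 1, 1, 1, 2, 0]

cm = confusion_matrix(true_labels, pred_labels, 3)
print(cm)            # [[1, 1, 0], [0, 2, 0], [1, 0, 1]]
print(accuracy(cm))  # 0.6666666666666666
```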

| Algorithm 2: Loading the malware families from the path using the above procedure |
| --- |
| Input: Call made from Algorithm 1 to read the families |
| Result: Classes with count, names and labels of the family |
| procedure read_family(): |
|     tl=pd.read_csv('local/content/drive/My Drive/sha256_family.csv', |
|         header=None) |
|     tl.drop(tl.index[0], inplace=True)  # drop the header row |
|     labelencoder_X = LabelEncoder() |
|     Nlabels=labelencoder_X.fit_transform(tl[1])  # family name -> integer label |
|     qa=pd.Series(Nlabels).value_counts()  # families sorted by sample count |
|     Sclasses=(qa[0:20].index).values  # the 20 most frequent families |
|     allnames=tl[0].tolist() |
|     return Sclasses,allnames,Nlabels |
| end procedure |
| procedure get_label(fname,allnames,Nlabels,Sclasses): |
|     idxC=allnames.index(fname) |
|     idxclass=Nlabels[idxC] |
|     TMP=numpy.where(Sclasses==idxclass) |
|     return int(TMP[0][0])  # position of the family among the top 20 |
| end procedure |
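The selection logic of Algorithm 2 can be illustrated without pandas or scikit-learn. The sketch below is a simplified stand-in, not the authors' code: it works directly on family names rather than encoded integers, and the function `select_top_families` and the sample counts are assumptions made for the example.

```python
from collections import Counter


def select_top_families(families, top_k=20):
    """Return the top_k most frequent family names, most frequent first."""
    return [name for name, _ in Counter(families).most_common(top_k)]


def get_label(sample_family, top_families):
    """Map a family name to its rank among the retained families.

    Returns None when the family was not retained (an assumption of this
    sketch; Algorithm 2 does not define that case).
    """
    try:
        return top_families.index(sample_family)
    except ValueError:
        return None


# Invented per-sample family annotations, for illustration only.
families = ["FakeInstaller"] * 3 + ["DroidKungFu"] * 2 + ["Plankton"]

top = select_top_families(families, top_k=2)
print(top)                            # ['FakeInstaller', 'DroidKungFu']
print(get_label("DroidKungFu", top))  # 1
print(get_label("Plankton", top))     # None
```

Keeping only the most frequent families yields class labels 0 to 19 when `top_k=20`, which matches the 20 output neurons of the CNN.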

| Algorithm 3: Training of CNN model |
| --- |
| Input: Malware images |
| Result: Trained CNN model |
| Step 1. To train the CNN model, the required layers (Conv2D, MaxPooling2D, Activation, Dropout, Flatten, and Dense) are imported. |
| Step 2. A Sequential object is created, which defines the model of the neural network. |
| model = tf.keras.Sequential() |
| Step 3. The next step is to build and train the CNN on the malware images of different families. Since we are dealing with 2D grayscale malware images, the first convolutional layer is added to the model as a Conv2D layer. |
| model.add(Conv2D(32,(7,7),strides=1,padding='valid', kernel_initializer= |
|     'glorot_uniform', input_shape=(108,108,1),use_bias=True)) |
| Step 4. In the convolution layer, each filter moves across the entire image; at every position, the pixel values under the filter are multiplied by the corresponding filter weights and the products are summed to give the output. |
| Step 5. The ReLU activation function is applied to remove all the negative values from the output matrix of the convolution layer. |
| tf.keras.layers.ReLU(max_value=None, negative_slope=0.0, threshold=0.0) |
| Step 6. The output of the ReLU activation function is fed to the MaxPooling layer. The pooling layers that follow the first, second, and third convolutional layers use max filters of size 3 × 3, 3 × 3, and 2 × 2, respectively. |
| model.add(MaxPooling2D(pool_size=(3,3))) |
| Step 7. Batch normalization is applied for the stable learning of the network. |
| model.add(BatchNormalization()) |
| Step 8. A Dropout layer is used to reduce overfitting. The dropout ratio is set to 0.5. |
| model.add(Dropout(0.5)) |
| Step 9. More layers of convolution, ReLU, pooling, batch normalization, and dropout are stacked up. |
| model.add(Conv2D(128,(5,5),strides=1,padding='valid', |
|     kernel_initializer='glorot_uniform',use_bias=True)) |
| tf.keras.layers.ReLU(max_value=None, negative_slope=0.0, threshold=0.0) |
| model.add(MaxPooling2D(pool_size=(3,3))) |
| model.add(BatchNormalization()) |
| model.add(Dropout(0.5)) |
| model.add(Conv2D(256,(3,3),strides=1,padding='valid', |
|     kernel_initializer='glorot_uniform',use_bias=True)) |
| tf.keras.layers.ReLU(max_value=None, negative_slope=0.0, threshold=0.0) |
| model.add(MaxPooling2D(pool_size=(2,2))) |
| model.add(BatchNormalization()) |
| model.add(Dropout(0.5)) |
| Step 10. The flatten layer takes the output of the previous layers and flattens it into a one-dimensional tensor. The shrunk malware images are put into a single list or vector. |
| model.add(Flatten()) |
| Step 11. The flattened malware images are then fed into the fully connected (dense) layers. Three dense layers D1, D2, and D3 with 50, 100, and 200 neurons, respectively, have been added to the CNN architecture. |
| model.add(Dense(50,kernel_initializer='glorot_uniform',use_bias=True)) |
| tf.keras.layers.ReLU(max_value=None, negative_slope=0.0, threshold=0.0) |
| model.add(Dense(100,kernel_initializer='glorot_uniform',use_bias=True)) |
| tf.keras.layers.ReLU(max_value=None, negative_slope=0.0, threshold=0.0) |
| model.add(Dense(200,kernel_initializer='glorot_uniform',use_bias=True)) |
| tf.keras.layers.ReLU(max_value=None, negative_slope=0.0, threshold=0.0) |
| Step 12. Apply one more dense layer as the output layer with 20 nodes. It classifies the malware images with respect to their families. |
| Step 13. Apply the softmax activation function in the last layer. |
| model.add(Dense(20,activation='softmax')) |
| Step 14. In the compilation phase of the model, apply the Adam optimizer and sparse categorical cross-entropy as the loss. |
| model.compile(loss='sparse_categorical_crossentropy', optimizer='adam', |
|     metrics=['accuracy']) |
| Step 15. The metrics argument is given as a list containing the single string accuracy. To train the model, the data generator is called. The model is trained for 100 epochs. |
| model.fit(datagen1.flow(X_train, train_label, batch_size=16), |
|     steps_per_epoch=len(X_train)//16,epochs=100,verbose=1) |
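Steps 13 and 14 pair a softmax output with a sparse categorical cross-entropy loss, where "sparse" means the target is an integer class index rather than a one-hot vector. The standard-library sketch below works through that arithmetic for a single sample; it is a didactic illustration with made-up logits, not a substitute for the Keras implementation.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to one."""
    shift = max(logits)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(value - shift) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sparse_categorical_crossentropy(probs, true_index):
    """Negative log-probability assigned to the true class index."""
    return -math.log(probs[true_index])


# Made-up scores for a three-class toy problem; class 0 is the true class.
logits = [2.0, 1.0, 0.1]
probs = softmax(logits)
loss = sparse_categorical_crossentropy(probs, 0)
print(probs)
print(loss)

# With 20 families and a completely uninformative (uniform) prediction,
# the loss equals ln(20), roughly 2.996.
uniform_loss = sparse_categorical_crossentropy(softmax([0.0] * 20), 5)
print(uniform_loss)
```

The uniform case gives a useful reference point: a training loss that stays near ln(20) suggests the network has not yet learned to separate the 20 families.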

| Algorithm 4: Transformation of malware binary into images |
| --- |
| Input: Malware binary |
| Result: Malware images of the family depending upon the combination |
| procedure transform(Family[i], comb): |
|     if(comb==1): |
|         Result = make_image(Family[i], extract certificate files from each sample of Family[i]) |
|     elif(comb==2): |
|         Result = make_image(Family[i], extract android manifest files from each sample of Family[i]) |
|     elif(comb==3): |
|         Result = make_image(Family[i], extract resource files from each sample of Family[i]) |
|     elif(comb==4): |
|         Result = make_image(Family[i], extract classes.dex files from each sample of Family[i]) |
|     elif(comb==5): |
|         Result = make_image(Family[i], extract certificate and android manifest files from each sample of Family[i]) |
|     elif(comb==6): |
|         Result = make_image(Family[i], extract certificate and resource files from each sample of Family[i]) |
|     elif(comb==7): |
|         Result = make_image(Family[i], extract certificate and classes.dex files from each sample of Family[i]) |
|     elif(comb==8): |
|         Result = make_image(Family[i], extract android manifest and resource files from each sample of Family[i]) |
|     elif(comb==9): |
|         Result = make_image(Family[i], extract android manifest and classes.dex files from each sample of Family[i]) |
|     elif(comb==10): |
|         Result = make_image(Family[i], extract resource and classes.dex files from each sample of Family[i]) |
|     elif(comb==11): |
|         Result = make_image(Family[i], extract certificate, android manifest, and resource files from each sample of Family[i]) |
|     elif(comb==12): |
|         Result = make_image(Family[i], extract certificate, android manifest, and classes.dex files from each sample of Family[i]) |
|     elif(comb==13): |
|         Result = make_image(Family[i], extract certificate, resource, and classes.dex files from each sample of Family[i]) |
|     elif(comb==14): |
|         Result = make_image(Family[i], extract android manifest, resource, and classes.dex files from each sample of Family[i]) |
|     elif(comb==15): |
|         Result = make_image(Family[i], extract certificate, android manifest, resource, and classes.dex files from each sample of Family[i]) |
| end procedure |
| procedure make_image(ar,filesizelist,widthlist): |
|     ar_len=len(ar)/1024  # size of the byte string in kB |
|     width=0 |
|     for cidx in range(1,len(filesizelist)): |
|         if(ar_len>=filesizelist[cidx-1] and ar_len<filesizelist[cidx]): |
|             width=widthlist[cidx-1] |
|     if(width==0): |
|         width=1024  # default width when no size range matches |
|     rem1=len(ar)%width  # trailing bytes that do not fill a row |
|     n=array.array("B") |
|     n.frombytes(ar) |
|     a=array.array("B") |
|     a=n[0:len(ar)-rem1] |
|     if (len(a)<width): |
|         return numpy.array([])  # fewer bytes than one full row |
|     img=numpy.reshape(a,(int(len(a)/width),width)) |
|     img=numpy.uint8(img) |
|     return img |
| end procedure |
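The row-building step of `make_image` is easier to follow on a tiny input. The following standard-library sketch mirrors its logic with plain lists in place of NumPy arrays. The size thresholds and widths in the example are hypothetical, since the values of `filesizelist` and `widthlist` are not given in this section, and the helper names `pick_width` and `bytes_to_rows` are introduced only for illustration.

```python
def pick_width(size_kb, filesizelist, widthlist, default=1024):
    """Choose the image width from the size range that contains size_kb."""
    for idx in range(1, len(filesizelist)):
        if filesizelist[idx - 1] <= size_kb < filesizelist[idx]:
            return widthlist[idx - 1]
    return default  # no range matched


def bytes_to_rows(data, width):
    """Split data into full rows of `width` bytes, dropping the remainder."""
    usable = len(data) - (len(data) % width)
    if usable < width:
        return []  # not enough bytes for a single row
    return [list(data[i:i + width]) for i in range(0, usable, width)]


# Hypothetical size ranges (kB) and widths, for illustration only.
filesizelist = [0, 10, 30, 60]
widthlist = [32, 64, 128]

print(pick_width(5, filesizelist, widthlist))    # 32
print(pick_width(45, filesizelist, widthlist))   # 128
print(pick_width(100, filesizelist, widthlist))  # 1024

# Ten bytes at width 4 give two full rows; the last two bytes are dropped.
rows = bytes_to_rows(bytes(range(10)), width=4)
print(rows)  # [[0, 1, 2, 3], [4, 5, 6, 7]]
```

Each byte becomes one grayscale pixel in the range 0 to 255, so the row list corresponds to the two-dimensional `uint8` array that `make_image` returns.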

5. Conclusions and Future Scope

Author Contributions

Funding

Conflicts of Interest

References

- Qamar, A.; Karim, A.; Chang, V. Mobile malware attacks: Review, taxonomy and future directions. Future Gener. Comput. Syst. 2019, 97, 887–909. [Google Scholar] [CrossRef]

- Dong, S.; Li, M.; Diao, W.; Liu, X.; Liu, J.; Li, Z.; Xu, F.; Chen, K.; Wang, X.; Zhang, K. Understanding android obfuscation techniques: A large-scale investigation in the wild. In Proceedings of the International Conference on Security and Privacy in Communication Systems, Singapore, 8–10 August 2018; Springer: Cham, Switzerland, 2018; pp. 172–192. [Google Scholar]

- Maiorca, D.; Ariu, D.; Corona, I.; Aresu, M.; Giacinto, G. Stealth attacks: An extended insight into the obfuscation effects on Android malware. Comput. Secur. 2015, 51, 16–31. [Google Scholar] [CrossRef]

- Suarez-Tangil, G. DroidSieve: Fast and Accurate Classification of Obfuscated Android Malware. In Proceedings of the Seventh ACM on Conference on Data and Application Security and Privacy, Scottsdale, AZ, USA, 22–24 March 2017; pp. 309–320. [Google Scholar]

- Bakour, K.; Ünver, H.M.; Ghanem, R. A Deep Camouflage: Evaluating Android’s Anti-malware Systems Robustness Against Hybridization of Obfuscation Techniques with Injection Attacks. Arab. J. Sci. Eng. 2019, 44, 9333–9347. [Google Scholar] [CrossRef]

- Garcia, J.; Hammad, M.; Malek, S. Lightweight, obfuscation-Resilient detection and family identification of android malware. ACM Trans. Softw. Eng. Methodol. 2018, 26, 1–29. [Google Scholar] [CrossRef]

- Rastogi, V.; Chen, Y.; Jiang, X. Catch Me If You Can: Evaluating Android Anti-Malware against Transformation Attacks. IEEE Trans. Inf. Forensics Secur. 2014, 9, 99–108. [Google Scholar] [CrossRef]

- Mirzaei, O.; de Fuentes, J.; Tapiador, J.; Gonzalez-Manzano, L. AndrODet: An adaptive Android obfuscation detector. Future Gener. Comput. Syst. 2019, 90, 240–261. [Google Scholar] [CrossRef]

- Balachandran, V.; Sufatrio; Tan, D.J.; Thing, V.L. Control flow obfuscation for Android applications. Comput. Secur. 2016, 61, 72–93. [Google Scholar] [CrossRef]

- Vinayakumar, R.; Alazab, M.; Soman, K.P.; Poornachandran, P.; Venkatraman, S. Robust intelligent malware detection using deep learning. IEEE Access 2019, 7, 46717–46738. [Google Scholar] [CrossRef]

- Fu, J.; Xue, J.; Wang, Y.; Liu, Z.; Shan, C. Malware Visualization for Fine-Grained Classification. IEEE Access 2018, 6, 14510–14523. [Google Scholar] [CrossRef]

- Wei, F.; Li, Y.; Roy, S.; Ou, X.; Zhou, W. Deep Ground Truth Analysis of Current Android Malware. In Proceedings of the International Conference on Detection of Intrusions and Malware, and Vulnerability Assessment, Bonn, Germany, 6–7 July 2017; Springer: Cham, Switzerland, 2017; pp. 252–276. [Google Scholar]

- Xie, N.; Wang, X.; Wang, W.; Liu, J. Fingerprinting Android malware families. Front. Comput. Sci. 2019, 13, 637–646. [Google Scholar] [CrossRef]

- Ni, S.; Qian, Q.; Zhang, R. Malware identification using visualization images and deep learning. Comput. Secur. 2018, 77, 871–885. [Google Scholar] [CrossRef]

- Türker, S.; Can, A.B. AndMFC: Android Malware Family Classification Framework. In Proceedings of the 2019 IEEE 30th International Symposium on Personal, Indoor and Mobile Radio Communications (PIMRC Workshops), Istanbul, Turkey, 8–11 September 2019; pp. 1–6. [Google Scholar]

- Vasan, D.; Alazab, M.; Wassan, S.; Naeem, H.; Safaei, B.; Zheng, Q. IMCFN: Image-based malware classification using fine-tuned convolutional neural network architecture. Comput. Netw. 2020, 171, 107138. [Google Scholar] [CrossRef]

- Aafer, Y.; Du, W.; Yin, H. DroidAPIMiner: Mining API-Level Features for Robust Malware Detection in Android. Secur. Priv. Commun. Netw. 2013, 127, 86–103. [Google Scholar]

- Feng, Y.; Dillig, I.; Anand, S.; Aiken, A. Apposcopy: Automated Detection of Android Malware. In Proceedings of the 2nd International Workshop on Software Development Lifecycle for Mobile, Hong Kong, China, 17 November 2014; pp. 13–14. [Google Scholar]

- Arzt, S.; Rasthofer, S.; Fritz, C.; Bodden, E.; Bartel, A.; Klein, J.; Le Traon, Y.; Octeau, D.; McDaniel, P. Flowdroid: Precise context, flow, field, object-sensitive and lifecycle-aware taint analysis for android apps. ACM Sigplan Not. 2014, 49, 259–269. [Google Scholar] [CrossRef]

- Li, L. Iccta: Detecting inter-component privacy leaks in android apps. In Proceedings of the 2015 IEEE/ACM 37th IEEE International Conference on Software Engineering, Florence, Italy, 16–24 May 2015; pp. 280–291. [Google Scholar]

- Feizollah, A.; Anuar, N.B.; Salleh, R.; Suarez-Tangil, G.; Furnell, S. AndroDialysis: Analysis of Android Intent Effectiveness in Malware Detection. Comput. Secur. 2017, 65, 121–134. [Google Scholar] [CrossRef]

- Martín, A.; Menéndez, H.D.; Camacho, D. MOCDroid: Multi-objective evolutionary classifier for Android malware detection. Soft Comput. 2017, 21, 7405–7415. [Google Scholar] [CrossRef]

- Wang, W.; Zhao, M.; Gao, Z.; Xu, G.; Xian, H.; Li, Y.; Zhang, X. Constructing features for detecting android malicious applications: issues, taxonomy and directions. IEEE Access 2019, 7, 67602–67631. [Google Scholar] [CrossRef]

- Naway, A.; Li, Y. A review on the use of deep learning in android malware detection. arXiv 2018, arXiv:1812.10360. [Google Scholar]

- Aslan, O.; Samet, R. A Comprehensive Review on Malware Detection Approaches. IEEE Access 2020, 8, 6249–6271. [Google Scholar] [CrossRef]

- Venkatraman, S.; Alazab, M.; Vinayakumar, R. A hybrid deep learning image-based analysis for effective malware detection. J. Inf. Secur. Appl. 2019, 47, 377–389. [Google Scholar] [CrossRef]

- Cai, H.; Meng, N.; Ryder, B.; Yao, D. DroidCat: Effective Android Malware Detection and Categorization via App-Level Profiling. IEEE Trans. Inf. Forensics Secur. 2019, 14, 1455–1470. [Google Scholar] [CrossRef]

- Martín, A.; Rodríguez-Fernández, V.; Camacho, D. CANDYMAN: Classifying Android malware families by modelling dynamic traces with Markov chains. Eng. Appl. Artif. Intell. 2018, 74, 121–133. [Google Scholar] [CrossRef]

- You, W.; Liang, B.; Shi, W.; Wang, P.; Zhang, X. TaintMan: An ART-compatible dynamic taint analysis framework on unmodified and non-rooted Android devices. IEEE Trans. Dependable Secur. Comput 2017, 17, 209–222. [Google Scholar] [CrossRef]

- Dini, G.; Martinelli, F.; Matteucci, I.; Petrocchi, M.; Saracino, A.; Sgandurra, D. Risk analysis of Android applications: A user-centric solution. Futur. Gener. Comput. Syst. 2018, 80, 505–518. [Google Scholar] [CrossRef]

- Teufl, P.; Ferk, M.; Fitzek, A.; Hein, D.; Kraxberger, S.; Orthacker, C. Malware detection by applying knowledge discovery processes to application metadata on the Android Market (Google Play). Secur. Commun. Netw. 2016, 9, 389–419. [Google Scholar] [CrossRef]

- Alzaylaee, M.K.; Yerima, S.Y.; Sezer, S. DynaLog: An automated dynamic analysis framework for characterizing android applications. In Proceedings of the 2016 International Conference On Cyber Security And Protection Of Digital Services (Cyber Security), London, UK, 13–14 June 2016; pp. 1–8. [Google Scholar]

- Sadeghi, A.; Bagheri, H.; Garcia, J.; Malek, S. A Taxonomy and Qualitative Comparison of Program Analysis Techniques for Security Assessment of Android Software. IEEE Trans. Softw. Eng. 2017, 43, 492–530. [Google Scholar] [CrossRef]

- Faruki, P.; Bharmal, A.; Laxmi, V.; Ganmoor, V.; Gaur, M.S.; Conti, M.; Rajarajan, M. Android Security: A Survey of Issues, Malware Penetration, and Defenses. IEEE Commun. Surv. Tutor. 2015, 17, 998–1022. [Google Scholar] [CrossRef]

- Alzaylaee, M.K.; Yerima, S.Y.; Sezer, S. EMULATOR vs REAL PHONE: Android Malware Detection Using Machine Learning. In Proceedings of the 3rd ACM on International Workshop on Security and Privacy Analytics, Scottsdale, AZ, USA, 24 March 2017; pp. 65–72. [Google Scholar]

- Vidas, T.; Christin, N. Evading android runtime analysis via sandbox detection. In Proceedings of the 9th ACM Symposium on Information, Computer and Communications Security, Kyoto, Japan, 4–6 June 2014; pp. 447–458. [Google Scholar]

- Gascon, H.; Yamaguchi, F.; Arp, D.; Rieck, K. Structural detection of android malware using embedded call graphs. In Proceedings of the 2013 ACM Workshop on Artificial Intelligence and Security, Berlin, Germany, 4 November 2013; pp. 45–54. [Google Scholar]

- Su, D.; Liu, J.; Wang, X.; Wang, W. Detecting Android Locker-Ransomware on Chinese Social Networks. IEEE Access 2018, 7, 20381–20393. [Google Scholar] [CrossRef]

- Idrees, F.; Rajarajan, M.; Conti, M.; Chen, T.M.; Rahulamathavan, Y. PIndroid: A novel Android malware detection system using ensemble learning methods. Comput. Secur. 2017, 68, 36–46. [Google Scholar] [CrossRef]

- Jung, B.; Kim, T.; Im, E.G. Malware classification using byte sequence information. In Proceedings of the 2018 Conference on Research in Adaptive and Convergent Systems, Honolulu, HI, USA, 9–12 October 2018; pp. 143–148.

- Wu, S.; Wang, P.; Li, X.; Zhang, Y. Effective detection of android malware based on the usage of data flow APIs and machine learning. Inf. Softw. Technol. 2016, 75, 17–25.

- Suarez-Tangil, G.; Tapiador, J.E.; Peris-Lopez, P.; Blasco, J. Dendroid: A text mining approach to analyzing and classifying code structures in Android malware families. Expert Syst. Appl. 2014, 41, 1104–1117.

- Dash, S.K.; Suarez-Tangil, G.; Khan, S.; Tam, K.; Ahmadi, M.; Kinder, J.; Cavallaro, L. DroidScribe: Classifying Android Malware Based on Runtime Behavior. In Proceedings of the 2016 IEEE Security and Privacy Workshops (SPW), San Jose, CA, USA, 22–26 May 2016; pp. 252–261.

- Yang, C.; Xu, Z.; Gu, G.; Yegneswaran, V.; Porras, P. DroidMiner: Automated mining and characterization of fine-grained malicious behaviors in android applications. In Computer Security – ESORICS 2014; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2014; Volume 8712, pp. 163–182.

- Hanif, M.; Naqvi, R.A.; Abbas, S.; Khan, M.A.; Iqbal, N. A novel and efficient 3D multiple images encryption scheme based on chaotic systems and swapping operations. IEEE Access 2020, 8, 123536–123555.

- Naqvi, R.A.; Arsalan, M.; Rehman, A.; Rehman, A.U.; Loh, W.K.; Paul, A. Deep Learning-Based Drivers Emotion Classification System in Time Series Data for Remote Applications. Remote Sens. 2020, 12, 587.

- Arp, D.; Spreitzenbarth, M.; Hübner, M.; Gascon, H.; Rieck, K. Drebin: Effective and Explainable Detection of Android Malware in Your Pocket. In Proceedings of the 2014 Network and Distributed System Security (NDSS) Symposium, San Diego, CA, USA, 23–26 February 2014; pp. 23–26.

- Nataraj, L.; Kirat, D.; Manjunath, B.S.; Vigna, G. Sarvam: Search and retrieval of malware. In Proceedings of the Annual Computer Security Applications Conference (ACSAC) Workshop on Next Generation Malware Attacks and Defense (NGMAD), New Orleans, LA, USA, 10 December 2013.

- Nataraj, L.; Yegneswaran, V.; Porras, P.; Zhang, J. A comparative assessment of malware classification using binary texture analysis and dynamic analysis. In Proceedings of the 4th ACM Workshop on Security and Artificial Intelligence, Chicago, IL, USA, 21 October 2011; pp. 21–30.

- Farrokhmanesh, M.; Hamzeh, A. A novel method for malware detection using audio signal processing techniques. In Proceedings of the 2016 Artificial Intelligence and Robotics (IRANOPEN), Qazvin, Iran, 9 April 2016; pp. 85–91.

- Zhang, J.; Qin, Z.; Yin, H.; Ou, L.; Xiao, S.; Hu, Y. Malware variant detection using opcode image recognition with small training sets. In Proceedings of the 2016 25th International Conference on Computer Communication and Networks (ICCCN), Waikoloa, HI, USA, 1–4 August 2016; pp. 1–9.

- Han, K.; Kang, B.; Im, E.G. Malware analysis using visualized images and entropy graphs. Int. J. Inf. Secur. 2015, 14, 1–15.

- Han, K.; Kang, B.; Im, E.G. Malware Analysis Using Visualized Image Matrices. Sci. World J. 2014, 2014, 132713.

- Kumar, A.; Sagar, K.P.; Kuppusamy, K.S.; Aghila, G. Machine learning based malware classification for Android applications using multimodal image representations. In Proceedings of the 2016 10th International Conference on Intelligent Systems and Control (ISCO), Coimbatore, Tamil Nadu, India, 7–8 January 2016; pp. 1–6.

- Yen, Y.S.; Sun, H.M. An android mutation malware detection based on deep learning using visualization of importance from codes. Microelectron. Reliab. 2019, 93, 109–114.

- Li, Y.; Liu, F.; Du, Z.; Zhang, D. A Simhash-based integrative features extraction algorithm for malware detection. Algorithms 2018, 11, 124.

- Li, Y.; Jang, J.; Hu, X.; Ou, X. Android malware clustering through malicious payload mining. In Proceedings of the International Symposium on Research in Attacks, Intrusions, and Defenses, Atlanta, GA, USA, 18–20 September 2017; pp. 192–214.

- Luo, J.S.; Lo, D.C.T. Binary malware image classification using machine learning with local binary pattern. In Proceedings of the 2017 IEEE International Conference on Big Data (Big Data), Boston, MA, USA, 11–14 December 2017; pp. 4664–4667.

- Jain, A.; Gonzalez, H.; Stakhanova, N. Enriching reverse engineering through visual exploration of Android binaries. In Proceedings of the 5th Program Protection and Reverse Engineering Workshop, Los Angeles, CA, USA, 8 December 2015; pp. 1–9.

- Ning, Y. Fingerprinting Android Obfuscation Tools Using Visualization. Ph.D. Dissertation, Dept. Comput. Sci., New Brunswick Univ., Fredericton, NB, Canada, 2017.

- Ieracitano, C.; Adeel, A.; Morabito, F.C.; Hussain, A. A novel statistical analysis and autoencoder driven intelligent intrusion detection approach. Neurocomputing 2020, 387, 51–62.

- Kasongo, S.M.; Sun, Y. A deep learning method with wrapper based feature extraction for wireless intrusion detection system. Comput. Secur. 2020, 92, 10172.

- Alswaina, F.; Elleithy, K. Android Malware Family Classification and Analysis: Current Status and Future Directions. Electronics 2020, 9, 942.

- Hanin, B. Which neural net architectures give rise to exploding and vanishing gradients? Adv. Neural Inf. Process. Syst. 2018, 582–591.

- Kumar, S.; Hussain, L.; Banarjee, S.; Reza, M. Energy load forecasting using deep learning approach-LSTM and GRU in spark cluster. In Proceedings of the 5th International Conference on Emerging Applications of Information Technology (EAIT), Shibpur, West Bengal, India, 12–13 January 2018; pp. 1–4.

- Gong, W.; Chen, H.; Zhang, Z.; Zhang, M.; Wang, R.; Guan, C.; Wang, Q. A novel deep learning method for intelligent fault diagnosis of rotating machinery based on improved CNN-SVM and multichannel data fusion. Sensors 2019, 19, 1693.

- Niu, X.X.; Suen, C.Y. A novel hybrid CNN–SVM classifier for recognizing handwritten digits. Pattern Recognit. 2012, 45, 1318–1325.

- Sun, J.; Wu, Z.; Yin, Z.; Yang, Z. SVM-CNN-Based Fusion Algorithm for Vehicle Navigation Considering Atypical Observations. IEEE Signal Process. Lett. 2018, 26, 212–216.

- Xue, D.X.; Zhang, R.; Feng, H.; Wang, Y.L. CNN-SVM for microvascular morphological type recognition with data augmentation. J. Med. Biol. Eng. 2016, 36, 755–764.

- Szarvas, M.; Yoshizawa, A.; Yamamoto, M.; Ogata, J. Pedestrian detection with convolutional neural networks. In Proceedings of the IEEE Intelligent Vehicles Symposium, Las Vegas, NV, USA, 6–8 June 2005; pp. 224–229.

- Mori, K.; Matsugu, M.; Suzuki, T. Face Recognition Using SVM Fed with Intermediate Output of CNN for Face Detection. In Proceedings of the MVA2005 IAPR Conference on Machine Vision Applications, Tsukuba, Japan, 16–18 May 2005; pp. 410–413.

- Vinayakumar, R.; Soman, K.; Poornachandran, P.; Sachin Kumar, S. Detecting Android malware using long short-term memory (LSTM). J. Intell. Fuzzy Syst. 2018, 34, 1277–1288.

- Zhang, M.; Duan, Y.; Yin, H.; Zhao, Z. Semantics-aware android malware classification using weighted contextual api dependency graphs. In Proceedings of the 2014 ACM SIGSAC Conference on Computer and Communications Security, Scottsdale, AZ, USA, 3–7 November 2014; pp. 1105–1116.

- Martinelli, F.; Marulli, F.; Mercaldo, F. Evaluating convolutional neural network for effective mobile malware detection. Procedia Comput. Sci. 2017, 112, 2372–2381.

- Deshotels, L.; Notani, V.; Lakhotia, A. Droidlegacy: Automated familial classification of android malware. In Proceedings of the ACM SIGPLAN on Program Protection and Reverse Engineering Workshop, San Diego, CA, USA, 22–24 January 2014; pp. 1–12.

- Yuan, Z.; Lu, Y.; Xue, Y. Droiddetector: android malware characterization and detection using deep learning. Tsinghua Sci. Technol. 2016, 21, 114–123.

- Burguera, I.; Zurutuza, U.; Nadjm-Tehrani, S. Crowdroid: behavior-based malware detection system for android. In Proceedings of the 1st ACM Workshop on Security and Privacy in Smartphones and Mobile Devices, Chicago, IL, USA, 17 October 2011; pp. 15–26.

- Shaha, M.; Pawar, M. Transfer learning for image classification. In Proceedings of the 2nd International Conference on Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, Tamil Nadu, India, 29–30 March 2018; pp. 656–660.

- Rezende, E.; Ruppert, G.; Carvalho, T.; Theophilo, A.; Ramos, F.; de Geus, P. Malicious software classification using VGG16 deep neural networks bottleneck features. Inf. Technol. New Gener. 2018, 51–59.

| File Size | Image Width (pixels) |
|---|---|
| <50 KB | 64 |
| 50 KB~100 KB | 128 |
| 100 KB~200 KB | 256 |
| 200 KB~500 KB | 512 |
| 500 KB~1000 KB | 1024 |
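The mapping in the table above can be sketched in a few lines of Python. This is only an illustration, not the authors' implementation: the function names `image_width` and `bytes_to_rows` are hypothetical, the handling of exact boundary sizes and of files above 1000 KB is an assumption (the table does not specify either), and zero-padding the last row is likewise an assumption.

```python
from typing import List

# (upper size limit in KB, image width) pairs copied from the table above.
WIDTH_BY_MAX_SIZE_KB = [(50, 64), (100, 128), (200, 256), (500, 512), (1000, 1024)]


def image_width(size_bytes: int) -> int:
    """Return the image width for a file of the given size in bytes."""
    size_kb = size_bytes / 1024
    for max_kb, width in WIDTH_BY_MAX_SIZE_KB:
        if size_kb < max_kb:
            return width
    # Assumption: the table stops at 1000 KB, so larger files reuse the last width.
    return WIDTH_BY_MAX_SIZE_KB[-1][1]


def bytes_to_rows(data: bytes) -> List[List[int]]:
    """Lay raw bytes out as rows of 8-bit grayscale pixel values (0-255)."""
    width = image_width(len(data))
    # Assumption: pad the final row with zero bytes so every row has equal length.
    padded = data + bytes(-len(data) % width)
    return [list(padded[i:i + width]) for i in range(0, len(padded), width)]


if __name__ == "__main__":
    sample = bytes(range(100))           # stand-in for a small file's raw bytes
    rows = bytes_to_rows(sample)
    print(len(rows), "rows of", len(rows[0]), "pixels")
```

The image height then follows from the file size, since the number of rows is the byte count divided by the width, rounded up.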

| Name | Classes | CR * | AM * | RS * | CL * | CR+AM | CR+RS | CR+CL | AM+RS | AM+CL | RS+CL | CR+AM+RS | CR+AM+CL | CR+RS+CL | AM+RS+CL | CR+AM+RS+CL |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| FakeInstaller | 1 | 360 | 925 | 925 | 925 | 925 | 925 | 925 | 925 | 925 | 925 | 925 | 925 | 925 | 925 | 925 |
| DroidKungFu | 2 | 236 | 666 | 666 | 666 | 666 | 666 | 666 | 666 | 666 | 666 | 666 | 666 | 666 | 666 | 666 |
| Plankton | 3 | 439 | 625 | 625 | 625 | 625 | 625 | 625 | 625 | 625 | 625 | 625 | 625 | 625 | 625 | 625 |
| Opfake | 4 | 5 | 613 | 613 | 613 | 613 | 613 | 613 | 613 | 613 | 613 | 613 | 613 | 613 | 613 | 613 |
| GinMaster | 5 | 30 | 339 | 339 | 339 | 339 | 339 | 339 | 339 | 339 | 339 | 339 | 339 | 339 | 339 | 339 |
| BaseBridge | 6 | 13 | 329 | 329 | 329 | 329 | 329 | 329 | 329 | 329 | 329 | 329 | 329 | 329 | 329 | 329 |
| Iconosys | 7 | 152 | 152 | 152 | 152 | 152 | 152 | 152 | 152 | 152 | 152 | 152 | 152 | 152 | 152 | 152 |
| Kmin | 8 | 4 | 147 | 147 | 147 | 147 | 147 | 147 | 147 | 147 | 147 | 147 | 147 | 147 | 147 | 147 |
| FakeDoc | 9 | 107 | 132 | 132 | 132 | 132 | 132 | 132 | 132 | 132 | 132 | 132 | 132 | 132 | 132 | 132 |
| Geinimi | 10 | 91 | 91 | 91 | 91 | 91 | 91 | 91 | 91 | 91 | 91 | 91 | 91 | 91 | 91 | 91 |
| Adrd | 11 | 88 | 91 | 91 | 91 | 91 | 91 | 91 | 91 | 91 | 91 | 91 | 91 | 91 | 91 | 91 |
| DroidDream | 12 | 63 | 81 | 81 | 81 | 81 | 81 | 81 | 81 | 81 | 81 | 81 | 81 | 81 | 81 | 81 |
| ExploitLinuxLotoor | 13 | 39 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 |
| MobileTx | 14 | 20 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 |
| Glodream | 15 | 59 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 | 69 |
| FakeRun | 16 | 27 | 61 | 61 | 61 | 61 | 61 | 61 | 61 | 61 | 61 | 61 | 61 | 61 | 61 | 61 |
| SendPay | 17 | 22 | 59 | 59 | 59 | 59 | 59 | 59 | 59 | 59 | 59 | 59 | 59 | 59 | 59 | 59 |
| Gappusin | 18 | 51 | 58 | 58 | 58 | 58 | 58 | 58 | 58 | 58 | 58 | 58 | 58 | 58 | 58 | 58 |
| Imlog | 19 | 6 | 43 | 43 | 43 | 43 | 43 | 43 | 43 | 43 | 43 | 43 | 43 | 43 | 43 | 43 |
| SMSreg | 20 | 14 | 40 | 40 | 41 | 40 | 40 | 41 | 40 | 41 | 41 | 40 | 41 | 41 | 41 | 41 |
| All instances | | 1826 | 4659 | 4659 | 4660 | 4659 | 4659 | 4660 | 4659 | 4660 | 4660 | 4659 | 4660 | 4660 | 4660 | 4660 |

| Layer Number | Layer Type | Hyperparameter | Value |
|---|---|---|---|
| Layer 1 | Convolution Layer | Filter Size | 7 × 7 |
| | | Number of Filters | 32 |
| | | Activation | ReLU |
| Layer 2 | Pooling Layer | Pool Size | 3 × 3 |
| | | Pooling Type | Max-Pooling |
| Layer 3 | Batch Normalization Layer | | |
| Layer 4 | Dropout Layer | Rate | 0.5 |
| Layer 5 | Convolution Layer | Filter Size | 5 × 5 |
| | | Number of Filters | 128 |
| | | Activation | ReLU |
| Layer 6 | Pooling Layer | Pool Size | 3 × 3 |
| | | Pooling Type | Max-Pooling |
| Layer 7 | Batch Normalization Layer | | |
| Layer 8 | Dropout Layer | Rate | 0.5 |
| Layer 9 | Convolution Layer | Filter Size | 3 × 3 |
| | | Number of Filters | 256 |
| | | Activation | ReLU |
| Layer 10 | Pooling Layer | Pool Size | 2 × 2 |
| | | Pooling Type | Max-Pooling |
| Layer 11 | Batch Normalization Layer | | |
| Layer 12 | Dropout Layer | Rate | 0.5 |
| Layer 13 | Flatten Layer | | |
| Layer 14 | Dense Layer (D1) | Activation | ReLU |
| | | Neurons | 50 |
| Layer 15 | Dense Layer (D2) | Activation | ReLU |
| | | Neurons | 100 |
| Layer 16 | Dense Layer (D3) | Activation | ReLU |
| | | Neurons | 200 |
| Layer 17 | Dense Layer (D4) | Activation | Softmax |
| | | Neurons | 20 |
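To make the layer table above more concrete, the following dependency-free sketch traces how a square input would shrink through the three convolution and pooling stages and counts the weights of the convolution and dense layers. Several things here are assumptions that the table does not state: a 64 × 64 single-channel input, stride 1 with no padding for convolutions, and a pooling stride equal to the pool size. Batch normalization and dropout are skipped because they do not change the shape, and batch-normalization parameters are left out of the count. The name `trace_network` is hypothetical.

```python
# (kind, kernel or pool size, number of filters) in the order given in the table.
FEATURE_LAYERS = [
    ("conv", 7, 32), ("pool", 3, None),
    ("conv", 5, 128), ("pool", 3, None),
    ("conv", 3, 256), ("pool", 2, None),
]
DENSE_UNITS = [50, 100, 200, 20]  # D1 to D4; the last layer is the 20-way softmax


def trace_network(side: int, channels: int = 1):
    """Return (flattened size, conv parameter count, dense parameter count)."""
    conv_params = 0
    for kind, size, filters in FEATURE_LAYERS:
        if kind == "conv":
            # weights (size x size x in_channels x filters) plus one bias per filter
            conv_params += size * size * channels * filters + filters
            side = side - size + 1        # assumption: stride 1, no padding
            channels = filters
        else:
            side = side // size           # assumption: pooling stride == pool size
        if side < 1:
            raise ValueError("input too small for this layer stack")
    flat = side * side * channels
    dense_params, inputs = 0, flat
    for units in DENSE_UNITS:
        dense_params += inputs * units + units
        inputs = units
    return flat, conv_params, dense_params


if __name__ == "__main__":
    flat, conv_params, dense_params = trace_network(64)
    print("flattened features:", flat)
    print("conv parameters:", conv_params, "dense parameters:", dense_params)
```

Under these assumptions the convolution parameter count does not depend on the input size, while the size of the first dense layer does, so a different input resolution or padding scheme would change only the dense total.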

| S.No. | Image Combination | CNN | CNN-SVM | CNN-KNN | CNN-RF |
|---|---|---|---|---|---|
| 1 | CR | 83.58% | 82.92% | 77.11% | 83.42% |
| 2 | AM | 89.79% | 90.18% | 83.94% | 84.85% |
| 3 | RS | 86.86% | 88.56% | 86.02% | 84.53% |
| 4 | CL | 89.46% | 90.57% | 89.40% | 87.58% |
| 5 | CR+AM | 91.48% | 92.59% | 86.93% | 87.52% |
| 6 | CR+RS | 87.12% | 89.47% | 86.80% | 85.89% |
| 7 | CR+CL | 89.33% | 90.25% | 89.01% | 88.43% |
| 8 | AM+RS | 88.29% | 89.47% | 87.78% | 84.98% |
| 9 | AM+CL | 89.33% | 90.83% | 89.79% | 88.69% |
| 10 | RS+CL | 88.49% | 90.96% | 89.34% | 87.58% |
| 11 | CR+AM+RS | 89.46% | 90.77% | 88.75% | 85.50% |
| 12 | CR+AM+CL | 89.33% | 90.51% | 88.49% | 88.82% |
| 13 | CR+RS+CL | 89.53% | 90.90% | 89.66% | 88.17% |
| 14 | AM+RS+CL | 88.55% | 90.70% | 89.86% | 87.97% |
| 15 | CR+AM+RS+CL | 89.33% | 90.70% | 89.60% | 87.84% |

| Study | Classification of Android Malware Families | Automatic Extraction of Features through Deep Learning | Features | Model | Time (s) | Environment |
|---|---|---|---|---|---|---|
| [42] | Yes | No | Control flow graph | Single linkage clustering | Not specified | Not specified |
| [44] | Yes | No | Call graph, Application programming interface (API) | Naive Bayes (NB), Support Vector Machine (SVM), Decision Tree (DT), Random Forest (RF) | 19.8 | Core i5, 6 GB RAM |
| [72] | No | No | Permissions, events generated by monkey tool | Recurrent Neural Network (RNN), Long Short Term Memory (LSTM) | Not specified | Not specified |
| [73] | Yes | No | Information flow between APIs | Semantic-based approach | 175.88 | Xeon, 128 GB RAM |
| [74] | No | No | System calls | Convolutional Neural Network (CNN) | Executed app for 60 s | Not specified |
| [75] | Yes | No | Application programming interface | Visualization and similarity-based | Not specified | Not specified |
| [6] | Yes | No | Permissions, Package names, Intents, Information flow between APIs | C4.5 | 95.2 | 8-core, 64 GB RAM |
| [76] | Yes | No | Permissions, API | Deep belief network | Not specified | Not specified |
| [77] | No | No | System calls encoded as feature vectors | K-means | Not specified | Not specified |
| [43] | Yes | No | Network, System calls, File system access, Binder transactions | SVM | Not specified | Not specified |
| Our method (SARVOTAM) | Yes | Yes | CNN features extracted from lightweight malware images | CNN, CNN-SVM, CNN-KNN, CNN-RF | 0.55 | Core i5, 8 GB RAM |

| S.No. | Combination | RAM Usage (%) | Execution Time (s) | Average Time per App (s) |
|---|---|---|---|---|
| 1 | CR | 36.50 | 228.89 | 0.15 |
| 2 | AM | 37.33 | 751.33 | 0.49 |
| 3 | RS | 48.42 | 880.98 | 0.57 |
| 4 | CL | 49.33 | 1069.71 | 0.70 |
| 5 | CR+AM | 44.42 | 840.22 | 0.55 |
| 6 | CR+RS | 49.75 | 953.16 | 0.62 |
| 7 | CR+CL | 51.17 | 1074.94 | 0.70 |
| 8 | AM+RS | 57.92 | 855.42 | 0.56 |
| 9 | AM+CL | 56.58 | 1052.11 | 0.68 |
| 10 | RS+CL | 57.75 | 1060.74 | 0.69 |
| 11 | CR+AM+RS | 57.25 | 896.58 | 0.58 |
| 12 | CR+AM+CL | 63.58 | 1088.45 | 0.71 |
| 13 | CR+RS+CL | 54.33 | 1207.43 | 0.79 |
| 14 | AM+RS+CL | 63.67 | 1153.69 | 0.75 |
| 15 | CR+AM+RS+CL | 68.42 | 1478.16 | 0.96 |
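The last column of the run-time table above can be reproduced from the execution-time column. The sketch below assumes the divisor is 1538 apps, which is the number of samples that the confusion-matrix table in this section sums to; the text does not state the divisor, so treat that value as an inference rather than a reported figure. The names `RUNTIME_ROWS` and `average_time_per_app` are hypothetical.

```python
# (combination, execution time in s, reported average time per app in s),
# copied from the run-time table above.
RUNTIME_ROWS = [
    ("CR", 228.89, 0.15), ("AM", 751.33, 0.49), ("RS", 880.98, 0.57),
    ("CL", 1069.71, 0.70), ("CR+AM", 840.22, 0.55), ("CR+RS", 953.16, 0.62),
    ("CR+CL", 1074.94, 0.70), ("AM+RS", 855.42, 0.56), ("AM+CL", 1052.11, 0.68),
    ("RS+CL", 1060.74, 0.69), ("CR+AM+RS", 896.58, 0.58),
    ("CR+AM+CL", 1088.45, 0.71), ("CR+RS+CL", 1207.43, 0.79),
    ("AM+RS+CL", 1153.69, 0.75), ("CR+AM+RS+CL", 1478.16, 0.96),
]

# Assumption: number of apps processed per run (not stated next to the table).
ASSUMED_APPS = 1538


def average_time_per_app(total_seconds: float, apps: int = ASSUMED_APPS) -> float:
    """Average processing time per app, rounded to two decimals like the table."""
    return round(total_seconds / apps, 2)


if __name__ == "__main__":
    for name, total, reported in RUNTIME_ROWS:
        computed = average_time_per_app(total)
        print(f"{name:12s} computed={computed:.2f} reported={reported:.2f}")
```

With that divisor, the computed value matches the reported one for every combination, including the 0.55 s figure quoted for SARVOTAM in the comparison table.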

| S.No. | Family Name | FakeInstaller | DroidKungFu | Plankton | Opfake | GinMaster | BaseBridge | Iconosys | Kmin | FakeDoc | Geinimi | Adrd | DroidDream | ExploitLinuxLotoor | Glodream | MobileTx | FakeRun | SendPay | Gappusin | Imlog | SMSreg |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | FakeInstaller | 296 | 1 | 0 | 3 | 0 | 3 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |
| 2 | DroidKungFu | 0 | 203 | 5 | 2 | 1 | 4 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 2 | 0 | 0 | 1 | 0 | 0 |
| 3 | Plankton | 0 | 3 | 200 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |
| 4 | Opfake | 1 | 0 | 0 | 201 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 5 | GinMaster | 0 | 3 | 3 | 0 | 102 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |
| 6 | BaseBridge | 3 | 6 | 4 | 0 | 0 | 94 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 7 | Iconosys | 0 | 0 | 0 | 0 | 0 | 0 | 50 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 8 | Kmin | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 49 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 9 | FakeDoc | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 42 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| 10 | Geinimi | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 28 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 11 | Adrd | 0 | 3 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 23 | 0 | 0 | 0 | 3 | 0 | 0 | 0 | 0 | 0 |
| 12 | DroidDream | 1 | 0 | 0 | 1 | 1 | 2 | 0 | 0 | 0 | 0 | 0 | 21 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |
| 13 | ExploitLinuxLotoor | 3 | 5 | 1 | 0 | 2 | 3 | 0 | 0 | 0 | 0 | 0 | 1 | 8 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 14 | Glodream | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 22 | 0 | 0 | 0 | 0 | 0 | 0 |
| 15 | MobileTx | 1 | 3 | 1 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 15 | 0 | 0 | 0 | 0 | 0 |
| 16 | FakeRun | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 17 | 0 | 0 | 0 | 0 |
| 17 | SendPay | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 18 | 0 | 0 | 0 |
| 18 | Gappusin | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 15 | 0 | 0 |
| 19 | Imlog | 0 | 1 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 10 | 0 |
| 20 | SMSreg | 2 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 10 |
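As a worked check on the confusion matrix above, the short sketch below recomputes overall accuracy as the diagonal sum divided by the grand total. The diagonal holds 1424 of 1538 samples, about 92.59%, which coincides with the CNN-SVM figure listed for CR+AM in the accuracy table. The table does not say whether rows are true or predicted labels, so the per-row share computed here would be per-family recall only under the assumption that rows are true labels. The helper names are hypothetical.

```python
FAMILIES = [
    "FakeInstaller", "DroidKungFu", "Plankton", "Opfake", "GinMaster",
    "BaseBridge", "Iconosys", "Kmin", "FakeDoc", "Geinimi",
    "Adrd", "DroidDream", "ExploitLinuxLotoor", "Glodream", "MobileTx",
    "FakeRun", "SendPay", "Gappusin", "Imlog", "SMSreg",
]

# Rows and columns follow the FAMILIES order; values copied from the table above.
CONFUSION = [
    [296, 1, 0, 3, 0, 3, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0],
    [0, 203, 5, 2, 1, 4, 0, 0, 0, 0, 1, 0, 1, 0, 2, 0, 0, 1, 0, 0],
    [0, 3, 200, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0],
    [1, 0, 0, 201, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 3, 3, 0, 102, 1, 0, 0, 0, 0, 0, 0, 2, 0, 0, 0, 1, 0, 0, 0],
    [3, 6, 4, 0, 0, 94, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 50, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 49, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 1, 0, 0, 0, 0, 0, 42, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0],
    [0, 0, 2, 0, 0, 0, 0, 0, 0, 28, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 3, 1, 0, 0, 0, 0, 0, 0, 0, 23, 0, 0, 0, 3, 0, 0, 0, 0, 0],
    [1, 0, 0, 1, 1, 2, 0, 0, 0, 0, 0, 21, 0, 0, 0, 0, 0, 1, 0, 0],
    [3, 5, 1, 0, 2, 3, 0, 0, 0, 0, 0, 1, 8, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 22, 0, 0, 0, 0, 0, 0],
    [1, 3, 1, 0, 1, 1, 0, 0, 0, 0, 1, 0, 0, 0, 15, 0, 0, 0, 0, 0],
    [0, 0, 2, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 17, 0, 0, 0, 0],
    [0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 18, 0, 0, 0],
    [0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 15, 0, 0],
    [0, 1, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 10, 0],
    [2, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 10],
]


def overall_accuracy(matrix):
    """Return (diagonal sum, grand total, diagonal sum / grand total)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct, total, correct / total


def diagonal_share_per_row(matrix, names):
    """Share of each row that lies on the diagonal (recall if rows are true labels)."""
    return {name: row[i] / sum(row) for i, (name, row) in enumerate(zip(names, matrix))}


correct, total, accuracy = overall_accuracy(CONFUSION)
row_share = diagonal_share_per_row(CONFUSION, FAMILIES)
weakest = min(row_share, key=row_share.get)
print(f"{correct}/{total} = {accuracy:.2%}")
print("lowest diagonal share:", weakest, round(row_share[weakest], 3))
```

The row with the lowest diagonal share is ExploitLinuxLotoor (8 of 23), whose samples are spread mostly across DroidKungFu, FakeInstaller and BaseBridge.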

| S.No. | Image Combination | SARVOTAM: CNN | SARVOTAM: CNN-SVM | SARVOTAM: CNN-KNN | SARVOTAM: CNN-RF | Typical Network: VGG16 |
|---|---|---|---|---|---|---|
| 1 | CR | 83.58% | 82.92% | 77.11% | 83.42% | 78.27% |
| 2 | AM | 89.79% | 90.18% | 83.94% | 84.85% | 85.76% |
| 3 | RS | 86.86% | 88.56% | 86.02% | 84.53% | 82.12% |
| 4 | CL | 89.46% | 90.57% | 89.40% | 87.58% | 87.23% |
| 5 | CR+AM | 91.48% | 92.59% | 86.93% | 87.52% | 90.57% |
| 6 | CR+RS | 87.12% | 89.47% | 86.80% | 85.89% | 88.91% |
| 7 | CR+CL | 89.33% | 90.25% | 89.01% | 88.43% | 89.34% |
| 8 | AM+RS | 88.29% | 89.47% | 87.78% | 84.98% | 86.78% |
| 9 | AM+CL | 89.33% | 90.83% | 89.79% | 88.69% | 84.43% |
| 10 | RS+CL | 88.49% | 90.96% | 89.34% | 87.58% | 84.37% |
| 11 | CR+AM+RS | 89.46% | 90.77% | 88.75% | 85.50% | 87.67% |
| 12 | CR+AM+CL | 89.33% | 90.51% | 88.49% | 88.82% | 86.81% |
| 13 | CR+RS+CL | 89.53% | 90.90% | 89.66% | 88.17% | 84.56% |
| 14 | AM+RS+CL | 88.55% | 90.70% | 89.86% | 87.97% | 89.29% |
| 15 | CR+AM+RS+CL | 89.33% | 90.70% | 89.60% | 87.84% | 84.32% |

| S.No. | Combination | VGG16 RAM Usage (%) | VGG16 Execution Time (s) | SARVOTAM RAM Usage (%) | SARVOTAM Execution Time (s) |
|---|---|---|---|---|---|
| 1 | CR | 44.50 | 1935.51 | 36.50 | 228.89 |
| 2 | AM | 48.67 | 1722.41 | 37.33 | 751.33 |
| 3 | RS | 70.33 | 1548.42 | 48.42 | 880.98 |
| 4 | CL | 55.08 | 1635.32 | 49.33 | 1069.71 |
| 5 | CR+AM | 48.75 | 1754.86 | 44.42 | 840.22 |
| 6 | CR+RS | 49.58 | 1724.42 | 49.75 | 953.16 |
| 7 | CR+CL | 56.50 | 1680.31 | 51.17 | 1074.94 |
| 8 | AM+RS | 55.42 | 1535.78 | 57.92 | 855.42 |
| 9 | AM+CL | 55.17 | 1418.45 | 56.58 | 1052.11 |
| 10 | RS+CL | 62.92 | 1656.43 | 57.75 | 1060.74 |
| 11 | CR+AM+RS | 63.50 | 1771.69 | 57.25 | 896.58 |
| 12 | CR+AM+CL | 63.75 | 1619.16 | 63.58 | 1088.45 |
| 13 | CR+RS+CL | 73.25 | 1834.11 | 54.33 | 1207.43 |
| 14 | AM+RS+CL | 73.42 | 1795.91 | 63.67 | 1153.69 |
| 15 | CR+AM+RS+CL | 74.33 | 2178.12 | 68.42 | 1478.16 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Singh, J.; Thakur, D.; Ali, F.; Gera, T.; Kwak, K.S. Deep Feature Extraction and Classification of Android Malware Images. Sensors 2020, 20, 7013. https://doi.org/10.3390/s20247013
