Label Noise Cleaning with an Adaptive Ensemble Method Based on Noise Detection Metric

Abstract

1. Introduction

- (Case 1) A clean sample is regarded as mislabeled and cleaned (removed or relabeled). This harms classification performance, especially when the training dataset is small.

- (Case 2) A mislabeled sample is regarded as clean and is retained or left uncorrected. Noisy samples then remain in the training dataset and degrade classification performance.

- Filtering based on the votes (majority vote or consensus vote) of the base classifiers of a Bagging ensemble.

- Filtering based on removing the training examples that receive high weights during boosting, since mislabeled examples are assumed to receive high weights.
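The two ensemble-vote filters listed above can be sketched as follows. This is a minimal sketch, assuming the predictions of the T base classifiers on the N training samples are already available as an integer array (ideally out-of-bag or cross-validated predictions, so that no classifier votes on a sample it was trained on). The function name `vote_filter` and its arguments are hypothetical, not taken from the paper.

```python
import numpy as np


def vote_filter(base_preds, y, scheme="majority"):
    """Flag training samples as mislabeled from base-classifier votes.

    base_preds: (T, N) integer array; base_preds[t, i] is the label that
        base classifier t predicts for training sample i.
    y: (N,) integer array of observed (possibly noisy) labels.
    scheme: "majority" flags a sample when more than half of the T
        classifiers disagree with its label; "consensus" flags it only
        when all T classifiers disagree.
    Returns a boolean mask of length N (True = flagged as mislabeled).
    """
    base_preds = np.asarray(base_preds)
    y = np.asarray(y)
    n_classifiers = base_preds.shape[0]
    # Number of base classifiers whose prediction differs from the label.
    n_disagree = (base_preds != y[np.newaxis, :]).sum(axis=0)
    if scheme == "majority":
        return n_disagree > n_classifiers / 2
    if scheme == "consensus":
        return n_disagree == n_classifiers
    raise ValueError(f"unknown scheme: {scheme!r}")


# Three base classifiers voting on four training samples.
preds = np.array([[0, 1, 1, 2],
                  [0, 1, 2, 2],
                  [1, 0, 2, 2]])
labels = np.array([0, 0, 2, 1])
print(vote_filter(preds, labels, "majority").tolist())   # [False, True, False, True]
print(vote_filter(preds, labels, "consensus").tolist())  # [False, False, False, True]
```

Consensus voting flags a subset of the samples flagged by majority voting, so it is the more conservative filter: it tends to produce fewer Case 1 errors at the cost of more Case 2 errors.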

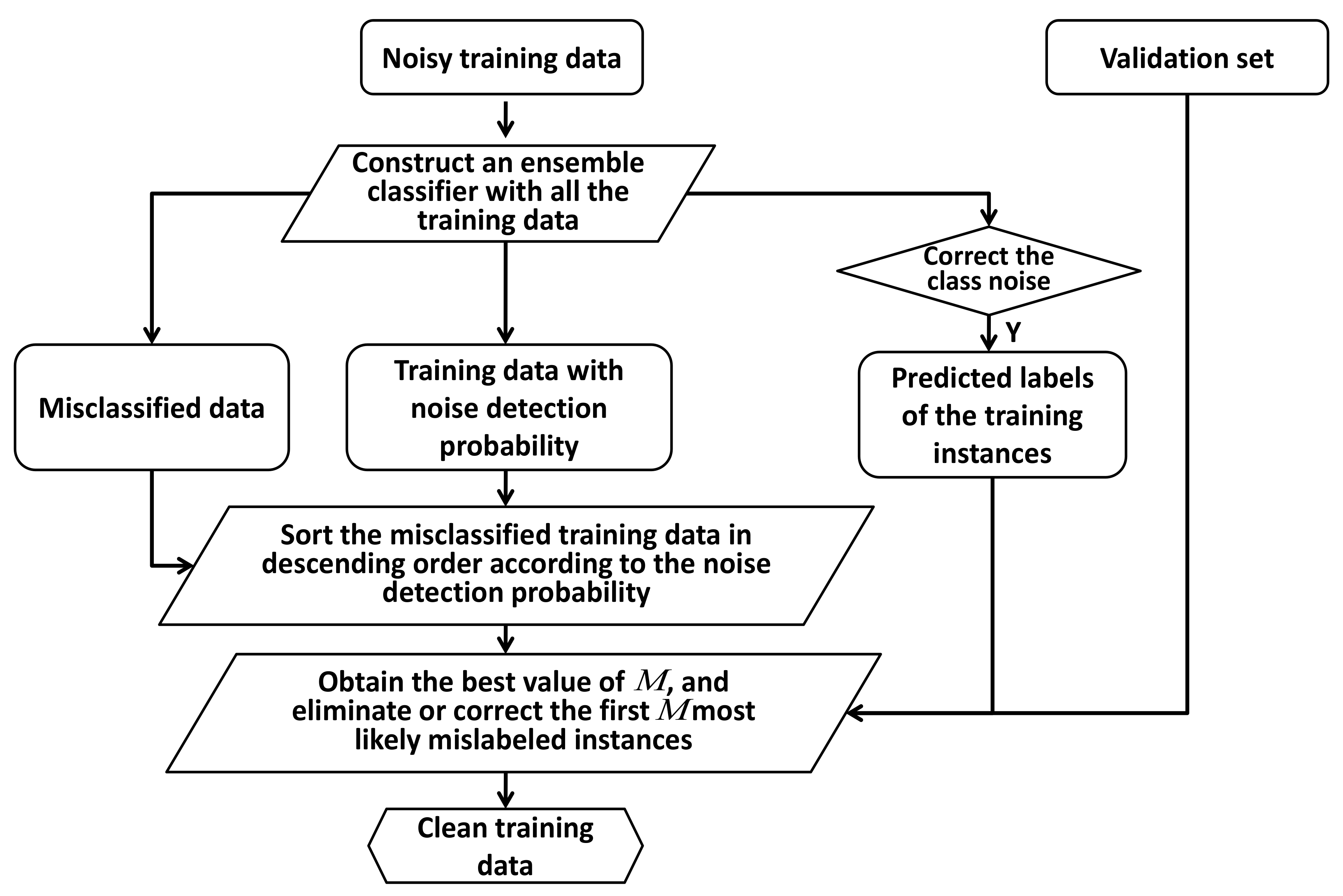

2. Label Noise Cleaning with an Adaptive Ensemble Method Based on Noise Detection Metrics

2.1. Label Noise Detection Metric

- is an ensemble model composed of T base classifiers,

- (x, y) is an instance, with x as a feature vector and y as one of the C class labels,

- is a set of N training samples,

- is the number of classifiers predicting the label c when the feature vector is x,

- is a metric assessing the likelihood that a sample is mislabeled (it is used to sort samples),

- is equal to one when statement is true and is equal to zero otherwise,

- counting parameter.
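The vote count in the notation above (the number of base classifiers predicting class c for a given feature vector) can be computed directly from the base-classifier predictions. A minimal sketch, assuming classes are encoded as integers 0..C-1; `vote_counts` is a hypothetical helper name.

```python
import numpy as np


def vote_counts(base_preds, n_classes):
    """Return an (N, C) matrix whose entry [i, c] is the number of base
    classifiers predicting class c for sample i.

    base_preds: (T, N) integer array of base-classifier predictions,
        with classes encoded as 0..n_classes-1 (an assumption).
    """
    base_preds = np.asarray(base_preds)
    n_classifiers, n_samples = base_preds.shape
    counts = np.zeros((n_samples, n_classes), dtype=int)
    rows = np.arange(n_samples)
    for t in range(n_classifiers):
        # Each classifier casts exactly one vote per sample, so the
        # (row, class) pairs indexed here are unique within one step.
        counts[rows, base_preds[t]] += 1
    return counts


preds = np.array([[0, 1, 1],
                  [0, 2, 1],
                  [1, 2, 1]])
votes = vote_counts(preds, n_classes=3)
print(votes.tolist())  # [[2, 1, 0], [0, 1, 2], [0, 3, 0]]
```

Every row of the result sums to T, which is what allows the sum-type quantities in the following subsections to be written in terms of a single vote count.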

2.1.1. Supervised Max Operation (SuMax)

2.1.2. Supervised Sum Operation (SuSum)

2.1.3. Unsupervised Max Operation (UnMax)

2.1.4. Unsupervised Sum Operation (UnSum)
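Judging by their names, the four operations combine the vote counts either with the observed label y (supervised) or without it (unsupervised), and compare against either the strongest competing class (max) or all other classes (sum). The sketch below assumes they follow the classic ensemble-margin definitions written in its docstring; the exact noise detection metrics of ENDM, in particular how the label-free unsupervised variants are turned into a noise ranking, may differ. `ensemble_margins` is a hypothetical name.

```python
import numpy as np


def ensemble_margins(votes, y):
    """Compute four ensemble margins from an (N, C) vote-count matrix.

    Assumed definitions (classic ensemble margins; T = votes per sample):
      SuMax = (v_y - max_{c != y} v_c) / T
      SuSum = (v_y - sum_{c != y} v_c) / T
      UnMax = (v_c1 - v_c2) / T   c1, c2: most and second-most voted classes
      UnSum = (v_c1 - sum_{c != c1} v_c) / T
    Requires at least two classes.
    """
    votes = np.asarray(votes, dtype=float)
    y = np.asarray(y)
    rows = np.arange(votes.shape[0])
    total = votes.sum(axis=1)                    # T for every sample
    v_y = votes[rows, y]                         # votes for the observed label
    rivals = votes.copy()
    rivals[rows, y] = -np.inf                    # exclude the observed label
    top_two = np.sort(votes, axis=1)[:, -2:]     # [second-most, most] votes
    return {
        "SuMax": (v_y - rivals.max(axis=1)) / total,
        # The sum over c != y of v_c equals total - v_y.
        "SuSum": (2 * v_y - total) / total,
        "UnMax": (top_two[:, 1] - top_two[:, 0]) / total,
        "UnSum": (2 * top_two[:, 1] - total) / total,
    }


votes = np.array([[2, 1, 0], [0, 1, 2], [0, 3, 0]])
labels = np.array([0, 0, 1])
margins = ensemble_margins(votes, labels)
print(margins["SuMax"])  # only sample 1 gets a negative supervised margin
```

Under this reading, a low (especially negative) supervised margin means most base classifiers reject the observed label, so sorting by increasing supervised margin puts the most suspicious samples first. The unsupervised margins ignore y, so on their own they measure ensemble confidence rather than label agreement; combining them with label information is left as an open assumption in this sketch.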

2.2. Label Noise Cleaning Method

2.2.1. Label Noise Removal with ENDM

Algorithm 1: Label noise removal with an adaptive ensemble method based on noise detection metric.
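The listing of Algorithm 1 did not survive, so the following is only a plausible sketch of an adaptive removal loop. It assumes that samples are sorted by a noise score and that the removal rate is chosen by maximizing accuracy on a validation set; the data set table lists a separate validation split for every data set, and the later per-metric tables report a percentage next to each accuracy, which suggests such a selected rate. `adaptive_removal`, the `evaluate` callback, and the candidate-rate grid are hypothetical.

```python
import numpy as np


def adaptive_removal(noise_score, evaluate, rates):
    """Hypothetical adaptive removal loop.

    noise_score: (N,) array; larger values mean "more likely mislabeled"
        (for example, the negated supervised margin).
    evaluate: callable taking the indices of the retained training samples
        and returning the validation accuracy of a classifier trained on them.
    rates: iterable of candidate removal fractions in [0, 1).
    Returns (best_rate, best_accuracy, retained_indices).
    """
    # Most suspicious samples first; a stable sort keeps ties in index order.
    order = np.argsort(-np.asarray(noise_score, dtype=float), kind="stable")
    n_samples = len(order)
    best_rate, best_acc, best_keep = None, -np.inf, None
    for rate in rates:
        n_remove = int(round(rate * n_samples))
        keep = np.sort(order[n_remove:])
        acc = evaluate(keep)
        if acc > best_acc:  # the earlier candidate rate wins on ties
            best_rate, best_acc, best_keep = rate, acc, keep
    return best_rate, best_acc, best_keep


# Toy check: samples 0 and 2 are mislabeled and also have the highest scores.
scores = np.array([0.9, 0.1, 0.8, 0.2])
mislabeled = {0, 2}


def clean_fraction(keep):
    # Stand-in for "validation accuracy": share of retained samples that are clean.
    return sum(int(i) not in mislabeled for i in keep) / len(keep)


rate, acc, keep = adaptive_removal(scores, clean_fraction, [0.0, 0.25, 0.5, 0.75])
print(rate, acc, keep.tolist())  # 0.5 1.0 [1, 3]
```

In a real experiment `evaluate` would retrain the final classifier on the retained samples and score it on the validation split, so the cost of the loop grows linearly with the number of candidate rates.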

2.2.2. Label Noise Correction with ENDM

Algorithm 2: Label noise correction with an adaptive ensemble method based on noise detection metric.
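A correction variant of the same hypothetical loop relabels the most suspicious samples instead of discarding them. Here the replacement label is assumed to be the ensemble's majority-voted class, a common choice in ensemble-based correction; the lost Algorithm 2 may use a different rule. `adaptive_correction` and the `evaluate` callback are hypothetical.

```python
import numpy as np


def adaptive_correction(noise_score, votes, y, evaluate, rates):
    """Hypothetical adaptive correction loop.

    noise_score: (N,) array; larger = more likely mislabeled.
    votes: (N, C) vote-count matrix of the ensemble.
    y: (N,) observed labels.
    evaluate: callable taking a corrected label vector and returning the
        validation accuracy of a classifier trained with those labels.
    rates: candidate correction fractions in [0, 1].
    Returns (best_rate, best_accuracy, corrected_labels).
    """
    y = np.asarray(y)
    order = np.argsort(-np.asarray(noise_score, dtype=float), kind="stable")
    ensemble_label = np.asarray(votes).argmax(axis=1)  # majority-voted class
    best = (None, -np.inf, y.copy())
    for rate in rates:
        suspects = order[: int(round(rate * len(y)))]
        y_new = y.copy()
        y_new[suspects] = ensemble_label[suspects]  # relabel, do not remove
        acc = evaluate(y_new)
        if acc > best[1]:
            best = (rate, acc, y_new)
    return best


votes = np.array([[2, 1, 0], [0, 1, 2], [0, 3, 0]])
observed = np.array([0, 0, 1])
true_labels = np.array([0, 2, 1])         # sample 1 carries a wrong label
score = np.array([-1 / 3, 2 / 3, -1.0])   # e.g. negated supervised max margin


def label_accuracy(labels):
    # Stand-in for validation accuracy: agreement with the true labels.
    return float(np.mean(labels == true_labels))


rate, acc, fixed = adaptive_correction(score, votes, observed, label_accuracy,
                                       [0.0, 1 / 3, 2 / 3])
print(rate, acc, fixed.tolist())
```

Unlike removal, correction keeps the training set size constant, which matters for small data sets (Case 1), but a wrong relabeling injects a new error rather than merely discarding a sample.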

3. Experimental Results

3.1. Experiment Settings

3.2. Datasets

3.3. Comparison of ENDM Versus No Filtering

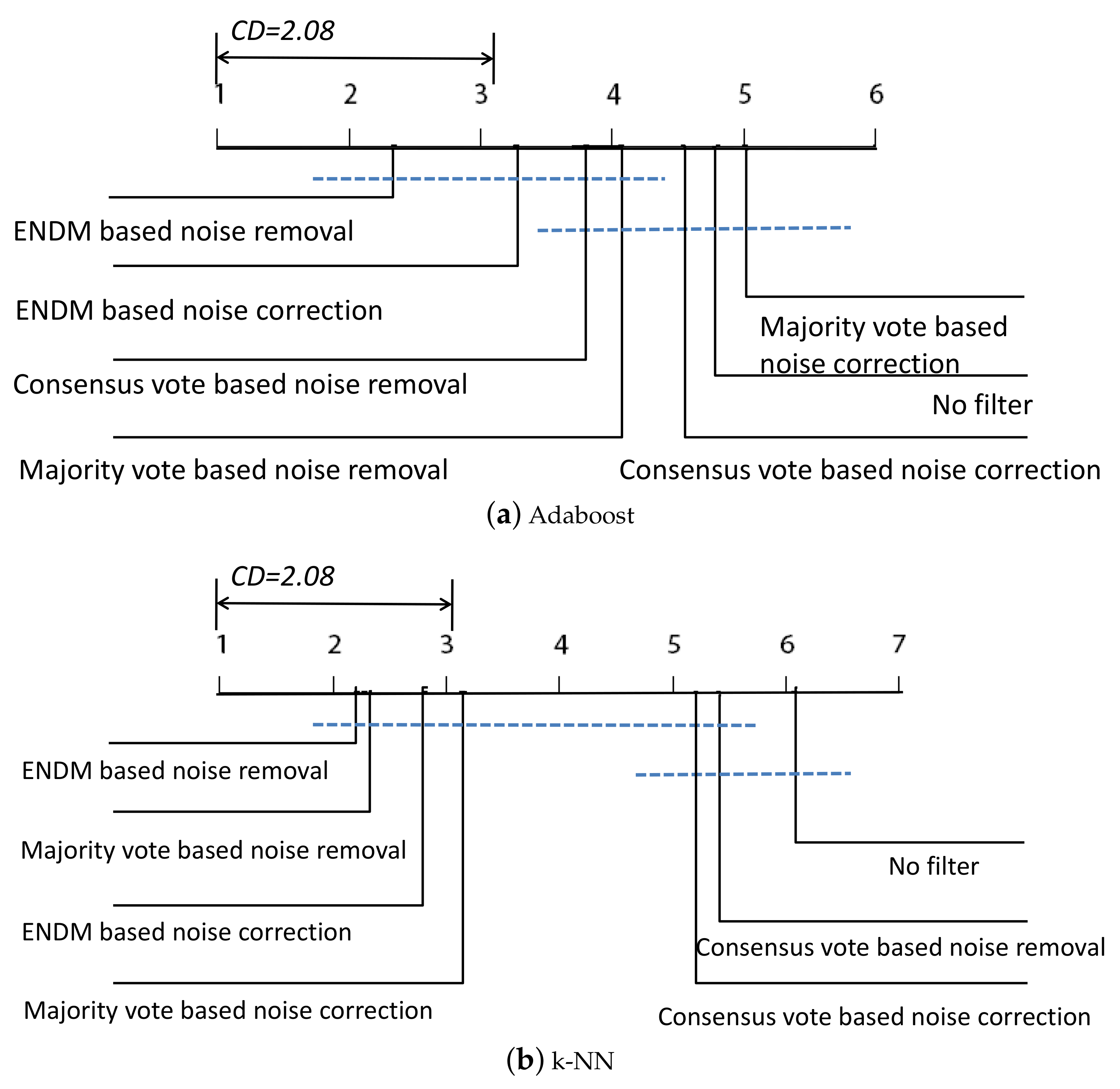

3.4. Comparison of ENDM Versus Other Ensemble-Vote-Based Noise Filters

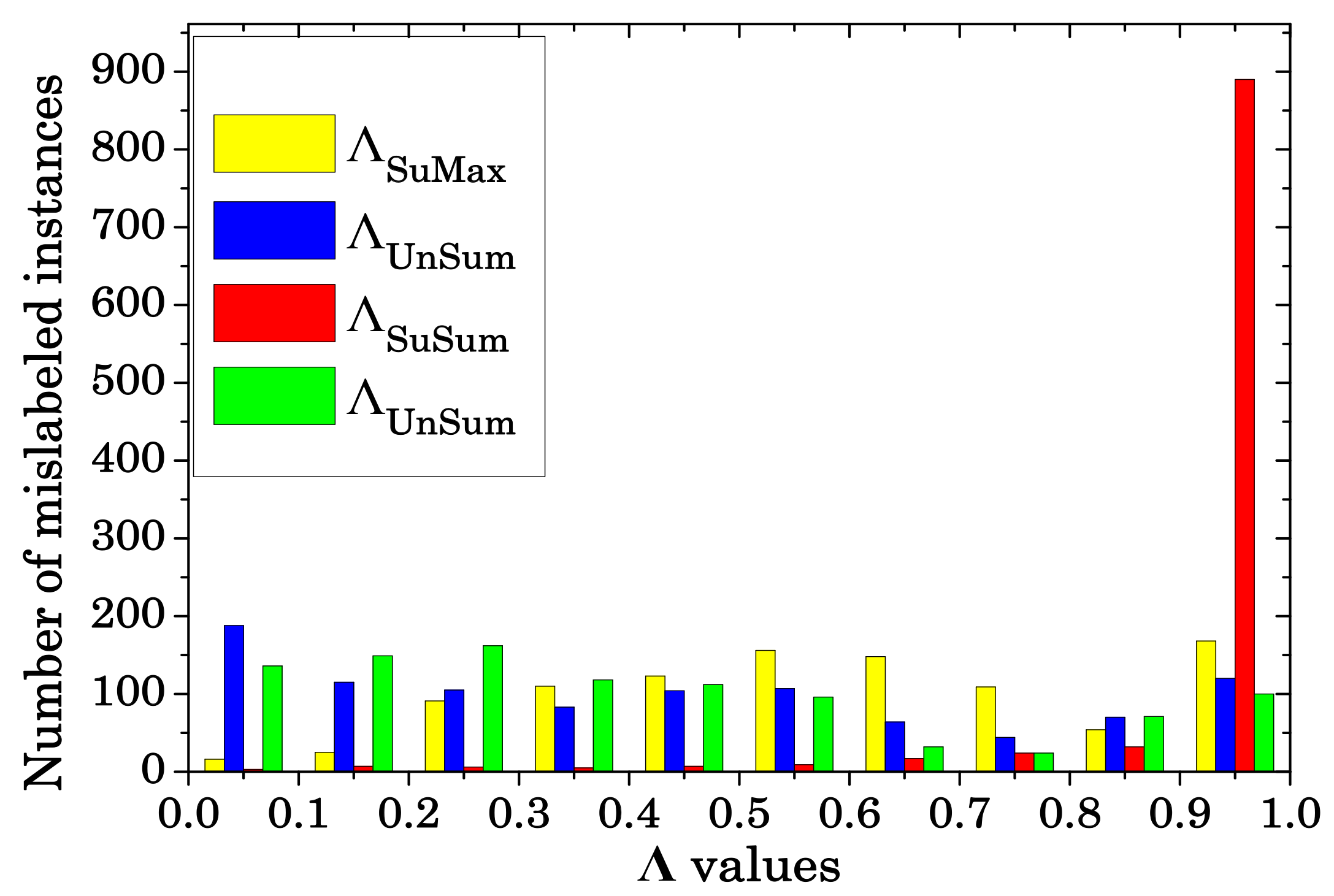

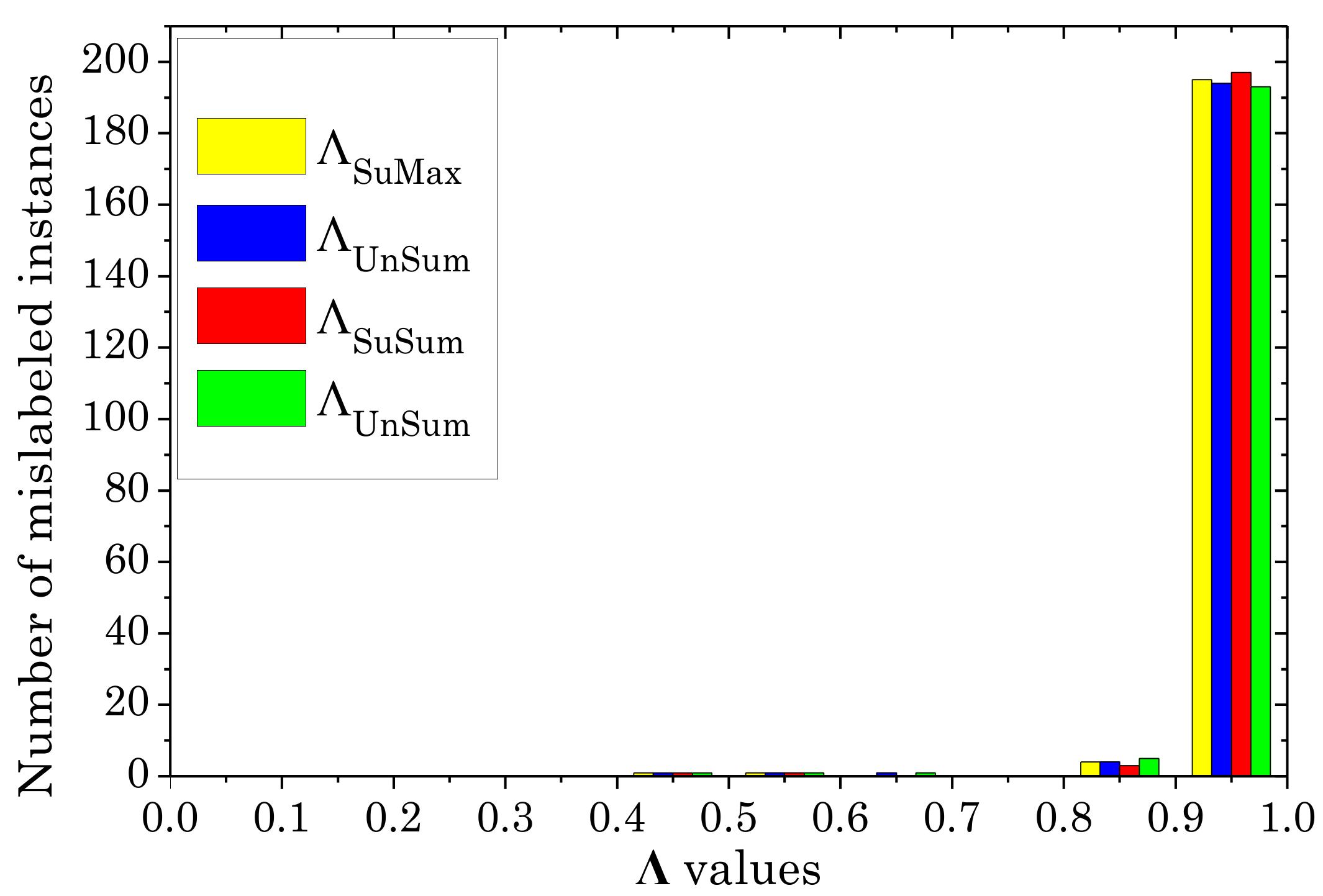

3.5. Comparing Different Noise Detection Metrics in ENDM

3.6. Comparing Different Classifiers Used for -Selection in ENDM

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Data Set | Training Set Size | Validation Set Size | Test Set Size | Variables | Classes |
|---|---|---|---|---|---|
| Abalone | 1500 | 750 | 1500 | 8 | 3 |
| ForestTypes | 200 | 100 | 200 | 27 | 4 |
| Glass | 80 | 40 | 80 | 10 | 6 |
| Hayes-roth | 64 | 32 | 64 | 3 | 3 |
| Letter | 5000 | 2500 | 5000 | 16 | 26 |
| Optdigits | 1000 | 500 | 1000 | 64 | 10 |
| Penbased | 440 | 220 | 440 | 15 | 10 |
| Pendigit | 2000 | 1000 | 2000 | 16 | 10 |
| Segment | 800 | 400 | 800 | 19 | 7 |
| Statlog | 2000 | 1000 | 2000 | 36 | 6 |
| Texture | 2000 | 1000 | 2000 | 40 | 11 |
| Vehicle | 200 | 100 | 200 | 18 | 4 |
| Waveform | 2000 | 1000 | 2000 | 21 | 3 |
| Wine | 71 | 35 | 72 | 12 | 3 |
| Winequalityred | 600 | 300 | 600 | 11 | 6 |

| Data | No Filter | Removal: Majority Vote | Removal: Consensus Vote | Removal: ENDM | Correction: Majority Vote | Correction: Consensus Vote | Correction: ENDM |
|---|---|---|---|---|---|---|---|
| Abalone | 53.85 | 53.92 | 54.27 | 54.64 | 54.19 | 54.36 | 54.61 |
| ForestTypes | 84.50 | 84.40 | 84.70 | 84.83 | 84.50 | 83.40 | 83.20 |
| Glass | 98.00 | 97.50 | 98.25 | 97.92 | 97.50 | 97.75 | 96.50 |
| Hayes-roth | 65.00 | 64.38 | 64.06 | 59.38 | 64.06 | 65.31 | 60.94 |
| Letter | 46.72 | 47.83 | 47.96 | 56.88 | 45.59 | 45.22 | 50.88 |
| Optdigits | 89.32 | 90.84 | 88.98 | 94.14 | 87.98 | 88.78 | 93.23 |
| Penbased | 90.05 | 93.05 | 90.36 | 91.89 | 92.45 | 90.00 | 92.23 |
| Pendigit | 90.34 | 92.95 | 91.73 | 95.40 | 89.56 | 90.80 | 93.64 |
| Segment | 92.12 | 91.13 | 93.73 | 94.90 | 91.11 | 93.47 | 94.41 |
| Statlog | 83.38 | 85.68 | 86.27 | 88.75 | 83.66 | 86.00 | 88.15 |
| Texture | 86.31 | 89.52 | 88.11 | 94.03 | 85.83 | 87.43 | 91.42 |
| Vehicle | 72.20 | 73.70 | 73.20 | 73.00 | 74.30 | 71.80 | 73.80 |
| Waveform | 81.44 | 79.09 | 81.53 | 82.97 | 77.07 | 81.70 | 82.41 |
| Wine | 90.83 | 92.78 | 89.17 | 91.67 | 93.33 | 91.11 | 93.89 |
| Winequalityred | 60.90 | 60.67 | 60.70 | 60.83 | 60.60 | 61.47 | 60.30 |
| Average | 79.00 | 79.83 | 79.53 | 81.42 | 78.78 | 79.24 | 80.64 |

| Data | No Filter | Removal: Majority Vote | Removal: Consensus Vote | Removal: ENDM | Correction: Majority Vote | Correction: Consensus Vote | Correction: ENDM |
|---|---|---|---|---|---|---|---|
| Abalone | 45.07 | 53.53 | 49.27 | 51.53 | 53.40 | 49.47 | 52.33 |
| ForestTypes | 65.00 | 78.00 | 65.00 | 77.50 | 80.00 | 65.00 | 76.00 |
| Glass | 63.75 | 73.75 | 63.75 | 70.00 | 76.25 | 63.75 | 72.50 |
| Hayes-roth | 45.31 | 53.12 | 37.50 | 46.88 | 57.81 | 40.62 | 51.56 |
| Letter | 74.62 | 59.08 | 75.02 | 85.12 | 63.20 | 74.72 | 80.44 |
| Optdigits | 77.90 | 93.30 | 79.20 | 93.10 | 88.70 | 79.80 | 90.40 |
| Penbased | 80.23 | 93.41 | 80.45 | 94.77 | 92.50 | 80.91 | 93.18 |
| Pendigit | 79.75 | 95.05 | 85.45 | 96.30 | 89.80 | 85.80 | 94.15 |
| Segment | 81.54 | 90.30 | 86.50 | 93.30 | 89.12 | 86.38 | 93.02 |
| Statlog | 73.35 | 84.95 | 83.10 | 87.15 | 81.40 | 83.20 | 85.80 |
| Texture | 80.21 | 94.42 | 87.10 | 95.51 | 87.32 | 86.90 | 93.24 |
| Vehicle | 59.00 | 66.50 | 59.00 | 71.50 | 66.50 | 59.00 | 67.50 |
| Waveform | 62.75 | 78.40 | 64.80 | 77.45 | 75.20 | 65.55 | 75.70 |
| Wine | 79.17 | 94.44 | 79.17 | 88.89 | 93.06 | 79.17 | 88.89 |
| Winequalityred | 49.67 | 58.67 | 48.33 | 57.33 | 61.00 | 48.00 | 58.17 |
| Average | 67.82 | 77.79 | 69.58 | 79.09 | 77.02 | 69.88 | 78.19 |

| Data | No Filter | Removal: Majority Vote | Removal: Consensus Vote | Removal: ENDM | Correction: Majority Vote | Correction: Consensus Vote | Correction: ENDM |
|---|---|---|---|---|---|---|---|
| Abalone | 7 | 6 | 4 | 1 | 5 | 3 | 2 |
| ForestTypes | 3 | 5 | 2 | 1 | 3 | 6 | 7 |
| Glass | 2 | 5 | 1 | 3 | 5 | 4 | 7 |
| Hayes-roth | 2 | 3 | 4 | 7 | 4 | 1 | 6 |
| Letter | 5 | 4 | 3 | 1 | 6 | 7 | 2 |
| Optdigits | 4 | 3 | 5 | 1 | 7 | 6 | 2 |
| Penbased | 6 | 1 | 5 | 4 | 2 | 7 | 3 |
| Pendigit | 6 | 3 | 4 | 1 | 7 | 5 | 2 |
| Segment | 5 | 6 | 3 | 1 | 7 | 4 | 2 |
| Statlog | 7 | 5 | 3 | 1 | 6 | 4 | 2 |
| Texture | 6 | 3 | 4 | 1 | 7 | 5 | 2 |
| Vehicle | 6 | 3 | 4 | 5 | 1 | 7 | 2 |
| Waveform | 5 | 6 | 4 | 1 | 7 | 3 | 2 |
| Wine | 6 | 3 | 7 | 4 | 2 | 5 | 1 |
| Winequalityred | 2 | 5 | 4 | 3 | 6 | 1 | 7 |
| Average rank | 4.80 | 4.07 | 3.80 | 2.33 | 5.00 | 4.53 | 3.27 |
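This rank table can be reproduced from the corresponding accuracy table: on each data set the seven configurations are ranked by accuracy (1 = best), and the last row averages the ranks over the 15 data sets. Tied accuracies appear to share the best rank of their group (for example, the two 84.50 entries for ForestTypes both receive rank 3). A minimal sketch; the function names are hypothetical.

```python
def competition_ranks(accuracies):
    """Rank methods on one data set: 1 = highest accuracy.

    Tied accuracies share the best (smallest) rank of the tied group,
    matching how ties appear to be handled in the rank table.
    """
    return [1 + sum(other > acc for other in accuracies) for acc in accuracies]


def average_ranks(accuracy_rows):
    """Average each method's rank over all data sets (rows)."""
    rank_rows = [competition_ranks(row) for row in accuracy_rows]
    return [sum(col) / len(rank_rows) for col in zip(*rank_rows)]


# Two rows of the accuracy table (Abalone and ForestTypes).
abalone = [53.85, 53.92, 54.27, 54.64, 54.19, 54.36, 54.61]
forest_types = [84.50, 84.40, 84.70, 84.83, 84.50, 83.40, 83.20]
print(competition_ranks(abalone))       # [7, 6, 4, 1, 5, 3, 2]
print(competition_ranks(forest_types))  # [3, 5, 2, 1, 3, 6, 7]
print(average_ranks([abalone, forest_types]))
```

The Friedman test is usually described with tied methods receiving the average of the ranks they span (two methods tied for third place would each get 3.5), so using that convention instead would shift the tied entries slightly.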

| Data | No Filter | Removal: Majority Vote | Removal: Consensus Vote | Removal: ENDM | Correction: Majority Vote | Correction: Consensus Vote | Correction: ENDM |
|---|---|---|---|---|---|---|---|
| Abalone | 7 | 1 | 6 | 4 | 2 | 5 | 3 |
| ForestTypes | 5 | 2 | 5 | 3 | 1 | 5 | 4 |
| Glass | 5 | 2 | 5 | 4 | 1 | 5 | 3 |
| Hayes-roth | 5 | 2 | 7 | 4 | 1 | 6 | 3 |
| Letter | 5 | 7 | 3 | 1 | 6 | 4 | 2 |
| Optdigits | 7 | 1 | 6 | 2 | 4 | 5 | 3 |
| Penbased | 7 | 2 | 6 | 1 | 4 | 5 | 3 |
| Pendigit | 7 | 2 | 6 | 1 | 4 | 5 | 3 |
| Segment | 7 | 3 | 5 | 1 | 4 | 6 | 2 |
| Statlog | 7 | 3 | 5 | 1 | 6 | 4 | 2 |
| Texture | 7 | 2 | 5 | 1 | 4 | 6 | 3 |
| Vehicle | 5 | 3 | 5 | 1 | 3 | 5 | 2 |
| Waveform | 7 | 1 | 6 | 2 | 4 | 5 | 3 |
| Wine | 5 | 1 | 5 | 3 | 2 | 5 | 3 |
| Winequalityred | 5 | 2 | 6 | 4 | 1 | 7 | 3 |
| Average rank | 6.07 | 2.27 | 5.40 | 2.20 | 3.13 | 5.20 | 2.80 |

| Data | Classifier | SuMax | UnMax | SuSum | UnSum |
|---|---|---|---|---|---|
| Letter | Bagging | 48.13 (12%) | 52.83 (30%) | 53.08 (24%) | 49.60 (28%) |
| | AdaBoost | 52.04 (22%) | 49.60 (24%) | 56.88 (30%) | 50.54 (26%) |
| | k-NN | 51.72 (21%) | 46.79 (16%) | 51.03 (16%) | 49.35 (7%) |
| Optdigits | Bagging | 93.00 (17%) | 90.71 (12%) | 90.65 (10%) | 93.57 (18%) |
| | AdaBoost | 93.43 (15%) | 93.15 (22%) | 94.14 (20%) | 93.23 (20%) |
| | k-NN | 93.45 (22%) | 92.13 (23%) | 93.10 (21%) | 91.97 (25%) |
| Pendigit | Bagging | 91.23 (6%) | 91.25 (5%) | 94.98 (21%) | 91.41 (7%) |
| | AdaBoost | 95.27 (20%) | 94.24 (23%) | 95.40 (18%) | 93.87 (25%) |
| | k-NN | 94.90 (20%) | 93.90 (25%) | 94.70 (22%) | 92.93 (22%) |
| Statlog | Bagging | 86.50 (10%) | 86.44 (9%) | 87.97 (22%) | 86.46 (10%) |
| | AdaBoost | 88.75 (22%) | 86.68 (13%) | 88.66 (20%) | 86.98 (14%) |
| | k-NN | 88.66 (22%) | 86.90 (29%) | 87.65 (26%) | 85.98 (30%) |
| Vehicle | Bagging | 72.10 (14%) | 72.90 (6%) | 70.35 (8%) | 72.60 (7%) |
| | AdaBoost | 72.30 (20%) | 72.05 (17%) | 72.05 (18%) | 73.00 (13%) |
| | k-NN | 72.80 (6%) | 72.25 (10%) | 70.85 (8%) | 72.15 (20%) |

| Data | Classifier | SuMax | UnMax | SuSum | UnSum |
|---|---|---|---|---|---|
| Letter | Bagging | 79.32 (14%) | 78.22 (18%) | 80.88 (30%) | 76.64 (6%) |
| | AdaBoost | 77.68 (35%) | 73.52 (35%) | 79.00 (35%) | 76.52 (5%) |
| | k-NN | 79.94 (23%) | 78.18 (13%) | 85.12 (16%) | 77.60 (8%) |
| Optdigits | Bagging | 90.60 (17%) | 85.30 (10%) | 91.90 (20%) | 87.30 (14%) |
| | AdaBoost | 90.30 (18%) | 88.40 (15%) | 92.70 (24%) | 89.10 (19%) |
| | k-NN | 93.00 (25%) | 92.90 (25%) | 93.10 (23%) | 93.00 (25%) |
| Pendigit | Bagging | 87.40 (7%) | 89.15 (9%) | 96.05 (23%) | 87.40 (7%) |
| | AdaBoost | 95.90 (21%) | 95.80 (22%) | 96.25 (20%) | 95.20 (24%) |
| | k-NN | 96.30 (22%) | 95.90 (25%) | 95.85 (19%) | 94.20 (20%) |
| Statlog | Bagging | 84.20 (13%) | 81.00 (9%) | 86.55 (22%) | 83.60 (12%) |
| | AdaBoost | 86.80 (23%) | 84.90 (15%) | 85.95 (18%) | 85.90 (23%) |
| | k-NN | 87.10 (22%) | 86.40 (25%) | 87.15 (23%) | 86.85 (29%) |
| Vehicle | Bagging | 59.50 (2%) | 60.00 (2%) | 59.00 (2%) | 59.00 (2%) |
| | AdaBoost | 70.00 (14%) | 65.50 (12%) | 71.50 (22%) | 67.50 (14%) |
| | k-NN | 63.00 (4%) | 64.00 (9%) | 70.00 (17%) | 66.00 (13%) |

| Data | Classifier | SuMax | UnMax | SuSum | UnSum |
|---|---|---|---|---|---|
| Letter | Bagging | 50.94 (1%) | 50.85 (1%) | 46.02 (0%) | 50.88 (1%) |
| | AdaBoost | 50.44 (1%) | 50.44 (1%) | 48.40 (0%) | 50.32 (1%) |
| | k-NN | 43.56 (9%) | 46.11 (7%) | 41.81 (15%) | 44.00 (8%) |
| Optdigits | Bagging | 89.80 (0%) | 90.10 (0%) | 89.87 (0%) | 89.80 (0%) |
| | AdaBoost | 93.23 (16%) | 92.30 (15%) | 92.21 (18%) | 91.33 (17%) |
| | k-NN | 92.36 (17%) | 92.73 (16%) | 92.17 (19%) | 90.51 (22%) |
| Pendigit | Bagging | 90.89 (6%) | 90.76 (3%) | 90.04 (0%) | 91.05 (6%) |
| | AdaBoost | 93.57 (19%) | 91.84 (16%) | 93.64 (18%) | 91.57 (12%) |
| | k-NN | 92.11 (15%) | 91.00 (14%) | 93.08 (18%) | 91.11 (14%) |
| Statlog | Bagging | 86.48 (14%) | 86.02 (16%) | 84.83 (0%) | 86.50 (12%) |
| | AdaBoost | 87.80 (20%) | 86.39 (9%) | 88.15 (19%) | 86.38 (13%) |
| | k-NN | 86.58 (18%) | 86.16 (16%) | 86.92 (23%) | 86.24 (16%) |
| Vehicle | Bagging | 73.90 (1%) | 73.75 (1%) | 73.50 (0%) | 72.95 (0%) |
| | AdaBoost | 73.85 (17%) | 72.00 (20%) | 73.55 (17%) | 72.80 (23%) |
| | k-NN | 73.85 (11%) | 72.90 (2%) | 73.05 (8%) | 72.95 (2%) |

| Data | Classifier | SuMax | UnMax | SuSum | UnSum |
|---|---|---|---|---|---|
| Letter | Bagging | 74.10 (1%) | 73.96 (1%) | 73.02 (0%) | 74.94 (2%) |
| | AdaBoost | 73.74 (1%) | 73.74 (1%) | 72.86 (0%) | 73.76 (1%) |
| | k-NN | 76.44 (11%) | 75.72 (4%) | 80.44 (15%) | 75.98 (5%) |
| Optdigits | Bagging | 76.60 (0%) | 76.60 (0%) | 78.80 (2%) | 76.60 (0%) |
| | AdaBoost | 88.90 (15%) | 89.30 (17%) | 90.10 (19%) | 88.00 (17%) |
| | k-NN | 89.10 (16%) | 89.20 (17%) | 90.40 (20%) | 88.00 (20%) |
| Pendigit | Bagging | 83.55 (3%) | 84.25 (4%) | 79.90 (0%) | 83.55 (3%) |
| | AdaBoost | 93.15 (21%) | 88.80 (9%) | 94.15 (19%) | 91.85 (13%) |
| | k-NN | 92.60 (15%) | 91.75 (15%) | 93.65 (18%) | 92.05 (16%) |
| Statlog | Bagging | 80.30 (8%) | 84.55 (16%) | 73.70 (1%) | 82.20 (10%) |
| | AdaBoost | 83.40 (11%) | 82.40 (10%) | 85.75 (18%) | 83.80 (12%) |
| | k-NN | 85.05 (18%) | 84.45 (16%) | 85.80 (19%) | 84.70 (16%) |
| Vehicle | Bagging | 63.00 (2%) | 63.00 (2%) | 60.00 (1%) | 62.00 (1%) |
| | AdaBoost | 65.50 (15%) | 65.50 (10%) | 67.50 (20%) | 66.00 (15%) |
| | k-NN | 64.00 (12%) | 63.50 (8%) | 64.50 (6%) | 61.50 (2%) |


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Feng, W.; Quan, Y.; Dauphin, G. Label Noise Cleaning with an Adaptive Ensemble Method Based on Noise Detection Metric. Sensors 2020, 20, 6718. https://doi.org/10.3390/s20236718
