Visual Guidance and Egg Collection Scheme for a Smart Poultry Robot for Free-Range Farms

Abstract

1. Introduction

2. Methodology

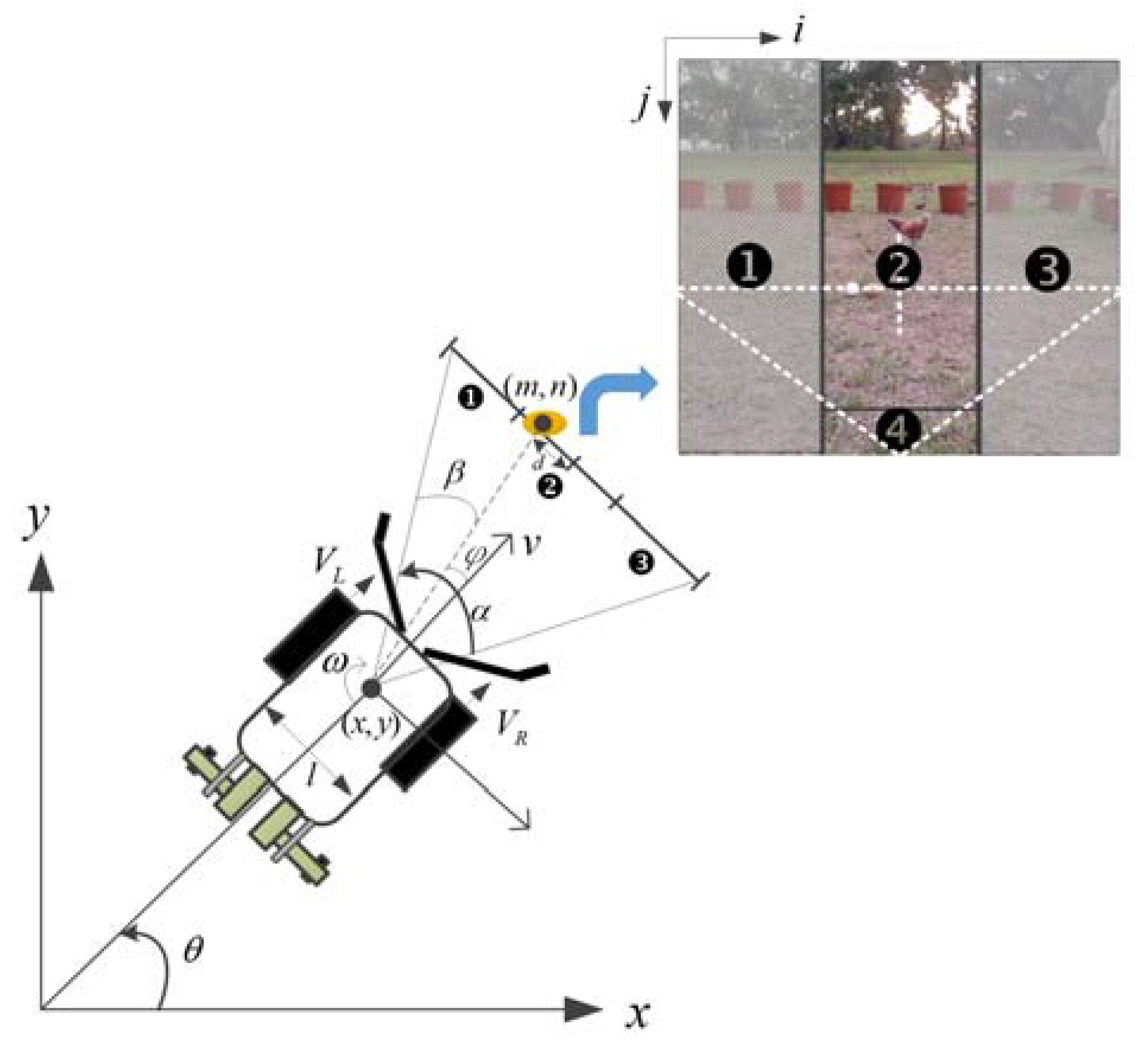

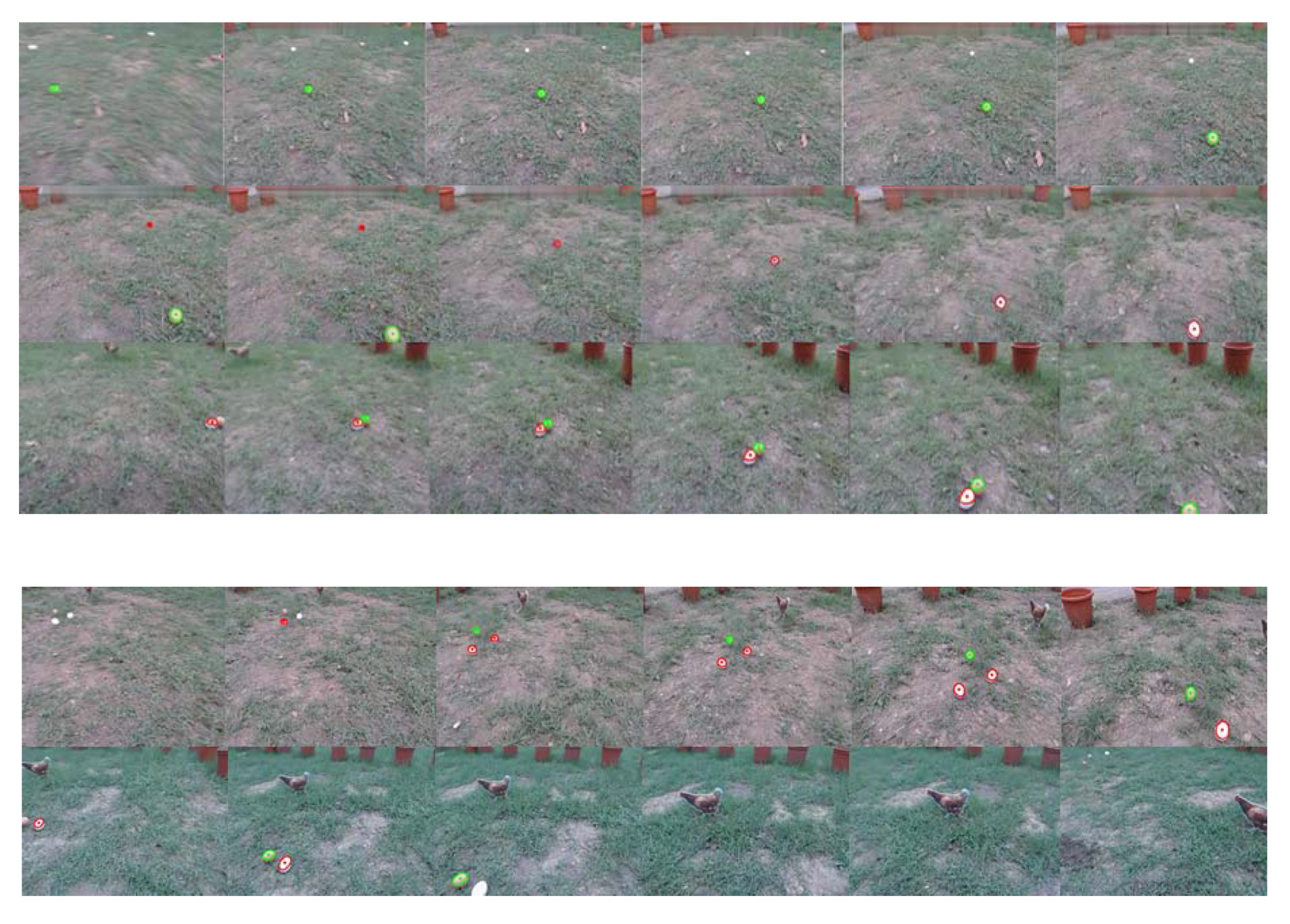

2.1. Visual Guidance of a Mobile Robot

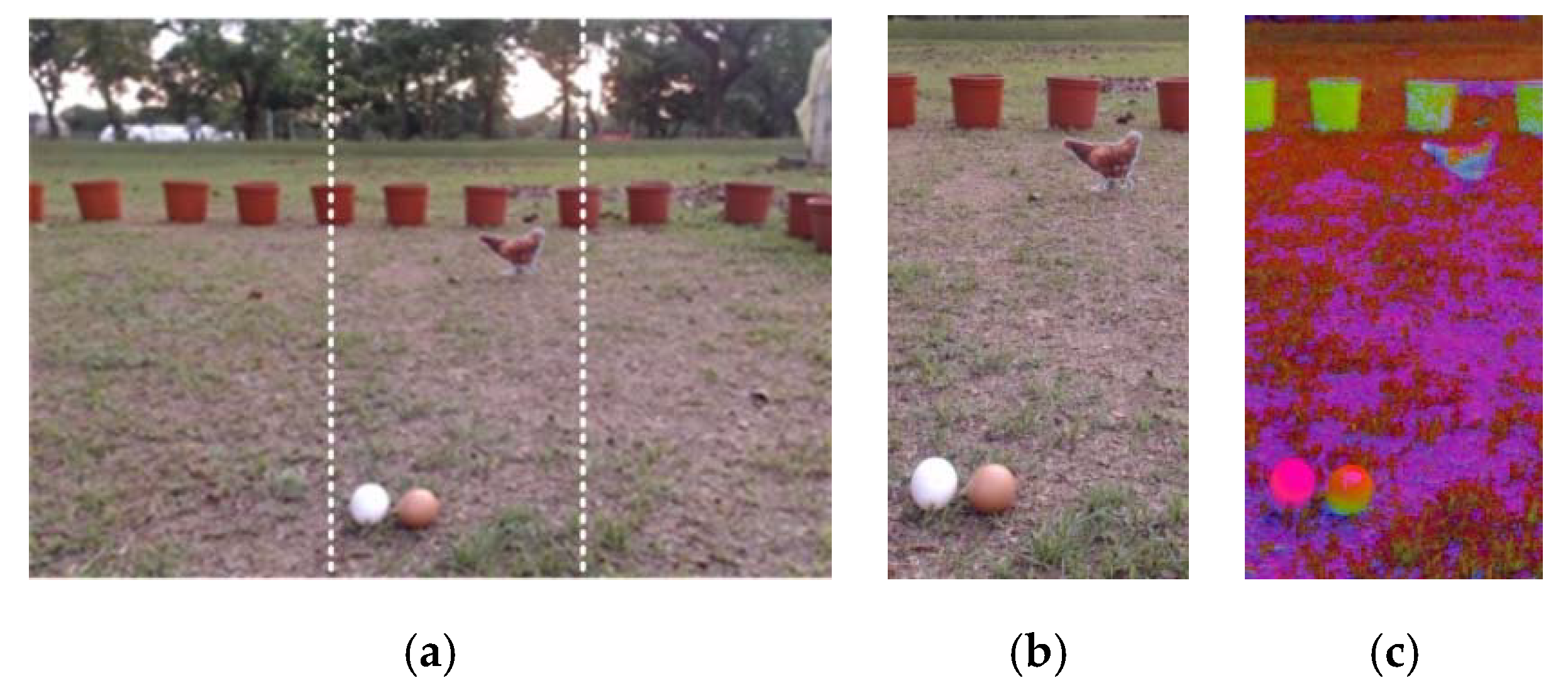

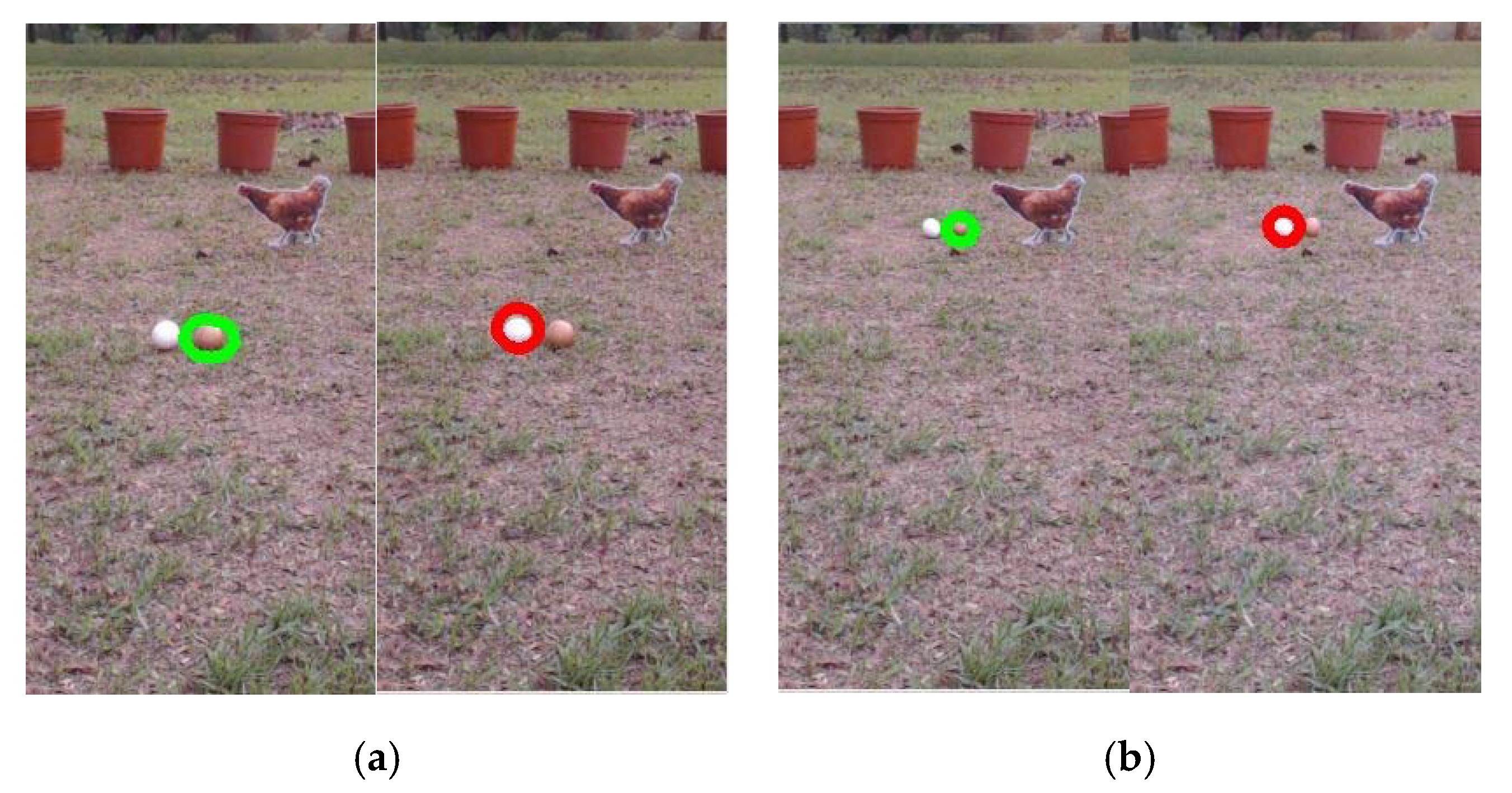

2.2. Egg Recognition Process

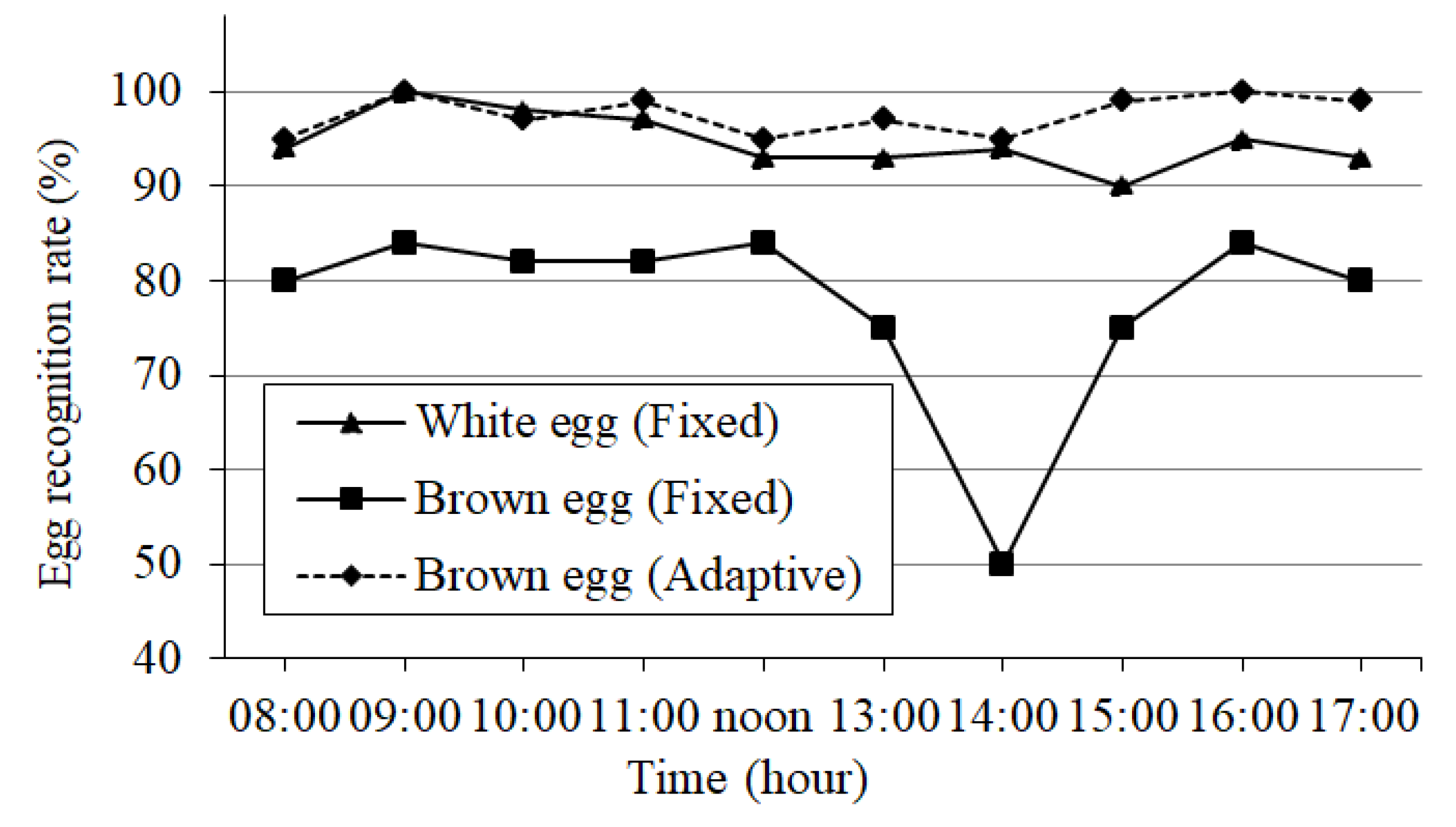

2.3. Adaptive Threshold

2.4. Behavior-Based Navigation Method

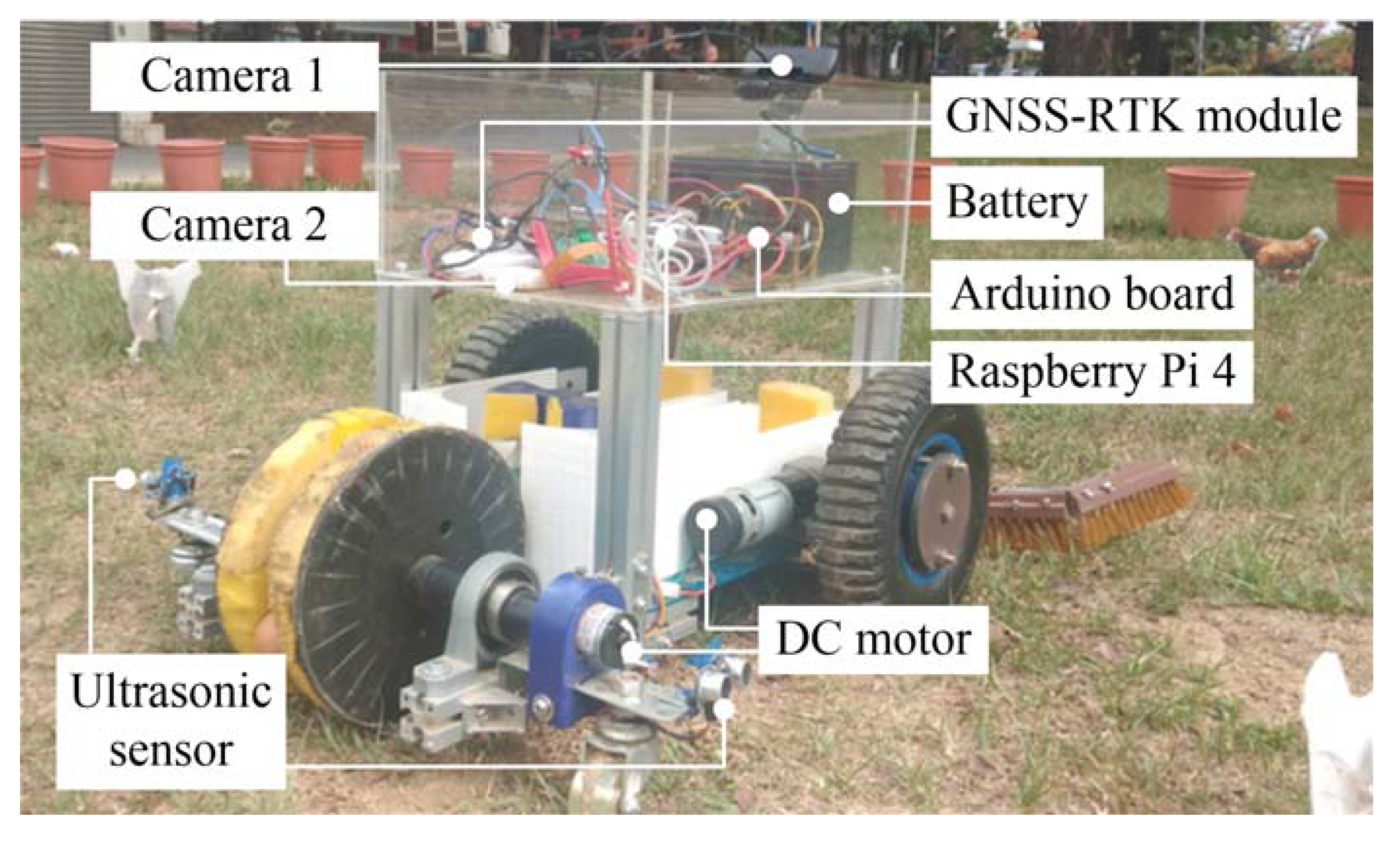

3. Proposed Mobile Poultry Robot

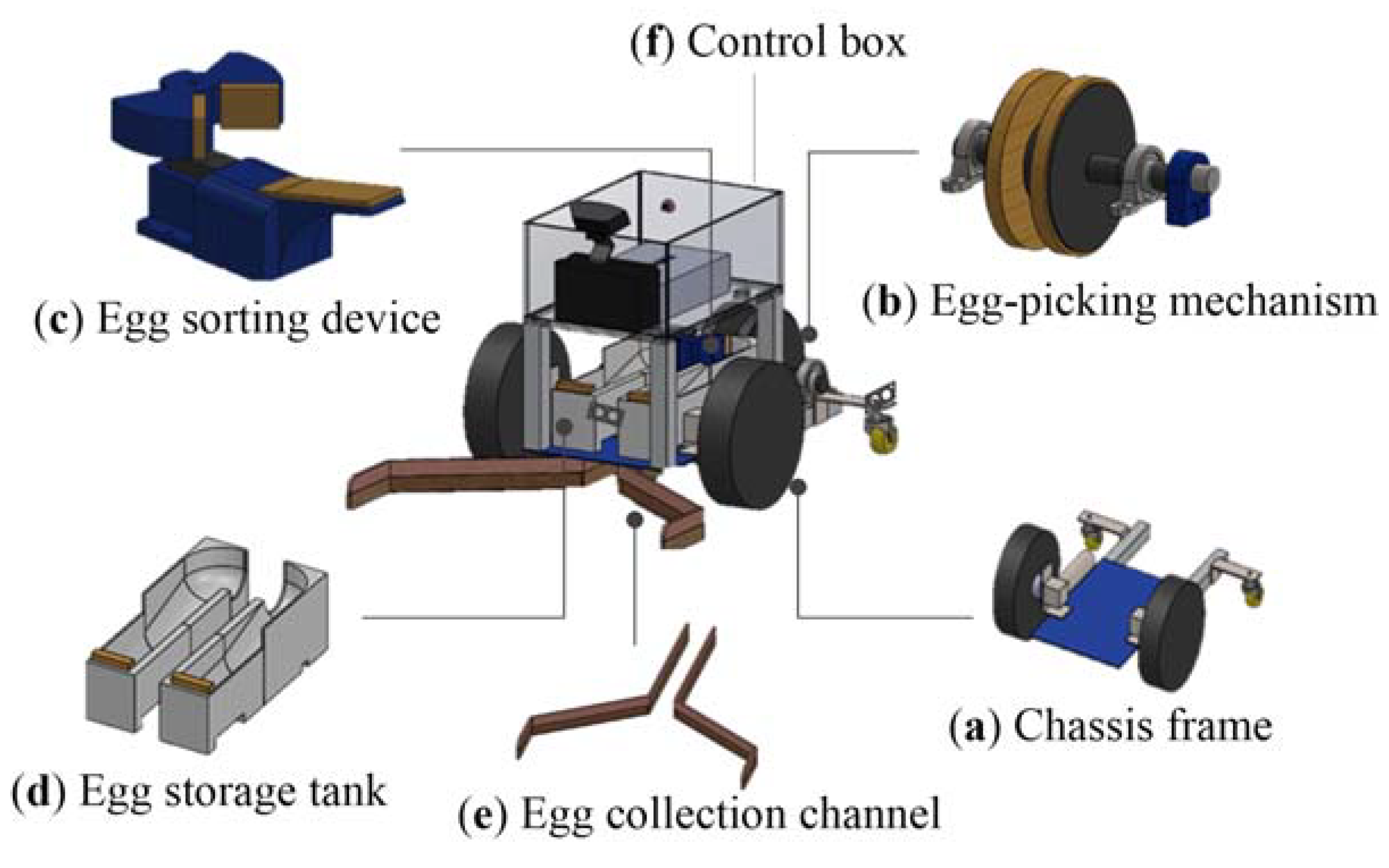

3.1. Design of the Robot Mechanism

3.2. Hardware and Software Platforms

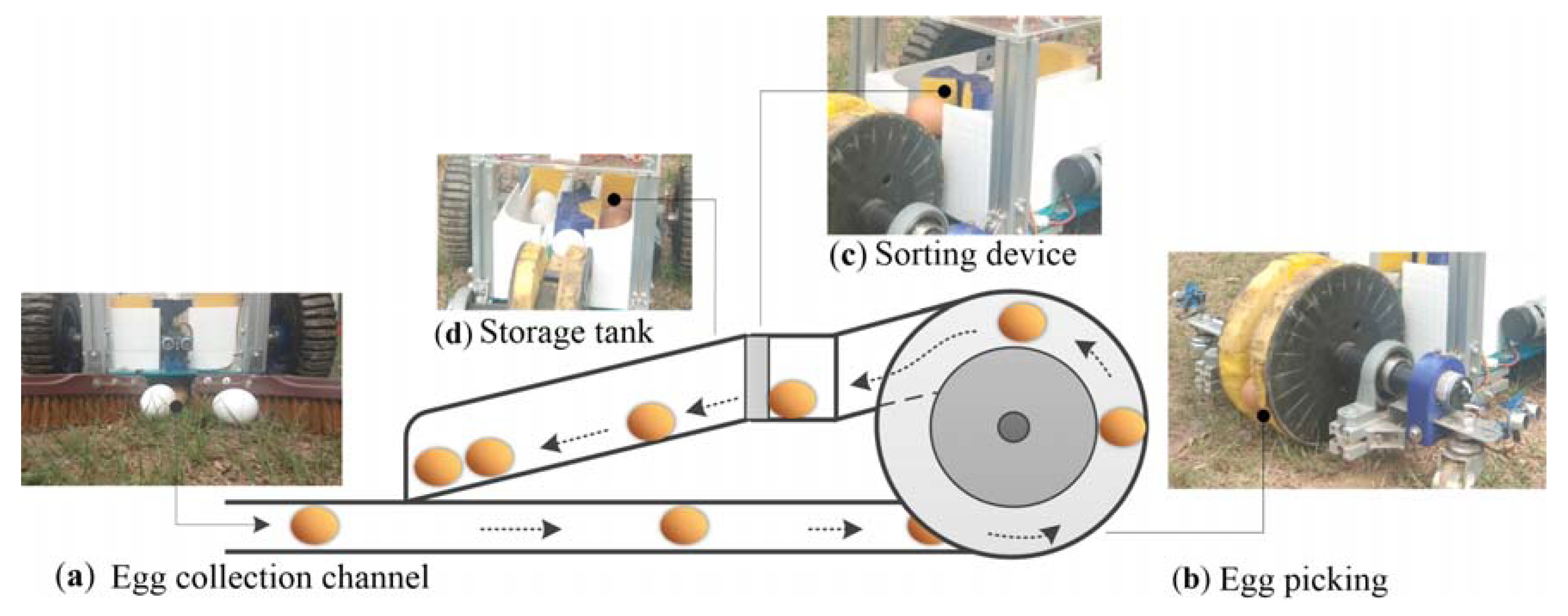

3.3. Egg Collection System

4. Experimental Description and Results

4.1. Ambient Light Intensity Measurement

4.2. Initial Testing for Egg Detection

4.3. Experimental Setup

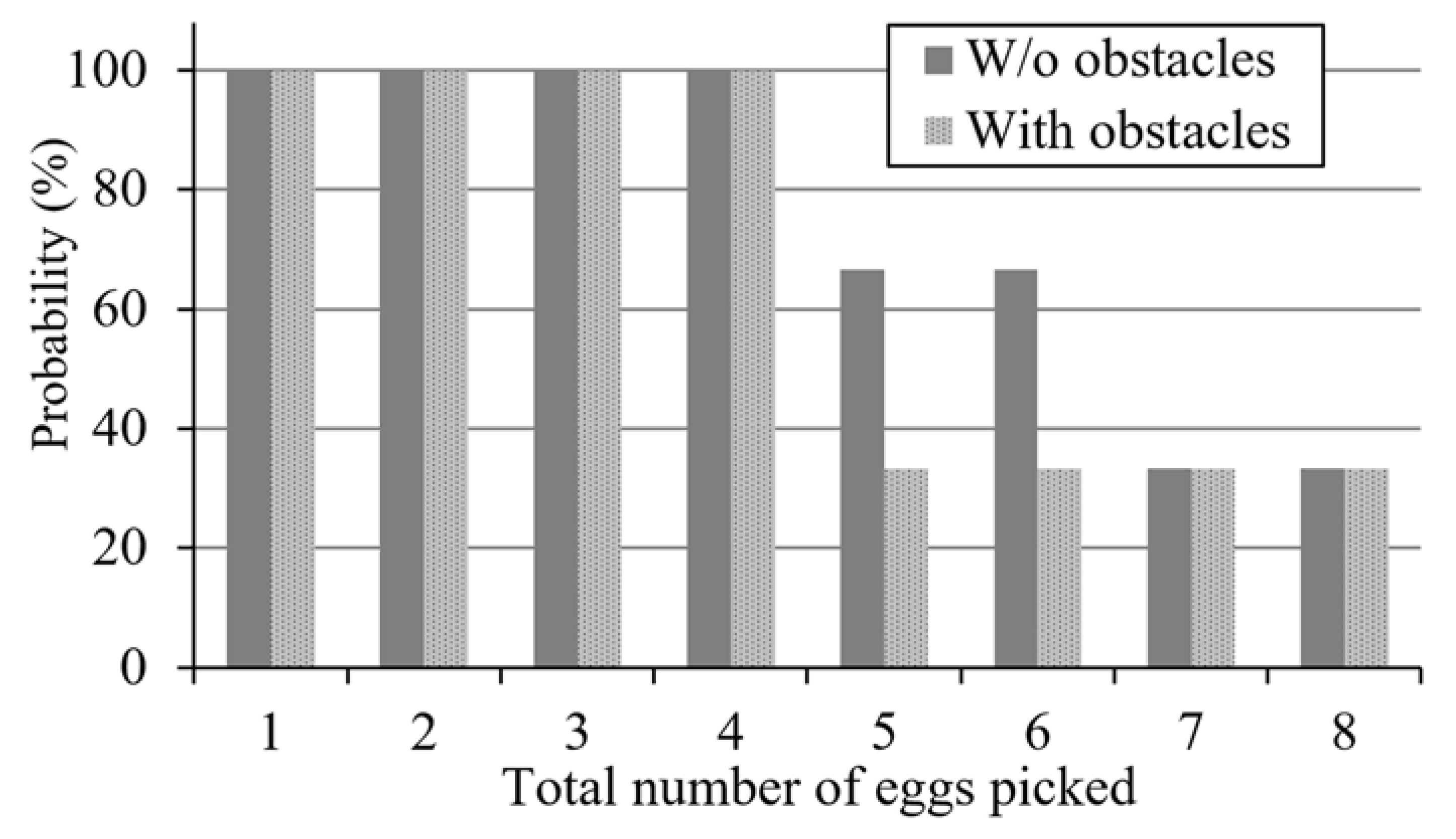

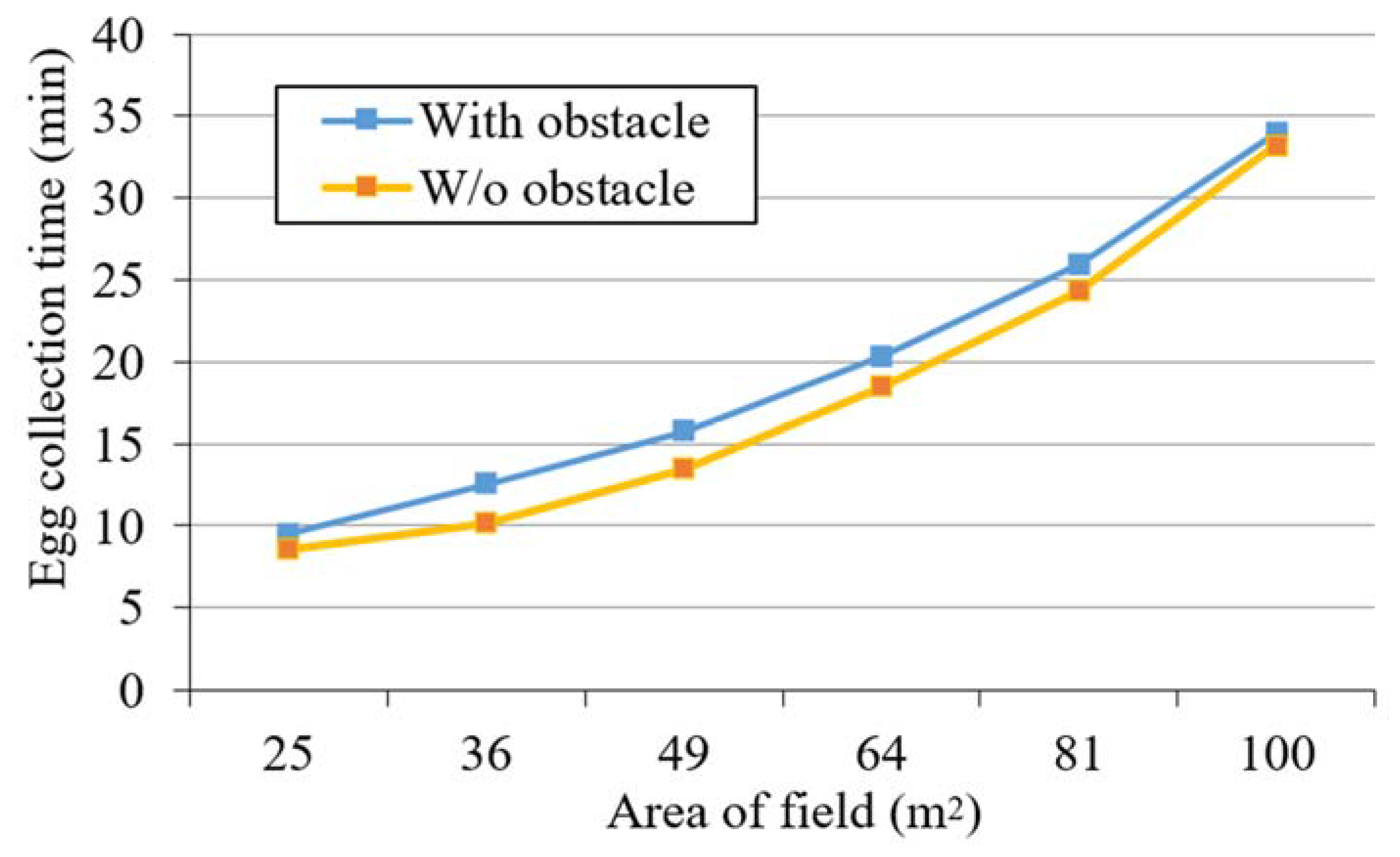

4.4. Performance Evaluation Results

4.5. Discussion

- A combination of color and shape features is extracted for object detection in the image-processing method. This approach is suitable for detecting small objects in the environment, such as hen or duck eggs on the ground;

- The use of automatic thresholding can reduce the effect of light intensity on egg recognition;

- The modular design of the mechanism, which includes an egg collection channel, an egg-picking and -sorting mechanism, and a storage tank, can be easily expanded into a large-scale platform to store larger numbers of eggs;

- A behavior-based navigation method based on visual guidance allows the robot to collect all of the eggs on a small free-range farm within a short time.
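As a rough illustration of what a behavior-based scheme with visual guidance can look like, the following minimal sketch arbitrates between three behaviors by fixed priority. It is not the authors' controller: the behavior names, the `Percept` and `Command` types, the 30 cm safety distance, the 5° alignment tolerance, and the speed fractions are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical tuning values, not taken from the paper.
SAFE_DISTANCE_CM = 30.0
ALIGN_TOLERANCE_DEG = 5.0


@dataclass
class Percept:
    obstacle_cm: float                 # nearest ultrasonic range reading
    egg_bearing_deg: Optional[float]   # bearing to nearest detected egg; None if no egg in view


@dataclass
class Command:
    behavior: str    # name of the behavior that won arbitration
    linear: float    # forward speed as a fraction of maximum (0..1)
    angular: float   # turn rate as a fraction of maximum (-1..1), positive = left


def select_behavior(p: Percept) -> Command:
    """Fixed-priority arbitration: avoid obstacles, then go to an egg, else search."""
    # Highest priority: an obstacle inside the safety distance.
    if p.obstacle_cm < SAFE_DISTANCE_CM:
        return Command("avoid_obstacle", 0.0, 1.0)
    # Next: an egg is visible, so turn toward it and then drive forward.
    if p.egg_bearing_deg is not None:
        if abs(p.egg_bearing_deg) > ALIGN_TOLERANCE_DEG:
            turn = 0.5 if p.egg_bearing_deg > 0 else -0.5
            return Command("align_to_egg", 0.2, turn)
        return Command("approach_egg", 0.6, 0.0)
    # Default: keep moving to look for eggs.
    return Command("search", 0.4, 0.0)


if __name__ == "__main__":
    print(select_behavior(Percept(obstacle_cm=120.0, egg_bearing_deg=12.0)))
```

A fixed priority order keeps the example short; a real controller could equally blend behavior outputs instead of picking a single winner.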

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Description | Value or Feature |
|---|---|
| **Body** | |
| Size: length (cm) × width (cm) × height (cm) | 60 × 30 × 40 |
| Maximum weight | 23 kg |
| Chassis frame | Aluminum/plastic/acrylic |
| **Drive components** | |
| Drive method | Two-wheel differential drive |
| Maximum speed | 0.8 m/s |
| Motors (gear ratio; torque; speed) | 24 V, 34.7 W (1:212; 25 kg/cm; 31 rpm) |
| Egg picking motor (speed) | 12 V (7.7 rpm) |
| Servo motor (torque; speed) | 5 V (3.3 kg/cm; 62.5 rpm) |
| Battery | 12 V, 7.2 Ah |
| **Electronics** | |
| Controller boards | Arduino UNO; Raspberry Pi 4 Model B |
| Ultrasonic sensors (distance; effectual angle) | 5 V (2–400 cm; 15°) |
| Light sensors (range; accuracy; communication) | 2.4 to 3.6 V (1–65,535 lux; ±20%; I2C bus) |
| Camera 1 | Full HD 1080p, 30 fps, USB 2.0, autofocus |
| Camera 2 (pixel count; lens; view angle) | 2592 × 1944 (5 megapixel; 3.57 mm; 65°) |
| GNSS-RTK | ZED-F9P RTK GNSS receiver board |
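The two-wheel differential drive listed above can be illustrated with the standard kinematic relation between body velocity and wheel speeds. This is a minimal sketch under stated assumptions: the 0.30 m track width simply reuses the body width from the table (the actual wheel separation is not given), and the function name `wheel_speeds` is hypothetical. Only the 0.8 m/s speed limit comes from the table.

```python
from typing import Tuple

TRACK_WIDTH_M = 0.30   # assumption: taken from the 30 cm body width, not a stated wheel separation
MAX_SPEED_MPS = 0.8    # maximum speed from the specification table


def wheel_speeds(v: float, omega: float,
                 track_width_m: float = TRACK_WIDTH_M,
                 v_max: float = MAX_SPEED_MPS) -> Tuple[float, float]:
    """Convert forward speed v (m/s) and yaw rate omega (rad/s, positive = left turn)
    into (left, right) wheel ground speeds in m/s, scaled down to respect v_max."""
    v_left = v - omega * track_width_m / 2.0
    v_right = v + omega * track_width_m / 2.0
    peak = max(abs(v_left), abs(v_right))
    if peak > v_max:
        # Scale both wheels by the same factor so the commanded turning radius is preserved.
        scale = v_max / peak
        v_left *= scale
        v_right *= scale
    return v_left, v_right


if __name__ == "__main__":
    print(wheel_speeds(0.5, 0.0))   # straight line: both wheels at the same speed
    print(wheel_speeds(0.0, 1.0))   # turn in place: wheels spin in opposite directions
```

Scaling both wheels together, rather than clipping each one separately, keeps the ratio between them and therefore the curvature of the commanded path.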

| Weather Conditions | Illuminance (lux) | White Egg H | White Egg S | White Egg V | Brown Egg H | Brown Egg S | Brown Egg V |
|---|---|---|---|---|---|---|---|
| Cloudy | [0, 3000] | [0, 170] | [0, 30] | [245, 255] | [0, 15] | [75, 135] | [100, 255] |
| Cloudy and sunny | (3000, 9800] | [0, 200] | [25, 110] | [190, 255] | | | |
| Sunny | (9800, 35,000] | [0, 10] | [45, 150] | [155, 255] | | | |
| Scorching sunny | 35,001 or more | [0, 12] | [65, 150] | [55, 255] | | | |
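One way to read the table above is as a lookup from the measured illuminance to a set of HSV bounds. The sketch below encodes only the white-egg rows, copied as listed. The function names are hypothetical, the channel scale is not stated in this section, and readings between 35,000 and 35,001 lux are treated as "scorching sunny" as an assumption. Brown-egg bounds are omitted because this table lists them only in its first row.

```python
from typing import List, Tuple

Range = Tuple[int, int]
HSVRanges = Tuple[Range, Range, Range]

# (upper illuminance bound in lux, (H range, S range, V range)) for white eggs,
# copied from the table; bands are ordered from darkest to brightest.
WHITE_EGG_BANDS: List[Tuple[float, HSVRanges]] = [
    (3000.0, ((0, 170), (0, 30), (245, 255))),          # cloudy
    (9800.0, ((0, 200), (25, 110), (190, 255))),        # cloudy and sunny
    (35000.0, ((0, 10), (45, 150), (155, 255))),        # sunny
    (float("inf"), ((0, 12), (65, 150), (55, 255))),    # scorching sunny
]


def white_egg_ranges(lux: float) -> HSVRanges:
    """Return the (H, S, V) bounds of the first band whose upper limit covers lux."""
    if lux < 0:
        raise ValueError("illuminance cannot be negative")
    for upper, ranges in WHITE_EGG_BANDS:
        if lux <= upper:
            return ranges
    return WHITE_EGG_BANDS[-1][1]


def is_white_egg_pixel(hsv: Tuple[int, int, int], lux: float) -> bool:
    """Check whether one HSV pixel falls inside the bounds selected for this illuminance."""
    return all(lo <= c <= hi for c, (lo, hi) in zip(hsv, white_egg_ranges(lux)))


if __name__ == "__main__":
    print(white_egg_ranges(2500))
    print(is_white_egg_pixel((5, 20, 250), 1000))
```

In practice the same bounds would be applied to a whole image mask rather than pixel by pixel; the per-pixel form is used here only to keep the example dependency-free.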

| | With Obstacles | Without Obstacles | Manual Picking |
|---|---|---|---|
| Corner | 8.62 | 6.61 | 0.53 |
| Central | 4.6 | 3.62 | 0.25 |
| Dispersed | 9.49 | 8.53 | 0.81 |
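The figures in the table above can be compared as unit-free ratios. The short sketch below assumes that all three columns are measured in the same unit and that larger values mean longer collection runs; the table as extracted states neither. The function name `obstacle_overhead_pct` is hypothetical.

```python
# Rows copied from the table: (with obstacles, without obstacles, manual picking).
RESULTS = {
    "Corner": (8.62, 6.61, 0.53),
    "Central": (4.6, 3.62, 0.25),
    "Dispersed": (9.49, 8.53, 0.81),
}


def obstacle_overhead_pct(with_obstacles: float, without_obstacles: float) -> float:
    """Relative increase, in percent, of the with-obstacle value over the obstacle-free one."""
    return (with_obstacles / without_obstacles - 1.0) * 100.0


if __name__ == "__main__":
    for layout, (with_obs, without_obs, manual) in RESULTS.items():
        overhead = obstacle_overhead_pct(with_obs, without_obs)
        ratio_to_manual = without_obs / manual
        print(f"{layout}: obstacles add {overhead:.1f}%, "
              f"robot/manual ratio without obstacles {ratio_to_manual:.1f}")
```

Under these assumptions, obstacles increase the robot's figure by roughly 30% for the corner layout, 27% for the central layout, and 11% for the dispersed layout.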

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chang, C.-L.; Xie, B.-X.; Wang, C.-H. Visual Guidance and Egg Collection Scheme for a Smart Poultry Robot for Free-Range Farms. Sensors 2020, 20, 6624. https://doi.org/10.3390/s20226624
