Recognition of Impulse of Love at First Sight Based On Photoplethysmography Signal

Abstract

1. Introduction

2. Experimental Setup

2.1. Participants

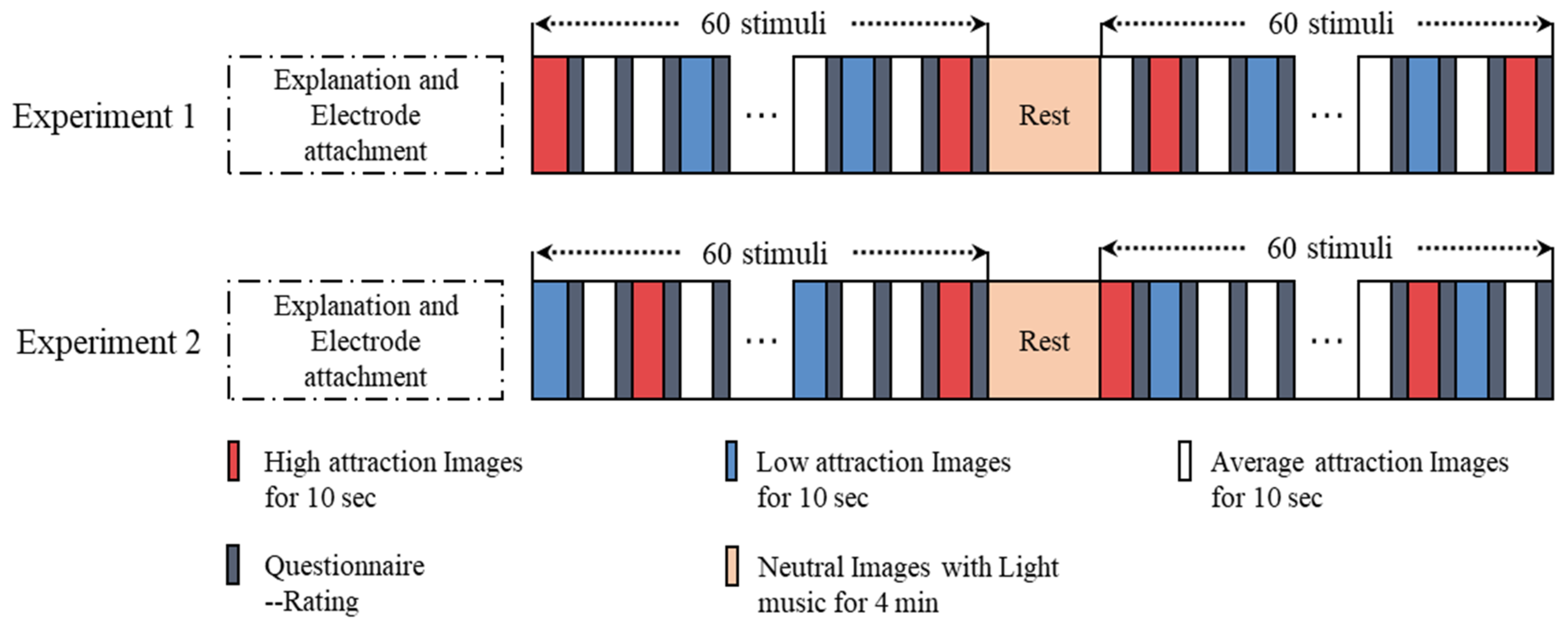

2.2. Emotional Stimulation

2.3. Experimental Procedure

2.4. Physiological Signal Recording

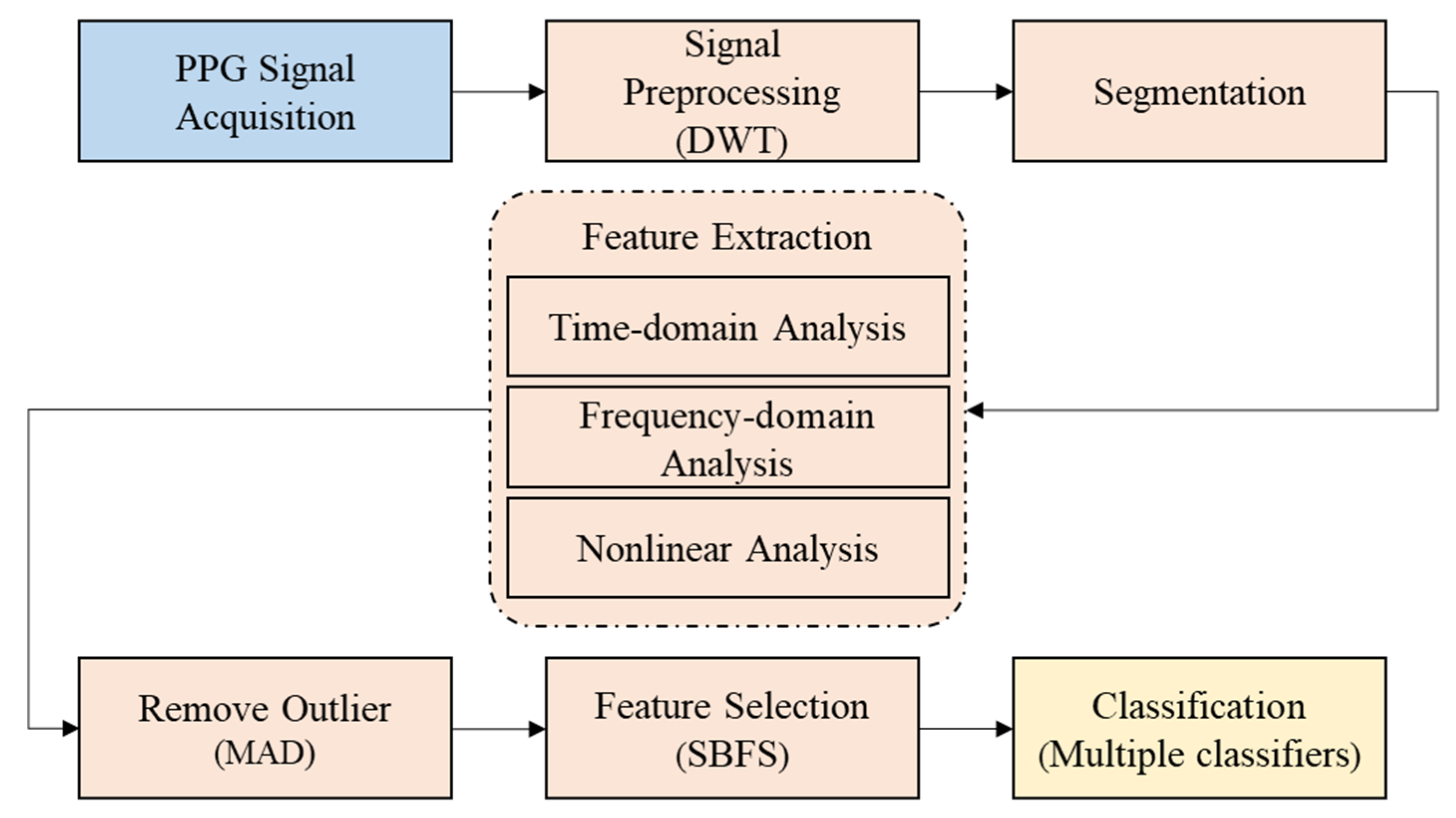

3. Method

- (1) Signal preprocessing: A discrete wavelet transform (DWT) is used to remove noise (such as baseline drift, power-line interference, and EMG interference) from the raw PPG signal.

- (2) Segmentation: The preprocessed PPG signal is segmented into 10 s epochs, each spanning the interval from the appearance of a picture to its disappearance.

- (3) Feature extraction: 26 physiological features are extracted from the PPG signal by time-domain, frequency-domain, and nonlinear analysis.

- (4) Outlier removal: Outliers are removed using the median absolute deviation (MAD) method.

- (5) Feature selection: The sequential backward floating selection (SBFS) algorithm is used to select a suitable subset of the 26 features.

- (6) Classification: Several classifiers (support vector machine (SVM), random forest (RF), linear discriminant analysis (LDA), and extreme gradient boosting (XGBoost)) are used for emotion recognition.
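A minimal sketch of the segmentation step follows, assuming that picture-onset times are available in seconds and that each epoch is a fixed 10 s slice starting at an onset. The function name `segment_windows`, the sampling rate `fs`, and the onset list are illustrative assumptions; this section does not state the sampling rate or how stimulus events were logged.

```python
def segment_windows(signal, fs, onsets_s, duration_s=10.0):
    """Return one fixed-length window of `signal` per picture onset.

    signal     -- preprocessed PPG samples (any sequence supporting slicing)
    fs         -- sampling rate in Hz (hypothetical; not stated here)
    onsets_s   -- picture-onset times in seconds (hypothetical event log)
    duration_s -- window length; 10 s matches the picture display interval
    """
    n = int(round(duration_s * fs))
    windows = []
    for t in onsets_s:
        start = int(round(t * fs))
        if start < 0 or start + n > len(signal):
            # Skip onsets whose window would fall outside the recording.
            continue
        windows.append(signal[start:start + n])
    return windows


# Example: a 50 s recording at 2 Hz with three picture onsets.
ppg = list(range(100))
epochs = segment_windows(ppg, fs=2, onsets_s=[0, 10, 45])
print(len(epochs))  # 2 (the window starting at 45 s would run past 50 s)
```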

3.1. Preprocessing
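The DWT denoising step could be sketched as follows. This is a hypothetical, dependency-free illustration rather than the authors' configuration: it assumes a Haar wavelet, a 4-level decomposition, Donoho–Johnstone universal soft thresholding of the detail coefficients, and zeroing of the coarsest approximation to remove baseline drift. The section does not name the wavelet, level, or threshold rule. In practice a library such as PyWavelets with a smoother wavelet would be the usual choice, and narrow-band power-line interference is more commonly handled with a notch filter than by this broadband threshold.

```python
import math
import statistics


def haar_step(x):
    """One level of the orthonormal Haar DWT; len(x) must be even."""
    r = math.sqrt(2.0)
    approx = [(x[i] + x[i + 1]) / r for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) / r for i in range(0, len(x), 2)]
    return approx, detail


def haar_inverse_step(approx, detail):
    """Invert one level of the orthonormal Haar DWT."""
    r = math.sqrt(2.0)
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) / r, (a - d) / r])
    return out


def soft_threshold(c, t):
    """Shrink a coefficient towards zero by t (soft thresholding)."""
    return math.copysign(max(abs(c) - t, 0.0), c)


def dwt_denoise(x, level=4, drop_approx=True):
    """Haar wavelet denoising sketch (assumed settings, see lead-in).

    Detail coefficients are soft-thresholded with the universal threshold
    sigma * sqrt(2 ln N) to suppress broadband high-frequency noise. If
    drop_approx is True, the coarsest approximation is zeroed, removing the
    slow trend (baseline drift and DC offset) below roughly fs / 2**(level + 1).
    """
    n = len(x)
    block = 2 ** level
    padded = list(x) + [x[-1]] * (-n % block)  # pad to a multiple of 2**level
    approx, details = padded, []
    for _ in range(level):
        approx, d = haar_step(approx)
        details.append(d)
    # Robust noise estimate from the finest detail band (MAD / 0.6745).
    sigma = statistics.median([abs(c) for c in details[0]]) / 0.6745
    thr = sigma * math.sqrt(2.0 * math.log(len(padded)))
    details = [[soft_threshold(c, thr) for c in d] for d in details]
    if drop_approx:
        approx = [0.0] * len(approx)
    for d in reversed(details):  # reconstruct from the coarsest level upwards
        approx = haar_inverse_step(approx, d)
    return approx[:n]
```

The `level` parameter sets the drift cut-off relative to the (unstated) sampling rate, so it would need to be chosen once `fs` is known.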

3.2. Label Processing

3.3. Feature Extraction
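For the frequency-domain features (LF, HF, LF/HF ratio, Total_Power, nLFP, and nHFP in the feature table), one common recipe is to interpolate the NN series onto a uniform grid and sum periodogram power within each band. The sketch below assumes 4 Hz linear interpolation, a plain DFT periodogram, Total_Power taken as all power below 0.4 Hz, and normalized powers defined as LF/(LF + HF) and HF/(LF + HF). These are assumptions, since this section does not specify them; Welch or Lomb–Scargle estimators are frequent alternatives.

```python
import math


def resample_nn(beat_times_s, fs_interp=4.0):
    """Linearly interpolate NN intervals (ms) onto a uniform fs_interp grid."""
    t = beat_times_s[1:]  # each NN interval is stamped at the beat that ends it
    nn = [1000.0 * (b - a) for a, b in zip(beat_times_s, beat_times_s[1:])]
    out, j, k = [], 0, 0
    while t[0] + k / fs_interp <= t[-1]:
        g = t[0] + k / fs_interp
        while j < len(t) - 2 and t[j + 1] < g:
            j += 1
        frac = (g - t[j]) / (t[j + 1] - t[j])
        out.append(nn[j] + frac * (nn[j + 1] - nn[j]))
        k += 1
    return out


def band_powers(x, fs_interp=4.0):
    """One-sided periodogram of the mean-removed series, summed per band."""
    n = len(x)
    mean = sum(x) / n
    x = [v - mean for v in x]
    lf = hf = total = 0.0
    for k in range(1, (n + 1) // 2):  # skip the DC and Nyquist bins
        re = sum(v * math.cos(2 * math.pi * k * i / n) for i, v in enumerate(x))
        im = sum(v * math.sin(2 * math.pi * k * i / n) for i, v in enumerate(x))
        p = 2.0 * (re * re + im * im) / n ** 2  # power in this bin (ms^2)
        f = k * fs_interp / n
        if f < 0.4:
            total += p
            if f >= 0.15:
                hf += p
            elif f >= 0.04:
                lf += p
    nan = float("nan")
    return {
        "LF": lf,
        "HF": hf,
        "LF/HF": lf / hf if hf > 0 else nan,
        "Total_Power": total,
        "nLFP": lf / (lf + hf) if lf + hf > 0 else nan,
        "nHFP": hf / (lf + hf) if lf + hf > 0 else nan,
    }


beats = [0.0, 0.8, 1.65, 2.45, 3.3, 4.1, 4.95, 5.75, 6.6, 7.4, 8.25, 9.05, 9.9]
print(band_powers(resample_nn(beats)))
```

With a 10 s epoch at 4 Hz the series has 40 samples and a bin spacing of 0.1 Hz, so the LF band (0.04–0.15 Hz) contains a single bin (0.1 Hz) and the HF band two (0.2 and 0.3 Hz); spectral features from such short windows are therefore coarse.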

3.4. Remove Outliers
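The MAD criterion of Leys et al. flags a value as an outlier when its distance from the median exceeds a chosen multiple of the scaled MAD, MAD = b * median(|x - median(x)|) with b = 1.4826. The sketch below assumes the moderately conservative threshold of 2.5 and drops a sample if any of its features is flagged. The threshold and the row-wise rule are assumptions, as this section does not state them.

```python
import statistics


def mad_inlier_mask(values, threshold=2.5, b=1.4826):
    """True for values within `threshold` scaled MADs of the median."""
    med = statistics.median(values)
    mad = b * statistics.median([abs(v - med) for v in values])
    if mad == 0:
        # More than half the values equal the median: any deviation is flagged.
        return [v == med for v in values]
    return [abs(v - med) / mad <= threshold for v in values]


def remove_outlier_rows(rows, threshold=2.5):
    """Drop any sample (row) in which at least one feature is an outlier."""
    columns = list(zip(*rows))
    masks = [mad_inlier_mask(list(col), threshold) for col in columns]
    return [row for i, row in enumerate(rows) if all(m[i] for m in masks)]


print(mad_inlier_mask([1, 2, 3, 4, 100]))  # [True, True, True, True, False]
```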

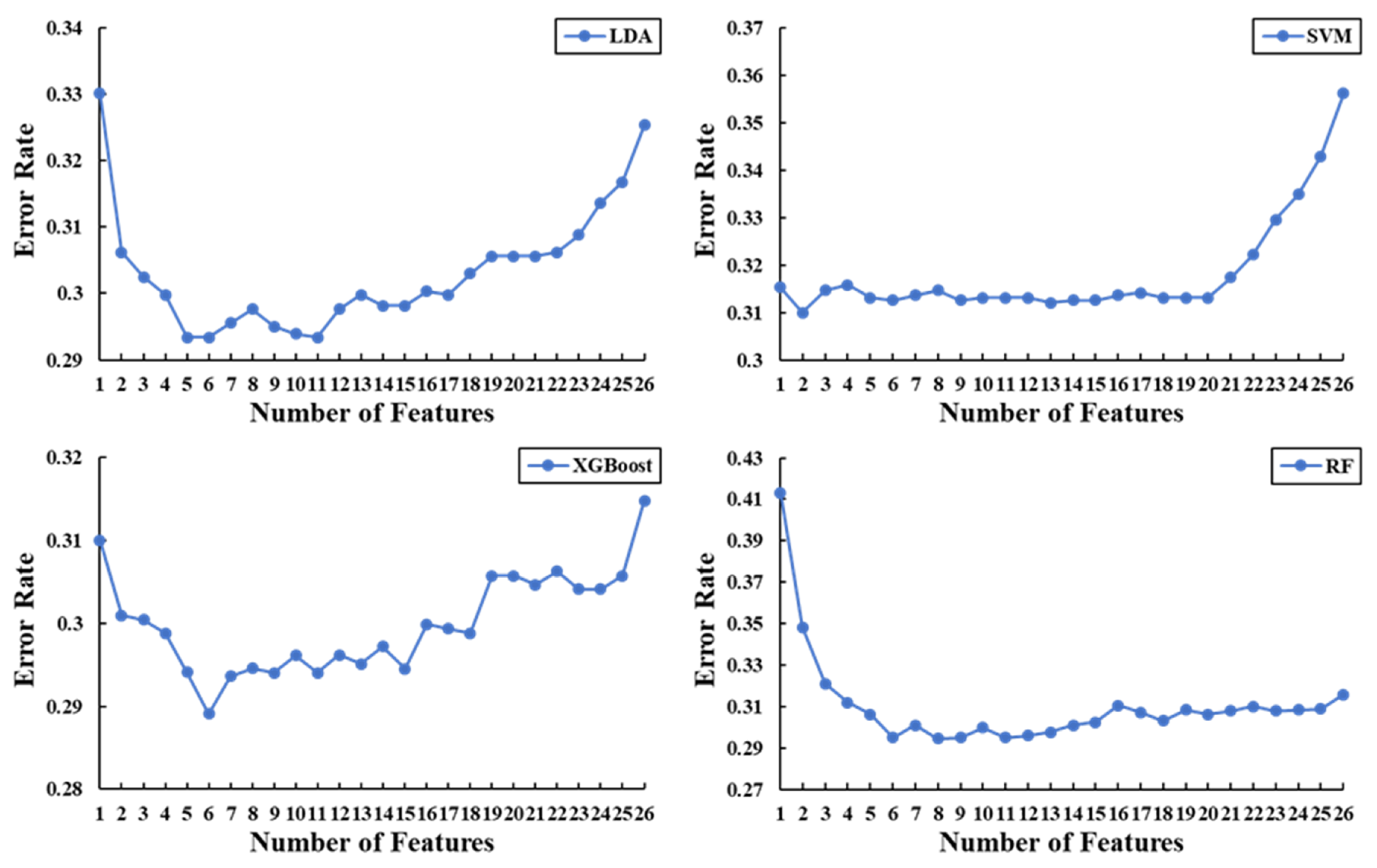

3.5. Feature Selection
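SBFS alternates an exclusion step, which removes the least useful feature, with a conditional inclusion step, which re-adds a previously removed feature whenever that yields a better subset than any recorded so far at that size (Pudil et al., 1994). The simplified sketch below assumes a user-supplied `score` function (for example, cross-validated accuracy of one of the classifiers); `score`, `sbfs`, and `pick` are hypothetical names, not the authors' code.

```python
def sbfs(features, score, k_min=1):
    """Simplified sequential backward floating selection.

    features -- feature IDs (e.g. 1..26)
    score    -- hypothetical callable: frozenset of IDs -> value to maximise
    Returns {subset size: (best score, best subset)}.
    """
    all_f = frozenset(features)
    current = all_f
    best = {len(current): (score(current), current)}

    def best_of(candidates):
        # Score each candidate subset once and keep the highest.
        return max(((score(c), c) for c in candidates), key=lambda t: t[0])

    while len(current) > k_min:
        # Exclusion: drop the feature whose removal hurts the score least.
        s, current = best_of(current - {f} for f in current)
        if len(current) not in best or s > best[len(current)][0]:
            best[len(current)] = (s, current)
        # Conditional inclusion: re-add an excluded feature only while doing
        # so beats the best subset already recorded at the larger size.
        while current != all_f:
            s_add, cand = best_of(current | {f} for f in all_f - current)
            if s_add <= best[len(cand)][0]:
                break
            current = cand
            best[len(cand)] = (s_add, cand)
    return best


def pick(best):
    """Highest-scoring subset; ties go to the smaller subset."""
    return max(best.values(), key=lambda t: (t[0], -len(t[1])))
```

With the toy score `lambda s: len(s & {1, 2}) - 0.1 * len(s)` over features 1 to 5, `pick(sbfs(range(1, 6), toy))` selects the subset {1, 2}. Because inclusion only happens on a strict improvement of a recorded score, the search always terminates.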

3.6. Performance Metrics

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| ID | Feature | Description |
|---|---|---|
| Time-domain (geometrical) features | | |
| 1 | Mean_HR | Mean of the instantaneous heart rate |
| 2 | Mean_VP | Mean time from valley to peak |
| 3 | Mean_PV | Mean time from peak to valley |
| 4 | Mean_NNI | Mean time from peak to peak (NN interval) |
| 5 | Mean_VVI | Mean time from valley to valley |
| Time-domain (statistical) features | | |
| 6 | RMSSD | Root mean square of successive differences of NN intervals |
| 7 | SDNN | Standard deviation of NN intervals |
| 8 | SDSD | Standard deviation of successive differences of NN intervals |
| 9 | Range_NN | Difference between the maximum and minimum NN interval |
| 10 | NN50 | Number of successive NN interval differences greater than 50 ms |
| 11 | pNN50 | Percentage of successive NN interval differences greater than 50 ms |
| 12 | NN20 | Number of successive NN interval differences greater than 20 ms |
| 13 | pNN20 | Percentage of successive NN interval differences greater than 20 ms |
| 14 | CVSD | Coefficient of variation of successive differences (RMSSD divided by Mean_NNI) |
| 15 | CVNNI | Coefficient of variation of NN intervals (SDNN divided by Mean_NNI) |
| Frequency-domain features | | |
| 16 | LF | Power of the NN interval series in the low-frequency band (0.04–0.15 Hz) |
| 17 | HF | Power of the NN interval series in the high-frequency band (0.15–0.4 Hz) |
| 18 | LF/HF ratio | Ratio of LF power to HF power |
| 19 | Total_Power | Total power of the NN interval series |
| 20 | nLFP | Normalized low-frequency power |
| 21 | nHFP | Normalized high-frequency power |
| Nonlinear (geometrical) features | | |
| 22 | SD1 | Standard deviation along the T direction (perpendicular to the line of identity) of the Poincaré plot |
| 23 | SD2 | Standard deviation along the L direction (along the line of identity) of the Poincaré plot |
| 24 | SD12 | Ratio of SD2 to SD1 |
| 25 | CSI | Cardiac sympathetic index |
| 26 | CVI | Cardiac vagal index |
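Most of the NN-interval features in the table above can be computed directly from a list of NN intervals. The sketch below assumes intervals in milliseconds, sample standard deviations, pNN50/pNN20 taken relative to the number of successive differences, the standard identities SD1 = sqrt(0.5)·SDSD and SD2 = sqrt(2·SDNN² - 0.5·SDSD²), and the Poincaré convention T = 4·SD1, L = 4·SD2 with CSI = L/T and CVI = log10(L·T); under that convention CSI coincides with SD2/SD1. The valley-based features (Mean_VP, Mean_PV, Mean_VVI) need valley detection and are omitted. These choices are assumptions rather than the authors' stated definitions.

```python
import math
import statistics


def hrv_features(nn_ms):
    """NN-interval features (NN intervals in ms).

    Assumes a non-degenerate window: at least three intervals and enough
    variability that SD1 and SD2 are positive.
    """
    diffs = [b - a for a, b in zip(nn_ms, nn_ms[1:])]
    mean_nni = statistics.mean(nn_ms)
    sdnn = statistics.stdev(nn_ms)  # sample standard deviation (assumption)
    sdsd = statistics.stdev(diffs)
    rmssd = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    nn50 = sum(abs(d) > 50 for d in diffs)
    nn20 = sum(abs(d) > 20 for d in diffs)
    # Poincare plot: SD1 across, SD2 along the line of identity.
    sd1 = math.sqrt(0.5) * sdsd
    sd2 = math.sqrt(2 * sdnn ** 2 - 0.5 * sdsd ** 2)
    t, l = 4 * sd1, 4 * sd2  # ellipse axes used for CSI and CVI
    return {
        "Mean_HR": statistics.mean([60000.0 / x for x in nn_ms]),  # beats/min
        "Mean_NNI": mean_nni,
        "RMSSD": rmssd,
        "SDNN": sdnn,
        "SDSD": sdsd,
        "Range_NN": max(nn_ms) - min(nn_ms),
        "NN50": nn50,
        "pNN50": 100.0 * nn50 / len(diffs),
        "NN20": nn20,
        "pNN20": 100.0 * nn20 / len(diffs),
        "CVSD": rmssd / mean_nni,
        "CVNNI": sdnn / mean_nni,
        "SD1": sd1,
        "SD2": sd2,
        "SD12": sd2 / sd1,
        "CSI": l / t,
        "CVI": math.log10(l * t),
    }


print(hrv_features([800, 830, 890, 910, 860, 810, 790]))
```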

| Classifier | F1 (%) | Accuracy (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|
| LDA | 68.33 | 67.57 | 69.97 | 65.18 |
| SVM | 69.59 | 67.84 | 73.59 | 62.09 |
| XGBoost | 68.29 | 68.10 | 68.69 | 67.52 |
| RF | 68.07 | 67.57 | 69.12 | 66.03 |
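The four metrics in the result tables, F1, accuracy (Acc), sensitivity (Se), and specificity (Sp), follow from the binary confusion matrix. The sketch below assumes labels encoded as 0/1 with 1 treated as the positive class; which class the authors treated as positive is not stated in this section, and `binary_metrics` is a hypothetical helper.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """F1, Acc, Se and Sp (in %) for a two-class problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    se = tp / (tp + fn)  # sensitivity: recall of the positive class
    sp = tn / (tn + fp)  # specificity: recall of the negative class
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = 2 * precision * se / (precision + se) if precision + se else 0.0
    return {"F1": 100 * f1, "Acc": 100 * (tp + tn) / len(pairs),
            "Se": 100 * se, "Sp": 100 * sp}


m = binary_metrics([1, 1, 1, 1, 0, 0, 0, 0], [1, 1, 1, 0, 0, 0, 1, 1])
print(m["Acc"], m["Se"], m["Sp"])  # 62.5 75.0 50.0
```

When the two classes are equally sized, Acc equals (Se + Sp)/2. The rows of the table above agree with this to rounding; for LDA, (69.97 + 65.18)/2 = 67.575, reported as 67.57.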

| Classifier | F1 (%) | Accuracy (%) | Sensitivity (%) | Specificity (%) | Selected feature IDs |
|---|---|---|---|---|---|
| LDA | 71.98 | 70.04 | 75.29 | 64.76 | 5, 13, 17, 19, 21 |
| SVM | 69.84 | 68.96 | 71.88 | 66.03 | 16, 17 |
| XGBoost | 71.59 | 71.09 | 72.84 | 69.33 | 4, 9, 13, 14, 15, 17 |
| RF | 68.39 | 68.16 | 68.90 | 67.41 | 3, 4, 9, 10, 17, 22 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lu, H.; Yuan, G.; Zhang, J.; Liu, G. Recognition of Impulse of Love at First Sight Based On Photoplethysmography Signal. Sensors 2020, 20, 6572. https://doi.org/10.3390/s20226572

Lu H, Yuan G, Zhang J, Liu G. Recognition of Impulse of Love at First Sight Based On Photoplethysmography Signal. Sensors. 2020; 20(22):6572. https://doi.org/10.3390/s20226572

Chicago/Turabian Style: Lu, Huan, Guangjie Yuan, Jin Zhang, and Guangyuan Liu. 2020. "Recognition of Impulse of Love at First Sight Based On Photoplethysmography Signal" Sensors 20, no. 22: 6572. https://doi.org/10.3390/s20226572

APA Style: Lu, H., Yuan, G., Zhang, J., & Liu, G. (2020). Recognition of Impulse of Love at First Sight Based On Photoplethysmography Signal. Sensors, 20(22), 6572. https://doi.org/10.3390/s20226572