1. Introduction

Facing an unpredictable ocean, humans developed a variety of observation means and detection instruments to deeply explore and understand marine phenomena [

1]. Remote sensing satellites, coastal ocean observatories, sea-based marine observation systems, seafloor observatories, and stereoscopic observation constitute the stereo observation systems used to perform ocean observations in different dimensions and different spatial-temporal scales [

2,

3,

4,

5,

6]. For example, space-based ocean observation systems use multispectral, hyperspectral, and synthetic aperture radar (SAR) to monitor multiple marine elements of a large sea area in real-time, synchronously and continuously [

7]. Coastal ocean observatories are used to realize real-time, long-term, and fixed-point monitoring [

8]. Seafloor observatories perform long-term in situ monitoring of geological, physical, chemical, and biological changes in specific areas of the seabed [

9]. Numerous and varying observation systems mean a large number of heterogeneous data. However, due to high data volume, users were drowning in useless data but starved of valuable data. It is challenging for researchers to identify the required data from the massive amount of ocean observation data.

Recommendation systems, which originated from the Internet and e-commerce industries, provide users with personalized information services and decision support based on data mining of magnanimity data [

10]. It is an effective method to improve retrieval capabilities to help users obtain the required data, i.e., news recommendations, music recommendations, and movie recommendations [

11,

12,

13]. With the increase of recommendation demand in various fields, scholars proposed many recommendation algorithms, such as content-based filtering (CBF), collaborative filtering (CF), and association-rule-based recommendation model (ARB) [

14,

15,

16]. In recent years, recommendation systems have become pervasive and have been applied to spatial data [

17,

18]. However, recommendation systems are rarely used in marine scientific data due to their complex attributes and the lack of a large user sample. Marine scientific data have abundant attributes, including space, time, topics, and other multidimensional information. The users of marine science data mostly include educators and scientific researchers. In addition, the users’ demands for data are complex, and the demands are changing continuously with research content. In addition, marine data sharing sites often lack an evaluation system, thus it is difficult to obtain users’ behavioral preferences and sufficient user ratings. Therefore, it is difficult for traditional recommendation algorithms to solve them.

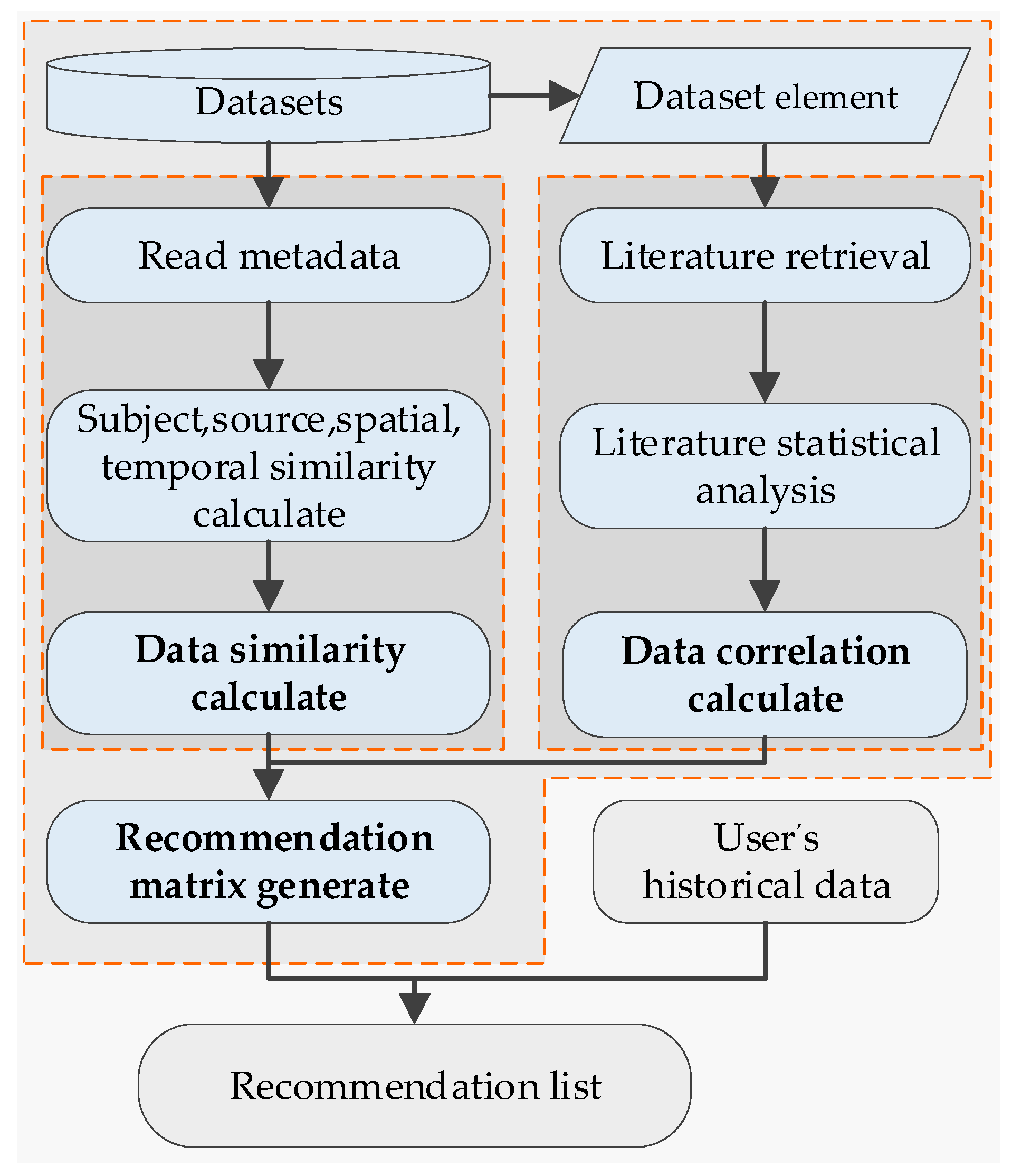

In this study, we developed a hybrid recommendation system for marine scientific data based on the CBF and CF with literature. CBF uses the information of data that has been selected by the user. CBF calculates the similarity of the data based on the attributes, then sorts the data and recommends data. CF filters and evaluates data for the user based on the interest level of other users with the same interest in data. CF calculates the similarity matrix among users based on the relationship of the existing data, finds users with common interests and hobbies, and generates recommendations that the users may be interested in [

19]. Given the lack of a large user sample, a literature analysis is introduced instead of a large user sample. In this method, data similarity was calculated by analyzing the subject, source, spatial, and temporal attributes, which was used to build the initial data similarity matrix. Then data correlation, which presents the implicit relationship between marine data, was obtained by analyzing literature on marine data. The data correlation was used to optimize the data similarity matrix and build the data recommendation matrix. Finally, based on the data recommendation matrix and user’s historical data, the recommendation system generates a recommendation list for the user. This recommendation system improved the recommendation accuracy and ensured the diversity of recommended data by combing various data attributes and literature analysis.

The rest of this paper is organized as follows. In

Section 2, we review the related work on the recommender algorithm and the hybrid recommender systems. The detailed description of our method is presented in

Section 3.

Section 4 gives experiment results,

Section 5 provides a brief discussion, and

Section 6 concludes this paper.

2. Related Work

In this section, we first introduce some related work on recommendation algorithms, and then some hybrid recommendation systems are reviewed. Additionally, we also discuss some recommendation evaluation methods.

2.1. Recommendation Systems

The recommendation algorithm is the foundation and core of the recommendation system—it includes CBF, CF, ARB, etc. CBF and CF are two popular methods used by personalized recommendation systems.

CBF makes recommendations by analyzing the features of the items and establishing the similarity between items. In recent years, an increasing number of studies on CBF have been performed. Yu Li et al. proposed a recommendation system of good images based on image feature combinations and achieved high accuracy in recommending similar good images at high speed [

14]. Jiangbo Shu et al. used CBF to target the learning resources to the right students combined with a convolutional neural network, which was used to predict the latent factors from the text information of the multimedia resources [

20]. Son Jieun et al. used a multi-attribute network to effectively reflect several attributes in CBF to recommend items to users with high accuracy and good robustness [

21]. CBF is simple and easy to understand, and the new items can be recommended as well as old ones. However, it only recommends similar data, thus it has limitations on recommendation diversity. In addition, it is difficult to explore users’ potential interests.

CF is the most widely used algorithm. It only relies on interaction information between users and items, such as clicks, browsing, and rating, rather than information about users or items. CF is widely used in many fields. For example, Zhihua Hu et al. embedded CF for online clothing and verified the effectiveness of this method using a test dataset from e-commerce platforms (

www.taobao.com) in China [

22]. Choi Il Young et al. generated online footwear commercial video recommendations based on changes in user’s facial expression captured every moment based on CF [

23]. Bach Ngo Xuan et al. proposed an efficient collaborative filtering method that exploits co-commenting patterns of users to generate recommendations for news services, and this method exhibited high accuracy [

24]. CF can effectively discover the potential interests of users and recommend new items. However, CF has a cold start problem. The lack of basic information of new users would lead to low-quality recommendations, and a limited number of users make it difficult to recommend new data.

2.2. Hybrid Recommendation Systems

These recommendation algorithms have their own unique advantages and limitations. The hybrid recommendation systems were proposed to optimize the algorithms and address the limitations by combining two or more recommendation algorithms or introducing other algorithms. According to different targets, several hybridization methods are used to combine a variety of algorithms, such as weighted, switching, mixed, feature combination, cascade, feature augmentation, and meta-level [

25]. The weighted hybrid recommendation systems calculate recommendation algorithms and weighted their results. The switching hybrid recommendation systems choose the appropriate algorithms to make a recommendation based on certain pre-defined criteria, the mixed hybrid recommendation systems will present all commendation results of mixed\algorithms, the feature combination hybrid make commendations with features from different recommendation algorithms, the cascade hybrid using one recommendation algorithm to refine another recommendation result, the feature augmentation recommendation takes one recommendation result as an input feature to another, and the meta-level recommendation system integrates one recommendation algorithm to another algorithm. The most common hybrid recommendation system is to combine CF and CBF. Bozanta Aysun et al. integrated CBF and CF together with contextual information in order to get rid of the disadvantages of each approach, and the experimental evaluation showed improvement [

26]. Geetha G et al. provided more precise recommendations concerning movies to a new user and other existing users by hybrid filtering that provided a combination of the results of CBF and CF [

27].

Hybrid recommendation systems can optimize recommendation results by effectively making use of additional data information and other technologies. Fang Q et al. proposed a spatial-temporal context-aware personalized location recommendation system based on personal interest, local preference, and spatial-temporal context influence [

28]. As one of the advancing researches of the world, the neural network has been applied in many fields [

29,

30,

31,

32], many scholars also introduced neural network into the recommendation system. Yang Han et al. using the convolutional neural network algorithm to predict the cause and time of recurrence of cancer patients, designed an intelligent recommendation system of a cancer rehabilitation scheme [

33]. Libo Zhang et al. proposed a model combining a collaborative filtering recommendation algorithm with deep learning technology to further improve the quality of the recommendation results [

34] T.H. Roh et al. proposed a three-step CF recommendation model combing with self-organizing map (SOM) and case-based reasoning (CBR), and demonstrates the utility of this model through an open dataset of user preference [

35].

Many of the researchers have made much effort on hybrid recommendation systems and proposed many recommendation methods, but no previous systems fit all data recommendation scenarios.

2.3. Recommendation Evaluation Metrics

Effective evaluation of the recommendation system is critical. Depending on the recommendation task, the most commonly used recommendation quality measures can be divided into three categories: (a) Quality of the predictions, such as mean absolute error and mean squared error. (b) Quality of recommendations, such as precision, recall, average reciprocal hit rank (ARHR). (c) Quality of the list of recommendations, such as half-life utility (HL), mean reciprocal rank (MRR), mean average precision (MAP). (d) Other assessment indicators, such as intra list similarity (ILS), mean absolute shift (MAS) [

36].

Although the importance of freshness and unpredictability was proposed, there has been no systematic quantitative study of them. In this study, ARHR and ILS were adopted. ARHR is a very popular indicator to evaluate recommendation systems. It measures how strongly an item is recommended [

37,

38]. ILS measures the diversity of recommendation lists. If the items in the recommendation list are less similar and ILS is smaller, the more diverse the recommendation results will be [

39].

3. Methods

The proposed method was mainly divided into 2 steps: (1) Calculate the similarity between items; (2) Generate recommendation lists for users according to the similarity of items and users’ historical behaviors. For the marine dataset, the user’s interest in a dataset was calculated by the following formula:

where,

is the user’s interest in

dataset.

is a collection of the historical datasets that the user has used, and

.

is the value of the

th row and the

th column in the recommendation matrix.

is the user’s interest in

dataset. In practice,

can be set according to the user’s ratings or browsing time.

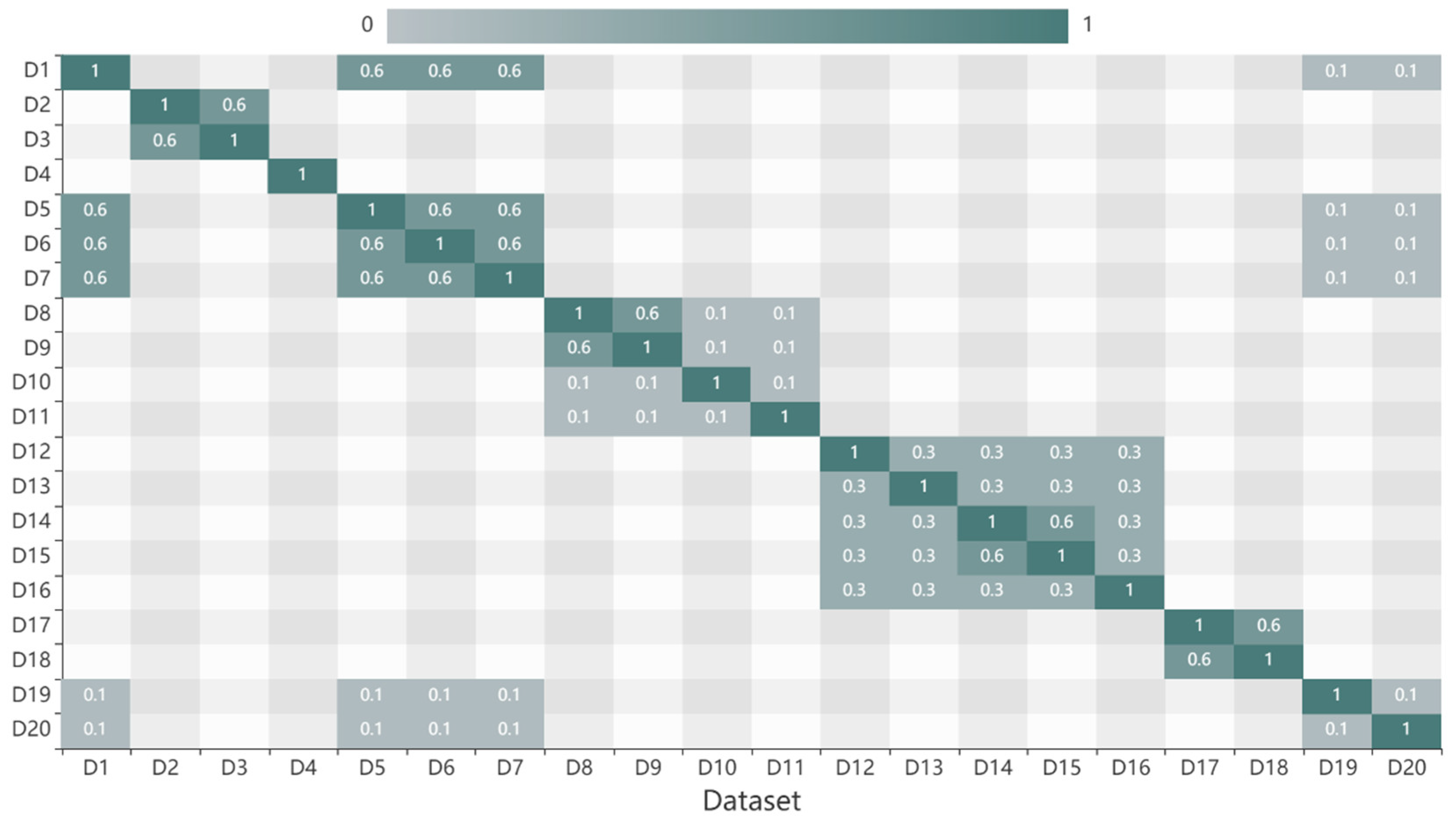

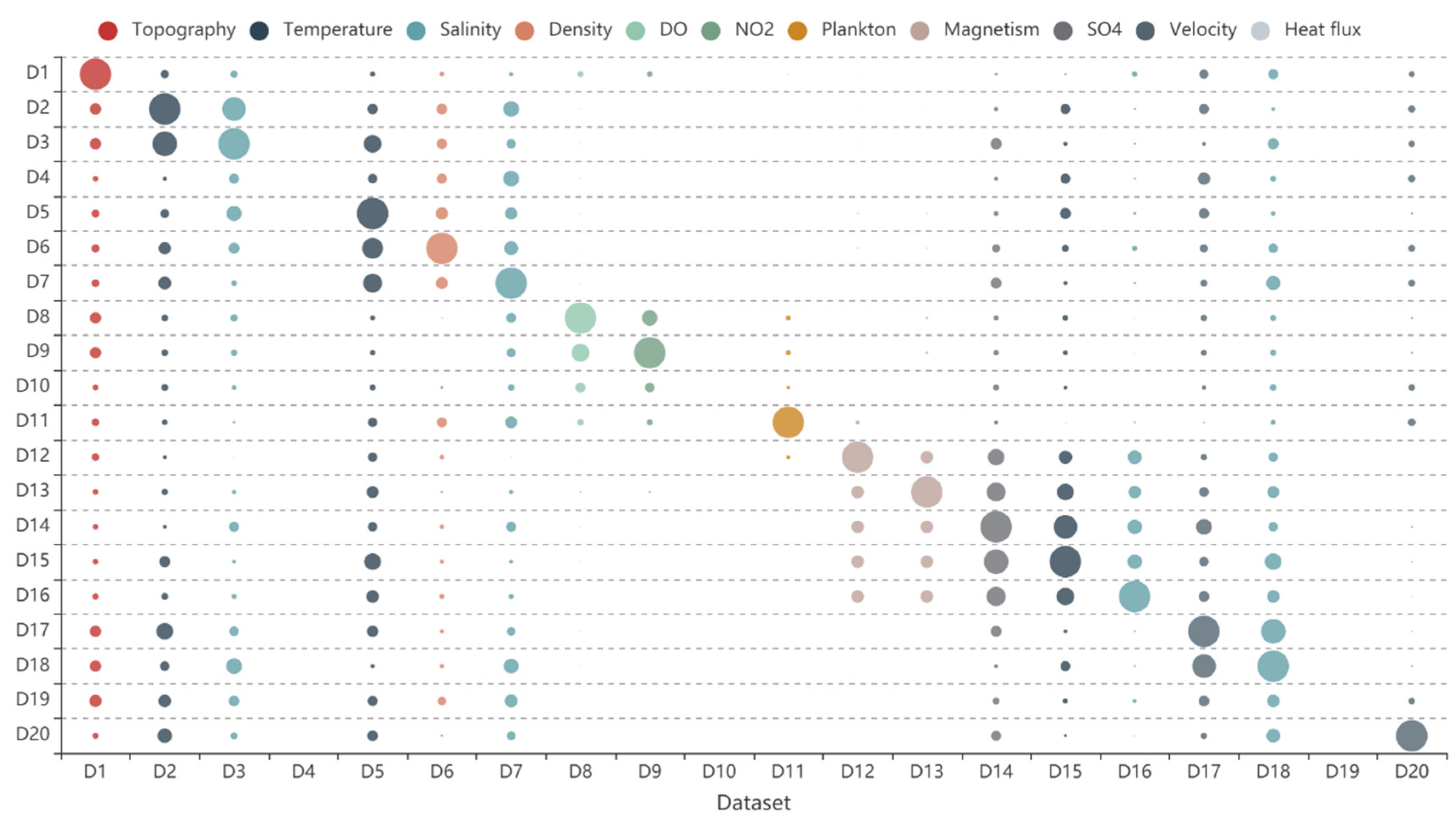

The proposed method was primarily used to calculate the recommendation matrix, which was the hardest and the most critical part of the recommendation system. The proposed method included the following 3 steps. First, the data similarity was calculated by analyzing the attributes of marine science data. Then, the data correlations between different marine science data were obtained by retrieving relevant literature from each category of marine science data. Finally, the data similarities and correlations were integrated to obtain the recommended matrix.

3.1. Data Similarity

Data similarity was defined based on 4 aspects of similarity, that was the subject similarity, source similarity, spatial similarity, and temporal similarity. Based on the 4 similarities, the weighted sum calculation model was used to calculate data similarity (

) as Equation (2).

Here, and are 2 datasets, is the subject similarity of the 2 datasets, is the source similarity, is the spatial similarity, and is the temporal similarity.

In the study, , , and were determined subjectively by expert scoring. Through consulting several experts in different fields, , , , and were set as 0.2, 0.2, 0.3, and 0.3, respectively.

3.1.1. Subject Similarity

Subject similarity refers to the similarity of data category. Generally, there are 4 basic branches of marine science: Marine physics, marine chemistry, marine geology, and marine biology [

40]. These branches have different research topics, such as marine meteorology, marine acoustics, marine electromagnetism, and marine optics, and different research topics contain different observation elements. The impact of the category was described by subject similarity. A category hierarchy tree was generated, and a parameter was assigned to each layer to represent the increasing hierarchy. As shown in

Figure 1, the category hierarchy tree was a 4-layer structure consisting of the branch, topic, element, and data. Here,

,

and

represented the parameters of each layer, separately. Because these parameters do not necessarily increase linearly as the depth of the category hierarchy tree increases in practical applications,

,

and

were different [

41].

According to the category hierarchy tree, the subject similarity was calculated based on the parameters from the first layer to the common layer of 2 marine data, as shown in Formula (3).

Here,

is the sum of the parameters from the first layer to the common layer of 2 marine data. Using

Figure 1 as an example, the common layer of data

and

is the second layer, which represents a topic layer. Thus,

is the sum of the parameters of the 1st layer and the 2nd layer, namely,

.

3.1.2. Source Similarity

With the development of ocean technology, the increasing diversity of oceanographic instruments has led to a greater diversity of data sources with different spatial and temporal dimension properties. Thus, the similarity of data sources was crucial. The source hierarchy tree can be divided into 5 layers: (a) Observation system, including space-based systems, shore-based systems, air-based systems, sea-based systems, ship-based systems, seabed-based systems, and reanalysis systems, with new data obtained by multidata fusion and analysis; (b) observation means, including mooring buoys, submariners buoys, voyage, stations, and numerical simulation; (c) instrument mounted on observation means, such as acoustic doppler current profiler (ADCP), conductivity-temperature-depth system (CTD), and ocean color imager; (d) specific instrument number; (e) Datasets. For example, the space-based observation method included the Seastar satellite, OceanSat series satellites, and HY-1 satellites. Sea- and seabed-based observation methods included several CTDs, ADCPs, and other different in situ observation instruments [

42]. The source hierarchy tree was constructed, as shown in

Figure 2.

According to the source hierarchy tree, the source similarity was calculated based on parameters for each marine dataset, as shown in Formula (4).

Here,

is the parameter of layer

, and

is the sum of the parameters from the 1st layer to the common layer of 2 marine datasets. Using

Figure 2 as an example,

is the sum of

,

,and

.

3.1.3. Spatial Similarity

To calculate spatial similarity, the compatibility of the spatial scale and the overlap of the spatial range were analyzed. Based on the geometric type, marine data can be divided into a point, line, polygon, and three-dimensional(3D) volume, which was the most striking feature of marine scientific data. When calculating the spatial similarity, the compatibility of the space scale was the primary consideration factor. The spatial similarity of the 2 datasets were unidirectional. For instance, data and were 2 marine scientific datasets, data is line data, and data is point data. For data , the similarity to data is 0. For data , the similarity to data is calculated by the distance between these datasets.

- (1)

Data

is point data:

The spatial similarity was calculated by analyzing the distance between two data points. Argo floats data were the most commonly used point marine data, and the Argo floats were fixed by satellites when they were drifting on the surface with errors of 150 m to 1000 m [

43]. Thus, in this study, the similarity was considered as 0 if the distance between 2 data points was greater than 1 km. Thus, the spatial similarity was calculated using the following formula:

where

is the distance of data

and

, and the unit is km.

- (2)

Data

is line data:

For line data, the similarity was calculated by the overlap length as shown in Formula (6). If data

is point data, the similarity is 0.

Here, is the overlap length of data and , and the unit is km.

- (3)

Data

is polygon data:

For polygon data, the similarity was calculated based on the overlap area (Formula (7)). Considering the compatibility of space scale, data

must be polygon data or 3D volume. Otherwise, the similarity is 0.

Here, is the overlap area of data and . is the area of data .

- (4)

Data

is 3D volume data:

To compute spatial similarity of 3D volume data, it is essential to ensure that data

is also 3D volume data; otherwise, the similarity is 0. The similarity is calculated based on the overlap volume using Formula (8).

Here, is the overlap volume

of data and . is the volume of data .

3.1.4. Temporal Similarity

Similar to the calculation of spatial similarity, the calculation of temporal similarity also needs to consider scale and range. The scale of the data generally includes seconds, minutes, hours, days, decades, months, and years, corresponding to levels 1~7. Before the temporal similarity calculation, it is necessary to judge whether the time scales are the same or compatible. For example, the annual data can be calculated by monthly data, but monthly data cannot be obtained from annual data, thus the similarity of annual data for monthly data is 0. The temporal similarity can be expressed, as noted in Formula (9).

3.2. Data Correlation

To ensure the diversity of the recommendation data, data correlation was added to the proposed method. In interdisciplinary studies, data with less content similarities were often used together. In this study, the published papers were used to reflect the use of marine science data comprehensively. A large number of relevant studies were retrieved using the authoritative literature database, and data were applied and correlated based on statistical analysis. A co-occurrence matrix was used to describe the correlation between data, and data correlation was assessed using Formula (10) [

44,

45].

Here, and are the number of literatures for data and , respectively. and are literature of data and , respectively. is the number of the same literature of data and .

3.3. Data Recommendation Model

In this study, the data recommendation matrix is a result of the joint action of data similarity and data correlation and was calculated by the weighted average method. The weights of data similarity and data correlation were set by their contribution. The number of relevant studies will affect the weight of data correlation in data recommendation. A limited number of studies will lead to low reliability of data association. In this case, the data similarity played an important role in the data recommendation model. Thus, the weight of data correlation varies with the number of relevant literature. In this case, it was assumed that the data similarity and data correlation have the same contribution to the data recommendation model. The data recommendation matrix is constructed as follows:

where,

is the data similarity of data

for data

,

is the data correlation between data

and data

.

is used to build a recommendation matrix, and the data recommendation matrix can be represented by formula (12).

Here, are all datasets.

Based on the data recommendation matrix and user’s historical data, the recommendation system generates a recommendation list for the user, as shown in

Figure 3.

3.4. Evaluation Metrics

Referring to the calculation method of average reciprocal hit rank (ARHR), the distribution of the same element (DE) was used to evaluate the distribution of the datasets, which belonged to the same element. DE was used to describe the sensitivity of the data sequence. The formula is noted as follows:

where for dataset

,

is the

th row in recommendation matrix.

is the position of the dataset

within list

L,

is the

th dataset in

,

belongs to the same element as dataset

.

ILS was used to reveal the diversity of the recommended list. A smaller ILS indicates increased diversity of recommended results. The formula is noted as follows:

where,

is the data similarity of data

for data

,

is the

th row in recommendation matrix. Obviously, the lower values of DE and ILS indicate better performance of the model.

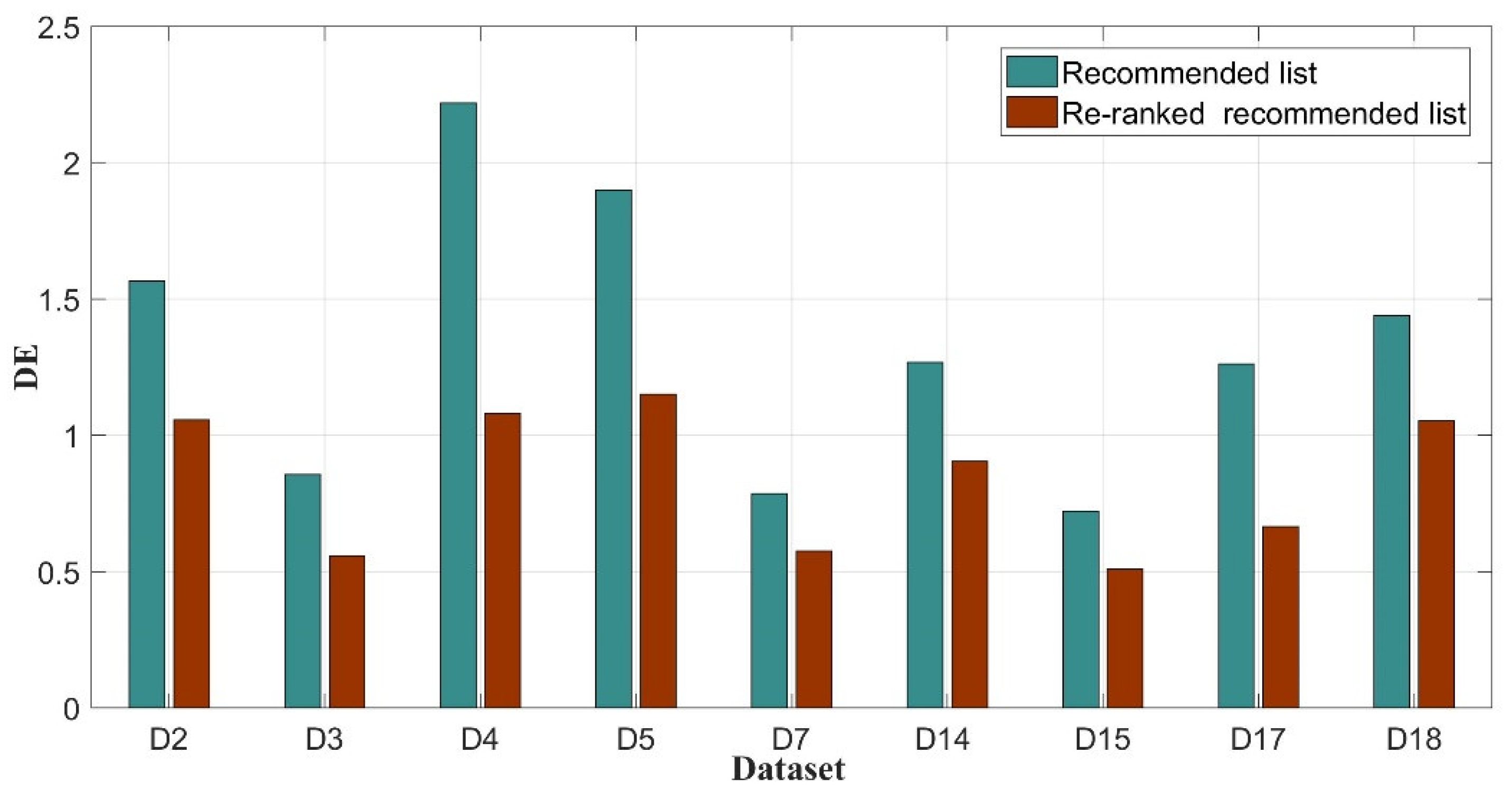

5. Discussion

Based on the data similarity and data correlation, the data recommendation results before and after adding data correlations were obtained. The elements of temperature and salinity included multiple datasets, thus elements E1 and E2 were used as examples. In the recommended list for D2, which is a temperature dataset, the top 10 recommendation results included D4, D5, D14, and D18 temperature datasets. However, in the re-ranked recommended list for D2, only D4 and D5 were included in the list of top 10 results. Instead of D14 and D18, D15 and D17 salinity datasets were included in the top 10. The recommended list for other datasets that belonged to the same element exhibited the same sorted results.

To describe this distribution and diversity of recommended results, DE and ILS were used to evaluate the recommended results.

Figure 10 presents the DE results of several datasets in the recommended list and re-ranked the recommended list. DEs of the dataset in the recommended list are increased compared with those in the re-ranked recommended list. DEs of D4, D5, and D17 in two recommended list have obvious differences. DE of D4 in the recommended list is 2.22. In contrast, D4 in the re-ranked recommended list has a DE of 1.08. For D5, DE in the recommended list is 1.9, whereas DE in the re-ranked recommended list is 1.15. DE of D17 in the recommended list is 1.26, and DE of D17 in the re-ranked recommended list is 0.67. Although DEs of other datasets exhibit relatively small differences, the smallest difference was 0.21, which was noted as the DE difference of D7 and D15. The average DE of several datasets in the recommended list is 1.34, and the average DE in the re-ranked recommended list is 0.84. In short, the distribution of datasets in the re-ranked recommended list is superior to that in the recommended list.

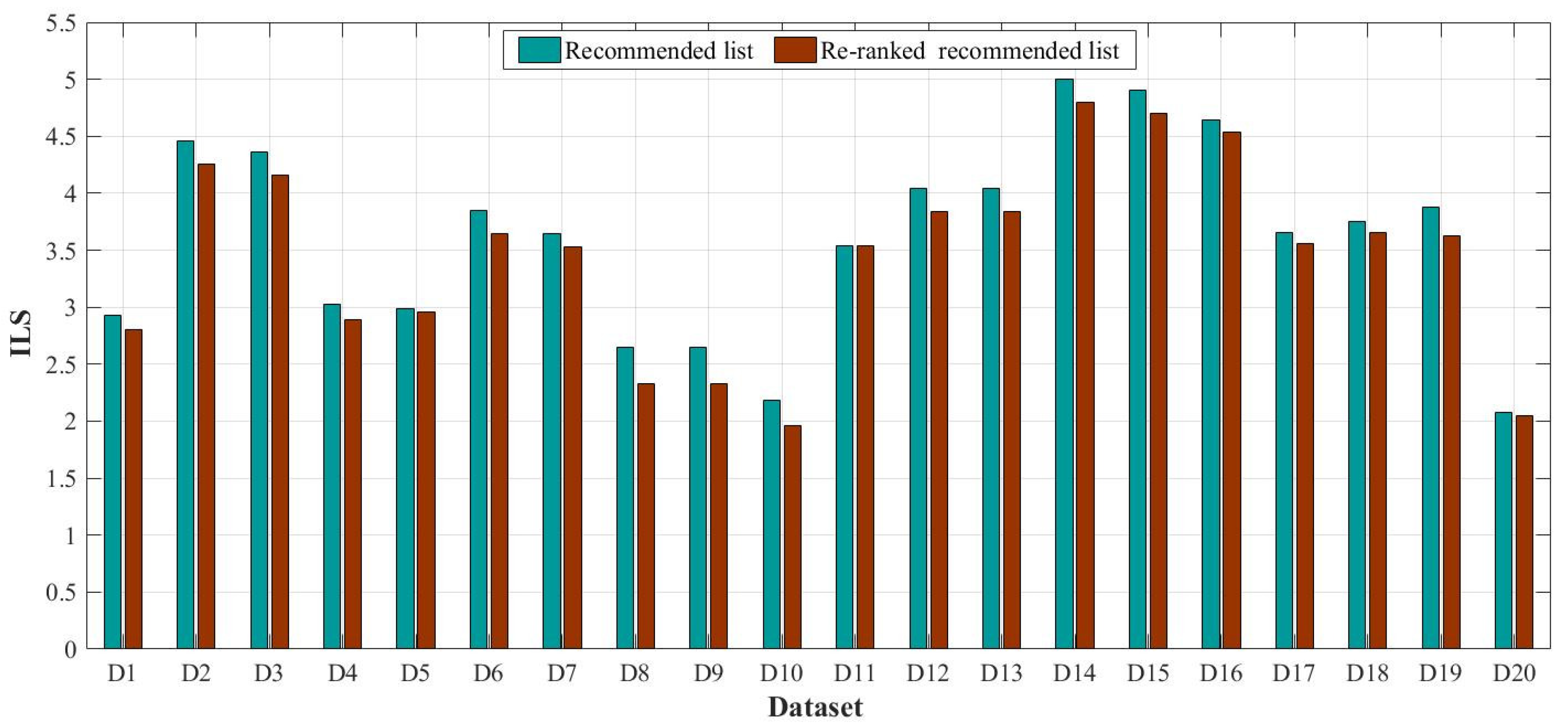

In this study, the top 10 datasets in the two recommended lists are considered as valid recommendation datasets. Thus, based on the top 10 datasets, ILS was calculated, and the result is shown in

Figure 11 below. ILSs of all datasets in the recommended list is greater than ILSs in the re-ranked recommended list. ILSs with the greatest differences include ILSs of D8 and D9, and the value is 0.32. ILSs of D5, D11, and D20 in the two recommended lists exhibit minimal differences, and the value is approximately 0.01. On the whole, the average of ILSs of all datasets in the recommended list is 3.61, whereas the average in the re-ranked recommended list is 3.45.

6. Conclusions

In this study, the recommendation system is proposed for marine science observation data based on data similarity and data correlation. According to our method, the dataset recommendation list was obtained using data similarity. Then, the dataset recommendation list was ranked by data correlation to obtain a re-ranked dataset recommended list. We conducted experiments on 20 simulated datasets, and DE and ILS were used to evaluate the effectiveness. The result shows that the average DE of several datasets belonging to two elements in the recommended list is 1.34, and the average DE in the re-ranked recommended list is 0.84. The distribution of datasets in the re-ranked recommended list is superior to that in the recommended list. The average of ILSs of all datasets in the recommended list is 3.61, whereas the average in the re-ranked recommended list is 3.45. The diversity of the recommended list is greater than that of the re-ranked recommended list. In summary, the proposed method exhibits better effectiveness.

The recommendation system we proposed combined the data attributes and data applications, and we tested the recommendation system with simulated datasets from three marine data sharing websites. Therefore, a next step would be to expand the experiments with more comprehensive data from several other marine data sharing websites, such as the national oceanic and atmospheric administration (NOAA), Ocean Networks Canada (ONC), and Monterey Bay Aquarium Research Institute (MBARI). In addition, this method can be applied to a wide range of science data scenarios similar to marine data, and it is well-suited to the data sharing websites that lack users or lack a user rating system.