An Agave Counting Methodology Based on Mathematical Morphology and Images Acquired through Unmanned Aerial Vehicles

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

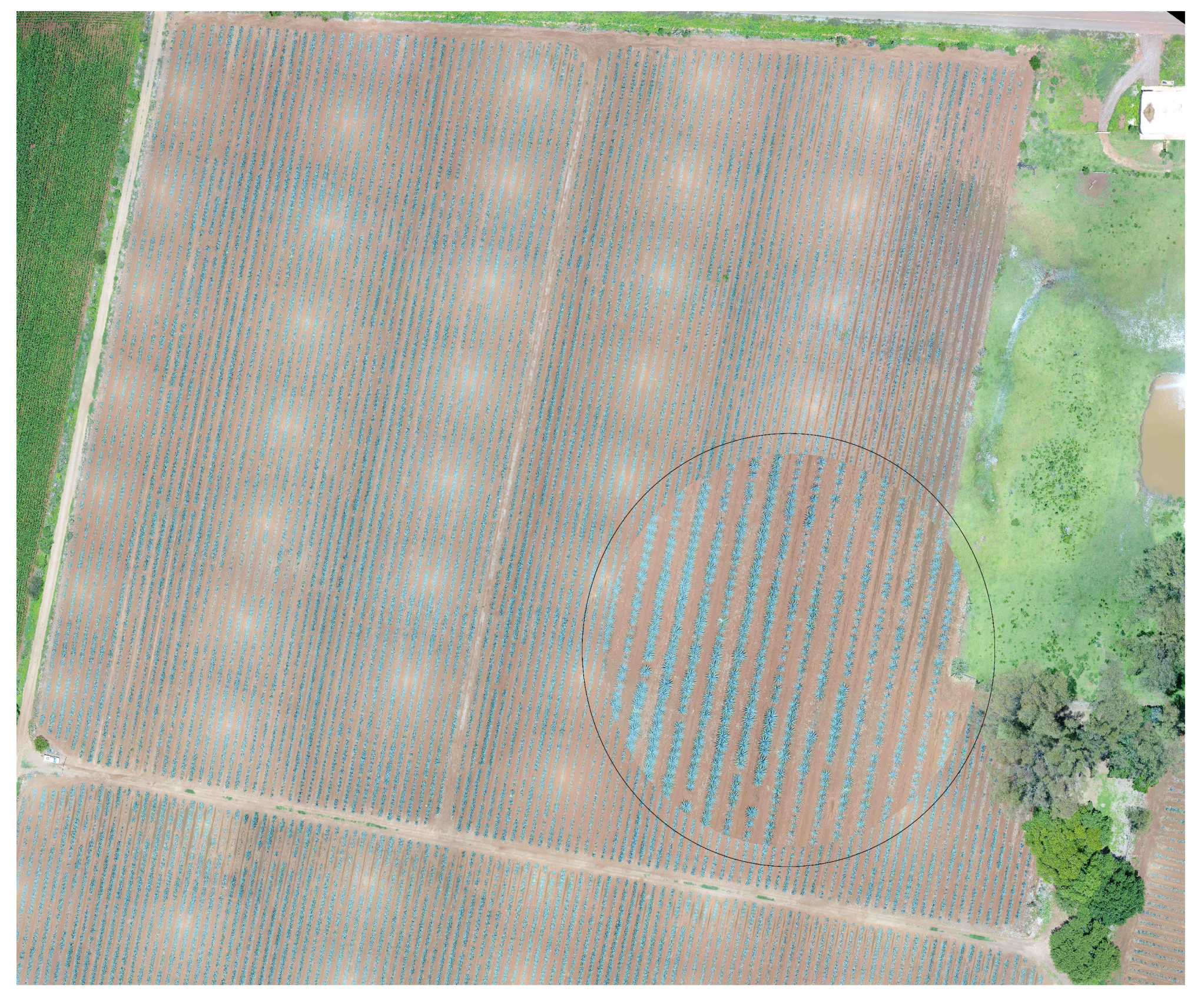

3.1. Study Areas

3.2. Workflow

3.3. Implementation of Mathematical Morphology Operations for Counting Agave Plants

- Preprocessing: The main aim of this step is to obtain a binary image as clean as possible so that we can achieve a good object separation in the next step. For this purpose, we carry out the following steps:

- Secondly, we binarize the previous image I according to the following equation:

- After the previous step, the binary image is typically noisy, with isolated points and holes. We remove these artifacts to reduce false positives and false negatives during the counting process. Several image processing techniques could be used for this step; in this work, we use morphological filters, namely the clean and fill filters. The final preprocessed image is shown in Figure 9. Note that, in this case, isolated points and holes are removed; compare the images in Figure 9c,d with the images in Figure 9e,f, respectively.

- Object separation: The main objective of this step is to eliminate the overlap between regions corresponding to different agave plants. Removing the overlap completely is very hard, so in practice this step only reduces the problem. Morphological operators are efficient image-processing transformations that attenuate the overlapping problem. Hence, in this step we apply a set of morphological opening and morphological closing operations [8,39]. One advantage of mathematical morphology (MM) is that it can detect structures of different sizes and shapes. In the proposed algorithm, the rounded shape and the diameter are the features that define the patterns of agave plants to be counted. Following the recommendation of the Tequila Council in Jalisco, Mexico, we need to preserve all patterns corresponding to each agave plant and its shoots. Therefore, the order in which the morphological operators are applied to the images matters. For this reason, morphological opening is carried out first, i.e., an erosion followed by a dilation with the same structuring element B, where ⊖ and ⊕ denote morphological erosion and dilation, respectively [8,39]. In our case, B is a square structuring element.

- Counting: The counting process is an iterative procedure that combines the extraction of connected components with morphological operators. The stages of this procedure are described below:

- 1: Extracting all connected components.

- 2: Separating small connected components from larger ones through a threshold on the equivalent diameter, i.e., the diameter of a circle that has the same area as the connected component under consideration. Thresholding yields two images, one containing the small components and one containing the larger ones. The threshold was fixed through experiments and is given in pixel units.

- 3: Morphological erosion of the image of larger components using a diamond-shaped structuring element of size 2, according to Equation (5). The purpose of the erosion filter is to separate, as much as possible in each iteration, the patterns corresponding to each individual plant. The erosion filter attenuates the overlap problem.

- 4: Finding all the connected components in the eroded image. If any connected component remains, repeat stages 1 to 4; otherwise, go to stage 5.

- 5: Counting all connected components saved in the image of small components; see Figure 12.
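The five stages above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the MATLAB implementation used in this work: the function names are hypothetical, 8-connectivity is assumed, "size 2" is read as a diamond of radius 2, pixels outside the image are treated as background, and small components are tallied as soon as they are found rather than stored in a separate image and counted at the end.

```python
import math
from collections import deque

# Diamond structuring element of radius 2 (assumed reading of "size 2"):
# every offset (dr, dc) with |dr| + |dc| <= 2.
DIAMOND_2 = [(dr, dc) for dr in range(-2, 3) for dc in range(-2, 3)
             if abs(dr) + abs(dc) <= 2]


def connected_components(img):
    """Return the foreground components of a binary image (list of lists),
    each as a list of (row, col) pixels. 8-connectivity is an assumption."""
    rows, cols = len(img), len(img[0])
    seen = [[False] * cols for _ in range(rows)]
    comps = []
    for r in range(rows):
        for c in range(cols):
            if img[r][c] and not seen[r][c]:
                seen[r][c] = True
                queue, comp = deque([(r, c)]), []
                while queue:  # breadth-first flood fill
                    y, x = queue.popleft()
                    comp.append((y, x))
                    for dy in (-1, 0, 1):
                        for dx in (-1, 0, 1):
                            ny, nx = y + dy, x + dx
                            if (0 <= ny < rows and 0 <= nx < cols
                                    and img[ny][nx] and not seen[ny][nx]):
                                seen[ny][nx] = True
                                queue.append((ny, nx))
                comps.append(comp)
    return comps


def erode(img, offsets):
    """Binary erosion: a pixel survives only if every offset neighbour is
    foreground. Pixels outside the image count as background."""
    rows, cols = len(img), len(img[0])
    return [[int(all(0 <= r + dr < rows and 0 <= c + dc < cols
                     and img[r + dr][c + dc] for dr, dc in offsets))
             for c in range(cols)] for r in range(rows)]


def equivalent_diameter(area):
    """Diameter of the circle whose area equals `area` (in pixels)."""
    return math.sqrt(4.0 * area / math.pi)


def count_plants(binary, threshold=13.0, offsets=DIAMOND_2):
    """Iteratively count components whose equivalent diameter is below
    `threshold`, eroding the larger ones until nothing is left."""
    rows, cols = len(binary), len(binary[0])
    current = [row[:] for row in binary]
    count = 0
    while True:
        comps = connected_components(current)            # stage 1 (and 4)
        if not comps:
            return count                                 # stage 5
        large = [[0] * cols for _ in range(rows)]
        for comp in comps:                               # stage 2
            if equivalent_diameter(len(comp)) < threshold:
                count += 1                               # small: one plant
            else:
                for y, x in comp:
                    large[y][x] = 1
        current = erode(large, offsets)                  # stage 3
```

The loop terminates because each erosion strictly shrinks every remaining component. One consequence of this scheme, visible in the sketch, is that a large but thin component can be eroded away entirely without ever being counted.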

The threshold value and the sizes of the structuring elements used in the mathematical morphology operations are the parameters of our proposal. They were tuned experimentally for a fixed flight altitude, using the segmentation results reported in [2]. According to our experiments, connected components with an equivalent diameter of less than 13 pixels are candidates for representing patterns of individual agave plants. For that reason, the threshold is set to 13 pixels. The proposed counting algorithm was written in MATLAB (R2016a).
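To make the threshold concrete, the equivalent-diameter definition from stage 2 can be rewritten as a bound on component area. The symbols $A$ (area of a connected component, in pixels) and $d_{\mathrm{eq}}$ (its equivalent diameter) are introduced here only for illustration.

```latex
d_{\mathrm{eq}} = \sqrt{\frac{4A}{\pi}},
\qquad
d_{\mathrm{eq}} < 13
\;\Longleftrightarrow\;
A < \frac{\pi \cdot 13^{2}}{4} \approx 132.7 \ \text{pixels}
```

Under this reading, connected components of at most 132 pixels are treated as candidates for individual plants, and anything larger goes on to the erosion stage.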

3.4. Evaluation Metrics

4. Results and Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Flores-Sahagun, T.H.; Dos Santos, L.P.; Dos Santos, J.; Mazzaro, I.; Mikowski, A. Characterization of blue agave bagasse fibers of Mexico. Compos. Part A Appl. Sci. Manuf. 2013, 45, 153–161.

2. Calvario, G.; Sierra, B.; Alarcon, T.; Hernandez, C.; Dalmau, O. A multi-disciplinary approach to remote sensing through low-cost uavs. Sensors 2017, 17, 1411.

3. Gnädinger, F.; Schmidhalter, U. Digital counts of maize plants by unmanned aerial vehicles (UAVs). Remote Sens. 2017, 9, 544.

4. Kestur, R.; Angural, A.; Bashir, B.; Omkar, S.N.; Anand, G.; Meenavathi, M.B. Tree crown detection, delineation and counting in uav remote sensed images: A neural network based spectral–spatial method. J. Indian Soc. Remote Sens. 2018, 46, 991–1004.

5. Fernandez-Gallego, J.A.; Kefauver, S.C.; Gutiérrez, N.A.; Nieto-Taladriz, M.T.; Araus, J.L. Wheat ear counting in-field conditions: High throughput and low-cost approach using RGB images. Plant Methods 2018, 14, 22.

6. Kattenborn, T.; Eichel, J.; Fassnacht, F.E. Convolutional Neural Networks enable efficient, accurate and fine-grained segmentation of plant species and communities from high-resolution UAV imagery. Sci. Rep. 2019, 9, 17656.

7. MacQueen, J. Some methods for classification and analysis of multivariate observations. In Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability; Volume 1: Statistics; University of California Press: Berkeley, CA, USA, 1967; pp. 281–297.

8. Dougherty, E. Mathematical Morphology in Image Processing; CRC Press: Boca Raton, FL, USA, 1992.

9. Jean, S.; Pierre, S. Mathematical Morphology and Its Applications to Image Processing; Springer: Amsterdam, The Netherlands, 1994.

10. Torres-Sánchez, J.; Peña, J.M.; Castro, A.I.D.; López-Granados, F. Multi-temporal mapping of the vegetation fraction in early-season wheat fields using images from UAV. Comput. Electron. Agric. 2014, 103, 104–113.

11. Kataoka, T.; Kaneko, T.; Okamoto, H.; Hata, S. Crop growth estimation system using machine vision. Int. Conf. Adv. Intell. Mechatron. 2003, 2, 1079–1083.

12. Woebbecke, D.; Meyer, G.; Von Bargen, K.; Mortensen, D. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.

13. Camargo Neto, J. A Combined Statistical-Soft Computing Approach for Classification and Mapping Weed Species in Minimum-Tillage Systems; ETD Collection for University of Nebraska: Lincoln, NE, USA, 2004; pp. 1–170.

14. Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.

15. Hague, T.; Tillett, N.; Wheeler, H. Automated crop and weed monitoring in widely spaced cereals. Precis. Agric. 2006, 7, 21–32.

16. Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cyber. 1979, 9, 62–69.

17. Torres-Sánchez, J.; López-Granados, F.; Peña, J.M. An automatic object-based method for optimal thresholding in UAV images: Application for vegetation detection in herbaceous crops. Comput. Electron. Agric. 2015, 114, 43–52.

18. Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

19. Guijarro, M.; Pajares, G.; Riomoros, I.; Herrera, P.; Burgos-Artizzu, X.; Ribeiro, A. Automatic segmentation of relevant textures in agricultural images. Comput. Electron. Agric. 2011, 75, 75–83.

20. Senthilnath, J.; Kandukuri, M.; Dokania, A.; Ramesh, K.N. Application of UAV imaging platform for vegetation analysis based on spectral-spatial methods. Comput. Electron. Agric. 2017, 140, 8–24.

21. Schwarz, G. Estimating the Dimension of a Model. Ann. Stat. 1978, 6, 461–464.

22. Dempster, A.; Laird, N.; Rubin, D. Maximum likelihood from incomplete data via the EM algorithm. Statist. Soc. Ser. B (Methodol.) 1977, 39, 1–22.

23. Wan, L.; Li, Y.; Cen, H.; Zhu, J.; Yin, W.; Wu, W.; Zhu, H.; Sun, D.; Zhou, W.; He, Y. Combining UAV-based vegetation indices and image classification to estimate flower number in oilseed rape. Remote Sens. 2018, 10, 1484.

24. Verrelst, J.; Schaepman, M.; Koetz, B.; Kneubuehler, M. Angular sensitivity analysis of vegetation indices derived from chris/proba data. Remote Sens. Environ. 2008, 112, 2341–2353.

25. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining uav-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. 2015, 39, 79–87.

26. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

27. Bertrand, G. On topological watersheds. J. Math. Imaging Vis. 2005, 22, 217–230.

28. Varghese, J.; Subash, S.; Tairan, N.; Babu, B. Laplacian-Based Frequency Domain Filter for the Restoration of Digital Images Corrupted by Periodic Noise. Can. J. Electr. Comput. Eng. 2016, 39, 82–91.

29. Schneider, C.A.; Rasband, W.S.; Eliceiri, K.W. NIH Image to ImageJ: 25 years of image analysis. Nat. Methods 2012, 9, 671–675.

30. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

31. Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

32. Oliveira, H.C.; Guizilini, V.C.; Nunes, I.P.; Souza, J.R. Failure Detection in Row Crops From UAV Images Using Morphological Operators. IEEE Geosci. Remote Sens. Lett. 2018, 15, 991–995.

33. Kitano, B.T.; Mendes, C.C.; Geus, A.R.; Oliveira, H.C.; Souza, J.R. Corn Plant Counting Using Deep Learning and UAV Images. IEEE Geosci. Remote. Sens. Lett. 2019, 1–5.

34. Fan, Z.; Lu, J.; Gong, M.; Xie, H.; Goodman, E.D. Automatic tobacco plant detection in UAV images via deep neural networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 876–887.

35. Ghosh, S.; Das, N.; Das, I.; Maulik, U. Understanding Deep Learning Techniques for Image Segmentation. ACM Comput. Surv. 2019, 52, 73–107.

36. Josefina, L.U.P. Evaluación de diferentes dosis de fertilización (npk) en el agave tequilero (agave tequilana weber). Ph.D. Thesis, Universidad de Guadalajara, Facultad de Agronomía, Zapopan, Jalisco, Mexico, 1987.

37. Bueno, J.X.U.; Gutiérrez, C.V.; Figueroa, A.R. Muestreo y Análisis de Suelo en Plantaciones de Agave. In Conocimiento y Prácticas Agronómicas para la Producción de Agave Tequilana Weber en la Zona de Denominación de Origen del Tequila; Centro de Investigación Regional del Pacífico Centro, Campo Experimental Centro-Altos: Tepatitlán de Morelos, Jalisco, Mexico, 2007; p. 37.

38. DJI. Available online: http://www.dji.com/mx/phantom-4 (accessed on 1 July 2020).

39. Serra, J. Morphological filtering: An overview. Signal Process. 1994, 38, 3–11.

40. Lavrač, N.; Flach, P.; Zupan, B. Rule Evaluation Measures: A Unifying View. In Inductive Logic Programming; Džeroski, S., Flach, P., Eds.; Springer: Berlin/Heidelberg, Germany, 1999; pp. 174–185.

41. Cohen, J. A coefficient of agreement for nominal scales. Educ. Psychol. Meas. 1960, 20, 37–46.

42. Bazi, Y.; Malek, S.; Alajlan, N.; AlHichri, H. An automatic approach for palm tree counting in UAV images. In Proceedings of the 2014 IEEE Geoscience and Remote Sensing Symposium, Québec City, QC, Canada, 13–18 July 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 537–540.

43. Moranduzzo, T.; Melgani, F. Automatic car counting method for unmanned aerial vehicle images. IEEE Trans. Geosci. Remote Sens. 2013, 52, 1635–1647.

44. Dufour, J.M.; Neves, J. Chapter 1—Finite-sample inference and nonstandard asymptotics with Monte Carlo tests and R. In Conceptual Econometrics Using R; Vinod, H.D., Rao, C., Eds.; Elsevier: Amsterdam, The Netherlands, 2019; Volume 41, pp. 3–31.

45. Larochelle, H.; Erhan, D.; Courville, A.; Bergstra, J.; Bengio, Y. An empirical evaluation of deep architectures on problems with many factors of variation. In ICML; Association for Computing Machinery: New York, NY, USA, 2007.

46. Hinton, G.E. A Practical Guide to Training Restricted Boltzmann Machines. In Neural Networks: Tricks of the Trade, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 599–619.

47. Chung Chang, C.; Jen Lin, C. LIBSVM: A Library for Support Vector Machines. 2001. Available online: http://www.csie.ntu.edu.tw/~cjlin/libsvm (accessed on 30 June 2020).

48. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

49. Calvario, G.; Sierra, B.; Martinez, J.; Monter, E. Un Enfoque Multidisciplinario de Sensado Remoto a Través de UAV de Bajo Costo. In I Simposio de Aplicaciones Científicas y Técnicas de los Vehículos no Tripulados; Universidad Nacional Autonoma de Mexico, (UNAM): Mexico City, Mexico, 2017; pp. 24–32.

| Field | Geographical Position | Age | Area (ha) |
|---|---|---|---|
| 1 | 32.22 N, 10.06 W | 2–4 years | 2.494 |
| 2 | 34.49 N, 30.14 W | 4 years | 0.4293 |
| 3 | 05.69 N, 06.23 W | 4 years | 4.018 |

| S | TP | FN | FP | GT | Pacc | Uacc | Recall | Acc |
|---|---|---|---|---|---|---|---|---|
| Field 1-Blue | 198 | 25 | 1 | 223 | 0.8879 | 0.9950 | 0.8879 | 0.9414 |
| Field 1-Purple | 336 | 33 | 9 | 369 | 0.9106 | 0.9739 | 0.9106 | 0.9422 |
| Field 1-Red | 344 | 70 | 11 | 414 | 0.8309 | 0.9690 | 0.8309 | 0.9000 |
| Field 2-Blue | 326 | 60 | 25 | 386 | 0.8446 | 0.9288 | 0.8446 | 0.8867 |
| Field 2-Purple | 99 | 6 | 13 | 105 | 0.9429 | 0.8839 | 0.9429 | 0.9134 |
| Field 2-Red | 177 | 8 | 7 | 185 | 0.9568 | 0.9620 | 0.9568 | 0.9594 |
| Field 3-Blue | 101 | 2 | 10 | 103 | 0.9806 | 0.9099 | 0.9806 | 0.9452 |
| Field 3-Purple | 192 | 7 | 25 | 199 | 0.9648 | 0.8848 | 0.9648 | 0.9248 |
| Field 3-Red | 78 | 5 | 15 | 83 | 0.9398 | 0.8387 | 0.9398 | 0.8892 |
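The Pacc, Uacc and Acc columns can be reproduced from the TP, FN and FP counts. The sketch below is a reading inferred from the tabulated values rather than a quotation of the metric definitions: it assumes Pacc = TP / (TP + FN), with GT = TP + FN, Uacc = TP / (TP + FP), and Acc equal to the mean of the two. The function name and the "producer"/"user" variable names are hypothetical.

```python
def counting_metrics(tp, fn, fp):
    """Return (Pacc, Uacc, Acc) from detection counts.

    Formulas are inferred from the results table, not taken from the
    original definitions (assumption).
    """
    if tp + fn == 0 or tp + fp == 0:
        raise ValueError("TP + FN and TP + FP must both be positive")
    producer_acc = tp / (tp + fn)  # Pacc: share of ground-truth plants found
    user_acc = tp / (tp + fp)      # Uacc: share of detections that are plants
    acc = (producer_acc + user_acc) / 2.0
    return producer_acc, user_acc, acc


# Field 1-Blue row: TP = 198, FN = 25, FP = 1.
pacc, uacc, acc = counting_metrics(198, 25, 1)
print(f"{pacc:.4f} {uacc:.4f} {acc:.4f}")  # prints 0.8879 0.9950 0.9414
```

Pacc as computed here coincides with the usual definition of recall, TP / (TP + FN).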

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Calvario, G.; Alarcón, T.E.; Dalmau, O.; Sierra, B.; Hernandez, C. An Agave Counting Methodology Based on Mathematical Morphology and Images Acquired through Unmanned Aerial Vehicles. Sensors 2020, 20, 6247. https://doi.org/10.3390/s20216247
