Recognition and Grasping of Disorderly Stacked Wood Planks Using a Local Image Patch and Point Pair Feature Method

Abstract

1. Introduction

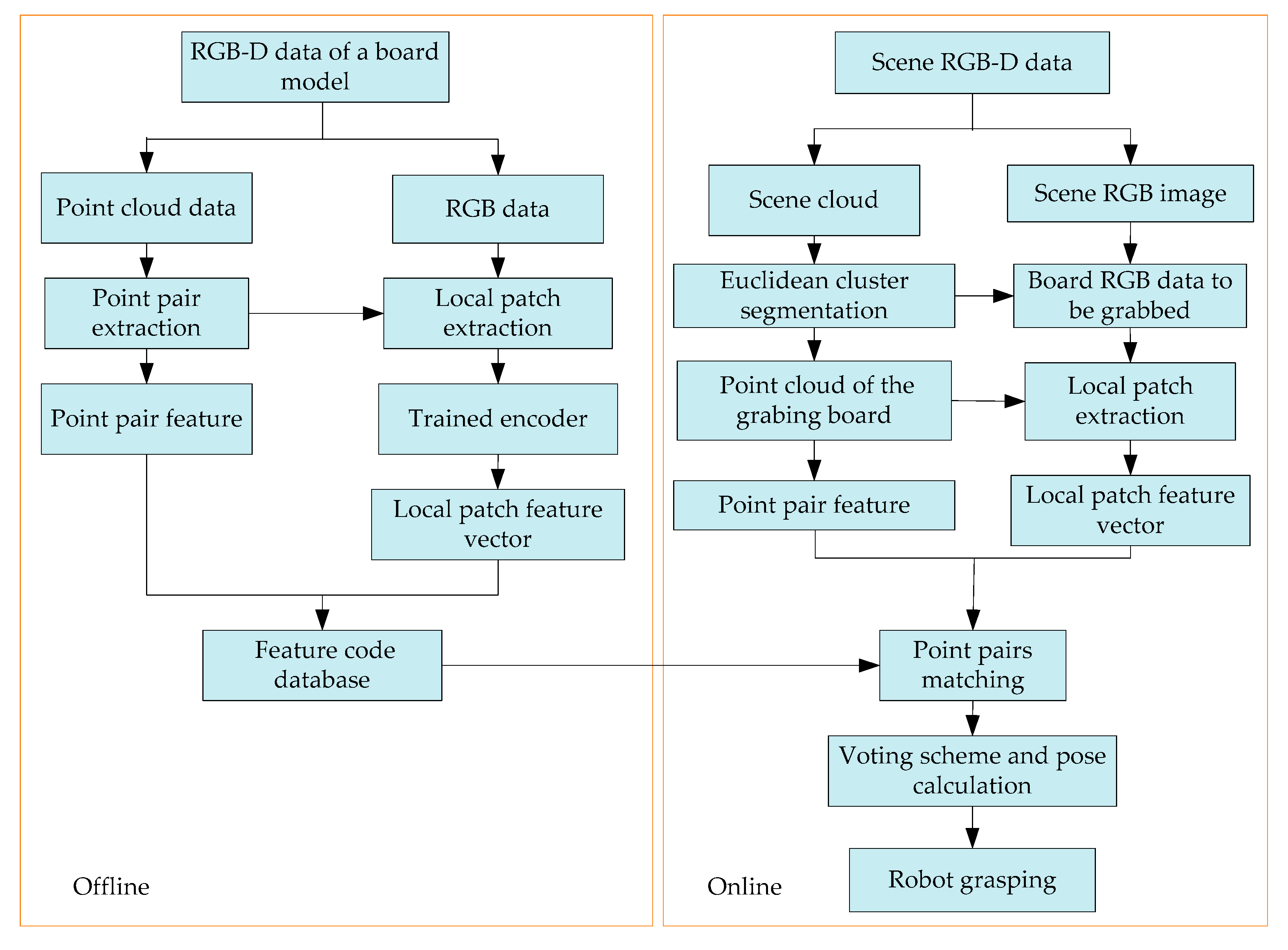

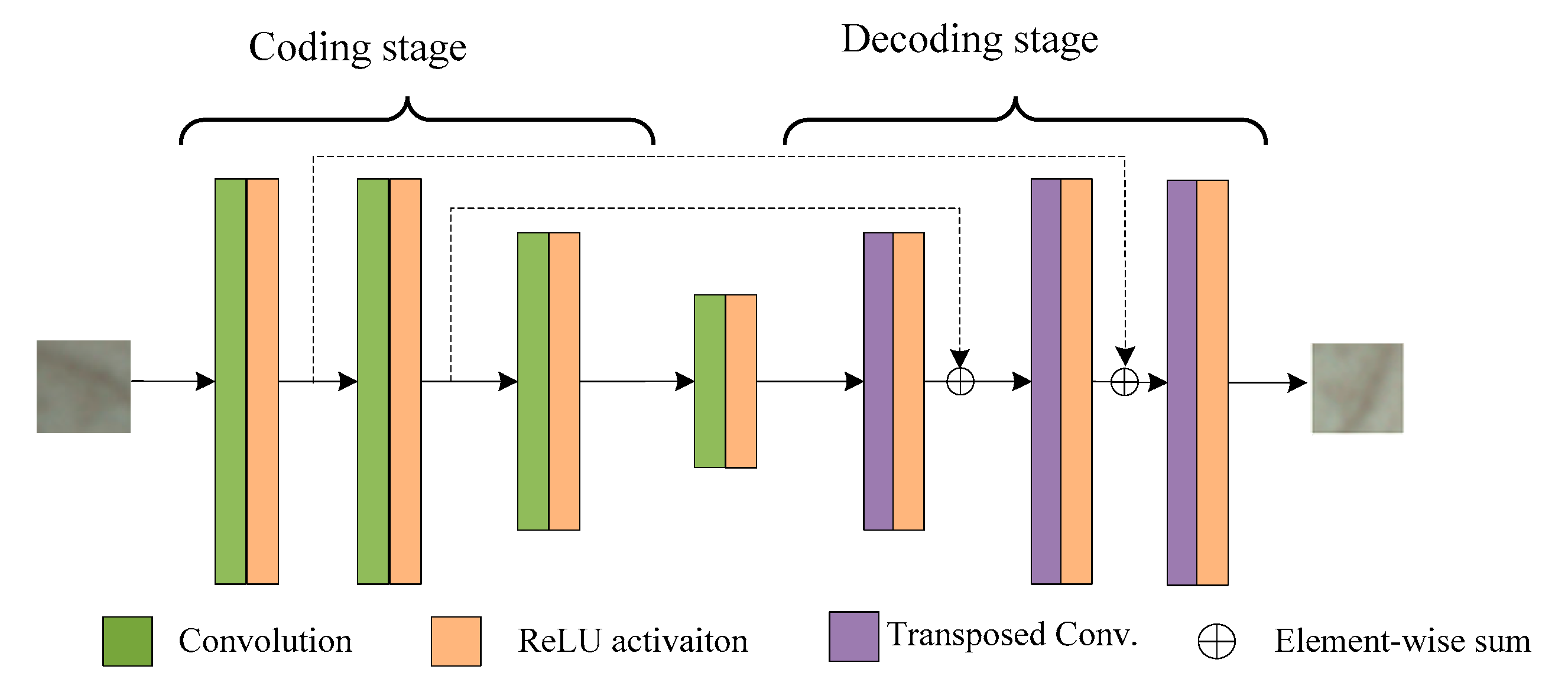

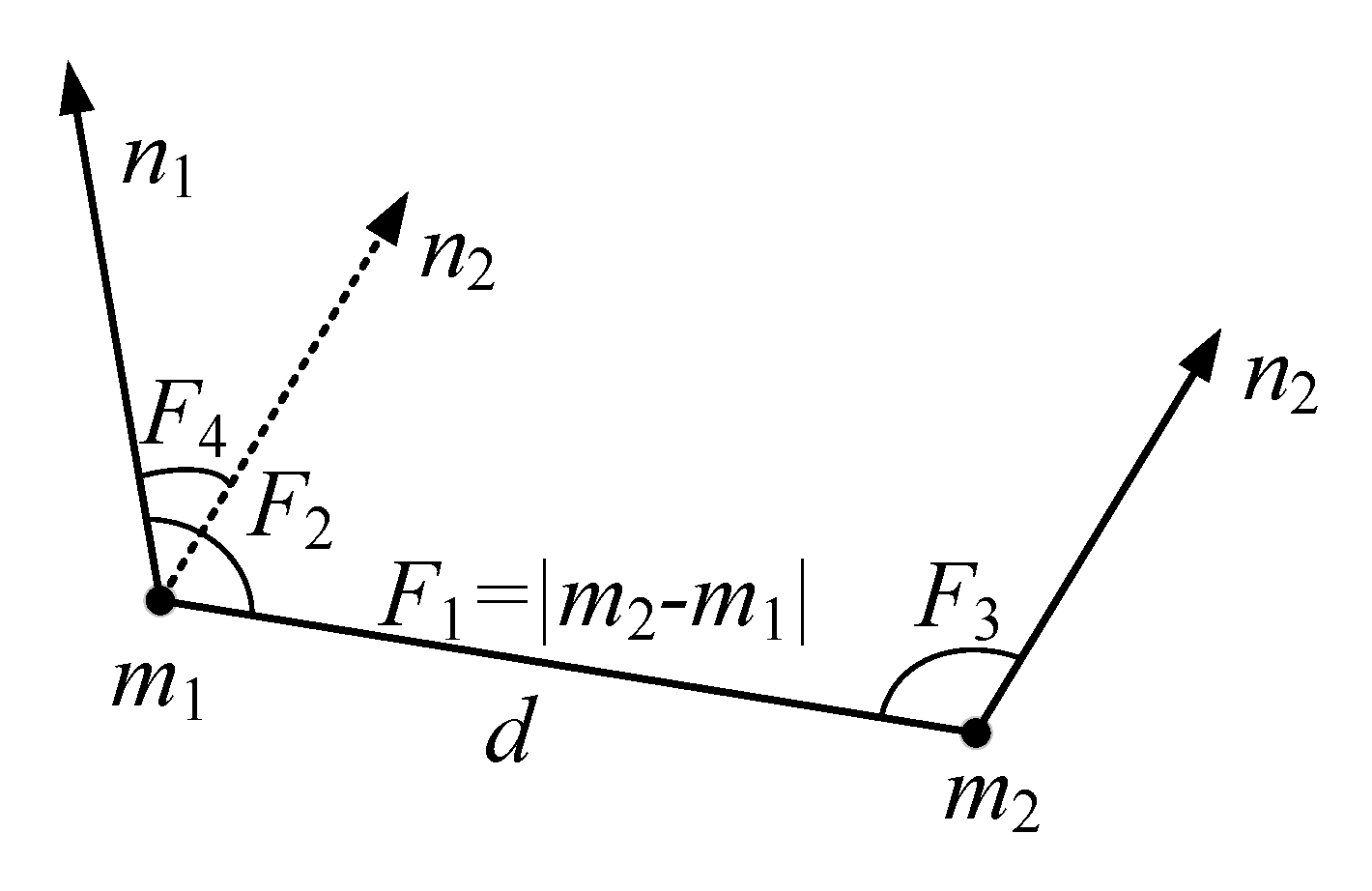

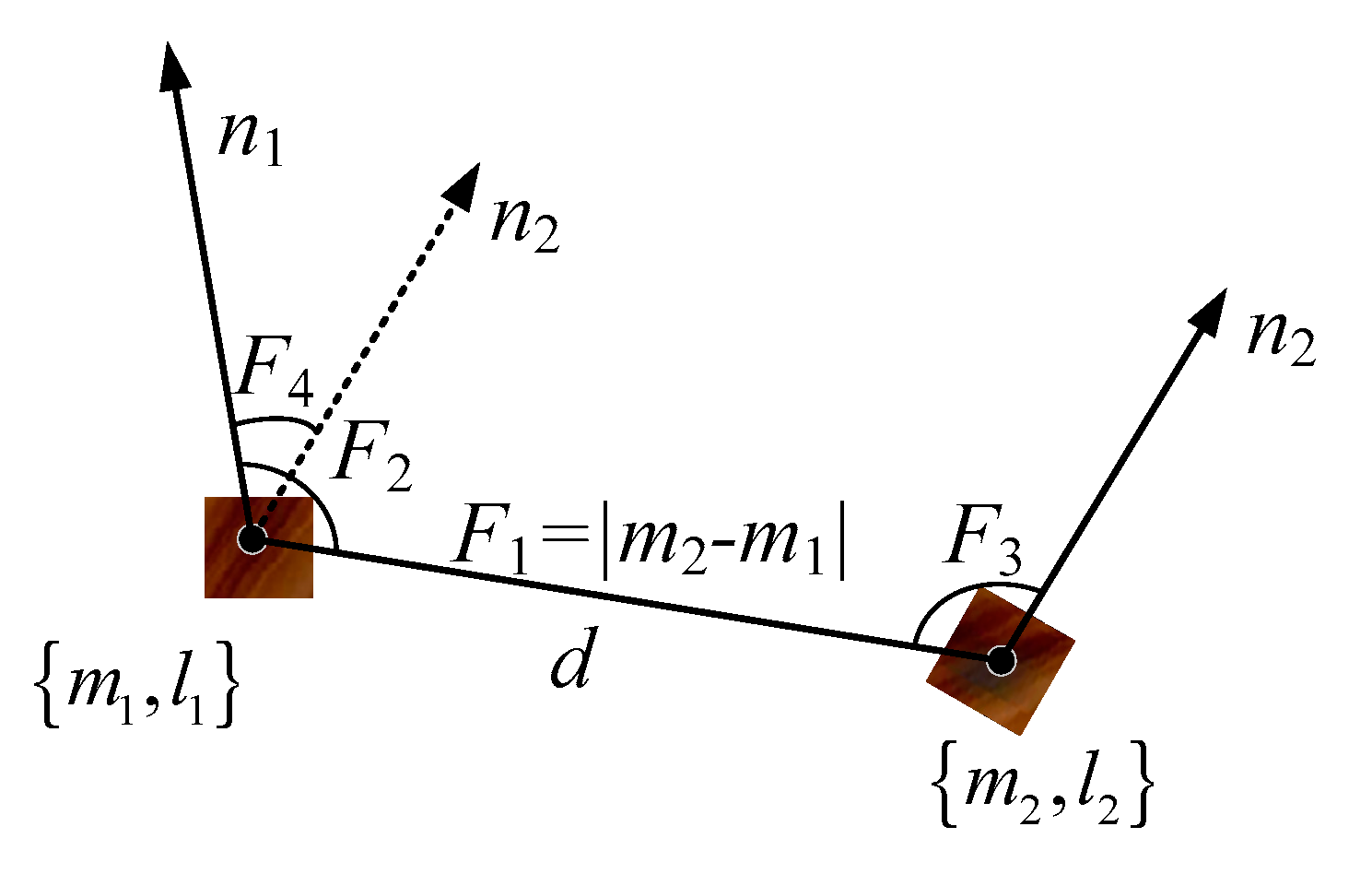

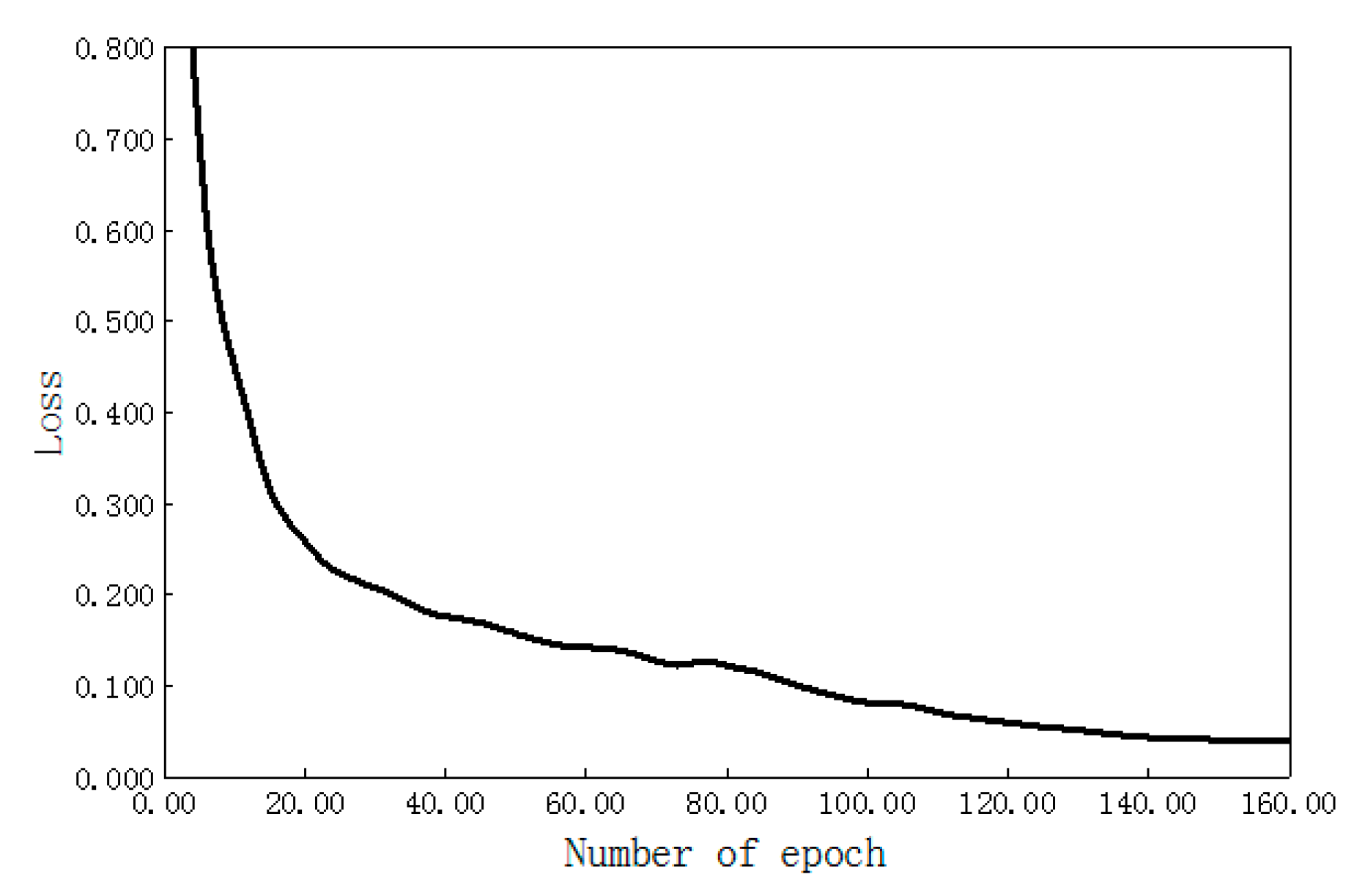

2. Methods of the Local Feature Descriptor Based on the Convolutional Autoencoder

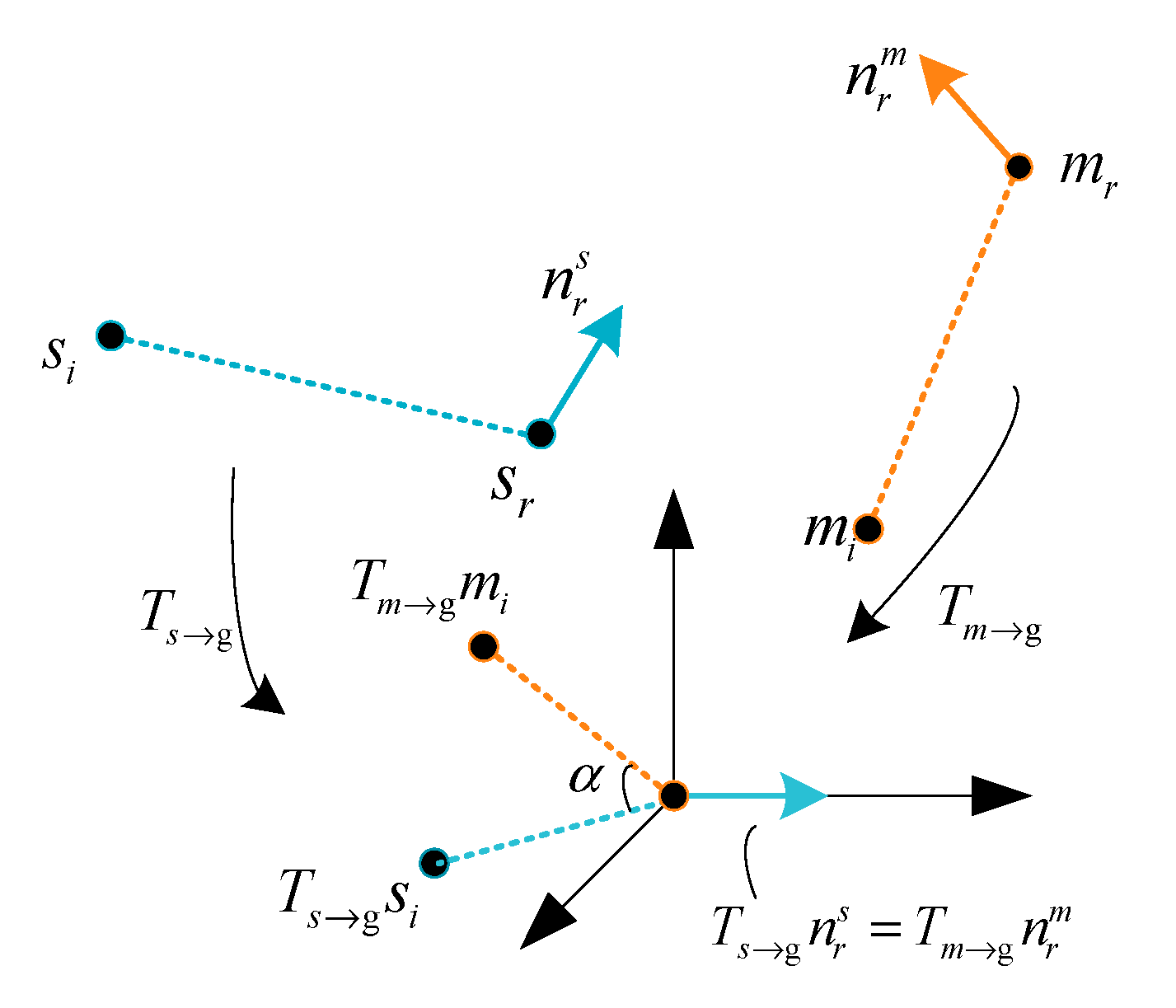

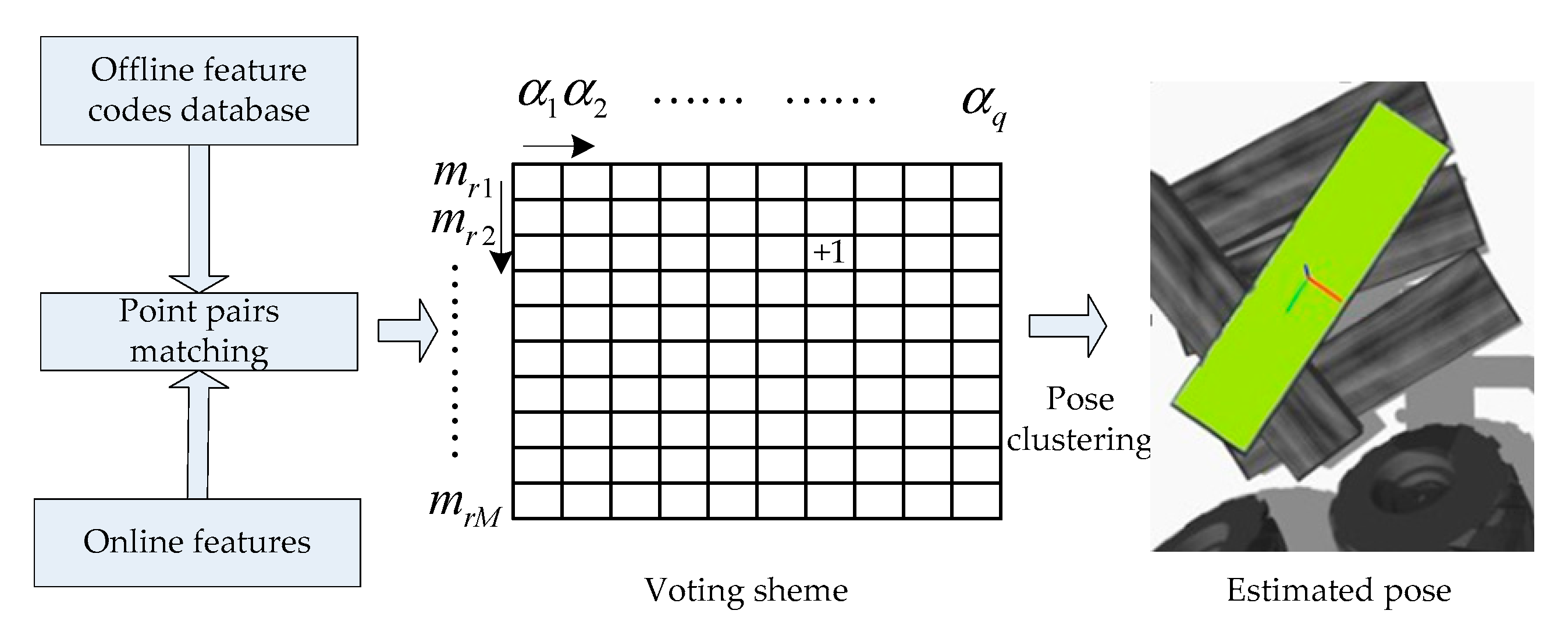

3. Offline Calculation Process: Model Feature Description Using Point Pair Features and Local Image Patch Features

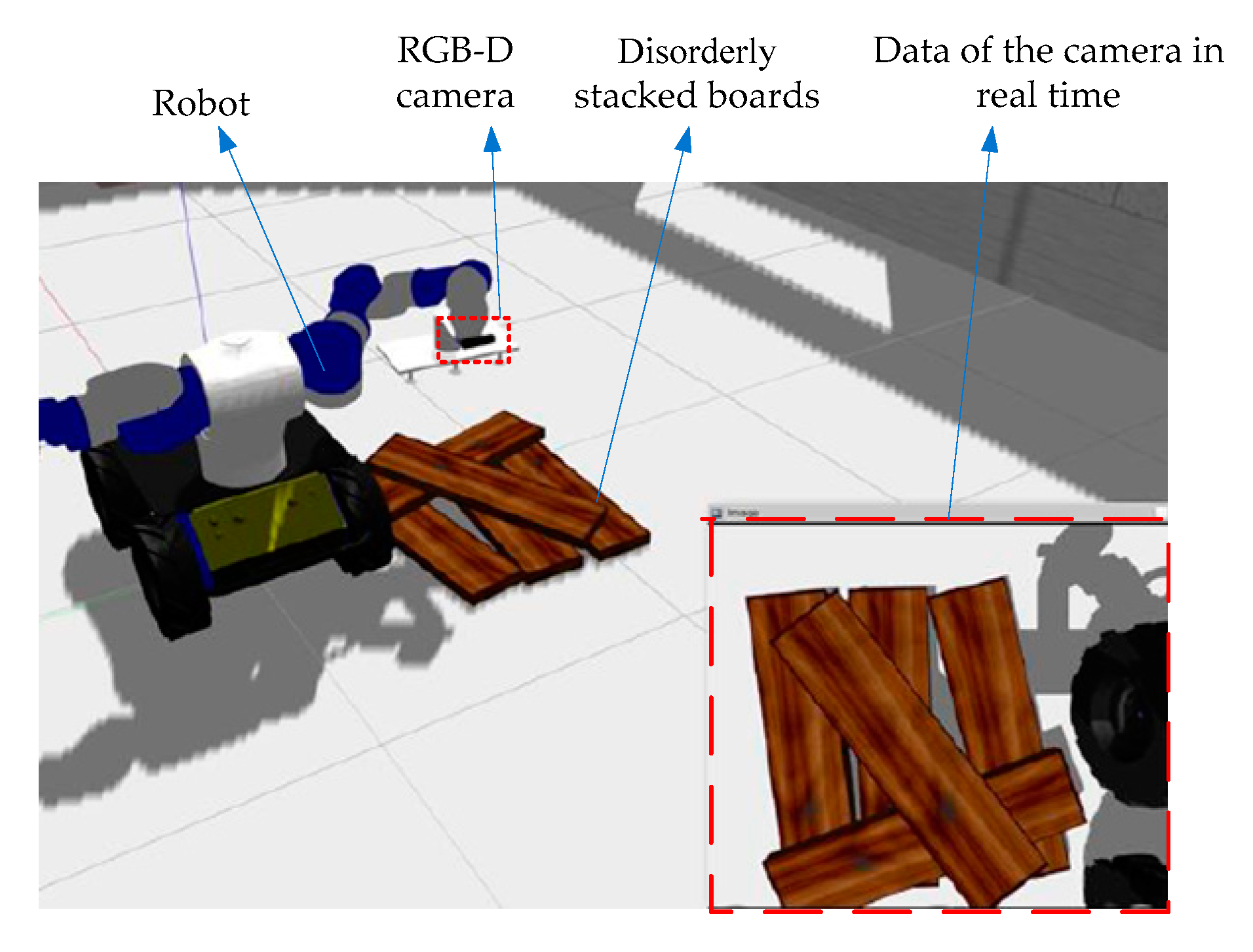

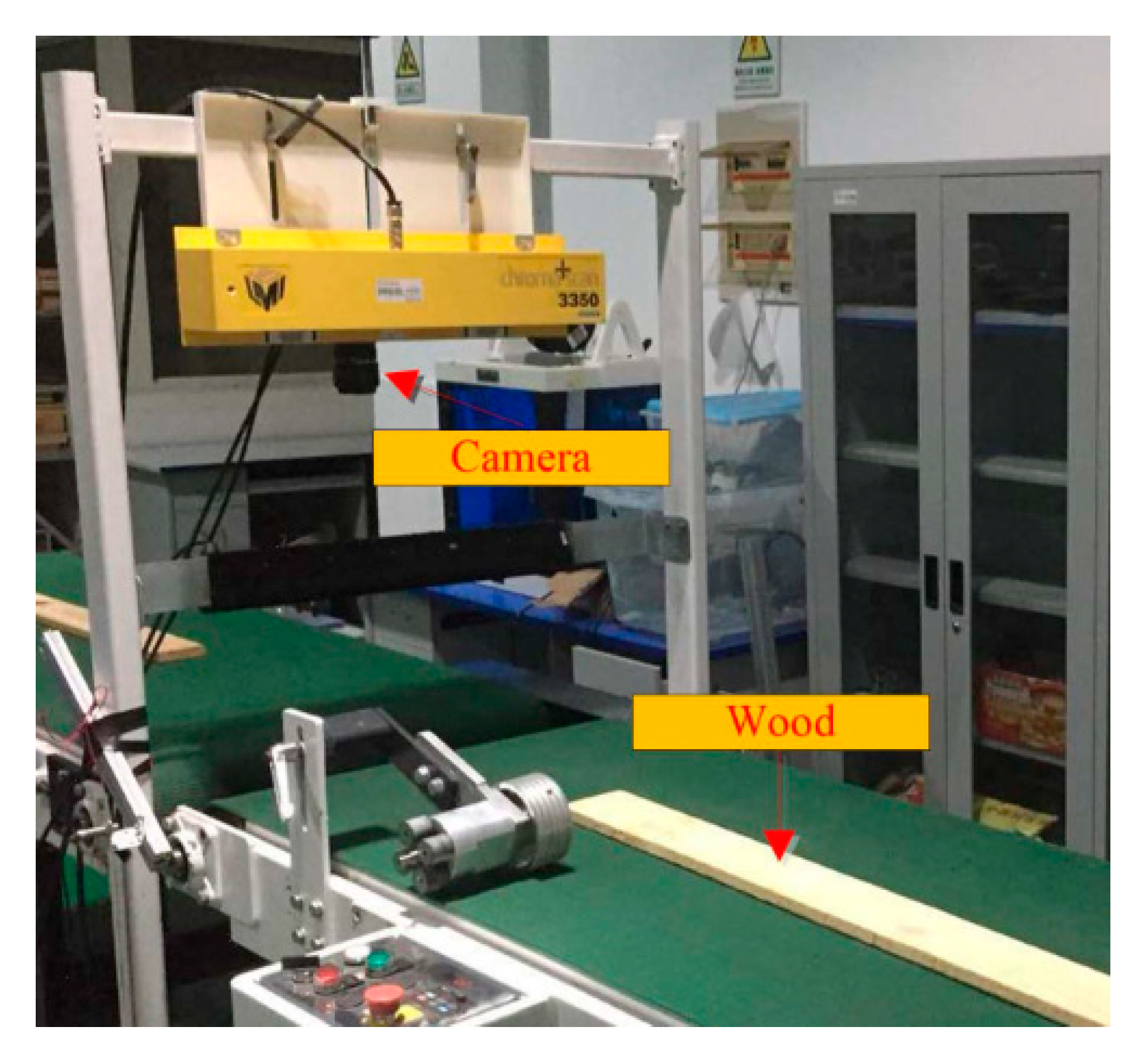

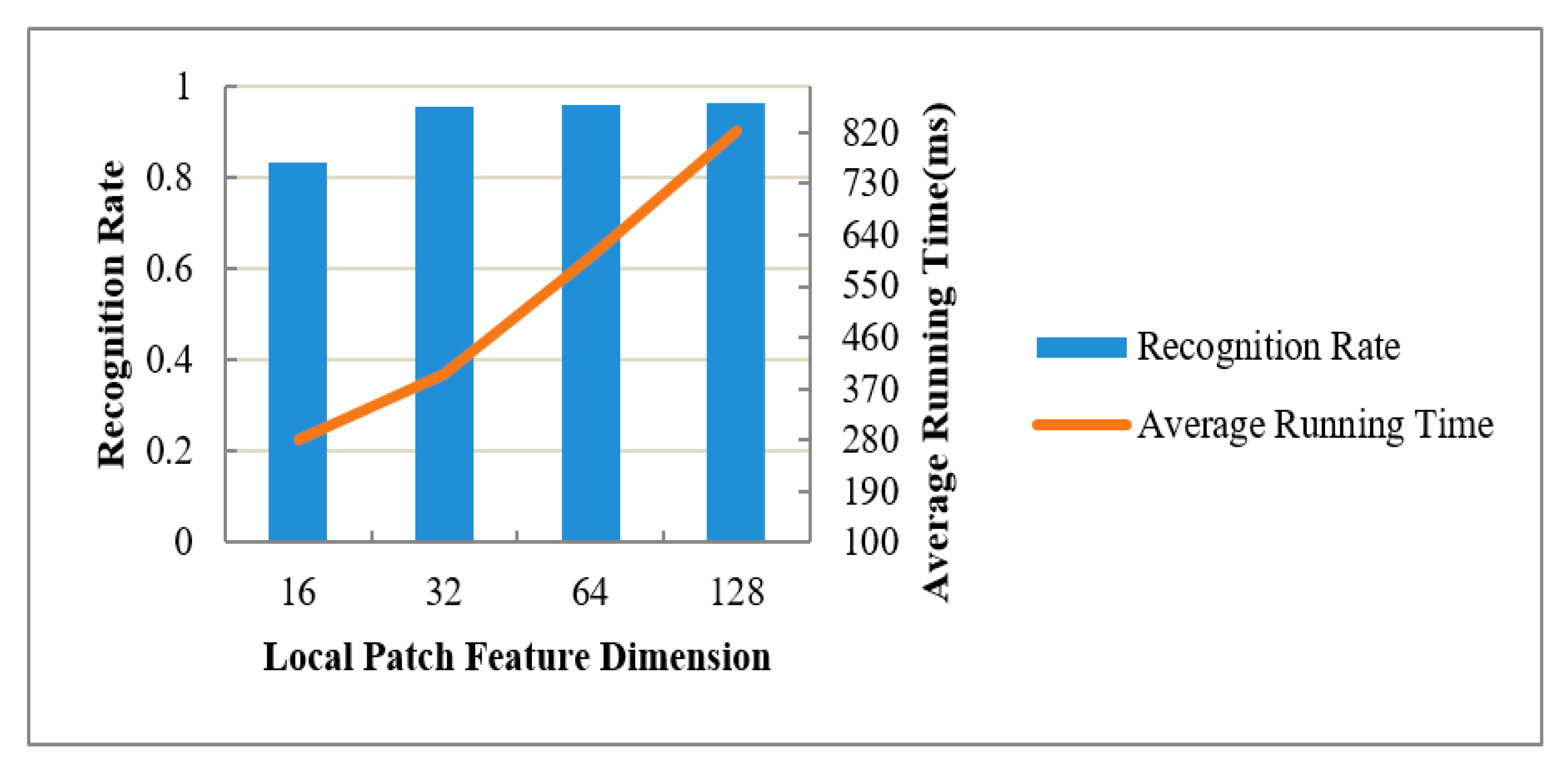

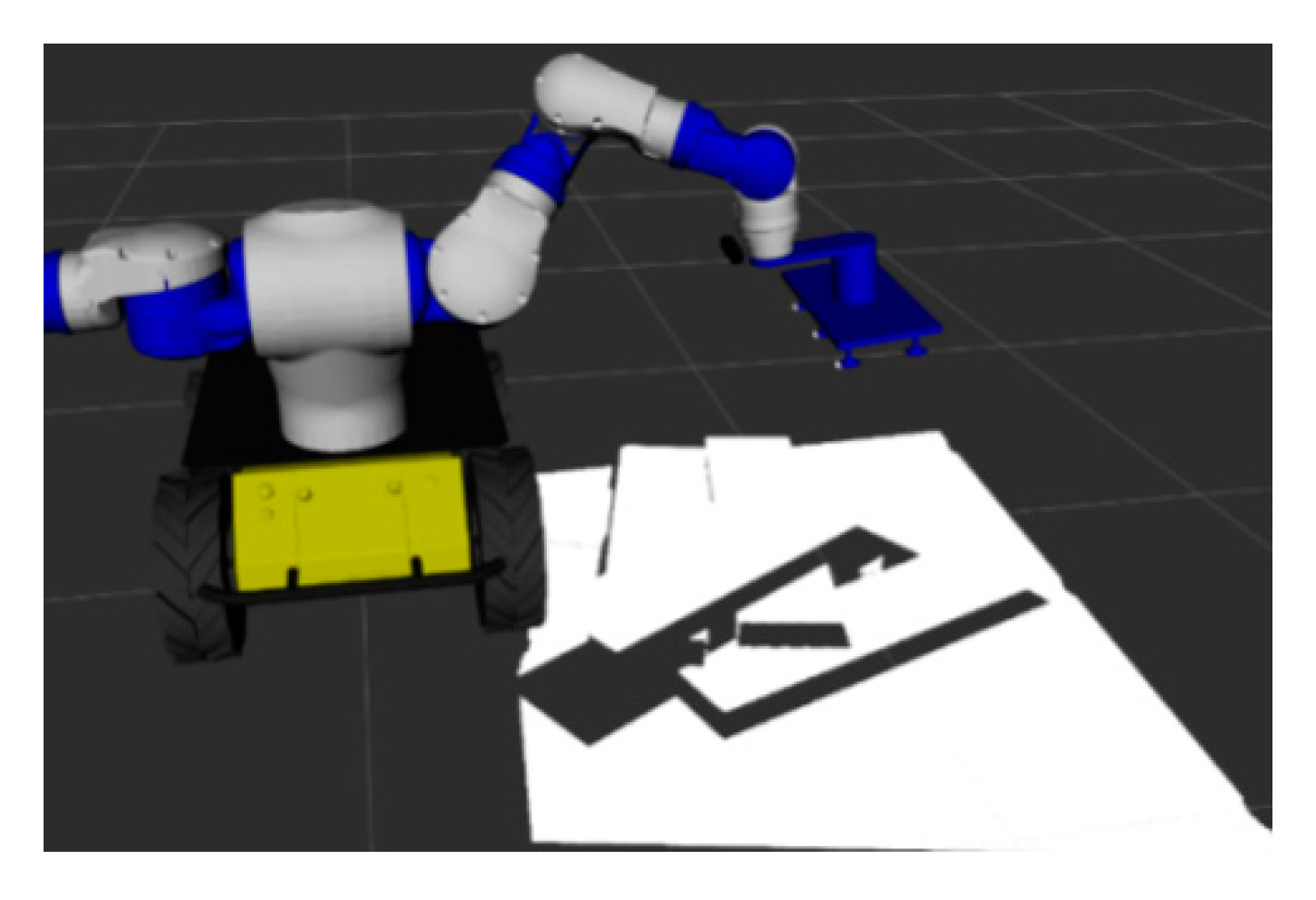

4. Online Calculation Process

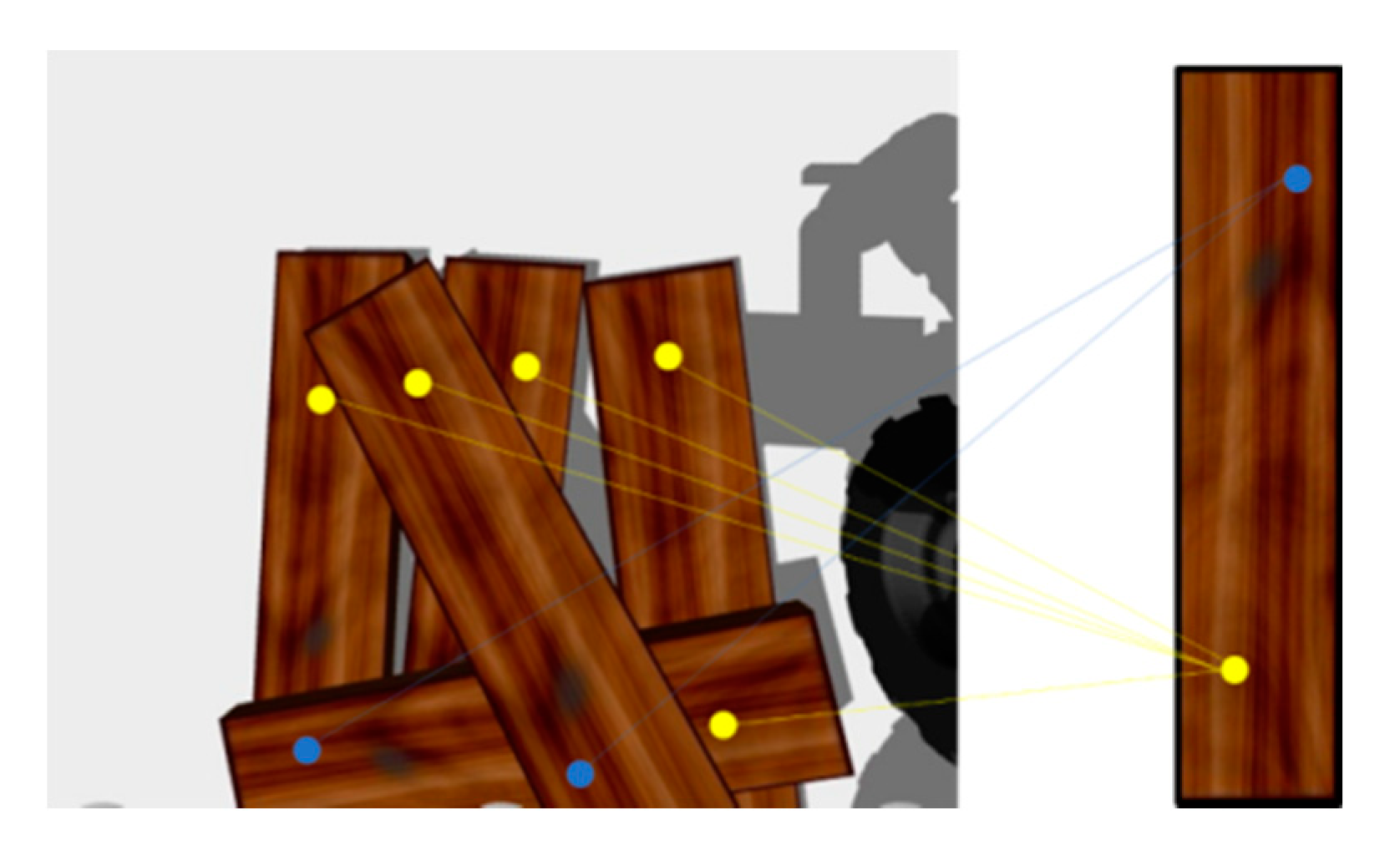

4.1. Generating the Feature Code of the Plank to Be Grasped

4.2. Plank Pose Voting and Pose Clustering

5. Experimental Results and Discussion

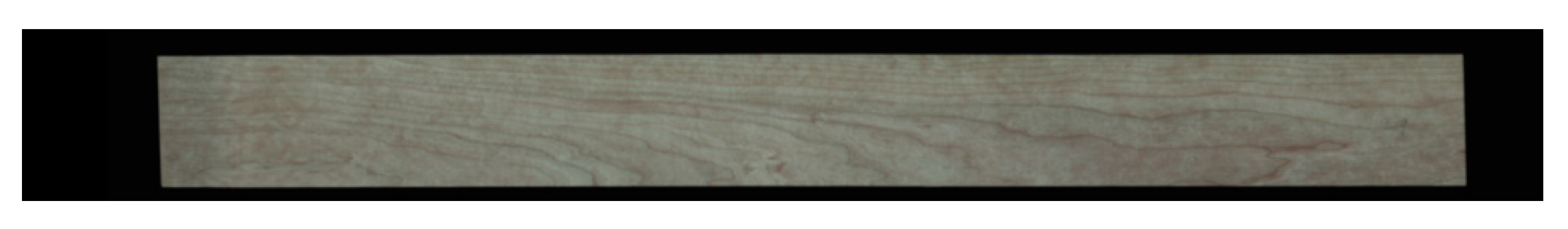

5.1. Data Preparation and Convolutional Autoencoder Training

5.2. Grasping of Planks

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Stage | Connection Layer | Filter Size | Feature Size | Stride Size | Activation Function |
|---|---|---|---|---|---|
| Coding stage | Input layer | - | 22 × 22 | - | - |
| | Convolution layer 1 | 3 × 3 | 20 × 20 × 128 | 1 | ReLU |
| | Convolution layer 2 | 3 × 3 × 128 | 18 × 18 × 128 | 1 | ReLU |
| | Convolution layer 3 | 3 × 3 × 128 | 9 × 9 × 256 | 2 | ReLU |
| | Convolution layer 4 | 9 × 9 × 256 | 1 × 1 × 32 | - | ReLU |
| Decoding stage | Transposed convolution 1 | 9 × 9 × 32 | 9 × 9 × 256 | - | ReLU |
| | Transposed convolution 2 | 3 × 3 × 256 | 18 × 18 × 128 | 2 | ReLU |
| | Transposed convolution 3 | 3 × 3 × 128 | 20 × 20 × 128 | 1 | ReLU |
| | Transposed convolution 4 | 3 × 3 × 128 | 22 × 22 | 1 | ReLU |
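
The feature sizes listed for the convolutional autoencoder can be checked with simple shape arithmetic. The sketch below is not the authors' implementation; it only traces the spatial size of a 22 × 22 patch through the listed layers. The padding modes are assumptions, since the table does not state them: "valid" (no padding) is assumed everywhere except the two stride-2 layers, where "same" padding is assumed because it is the setting that reproduces the listed 18 → 9 and 9 → 18 sizes. A stride of 1 is also assumed for the two layers whose stride is not given. The helper names `conv_out`, `deconv_out`, and `trace_shapes` are hypothetical.

```python
import math

def conv_out(size, kernel, stride, padding):
    """Spatial output size of a convolution ('valid' or 'same' padding)."""
    if padding == "same":
        return math.ceil(size / stride)
    return (size - kernel) // stride + 1

def deconv_out(size, kernel, stride, padding):
    """Spatial output size of a transposed convolution."""
    if padding == "same":
        return size * stride
    return (size - 1) * stride + kernel

# (layer name, kernel size, stride, padding mode)
# Padding modes and the stride of the 9 x 9 layers are assumptions.
ENCODER = [
    ("Convolution layer 1", 3, 1, "valid"),
    ("Convolution layer 2", 3, 1, "valid"),
    ("Convolution layer 3", 3, 2, "same"),
    ("Convolution layer 4", 9, 1, "valid"),
]
DECODER = [
    ("Transposed convolution 1", 9, 1, "valid"),
    ("Transposed convolution 2", 3, 2, "same"),
    ("Transposed convolution 3", 3, 1, "valid"),
    ("Transposed convolution 4", 3, 1, "valid"),
]

def trace_shapes(input_size=22):
    """Return (layer name, spatial size) after each layer of the autoencoder."""
    size = input_size
    shapes = []
    for name, kernel, stride, padding in ENCODER:
        size = conv_out(size, kernel, stride, padding)
        shapes.append((name, size))
    for name, kernel, stride, padding in DECODER:
        size = deconv_out(size, kernel, stride, padding)
        shapes.append((name, size))
    return shapes

if __name__ == "__main__":
    for name, size in trace_shapes():
        print(f"{name}: {size} x {size}")
```

Under these assumptions, the encoder reduces the patch to a single 1 × 1 position (the 32-channel feature code in the table), and the decoder restores the original 22 × 22 size.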

| Metric | PPF | CPPF | SSD-6D | LPI | LPPPF (Proposed Method) |
|---|---|---|---|---|---|
| Recognition rate | 0.115 | 0.753 | 0.837 | 0.851 | 0.953 |
| Average operation time (ms) | 327 | 322 | 409 | 563 | 396 |
| Grasping success rate | 0.105 | 0.746 | 0.825 | 0.835 | 0.938 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xu, C.; Liu, Y.; Ding, F.; Zhuang, Z. Recognition and Grasping of Disorderly Stacked Wood Planks Using a Local Image Patch and Point Pair Feature Method. Sensors 2020, 20, 6235. https://doi.org/10.3390/s20216235
