Abstract

The installation of solar plants around the world increases year by year. Automated diagnostic methods are needed to inspect these plants and to identify anomalies in their photovoltaic panels. The inspection is usually carried out by unmanned aerial vehicles (UAVs) carrying thermal imaging sensors. The first step in the process is to detect the solar panels in the images. However, standard image processing techniques fail on low-contrast images or images with complex backgrounds. Moreover, the shadows of power lines and structures similar to solar panels impede automated detection. In this research, two self-developed methods for detecting panels in this context are compared, one based on classical techniques and one based on deep learning, both with a common post-processing step. The first method is based on edge detection and classification, whereas the second is based on training a region-based convolutional neural network to identify a panel. The first method corrects for the low contrast of the thermal image using several preprocessing techniques. Subsequently, edge detection, segmentation and segment classification are applied. The classification is done using a support vector machine trained with an optimized texture descriptor vector. The second method is based on deep learning trained with images that have been subjected to three different pre-processing operations. The post-processing uses the detected panels to infer the location of panels that were not detected. This step selects contours from detected panels based on the panel area and the angle of rotation. New panels are then determined by extrapolating these contours. The panels in 100 random images taken from eleven UAV flights over three solar plants are labeled and used to evaluate the detection methods. The new method based on classical techniques reaches a precision of 0.997, a recall of 0.970 and an F1 score of 0.983. The deep learning method reaches a precision of 0.996, a recall of 0.981 and an F1 score of 0.989. Both panel detection methods are highly effective in the presence of complex backgrounds.

1. Introduction

The increased use of renewable and low-carbon energy has led to economic [1] and environmental benefits [2]. Among the renewable sources is solar energy, used for example on the rooftops of houses [2,3] and buildings [4] or on wide fields for power plants [5]. Solar energy is commonly captured by photovoltaic panels. However, the efficiency of the panels deteriorates in the presence of anomalies, such as hot spots. Hot spots occur due to non-uniform energy generation between the photovoltaic cells, with differences that exceed 5% of the temperature admitted in standard test periods. These sudden temperature rises have the potential to lead to spontaneous ignition [6].

Anomalies in solar panels lead to energy and temperature changes, so they are measured with current and voltage indicators [7,8] and thermal sensors [9,10,11]. The anomalies measured by thermal sensors are changes in energy efficiency [12], material fatigue [13,14] and hot spots [15,16]. However, hot spots can be catalogued at different levels of failure, which are associated with their geometry [17]. Since the classification of anomalies with thermal cameras is based on their geometry, a first step for their correct classification is the identification of the solar panel. Related research has also focused on the detection of the solar panels array [18,19].

With the creation of large solar plants, drones have become necessary for the inspection of massive numbers of solar panels [20]. The images generated by this technology, when processed with photogrammetric methods, make it possible to generate orthomosaics and to incorporate thermal and RGB image layers into geographic information systems (GIS) [21,22,23]. For the identification of the panels, information from the thermal image has been used together with the RGB image [24] or 3D models [25]. However, current developments focus on identifying the panels using only thermal video [26,27] or thermal imaging [28].

Panel detection focuses on identifying rectangular structures. However, this identification is difficult in thermal images because not all panel edges are visible [24] and because of irregularities caused by weed shadows, sunlight reflection [20] or hot spots. These difficulties are compounded by other variables such as different flight heights, changes in lighting [29], panel-like structures, energy lines and lens distortion, all of which are considered complex backgrounds [30] (Figure 1).

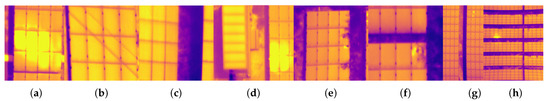

Figure 1.

Examples of problems in thermal images with complex backgrounds acquired with drones. (a) Sunlight reflection. (b) Energy lines. (c) Poorly defined panel edges. (d) Panel-like structures. (e) Weed shadows. (f) Edge distortion by hot spots. (g) Lens distortion. (h) Display of up to 350 panels per image.

The identification of solar panels in thermal images with complex backgrounds has five challenges:

- Hot spots create an atypical distribution of data, which leads to a loss of image contrast.

- The edges suffer from distortion and diffusion.

- There are structures that have a panel-like geometry.

- Edge detection fails due to image saturation in areas with hot spots or sunlight reflections.

- Other objects obstruct the panel, making its full geometry invisible.

This paper focuses on the first step for a proper diagnosis of the fault, in which a solar panel detection is necessary to delimit the region of interest for the detection and classification of the anomaly, because the correct classification is based on geometries in the panel or between panels. The development of methodologies for the detection of solar panels directly from the thermal image will allow the design of a fully automated anomaly classification system with real time processing. Therefore, this work is focused on reducing panel detection failures, since those that are not detected are those that typically contain anomalies. So, the main contributions are:

- A novel method based on classical Machine Learning is developed for the detection of solar panels using effective preprocessing steps.

- Our novel method based on classical Machine Learning is compared against a state-of-the-art Deep Learning algorithm.

- A novel method predicting missing (i.e., undetected) solar panels. This method is used as a post-processing step for both detection algorithms: based on classical techniques and deep learning. The post-processing improves the recall of both methods.

Related Work

Among the studies that use only thermal images taken with drones, some focused solely on detecting the damage without discriminating the panels. To identify the damage, they calculate the temperature difference [31] with Canny edge detection [26]. However, the presence of hot spots creates an atypical distribution of data, which leads to a loss of image contrast, making it difficult to identify the panels. Alternatively, the panels were segmented by the following methods: template matching [32], k-means clustering [33], the active contour level sets method (MCA) and the area filtering (AF) approach [28]. These methods have been referred to as classical methods. They focus only on identifying panels and do not include a way to differentiate them from panel-like structures in the environment.

The deep learning models used for the detection of the panels include an algorithm based on a fully convolutional neural network with a dense conditional random field [30] and a convolutional neural network framework called "You Only Look Once" (YOLO) [29]. However, the presence of energy lines creates atypical geometries that none of these methods can overcome.

The weaknesses of the related works are grouped into three categories: the first category focuses only on the detection of hot spots; the second category uses high-resolution photos of panels and does not address the features of complex backgrounds; the third category uses deep learning techniques but does not propose methods for projecting the missing panels. This information is presented in more detail in Table 1.

Table 1.

Comparison between the proposed method and other research identifying solar panels using only thermal images with a complex background.

2. Materials and Methods

2.1. Experimental Setup and Study Area

The thermal images were acquired with a DJI Zenmuse XT camera developed by FLIR [36], with 640 × 520 pixels, in 14-bit TIF format. The data set contains 11 flights over three different solar plants, with a relative flight height of 34 to 56 m. The images acquired from these solar plants have complex backgrounds, and all the analysis is based only on the information available in the thermal image, so other flight plan information is not included. In total, 18,244 panels are delimited in 100 randomly selected thermal images.

Image processing was coded in Python 3 with the CUDA toolkit. The principal libraries are NumPy [37], OpenCV [38], scikit-image [39], SimpleITK, PyRadiomics [40,41] and Detectron2 [42].

The new method based on classical techniques is described in Section 2.2. The method based on deep learning is described in Section 2.3. These methods are evaluated with the precision metrics described in Section 2.4.

2.2. Solar Panel Detection Using Our New Method Based on Classical Techniques

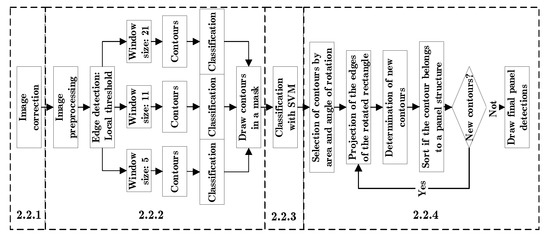

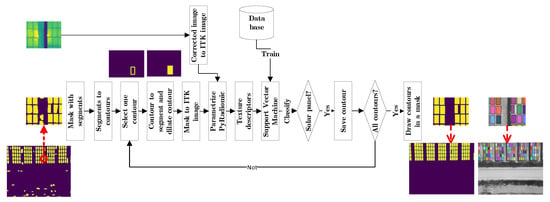

The first method to detect solar panels consists of the following steps: first, an image correction; second, an image segmentation; third, a segment classification with machine learning; finally, a post-processing step based on the detected panels (Figure 2). The proposed method relies on the OpenCV library, since it can calculate a contour and its characteristics, such as the image area, a rotated rectangle and the angle of rotation, and can convert the contour into a segment of the image, among others [38].

Figure 2.

Method based on classical techniques and the numbers of the sections where the algorithm is described.

2.2.1. Image Correction

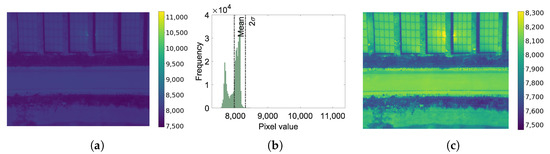

In image processing some data normalization steps are often required. However, in cases where hot spots or areas with sun reflection occur, the normalization implies the generation of images with low contrast and loss in the textural characteristics. Therefore, to avoid the loss of subtleties in the image it is proposed to remove the outlier data in the following way: the average plus twice the standard deviation is used as the reference value. Outliers are corrected if less than 5 percent of the data exceeds the reference value. To reduce the distribution tail, the outliers are assigned to the reference value (See Figure 3).
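The correction rule described above can be illustrated with a minimal NumPy sketch. The function name `correct_outliers`, its parameters and the synthetic frame are hypothetical and only serve the illustration; the reference value (mean plus twice the standard deviation) and the 5 percent condition are taken from the text.

```python
import numpy as np


def correct_outliers(image, n_std=2.0, max_fraction=0.05):
    """Clip values above mean + n_std * std when they are a small minority."""
    data = image.astype(np.float64)
    reference = data.mean() + n_std * data.std()
    fraction_above = np.mean(data > reference)
    # Correct only when outliers exist and affect fewer than max_fraction of pixels.
    if 0.0 < fraction_above < max_fraction:
        data = np.minimum(data, reference)
    return data, reference


# Synthetic frame with a small hot spot (hypothetical values, not real data).
rng = np.random.default_rng(0)
frame = rng.normal(8000.0, 50.0, size=(64, 64))
frame[:4, :4] = 12000.0
corrected, reference = correct_outliers(frame)
```

In this sketch the hot-spot pixels are assigned the reference value, which shortens the tail of the distribution while leaving all other pixels unchanged.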

Figure 3.

Image correction to reduce the effect of hot spots or sun reflection: (a) the original image; (b) the histogram with the probability distribution function and the values of the mean and twice the standard deviation; (c) the corrected image.

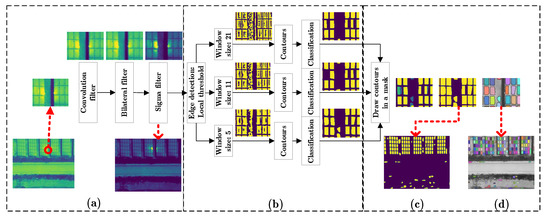

2.2.2. Image Segmentation

The detection of panels is based on the detection of their edges, which have a temperature lower than the center of the panel. Among the basic concepts used is that panels are four-sided structures that can be simplified as rectangles. However, solar panels on complex backgrounds have anomalies that lead to distortions, loss of continuity at the edges and diffuse edges that make it difficult to detect the rectangles. Therefore, the proposed algorithm consists of an image preprocessing, edge detection and segment determination. The image preprocessing uses a convolution filter, a bilateral filter and a gamma correction. The edge detection involves threshold settings at different ranges in order to determine most of the image edges. Each edge detection process involves the calculation of contours that are evaluated in shape and size. These contours are drawn as a mask in an iterative way in an image, which at the end collects all the segmentations made.

Correct line detection is based on changes in the pixel values that identify a border. However, the edges of certain panels are represented only by a dashed line one pixel wide, and therefore our method seeks to make these types of edges visible. The image preprocessing has the following steps. First, a convolution filter is used with the mask [1 1 1; 1 1 1; 1 1 1]. This filter is usually used in other contexts to blur an image, but in this case it is used to increase the width of the edge, because it spreads the data by one pixel around it. Second, in the corners of certain panels there are no marked differences in temperature with their surroundings, and in other cases the edges are diffuse, so a bilateral filter is used to homogenize the data in 3-pixel windows with the aim of emphasizing the borders. The bilateral filter is applied with the following configuration: a neighbourhood diameter of 3 pixels, a sigma of 200 in the color space and a sigma of 50 in the coordinate space. With this configuration, the edges are preserved because they have a greater variation in intensity between pixels. Third, in order to emphasize the edges of the panels that do not contrast with their surroundings, the contrast of the image is enhanced by using a gamma correction with a very high value such as 4.8 (Figure 4a). The value was selected after evaluating different settings on images with low edge contrast.

Figure 4.

The image segmentation. (a) Image preprocessing. (b) Edge detection. (c) Mask with segments. (d) Panel detection of the first step.

The temperature of the panel edges varies locally. Therefore, the edge detection uses an adaptive threshold. Because the widths of the panel edges also vary, the threshold is applied with three windows of different width (5, 11 and 21), so that each window detects panels that the others miss (Figure 4b). After thresholding each of the images, edge detection is performed, calculating the internal contours of the panels. Since a panel is a rectangular structure, this step seeks to determine whether a segment has that characteristic. The segment determination consists of a classification of the contours by their image area, their number of corners and their solidity. The contours are selected if they have an area between 100 square pixels and the expected panel size, three to four corners and a solidity greater than 0.8. The contour is used to determine an area of the image called a segment. It is assumed that a segment smaller than 100 square pixels does not represent a panel. The number of contour corners is counted after approximating the contour to a polygon with an OpenCV function that uses the Ramer–Douglas–Peucker algorithm. "Solidity is the ratio of contour area to its convex hull area" [38]. Finally, the segments are eroded to avoid overlapping and are drawn as a mask on a zero matrix with the shape of the original image (Figure 4c). To facilitate the evaluation, each segment is drawn with a different color on the grayscale corrected image (Figure 4d).

2.2.3. Panel Classification with SVM

This is one of the most important steps of the proposed algorithm, because up to this point there is a series of contours that has a similar shape to a rectangle, but it is not known if this is a panel or not, so the method uses a supervised classification process based on the texture of the panels.

Using the mask with rectangular segments, the contours are calculated again. Each contour is converted to a segment of the image, which is dilated by a circular structuring element with a 6-pixel diameter to include the panel edges. An optimized set of texture descriptors is calculated for each segment, and a support vector machine (SVM) classifies whether it corresponds to a panel or not.

The texture descriptors are calculated with the PyRadiomics library. This library was developed for the extraction of features from medical images, which are based on texture descriptors. It was chosen because it implements different transformations of the original image and characterizes it with different texture descriptors, making it possible to evaluate subtle changes in the image. Moreover, with a configuration for 2D images it can be used in other contexts. The algorithm requires an image and a mask of the feature extraction area. The images must be converted to an Insight Toolkit (ITK) image [43] with the SimpleITK library. Two elements are parameterized in order to calculate as many features as possible: the algorithms that characterize the image with specific texture descriptors (FeatureClass) and the image type (ImageType), which is the original image and its transformations. Six algorithms for texture descriptors (shape2D, firstorder, Gray Level Co-occurrence Matrix (GLCM), Gray Level Run Length Matrix (GLRLM), Gray Level Size Zone Matrix (GLSZM) and Gray Level Dependence Matrix (GLDM)) are applied on five transformed images (Original, LoG with sigma values [3.0, 5.0], and Wavelet). This results in a total of 440 texture descriptors [40]. The image generated by the local binary pattern algorithm was excluded because it was not efficient for classification.

A support vector machine is then used for the classification of a texture descriptor vector. Due to the amount of data, it uses the implementation of a regularized linear model with stochastic gradient descent (SGD) learning. The ten-fold cross validation method is used to determine the classification accuracy. After evaluating different configurations, a larger classification efficiency is obtained by: standardization of features by removing the mean and scaling to unit variance, max_iter = 1000, class_weight = “balanced”, learning_rate = “adaptive”, eta0 = 0.5, penalty = “elasticnet”, and tol = 1 × 10 [39,44].
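The classifier configuration listed above can be sketched with scikit-learn as follows. This is a hedged illustration rather than the authors' code: the function name `build_panel_classifier`, the hinge loss (which makes the SGD model a linear SVM), the fixed `random_state` and the synthetic data are assumptions. The `tol` parameter is left at the library default because its exponent is not legible in the text.

```python
import numpy as np
from sklearn.linear_model import SGDClassifier
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def build_panel_classifier():
    """Standardise the descriptors, then fit a linear SVM trained with SGD."""
    return make_pipeline(
        StandardScaler(),  # remove the mean and scale to unit variance
        SGDClassifier(
            loss="hinge",            # assumption: hinge loss for a linear SVM
            penalty="elasticnet",
            max_iter=1000,
            class_weight="balanced",
            learning_rate="adaptive",
            eta0=0.5,
            random_state=0,          # assumption: fixed seed for repeatability
        ),
    )


# Synthetic stand-in for the texture descriptor vectors (not real data).
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (100, 10)), rng.normal(3.0, 1.0, (100, 10))])
y = np.array([0] * 100 + [1] * 100)

# Ten-fold cross validation, as described in the text.
scores = cross_val_score(build_panel_classifier(), X, y, cv=10)
```

Placing the scaler inside the pipeline means the standardization is refitted on each training fold, which avoids leaking test-fold statistics into the cross-validation estimate.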

The optimized texture descriptor group is calculated from a created database of texture descriptors with data corresponding to two classes: solar panels and other objects. The descriptors with a probability of less than 0.001 in an ANOVA test and a classification accuracy with SVM of more than 90 percent in cross validation are selected. Finally, a mask is created with the selected contours corresponding to the solar panels of the thermal image. To facilitate the evaluation each segment is drawn with a different color on the grayscale image (Figure 5).

Figure 5.

Panel classification with support vector machine.

2.2.4. Post-Processing

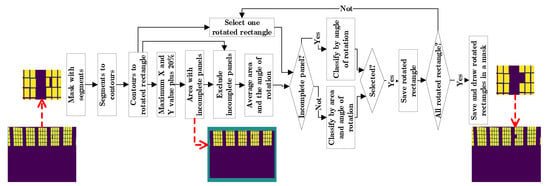

A final step is proposed in which the detected panels are used to infer the location of the panels that possibly remained undetected. This algorithm includes three steps: first, the selection of contours by image area and angle of rotation; second, the projection of edges of each rotated rectangle; third, the determination of new contours and their classification. The last two steps are repeated until no new contours are selected.

The selection of the contours of the detected panels is based on the following premises: the solar panels have four sides, the edges of the image have cut-out panels and most of the contours are solar panels. Therefore, each contour can be simplified to a rotated rectangle and the coordinates of the image where there are incomplete panels are related to the coordinates of the rotated rectangle. The rotated rectangle is composed of four points in the image, these points are represented by X and Y coordinates. The maximum X and Y shift value between points of each rotated rectangle is calculated and stored in a matrix. Finally, the section of the image border with incomplete panels, is calculated from the maximum value of the matrix of displacements in X and Y, plus a 20%. It is assumed that the region where there are incomplete panels is a rectangle with an edge shift towards the center with the maximum X value for vertical edges and the maximum Y value for horizontal edges. Thus, excluding the panels at the edges, the average area and the angle of rotation of the rotated rectangles are calculated. For the panels at the edges only the angle of rotation of the contour is calculated. Because images suffer from lens and perspective distortions, the contours with a 20% deviation from the rotation angle and a deviation of 20% below and 30% above the average area are eliminated (Figure 6).
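The final filtering rule can be sketched with NumPy. The function name `select_regular_panels` and the sample values are hypothetical. The text does not state what the 20% angle deviation is measured against, so this sketch assumes it is relative to the mean rotation angle; it also assumes that panels at the image border were already excluded before the means are computed.

```python
import numpy as np


def select_regular_panels(areas, angles, area_low=0.20, area_high=0.30,
                          angle_tol=0.20):
    """Return a boolean array marking contours that are kept."""
    areas = np.asarray(areas, dtype=float)
    angles = np.asarray(angles, dtype=float)
    mean_area = areas.mean()
    mean_angle = angles.mean()
    # Keep areas from 20% below to 30% above the mean area.
    area_ok = (areas >= (1.0 - area_low) * mean_area) & \
              (areas <= (1.0 + area_high) * mean_area)
    # Assumption: angle tolerance is 20% of the mean rotation angle.
    angle_ok = np.abs(angles - mean_angle) <= angle_tol * np.abs(mean_angle)
    return area_ok & angle_ok


# Hypothetical areas (square pixels) and rotation angles (degrees).
keep = select_regular_panels(
    areas=[1000, 1020, 980, 1500, 990],
    angles=[10.0, 10.5, 9.8, 10.0, 13.0],
)
```

With these sample values the fourth contour is rejected for its area and the fifth for its rotation angle.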

Figure 6.

Selection of the contours of the detected panels, one panel is missing.

The projection of the edges of the rotated rectangle has as a premise that the solar panels are close to others in a structure that forms a grid. Therefore, the panels are represented by a rotated rectangle and each rectangle has four sides. Each side is two points joined together to represent a line, which is projected 1.1 times towards its ends. The projected lines of all rotated rectangles are drawn in a zeros image (Figure 7).
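A small NumPy sketch of the edge projection follows. The phrase "projected 1.1 times towards its ends" is interpreted here as extending each side beyond both endpoints by 1.1 times its own length; this interpretation, and the function names `project_side` and `project_rectangle`, are assumptions of the sketch.

```python
import numpy as np


def project_side(p1, p2, factor=1.1):
    """Extend the segment p1-p2 beyond both ends by factor times its length."""
    p1 = np.asarray(p1, dtype=float)
    p2 = np.asarray(p2, dtype=float)
    direction = p2 - p1
    return p1 - factor * direction, p2 + factor * direction


def project_rectangle(corners, factor=1.1):
    """Project the four sides of a rotated rectangle given as 4 (x, y) corners."""
    corners = np.asarray(corners, dtype=float)
    return [project_side(corners[i], corners[(i + 1) % 4], factor)
            for i in range(4)]


# Hypothetical axis-aligned panel, 10 pixels wide and 20 pixels high.
lines = project_rectangle([(0, 0), (10, 0), (10, 20), (0, 20)])
start, end = lines[0]
```

The projected segments could then be drawn on a zeros image, for example with `cv2.line`, so that their crossings enclose candidate cells next to the detected panels.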

Figure 7.

Projection of the edges of the rotated rectangle, determination of new contours and their classification.

The determination of new contours and their classification is based on the premise that the panels have a homogeneous shape, are grouped and can be classified by texture descriptors. So, the contours are calculated from the matrix of zeros with the lines drawn. The contours are classified in different steps: first the contours with an area deviating less than 30% from the average area are selected. Second, contours that do not overlap with the detected panels are selected. Third, they are classified according to whether they belong to the panel structure or not. If they cannot be associated with the structure, they are classified using a support vector machine (see Section 2.2.3) (Figure 7).

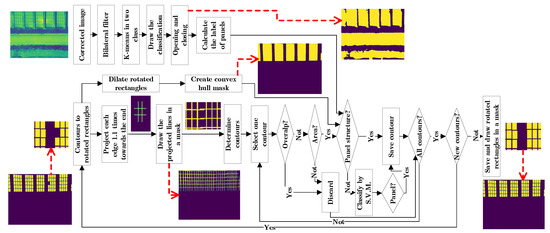

To evaluate whether an outline belongs to a panel structure, the following assumptions are made: if the detected panels are close enough, they are in structures and the pixels can be classified into two classes, one of which represents the panel structures. The first premise is represented in a mask, which is created in the following way: First, all detected panels are expanded until they overlap with their nearest neighbour. Second, all panels are drawn in a mask. Third, the contours of the mask are calculated. Fourth, the convex hull is calculated for each contour. Fifth, a mask is drawn with the convex hulls. The second premise is taken to a mask with the following steps: First, a bilateral filter is applied to the corrected image. Second, the pixels are classified in two classes using K-means. Third, a mask is drawn with the classification. Fourth, a morphological function of opening and closing is applied to eliminate the noise. Fifth, the label corresponding to the detected panels is determined. The selection takes into account the contour belonging to the convex hull mask and the k-means mask. Since undetected panels in the corners do not intersect with the entire area of the convex hull mask, then at least a 20% overlap is accepted. Because the k-means mask can have classification errors, it is acceptable for the contour to have 85% of the pixels classified as panel area.
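The two acceptance thresholds can be expressed as a short NumPy check. The function name `belongs_to_structure` and the toy masks are hypothetical; the sketch assumes that the convex hull mask and the k-means panel mask have already been computed as binary arrays with the same shape as the contour mask.

```python
import numpy as np


def belongs_to_structure(contour_mask, hull_mask, kmeans_panel_mask,
                         min_hull_overlap=0.20, min_panel_fraction=0.85):
    """Accept a contour that overlaps both masks by the stated fractions."""
    contour = contour_mask.astype(bool)
    n_pixels = contour.sum()
    if n_pixels == 0:
        return False
    hull_overlap = np.logical_and(contour, hull_mask.astype(bool)).sum() / n_pixels
    panel_fraction = np.logical_and(
        contour, kmeans_panel_mask.astype(bool)).sum() / n_pixels
    return bool(hull_overlap >= min_hull_overlap
                and panel_fraction >= min_panel_fraction)


# Toy 10x10 masks (hypothetical): the contour overlaps half of the hull mask.
contour_mask = np.zeros((10, 10), np.uint8)
contour_mask[2:6, 2:6] = 1
hull_mask = np.zeros((10, 10), np.uint8)
hull_mask[:, 0:4] = 1
accepted = belongs_to_structure(contour_mask, hull_mask, np.ones((10, 10), np.uint8))
rejected = belongs_to_structure(contour_mask, hull_mask, np.zeros((10, 10), np.uint8))
```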

2.3. Panel Prediction with Deep Learning

2.3.1. Detectron2

For the panel prediction using deep learning, the PyTorch-based modular object detection library Detectron2 is employed. Detectron2 includes an implementation of Mask R-CNN, which has greater accuracy than methods based on R-CNN, Fast R-CNN and YOLO, among others [45]. Furthermore, the Detectron2 library was chosen because it has better results on benchmarks compared to other popular open source Mask R-CNN implementations [42]. The Mask R-CNN network is trained with thermal images with only one class: solar panels. With the support of the algorithm described in Section 2.2 and a graphical interface, all the panels present in an image are labelled in COCO format, including the panels at the edges of the image. Labeling is done in the visual interface by a user who eliminates false positives and corrects false negatives. Because Detectron2 only supports 8-bit images, the image is pre-treated and converted to three channels.

To accentuate the edges, a three-channel image is created as follows: first, a sigmoidal normalization of the 14-bit TIF image is applied; then the data is transformed in the range 0 to 255; the first channel has no transformation; the second channel has a bilateral filter as described in Section 2.2.2; and the third channel has a gamma correction as described in Section 2.2.2.

The Detectron2 model is trained with the procedures reported for a new data set [42]; the model is mask_rcnn_R_50_FPN_3x. The Mask R-CNN network is trained using 2000 cycles and a custom data loader. The custom data loader is used for data augmentation, with random changes of: 1 percent rotation, horizontal flip, contrast between 0.5 and 1.5, brightness between 0.6 and 1.5, lighting of 2, saturation between 0.5 and 1.5 and a crop function. Previous evaluations determined that predicting with a score threshold of 0.2 does not increase the false positives and avoids excluding panels that are difficult to detect. Finally, the results are drawn on an image and each prediction is exported to a mask in a NumPy file [37].

2.3.2. Post-Processing

This algorithm follows the same process as Section 2.2.4, except for the following variations in the first step, where the contours of the detected panels are selected. First, the predictions of the panels are drawn in masks, and from these the contours are calculated and eroded to avoid overlapping. Second, before calculating the average area, the data is cleaned by removing the tails of the distribution. Third, the rotation angle of the rotated rectangle is not used for the contour selection.

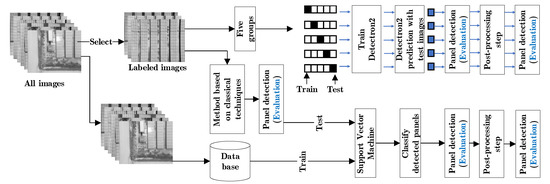

2.4. Evaluation

The detection efficiency of the methods is evaluated by a test data set, which is different from the training data set. The novel method based on classical techniques consists of a training part for the texture descriptors. The texture database is not part of the images selected for evaluation, so all labeled images are used as a test image. Prediction with deep learning requires training, therefore, to include all labeled images in the test the concept of cross validation is used. The images were divided into five groups, so that in each group 80% is used for training and 20% for testing, and the images selected for testing are different for all groups (Figure 8).
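The five-group scheme for the deep learning method can be sketched with NumPy. The function name `five_fold_groups`, the random seed and the use of a random permutation are assumptions; the split of 100 images into five groups, each used once for testing while the other 80 images are used for training, follows the text.

```python
import numpy as np


def five_fold_groups(n_images=100, n_groups=5, seed=0):
    """Return (train_indices, test_indices) pairs with disjoint test sets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_images)
    folds = np.array_split(order, n_groups)
    splits = []
    for i, test_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        splits.append((train_idx, test_idx))
    return splits


splits = five_fold_groups()
```

Each image therefore appears in exactly one test group, so every labeled image contributes to the evaluation without being seen during the training of the model that predicts it.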

Figure 8.

Origin of the Images to train and test for each method and the steps in which the prediction of panels is evaluated.

All panel detection steps of both methods are evaluated with precision metrics (Figure 8). Single-class classification metrics are used, in which positive and negative values are taken into account [46]. The metrics used to evaluate the effectiveness of classification are precision, recall and F-measure [32].

The precision represents the proportion of positive samples that were correctly classified compared to the total number of predicted positive samples [47], as indicated in Equation (1), where $TP$ indicates the number of true positives and $FP$ indicates the number of false positives:

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{1}$$

The recall of a classifier represents the ratio of correctly classified positive samples to the total number of positive samples [47], and it is estimated according to Equation (2), where $FN$ indicates the number of false negatives:

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{2}$$

The main F-measure is the F1 score ($F_1$), which is defined as the harmonic mean of precision and recall [48]. It is estimated according to Equation (3):

$$F_1 = 2 \cdot \frac{\mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{3}$$

The detected panels are drawn on the image and the false positives are determined through visual inspection. We define a false positive as a selection that is not a panel, or a panel detection that presents a center shift, is associated with more than one panel, or has an area of less than 90% of the panel. The false negatives are the undetected panels. The total number of panels is the total number of labels used for training. The number of true positives is the total number of panels minus the false negatives.
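The counting rule above, combined with the definitions of precision, recall and the F-measure (harmonic mean of precision and recall), can be written as a short function. The function name `detection_metrics` and the example counts are hypothetical and are not taken from the results of this study.

```python
def detection_metrics(total_panels, false_negatives, false_positives):
    """Compute precision, recall and F-measure from visual inspection counts."""
    # True positives are the labeled panels minus the undetected ones.
    true_positives = total_panels - false_negatives
    precision = true_positives / (true_positives + false_positives)
    recall = true_positives / (true_positives + false_negatives)
    f_measure = 2 * precision * recall / (precision + recall)
    return precision, recall, f_measure


# Hypothetical counts: 1000 labeled panels, 30 missed, 3 wrong detections.
precision, recall, f_measure = detection_metrics(1000, 30, 3)
```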

3. Results

Regarding the panel classification described in Section 2.2.3, 440 texture descriptors are calculated and a vector of 290 texture descriptors is optimized. A database with 1555 panel vectors and 302 non-panel vectors is created by a visual classification. This database is used to train a support vector machine. The database has a classification efficiency of 91% as it was evaluated in 10-fold cross validation.

For the deep learning based method, predictions of images not used for training were made. To that end the thermal photos were divided into five groups and 80 photos were used for training and 20 photos for prediction, alternating the groups so that the prediction photos were not repeated.

The detections made by each method in each step were drawn on the original image. These images were used for visual inspection and determining the false negatives and false positives.

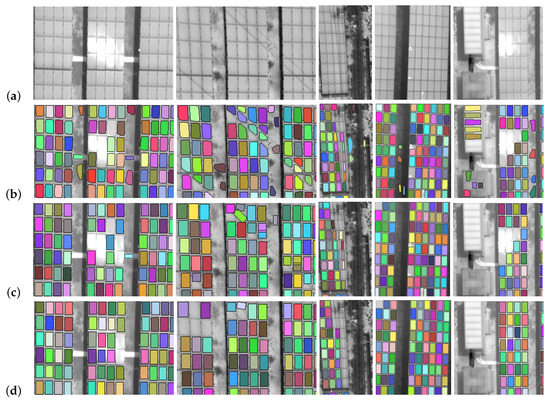

Figure 9 shows five cases of thermal images with complex backgrounds and the panel detection obtained with the novel method based on classical techniques with a post-processing step. The first is an image with sun reflection; the second is an image with overhead power lines that cross the panel geometry at close to 45 degrees; the third is an image with energy lines that cross the panel geometry at close to 90 degrees; the fourth is an image with poorly defined panel edges; and the fifth presents panel-like structures. The images show the detections made in each step of this method. In the first step (see Section 2.2.2) the images show numerous false positives, which are reduced in the second step (see Section 2.2.3) by classifying with a support vector machine. The method ends by drawing the panels that had not been detected (see Section 2.2.4). However, due to the selection of contours in the last step, some distorted panels (due to the distortion of the image by the camera lens) are removed from the edges.

Figure 9.

Detection of panels in thermal images with a novel method based on classical techniques and a post-processing step. Different cases of true positives, false positives and false negatives: (a) thermal image in gray scale, (b) segmentation with the classical method, (c) classification with a support vector machine and (d) classification after post-processing.

The images of Figure 9 are consistent with the data shown in Table 2. This table shows that the classical method in complex environments without machine learning has a precision value of 0.886, which would lead to dismissing this method. However, the addition of the post-processing step improves the quality of the method, raising the precision to 0.997. The number of panels detected does not rise as much, since the recall value increases by only 0.009. This is because some detections are eliminated in the post-processing step for being distorted. These variations are reflected in the F1 score, which increases by 0.06 between the first and third step.

Table 2.

The precision, recall and F1 score metrics for panel detection in thermal images for three steps of our novel method based on classical techniques: segmentation, classification with a support vector machine (SVM) and after post-processing.

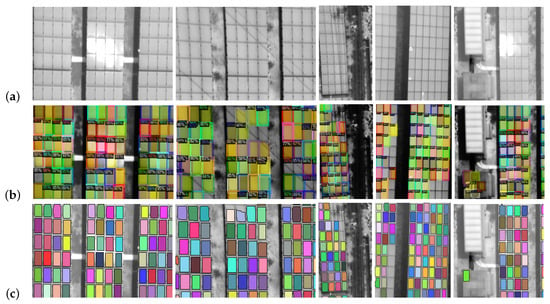

Figure 10 shows the same five cases of thermal images with complex backgrounds presented in Figure 9, with panel detection by deep learning and a post-processing step. These images show that the deep learning method leads to greater stability in the shape of the panel, with the four sides well defined. However, this method fails on images with power lines, poorly defined panel edges and panel-like structures. These detection failures are partially solved in the post-processing step.

Figure 10.

Detection of panels in thermal images with our deep learning method and a post-processing step. Different cases of true positives, false positives and false negatives: (a) thermal image in gray scale, (b) panel detection with deep learning, (c) after post-processing.

Table 3 shows the precision, recall and score values obtained on the test data for the deep learning prediction step and for the drawing (post-processing) step. The precision shows that the deep learning method makes few errors, whereas the recall shows the sensitivity of the method to the training data, so training with a large database is recommended. The table also shows how the post-processing step affects the metrics: over the pooled test groups, the false negatives are reduced by more than 60%, which increases the recall by 0.032, while the precision reaches 0.996.
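The link between a reduction in false negatives and the gain in recall can be illustrated with a minimal sketch. The counts below are hypothetical and are not the values of Table 3; the sketch also assumes that every panel recovered by post-processing moves from the false-negative group to the true-positive group.

```python
def recall(tp: int, fn: int) -> float:
    """Fraction of labeled panels that were detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def precision(tp: int, fp: int) -> float:
    """Fraction of detections that are labeled panels."""
    return tp / (tp + fp) if (tp + fp) else 0.0


# Hypothetical counts (NOT taken from Table 3).
tp_before, fp, fn_before = 950, 4, 50

# Assume post-processing recovers 60% of the missed panels.
recovered = int(0.6 * fn_before)
tp_after = tp_before + recovered
fn_after = fn_before - recovered

recall_before = recall(tp_before, fn_before)
recall_after = recall(tp_after, fn_after)
precision_after = precision(tp_after, fp)

print(f"recall: {recall_before:.3f} -> {recall_after:.3f}")
print(f"precision after post-processing: {precision_after:.3f}")
```

With these illustrative counts, recovering 30 of 50 missed panels raises the recall by 0.03 while the precision stays almost unchanged, which is the same qualitative behavior described for the deep learning method.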

Table 3.

The precision, recall and score metrics for panel detection in thermal images using the deep learning method, before and after post-processing.

4. Discussion

The proposed methods solve the problems related to the complex backgrounds mentioned in this article. For the new method based on classical techniques, the following points were verified. First, the correction described in Section 2.2.1 was effective for images with an atypical distribution of data due to hot spots. Second, the procedure described in Section 2.2 accentuates diffuse edges. Third, the classification with a support vector machine eliminates panel-like structures. However, this method is affected by objects that cause the panel geometry to be lost, as well as by edge distortion. In the case of the proposed deep learning method, image normalization and training with data augmentation allow it to outperform the new method based on classical techniques. However, it fails in prediction when edges are lost or diffuse and when the panel geometry is lost. The errors of each method were overcome with the post-processing step, in which distorted predictions were eliminated and the location of undetected panels was inferred by taking prior information into account.
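The idea behind the post-processing step (select detections by panel area and rotation angle, then infer undetected panels from the selected ones) can be sketched as follows. This is a deliberately simplified, one-dimensional stand-in and not the authors' implementation: the dictionary fields, the tolerances `area_tol` and `angle_tol`, and the fixed `pitch` between panel centers are all assumptions made for illustration.

```python
from statistics import median


def select_consistent(detections, area_tol=0.2, angle_tol=5.0):
    """Keep detections whose area and rotation angle are close to the median.

    area_tol is a relative tolerance, angle_tol is in degrees (both assumed).
    """
    med_area = median(d["area"] for d in detections)
    med_angle = median(d["angle"] for d in detections)
    return [
        d for d in detections
        if abs(d["area"] - med_area) <= area_tol * med_area
        and abs(d["angle"] - med_angle) <= angle_tol
    ]


def infer_missing_centers(xs, pitch):
    """Fill the gaps in one row of panel centers spaced by a known pitch."""
    xs = sorted(xs)
    inferred = []
    for left, right in zip(xs, xs[1:]):
        n_missing = round((right - left) / pitch) - 1
        inferred.extend(left + pitch * k for k in range(1, n_missing + 1))
    return inferred


# Hypothetical detections along one panel row (pixels, pixels^2, degrees).
detections = [
    {"x": 10, "area": 400, "angle": 2.0},
    {"x": 30, "area": 410, "angle": 1.5},
    {"x": 50, "area": 700, "angle": 15.0},  # distorted detection
    {"x": 90, "area": 395, "angle": 2.5},
    {"x": 110, "area": 405, "angle": 2.0},
]

kept = select_consistent(detections)
inferred = infer_missing_centers([d["x"] for d in kept], pitch=20)
print(len(kept), inferred)
```

In this toy row the distorted detection is discarded and two panel positions are inferred between the remaining neighbors. The method described in Section 2.2.4 works on two-dimensional contours, so a faithful implementation would extrapolate full contours rather than centers on a line.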

The precision, recall and score values in Table 2 and Table 3 after the post-processing step are all at least 0.97, which implies that the panel detection methods are reliable; this is confirmed by the precision being at least 0.996 for both methods. Finally, the score is similar for both methods, at approximately 0.98.
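As a consistency check, the score can be recomputed from the reported precision and recall. The sketch below assumes that the score is the F1 score, that is, the harmonic mean of precision and recall; the function name `f1_score` is chosen here for illustration only.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (assumed definition of the score)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall after post-processing, as reported for each method.
reported = {
    "classical": (0.997, 0.970),
    "deep_learning": (0.996, 0.981),
}

scores = {name: f1_score(p, r) for name, (p, r) in reported.items()}
for name, value in scores.items():
    print(f"{name}: {value:.3f}")
```

Under this assumption the recomputed values are about 0.983 for the classical method and about 0.988 for the deep learning method. The small difference from the reported 0.989 is plausibly due to the precision and recall being rounded to three decimals before the recomputation.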

However, the reduction of false negatives achieved by the post-processing step is greater in the case of deep learning, because its detections have a better geometry. In the new method based on classical techniques, the greatest effect of post-processing was the reduction of false positives. Therefore, the deep learning method with the post-processing step has a greater potential for increasing the number of panels detected in environments with a larger number of anomalies.

5. Conclusions

The identification of solar panels is difficult with complex backgrounds, especially when there are power lines parallel to the panel edges or shadows of weeds on the panel edges. Nevertheless, the proposed panel detection methods achieve a high precision in these circumstances.

Two panel detection methods were evaluated on 100 thermal images from 11 drone flights at three solar plants. The first method involved image correction, image segmentation, classification of the segments using a support vector machine trained with an optimized vector of texture descriptors, and a post-processing step. In the experiments, this method obtained a precision of 0.997, a recall of 0.970 and a score of 0.983. The second method is based on deep learning and a post-processing step. For this method, five groups of data were defined, each using 80 images for training and 20 for testing. When the test results of all groups were added up, the method obtained a precision of 0.996, a recall of 0.981 and a score of 0.989.
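The evaluation over five groups can be sketched as a five-fold split whose per-group counts are pooled before the metrics are computed. This is a minimal sketch under two assumptions: that each image appears in exactly one test group, and that the score is the F1 score. The per-group counts are hypothetical and are not the values of Table 3.

```python
def five_fold_splits(n_items=100, n_folds=5):
    """Yield (train, test) index lists; each item is tested exactly once."""
    indices = list(range(n_items))
    fold_size = n_items // n_folds
    for k in range(n_folds):
        test = indices[k * fold_size:(k + 1) * fold_size]
        train = indices[:k * fold_size] + indices[(k + 1) * fold_size:]
        yield train, test


def pooled_metrics(fold_counts):
    """Sum TP, FP and FN over all groups, then compute the metrics once."""
    tp = sum(c["tp"] for c in fold_counts)
    fp = sum(c["fp"] for c in fold_counts)
    fn = sum(c["fn"] for c in fold_counts)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


splits = list(five_fold_splits())

# Hypothetical per-group test counts (NOT the paper's data).
fold_counts = [
    {"tp": 190, "fp": 1, "fn": 4},
    {"tp": 200, "fp": 0, "fn": 5},
    {"tp": 195, "fp": 1, "fn": 3},
    {"tp": 205, "fp": 1, "fn": 4},
    {"tp": 210, "fp": 1, "fn": 4},
]
precision, recall, f1 = pooled_metrics(fold_counts)
print(f"precision={precision:.3f} recall={recall:.3f} score={f1:.3f}")
```

Pooling the counts before computing the metrics weights every panel equally, whereas averaging per-group metrics would weight every group equally; the text above describes adding up the test data, which corresponds to the pooled variant.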

Comparing the false negatives of the two methods, the post-processing step was more effective for the deep learning method, where it reduced them by more than 60%, which demonstrates that this step improves panel detection. This paper demonstrates that both panel detection methods, combined with a post-processing step, are effective in complex backgrounds.

Future work involves the correction of the lens distortions present in the thermal images, the use of different methods for the projection of panels to locate the panels that have not been detected, the optimization of the method for use in orthomosaics, the incorporation of a layer of geographic information for the location of power lines, and the combination of the panel detection with algorithms for the detection of panel failures with their correct classification.

Author Contributions

Conceptualization, J.J.V.D., M.V., D.L., S.A.O.V. and H.L.; methodology, J.J.V.D., M.V. and H.L.; software, J.J.V.D. and M.V.; validation, J.J.V.D. and M.V.; formal analysis, J.J.V.D., M.V. and H.L.; investigation, J.J.V.D. and M.V.; resources, D.L. and H.L.; writing–original draft preparation, J.J.V.D.; writing–review and editing, M.V. and H.L.; visualization, J.J.V.D.; supervision, S.A.O.V. and H.L.; project administration, H.L.; funding acquisition, H.L. All authors have read and agreed to the published version of the manuscript.

Funding

The project is co-financed by imec and received project support from Flanders Innovation & Entrepreneurship (project nr. HBC.2019.0050). The work presented in this paper has also been made possible through the COLCIENCIAS scholarship granted by the CEDAGRITOL research group. Finally, the work presented in this paper builds upon the expertise built up in the COMP4DRONES project. This project has received funding from the ECSEL Joint Undertaking (JU) under grant agreement No 826610.

Acknowledgments

This work was executed within the imec.icon project ANALYST-PV, a research project bringing together academic researchers and industry partners. The ANALYST-PV consortium aims at developing a fault diagnosis framework for PV power plants that relies on Internet of Things (IoT) sensors, AI-enabled root cause analysis and automatic image analysis.

Conflicts of Interest

The authors declare no conflict of interest.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).