Mapping and Discriminating Rural Settlements Using Gaofen-2 Images and a Fully Convolutional Network

Abstract

1. Introduction

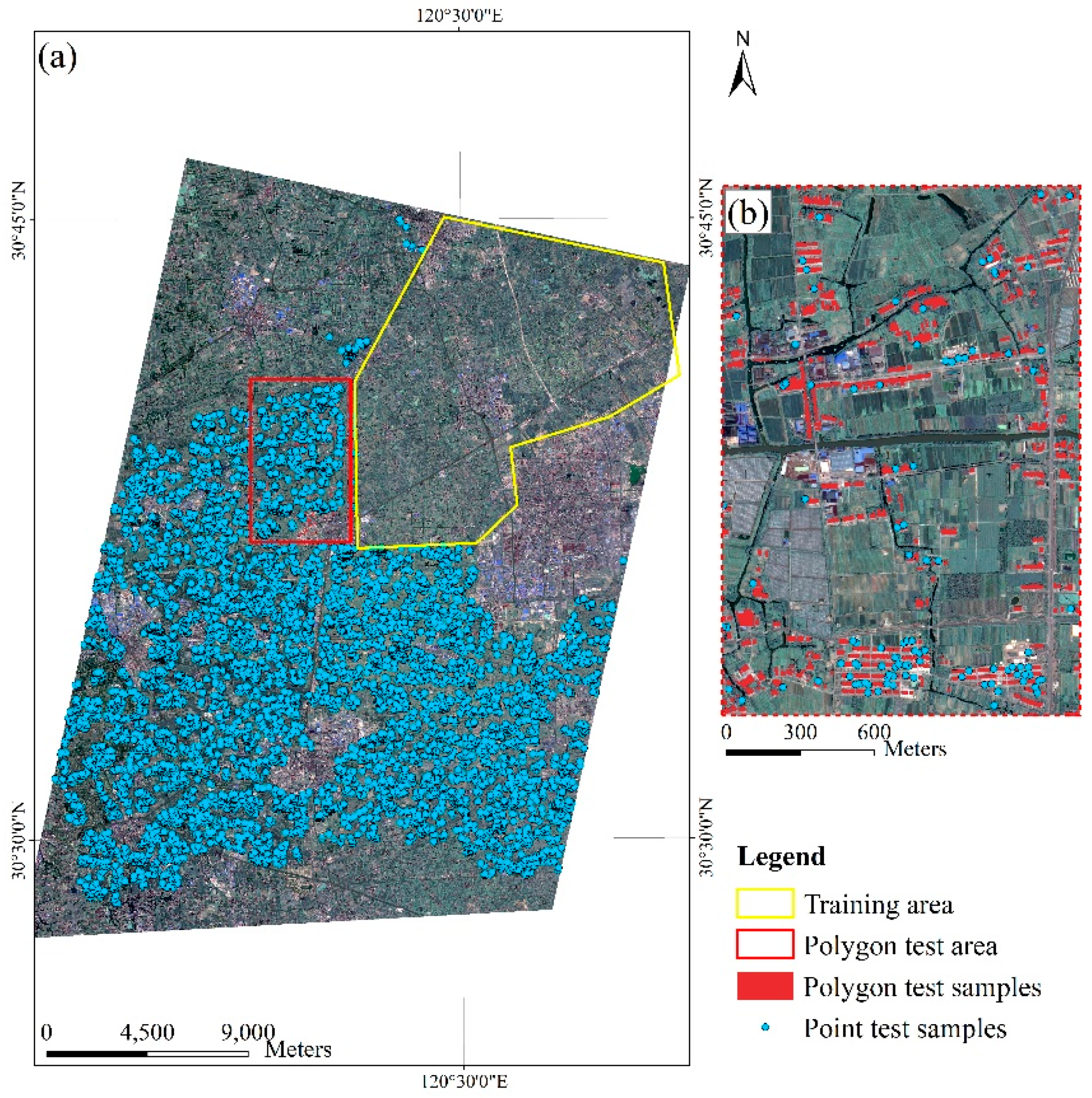

2. Study Area and Data

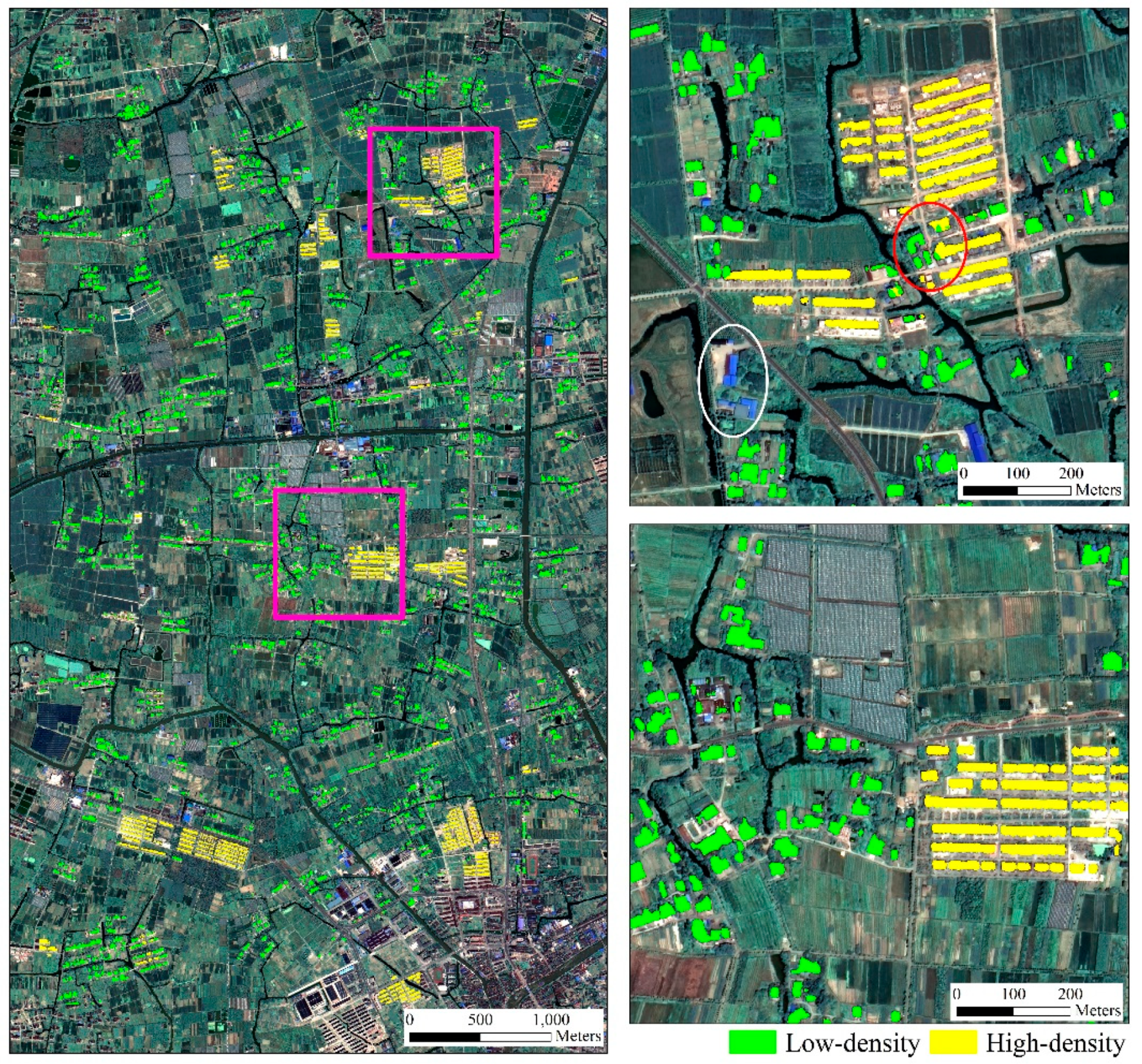

- Low-density settlements (LDS): most LDS are old-style rural settlements that are scattered, irregularly arranged, and variably oriented. They are mainly located close to rivers and streams, which supports smallholder farming and transportation. Their boundaries are often obscured by the surrounding vegetation.

- High-density settlements (HDS): newly built residential areas where multi-story buildings accommodate several families. These settlements have a higher building density than LDS, and the buildings within them are evenly spaced and have uniform surfaces. HDS are mainly located adjacent to newly built roads, providing easy access to nearby towns.

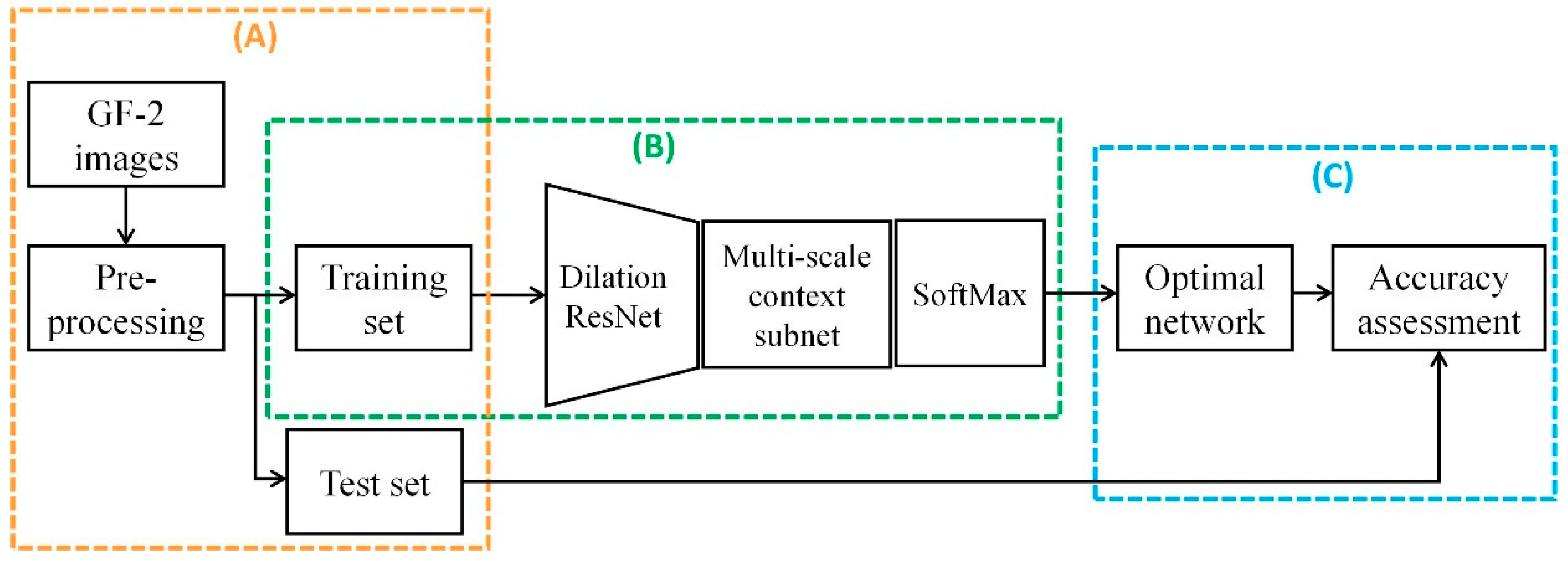

3. Methods

3.1. Data Preprocessing

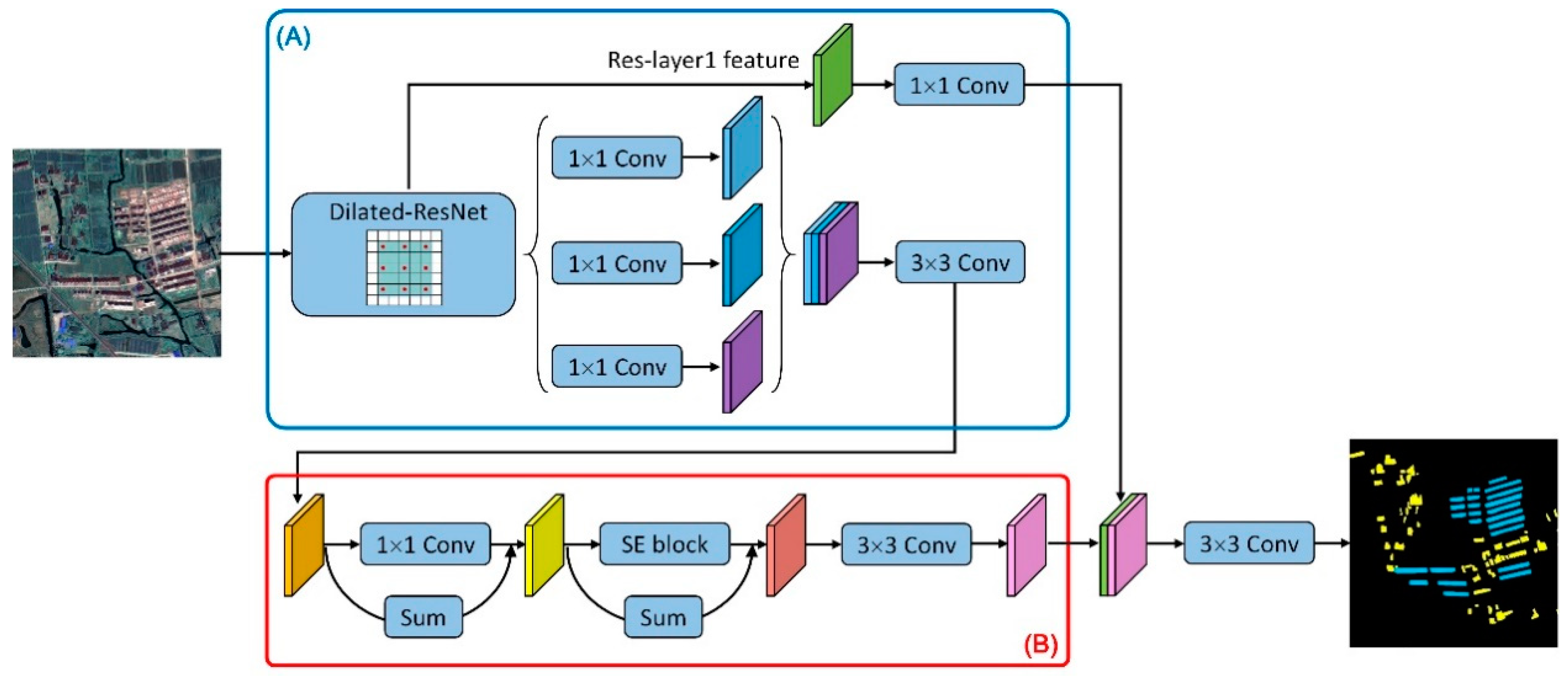

3.2. Rural Settlement Detection Using FCN

3.2.1. Dilated Residual Convolutional Network

3.2.2. Multi-Scale Context Subnetwork

3.2.3. Multi-Spectral Images-Based Transfer Learning

3.3. Method Implementation and Accuracy Assessment

4. Results and Discussions

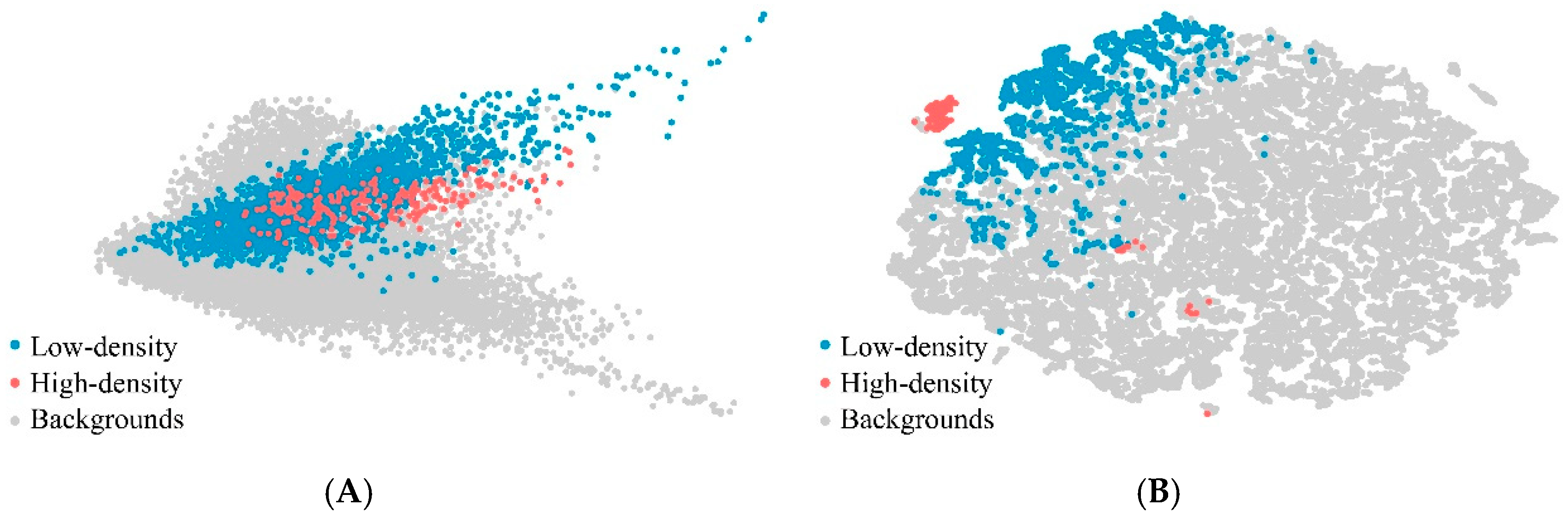

4.1. Rural Settlements Identification

4.2. Ablation Experiments of Model

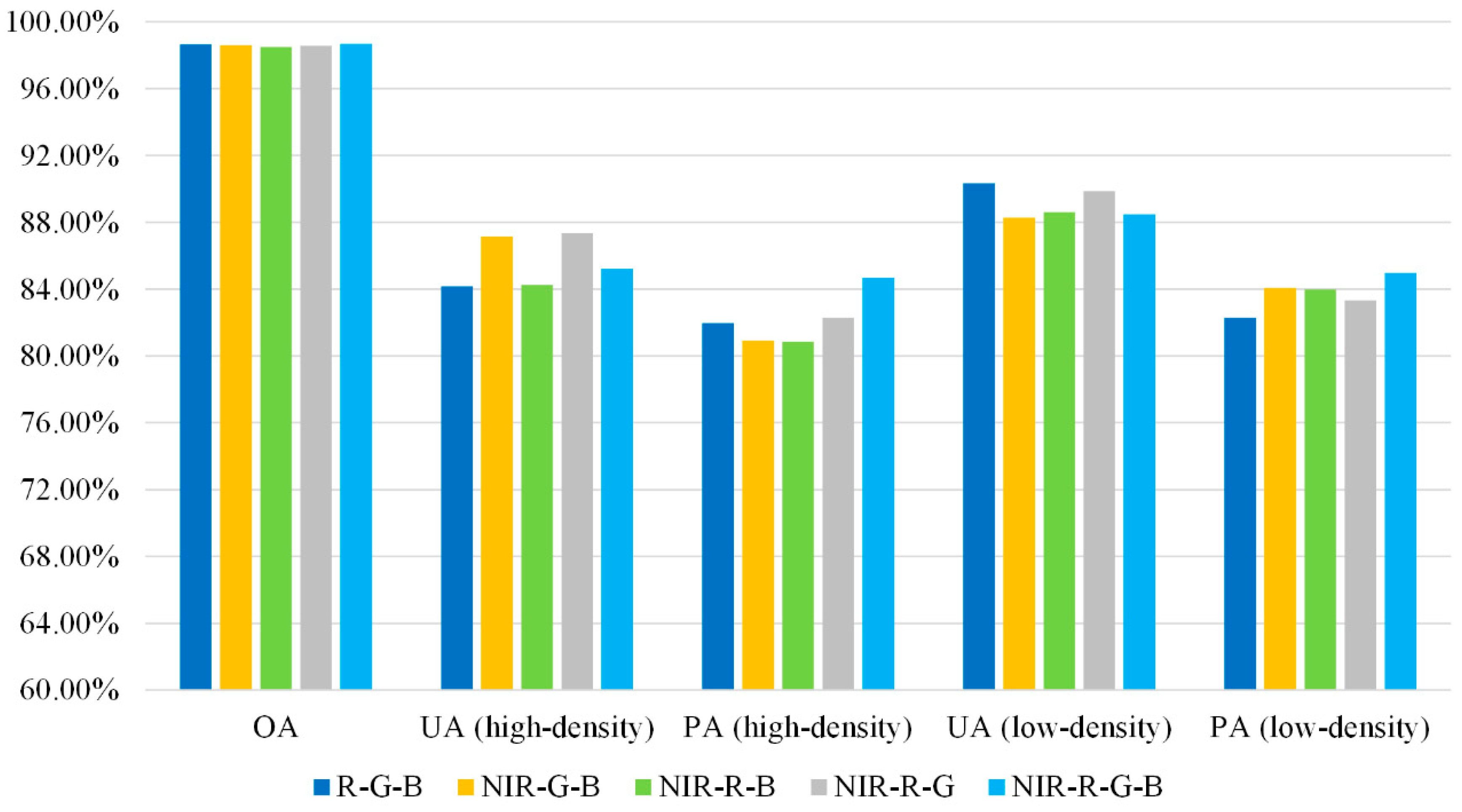

4.3. Data Input Strategies

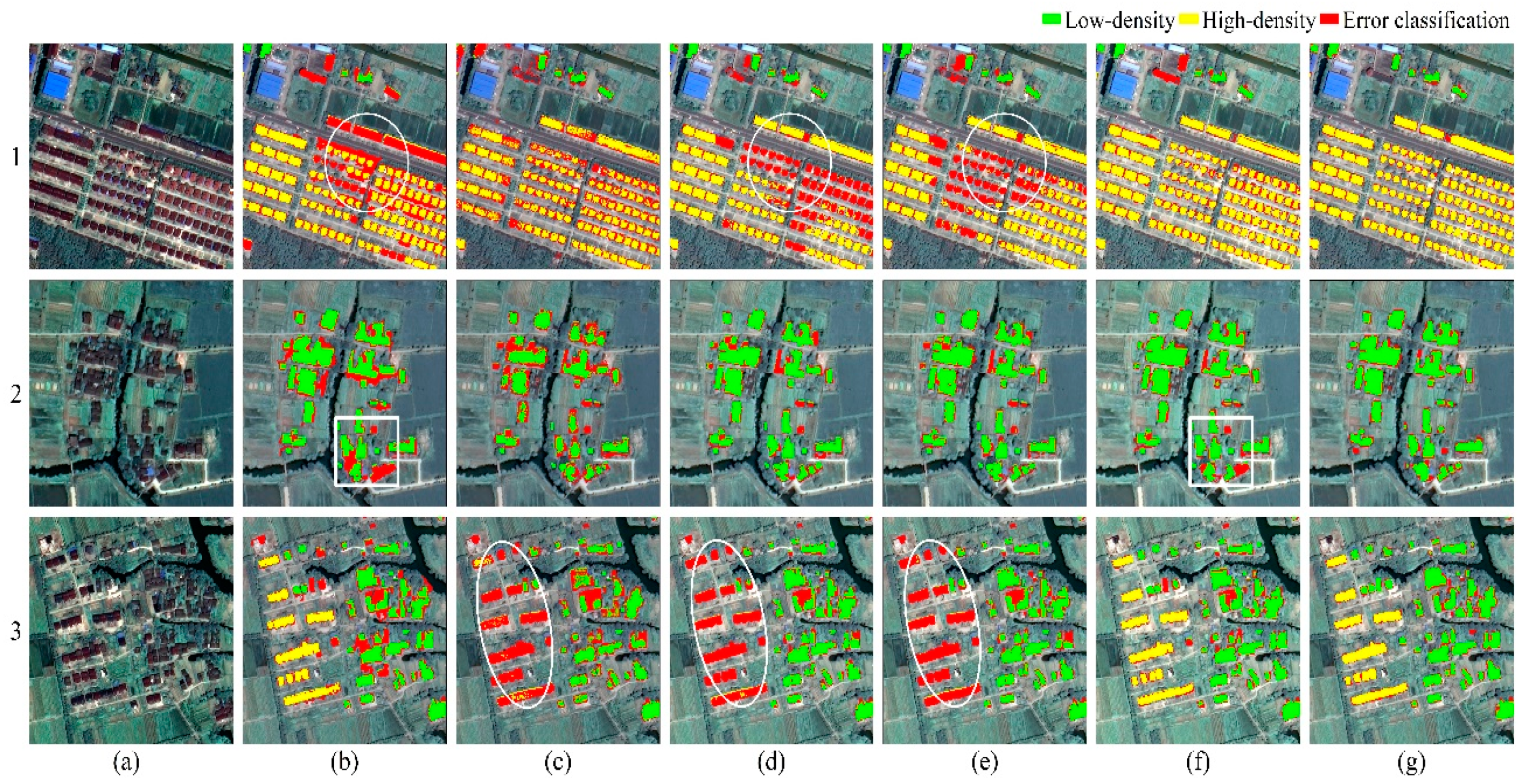

4.4. Comparative Studies with Different Methods

- OBIA [12]: an object-based image classification method that integrates hierarchical multi-scale segmentation and landscape analysis. It uses spatial contextual information to subdivide rural settlements into different types with high accuracy.

- FCN [25]: a fully convolutional network comprising an encoder based on the VGG-16 network and a decoder consisting of three stacked deconvolution layers. As far as we know, it was the first deep learning FCN model used for rural residential area extraction.

- SegNet [43]: an encoder-decoder architecture that uses the pooling indices from the encoder to perform upsampling in the decoder. It is a classic and efficient model that is often used as a baseline for semantic segmentation. Persello et al. [44] successfully delineated agricultural fields in smallholder farms from satellite images using SegNet.

- DeeplabV3+ [20]: a state-of-the-art semantic segmentation model combining a spatial pyramid pooling module with an encoder-decoder structure. It has achieved a mean intersection-over-union (mIoU) of 89% on the PASCAL VOC 2012 semantic segmentation dataset.
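
The pooling-index upsampling mentioned for SegNet can be illustrated with a small sketch. This is a toy, single-channel example in plain Python rather than the SegNet implementation itself; the function names `max_pool_with_indices` and `max_unpool` and the sample feature map are assumptions made for illustration. In the actual network, the sparse upsampled map would then be densified by trainable convolution layers.

```python
def max_pool_with_indices(x, k=2):
    """Non-overlapping k x k max pooling on a 2D list.

    Returns the pooled map and, for each pooled cell, the (row, col)
    position of the maximum in the input (the "pooling index").
    """
    h, w = len(x), len(x[0])
    pooled, indices = [], []
    for i in range(0, h, k):
        pooled_row, index_row = [], []
        for j in range(0, w, k):
            window = [
                (x[r][c], (r, c))
                for r in range(i, min(i + k, h))
                for c in range(j, min(j + k, w))
            ]
            value, position = max(window, key=lambda item: item[0])
            pooled_row.append(value)
            index_row.append(position)
        pooled.append(pooled_row)
        indices.append(index_row)
    return pooled, indices


def max_unpool(pooled, indices, shape):
    """Place each pooled value back at its recorded position; fill the rest with 0."""
    h, w = shape
    out = [[0] * w for _ in range(h)]
    for pooled_row, index_row in zip(pooled, indices):
        for value, (r, c) in zip(pooled_row, index_row):
            out[r][c] = value
    return out


# Hypothetical 4 x 4 feature map (illustrative values only).
feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 7, 2],
    [6, 2, 3, 1],
]

pooled, indices = max_pool_with_indices(feature_map)
restored = max_unpool(pooled, indices, (4, 4))

for row in restored:
    print(row)
```

Because the decoder reuses the stored positions instead of learning an upsampling kernel, the maxima return to their original locations, which helps preserve boundary detail at a low memory cost.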

4.5. Analysis and Potential Improvements

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Long, H.; Liu, Y.; Wu, X.; Dong, G. Spatio-temporal dynamic patterns of farmland and rural settlements in Su–Xi–Chang region: Implications for building a new countryside in coastal China. Land Use Policy 2009, 26, 322–333.

2. Shan, Z.; Feng, C. The Redundancy of Residential Land in Rural China: The evolution process, current status and policy implications. Land Use Policy 2018, 74, 179–186.

3. Kit, O.; Ludeke, M.K.B.; Reckien, D. Texture-based identification of urban slums in Hyderabad, India using remote sensing data. Appl. Geogr. 2012, 32, 660–667.

4. Conrad, C.; Rudloff, M.; Abdullaev, I.; Thiel, M.; Löw, F.; Lamers, J. Measuring rural settlement expansion in Uzbekistan using remote sensing to support spatial planning. Appl. Geogr. 2015, 62, 29–43.

5. Yang, C.; Wang, X.; Huang, H. Comparison of Extracting Rural Residential Area from Satellite Images with Multiresolution. In Proceedings of the 2008 IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2008), Boston, MA, USA, 8–11 July 2008.

6. Weiss, M.; Jacob, F.; Duveiller, G. Remote sensing for agricultural applications: A meta-review. Remote Sens. Environ. 2020, 236, 111402.

7. Blaschke, T.; Hay, G.J.; Kelly, M.; Lang, S.; Hofmann, P.; Addink, E.; Feitosa, R.Q.; Van Der Meer, F.; Van Der Werff, H.; Van Coillie, F.; et al. Geographic Object-Based Image Analysis—Towards a new paradigm. ISPRS J. Photogramm. Remote Sens. 2014, 87, 180–191.

8. Zhao, W.; Du, S.; Emery, W.J. Object-Based Convolutional Neural Network for High-Resolution Imagery Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 3386–3396.

9. Persello, C.; Stein, A. Deep Fully Convolutional Networks for the Detection of Informal Settlements in VHR Images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2325–2329.

10. Hoffman-Hall, A.; Loboda, T.V.; Hall, J.V.; Carroll, M.L.; Chen, D. Mapping remote rural settlements at 30 m spatial resolution using geospatial data-fusion. Remote Sens. Environ. 2019, 233, 111386.

11. Zhang, B.; Liu, Y.; Zhang, Z.; Shen, Y. Land use and land cover classification for rural residential areas in China using soft-probability cascading of multifeatures. J. Appl. Remote Sens. 2017, 11, 1.

12. Zheng, X.; Wu, B.; Weston, M.; Zhang, J.; Gan, M.; Zhu, J.; Deng, J.; Wang, K.; Teng, L. Rural Settlement Subdivision by Using Landscape Metrics as Spatial Contextual Information. Remote Sens. 2017, 9, 486.

13. Zheng, X.; Wang, Y.; Gan, M.; Zhang, J.; Teng, L.; Wang, K.; Shen, Z.; Zhang, L. Discrimination of Settlement and Industrial Area Using Landscape Metrics in Rural Region. Remote Sens. 2016, 8, 845.

14. Ma, L.; Liu, Y.; Zhang, X.; Ye, Y.; Yin, G.; Johnson, B.A. Deep learning in remote sensing applications: A meta-analysis and review. ISPRS J. Photogramm. Remote Sens. 2019, 152, 166–177.

15. Volpi, M.; Tuia, D. Dense Semantic Labeling of Subdecimeter Resolution Images with Convolutional Neural Networks. IEEE Trans. Geosci. Remote Sens. 2016, 55, 881–893.

16. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

17. Liu, Y.; Fan, B.; Wang, L.; Bai, J.; Xiang, S.; Pan, C. Semantic labeling in very high resolution images via a self-cascaded convolutional neural network. ISPRS J. Photogramm. Remote Sens. 2018, 145, 78–95.

18. Wang, H.; Wang, Y.; Zhang, Q.; Xiang, S.; Pan, C. Gated Convolutional Neural Network for Semantic Segmentation in High-Resolution Images. Remote Sens. 2017, 9, 446.

19. Wu, G.; Shao, X.; Guo, Z.; Chen, Q.; Yuan, W.; Shi, X.; Xu, Y.; Shibasaki, R. Automatic Building Segmentation of Aerial Imagery Using Multi-Constraint Fully Convolutional Networks. Remote Sens. 2018, 10, 407.

20. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. Available online: https://arxiv.org/abs/1802.02611 (accessed on 25 October 2020).

21. Ye, Z.; Fu, Y.; Gan, M.; Deng, J.; Comber, A.; Wang, K. Building Extraction from Very High Resolution Aerial Imagery Using Joint Attention Deep Neural Network. Remote Sens. 2019, 11, 2970.

22. Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.-S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

23. Qiu, C.; Schmitt, M.; Geiß, C.; Chen, T.-H.K.; Zhu, X.X. A framework for large-scale mapping of human settlement extent from Sentinel-2 images via fully convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2020, 163, 152–170.

24. Gevaert, C.M.; Persello, C.; Sliuzas, R.; Vosselman, G. Monitoring household upgrading in unplanned settlements with unmanned aerial vehicles. Int. J. Appl. Earth Obs. Geoinform. 2020, 90, 102117.

25. Lu, C.; Yang, X.; Wang, Z.; Liu, Y. Extracting Rural Residential Areas from High-Resolution Remote Sensing Images in the Coastal Area of Shandong, China Based on Fast Acquisition of Training Samples and Fully Convoluted Network. In Proceedings of the 2018 10th IAPR Workshop on Pattern Recognition in Remote Sensing (PRRS), Beijing, China, 19–20 August 2018; pp. 1–4.

26. Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 2011–2023.

27. Process for Enhancing the Spatial Resolution of Multispectral Imagery Using Pan-Sharpening. Available online: https://www.lens.org/lens/patent/US_6011875_A?locale=es (accessed on 25 October 2020).

28. Deep Residual Learning for Image Recognition. Available online: https://arxiv.org/abs/1512.03385 (accessed on 25 October 2020).

29. Yu, F.; Koltun, V.; Funkhouser, T. Dilated Residual Networks. Available online: https://arxiv.org/abs/1705.09914 (accessed on 25 October 2020).

30. Multi-Scale Context Aggregation by Dilated Convolutions. Available online: https://arxiv.org/abs/1511.07122 (accessed on 25 October 2020).

31. Razavian, A.S.; Azizpour, H.; Sullivan, J.; Carlsson, S. CNN Features Off-the-Shelf: An Astounding Baseline for Recognition. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 23–28 June 2014; pp. 512–519.

32. Marmanis, D.; Datcu, M.; Esch, T.; Stilla, U. Deep Learning Earth Observation Classification Using ImageNet Pretrained Networks. IEEE Geosci. Remote Sens. Lett. 2015, 13, 105–109.

33. Zhao, B.; Huang, B.; Zhong, Y. Transfer Learning With Fully Pretrained Deep Convolution Networks for Land-Use Classification. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1436–1440.

34. Marmanis, D.; Schindler, K.; Wegner, J.D.; Galliani, S.; Datcu, M.; Stilla, U. Classification with an edge: Improving semantic image segmentation with boundary detection. ISPRS J. Photogramm. Remote Sens. 2018, 135, 158–172.

35. Huang, J.; Zhang, X.; Xin, Q.; Sun, Y.; Zhang, P. Automatic building extraction from high-resolution aerial images and LiDAR data using gated residual refinement network. ISPRS J. Photogramm. Remote Sens. 2019, 151, 91–105.

36. Adam: A Method for Stochastic Optimization. Available online: https://arxiv.org/abs/1412.6980 (accessed on 25 October 2020).

37. Create Random Points. Available online: https://desktop.arcgis.com/en/arcmap/10.3/tools/data-management-toolbox/create-random-points.htm (accessed on 4 May 2020).

38. Congalton, R.G. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1991, 37, 35–46.

39. Bishop, Y.M.M.; Fienberg, S.E.; Holland, P.W. Discrete Multivariate Analysis; Springer: New York, NY, USA, 2007.

40. Maaten, L.V.D.; Hinton, G. Visualizing Data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

41. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention (MICCAI 2015), Munich, Germany, 5–9 October 2015; pp. 234–241.

42. Flood, N.; Watson, F.; Collett, L. Using a U-net convolutional neural network to map woody vegetation extent from high resolution satellite imagery across Queensland, Australia. Int. J. Appl. Earth Obs. Geoinform. 2019, 82, 101897.

43. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

44. Persello, C.; Tolpekin, V.; Bergado, J.; De By, R. Delineation of agricultural fields in smallholder farms from satellite images using fully convolutional networks and combinatorial grouping. Remote Sens. Environ. 2019, 231, 111253.

45. Zhang, L.; Zhang, L.; Du, B. Deep Learning for Remote Sensing Data: A Technical Tutorial on the State of the Art. IEEE Geosci. Remote Sens. Mag. 2016, 4, 22–40.

| | LDS | HDS | Backgrounds | Sum |
|---|---|---|---|---|
| Point-based testing samples | 6125 | 2616 | 2887 | 11,628 |
| Polygon-based testing samples | 1831 | 438 | / | 2269 |

| Ground truth | Predicted LDS | Predicted HDS | Predicted Backgrounds | Sum |
|---|---|---|---|---|
| LDS | 5997 | 3 | 125 | 6125 |
| HDS | 4 | 2551 | 61 | 2616 |
| Backgrounds | 4 | 0 | 2883 | 2887 |
| Sum | 6005 | 2554 | 3069 | 11,628 |
| UA | 99.87% | 99.88% | 93.94% | |
| PA | 97.91% | 97.52% | 99.86% | |
| OA | 98.31% | | | |
| Kappa | 0.9724 | | | |
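
The accuracy measures in the confusion matrix above (UA, PA, OA, and Kappa) follow directly from the cell counts. The sketch below recomputes them in plain Python using the standard definitions; it assumes rows are ground-truth classes and columns are predicted classes, and the function name `accuracy_metrics` is a hypothetical helper, not taken from the authors' code.

```python
def accuracy_metrics(matrix):
    """Compute UA, PA, OA, and Cohen's Kappa from a square confusion matrix.

    matrix[i][j] is the number of samples whose ground-truth class is i
    and whose predicted class is j.
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_sums = [sum(row) for row in matrix]                        # ground-truth totals
    col_sums = [sum(row[j] for row in matrix) for j in range(n)]   # predicted totals
    diagonal = [matrix[i][i] for i in range(n)]                    # correct samples

    ua = [diagonal[j] / col_sums[j] for j in range(n)]  # user's accuracy
    pa = [diagonal[i] / row_sums[i] for i in range(n)]  # producer's accuracy
    oa = sum(diagonal) / total                          # overall accuracy

    # Agreement expected by chance, from the marginal totals.
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (oa - expected) / (1 - expected)
    return ua, pa, oa, kappa


classes = ["LDS", "HDS", "Backgrounds"]
# Cell counts copied from the confusion matrix above.
point_based = [
    [5997, 3, 125],
    [4, 2551, 61],
    [4, 0, 2883],
]

ua, pa, oa, kappa = accuracy_metrics(point_based)
for name, u, p in zip(classes, ua, pa):
    print(f"{name}: UA={u:.2%}, PA={p:.2%}")
print(f"OA={oa:.2%}, Kappa={kappa:.4f}")
```

Run on these counts, the sketch reproduces the reported OA of 98.31% and Kappa of 0.9724, along with the per-class UA and PA values in the table.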

| Ground truth | Predicted LDS | Predicted HDS | Predicted Backgrounds | Sum |
|---|---|---|---|---|
| LDS | 720,551 | 9228 | 118,198 | 847,977 |
| HDS | 2673 | 349,060 | 60,476 | 412,209 |
| Backgrounds | 95,539 | 51,323 | 24,231,862 | 24,378,724 |
| Sum | 818,763 | 409,611 | 24,410,536 | 25,638,910 |
| UA | 88.00% | 85.22% | 99.27% | |
| PA | 84.97% | 84.68% | 99.40% | |
| OA | 98.68% | | | |
| Kappa | 0.8591 | | | |

| Model | OA | UA (LDS) | UA (HDS) | PA (LDS) | PA (HDS) | Kappa |
|---|---|---|---|---|---|---|
| Res50Seg (Baseline) | 98.36% | 82.50% | 80.45% | 83.30% | 67.75% | 0.8329 |
| +Dilation | 98.39% | 84.25% | 78.76% | 80.53% | 76.90% | 0.8363 |
| +Dilation+Multiscale | 98.53% | 87.24% | 84.88% | 81.90% | 83.19% | 0.8513 |
| +Dilation+Multiscale+SE (Ours) | 98.68% | 88.00% | 85.22% | 84.97% | 84.68% | 0.8591 |

| Method | OA | UA (LDS) | UA (HDS) | PA (LDS) | PA (HDS) | Kappa |
|---|---|---|---|---|---|---|
| OBIA | 97.54% | 75.24% | 71.44% | 72.24% | 79.95% | 0.7397 |
| FCN | 97.46% | 73.11% | 75.44% | 70.28% | 55.46% | 0.7205 |
| UNet | 98.39% | 84.58% | 77.08% | 80.32% | 66.45% | 0.8245 |
| SegNet | 98.37% | 84.06% | 78.51% | 80.20% | 68.79% | 0.8232 |
| DeeplabV3+ | 98.69% | 87.92% | 83.43% | 85.51% | 82.93% | 0.8520 |
| Ours | 98.68% | 88.00% | 85.22% | 84.97% | 84.68% | 0.8591 |

| Method | Parameters | Training Time | Inference Time |
|---|---|---|---|
| OBIA | | ~0.5 h | ~10 m |
| FCN | 12.38 million | ~3.1 h | 0 m 17 s |
| UNet | 33.40 million | ~11.8 h | 0 m 39 s |
| SegNet | 29.44 million | ~8.2 h | 0 m 31 s |
| DeeplabV3+ | 39.76 million | ~12.9 h | 0 m 32 s |
| Ours | 28.04 million | ~5.8 h | 0 m 25 s |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ye, Z.; Si, B.; Lin, Y.; Zheng, Q.; Zhou, R.; Huang, L.; Wang, K. Mapping and Discriminating Rural Settlements Using Gaofen-2 Images and a Fully Convolutional Network. Sensors 2020, 20, 6062. https://doi.org/10.3390/s20216062
