Irradiance Restoration Based Shadow Compensation Approach for High Resolution Multispectral Satellite Remote Sensing Images

Abstract

1. Introduction

2. Method

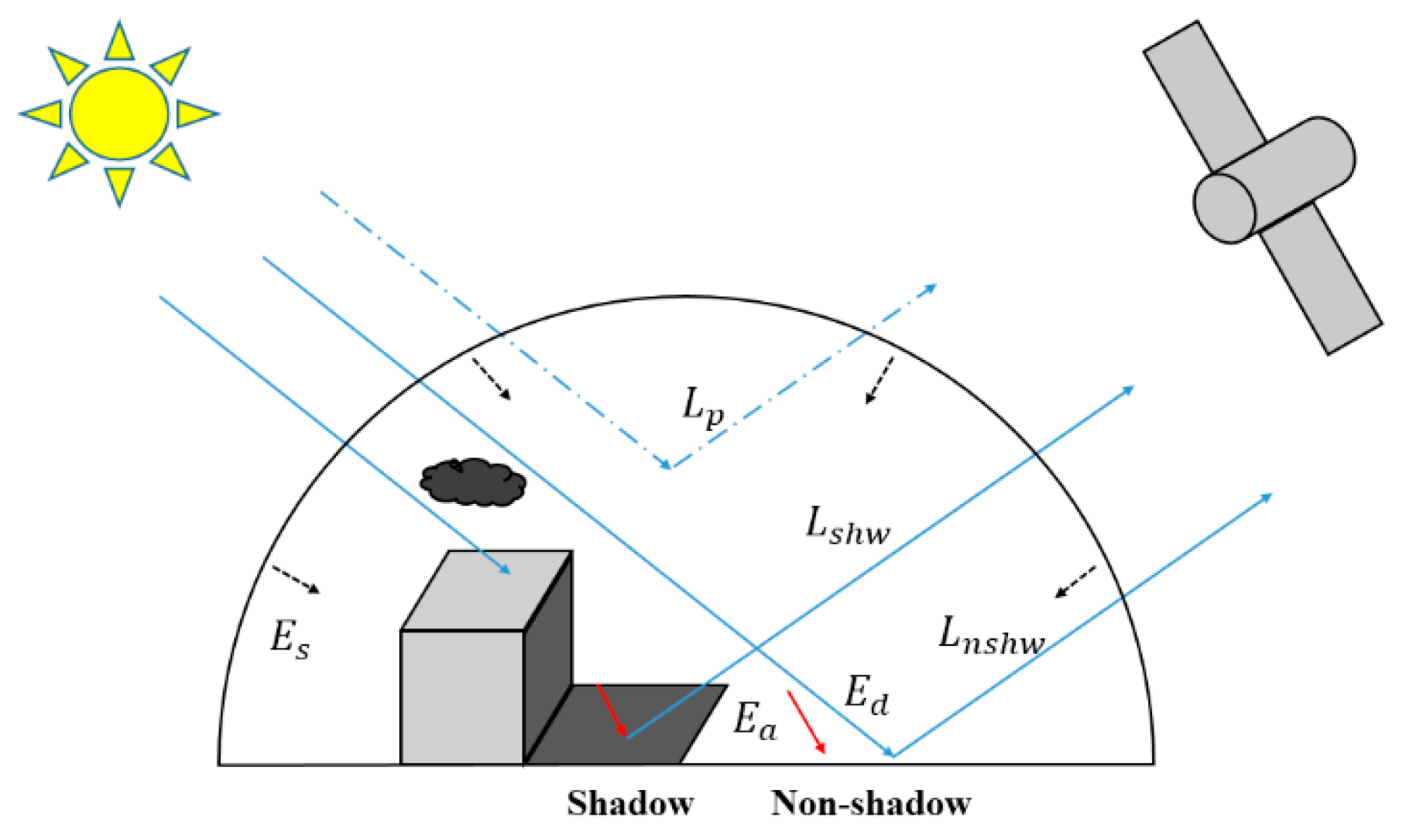

2.1. The Derivation of the Irradiance Restoration Based (IRB) Approach

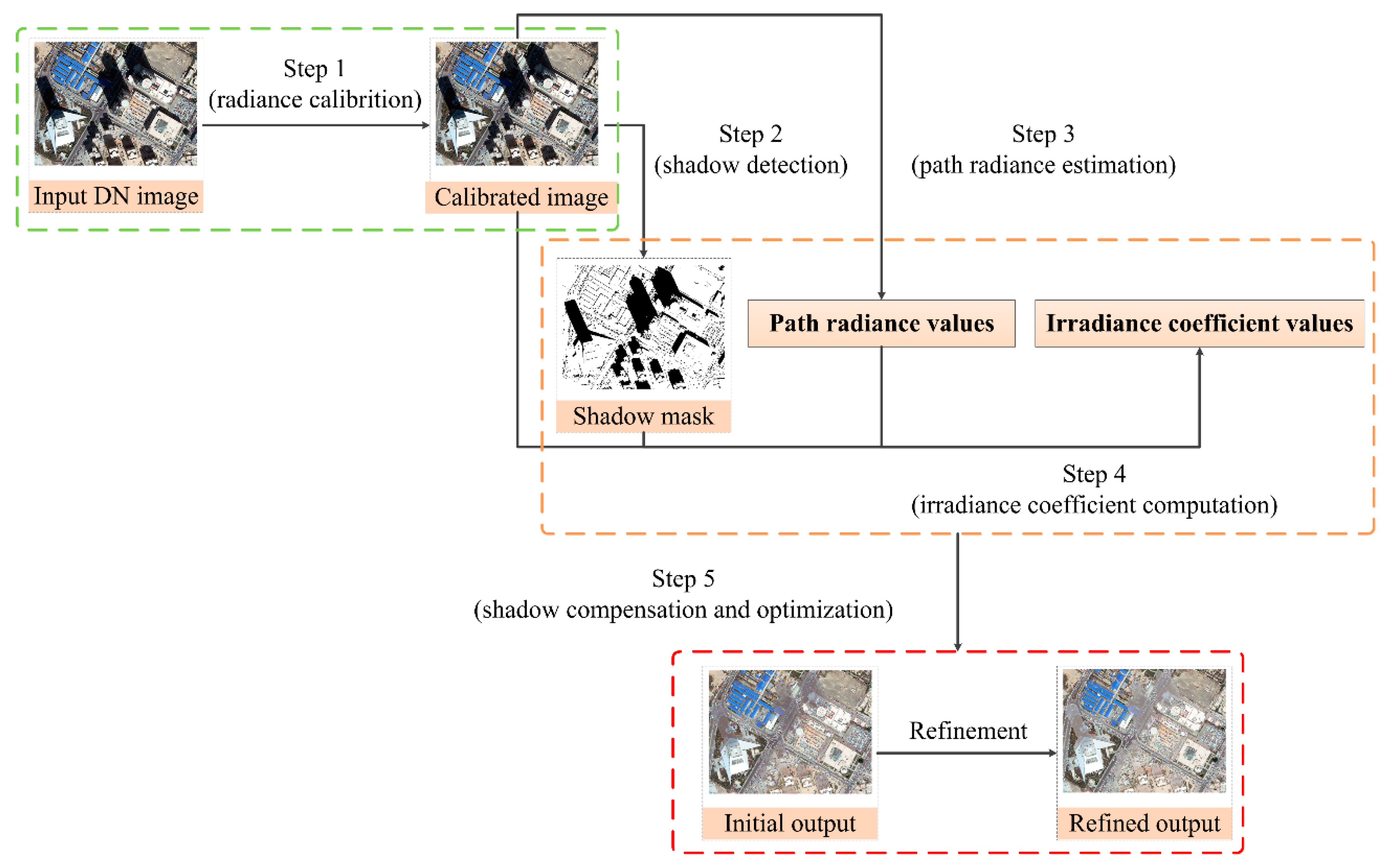

2.2. Workflow of the IRB Approach

- Step 1: Radiance calibration

- Step 2: Shadow detection

- Step 3: Path radiance estimation

- Step 4: Irradiance coefficient computation

- Step 5: Shadow compensation and optimization
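The five steps above can be sketched as a single-band pipeline. This is a minimal illustration under stated assumptions, not the formulation derived in Section 2.1: the linear calibration constants `gain` and `offset`, the brightness `threshold` used as a stand-in shadow detector, the darkest-pixel estimate of path radiance, and the ratio-of-means irradiance coefficient are all hypothetical choices made only to show how the steps chain together. It also assumes that shadowed and sunlit pixels cover similar surface types.

```python
from statistics import mean


def calibrate(dn, gain, offset):
    """Step 1: linear DN-to-radiance conversion (gain/offset are assumed constants)."""
    return [gain * v + offset for v in dn]


def detect_shadow(radiance, threshold):
    """Step 2: placeholder detector; flags pixels darker than a hypothetical threshold."""
    return [v < threshold for v in radiance]


def estimate_path_radiance(radiance):
    """Step 3: dark-object assumption; the darkest pixel is treated as pure path radiance."""
    return min(radiance)


def irradiance_coefficient(radiance, mask, path):
    """Step 4: ratio of the mean signal above path radiance in shadow to that in sunlit pixels.

    Raises an error if either group is empty or the sunlit mean signal is zero.
    """
    shadow = [v - path for v, m in zip(radiance, mask) if m]
    sunlit = [v - path for v, m in zip(radiance, mask) if not m]
    return mean(shadow) / mean(sunlit)


def compensate(radiance, mask, path, k):
    """Step 5: rescale the shadow signal above path radiance by 1/k; sunlit pixels are kept."""
    return [(v - path) / k + path if m else v for v, m in zip(radiance, mask)]


def irb_sketch(dn, gain=0.5, offset=1.0, threshold=20.0):
    """Chain the five steps for one band given as a flat list of digital numbers."""
    radiance = calibrate(dn, gain, offset)
    mask = detect_shadow(radiance, threshold)
    path = estimate_path_radiance(radiance)
    k = irradiance_coefficient(radiance, mask, path)
    return compensate(radiance, mask, path, k), mask


if __name__ == "__main__":
    compensated, shadow_mask = irb_sketch([10, 14, 18, 80, 100, 120])
    print(shadow_mask)
    print(compensated)
```

With this choice of coefficient, the mean compensated shadow radiance matches the mean sunlit radiance by construction. A multispectral image would repeat the same steps per band, with a path radiance and coefficient estimated for each band.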

3. Performance Evaluation

3.1. Test Images

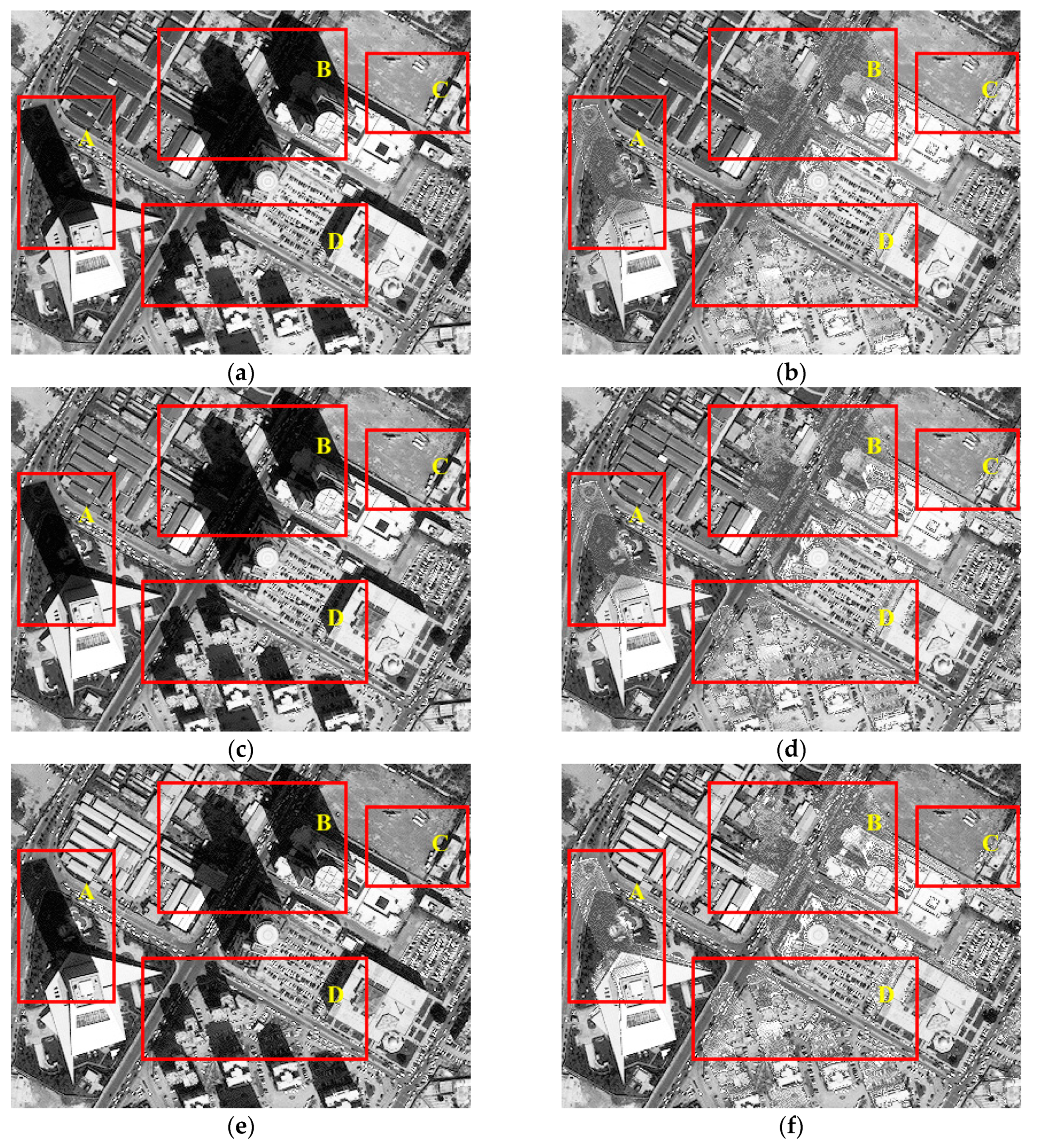

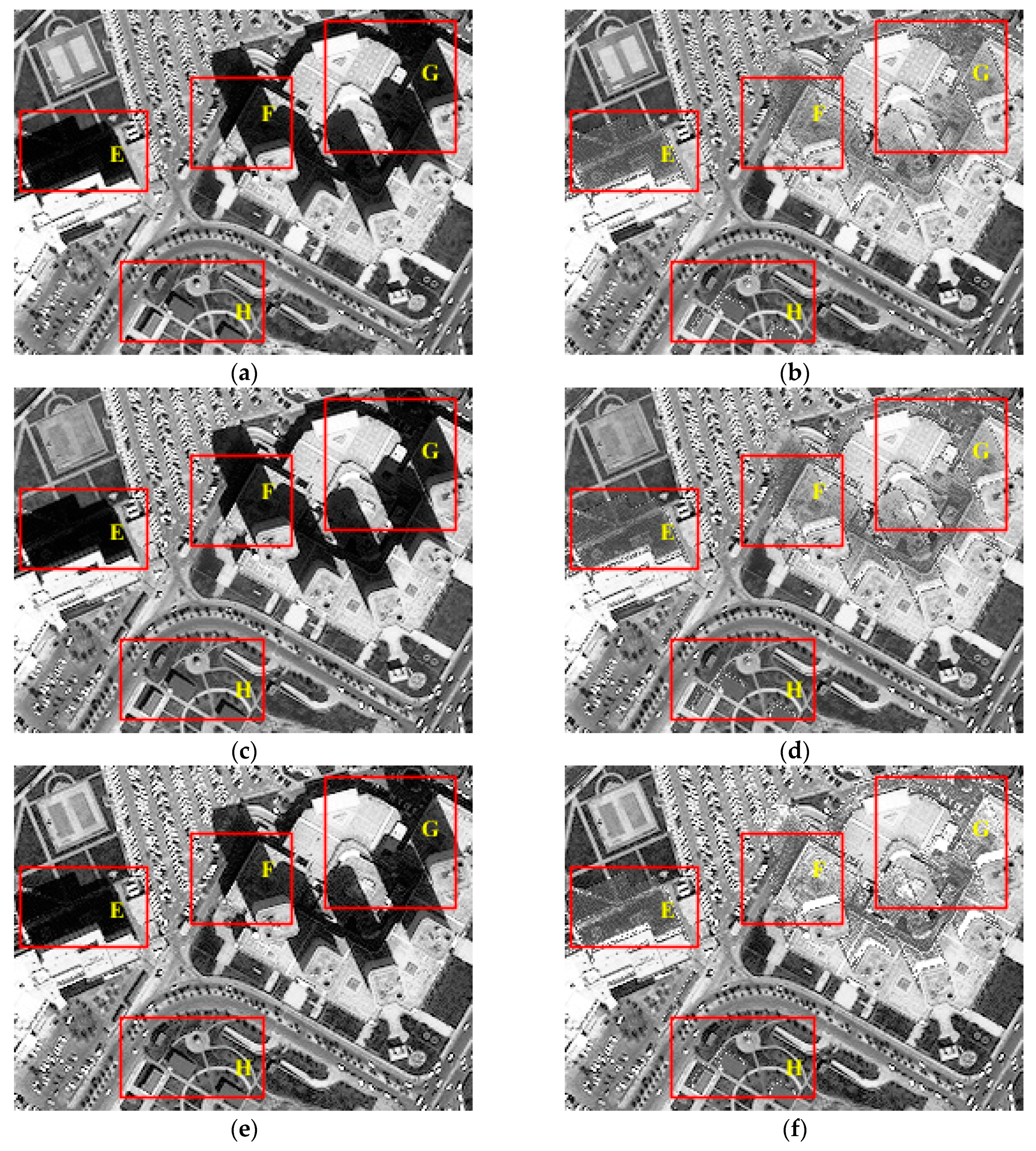

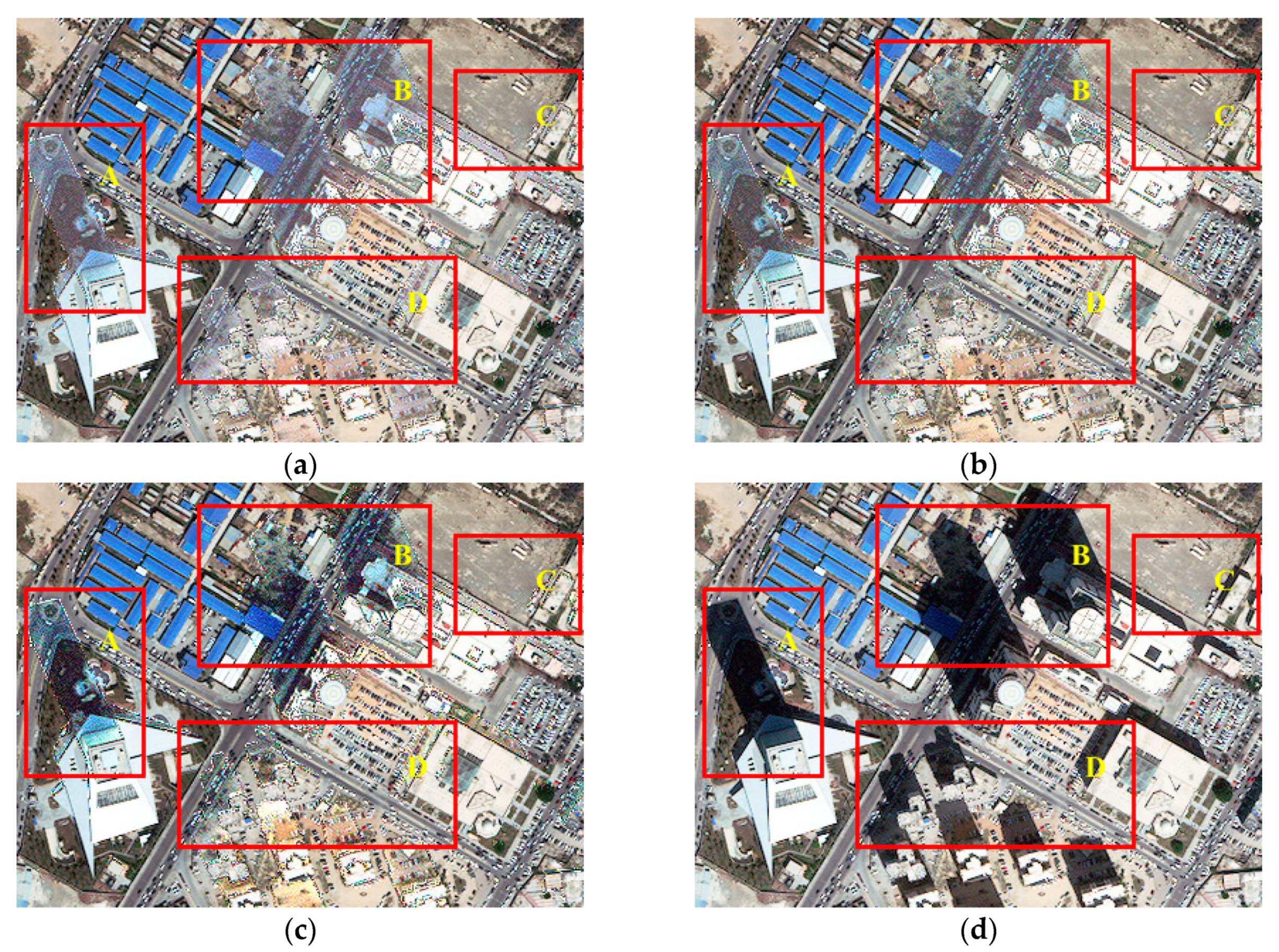

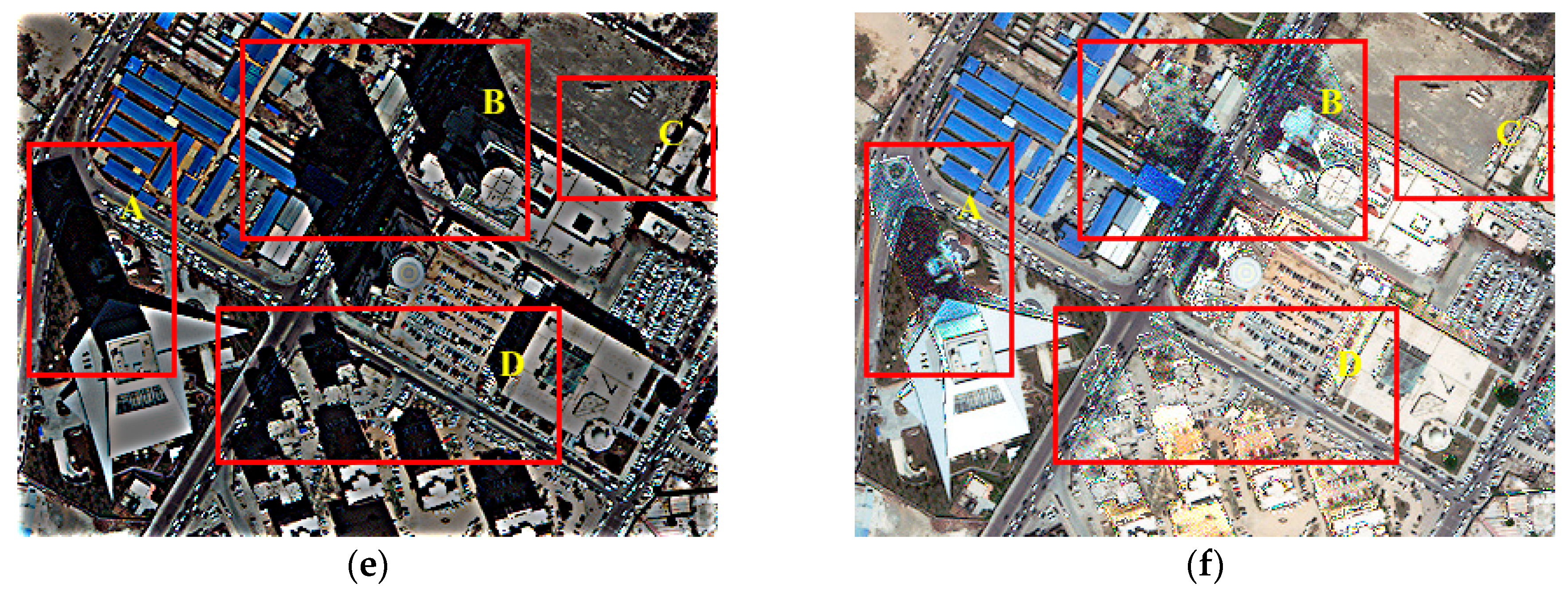

3.2. Qualitative Evaluation

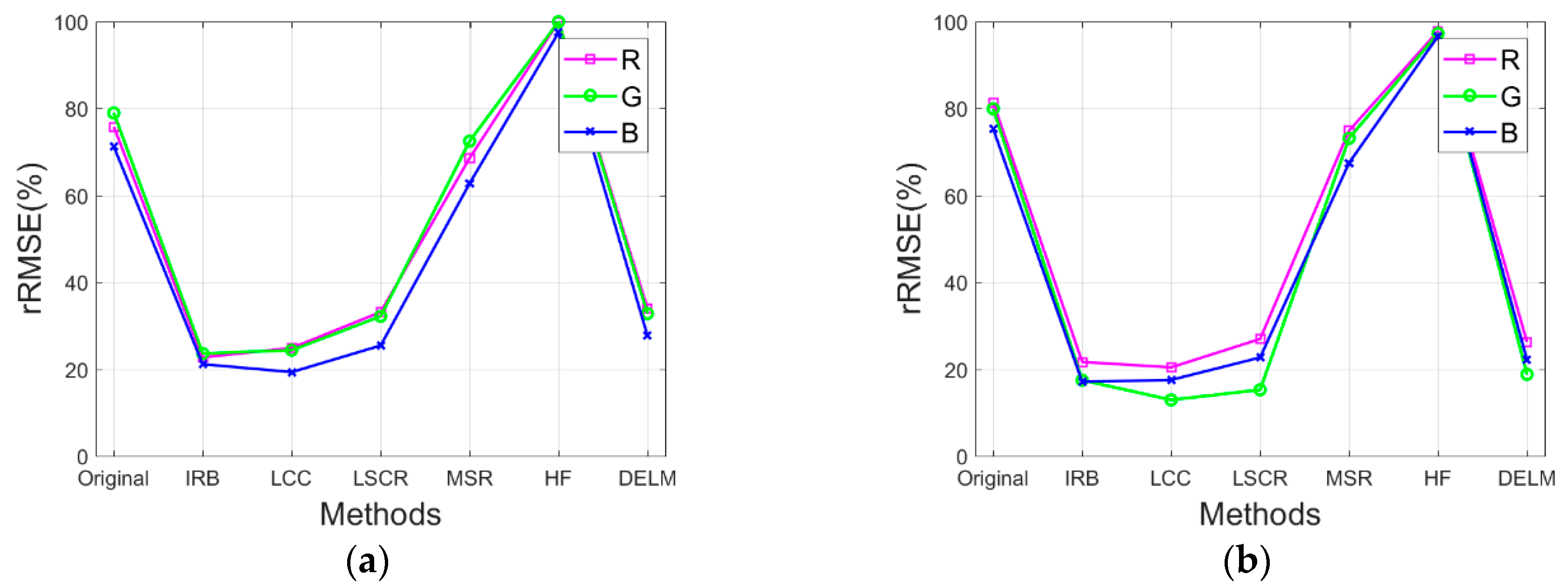

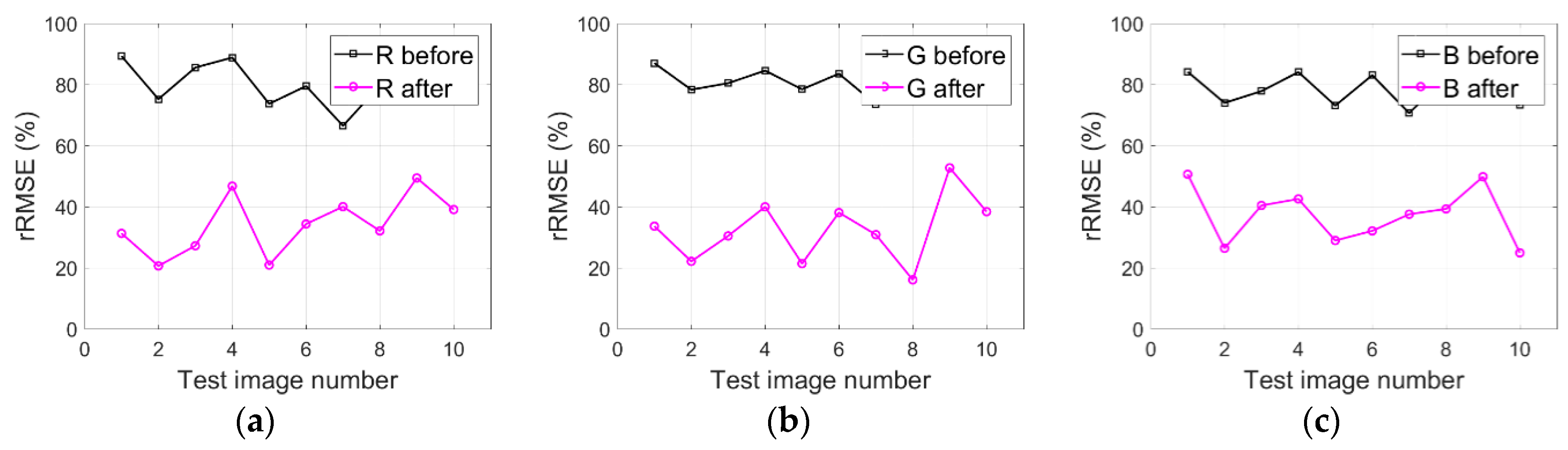

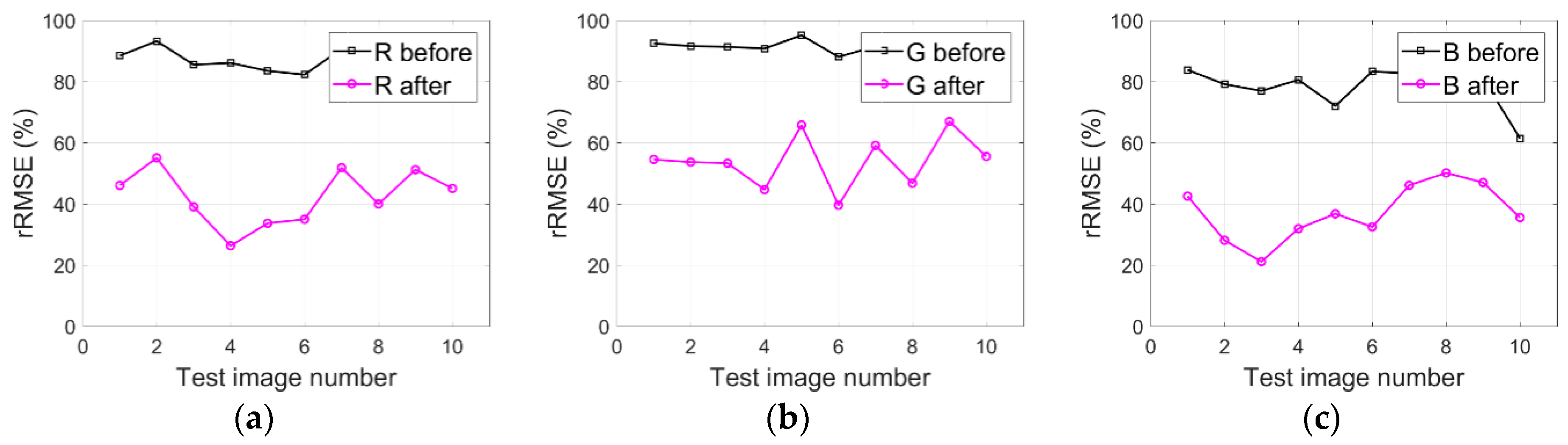

3.3. Quantitative Assessment

4. Discussion

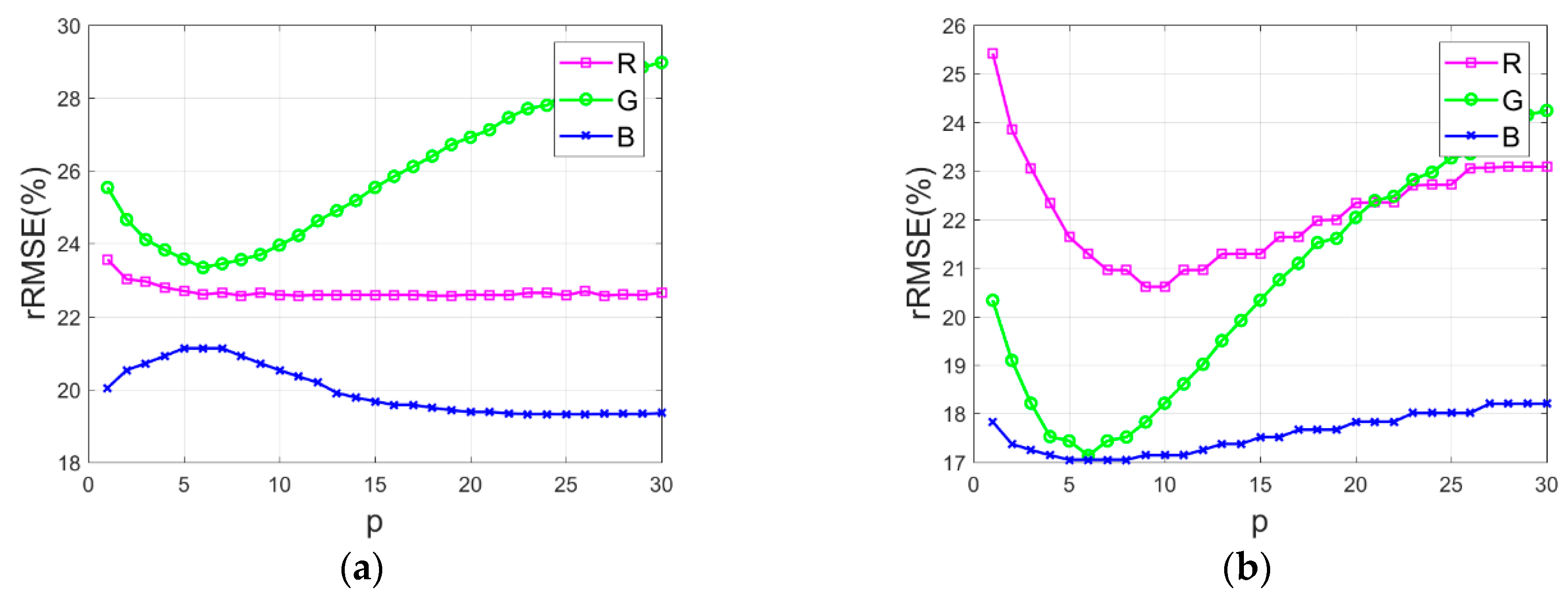

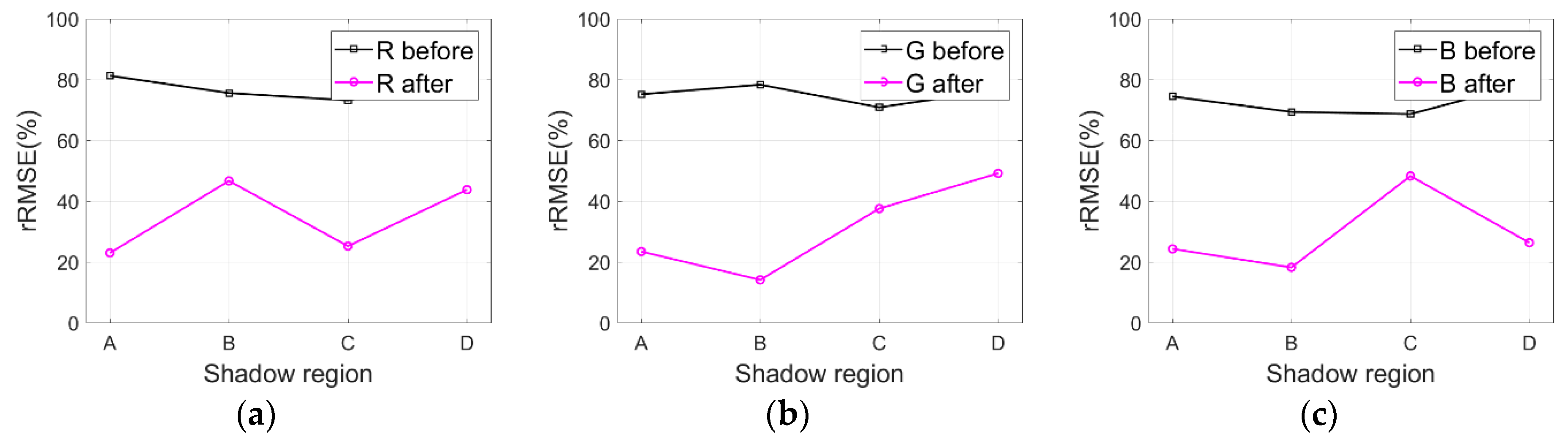

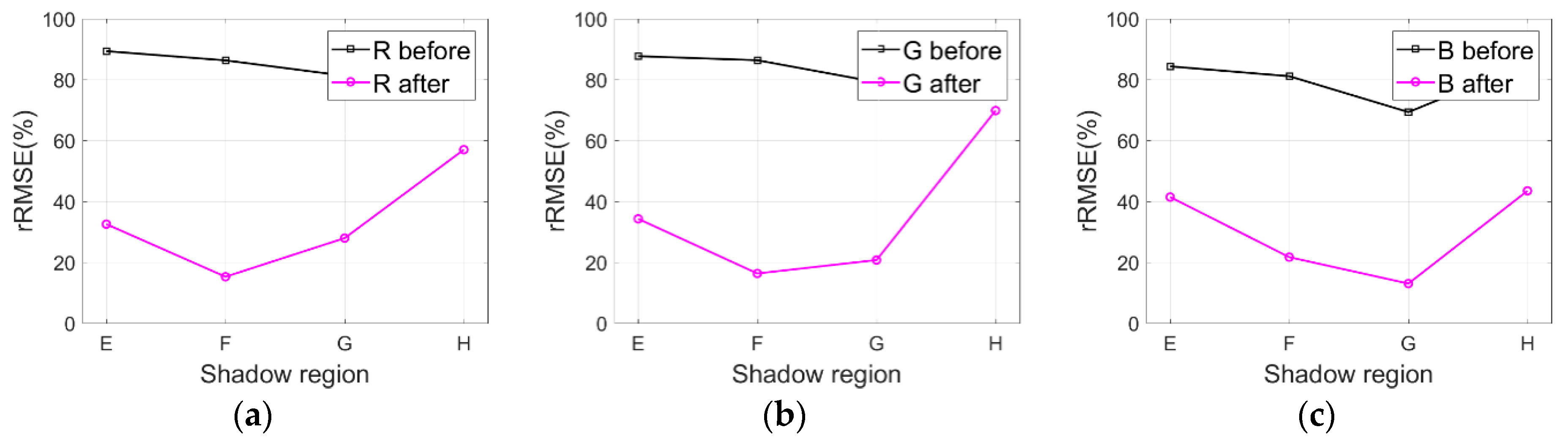

4.1. Influence Analysis of Path Radiance Estimation

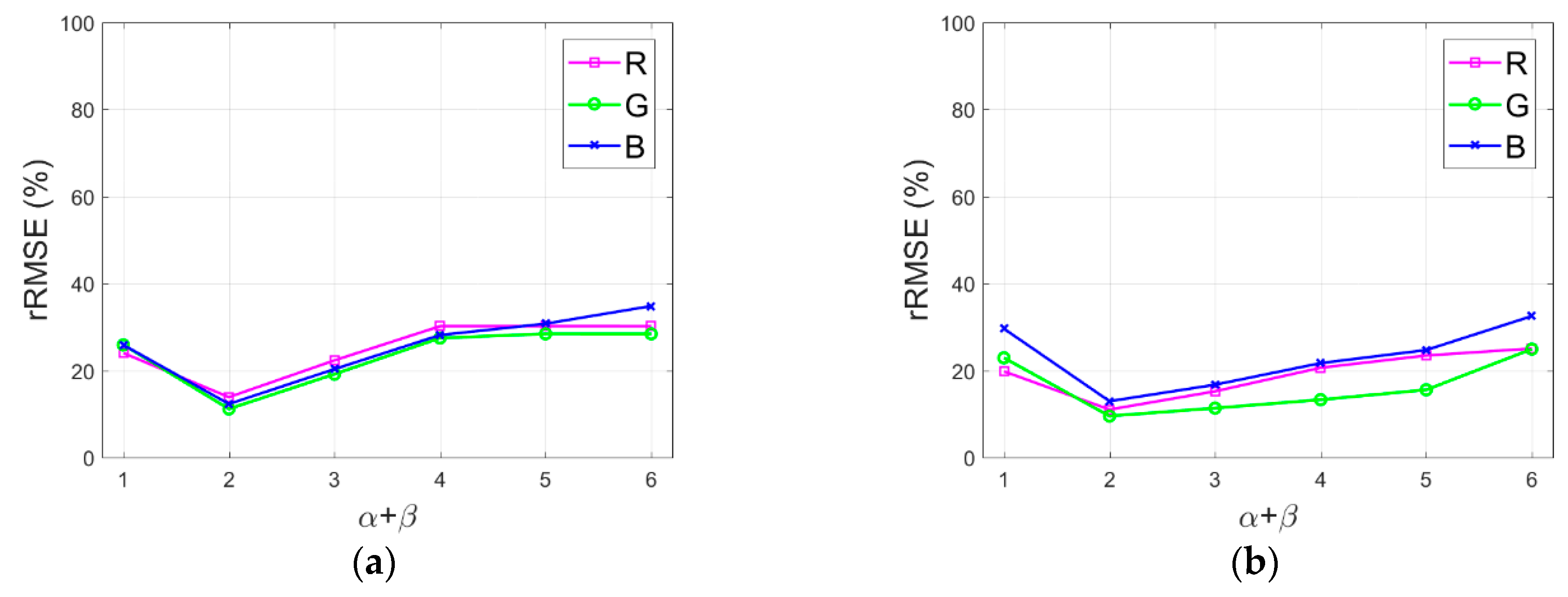

4.2. Influence Analysis of Irradiance Coefficient Computation

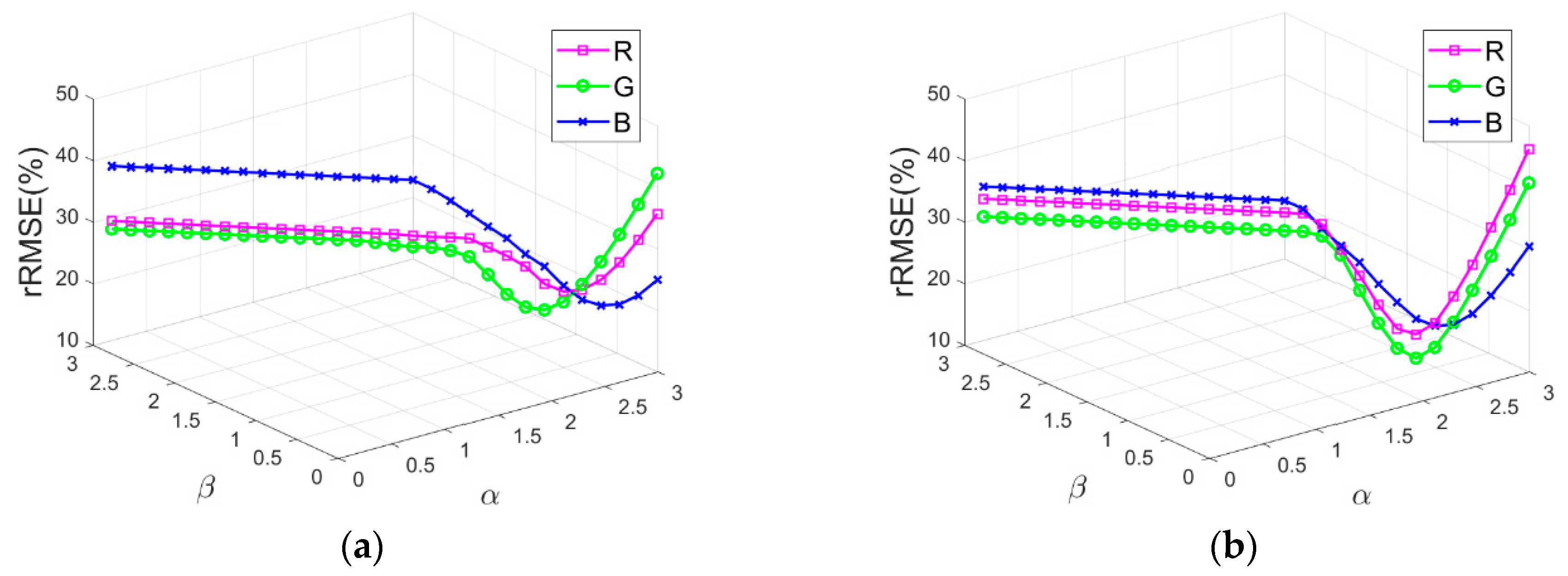

4.3. Sensitivity Analysis of Refining Parameters

4.4. Shadow Difference Analysis

4.5. IRB Method Generalization Analysis

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Band Number | Band Name | Wavelength Range (nm) |
|---|---|---|
| B1 | Coastal | 397–454 |
| B2 | Blue | 445–517 |
| B3 | Green | 507–586 |
| B4 | Yellow | 580–629 |
| B5 | Red | 626–696 |
| B6 | Red edge | 698–749 |
| B7 | Near-IR1 | 765–899 |
| B8 | Near-IR2 | 857–1039 |

| Atmospheric Conditions | Relative Scattering Model |
|---|---|
| Very clear | |
| Clear | |
| Moderate | |
| Hazy | |
| Very hazy | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Han, H.; Han, C.; Huang, L.; Lan, T.; Xue, X. Irradiance Restoration Based Shadow Compensation Approach for High Resolution Multispectral Satellite Remote Sensing Images. Sensors 2020, 20, 6053. https://doi.org/10.3390/s20216053
