1. Introduction

Structural health monitoring, as a crucial technique for ensuring long-term structural safety, plays an essential role in early warning, evaluation, and maintenance decisions during the structural life cycle. Accelerometers, one kind of sensor widely used in structural health monitoring practice, can directly measure the acceleration response of structures but are less effective in the low-frequency range [1]. In contrast, displacement sensors can obtain sensitive low-frequency dynamic measurements but see relatively few applications because they are inconvenient to install and operate. For instance, a linear variable differential transformer (LVDT) requires a fixed reference point to measure displacement, and a laser Doppler vibrometer needs to be installed close to the object.

In recent years, with the development of image data acquisition and computer vision techniques, vision-based displacement measurement has attracted extensive research attention owing to its advantages of being non-contact, long-range, and low-cost [2,3]. Depending on whether target markers are required, computer vision technologies for displacement measurement can be classified into two categories. The first category uses customized target markers [4,5,6,7,8,9,10,11], which increase the differentiation between the tracking target and the background and thereby improve measurement accuracy. In actual measurements, however, installing markers is labor-intensive, especially when multi-point displacements are desired.

In contrast, the non-target measurement category uses the texture features of the structure surface as pseudo markers. Various methods have been developed to track the feature points and identify the corresponding displacements. For instance, with feature-point matching algorithms, Khuc [12] and Dong [13] adopted professional high-speed cameras to achieve displacement measurement without attaching any artificial target markers. Such algorithms mostly need a clear texture on the object to reduce false recognition of features. Moreover, high-speed cameras also require high-brightness lighting to achieve sharp contrast of the imaged objects. Yoon [14] applied an optical flow algorithm with a consumer-grade camera to extract the displacement response and dynamic characteristics. Feng [15] proposed a sub-pixel template matching technique based on image correlation, improving the accuracy of displacement extraction. It is worth noting that all the above studies were conducted under ideal measurement conditions with a stable lighting environment; the influence of unfavorable environmental factors such as illumination change, fog interference, camera motion, and complex background on the measurement results was not addressed. In this regard, Dong [16] applied a spatio-temporal context (STC) algorithm to measure displacement with a high-speed camera under illumination change and fog. That research realized single-point dynamic displacement measurement but not the multi-point synchronous monitoring that is more favorable in structural health monitoring practice. Furthermore, the applied STC algorithm treats the whole context region equally, which weakens the effectiveness of the context information. As for camera motion, which is receiving increasing attention in vision measurement practice, a general solution is to compensate for camera motion-induced errors by subtracting the movement of reference points in the background [17,18]. The methods used to identify the reference-point movement are critical to the measurement accuracy, especially when camera jitter is taken into account, since this particular kind of camera motion changes abruptly and therefore poses greater challenges.

The present study proposes an improved computer-vision-based displacement measurement method that uses smartphone-recorded video to achieve simultaneous monitoring of multi-point dynamic displacements under changing environmental conditions. Current smartphones with high-resolution cameras offer many advantages, such as low cost, portability, and ease of use, making them competitive devices for optical sensing under various conditions [19]. In the proposed method, after calibration of the distorted image sequence shot with the smartphone, multiple areas centered at the target points are predefined, with overlapping permitted. A motion-enhanced spatio-temporal context (MSTC) algorithm is then developed for robust tracking of the moving targets. In this algorithm, a motion-enhanced function is constructed with an optical flow (OF) algorithm to strengthen the beneficial information and weaken the interfering information in the whole context area. Finally, based on the pixel-level tracking results from the MSTC algorithm, the OF algorithm is re-applied to estimate the sub-pixel-level motion, ultimately yielding high-precision displacement results. To validate the feasibility of the proposed method for monitoring vibration displacements over a wide frequency range, a sine-sweep vibration experiment on a cantilever sphere model is carried out. In the test, an iPhone 7 was used to record the vibration of the sine-sweep-excited sphere, and illumination change, fog interference, and camera jitter were artificially simulated to represent an interference environment. Using the displacement sensor data as a reference, the results of the proposed method are compared with those of current vision methods.

2. Integrated Process for Multi-Point Displacement Measurement

Implementation of the proposed measurement method focuses on three aspects: smartphone application, multi-point monitoring, and high-precision displacement extraction.

The first aspect requires correcting the wide-angle distortion of the smartphone’s consumer-grade camera. Zhang’s method [20] is employed for the camera calibration, and we further propose an iterative calibration procedure based on a continually expanding image library, repeated until the calibrated parameters converge.

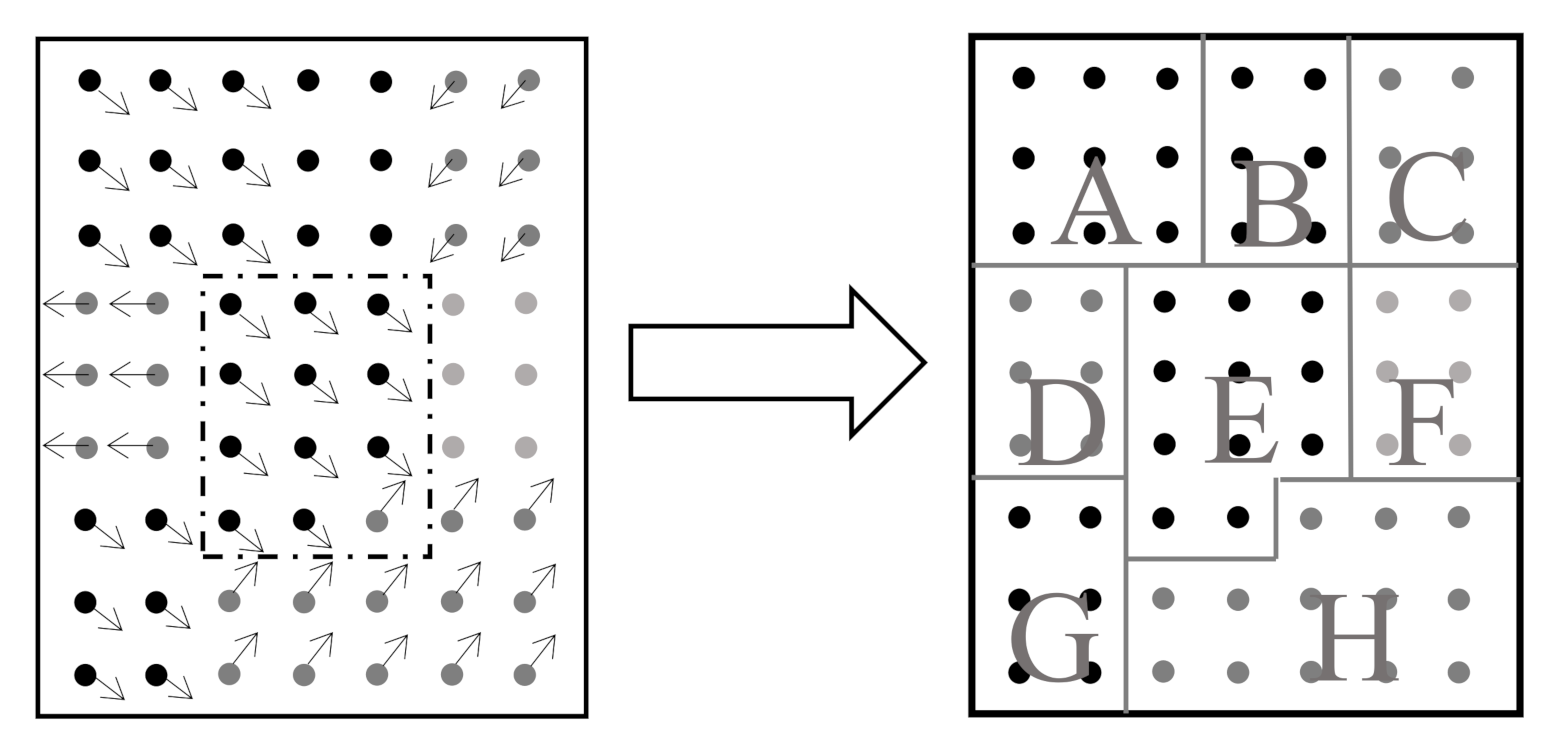

The second aspect concerns the selection and definition of multiple targets. By introducing multi-region compatibility and a parallel design, we extend the STC algorithm from conventional single-target tracking to multi-target tracking.
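The multi-region idea can be illustrated with a minimal Python sketch. It assumes square context areas of a fixed half-size centered at each target point and clipped to the frame; the helper names `context_boxes` and `boxes_overlap` are hypothetical, and the region shape and size actually used by the MSTC algorithm may differ. Because overlap is permitted, each box can be handed to its own tracker independently.

```python
from typing import Dict, Tuple

Box = Tuple[int, int, int, int]  # (u_min, v_min, u_max, v_max), max exclusive


def context_boxes(targets: Dict[str, Tuple[int, int]], half_size: int,
                  width: int, height: int) -> Dict[str, Box]:
    """Build one square context box per target point, clipped to the image.

    Boxes of neighboring targets are allowed to overlap, so every target can
    be tracked independently of the others (hypothetical helper).
    """
    boxes = {}
    for name, (u, v) in targets.items():
        boxes[name] = (max(0, u - half_size), max(0, v - half_size),
                       min(width, u + half_size + 1), min(height, v + half_size + 1))
    return boxes


def boxes_overlap(a: Box, b: Box) -> bool:
    """True if two half-open boxes share at least one pixel."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


# Hypothetical target points in a 1920 x 1080 frame
boxes = context_boxes({"T1": (100, 200), "T2": (130, 200), "T3": (5, 5)},
                      half_size=20, width=1920, height=1080)
print(boxes, boxes_overlap(boxes["T1"], boxes["T2"]))
```

Clipping keeps a box valid when a target lies near the frame border, as `T3` does here.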

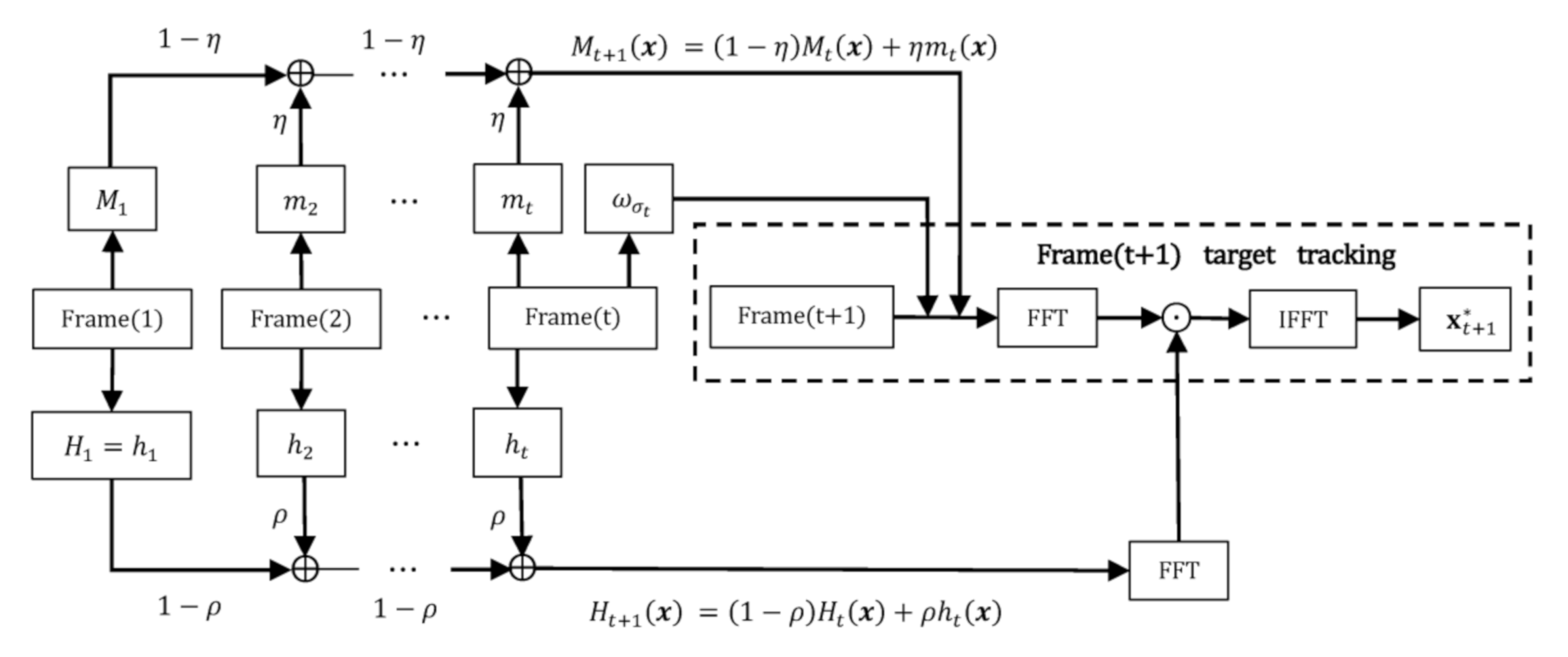

The last aspect, which is the most crucial part of the integrated process, deals with the algorithms for multi-target tracking and high-precision displacement extraction under environmental interference. We develop a new tracking algorithm, the MSTC algorithm, to achieve robust tracking of multiple objects, and a sub-pixel displacement estimation method [21] is then employed to refine the measurement.

Figure 1 shows the flowchart of the monitoring process, in which the three main parts are integrated in sequence; each part is described in detail in the following sections.

3. Smartphone-Shot Image Calibration

In most smartphones, the consumer-grade cameras are equipped with a wide-angle lens to increase the shooting range, which, however, introduces large radial and tangential distortion into the image. Therefore, to reduce the position error caused by image distortion, it is necessary to calibrate the camera and identify its internal/external parameters and distortion coefficients.

According to the pinhole model of camera imaging [20], the relationship between a real point A(X, Y, Z) and the corresponding point A′(u, v) in the camera image can be described as

$$ s\begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = sK\begin{bmatrix} x \\ y \\ 1 \end{bmatrix} = K\begin{bmatrix} R & t \end{bmatrix}\begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix} \tag{1} $$

where (X, Y, Z), (x, y), and (u, v) represent the coordinates of point A in the real-world coordinate system, the (normalized) camera coordinate system, and the pixel coordinate system, respectively; s is a scaling factor; the external parameter matrices R and t represent the rotation matrix and the translation vector, respectively, which are related to the physical position of the camera; and the internal parameter matrix K is determined by the camera structure and material, represented as

$$ K = \begin{bmatrix} \alpha & \gamma & u_0 \\ 0 & \beta & v_0 \\ 0 & 0 & 1 \end{bmatrix} \tag{2} $$

where $\alpha$ and $\beta$ are the scale factors of the x and y axes in the pixel coordinate system; $\gamma$ is the inclination (skew) parameter of the axes; and $u_0$ and $v_0$ are the coordinates of the origin (principal point).
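Equations (1) and (2) can be read as a two-step mapping: a rigid transform into the camera frame, followed by perspective division and the intrinsic matrix. The following Python sketch illustrates that mapping without distortion; the intrinsic values are hypothetical placeholders, not the calibrated iPhone 7 parameters of Table 1.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def project_pinhole(point_w: Sequence[float], R: Matrix, t: Sequence[float],
                    K: Matrix) -> Tuple[float, float]:
    """Map a world point (X, Y, Z) to pixel coordinates (u, v) with Eqs. (1)-(2),
    ignoring lens distortion."""
    # Camera-frame coordinates: [Xc, Yc, Zc] = R [X, Y, Z] + t
    pc = [sum(R[i][j] * point_w[j] for j in range(3)) + t[i] for i in range(3)]
    s = pc[2]  # the scaling factor s is the depth Zc of the point
    if s <= 0:
        raise ValueError("point lies behind the camera")
    x, y = pc[0] / s, pc[1] / s  # normalized camera coordinates (x, y)
    # [u, v, 1] = K [x, y, 1] with K = [[alpha, gamma, u0], [0, beta, v0], [0, 0, 1]]
    u = K[0][0] * x + K[0][1] * y + K[0][2]
    v = K[1][1] * y + K[1][2]
    return u, v


# Hypothetical intrinsics (not the identified iPhone 7 values)
K = [[1000.0, 0.0, 960.0], [0.0, 1000.0, 540.0], [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
u, v = project_pinhole((0.1, -0.05, 0.0), R, (0.0, 0.0, 2.0), K)
print(u, v)
```

With the camera 2 m in front of the point, a 0.1 m offset maps to 50 pixels at a scale factor of 1000, i.e., u = 1010.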

Equations (1) and (2) represent an ideal imaging relationship. However, the camera lens produces radial and tangential distortions, causing the imaging point to shift. A nonlinear distortion model [22] can be used to describe the distortions as

$$ \tilde{x} = \hat{x}\left(1 + k_1 r^2 + k_2 r^4\right) + 2p_1\hat{x}\hat{y} + p_2\left(r^2 + 2\hat{x}^2\right) \tag{3} $$

$$ \tilde{y} = \hat{y}\left(1 + k_1 r^2 + k_2 r^4\right) + p_1\left(r^2 + 2\hat{y}^2\right) + 2p_2\hat{x}\hat{y} \tag{4} $$

in which $(\hat{x}, \hat{y})$ and $(\tilde{x}, \tilde{y})$ are the ideal exact coordinates and the actual distorted coordinates of the target point in the camera coordinate system, respectively; $k_1$ and $k_2$ are radial distortion coefficients; $p_1$ and $p_2$ are tangential distortion coefficients; and r is a radius defined by

$$ r^2 = \hat{x}^2 + \hat{y}^2 \tag{5} $$

Identification of the internal/external parameters and distortion coefficients, namely, camera calibration, is based on the accurate coordinates (X, Y, Z) of several predefined points and the distorted pixel coordinates $(\tilde{u}, \tilde{v})$ of the corresponding imaging points. Specific values of all the parameters and coefficients can be solved by minimizing the sum of squared errors between the distorted pixel coordinates $(\tilde{u}, \tilde{v})$ and the pixel coordinates projected from (X, Y, Z) with Equations (1)–(5).
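The objective that calibration minimizes can be sketched in Python for a single view. The distortion follows the radial/tangential model of Equations (3)–(5); the corner layout, intrinsics, and coefficients below are hypothetical. In practice, routines such as OpenCV’s `cv2.calibrateCamera` perform the nonlinear minimization over all chessboard views at once; this sketch only evaluates the objective.

```python
from typing import Tuple


def distort(xh: float, yh: float, k1: float, k2: float,
            p1: float, p2: float) -> Tuple[float, float]:
    """Eqs. (3)-(5): ideal normalized coordinates -> distorted coordinates."""
    r2 = xh * xh + yh * yh
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    xd = xh * radial + 2.0 * p1 * xh * yh + p2 * (r2 + 2.0 * xh * xh)
    yd = yh * radial + p1 * (r2 + 2.0 * yh * yh) + 2.0 * p2 * xh * yh
    return xd, yd


def project_distorted(point_w, R, t, K, dist):
    """Project a world point to distorted pixel coordinates with Eqs. (1)-(5)."""
    X, Y, Z = point_w
    xc, yc, zc = (R[i][0] * X + R[i][1] * Y + R[i][2] * Z + t[i] for i in range(3))
    xd, yd = distort(xc / zc, yc / zc, *dist)
    return K[0][0] * xd + K[0][1] * yd + K[0][2], K[1][1] * yd + K[1][2]


def reprojection_sse(world_pts, observed_px, R, t, K, dist) -> float:
    """Sum of squared pixel errors that the calibration minimizes."""
    sse = 0.0
    for pw, (u_obs, v_obs) in zip(world_pts, observed_px):
        u, v = project_distorted(pw, R, t, K, dist)
        sse += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return sse


# Hypothetical single chessboard view (4 corners on the Z = 0 plane)
K = [[1000.0, 0.0, 960.0], [0.0, 1000.0, 540.0], [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = (0.0, 0.0, 1.0)
dist = (-0.1, 0.01, 0.001, -0.001)  # (k1, k2, p1, p2)
corners = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.0, 0.1, 0.0), (0.1, 0.1, 0.0)]
observed = [project_distorted(c, R, t, K, dist) for c in corners]
print(reprojection_sse(corners, observed, R, t, K, dist))
```

Observations generated with the true parameters give zero error, and any parameter mismatch raises the sum, which is what the optimizer exploits.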

In the present study, we use the method proposed in Reference [20] to implement the camera calibration. First, an image database is established by photographing a predefined chessboard with the smartphone, an iPhone 7, from different locations and postures. Then a series of calibration analyses using increasing numbers of images is carried out until a converged result is reached; the final values of all the parameters and coefficients of the iPhone 7 rear camera are listed in Table 1 and Table 2.

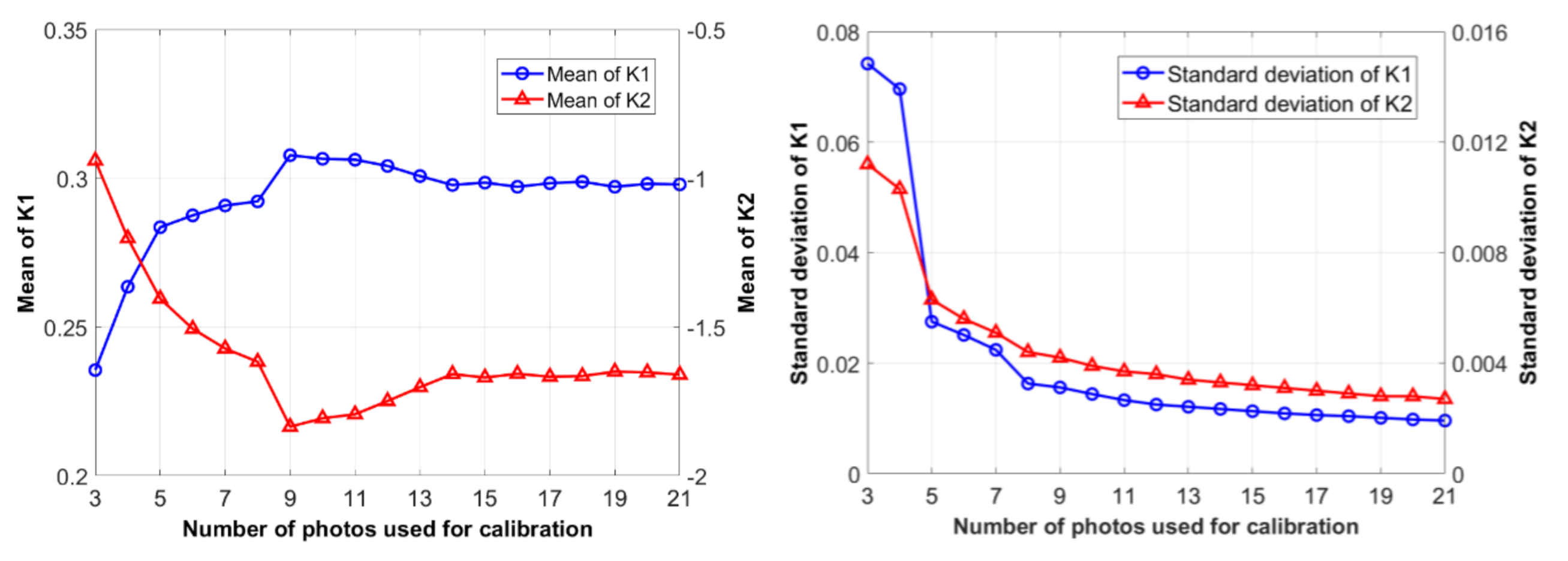

Figure 2 shows the variation of two of the identified coefficients versus the number of images used. It can be observed that about 20 images are sufficient to meet the convergence requirement of the camera calibration.
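The iterative procedure on an expanding image library amounts to a convergence loop. In the Python sketch below, `calibrate` is a hypothetical callable standing in for a Zhang-method routine, and the starting size, step, and 1% relative tolerance are assumptions chosen for illustration, not values from the study.

```python
from typing import Callable, Dict, Sequence, Tuple


def calibrate_until_converged(images: Sequence,
                              calibrate: Callable[[Sequence], Dict[str, float]],
                              start: int = 5, step: int = 5,
                              tol: float = 0.01) -> Tuple[Dict[str, float], int]:
    """Re-run calibration on a growing image subset until every identified
    parameter changes by less than `tol` (relative) between iterations.

    Returns the converged parameters and the number of images used.
    """
    previous = None
    n = start
    while n <= len(images):
        current = calibrate(images[:n])
        if previous is not None and all(
                abs(current[k] - previous[k]) <= tol * max(abs(previous[k]), 1e-12)
                for k in current):
            return current, n
        previous = current
        n += step
    raise RuntimeError("no convergence: expand the image library")


# Hypothetical stand-in whose scale factor settles as more images are added
def fake_calibrate(subset):
    return {"alpha": 1000.0 + 500.0 / len(subset)}


params, n_used = calibrate_until_converged(list(range(40)), fake_calibrate)
print(n_used, params)
```

The stopping rule compares consecutive estimates rather than a known truth, which mirrors reading convergence off a plot such as Figure 2.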

After the camera calibration, we invert the distortion model of Equations (3)–(5) for every image of interest to correct the distorted pixel coordinates $(\tilde{u}, \tilde{v})$ to undistorted ones $(\hat{u}, \hat{v})$, which are finally used in Equations (1) and (2) to obtain the real-world coordinates.

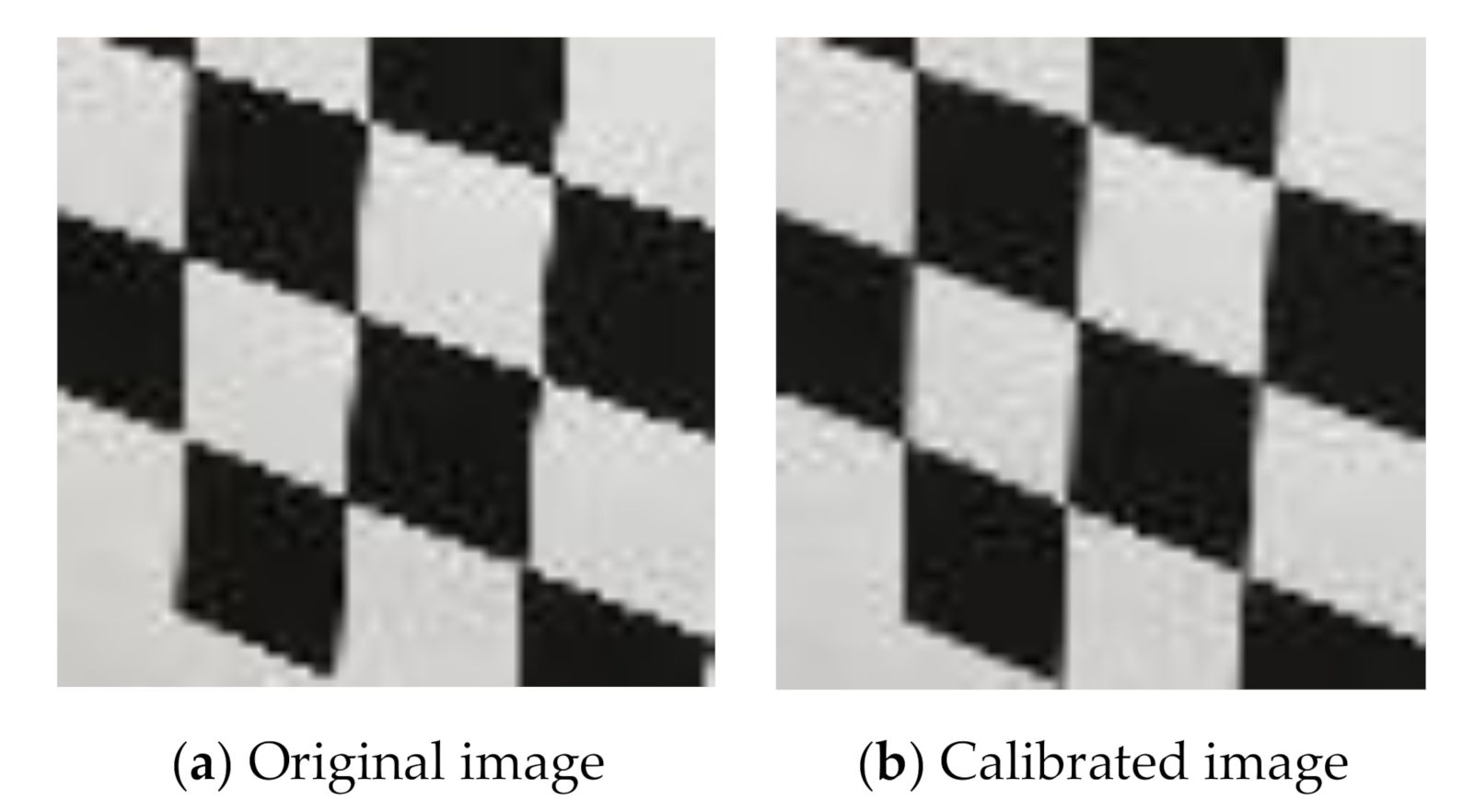

Figure 3 compares a partial image of the chessboard before and after calibration. In the original image, owing to radial and tangential distortions, the vertical edges of the black and white squares are projected as curves and fail to form straight lines, especially for the outermost black square in the bottom left corner. At the same time, apparent zigzags appear on the horizontal edges. In the calibrated image, the distorted vertical edges have been restored to nearly straight lines, and the zigzagged horizontal edges have been partly smoothed. Although the recovery is not complete, the calibration still reduces the error of the final displacement.

6. Sub-Pixel Displacement Estimation with OF Algorithm

The target position obtained above has integer-pixel resolution, resulting in a pixel-level displacement. To obtain the movement more accurately, besides choosing a higher-resolution camera, one can also use the OF algorithm to estimate the sub-pixel displacement [21].

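In general, the following relationship holds between the target intensities in two adjacent frame images, e.g., $I_k$ and $I_{k+1}$,

$$ I_{k+1}(x + d_x,\, y + d_y) = I_k(x, y) $$

where the target displacement $(d_x, d_y)$ can be expressed as the sum of the pixel-level part $(n_x, n_y)$ and the sub-pixel part $(\delta_x, \delta_y)$,

$$ d_x = n_x + \delta_x, \qquad d_y = n_y + \delta_y $$

in which $|\delta_x| \ll 1$ and $|\delta_y| \ll 1$.

After the pixel-level displacement $(n_x, n_y)$ has been identified by the MSTC algorithm, we can construct a new template image relating to the pixel-level displacement and then apply the OF algorithm to expand $I_{k+1}$ in terms of $\delta_x$ and $\delta_y$,

$$ I_{k+1}(x + d_x,\, y + d_y) = \tilde{I}(x + \delta_x,\, y + \delta_y) \approx \tilde{I}(x, y) + \frac{\partial \tilde{I}}{\partial x}\delta_x + \frac{\partial \tilde{I}}{\partial y}\delta_y $$

where $\tilde{I}(x, y) = I_{k+1}(x + n_x,\, y + n_y)$ represents the intensity of the new template image.

Then, by applying the same least-squares OF method as in Section 5.2.2 over the pixels of the template, we can get

$$ \begin{bmatrix} \delta_x \\ \delta_y \end{bmatrix} = \begin{bmatrix} \sum \tilde{I}_x^2 & \sum \tilde{I}_x \tilde{I}_y \\ \sum \tilde{I}_x \tilde{I}_y & \sum \tilde{I}_y^2 \end{bmatrix}^{-1} \begin{bmatrix} \sum \tilde{I}_x \left(I_k - \tilde{I}\right) \\ \sum \tilde{I}_y \left(I_k - \tilde{I}\right) \end{bmatrix} $$

where $\tilde{I}_x = \partial \tilde{I}/\partial x$ and $\tilde{I}_y = \partial \tilde{I}/\partial y$, and all the partial derivatives can be estimated as in Section 5.2.2.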
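A minimal Python sketch of the least-squares sub-pixel step is given below. It assumes central-difference gradients of the new template image and a single linearized solve (no iteration or pyramid); the function name `subpixel_shift` and the synthetic Gaussian test pattern are illustrative choices, not the implementation of Reference [21].

```python
import math
from typing import List, Tuple

Image = List[List[float]]  # intensities indexed as img[y][x]


def subpixel_shift(ref: Image, tmpl: Image) -> Tuple[float, float]:
    """One least-squares OF step for the residual shift (dx, dy) such that
    ref(x, y) ~= tmpl(x + dx, y + dy).

    ref  : target template in frame k
    tmpl : frame k+1 resampled at the pixel-level position (new template)
    """
    rows, cols = len(ref), len(ref[0])
    sxx = sxy = syy = sxb = syb = 0.0
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            # Central-difference spatial gradients of the new template image
            gx = (tmpl[y][x + 1] - tmpl[y][x - 1]) / 2.0
            gy = (tmpl[y + 1][x] - tmpl[y - 1][x]) / 2.0
            b = ref[y][x] - tmpl[y][x]  # intensity residual
            sxx += gx * gx
            sxy += gx * gy
            syy += gy * gy
            sxb += gx * b
            syb += gy * b
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("template lacks texture in at least one direction")
    # Solve the 2x2 normal equations by Cramer's rule
    dx = (syy * sxb - sxy * syb) / det
    dy = (sxx * syb - sxy * sxb) / det
    return dx, dy


def blob(x, y, cx=15.0, cy=15.0, sigma=3.0):
    """Synthetic Gaussian intensity pattern used only for the demonstration."""
    return math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2.0 * sigma ** 2))


true_dx, true_dy = 0.3, -0.2
ref = [[blob(x, y) for x in range(31)] for y in range(31)]
tmpl = [[blob(x - true_dx, y - true_dy) for x in range(31)] for y in range(31)]
dx, dy = subpixel_shift(ref, tmpl)
print(f"estimated shift: ({dx:.3f}, {dy:.3f})")
```

A single linearized step already lands within a few hundredths of a pixel on this smooth pattern; repeating the step after resampling would refine it further.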

Related research [21] has shown that the accuracy of the sub-pixel displacement estimated by the OF algorithm can reach 0.0125 pixel. Therefore, combining the MSTC tracking algorithm, which identifies the pixel-level movement, with the OF algorithm, which estimates the sub-pixel displacement, can effectively improve the measurement precision of the target displacement. It is also worth noting that the same OF algorithm is adopted in the present study to estimate both the pixel-wise motion tendency and the sub-pixel displacement, which allows compact, unified programming.

7. Laboratory Verification

In the following, forced vibration experiments on a cantilever sphere model are carried out to verify whether the proposed method can accurately obtain the multi-point displacement time histories of the model under illumination change, fog interference, and camera jitter. Based on smartphone-shot videos of the model vibration, the measurement results of the proposed MSTC method are compared with the displacement sensor data, as well as with those of two current vision methods, i.e., the conventional STC method [16] and the characteristic OF method [14].

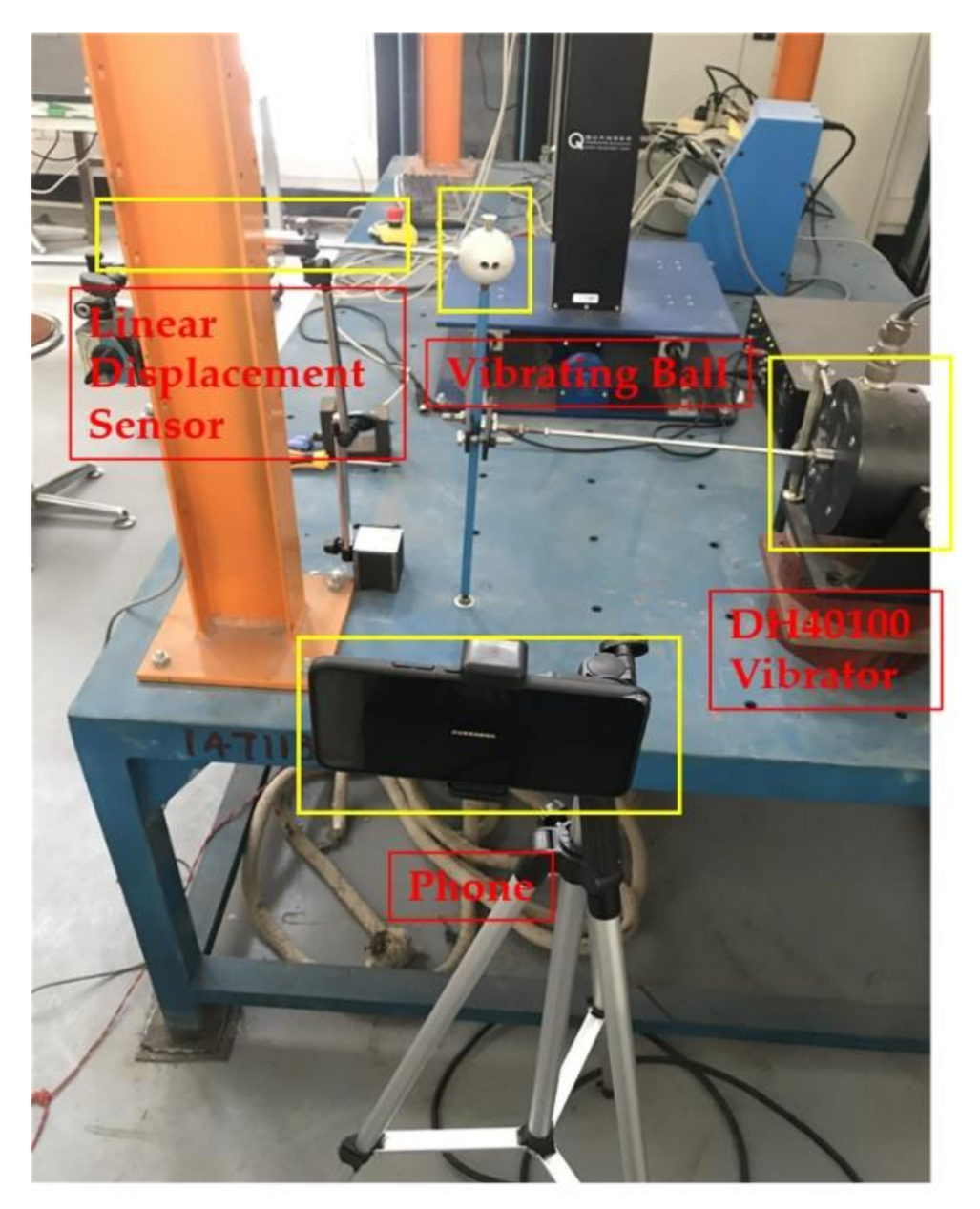

7.1. Experiment Setup

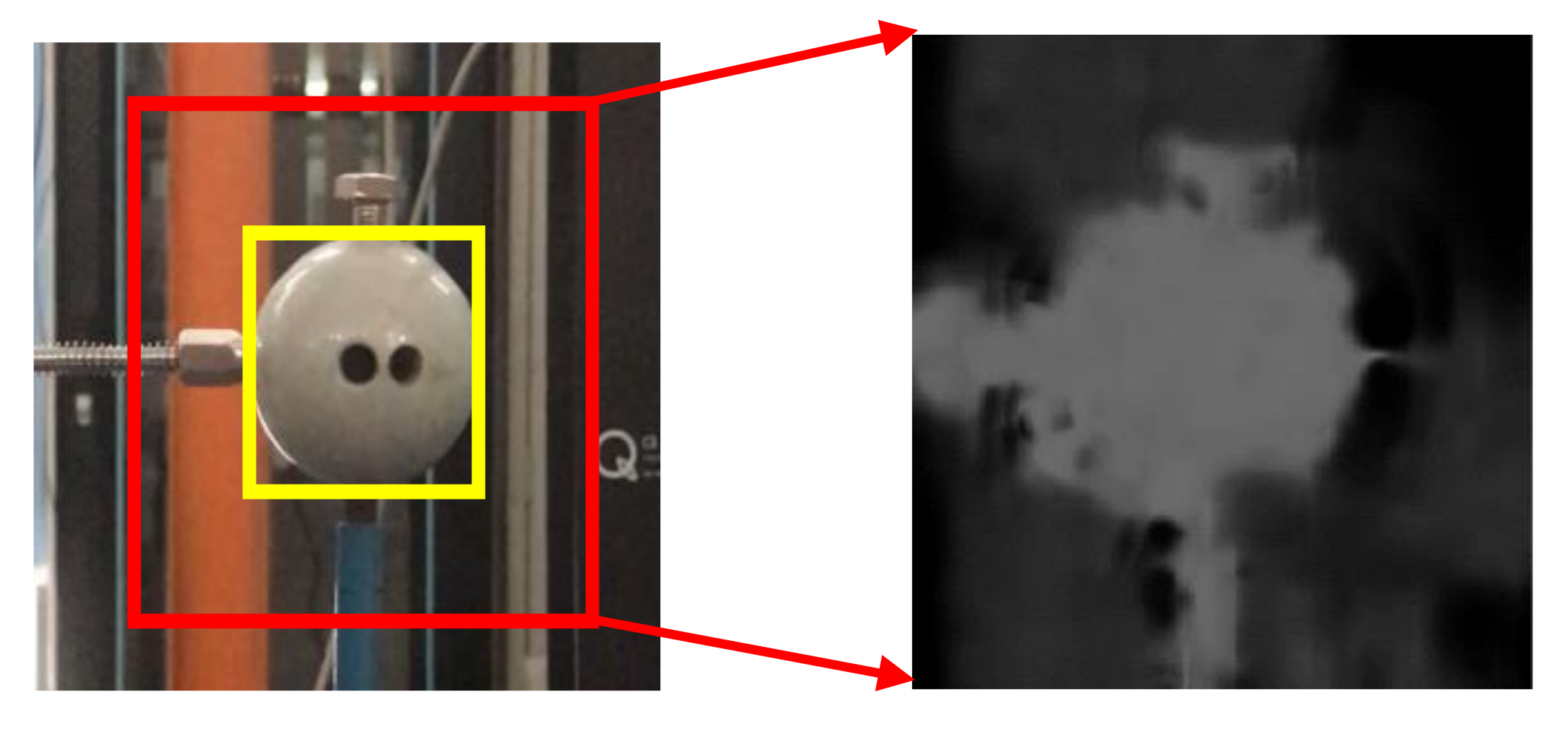

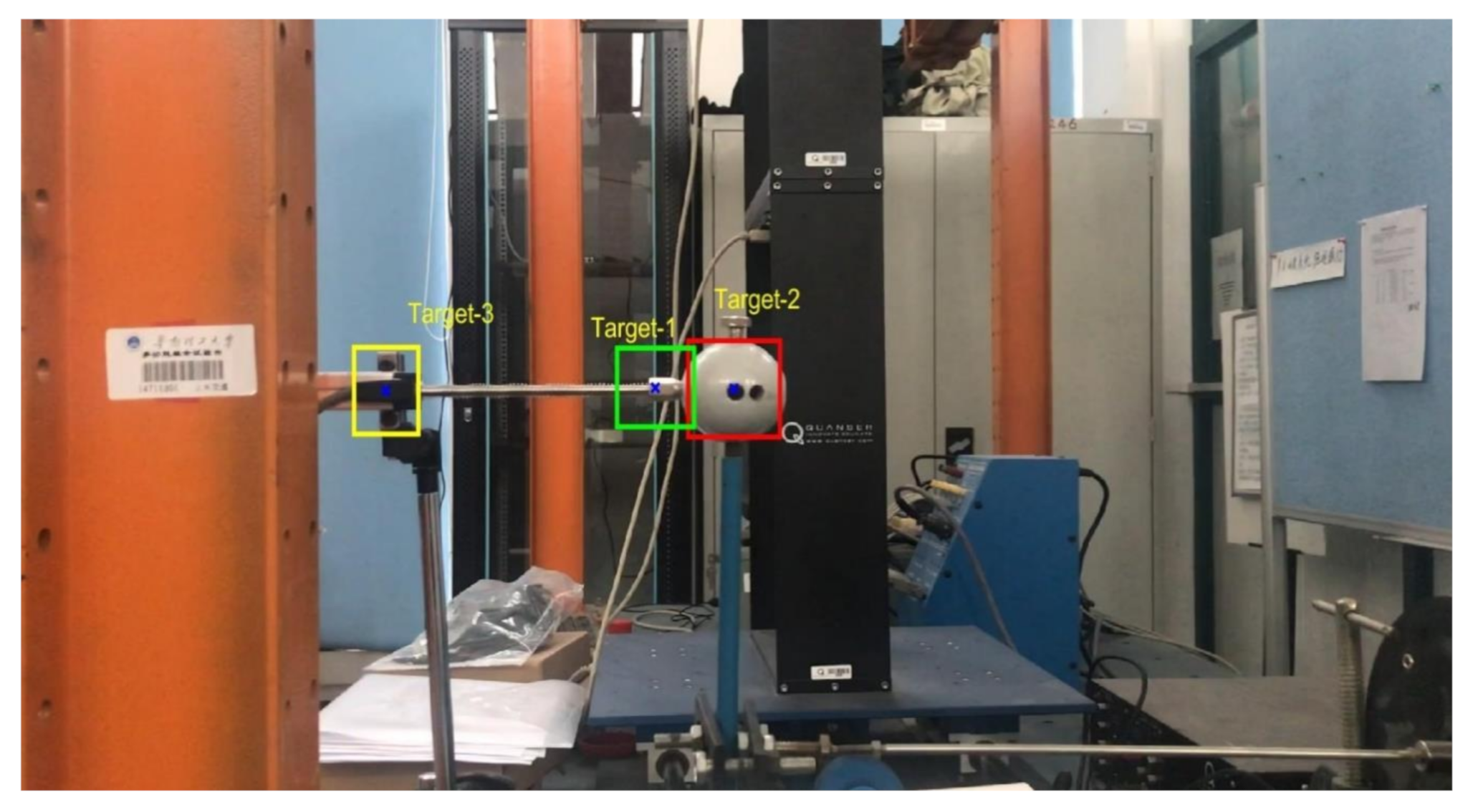

Figure 9 shows the experiment layout. A steel sphere is installed on top of a vertical steel rod, which is fixed at the bottom to a rigid support and connected at its middle to a vibration exciter; the exciter inputs external excitation to the structural model and forces the sphere to vibrate horizontally. A linear variable differential transformer (LVDT), with one end connected to the sphere and the other end fixed to the left steel column, measures the displacement of the sphere, and the data are automatically collected by a computer. An iPhone 7 smartphone mounted on a tripod in front of the model records the sphere vibration video. The resolution of the iPhone 7 rear camera is set to 1920 × 1080 pixels and the theoretical frame rate to 60 frames per second (FPS). The actual frame rate of the video, however, is 59.96 FPS. This subtle difference may cause significant errors in the displacement result, as illustrated in detail later. It should also be noted that we deliberately keep the complex background, rather than covering it as is usually done, to approximate a real application environment.
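The effect of the frame-rate mismatch can be estimated with simple arithmetic. Assuming frame times are computed as k/FPS, the timestamp error grows linearly with the frame index, and its phase effect grows with the vibration frequency. This Python sketch is a simplified timing model, not the error analysis of the study.

```python
def timestamp_drift(n_intervals: int, nominal_fps: float = 60.0,
                    actual_fps: float = 59.96) -> float:
    """Time error (s) accumulated after n frame intervals when frame times are
    computed from the nominal instead of the actual frame rate."""
    return n_intervals / actual_fps - n_intervals / nominal_fps


def phase_error_deg(drift_s: float, freq_hz: float) -> float:
    """Phase error (degrees) that a timing error causes at a given frequency."""
    return 360.0 * freq_hz * drift_s


drift = timestamp_drift(900)  # 900 frames, about 15 s of video
print(f"drift after 900 frames: {drift:.4f} s")  # 0.0100 s
print(f"phase error at 10 Hz: {phase_error_deg(drift, 10.0):.1f} deg")  # 36.0 deg
```

About 0.01 s of drift is negligible at 1 Hz but already amounts to a tenth of a period at 10 Hz, which is why the error grows toward the high-frequency end of a sweep.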

In the experiment, for verification over a wide frequency band, the exciter is set to produce repetitive sine-sweep excitation with the frequency varying linearly from 1 Hz to 10 Hz. We design four scenarios to examine the performance of the vision methods in different environments:

- (1)

Scenario I: no interference in the environment during the vibration.

- (2)

Scenario II: illumination variation in the environment during the vibration. We place a lamp near the sphere and switch it on and off several times during the vibration. As shown in Figure 10, the left image represents the case when the lamp is switched off, and the right image the case when it is switched on. A significant difference in image brightness can be observed between the two cases.

- (3)

Scenario III: fog interference in the environment during the vibration. We place a humidifier between the sphere and the smartphone and turn it on during the vibration to simulate a foggy environment. As shown in Figure 11, the left image represents the case when the humidifier is turned off, and the right image the case when it is turned on. A significant difference in image contrast can be observed between the two cases.

- (4)

Scenario IV: camera jitter during the vibration. We use a pencil to tap the tripod three times during the vibration, causing the camera to jitter after each tap. Figure 12 shows typical frame images taken from the video right after each tap. A comparison between Figure 12 and Figure 13 (see later for the non-vibrating state) shows that the images are severely blurred by the camera jitter, so that clear feature points can hardly be found.
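The repetitive linear sine sweep used for excitation can be written as a chirp whose phase is the time integral of the linearly increasing frequency. The Python sketch below assumes a sweep duration of 30 s and unit amplitude purely for illustration; neither value is reported in this section, and the function names are hypothetical.

```python
import math


def sweep_frequency(t: float, f0: float = 1.0, f1: float = 10.0,
                    sweep_time: float = 30.0) -> float:
    """Instantaneous frequency (Hz) of a repetitive linear sweep; each sweep
    restarts at f0 (sweep_time is an assumed value)."""
    tau = t % sweep_time
    return f0 + (f1 - f0) * tau / sweep_time


def sweep_signal(t: float, f0: float = 1.0, f1: float = 10.0,
                 sweep_time: float = 30.0, amplitude: float = 1.0) -> float:
    """Excitation value: sine of the phase obtained by integrating sweep_frequency."""
    tau = t % sweep_time
    rate = (f1 - f0) / sweep_time  # frequency increase per second
    phase = 2.0 * math.pi * (f0 * tau + 0.5 * rate * tau ** 2)
    return amplitude * math.sin(phase)


samples = [sweep_signal(k / 100.0) for k in range(1500)]  # 15 s sampled at 100 Hz
print(sweep_frequency(0.0), sweep_frequency(15.0))
```

At 59.96 FPS the highest sweep frequency of 10 Hz lies well below the Nyquist frequency of about 30 Hz, so the video sampling itself does not alias the motion.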

7.2. Measurement Results

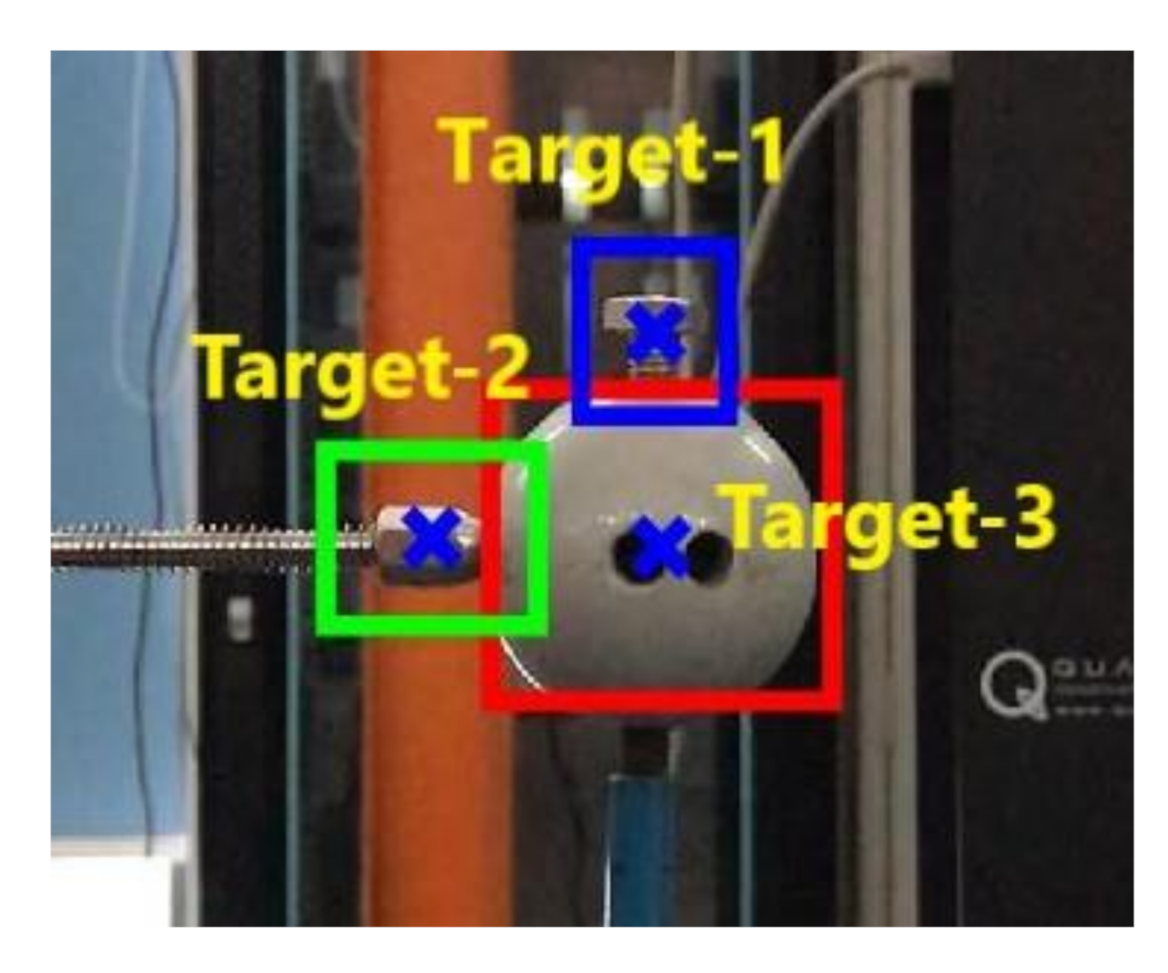

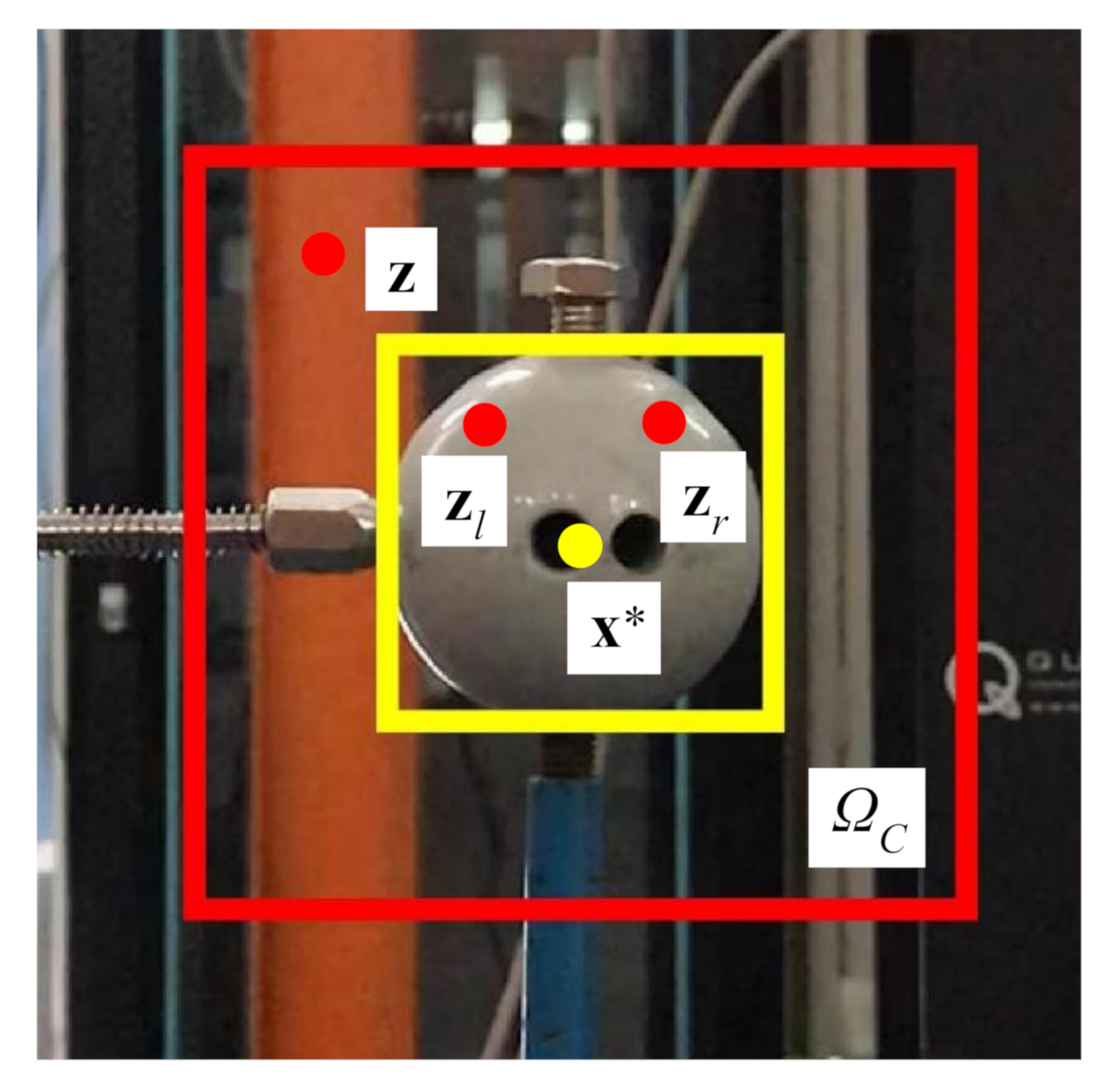

We define three target points located at a height similar to that of the LVDT measurement point. Figure 13 shows, in the first frame image, the three target points denoted with blue cross marks and the corresponding target areas indicated with green, red, and yellow boxes. The first target point sits on the tip end of the LVDT, the second is near the center of the sphere, and the third is on the fixed end of the LVDT. During the vibration, the third target point is indeed stationary. Therefore, in Scenarios I–III, we track only the first and second target points, whereas in Scenario IV we additionally track the third target point in order to remove the camera-jitter influence from the measurements of the first and second target points.

We first convert the original vibration video shot by the smartphone into a corrected one using all the calibrated parameters and coefficients obtained in Section 3, and then apply three vision-based measurement methods, i.e., MSTC, STC, and characteristic OF, to the corrected video to obtain the corresponding displacement time histories. The characteristic OF method [14] applied herein uses a tracking algorithm that combines Lucas–Kanade optical flow and Harris corners, in which the points to be tracked cannot be defined manually but are automatically determined from salient features in the first frame image. Therefore, the points tracked with this OF method cannot be exactly those defined above for the MSTC and STC methods, and we choose the feature point nearest to the second target point for the comparative study.

In the comparative study, we use two evaluation indicators [26], i.e., the normalized mean square error indicator (NMSE) and the correlation coefficient indicator (ρ), with the LVDT data as reference, to evaluate the overall performance of the vision-based measurement methods,

$$ \mathrm{NMSE} = 1 - \frac{\sum_{i}\left(d_i^{V} - d_i^{L}\right)^2}{\sum_{i}\left(d_i^{L} - \bar{d}^{L}\right)^2} $$

$$ \rho = \frac{\sum_{i}\left(d_i^{V} - \bar{d}^{V}\right)\left(d_i^{L} - \bar{d}^{L}\right)}{\sqrt{\sum_{i}\left(d_i^{V} - \bar{d}^{V}\right)^2 \sum_{i}\left(d_i^{L} - \bar{d}^{L}\right)^2}} $$

where $d_i^{V}$ and $d_i^{L}$ are the i-th time-instant displacements obtained with the vision method and the LVDT, respectively, and $\bar{d}^{V}$ and $\bar{d}^{L}$ represent the corresponding mean values of the displacement time histories. For both indicators, a value closer to 1 indicates better agreement between the vision-measured result and the LVDT data. Besides these two overall indicators, local indices such as the deviations of the maximum and minimum values are also investigated in the present study for a comprehensive understanding.
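Both indicators are straightforward to compute. The Python sketch below assumes the fit-type NMSE written above (the same form as the NMSE cost in MATLAB’s `goodnessOfFit`), which equals 1 for a perfect match and becomes negative for poor agreement; the short test signals are synthetic.

```python
import math
from typing import Sequence


def nmse_indicator(vision: Sequence[float], sensor: Sequence[float]) -> float:
    """Fit-type NMSE: 1 for a perfect match, negative for poor agreement."""
    mean_s = sum(sensor) / len(sensor)
    err = sum((v - s) ** 2 for v, s in zip(vision, sensor))
    spread = sum((s - mean_s) ** 2 for s in sensor)
    return 1.0 - err / spread


def corr_indicator(vision: Sequence[float], sensor: Sequence[float]) -> float:
    """Pearson correlation coefficient rho between the two records."""
    mv = sum(vision) / len(vision)
    ms = sum(sensor) / len(sensor)
    cov = sum((v - mv) * (s - ms) for v, s in zip(vision, sensor))
    var_v = sum((v - mv) ** 2 for v in vision)
    var_s = sum((s - ms) ** 2 for s in sensor)
    return cov / math.sqrt(var_v * var_s)


sensor = [1.0, -1.0, 1.0, -1.0]
offset = [s + 0.5 for s in sensor]  # same shape, constant bias
print(nmse_indicator(offset, sensor), corr_indicator(offset, sensor))  # 0.75 1.0
```

The biased record keeps ρ at exactly 1 while NMSE drops to 0.75, which illustrates why NMSE discriminates more sharply between methods; a sign-flipped record drives NMSE below zero.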

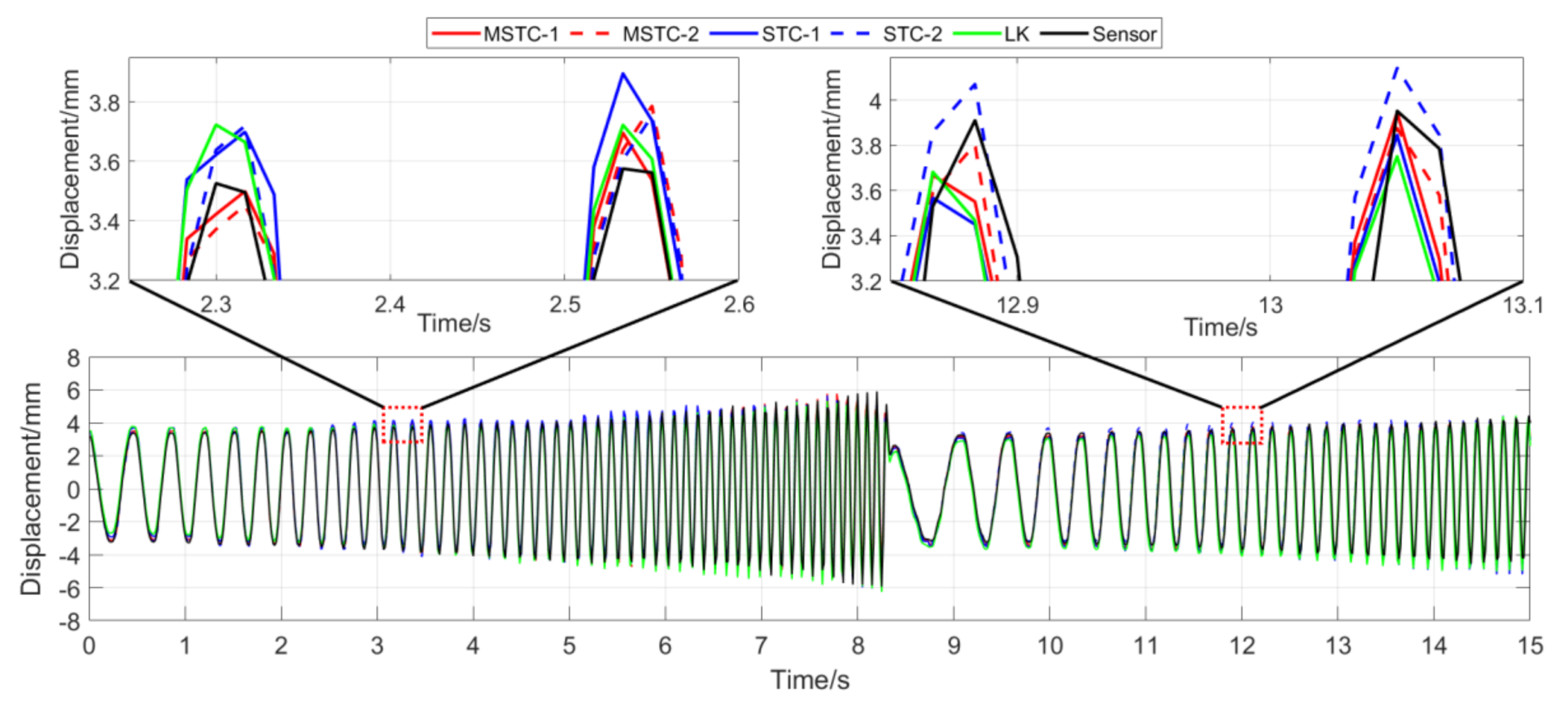

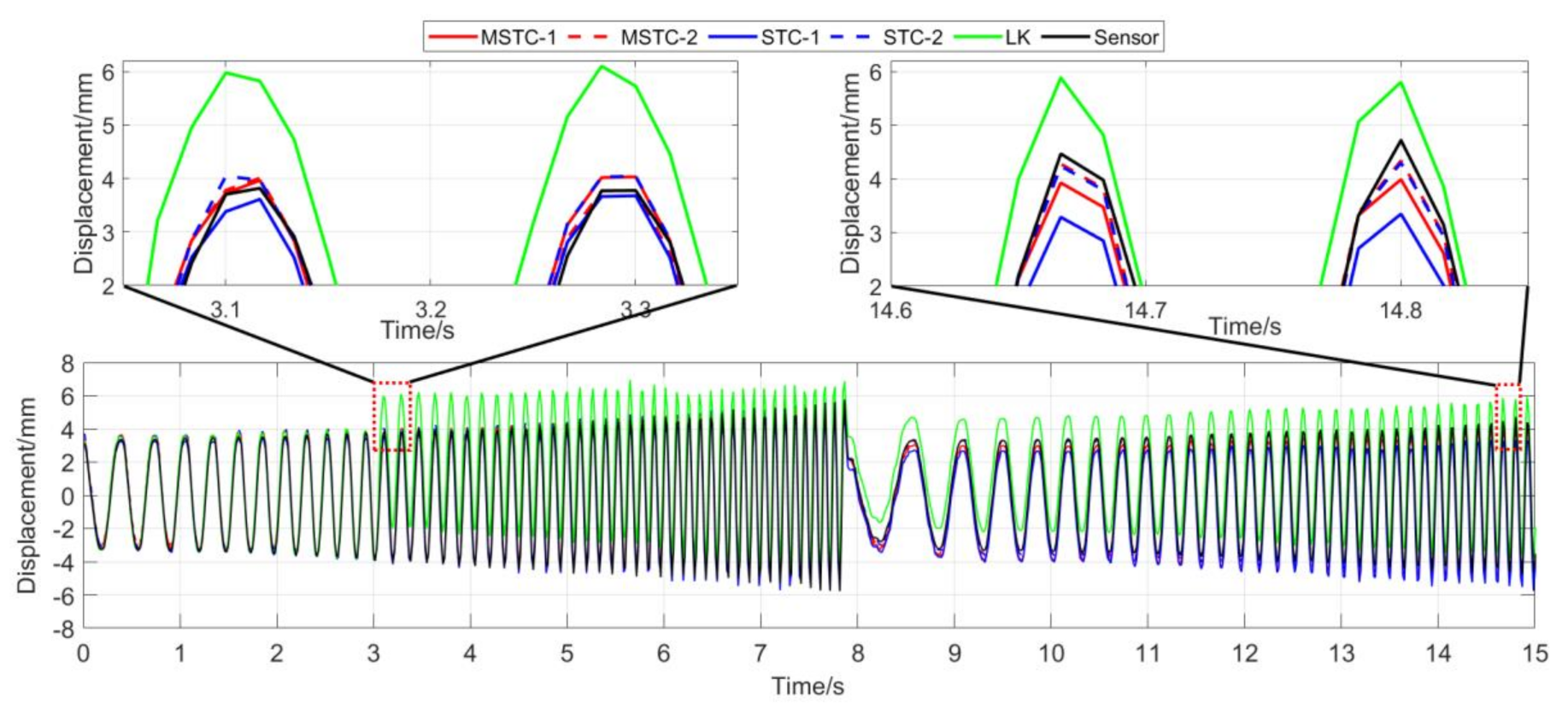

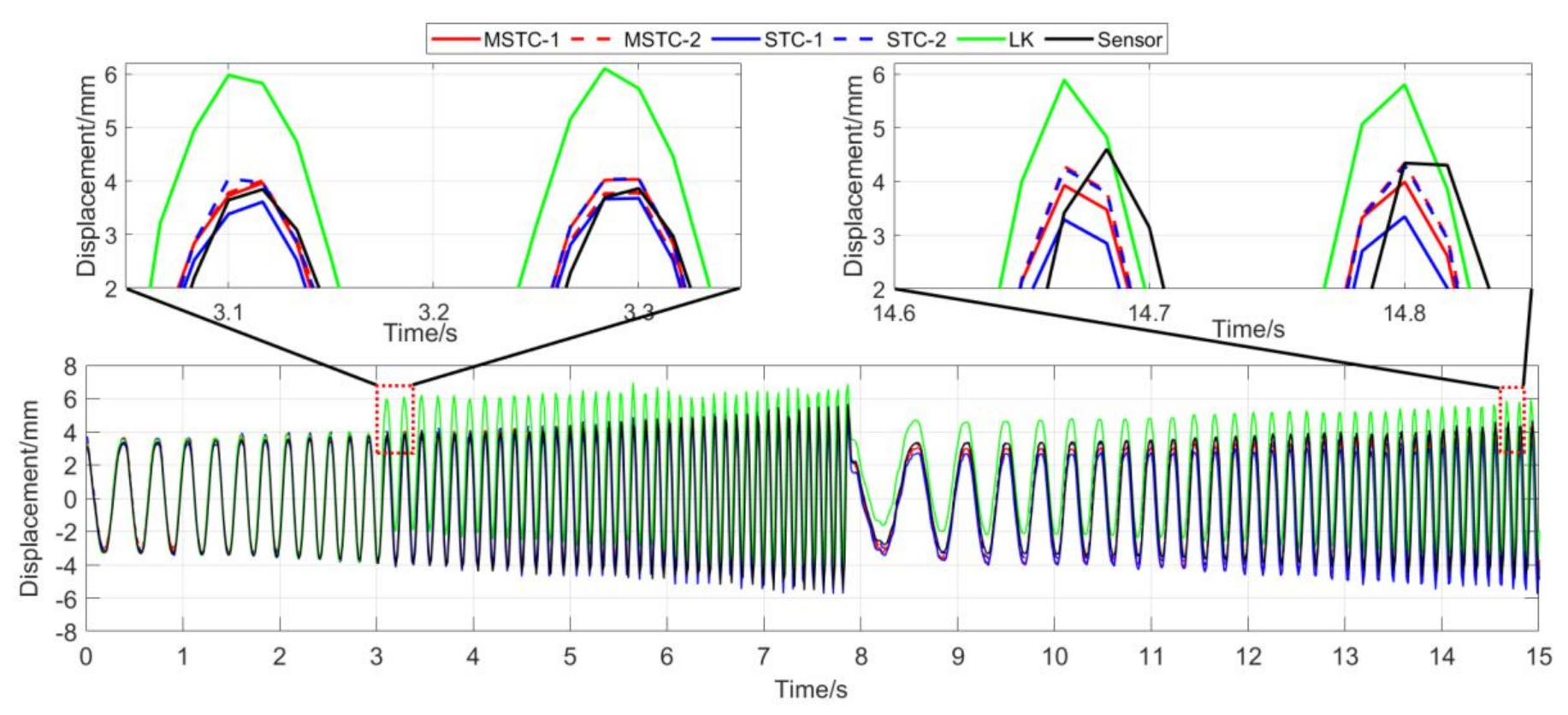

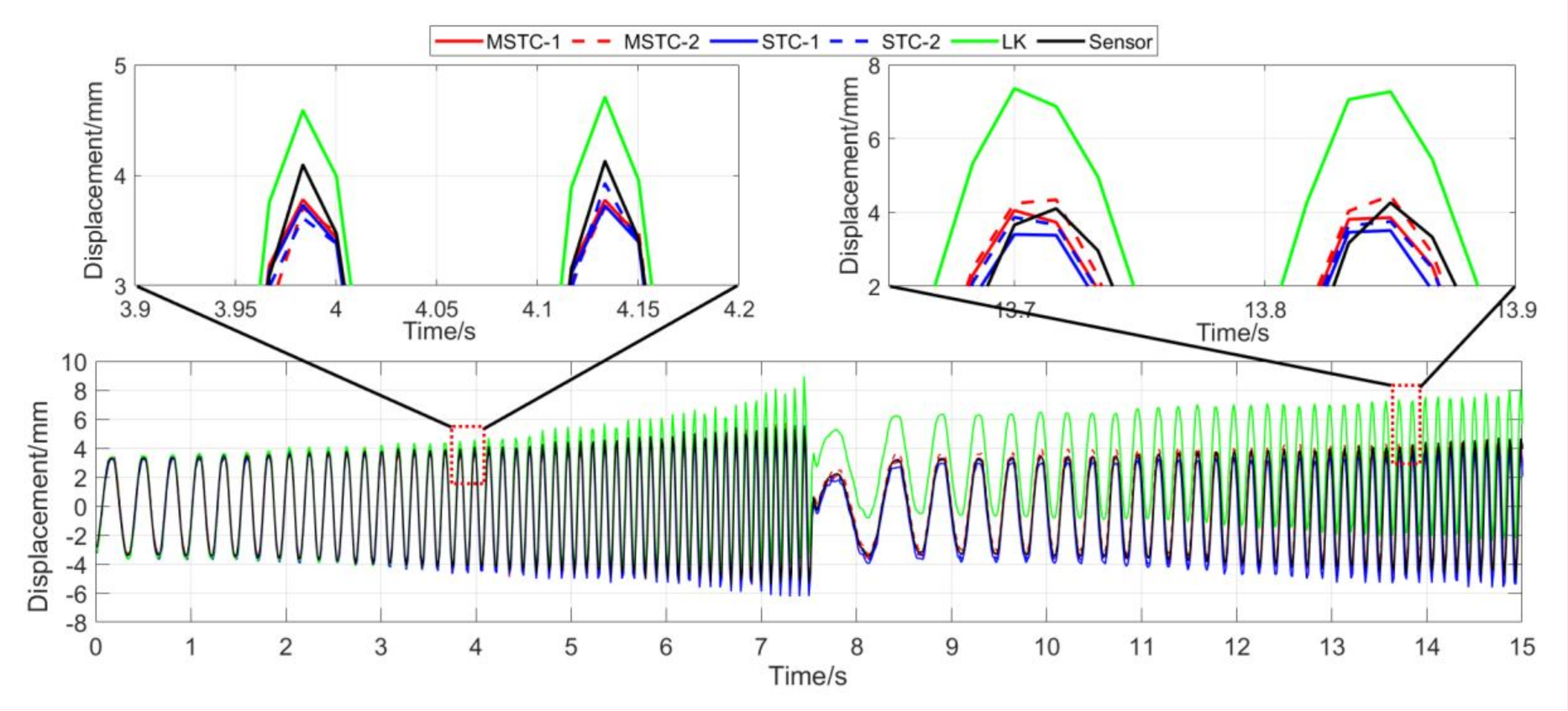

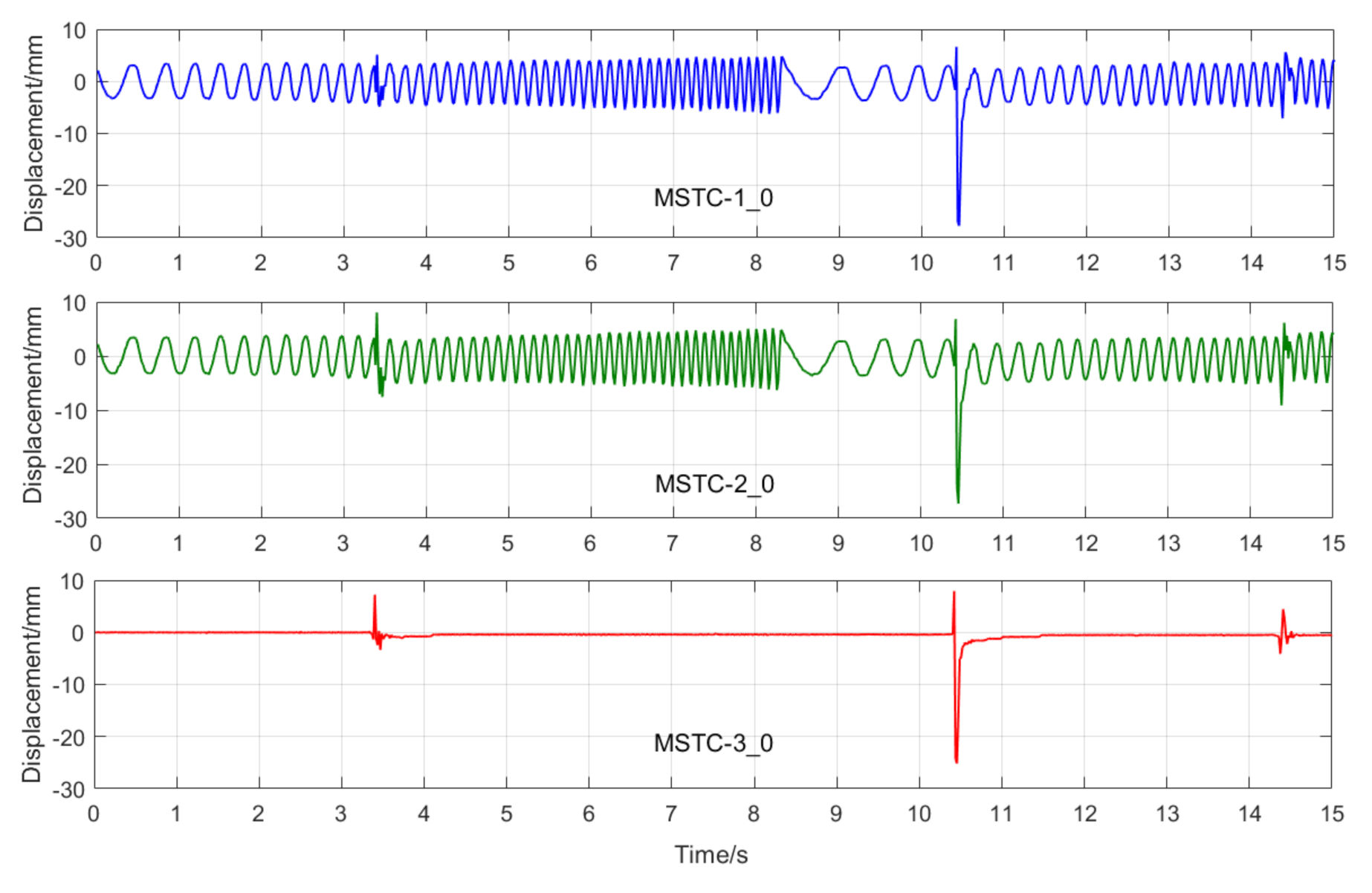

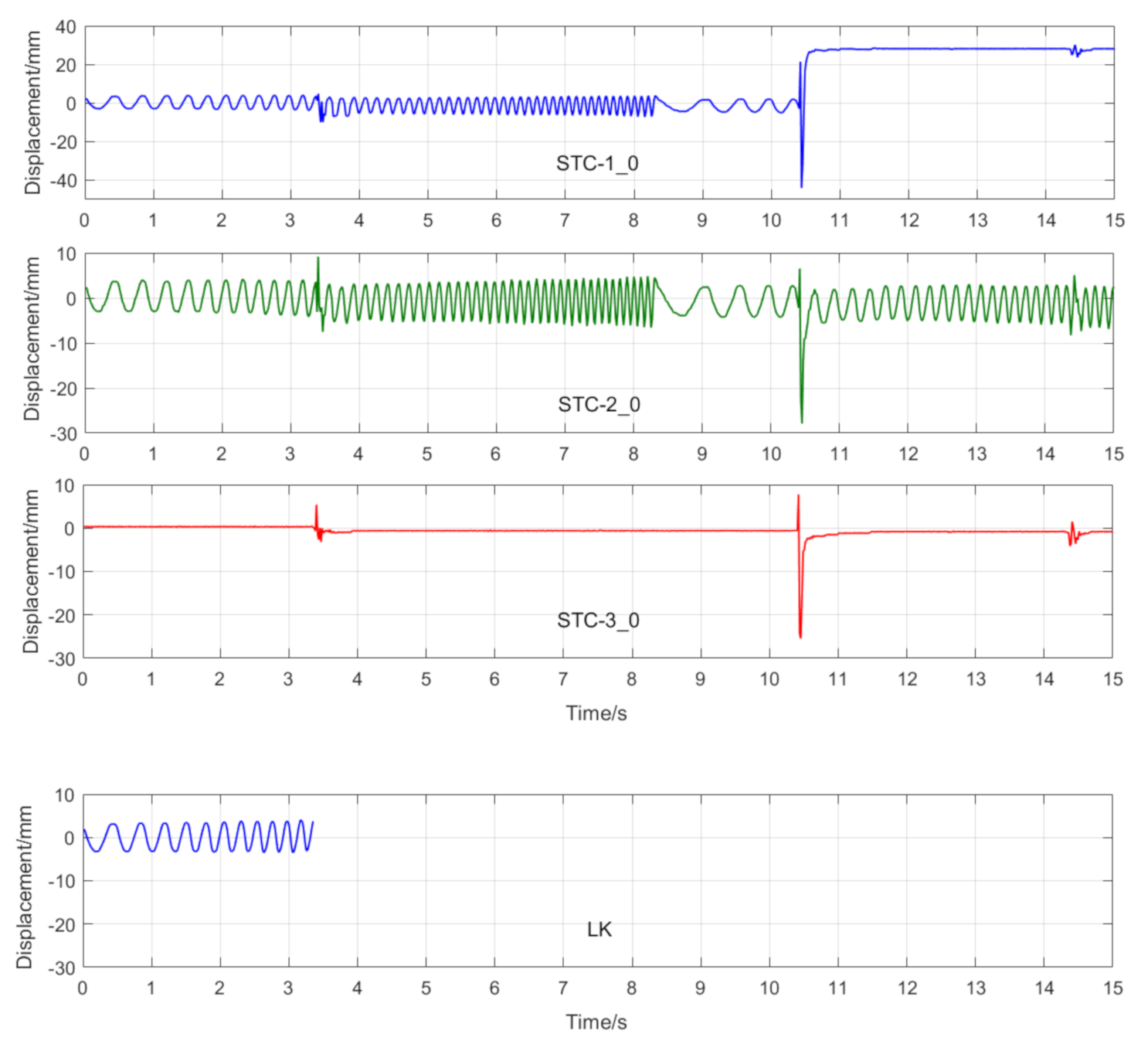

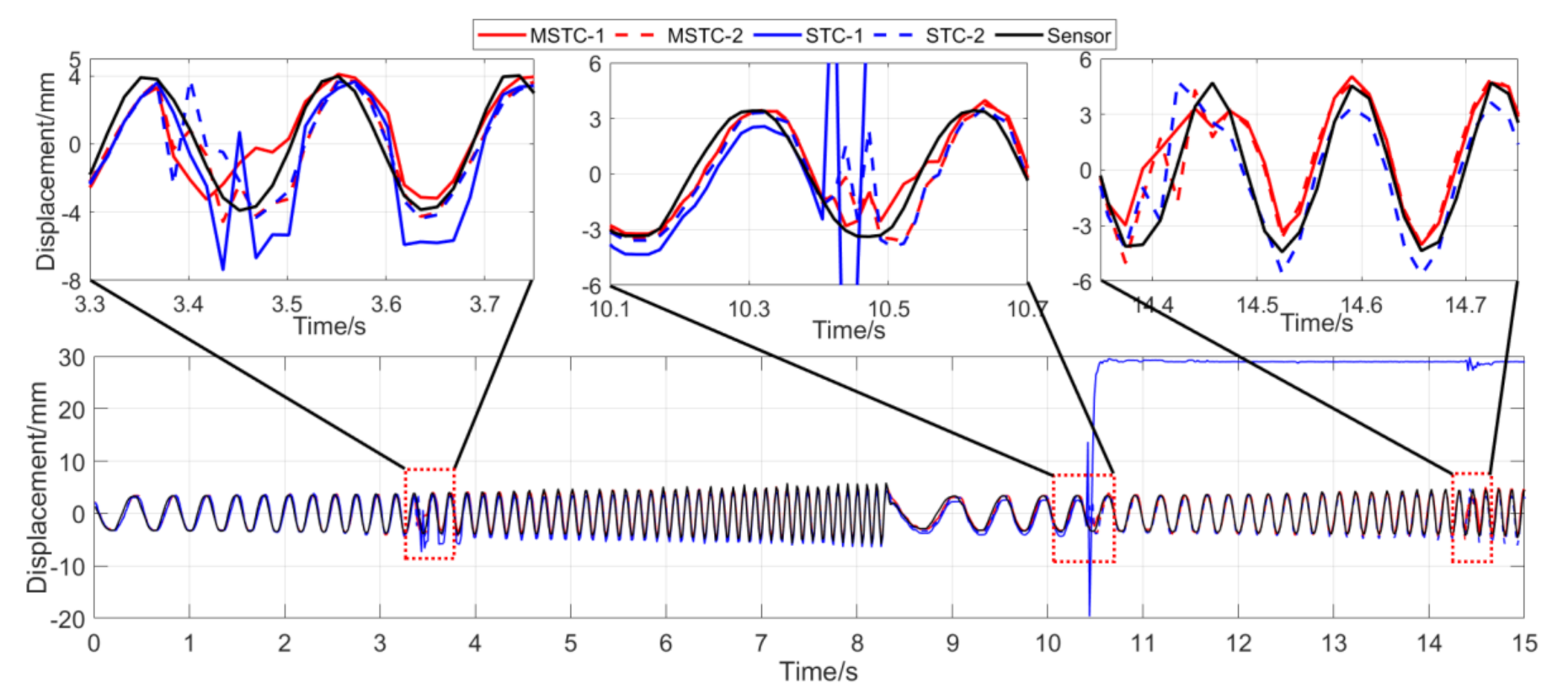

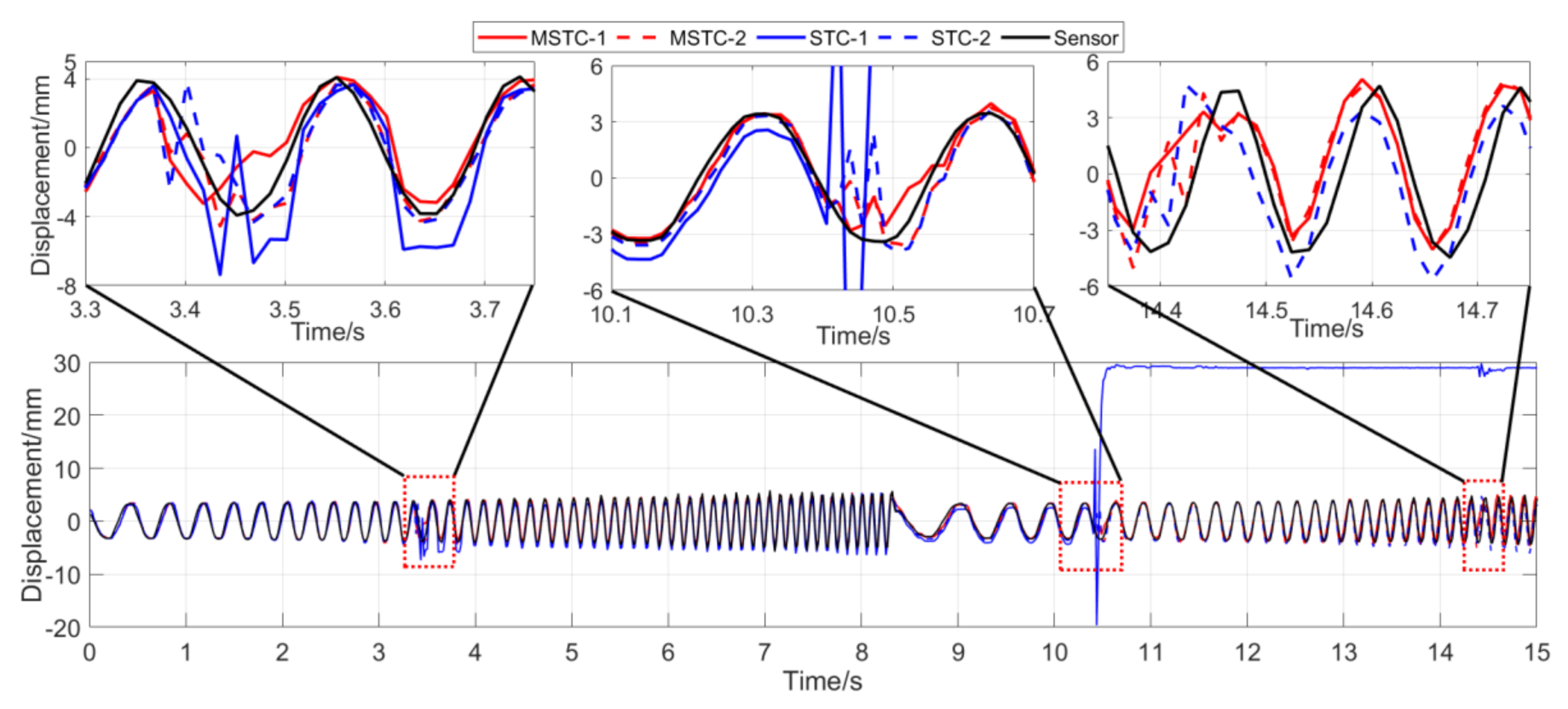

The final measurement results for the first 15 s of the time history in all four scenarios are shown and compared in Figures 14–22 and Tables 3–6. Therein, MSTC-1 and MSTC-2 denote the results for the first and second target points identified by the MSTC method, respectively; STC-1 and STC-2 denote those obtained by the STC method; LK denotes those obtained by the characteristic OF method; and sensor denotes the LVDT data. Note that the LVDT sampling rate is 100 Hz; the LVDT data are down-sampled to match the vision measurements at either the theoretical or the actual frame rate [27].
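One way to bring the 100 Hz LVDT record onto the video time base is linear interpolation at the frame times. The Python sketch below assumes that the first video frame and the first sensor sample are synchronized and that linear interpolation is acceptable; the down-sampling procedure of Reference [27] may differ, and `resample_to_frames` is a hypothetical name.

```python
from typing import List, Sequence


def resample_to_frames(sensor: Sequence[float], sensor_rate: float,
                       fps: float, n_frames: int) -> List[float]:
    """Linearly interpolate a uniformly sampled record onto frame times k / fps.

    Assumes the first video frame and the first sensor sample are synchronized.
    """
    out = []
    for k in range(n_frames):
        pos = k / fps * sensor_rate  # fractional sensor-sample index of frame k
        i = int(pos)
        if i + 1 >= len(sensor):
            raise ValueError("sensor record is too short for the requested frames")
        w = pos - i
        out.append((1.0 - w) * sensor[i] + w * sensor[i + 1])
    return out


# A ramp equal to its own time stamp makes the interpolation easy to check
lvdt = [i / 100.0 for i in range(1600)]  # 16 s at 100 Hz (synthetic)
at_actual = resample_to_frames(lvdt, 100.0, 59.96, 900)
at_theory = resample_to_frames(lvdt, 100.0, 60.0, 900)
print(at_actual[-1] - at_theory[-1])  # timing mismatch at the last frame, in seconds
```

Resampling the same sensor record with the two frame rates shows that the reference signal itself shifts by about 0.01 s by the last frame, which is why the choice of frame rate also changes the sensor extremes.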

In Scenario IV, MSTC-1_0, MSTC-2_0, and MSTC-3_0 denote the raw results for the three target points identified by the MSTC method, which include the camera-jitter influence. The corresponding displacements of the first and second target points, denoted MSTC-1 and MSTC-2, respectively, are then obtained by removing the camera-jitter influence, i.e., by subtracting MSTC-3_0 from MSTC-1_0 and MSTC-2_0. Similar definitions hold for STC-1_0, STC-2_0, STC-3_0, STC-1, and STC-2 with the STC method.
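The jitter compensation is a sample-by-sample subtraction of the stationary reference track. This simple form assumes that the jitter shifts all target points equally in the image, i.e., mainly translational jitter with the points at similar depth; the helper name and the sample values below are hypothetical.

```python
from typing import List, Sequence


def remove_camera_motion(target_raw: Sequence[float],
                         reference_raw: Sequence[float]) -> List[float]:
    """Subtract the apparent motion of a stationary reference point (e.g.
    MSTC-3_0) from a target's raw track (e.g. MSTC-1_0)."""
    if len(target_raw) != len(reference_raw):
        raise ValueError("tracks must have the same length")
    return [d - r for d, r in zip(target_raw, reference_raw)]


true_motion = [0.0, 1.0, 2.0, 1.0]       # hypothetical structural displacement
jitter = [0.0, 0.5, -0.5, 0.25]          # hypothetical camera-induced shift
raw_target = [m + j for m, j in zip(true_motion, jitter)]
print(remove_camera_motion(raw_target, jitter))
```

Because the reference point does not move physically, its track contains only the camera-induced shift, so the difference recovers the structural motion.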

Observations on the results will be illustrated in the following.

7.2.1. Scenario I

- (1)

In the absence of interference, all three vision methods can accurately capture the sphere’s movement against the complex background; the two STC-type methods perform better than the OF method, and the MSTC method provides the results most consistent with the LVDT data.

- (2)

Both overall indicators, i.e., NMSE and ρ, lead to the same ranking of the different methods, and NMSE shows higher sensitivity. In the 59.96 FPS case, for example, ρ remains larger than 0.99 for all methods, while NMSE takes 0.992, 0.988, and 0.987 for MSTC-1, STC-1, and LK, respectively.

- (3)

The measurement results obtained with the actual frame rate (59.96 FPS) are more accurate than those obtained with the theoretical frame rate (60 FPS). The inconsistency between the theoretical and actual frame rates of smartphones has been noticed by Yoon [14], and our study further shows that the corresponding displacement errors become even worse in a frequency-varying vibration. In the theoretical-FPS case, significant shifting occurs throughout the time history and grows in the later stage of vibration owing to error accumulation. The overall indicators also reflect this influence. For the MSTC-1 measurement, for example, using 60 FPS instead of 59.96 FPS makes NMSE drop from 0.992 to 0.953 and ρ from 0.996 to 0.977.

- (4)

The maximum and minimum values from the sensor vary with the frame rate because of the down-sampling. The corresponding deviations, although not wholly accurate, still provide an estimate of the local accuracy. For the MSTC method, the deviations are relatively small, i.e., less than 10%, implying good accuracy of the proposed method.

7.2.2. Scenario II

- (1)

When the illumination changes suddenly during the vibration, the displacement time history obtained with the OF method varies abruptly, indicating failure of the target tracking. However, the results of the STC-type methods remain stable and consistent with the LVDT data in shape and trend, and, again, the MSTC method gives the best performance.

- (2)

Both overall indicators lead to the same conclusion when comparing the methods’ performance under illumination variation, and, again, NMSE is more sensitive. In the 59.96 FPS case, for example, ρ remains larger than 0.96 for all methods, while NMSE takes 0.977, 0.957, and 0.778 for MSTC-1, STC-1, and LK, respectively. Compared with Scenario I, NMSE drops from 0.987 to 0.778 for LK, from 0.988 to 0.957 for STC-1, and from 0.992 to 0.977 for MSTC-1. The smallest loss of NMSE indicates that the MSTC method best resists the interference of illumination variation.

- (3)

The frame rate of the smartphone still has a significant influence on the measurement results, similar to Scenario I. For the MSTC-1 measurement, for example, using 60 FPS instead of 59.96 FPS makes NMSE drop from 0.977 to 0.885 and ρ from 0.989 to 0.945.

- (4)

The MSTC method and the STC method perform almost the same in measuring target 2, while the former performs better in measuring target 1. As listed in Table 4, NMSE takes 0.988 and 0.987 for MSTC-2 and STC-2, respectively, but 0.977 and 0.957 for MSTC-1 and STC-1, respectively. As shown in Figure 13, the proportion of pixels in the target-1 context with a motion tendency similar to that of the target center is much smaller than in the target-2 context, implying less auxiliary information and more interference for target 1. This makes target 1 more difficult to track in an interference environment. The MSTC method nevertheless achieves better indices than the STC method by distinguishing the favorable and unfavorable information in the context and correspondingly strengthening or weakening its influence during target tracking.

- (5)

The most significant deviations occur with the OF method. Moreover, the deviations of the MSTC method are smaller than those of the STC method, and target 2 shows better performance than target 1.

7.2.3. Scenario III

- (1)

When fog continuously interferes with the shooting environment during the vibration, the displacement time history obtained with the OF method shifts significantly from the accurate one, indicating an apparent failure of the target tracking. However, the MSTC and STC methods perform as well as in Scenario II.

- (2)

Both overall indicators lead to the same conclusion when comparing the methods’ performance under fog interference, and, again, NMSE is more sensitive. In the 59.96 FPS case, for example, ρ remains larger than 0.91 for all methods, while NMSE takes 0.969, 0.948, and 0.501 for MSTC-1, STC-1, and LK, respectively. Compared with Scenario I, NMSE drops from 0.987 to 0.501 for LK, from 0.988 to 0.948 for STC-1, and from 0.992 to 0.969 for MSTC-1. The smallest loss of NMSE indicates that the MSTC method best resists the fog interference.

- (3)

The frame rate of the smartphone still has a significant influence on the measurement results, similar to Scenarios I and II. For the MSTC-1 measurement, for example, using 60 FPS instead of 59.96 FPS makes NMSE drop from 0.969 to 0.872 and ρ from 0.986 to 0.939.

- (4)

The MSTC method, again, performs similarly to the STC method in measuring target 2 and better in measuring target 1, indicating a better anti-interference ability of the proposed method. As listed in Table 5, NMSE takes 0.972 and 0.971 for MSTC-2 and STC-2, respectively, but 0.969 and 0.948 for MSTC-1 and STC-1, respectively.

- (5)

The most significant deviations occur with the OF method. Moreover, the deviations of the MSTC method are smaller than those of the STC method.

7.2.4. Scenario IV

- (1)

When camera jitter occurs during the vibration, the OF method fails to find and match the critical feature points in the blurred images. The STC method may significantly mistrack the target point under substantial camera jitter, as shown by STC-1_0 after the second tap. The MSTC method, however, tracks all three target points well under all camera-jitter conditions.

- (2)

Both overall indicators lead to the same conclusion when comparing the methods’ performance under camera jitter, and, again, NMSE is more sensitive. Because it fails to track feature points after camera jitter, the OF method yields no value for either indicator. Moreover, for the STC method, the target mistracking caused by substantial camera jitter even leads to a negative NMSE, much smaller than the positive ρ. Compared with Scenario I, NMSE changes from 0.987 to no value for LK, from 0.988 to a negative value for STC-1, and from 0.992 to 0.925 for MSTC-1. The smallest loss of NMSE indicates that the MSTC method best resists the interference of camera jitter.

- (3)

The frame rate of the smartphone still has a significant influence on the measurement results, similar to Scenarios I–III. For the MSTC-1 measurement, for example, using 60 FPS instead of 59.96 FPS makes NMSE drop from 0.925 to 0.894 and ρ from 0.964 to 0.948.

- (4)

Compared with the STC method, the MSTC method performs slightly better in measuring target 2 and much better in measuring target 1, again demonstrating the better anti-interference ability of the proposed method. As listed in Table 6, NMSE takes 0.933 and 0.926 for MSTC-2 and STC-2, respectively, but 0.925 and a negative value for MSTC-1 and STC-1, respectively.

- (5)

The deviations of the MSTC method are smaller than those of the STC method, and target 2 shows better performance than target 1.

7.3. Computation Efficiency

After the iPhone 7 recorded the sphere vibration videos in the four scenarios, we performed all the above displacement identification with Matlab code on a laptop computer of moderate hardware configuration, i.e., an Intel Core i5-4200H 2.8 GHz CPU and 4 GB memory.

During identification, each 15-s vibration video at 60 FPS contains 900 frame images to be analyzed, the first of which is shown in Figure 13. The time consumed by the different vision methods in the four scenarios is listed in Table 7.

As listed in Table 7, the MSTC method takes 0.14–0.18 s to identify the displacement of one target between two adjacent frame images, compared with 0.12–0.16 s for the STC method and 0.08–0.10 s for the OF method. Despite a slight increment of about 0.02 s, introduced by the additional calculation of the context influence matrix, the MSTC method remains efficient enough to be considered for online measurement, similarly to the STC method, which has seen online tracking practice.
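The per-frame timings can be converted into a total processing time and an equivalent processing rate. The Python sketch below assumes the midpoint of the MSTC range (0.16 s), two tracked targets as in Scenarios I–III, and sequential processing of the targets; a parallel implementation would change the result. Comparing the achievable rate with the video frame rate indicates how much speed-up an online deployment would need.

```python
def processing_budget(sec_per_target_pair: float, n_targets: int,
                      n_frames: int, fps: float):
    """Return total processing time (s), achievable frame rate (frames/s) and
    its ratio to the video frame rate, assuming targets are processed one
    after another."""
    per_frame = sec_per_target_pair * n_targets
    total = per_frame * (n_frames - 1)  # one update per adjacent frame pair
    achievable = 1.0 / per_frame
    return total, achievable, achievable / fps


total, achievable, ratio = processing_budget(0.16, 2, 900, 60.0)
print(f"{total:.1f} s per video, {achievable:.2f} frames/s, {ratio:.3f} of real time")
```

Swapping in the STC or OF timings from Table 7 gives the corresponding figures for the other methods.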

7.4. Summary

To sum up, several conclusions can be drawn from the above observations:

- (1)

In vision-based measurement of engineering vibration, using the actual frame rate of the camera, rather than the theoretical one, is crucial to achieving an accurate displacement time history.

- (2)

To evaluate the performance of vision methods when interference is involved, the NMSE indicator is more discriminating than ρ.

- (3)

In an interference environment, the OF method is prone to mismatching feature points, leading to deviated or lost data. The conventional STC method is sensitive to target selection and effectively tracks targets whose context contains a large proportion of pixels with a motion tendency similar to that of the target center. The proposed MSTC method, however, reduces this sensitivity to target selection through in-depth processing of the context information and thus enhances the robustness of target tracking.

- (4)

The OF method has the highest computational efficiency in measuring target displacement, followed by the STC method and then the MSTC method. Although the MSTC method requires about 0.02 s more than the STC method per target and frame pair, its average time for tracking one target between adjacent frame images is well below 1 s, favoring its potential application in online measurement.

9. Conclusions

In this paper, we studied the application of computer vision technology to structural vibration monitoring. A multi-target monitoring method based on a motion-enhanced spatio-temporal context (MSTC) algorithm was proposed, which realizes synchronous measurement of multi-point dynamic displacements in an interference environment using smartphone-recorded video. A series of sine-sweep vibration experiments considering different interference factors was carried out to investigate the performance of the proposed method. The test results show good consistency across scenarios, indicating that the proposed method adapts well to complex backgrounds and strongly resists illumination variation, fog interference, and camera jitter. Moreover, the proposed method is insensitive to the selection of the target region and efficient in tracking targets, which benefits potential engineering applications.

Although the designed experiments involved several interference factors, a comprehensive performance validation still requires further investigations on objects of various profiles and textures, as well as field tests on real vibrating structures. These ongoing validations, together with further studies of more complex environmental changes such as occlusion, strong wind, and rain, are crucial to extending vision-based applications.