Stereo Imaging Using Hardwired Self-Organizing Object Segmentation

Abstract

1. Introduction

2. Materials and Methods

2.1. Stereo Vision Algorithms

2.1.1. Self-Organized Map

- (1) Initialize the weight vectors of the $M \times N$ neurons.

- (2) Repeat until convergence:

  - (a) Select the next input vector $x_i$ from the data set:

    - (i) Find the unit $W_{j^*}$ that best matches the input vector $x_i$.

    - (ii) Update the weights of the winner $W_{j^*}$ and its neighboring neurons $W_k$, where $h_{j^*k}(t)$ is the Gaussian neighborhood function.

  - (b) Select the next input vector $x_i$ from the data set.

  - (c) Decrease the neighborhood size $\sigma(t)$ that defines the topological neighborhoods.
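The training loop above can be sketched in a few lines of Python. This is a minimal illustration, not the hardware design: it assumes the common Kohonen formulation (Euclidean best-matching unit, update $W_k \leftarrow W_k + \eta(t)\,h_{j^*k}(t)\,(x_i - W_k)$ with $h_{j^*k}(t) = \exp(-d_{j^*k}^2 / 2\sigma(t)^2)$), and the exponential decay schedules, the fixed epoch count used in place of a convergence test, and the names `train_som` and `best_matching_unit` are all assumptions introduced here.

```python
import math
import random


def best_matching_unit(weights, x):
    """Return the grid index (row, col) whose weight vector is closest to x."""
    return min(weights,
               key=lambda j: sum((xi - wi) ** 2 for xi, wi in zip(x, weights[j])))


def train_som(data, rows, cols, epochs=20, eta0=0.5, sigma0=None, seed=0):
    """Train a rows x cols SOM on a list of equal-length input vectors."""
    rng = random.Random(seed)
    dim = len(data[0])
    if sigma0 is None:
        sigma0 = max(rows, cols) / 2.0  # assumed initial neighborhood width
    # (1) Initialize the weight vectors of the M x N neurons.
    weights = {(r, c): [rng.random() for _ in range(dim)]
               for r in range(rows) for c in range(cols)}
    # (2) A fixed number of epochs stands in for "repeat until convergence".
    for t in range(epochs):
        # Assumed exponential decay of learning rate and neighborhood size.
        eta = eta0 * math.exp(-t / epochs)
        sigma = sigma0 * math.exp(-t / epochs)
        for x in data:
            # (i) Find the best-matching unit for the current input vector.
            bmu = best_matching_unit(weights, x)
            # (ii) Pull the winner and its grid neighbors toward x,
            #      weighted by a Gaussian of the grid distance to the winner.
            for k, w in weights.items():
                d2 = (k[0] - bmu[0]) ** 2 + (k[1] - bmu[1]) ** 2
                h = math.exp(-d2 / (2.0 * sigma ** 2))
                for i in range(dim):
                    w[i] += eta * h * (x[i] - w[i])
    return weights
```

For color segmentation, `data` would hold normalized pixel color vectors, and `best_matching_unit(weights, pixel)` would then give the class index of each pixel.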

2.1.2. Connected Component Labeling
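
As a point of reference, connected component labeling on a binary mask can be sketched as below. This is a generic breadth-first flood fill with 4-connectivity, chosen here for brevity; the connectivity, the traversal strategy, and the name `label_components` are assumptions and may differ from the variant used in the paper's hardware module.

```python
from collections import deque


def label_components(mask):
    """Label 4-connected foreground regions of a binary image.

    mask is a list of rows of 0/1 values. Returns (labels, count), where
    labels has the same shape as mask and background pixels stay 0.
    """
    height, width = len(mask), len(mask[0])
    labels = [[0] * width for _ in range(height)]
    count = 0
    for r in range(height):
        for c in range(width):
            if not mask[r][c] or labels[r][c]:
                continue  # background, or already part of a labeled region
            count += 1
            labels[r][c] = count
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                # Visit the four direct neighbors of the current pixel.
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and mask[ny][nx] and not labels[ny][nx]):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count
```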

2.1.3. Stereo Matching
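
The idea behind sum-of-absolute-difference (SAD) matching can be illustrated on a single scanline. The sketch below assumes rectified images, so that a block in the reference row is searched only along the same row of the other image, and it takes the disparity as the horizontal shift with the lowest SAD cost. The function names, the one-dimensional block, and the search range are illustrative assumptions, not the paper's implementation.

```python
def sad(block_a, block_b):
    """Sum of absolute differences between two equal-length pixel blocks."""
    return sum(abs(a - b) for a, b in zip(block_a, block_b))


def best_disparity(ref_row, target_row, x, half, max_disp):
    """Find the shift d in [0, max_disp] that minimizes the SAD cost.

    The reference block is centered at column x in ref_row; the candidate
    block is centered at column x - d in target_row.
    """
    ref = ref_row[x - half: x + half + 1]
    best_d, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        start = x - d - half
        if start < 0:
            break  # candidate block would fall off the left image edge
        cost = sad(ref, target_row[start: start + 2 * half + 1])
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d, best_cost
```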

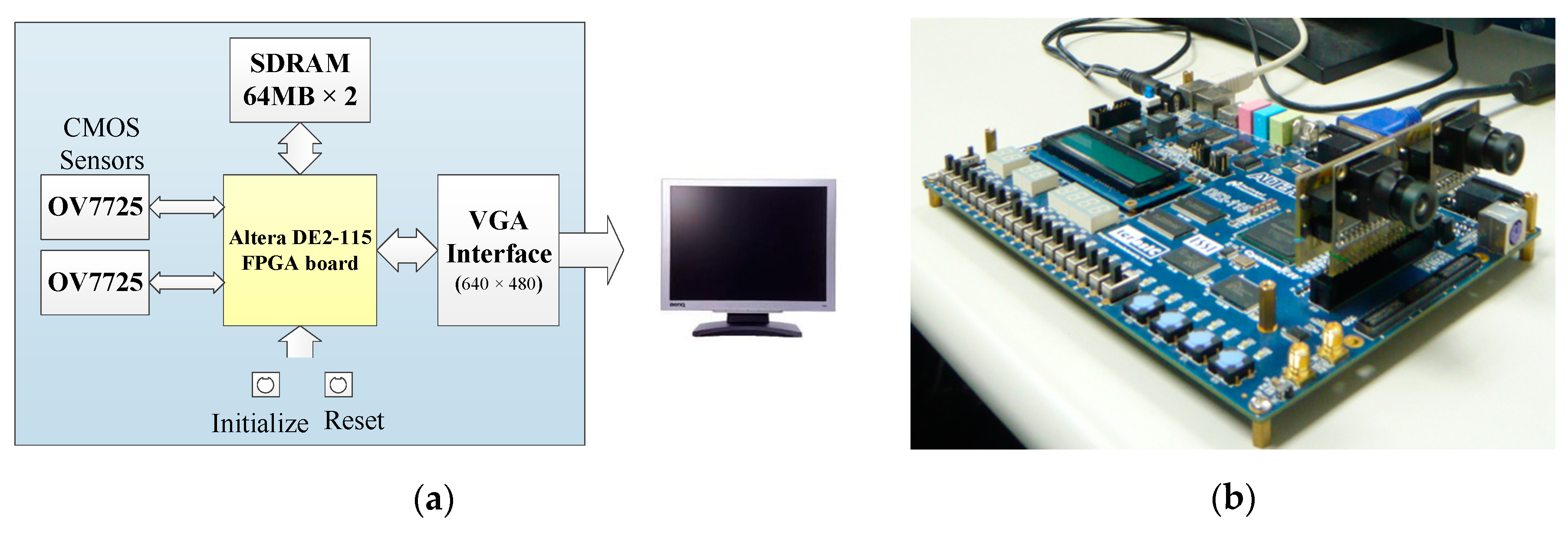

2.2. Hardware Architecture of the Embedded Stereo Vision System

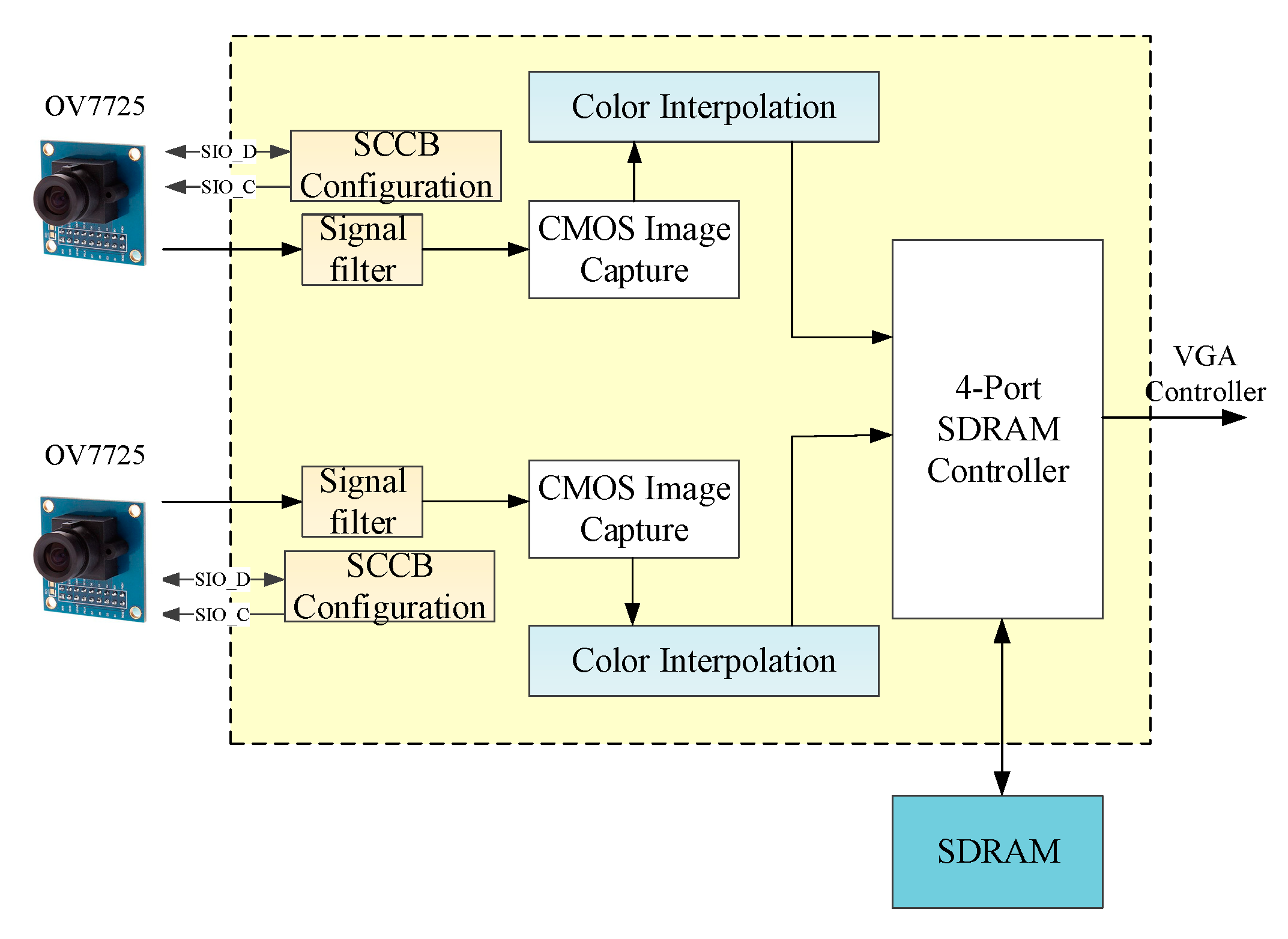

2.2.1. Dual Camera Vision Module

2.2.2. Self-Organized-Map-Based Image Segmentation Module

SOM Training Module

Random Generator

Lookup Table of Gaussian Function

SOM Color Classification Module

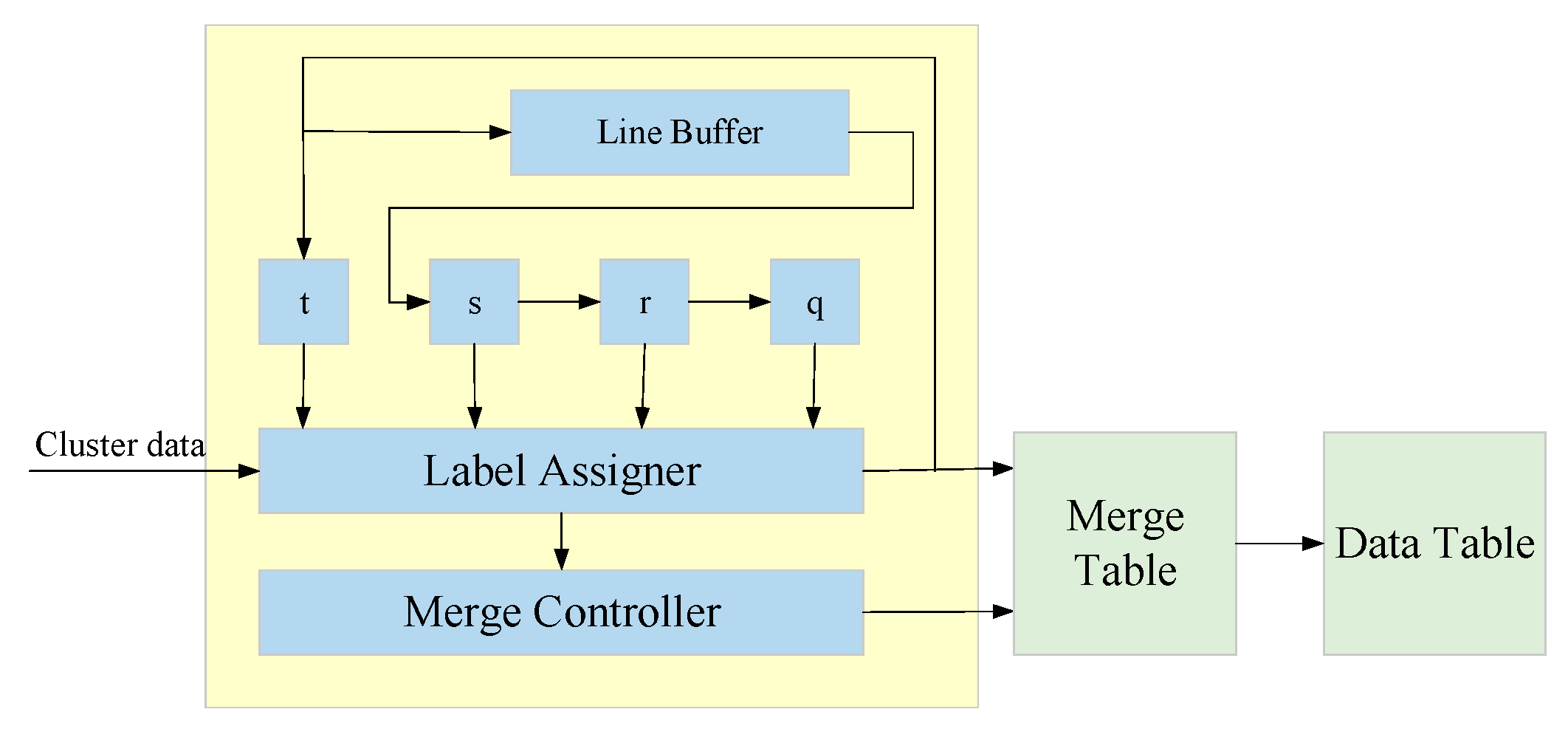

2.2.3. Connected Component Labeling Module

Sum-of-Absolute-Difference-Based Stereo Matching Module

Pipeline Controller and System Integration

3. Results

3.1. Software Simulation and Verification

3.2. Performance of the Embedded Stereo Vision System

3.2.1. System Performance Analysis

3.2.2. Hardware Resource Utilization and Performance Analysis

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Sahoo, P.K.; Soltani, S.; Wong, A.K.; Chan, Y.C. A survey of thresholding techniques. Comput. Vis. Graph. Image Process. 1988, 4, 233–260.

2. Wesolkowski, S. Color image edge detection and segmentation: A comparison of the vector angle and the Euclidean distance color similarity measures. Master’s thesis, Systems Design Engineering, University of Waterloo, Waterloo, ON, Canada, 1999.

3. Li, Z.; Yang, J.; Liu, G.; Cheng, Y.; Liu, C. Unsupervised range-constrained thresholding. Pattern Recognit. Lett. 2011, 32, 392–402.

4. Hu, M.; Li, M.; Wang, R.G. Application of an improved Otsu algorithm in image segmentation. J. Electron. Meas. Instrum. 2010, 24, 443–449.

5. Long, J.W.; Shen, X.J.; Chen, H.P. Adaptive minimum error threshold algorithm. Acta Autom. Sin. 2012, 38, 1134–1144.

6. Liu, J.; Jin, W.D. Three-dimensional adaptive minimum error thresholding segmentation algorithm. J. Image Graph. 2013, 18, 1416–1424.

7. Wu, Y.Q.; Zhang, B.C.; Wu, J.M. An infrared image segmentation method based on within-class absolute difference and chaotic particle swarm optimization. Acta Opt. Sin. 2010, 30, 79–85.

8. Qiao, L.Y.; Xu, L.X.; Gao, M. Fast maximum entropy thresholding based on two-dimensional histogram oblique segmentation in infrared imaging guidance. Infrared Laser Eng. 2013, 42, 1691–1699.

9. Al-Amri, S.S.; Kalyankar, N.V.; Khamitkar, S.D. Image segmentation by using edge detection. Int. J. Comput. Sci. Eng. 2010, 2, 804–807.

10. Angelina, S.; Suresh, L.P.; Krishna Veni, S.H. Image segmentation based on genetic algorithm for region growth and region merging. In Proceedings of the International Conference on Computing, Electronics and Electrical Technologies (ICCEET), Kumaracoil, India, 21–22 March 2012; pp. 970–974.

11. Khokher, M.R.; Ghafoor, A.; Siddiqui, A.M. Image segmentation using multilevel graph cuts and graph development using fuzzy rule-based system. IET Image Process. 2013, 7, 201–211.

12. Chai, Y.H.; Gao, L.Q.; Lu, S.; Tian, L. Wavelet-based watershed for image segmentation algorithm. In Proceedings of the Sixth World Congress on Intelligent Control and Automation, Dalian, China, 21–23 June 2006; pp. 9595–9599.

13. Kang, W.X.; Yang, Q.Q.; Liang, R.R. The comparative research on image segmentation algorithms. In Proceedings of the 2009 First International Workshop on Education Technology and Computer Science, Wuhan, China, 7–8 March 2009; pp. 703–707.

14. Ilea, D.E.; Whelan, P.F. Color image segmentation using a spatial k-means clustering algorithm. In Proceedings of the Irish Machine Vision & Image Processing Conference 2006 (IMVIP 2006), Dublin, Ireland, 30 August–1 September 2006; pp. 146–153.

15. Shen, Y.; Li, Y. Robust image segmentation algorithm using fuzzy clustering based on kernel-induced distance measure. In Proceedings of the International Conference on Computer Science and Software Engineering, Wuhan, China, 12–14 December 2008; pp. 1065–1068.

16. Fauvel, M.; Tarabalka, Y.; Benediktsson, J.A.; Chanussot, J.; Tilton, J.C. Advances in spectral-spatial classification of hyperspectral images. Proc. IEEE 2013, 101, 652–675.

17. Torbati, N.; Ayatollahi, A.; Kermani, A. An efficient neural network based method for medical image segmentation. Comput. Biol. Med. 2014, 44, 76–84.

18. Yeo, N.C.; Lee, K.H.; Venkatesh, Y.V.; Ong, S.H. Colour image segmentation using the self-organizing map and adaptive resonance theory. Image Vis. Comput. 2005, 23, 1060–1079.

19. Ortiz, A.; Gorriz, J.M.; Ramirez, J.; Salas-Gonzalez, D. Improving MR brain image segmentation using self-organising maps and entropy-gradient clustering. Inf. Sci. 2014, 262, 117–136.

20. Kurdthongmee, W. A novel Kohonen SOM-based image compression architecture suitable for moderate density FPGAs. Image Vis. Comput. 2007, 26, 1094–1105.

21. Porrmann, M.; Ruping, S.; Ruckert, U. SOM hardware with acceleration module for graphical representation of the learning process. In Proceedings of the Seventh International Conference on Microelectronics for Neural, Fuzzy and Bio-Inspired Systems, Granada, Spain, 9 April 1999; pp. 380–386.

22. Manolakos, I.; Logaras, E. High Throughput Systolic SOM IP Core for FPGAs. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Honolulu, HI, USA, 15–20 April 2007; Volume 2, pp. II-61–II-64.

23. Kohonen, T. Self-Organization and Associative Memory, 3rd ed.; Springer: Berlin/Heidelberg, Germany, 1988.

24. Zhang, X.; Chen, Z. SAD-Based Stereo Vision Machine on a System-on-Programmable-Chip (SoPC). Sensors 2013, 13, 3014–3027.

25. David, R. Grafcet: A powerful tool for specification of logic controllers. IEEE Trans. Control. Syst. Technol. 1995, 3, 253–268.

26. Alfke, P. Efficient Shift Registers, LFSR Counters, and Long Pseudo-Random Sequence Generators. Available online: https://www.xilinx.com/support/documentation/application_notes/xapp052.pdf (accessed on 14 October 2020).

27. Van Ginneken, B.; Frangi, A.F.; Staal, J.J.; ter Haar Romeny, B.M.; Viergever, M.A. Active shape model segmentation with optimal features. IEEE Trans. Med. Imaging 2002, 21, 924–933.

28. Jin, M.; Maruyama, T. Fast and accurate stereo vision system on FPGA. ACM Trans. Reconfig. Technol. Syst. 2014, 7, 1–24.

29. Wang, W.; Yan, J.; Xu, N.; Wang, Y.; Hsu, F.H. Real-time high-quality stereo vision system in FPGA. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 1696–1708.

30. Michalik, S.; Michalik, S.; Naghmouchi, J.; Berekovic, M. Real-time smart stereo camera based on FPGA-SoC. In Proceedings of the 2017 IEEE-RAS 17th International Conference on Humanoid Robotics (Humanoids), Birmingham, UK, 15–17 November 2017; pp. 311–317.

| Method | Measure | Opera House | Sky | Ocean | Total | Correct Rate |
|---|---|---|---|---|---|---|
| K-Means | TP | 7058 | 38,400 | 15,994 | 61,452 | 0.9104 |
| | FN | 0 | 26 | 0 | 26 | |
| | FP | 3416 | 131 | 1455 | 5002 | |
| | Overlap | 0.673 | 0.995 | 0.916 | - | - |
| SOM | TP | 9096 | 38,420 | 14,280 | 61,796 | 0.9154 |
| | FN | 12 | 16 | 0 | 28 | |
| | FP | 1378 | 111 | 3169 | 4658 | |
| | Overlap | 0.867 | 0.996 | 0.818 | - | - |

Image size: 300 × 255. Opera House, Sky, and Ocean are regions of the test image.
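
The overlap rows in the table above are consistent with the ratio TP / (TP + FN + FP) computed per region, with the result truncated to three decimals. The short check below reproduces them under that assumption; the formula is inferred from the tabulated numbers, and the function name `overlap` is introduced here for illustration.

```python
def overlap(tp, fn, fp):
    """Overlap of a segmented region with its ground truth: TP / (TP + FN + FP)."""
    return tp / (tp + fn + fp)


# (TP, FN, FP) per region, copied from the table above.
counts = {
    "K-Means": {"Opera House": (7058, 0, 3416),
                "Sky": (38400, 26, 131),
                "Ocean": (15994, 0, 1455)},
    "SOM": {"Opera House": (9096, 12, 1378),
            "Sky": (38420, 16, 111),
            "Ocean": (14280, 0, 3169)},
}

for method, regions in counts.items():
    for region, (tp, fn, fp) in regions.items():
        print(f"{method:8s} {region:12s} overlap = {overlap(tp, fn, fp):.4f}")
```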

| Object | Center Point of Original Object | Center Point of Target Block | Disparity | Estimated Depth | Observed Depth |
|---|---|---|---|---|---|
| 1 | (247,197) | (116,197) | 131 | 18.8 cm | 19.0 cm |
| 2 | (158,142) | (76,142) | 82 | 36.2 cm | 35.0 cm |
| 3 | (207,162) | (153,162) | 54 | 56.0 cm | 56.0 cm |
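
The disparity column in the table above equals the horizontal offset between the two center points, which share the same row coordinate. The sketch below recomputes the disparities and the absolute difference between estimated and observed depth from the tabulated values. It does not model the disparity-to-depth mapping, which is not stated in this section, and the variable and function names are illustrative.

```python
# (original center, target center, estimated depth in cm, observed depth in cm)
objects = [
    ((247, 197), (116, 197), 18.8, 19.0),
    ((158, 142), (76, 142), 36.2, 35.0),
    ((207, 162), (153, 162), 56.0, 56.0),
]


def disparity(original, target):
    """Horizontal offset in pixels between two matched center points."""
    assert original[1] == target[1], "matched points should lie on the same row"
    return original[0] - target[0]


disparities = [disparity(o, t) for o, t, _, _ in objects]
depth_errors = [round(abs(est - obs), 1) for _, _, est, obs in objects]

print(disparities)   # pixel disparities per object
print(depth_errors)  # absolute depth error per object, in cm
```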

| Resource | Dual Camera Vision System | SDRAM | SOM-Based Image Segmentation | VGA Controller |
|---|---|---|---|---|
| System clock | 48 MHz | 122.55 MHz | 6.63 MHz–105.88 MHz | 265.82 MHz |
| Total logic elements | 2117/114,480 (2%) | 1672/114,480 (1%) | 22,498/114,480 (20%) | 79/114,480 (<1%) |
| Total registers | 1429 | 757 | 2709 | 56 |
| Total memory bits | 425,952/3,981,312 (11%) | 49,152/3,981,312 (1%) | 0/3,981,312 (0%) | 0/3,981,312 (0%) |

| Module | Measure | Manolakos’s Method [22] | Kurdthongmee’s Method [20] | Porrmann’s Method [21] | Our Method |
|---|---|---|---|---|---|
| SOM Training Module | Learning rate (vectors/s) | 68,900 | N/C | 94,000 | 413,125 |
| | System clock | 148 MHz | 24.2 MHz | 40 MHz | 6.63 MHz |
| SOM Color Classification Module | Classification rate (vectors/s) | 144,000 | N/C | 250,000 | 42,265,000 |
| | System clock | 148 MHz | 24.2 MHz | 40 MHz | 105.88 MHz |

| | Software Program | Pipelined Hardware Module |
|---|---|---|
| Clock Frequency | 3 GHz | 84.53 MHz |
| Object Segmentation | 0.039 s | 0.004 s |
| CCL | 0.037 s | 0.066 s |
| Stereo Matching | 0.122 s | 0.064 s |
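
The timings in the table above can be turned into per-stage speedups with a few lines of arithmetic. The sketch below uses only the tabulated values; summing the three stages into a total assumes they run back to back, which is an assumption made here for illustration.

```python
software = {"Object Segmentation": 0.039, "CCL": 0.037, "Stereo Matching": 0.122}
hardware = {"Object Segmentation": 0.004, "CCL": 0.066, "Stereo Matching": 0.064}

# Speedup > 1 means the pipelined hardware module is faster than software.
speedup = {stage: software[stage] / hardware[stage] for stage in software}
total_software = sum(software.values())
total_hardware = sum(hardware.values())

for stage, ratio in speedup.items():
    print(f"{stage:20s} {ratio:.2f}x")
print(f"{'Total':20s} {total_software / total_hardware:.2f}x")
```

With these numbers, object segmentation gains the most from the hardware pipeline, while CCL is slower in hardware than in software, so the overall gain is smaller than the segmentation figure alone suggests.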

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chen, C.-H.; Lan, G.-W.; Chen, C.-Y.; Huang, Y.-H. Stereo Imaging Using Hardwired Self-Organizing Object Segmentation. Sensors 2020, 20, 5833. https://doi.org/10.3390/s20205833
