A Real-Time Vehicle Detection System under Various Bad Weather Conditions Based on a Deep Learning Model without Retraining

Abstract

1. Introduction

2. The Proposed Method

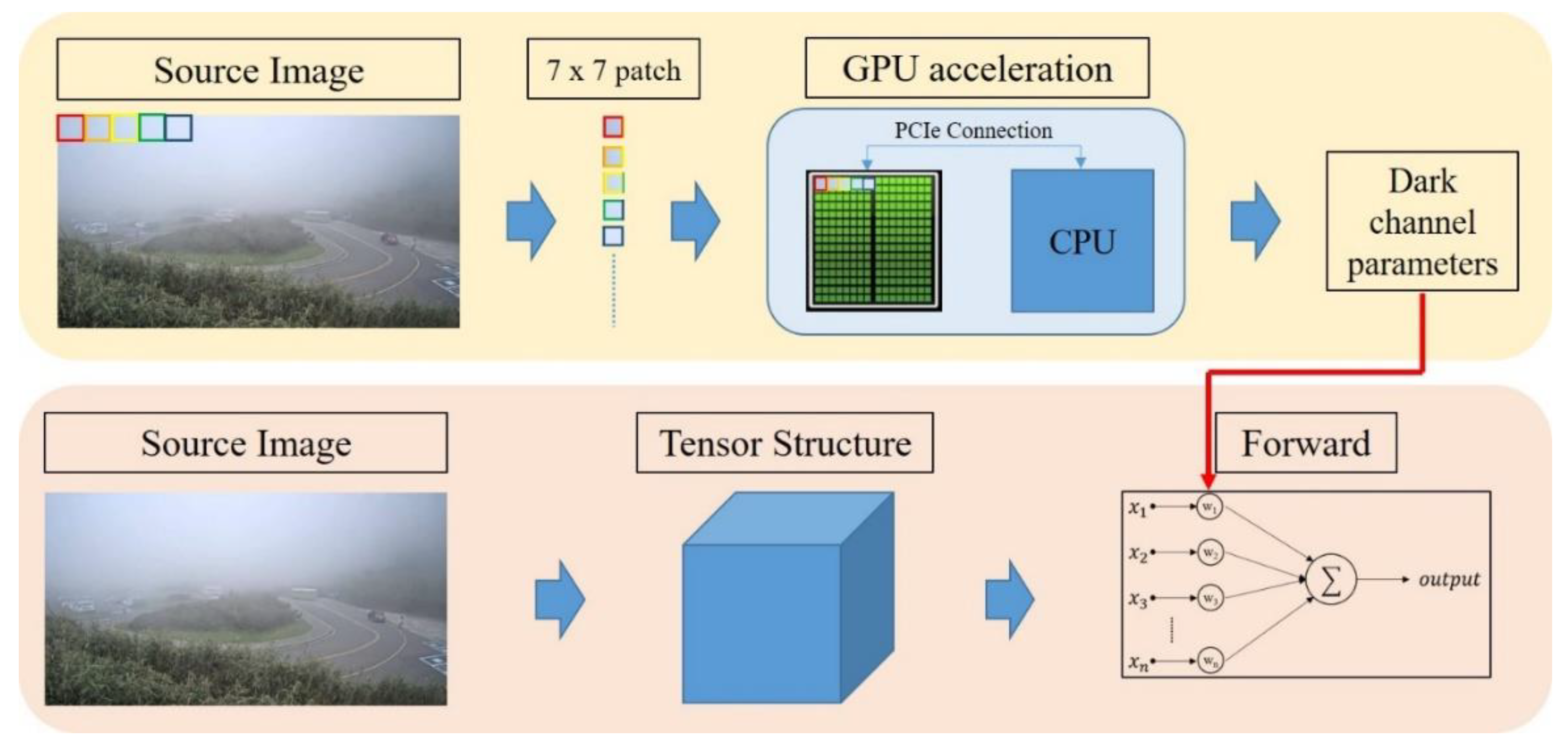

2.1. Visibility Complementation Module

2.1.1. Visibility Assessment

2.1.2. Visibility Correction

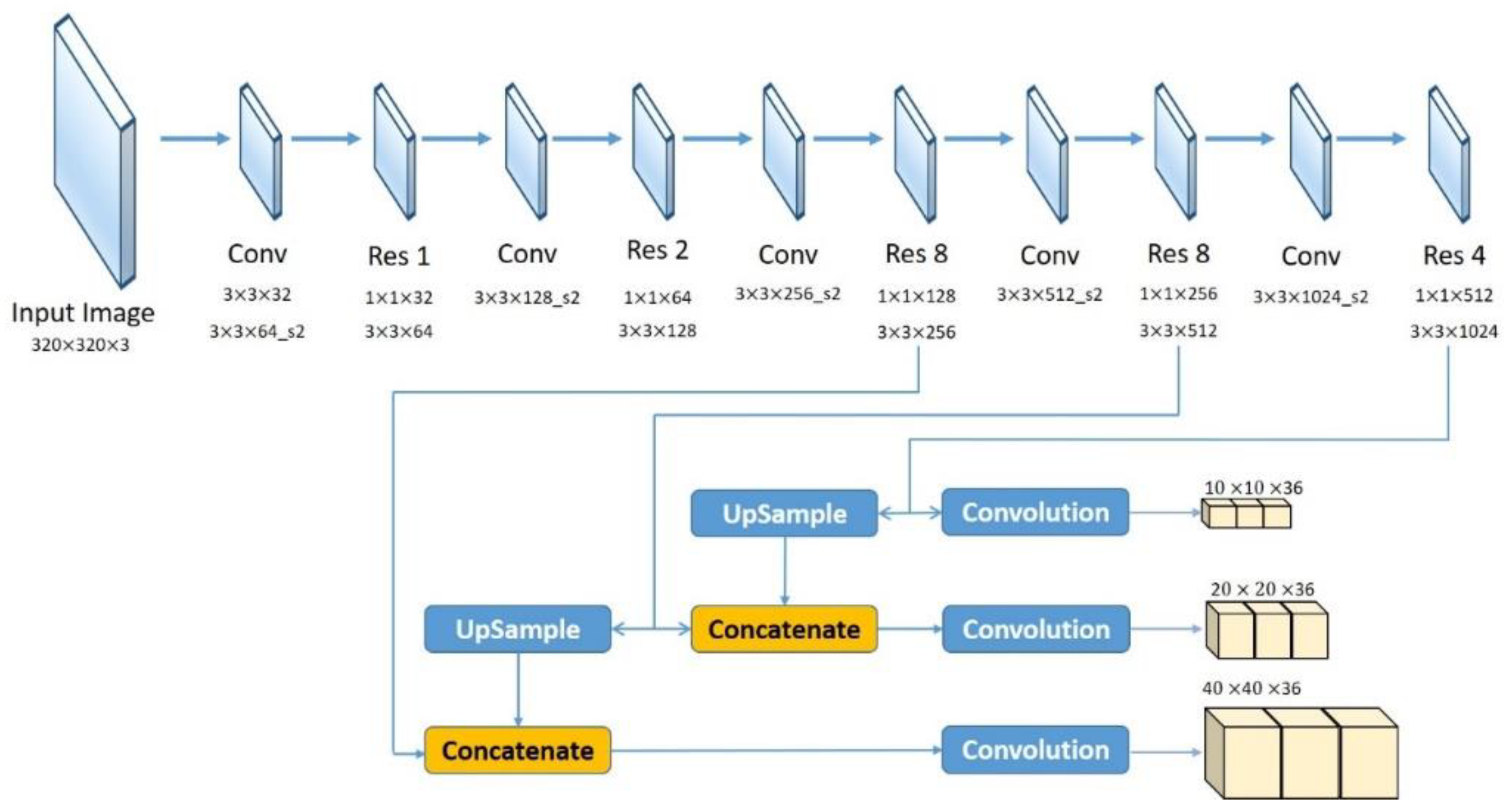

2.2. Vehicle Detection Module

3. Experimental Results

3.1. Visualization Results

3.2. Performance Evaluation

3.3. Comparative Results and Discussion

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Acronym | Description |
|---|---|
| | The contrast value at each pixel position in the input image. |
| | The mean of the contrast values of the input image. |
| | The accepted range of the mean contrast value for determining a low-contrast image frame. |
| | The threshold for determining a high V value in the Hue-Saturation-Value (HSV) color space. |
| | The ratio of high-V pixels to the total number of pixels in the image. |
| | The ratio threshold for determining low-contrast image frames. |
| | The component-count threshold for determining adherent-raindrop conditions. |
| | User-defined size of the confirmation reference frame. |
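The quantities in the table above describe a per-frame visibility check built from a contrast map, its mean, and the share of bright pixels in HSV. The sketch below illustrates that idea under several assumptions that are not taken from the paper: per-pixel contrast is taken as the squared deviation from the frame mean (so its mean is the intensity variance, echoing the "Avg. Variance" column reported later), grayscale is a plain channel average, and the two criteria are combined with a logical OR. The threshold values in the usage example are illustrative only.

```python
import numpy as np

def pixel_contrast(gray: np.ndarray) -> np.ndarray:
    """Per-pixel contrast map; assumed here to be the squared deviation from the frame mean."""
    g = gray.astype(np.float64)
    return (g - g.mean()) ** 2

def mean_contrast(gray: np.ndarray) -> float:
    # Mean of the contrast map; under the assumption above this equals the intensity variance.
    return float(pixel_contrast(gray).mean())

def high_v_ratio(rgb: np.ndarray, v_threshold: float) -> float:
    # The HSV "value" channel is the per-pixel maximum of R, G and B.
    v = rgb.max(axis=2)
    # Fraction of all pixels whose V exceeds the high-V threshold.
    return float((v > v_threshold).mean())

def is_low_contrast(rgb: np.ndarray, contrast_range, v_threshold: float,
                    ratio_threshold: float) -> bool:
    """Hypothetical decision rule: flag the frame if either criterion fires."""
    gray = rgb.astype(np.float64).mean(axis=2)  # channel average used as grayscale (assumption)
    lo, hi = contrast_range
    in_range = lo <= mean_contrast(gray) <= hi
    bright = high_v_ratio(rgb, v_threshold) > ratio_threshold
    return in_range or bright

# Usage with made-up thresholds: a 0/255 checkerboard is high-contrast, a flat gray frame is not.
checker = np.zeros((4, 4, 3), dtype=np.uint8)
checker[::2, ::2] = 255
checker[1::2, 1::2] = 255
flat = np.full((4, 4, 3), 128, dtype=np.uint8)
print(is_low_contrast(checker, (0, 3000), 200, 0.8), is_low_contrast(flat, (0, 3000), 200, 0.8))
```

Whether the paper combines the two tests with AND, OR, or a scene-specific rule is not stated in this section; swapping `or` for `and` in `is_low_contrast` is a one-line change.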

| Scene Condition | Sequence | Frame Amount (Frames) | Avg. Variance | Ratio of Pixel Amount | Avg. Component Amount |
|---|---|---|---|---|---|
| Glare | Glare1 | 1425 | 11,035.14 | 3.1874% | 1.5 |
| | Glare2 | 1840 | 10,106.54 | 1.8145% | 2 |
| | Glare3 | 1780 | 9898.337 | 1.9105% | 2.5 |
| Haze | Haze1 | 1276 | 2151.5 | 91.4775% | 1 |
| | Haze2 | 135 | 2175.344 | 88.8172% | 1.5 |
| | Haze3 | 1276 | 943.6111 | 85.57% | 2 |
| Sunny | Sunny1 | 1780 | 7855.636 | 8.1367% | 1.5 |
| | Sunny2 | 900 | 14,106.04 | 14.9475% | 1 |
| Rainy | Rainy1 | 900 | 3871.039 | 31.5765% | 4 |
| | Rainy2 | 900 | 4926.419 | 32.217% | 4 |
| | Rainy3 | 900 | 4570.564 | 27.8319% | 5 |
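In the table above the rainy sequences average 4 to 5 components per frame, against 1 to 2.5 for the other scenes, which is what a component-count threshold can separate. The sketch below shows that test together with a temporal confirmation over a user-defined number of frames. It is illustrative only: the binary mask of candidate raindrop regions is a hypothetical input (the paper's segmentation step is not reproduced here), components use 4-connectivity, and the rule that a condition is confirmed only when it held in every one of the last `window` frames is our assumption about how the confirmation reference frame is used.

```python
from collections import deque

import numpy as np

def count_components(mask: np.ndarray) -> int:
    """Count 4-connected foreground components in a boolean mask (iterative flood fill)."""
    h, w = mask.shape
    seen = np.zeros_like(mask, dtype=bool)
    count = 0
    for y in range(h):
        for x in range(w):
            if mask[y, x] and not seen[y, x]:
                count += 1  # found an unvisited component; flood it
                stack = [(y, x)]
                seen[y, x] = True
                while stack:
                    cy, cx = stack.pop()
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                            seen[ny, nx] = True
                            stack.append((ny, nx))
    return count

def has_adherent_raindrops(mask: np.ndarray, component_threshold: int) -> bool:
    # Hypothetical per-frame test: more components than the threshold suggests raindrops.
    return count_components(mask) > component_threshold

class ConditionConfirmer:
    """Report a condition only after it held in each of the last `window` frames (assumed rule)."""

    def __init__(self, window: int):
        self.history = deque(maxlen=window)

    def update(self, detected: bool) -> bool:
        self.history.append(detected)
        return len(self.history) == self.history.maxlen and all(self.history)

# Usage: three separate blobs exceed a threshold of 2, and the condition is
# confirmed only once it has persisted for a 3-frame window.
mask = np.zeros((5, 5), dtype=bool)
mask[0, 0] = mask[0, 1] = True
mask[2, 2] = True
mask[4, 4] = True
confirmer = ConditionConfirmer(window=3)
print([confirmer.update(has_adherent_raindrops(mask, 2)) for _ in range(3)])
```

Requiring agreement across a window trades a few frames of latency for fewer spurious switches between correction modes.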

| Weather Condition | Low Contrast | Adherent Raindrops | Sequence Name | Frame Amount (Frames) | Total Time (Seconds) | Frames per Second |
|---|---|---|---|---|---|---|
| Glare | ☑ | ⊠ | 20191002_072701.avi | 1779 | 61.25 | 29.06 |
| | ☑ | ⊠ | 20191002_073357.avi | 1779 | 60.56 | 29.41 |
| | ☑ | ⊠ | 20191002_073456.avi | 1779 | 59.46 | 30.30 |
| Haze | ☑ | ⊠ | media7.mp4 | 425 | 12.97 | 32.75 |
| | ☑ | ⊠ | media8.mp4 | 425 | 13.00 | 32.69 |
| | ☑ | ⊠ | media12.mp4 | 353 | 10.89 | 32.40 |
| Rainy | ⊠ | ☑ | 20190813_083935.avi | 899 | 29.15 | 31.05 |
| | ⊠ | ☑ | 20190813_084746.avi | 899 | 29.19 | 30.79 |
| | ⊠ | ☑ | 20190813_090641.avi | 899 | 29.40 | 30.57 |

| Scene | Setting | Bounding Box Amount | Positive | False Detection | False Classification |
|---|---|---|---|---|---|
| Glare | Without the proposed method | 5702 | 4859 (85.22%) | 4 (0.07%) | 839 (14.71%) |
| | With the proposed method | 8093 | 7269 (89.82%) | 17 (0.21%) | 807 (9.97%) |
| Haze | Without the proposed method | 97 | 80 (82.47%) | 10 (10.31%) | 7 (7.22%) |
| | With the proposed method | 760 | 602 (79.21%) | 102 (13.41%) | 65 (8.55%) |
| Rainy | Without the proposed method | 650 | 435 (66.92%) | 172 (26.46%) | 43 (6.62%) |
| | With the proposed method | 998 | 871 (87.27%) | 52 (5.21%) | 75 (7.52%) |
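The percentages in the detection table above read as each outcome count divided by the bounding box amount; that interpretation is our assumption, but it reproduces the Glare and Rainy rows exactly. The small helper below (the class and field names are hypothetical) recomputes the rates and checks that the three outcome counts add up to the number of output boxes.

```python
from dataclasses import dataclass

@dataclass
class DetectionCounts:
    boxes: int                 # bounding box amount output by the detector
    positive: int              # correctly detected and classified vehicles
    false_detection: int       # boxes that do not correspond to a vehicle
    false_classification: int  # vehicles detected with the wrong class

    def rates(self) -> dict:
        # Each outcome as a percentage of all output boxes, rounded to 2 decimals as in the table.
        outcomes = {
            "positive": self.positive,
            "false_detection": self.false_detection,
            "false_classification": self.false_classification,
        }
        return {name: round(100.0 * n / self.boxes, 2) for name, n in outcomes.items()}

    def is_consistent(self) -> bool:
        # The three outcome counts should partition the set of output boxes.
        return self.positive + self.false_detection + self.false_classification == self.boxes

# Usage: the Glare row with the proposed method.
glare_with = DetectionCounts(boxes=8093, positive=7269, false_detection=17, false_classification=807)
print(glare_with.rates(), glare_with.is_consistent())
```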

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chen, X.-Z.; Chang, C.-M.; Yu, C.-W.; Chen, Y.-L. A Real-Time Vehicle Detection System under Various Bad Weather Conditions Based on a Deep Learning Model without Retraining. Sensors 2020, 20, 5731. https://doi.org/10.3390/s20205731
