Abstract

The truncated signed distance field (TSDF) has been applied as a fast, accurate, and flexible geometric fusion method in 3D reconstruction of industrial products based on a hand-held laser line scanner. However, this method has some problems for the surface reconstruction of thin products. The surface mesh will collapse to the interior of the model, resulting in some topological errors, such as overlap, intersections, or gaps. Meanwhile, the existing TSDF method ensures real-time performance through significant graphics processing unit (GPU) memory usage, which limits the scale of reconstruction scene. In this work, we propose three improvements to the existing TSDF methods, including: (i) a thin surface attribution judgment method in real-time processing that solves the problem of interference between the opposite sides of the thin surface; we distinguish measurements originating from different parts of a thin surface by the angle between the surface normal and the observation line of sight; (ii) a post-processing method to automatically detect and repair the topological errors in some areas where misjudgment of thin-surface attribution may occur; (iii) a framework that integrates the central processing unit (CPU) and GPU resources to implement our 3D reconstruction approach, which ensures real-time performance and reduces GPU memory usage. The proposed results show that this method can provide more accurate 3D reconstruction of a thin surface, which is similar to the state-of-the-art laser line scanners with 0.02 mm accuracy. In terms of performance, the algorithm can guarantee a frame rate of more than 60 frames per second (FPS) with the GPU memory footprint under 500 MB. In total, the proposed method can achieve a real-time and high-precision 3D reconstruction of a thin surface.

1. Introduction

Recent years have witnessed the release of a large number of fast, accurate depth sensors. With the opening up of graphics processors to general computing, 3D reconstruction by the depth sensors has attracted new attention in the field of computer vision []. It has a wide range of applications in reverse engineering, 3D printing, industrial inspection, robotics, augmented reality (AR), autonomous driving, and other fields [,,]. Traditional depth sensors, such as the Structured Light Camera, Time of Flight (ToF) Camera, and Terrestrial Laser Scanner (TLS), provide depth data as depth images or sets of unorganized points. These sensors have particular tradeoffs in terms of speed, accuracy, flexibility, and price []. The hand-held laser line scanner projects a laser pattern onto the surface of the object, and densely measures the surface profiles (3D curves) based on the optical triangulation method. Given its advantages in speed, accuracy, and flexibility [], this kind of scanner, such as HandySCAN [], HSCAN [], and FreeScan [] have been widely used in high-precision 3D measurement applications in industrial manufacturing.

The scanning process of 3D scanners can be divided into two parts: tracking and mapping []. Tracking solves the problem of multiple views registration. These scanners usually use external positioning devices or stable features in the observed scene to estimate the sensor’s position and orientation, which are usually represented by a 6-degree of freedom (DOF) transformation matrix []. In the mapping part, we need to align the depth data obtained from different viewpoints into a common reference frame by using the transformation matrix, and then use the depth data to build a model with a given resolution []; the mapping process needs to remove redundant data to the resolution of the final model and refine the observed surface continuously, since it is almost impossible to build a complete model without observing some areas twice or more, and the position estimation of the sensor is always inaccurate to some degree []. In other words, real-time 3D reconstruction requires incremental fusion of the depth data from different viewpoints into a single 3D representation that is continuously reparametrized []. This process is usually referred to as geometric fusion [].

Fusion approaches based on different principles have been proposed. Point-based fusion methods use simple unstructured representations that closely map to the input depth data through the measured ray to merge overlapping points [,,]. However, these methods cannot generate continuous surfaces directly. Height-map based methods use a more compact 2.5D continuous surface representation [,]. Although these methods effectively compress the surface, they fail to reconstruct the complex 3D structure. Implicit volumetric methods have demonstrated convincing results for incremental 3D reconstruction [,,]. The volumetric representation is also referred to as the truncated signed distance field (TSDF), which represents the reconstructed surface by samples of a distance function continuously stored in volumetric grids (voxels) []. The reconstruction result can be obtained by extracting the isosurface from the zero-level set of the signed distance function [,]. This approach is topologically flexible and has linear complexity. With the help of massively parallel processors, it has become very popular in real-time 3D reconstruction [,,,,,].

In the voxel-based fusion method, Bylow et al. [] improve the weight function to reduce the impact of unreliable measurements. Dong et al. [] model the internal probability distribution of SDF values to eliminate outlier observations. Other methods use RGB image to improve the level of detail of surface representation, but they cannot achieve real-time performance [,,].

Some high-precision sensor-tracking methods [,,] using the features of volumetric representation are also proposed. Furthermore, some other research has focused on introducing global adjustment in real-time reconstruction to reduce the tracking drift accumulation caused by frame-to-frame registration, as well as the corresponding voxel data fast update strategy [,,,,]. In addition to the static scene reconstruction, voxel-based methods can also be used in the 3D reconstruction of some dynamic scenes, such as reconstructing the human body surface undergoing free non-rigid motion [,,,,,].

Recently, the application of machine-learning methods in voxel-based 3D reconstruction, especially in the form of deep neural networks, has become a new trend []. Deep learning has achieved impressive results in processing sensor noise [], completing scans of scenes [], and 3D scene semantic segmentation [,,,,,].

Most of the fusion methods require the depth maps or sets of unorganized points as the input. Little research has been based on 3D laser curves. Tubić et al. first proposed a method of constructing TSDF using intersecting curves and a method of the pose refinement of curves by TSDF []. This method has been successfully exploited in the hand-held laser line 3D scanning system.

It was mentioned by [] that TSDF is problematic for reconstructing thin surfaces, but did not provide corresponding solutions. Moreover, no relevant research has been found to address this problem. The influence of the observed surface extends behind their estimated position and interferes with the TSDF from the opposite side of the thin surface. This will cause the surface to shrink towards the interior of the model, and make some topological errors appear on the surface, such as overlap, intersections, or gaps. This effect can be mitigated by reducing truncation, but there is still a set limit for the object’s thickness. The first contribution of this paper is to propose a thin-surface attribution judgment method to distinguish opposite surfaces and store them separately, which effectively avoids the interference of observations of thin surfaces. In some exceptional cases, real-time thin-surface attribution judgment may fail, so we have developed a post-processing method to detect and repair regions that have topological problems.

The efficiency of the underlying data structure is another critical issue in real-time 3D reconstruction. Many advanced real-time reconstruction systems have implemented different underlying data structures on graphics processors, but they have made tradeoffs in terms of speed, quality, and scale. The kinect fusion [] achieves a real-time frame rate but has a large memory footprint since it uses the volume to represent the whole space instead of only reconstructing densely near the observed surface. The moving volume method uses the motion of a camera to stream data from the graphics processing unit (GPU) to central processing unit (CPU) to expand the space []. However, the current active volume structure is the same as the Kinect Fusion, and a lot of memory is required to represent the empty space. In recent years, sparse volumetric representations have been developed to compress space []. The main idea is to reconstruct densely only near the observed surface and discard empty space. To index the allocated data, octree [,,], and hash table [,,,,,] have been successfully applied. An octree is unsuitable for graphics processors because of its low degree of parallelism. Nießner et al. proposed a voxel-hashing method for storing TSDF. The complexity is O (1) for accessing, inserting, and deleting data in hash tables; this method both effectively reduces the memory footprint and has a high-speed advantage [].

Hashing-based fusion methods can even run on mobile platforms with relatively low computing capability, such as tablets and mobile phones [,,,]. Based on the work of Nießner et al., Kahler et al. improved the storage structure of the hash table to reduce the impact of hash conflict []. Moreover, they have developed a hierarchical structure based on hash with few levels to improve storage efficiency further []. A method called structured skip list also has been proposed to increase the number of voxels that a hash table can accommodate []. Although these improved hashing methods alleviate the memory pressure to some degree by compressing data, they do not solve the problem from the root. GPU memory still limits the spatial extent and resolution of the voxel grid. Nießner et al. alleviate the memory pressure of GPU through bidirectional GPU–CPU streaming, but the GPU memory may still be exhausted when reconstruction scenarios are very dense. Our second contribution is to improve the data structure of voxel hashing. We only store hash tables on the GPU, and all voxels are stored on the CPU; thus, the limitation of GPU memory capacity is lifted on the scale of the reconstructed scene.

In this work, aiming at the problem of thin-surface reconstruction in the existing TSDF fusion method, we add a step of thin-surface attribute judgment in the real-time process to eliminate the mutual interference between the opposite sides of the thin surface; the angle between the surface and the observed ray is used to distinguish the TSDF observations from different parts of the thin surface. For the regions where surface attribution misjudgment may occur, we exploit a method of weak topological regions repairing to fix the possible topological errors in these regions. To solve the problem that the underlying data structure of the existing TSDF method takes up too much memory of the GPU, we design a new memory management method to reduce the memory utilization of GPU. By moving all voxel data to CPU, the memory pressure of GPU is alleviated, and the scale of reconstruction scene is further increased.

The remainder of this paper is structured as follows: Section 2.1. outlines the TSDF implementation based on 3D curves. Section 2.2. describes our improvements to thin-surface reconstruction in real-time processing; Section 2.3. explains a post-processing method of repairing weak topological regions, Section 2.4. exposes the underlying data structure for storing TSDF. The results and discussion follow in Section 3. Finally, we conclude and outline some ideas for future work in Section 4.

2. Methodology

2.1. Truncated Signed Distance Field (TSDF) Computation from 3D Curves

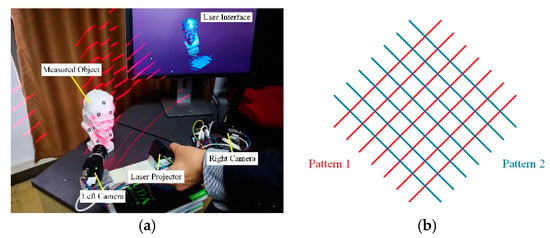

Our real-time 3D reconstruction approach is implemented with a highly integrated hand-held laser scanner, as shown in Figure 1. The scanner measures the 3D curves (a set of 3D points) of the object’s surface using laser triangulation []. A laser pattern is projected onto the measured object, and the left and right cameras capture the 2D images of the object at the same time; from the 2D images, we extract the 2D surface curves of the projection pattern reflected on the object; a set of 3D curves is computed in the sensor coordinate system using the 2D surface curves. Meanwhile, the 3D curves measured from different frames are aligned to the global coordinate system by the sensor’s position and orientation, which are continuously computed from the circular mark-points pasted on the object surface. Our 3D reconstruction algorithm integrates these arbitrary intersecting 3D curves into a single volumetric representation and refines the reconstructed surface continuously based on [].

Figure 1.

Schematic of the 3D laser scanning system; (a) components of the 3D scanner; (b) laser patterns; there are two kinds of projection patterns shown as red and blue respectively; we alternately project them onto the measured object; once one of the patterns is extracted, it will switch to the other immediately; the reflection of these two patterns will form multiple intersecting curves on the object.

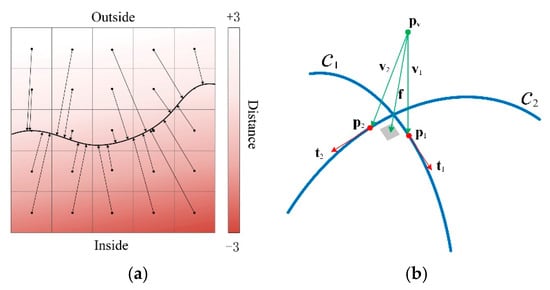

TSDF constructed from 3D curves is a vector field, which is different from the classical signed distance field []. As shown in Figure 2a, each voxel v in a field stores the distance vector to its closest point on the reconstructed surface. The vector’s direction is parallel to the normal of the closest point on the surface. The sign of the distance indicates whether a voxel v is inside or outside the surface. If v is outside of the surface, its sign is positive, and if v is inside the surface, its sign is negative. Since voxels that are too far from the surface have little effect on the surface construction, the distance field is truncated at distance . Truncation T affects not only the effect of surface reconstruction but also the runtime performance and memory consumption of the algorithm. On the one hand, if T is set too large, the surface details will be smoothed out. At the same time, increasing T means processing more voxels, thus slowing down the algorithm and consuming more memory. On the other hand, if T is smaller than the level of noise, it will lead to the appearance of isolated patches around the reconstructed surface. Therefore, it is generally appropriate to set T to the minimum value larger than the noise level of the apparatus, which is 0.9 mm for the scanner used in our experiment. Truncation T is also the leading cause of thin-surface problems in TSDF. How T affects thin-surface reconstruction will be mentioned in the next section.

Figure 2.

Schematic of truncated signed distance field (TSDF) constructed from intersecting curves; (a) a 2D slice of the TSDF computed on a regular voxel grid; each arrow indicates the distance vector of a voxel; gradients of color encode the signs and values of the distances; (b) a simple example of computing the value f of a vector field.

Reconstruction of surfaces is based on such a fundamental principle that two intersecting lines can determine a plane. Then the local reconstructed surface patch can be approximated in the neighborhood of the intersection points of all the curves on the surface. Figure 2b illustrates the principle of reconstruction in a simple case where two curves intersect. Assuming that p1 and p2 are the closest points to voxel v on curves c1 and c2, respectively, v1 and v2 are two vectors from the voxel center point pv to p1 and p2, respectively. The tangents of the curves at p1 and p2 have been computed in advance and are expressed as t1 and t2, respectively. Since the estimated normal n of the tangent plane to the surface should be perpendicular to t1 and t2, n should be the cross-product of t1 and t2. The distance d from pv to the closest surface can be approximated as the average of the projections of v1 and v2 on n. Therefore, the value f of the distance vector field at v is dn. A full description of how to locate the closest points on a curve and how to calculate the tangent at the closest point is beyond the scope of this paper. Interested readers can find relevant content in [].

More formally, let n curves of c1, …, cn pass through the local surface patch; p1, …, pn are their respective closest points to voxel v and t1, …, tn are the tangents of p1, …, pn respectively. Then the distance from the central point pv of voxel v to the surface can be obtained as the average value of the projected distance vectors on the estimated normal, i.e.,:

where pi is the closest point to pv on the curve ci, and n is the estimated normal of the surface.

Since the normal n is perpendicular to all tangents t1, …, tn, the following equation can be obtained:

C is the covariance matrix of tangents, and the eigenvector associated with the minimum eigenvalue of C is the solution to n. Then, the value of vector field f: at v is:

Therefore, the closest surface point pc of voxel v can be obtained by:

A curve can be considered as a linear interpolation of measure points {p1, …, pn} represented by a set of line segments c = {l1, …, ln-1}, where li = . In online scanning, the measured surface curves are used to update the covariance matrix C and distance d of all neighboring voxels incrementally. Each curve only affects voxels within a certain vicinity. As shown in Figure 3, the voxels affected by each line segment on a curve can form an approximate cylinder (called a fundamental cell) whose radius is determined by the truncation distance, which is called the envelope size in []. The envelope here serves the same purpose as the truncation mentioned earlier. Curves only affect voxels in the envelope and do not affect voxels beyond it. The following low-pass weight function prevents the discontinuity of the field over the envelope boundary:

Figure 3.

An example of the fundamental cell of the line segment ; t1 and t2 are the tangents at each endpoint of the line segment; all voxels in the cell must be located between the two planes perpendicular to the two tangents and the distance from the central point pv of voxel v toward the closest point pc must be less than the cylinder’s radius (envelope size).

σ stands for the size of the envelope. Increasing the envelope size can make the reconstructed surface smoother, but it will also lose any sharp features. Conversely, reducing the envelope size makes the surface noisy. Tubić et al. recommended as the envelope size as in [], and as the voxel size.

After weighting with ωi, new observations are integrated into the individual field as follows:

The average distance-vector is projected onto the normal n calculated from the covariance matrix C to obtain the value of TSDF at v:

f(v) is only calculated via real-time rendering and post-processing to generate surface meshes. The reconstructed surface can be obtained by extracting the isosurface from the zero-level set of f(v). In the online process, raycasting is used to render the reconstructed surface from the current view. After stopping scanning, a marching cubes method can be used to generate mesh models.

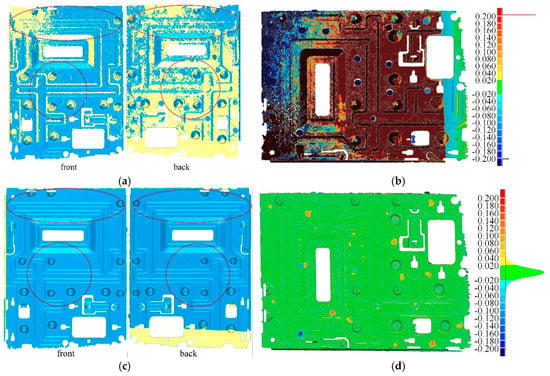

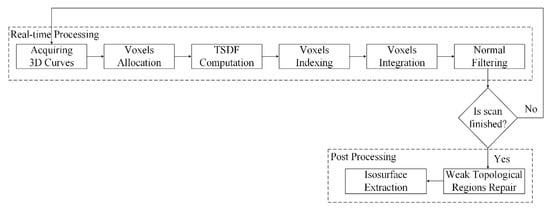

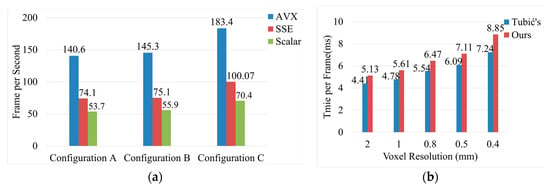

The above content briefly introduces method of Tubić et al. [] of generating a surface model from a set of intersecting 3D curves. This method is topologically flexible and has linear complexity, but it cannot reconstruct the surface of very thin objects. We use a laser line scanner to measure the 3D curves from the surface of a sheet metal part with a thickness of 0.8 mm and use method of Tubić et al. to reconstruct the surface mesh model of this object, which is shown in Figure 4a. The resolution (voxel size) of the model is 0.5 mm, and the size of the truncation (also known as the fundamental envelope) is 0.9 mm. Tubić’s output has a large area of data missing, and its distance (one-sided Hausdorff) to the computer-aided design (CAD) model is greater than 0.2mm (Figure 4b). We optimize the robustness of Tubić’s method to thin objects. With the same resolution and truncation, the optimized results not only guarantee the completeness of data (Figure 4c) but also guarantee its maximum distance (one-sided Hausdorff) to the CAD model within 0.2 mm (Figure 4d). The proposed thin-surface optimization of Tubić’s method is described in Section 2.2 and Section 2.3. Figure 5 shows the whole pipeline of the proposed algorithm. The algorithm employs a hash table to organize the underlying voxel data. The content related to the underlying data structure can be found in Section 2.4. The whole procedure of the proposed 3D reconstruction algorithm can be summarized as the following seven steps:

Figure 4.

Optimization of the 3D surface reconstruction of a sheet metal part; (a) results of Tubić et al. []; (b) deviation colormap between Tubić’s 3D model and the computer-aided design (CAD) model; (c) results of the proposed method; (d) deviation colormap of the proposed method.

Figure 5.

Pipeline of the proposed surface reconstruction method based on 3D curves.

- Voxels Allocation: after receiving a frame of 3D curves data, we compute the pv of all voxels that fall within the fundamental envelopes of the curves and find the corresponding voxel block of pv in the hash table. If no block is found, we allocate a new block for it on the heap;

- TSDF Computation: compute the TSDF observations of these voxels (including the closest point pc on the curve and tangent tc on pc);

- Voxels Indexing: use pv to index the address of voxel v in hash table;

- Voxel Integration: before integrating the new TSDF, it is necessary to determine whether the new one is on the same side as the surface already encoded by v. If so, the new TSDF is integrated to voxel v by Equations (6)–(8) (updating weight ω, matrix C and distance vector ). Conversely, we find the inverse voxel v’ on the other side of the thin surface and update v’. The method of determining which side of the thin surface the current observation belongs to will be elaborated in the next section;

- Normal filtering: the surface normal plays a vital role in the judgment of thin-surface attribution, and also affects the real-time display quality. Therefore, we need to denoise it to make it reliable. Firstly, the surface point pc (Equation (4)) and normal n (through a singular value decomposition (SVD) of matrix C) encoded by voxel v are computed. Then the neighborhood points of v are found in the hash table, and n is recomputed with the surface points and normals of v’s neighbors. The related content of normal filtering will also be described in the next section.

- Weak Topological Regions Repair: although we have distinguished the opposite sides of the observed thin surface when integrating voxels, it is difficult to distinguish them in some areas with complex topology. When the user stops scanning, we adopt a post-processing method to detect and repair the topological errors in these areas automatically.

- Isosurface Extraction: after repairing the topological errors in TSDF, we use the marching cubes algorithm to extract the isosurface and generate a mesh model.

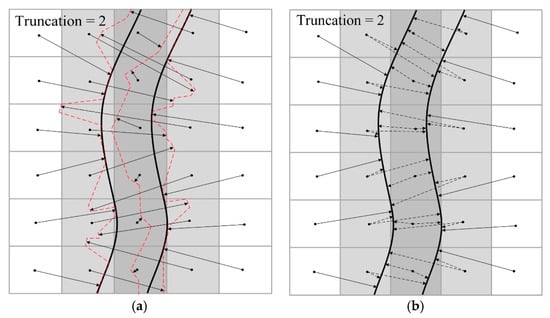

2.2. TSDF for Thin Surface

As mentioned earlier, TSDF has a disadvantage for reconstructing thin surfaces. The distance field extends a band, i.e., truncation, both inside and outside the observed surface. When the surface’s thickness is less than the width of the truncation band, the values of distance fields that encode opposite sides of the surface will interfere with each other, leading to the effect that the rear surface cannot be recovered from the fields. Figure 6a shows a 2D slice of a corrupted thin surface. Black solid lines represent the real surface of a thin object, and each solid arrow indicates a value in the distance field. The truncation distance of the field is two times of voxel size, and the interfered voxels are colored gray; the darker their color, the higher the impact. Unaffected lattices are white because they are too far from the other side of the surface. These arrows of interfered voxels do not point to the real surface, but to multiple false surfaces that are represented by red dotted lines. Therefore, the correct method is to store two vectors (dotted arrows) in a gray lattice, as shown in Figure 6b. That is to say, each voxel in the overlapping region should encode two tangent planes that are on different real surfaces. It is first necessary to determine which side of the thin surface each TSDF observation originates from during online scanning; we call this process thin-surfaces attribution judgment.

Figure 6.

Corrupted thin surface vs. the correct thin surface; (a) interfered TSDF and corrupted surfaces; (b) correct TSDF.

When one side of a thin surface is observed, the opposite side can be occluded. The view direction of the current observations implies that the current observations belong to one side of the thin surface. Moreover, to obtain the most accurate observations, the direction of the sensor’s view usually needs to be approximately perpendicular to the object’s surface. Therefore, the correlation between the direction of view and the attribution of the thin surface is stronger. Thus, we store the line of sight of each voxel and continuously update it during scanning as follows:

here, nv denotes the normal of the surface encoded by voxel v, and vp represents the current viewpoint. Each normal nv computed from the covariance matrix C in Equation (6) must be oriented consistently towards the viewpoint vp (so that the angle between nv and the line of sight is less than ). Equation (10) guarantees that the angle between the line of sight and the surface normal is the smallest so that the sign of the normal nv determined by the line of sight is reliable.

In the online scanning process, there are two cases of the viewpoint orientation:

- One Side Visible: when the line of sight is oriented toward one side of the thin surface, the sensor can only measure the current side and cannot measure the opposite side because the dominant side occludes it.

- Both Sides Visible: when the line of sight is approximately parallel to a thin surface and perpendicular to the edge of a thin surface, the thin surface on the opposite sides near the edge can be observed.

The first case (One Side Visible) must be addressed to prevent the thin surface from being totally corrupted during online scanning. The second case (Both Sides Visible) will only affect the reconstruction quality near the edge of the thin surface. Moreover, it requires a more complex mechanism, and its computation cost is very high. Therefore, we put this in the post-processing process, whose specific details are described in Section 2.3.

The signs of TSDF indicate whether a voxel is inside or outside an object. We use the following procedure to determine the signs of TSDF. Firstly, the orientation of the normal nv is adjusted to be consistent with the distance vector (Equation (7)) to get the vector ndis, that is, ndis needs to satisfy the equation:

Then the sign Sv at voxel v is determined by the dot product of ndis and the line of sight Vv stored in v:

With the change of signs, we can quickly judge the surface attribution online. When scanning from one side of a thin surface to the other, the current direction of view will deviate significantly from the previous Vv stored in voxel v. If the normal is oriented to the current viewpoint, the sign determined by and is contrary to the sign Sv previously stored in v. Meanwhile, if the signs and Sv are the same, the current measurement is on the same side as the previous cumulative measurement. If the current observation is judged to belong to the other side of the thin surface, then a new voxel is allocated on the heap, the observed value is integrated into , and the address of the new voxel is stored in the original voxel v. When querying the value of voxels in TSDF, we need to know not only the coordinates of the query point but also the line-of-sight at this moment to determine whether the voxels we are looking for are v or v’. Section 2.4 provides more details about the data structure.

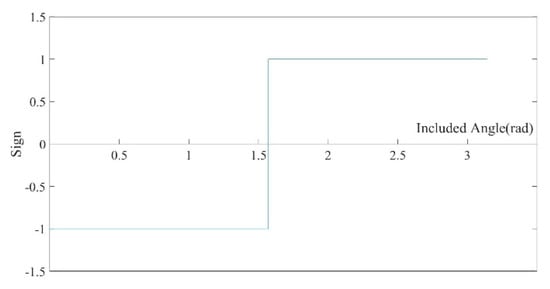

Figure 7 depicts the plot of the sign S varying with the angle α between vector ndis and line of sight Vv. As shown in Figure 7, near the critical point (), a slight change in the angle will cause the sign S to be flipped. Small perturbations of data (related to the accuracy of apparatus) will lead to a certain degree of deviation in normal estimation. When the angle between the line of sight and the surface normal , the error of normal estimation will lead to an inaccurate sign of TSDF, which will make the misjudgment of the thin-surface attribution. Meanwhile, the reliability of the measures obtained in this case is also relatively low. Therefore, a current measurement reliability judgment is added to the process to determine whether the thin-surface attribution judgment can be turned on. When the line of sight and the surface normal are almost perpendicular (), the current measurement is judged to be unreliable and discarded. Conversely, the thin-surface attribution judgment is turned on, and the current observation is integrated into the corresponding voxel determined by the thin-surface attribution judgment.

Figure 7.

The plot of the sign S varying with the angle α between vector ndis and line of sight Vv; at the angle , the sign of TSDF is flipped.

From the above description, we can see that the accuracy of a normal estimation will affect the thin-surface attribution judgment and the accuracy of the distance vector field f(v). Figure 8 shows the surface points (Equation (4)) and normal (through a SVD decomposition of matrix C in Equation (6)) of a scanned model. As can be seen in Figure 8a, the normals are very noisy and unreliable. A normal filtering method with lower computational complexity is used in the online scanning process to improve the accuracy of normal estimation. Figure 8b shows the filtered results. The overall flow of online normal filtering is:

Figure 8.

The comparison between the normal computed by Tubić et al. [] and that filtered by our method; (a) normal before filtering; (b) normal after filtering.

- Perform the approach proposed in [] to estimate the first normal nf and compute the reliability measure λr of nf;

- For each voxel v in the field, estimate the normal ns based on an anisotropic neighborhood;

- Update the normals by combining the first normal nf with ns under the influence of coefficient λr until either convergence or a maximum number of iterations is reached.

When estimating the first normal nf of voxel v, the three eigenvalues λ1 > λ2 > λ3 of the covariance matrix C in Equation (6) are used to evaluate the reliability coefficient λ of nf Let

Pauly et al. [] defined this coefficient as the surface variation. This measures the ratio of variance in the normal direction to the total variance and can be used to express the reliability of normal estimation. This quantity ranges between 0 (flat case) and 1/3 (anisotropic case). Add the following weight function to λσ so that the range of coefficient λr is [0, 1]:

The parameters ρ, φ, and ψ can be determined experimentally. Here, we use ρ = 0.01, φ = 0.1, ψ = 0.15. The purpose of introducing coefficient λr is to preserve the features of the first normals as much as possible and to prevent over-smoothing.

Let v be the voxel at which we need to re-estimate the normal; p represents the point closest to v on the surface, and n represents the first normal. pi is the surface point at voxel vi () in the neighborhood of voxel v, and ni is first normal at vi. is under a neighborhood size σp specified by users. A covariance matrix Cn can be constructed from all normals ni in the neighborhood of voxel v as follows:

here, nm is the average of ni. The weight ω consists of two factors:

where ωr is used to evaluate the reliability of neighborhood point vi equal to the weight (Equation (8)) accumulated at vi. Therefore, ωr is related to the number of observations at voxel vi and the distance from voxel vi to the surface. The weights are more significant when there are more observations, or the distance is closer, whereas the weights are smaller otherwise.

ωθ is an anisotropic weight function, as in the bilateral normal filtering method []; it is defined as follows:

where σp is the size of the neighborhood, and the angle parameter σn measures the similarity of the neighborhood normals. pm and nm are the average of pi and ni, respectively. The eigenvector associated with the minimum eigenvalue of Cn is the new estimate ns at voxel v, since it corresponds to the direction of the smallest variance of the neighborhood normal.

The final normal estimate is updated iteratively by the following formula:

where denotes the normalization of the length such that n is a unit vector. Whenever voxel v gets a new nfi, a new normal estimate ni is obtained by the above method until the deviation between ni and ni−1 is less than a certain threshold, or the number of updated times reaches a limit. As a local filtering method, our method has a low computational cost. Its time-consuming is related to the neighborhood’s size; a large neighborhood may increase the time consumption but will also lead to over-smoothing. The 3 × 3 × 3 neighborhood proved sufficient.

2.3. Repairing Weak Topological Regions

As mentioned in the previous section, when the line-of-sight is almost parallel to the thin surface, the thin-surface attribution judgment may fail, so the distance vector in the vector field cannot point to the correct position. The reconstructed surface mesh data from the vector field will deviate significantly from the object’s actual surface and will result in topological errors such as overlap, intersections, or gaps. Without prior knowledge, it is impossible to get the desired surface shape by merely repairing the topological errors in the surface mesh. Therefore, the distance vectors in TSDF must be repaired to restore the locus of the real surface. We need to analyze TSDF to find areas that may have topological problems and then repair the values of these areas.

2.3.1. Detecting Weak Topological Regions

Our method of detecting weak topological regions in TSDF is inspired by Sharf’s method []. Sharf’s approach for repairing weak topological regions is to find the critical point of the signed distance function (that is the point where the gradient disappears), and then specify the locus of the correct surface at the critical point by user interaction. Sharf finds discrete critical points, not contiguous regions. Many points with topological errors may still be undetected near these critical points, but Sharf compensates for this problem through specific user interface interactions, which violates our original intention of automatically detecting and repairing weak topological areas. Here, we make some adjustments to Sharf’s method so that the weak areas we find are continuous and complete. Specifically, the methods of detecting weak regions can be summarized as follows:

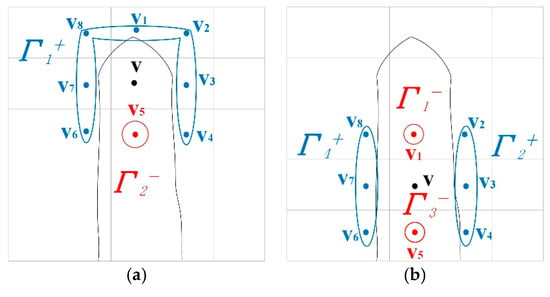

- Find the critical points: find all voxels in a neighborhood with v as the center. vi is divided into two sets, one f(vi) > f(v) is defined as positive, and one f(vi) ≤ f(v) is defined as negative. Within each set, adjacent voxels are joined together to obtain several connected groups Γi±, as shown in Figure 9. If the number of connected groups of v is 2, then v is not the critical point. If the number of connection groups of v is 1 or ≥ 2, then v is a critical point;

Figure 9. The 2D representation of the critical points identification method; the solid black line represents the actual surface; the neighborhood of voxel v is divided into two sets; (a) voxel v, positive set {v5}, negative set {v1, v2, v3, v4, v6, v7, v8}, positive set and negative set constitute two connection groups Γ1+ and Γ2− (red and blue circle), so v is not a critical point; (b) the voxel v, positive set {v2, v3, v4, v6, v7, v8} and negative set {v1, v5}; the two sets are divided into four connection groups Γ1−, Γ2+, Γ3−, and Γ4+, so v is a critical point (there are four groups in 2D, but only three in 3D).

Figure 9. The 2D representation of the critical points identification method; the solid black line represents the actual surface; the neighborhood of voxel v is divided into two sets; (a) voxel v, positive set {v5}, negative set {v1, v2, v3, v4, v6, v7, v8}, positive set and negative set constitute two connection groups Γ1+ and Γ2− (red and blue circle), so v is not a critical point; (b) the voxel v, positive set {v2, v3, v4, v6, v7, v8} and negative set {v1, v5}; the two sets are divided into four connection groups Γ1−, Γ2+, Γ3−, and Γ4+, so v is a critical point (there are four groups in 2D, but only three in 3D). - Generate weak topological regions: take each critical point Pc as a seed point, find connected component Pg (a region of adjacent voxels) of Pc within a specified range (such as two times of the voxel size), and the dot product of the normal less than a certain threshold Tdot. Figure 10 shows weak regions detected by our method. This model has many stiffeners with a thickness of about 1 mm. When the truncation is also set to 1 mm, the surface model is prone to generate topological errors in the location of these stiffeners. The proposed method can detect these weak topological regions (the red areas in the figure), and the method of repairing topology errors will be described in the next section.

Figure 10. The effect of weak regions detection; (a) point clouds are generated from TSDF by Equation (4); the non-critical point is marked blue, and the critical point is marked red; (b) a cross-section of a weak region (the green box in Figure (a)); there are many points inside the surface that do not fall on the actual surface; these cause the generated surface mesh model to shrink to the inside of the object.

Figure 10. The effect of weak regions detection; (a) point clouds are generated from TSDF by Equation (4); the non-critical point is marked blue, and the critical point is marked red; (b) a cross-section of a weak region (the green box in Figure (a)); there are many points inside the surface that do not fall on the actual surface; these cause the generated surface mesh model to shrink to the inside of the object.

2.3.2. Repairing Weak Topological Regions

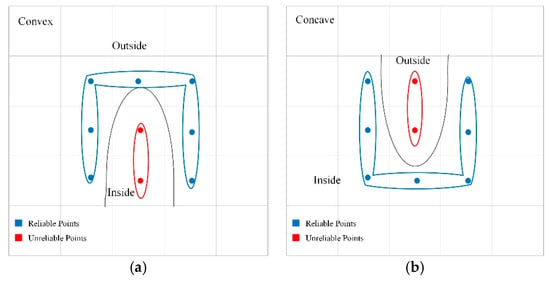

Figure 10b shows that some points in the weak region are unaffected by the thin-surface effect and can fall on the actual surface. By a reasonable parameter configuration (including voxel size and truncation width), we can ensure that there will always be some reliable points in the weak region. Therefore, the task of repairing weak areas can be transformed into the process of finding reliable points and inferring the value of unreliable points from reliable points.

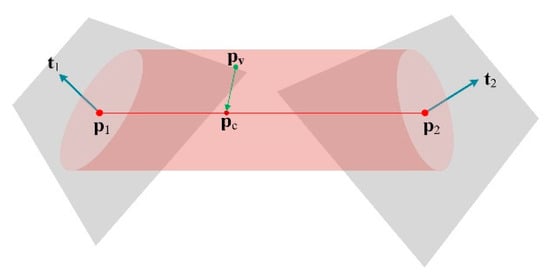

As shown in Figure 11, voxels inside convex surfaces are easily affected by observations on both sides of the thin wall, while voxels outside are unaffected. Therefore, for convex surfaces, the TSDF values of voxels with positive signs can be used as input to infer the values of voxels with negative signs. Conversely, for concave surfaces, the TSDF values of positive voxels can be inferred from negative voxels. The number of positive and negative connection groups in weak regions can be used to judge whether a surface is convex or concave. When the number of positive connection groups is larger than that of the negative connection groups, the surface is convex, whereas the surface is concave otherwise.

Figure 11.

The distribution of reliable and unreliable points in weak regions of convex and concave surfaces; reliable voxel centers are blue, unreliable voxel centers are red; (a) distribution of weak regions for convex surfaces; (b) distribution of weak regions for concave surfaces.

After identifying reliable voxels, we need to infer the actual surface from them. pi and ni are the surface points and normals of reliable voxels, respectively. Our goal is to construct a directed distance field so that f (pi) = 0 and . The surface represented by f passes through all pi, and the normal of the implicit surface matches ni at pi. In calculating f, we use the Hermite radial basis function (HRBF) [] interpolation method since it considers both points and normals. Then, we define f as follows:

where x∈ is used to indicate the location to be evaluated. Function ϕ: is a real-value function on [0, ∞) called the radial basis function. When fitting functions with three variables, biharmonic (ϕ(r) = r) and triharmonic (ϕ(r) = r3) splines are good choices.

is the column vector of dimension d = 3, and can be calculated by the following formula:

where ,

is a diagonal matrix of dimension d × d with ,

To calculate the value of f, we must find N αi∈ and βi∈. According to the constraints of f (pi) = 0 and , the following linear equations are obtained:

Therefore, the equation system AxT = bT can be written as:

A single matrix block (d + 1) × (d + 1) of coefficient A (N(d+1))×(N(d+1)) can be written as:

After calculating the weights of αi and βi, the distance from any spatial position x to the implicit surface can be calculated using Formula (17), and the gradient can be calculated by Formula (18). The normal of the implicit surface encoded by x can be obtained by normalizing the gradient. Therefore, the distance vector and normal of every unreliable voxel in the weak region of topology can be recomputed from the HRBF.

2.4. Data Structure

We defined the following structure to store the TSDF properties at each voxel:

- struct Voxel {

- -

- float sight [];

- -

- float C [];

- -

- float distance [];

- -

- float weight;

- -

- unsigned int pointer;

- -

- unsigned int address;

- };

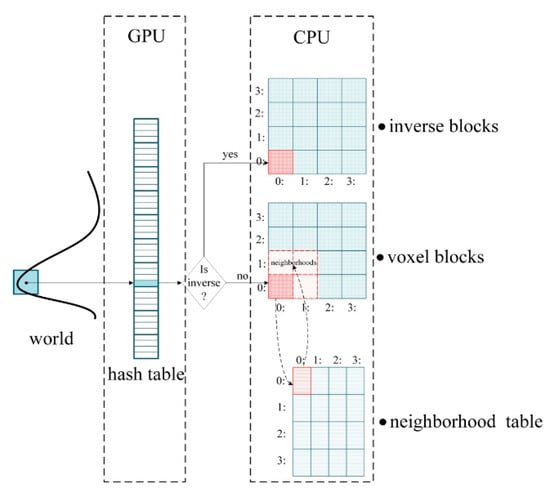

Each voxel stores the sight, the covariance matrix C defined in Equation (6) (Since matrix C is a symmetric matrix, only six independent elements in the corresponding upper triangular matrix are stored), the distance defined in Equation (7), the accumulated weight defined in Equation (8), the pointer to the voxel belongs to the other side of the thin surface and the address of the surface point. The normal is stored in the surface point, which can be used to judge the surface attribution and interactive visualization. A certain number of voxels (the default is 83) are merged to store, which is called a block. All voxels in a block are stored continuously in the order of x–y–z, and the coordinates of each voxel center can be obtained from the coordinates of the block.

All voxel blocks are organized by a hash table implemented on GPU []. The hash table stores hash entities, each of which points to an allocated voxel block. Their corresponding relationship is established by converting the spatial coordinates into hash values through a hash function. Nießner provided a complete method for searching, inserting, and deleting data in hash tables and a mechanism for resolving hash collisions. Since the hash table is implemented on the GPU, it can index all allocated data in parallel and thus has a considerable speed advantage.

Unlike Nießner, which stores some voxel blocks on the GPU and others on the CPU, we directly store all voxel blocks on the CPU to reduce the memory footprint of the GPU. Only hash tables are stored on the GPU, and each entity in the hash table contains a pointer to the voxel blocks allocated on the CPU. Once we have inserted a new entry into the hash table, we allocate a voxel block to the heap memory on the CPU. The heap is a dynamic 2D array that supports dynamic scaling. It is divided into contiguous blocks (mapped to the size of voxel blocks). Once allocated heap memory is exhausted, a fixed-length array is appended to the back of the 2D array. Since the array is accessed in parallel, synchronization is necessary, by incrementing the pointer of the array using atomic operation.

Before integrating the new TSDFs, all voxel blocks must be allocated that fall on the input 3D curves, and are also within the fundamental envelope of the curves. For each line segment on the curve, we first calculate the bounding box determined by , and then for all the voxels in the bounding box, we determine whether it falls into the fundamental envelope of . If it is so, we find the corresponding entity of it in the hash table. If no entity is found, we insert an entity in the hash table and allocate a voxel block on the heap.

In addition to the voxel block array, the CPU also stores an inverse voxel block array and an array of neighborhood lookup tables, which are also maintained by dynamic 2D arrays. Inverse voxel blocks are used to store the opposite sides of a thin surface. We also store each element in the inverse voxel blocks as a Voxel struct. Before integrating each new TSDF, we need to determine whether it belongs to the same side of the surface that has been encoded in the non-inverse voxel v. If it is so, we integrate the new value to v, and vice versa integrate it to the inverted voxel v’ pointed by v.pointer. If the value of v.pointer is empty, a new voxel v’ is allocated on the heap of the inverse voxel blocks, and the address of v’ is assigned to v.pointer. Then we compute the new TSDF (the closet point pc on the curve and tangent t at pc) and integrate the new value to its corresponding voxel. When assigning a new inverse voxel v’, we lock voxel v atomically to prevent other threads from writing to it at the same time.

| Algorithm 1. Computation of the TSDF from a set of curves. |

| Input: Set of curves ci = {pi,1, …, pi,ni}, i[1,N]; tangent ti,j at each point pi,j, j[1,n]. Output: TSDF: . 1: Initialize matrix C, distance vector and weight ω in all voxels to zero 2: for each curve i[1, N] (number of curves) do 3: for each point j[1, n] (number of points on the curves) do 4: Compute the bounding box of the line segment ; 5: for all voxels pv (the central point of the voxel) in the bounding box do 6: if pv is in the fundamental envelope associated with then 7: Compute the closest point pc to pv on the curve; 8: Compute the tangent tc on the point pc; 9: Find the voxel v associated pv in the hash table; 10: Find 26 neighborhoods of the voxel block where v resides; 11: Update the elements corresponding to v’s block in the neighborhood lookup table; 12: if current TSDF observations (pc and tc) belong to the surface encoded by v then 13: Update matrix C at voxel v (Equation (6)); 14: Update the sum of distance vectors with pc–pv (Equation (7)); 15: Update the sum of weight ω at voxel v (Equation (8)); 16: Compute the surface point ps (Equation (4)) and normal n (by SVD decomposition of 17: matrix C); 18: Find the neighborhoods of v; 19: Recompute n (Equation (16)) with the normals of the neighborhood points; 20: else 21: Find inverse voxel v’; 22: Update matrix C at voxel v’ (Equation (6)); 23: Update the sum of distance vectors with pc–pv (Equation (7)); 24: Update the sum of weight ω at voxel v’ (Equation (8)); 25: Compute the surface point ps’ (Equation (4)) and normal n’ (by SVD decomposition of 26: matrix C); 27: Find the neighborhoods of v’; 28: Recompute n’ (Equation (16)) with the normals of the neighborhood points. |

To search voxels neighborhood information conveniently and avoid frequent data transmission between CPU and GPU, we maintain a neighborhood lookup table of all blocks on CPU. Neighborhood lookup table plays a vital role in normal filtering operation. It stores the pointers of 26 neighborhood voxel blocks for all voxel blocks. For each voxel v, if it is located at the center of the voxel block B, the 26 neighboring voxels adjacent to it can be found in the block B; its neighbors can be found by simple subscript addition and subtraction since the elements in a voxel block are stored continuously. If it is on the edge of the voxel block B, the neighboring voxels need to be found in the voxel blocks adjacent to B. Neighborhood lookup tables are divided into contiguous buckets, each consisting of 26 elements that store pointers to the neighborhood voxel blocks. The storage order of these buckets is the same as that of the voxel blocks. Therefore, all neighbors of each voxel block can be found through its pointer. Neighborhood lookup tables are also updated in real time during scanning. First, we find the voxel blocks in the fundamental envelopes of the current input frame, and then find 26 neighbors for each of them by the hash table to update the corresponding elements of the lookup table. The pseudocode Algorithm 1 shows the whole procedure of computing TSDF based on the above data structure. It is assumed that the tangent of each point on the curve has been calculated before field computation. Figure 12 shows our entire data structure.

Figure 12.

The whole data structure; first, the world coordinates of voxel center are mapped to the hash table to get the address of the voxel block on the central processing unit (CPU), and a thin-surface attribution judgment is then made to store the new TSDFs in either the non-inverse block or the inverse block; the subscripts of the neighborhood lookup table correspond to the individual blocks and each element of the lookup table stores the corresponding neighborhoods of the blocks; only the hash table is stored on the graphics processing unit (GPU), and all other data is stored on the CPU.

3. Results

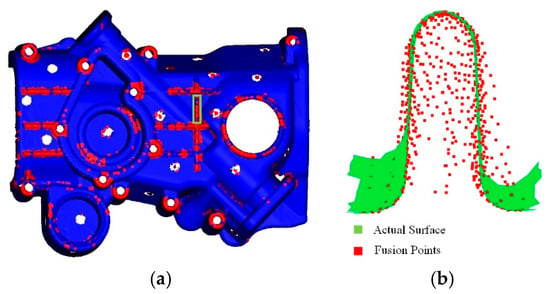

Here, we verify the performance of our proposed algorithm through a series of experiments. The experimental data are all registered 3D curves collected by the laser scanner shown in Figure 1. Figure 13 shows an example of the input data of our algorithm. We use the proposed method to integrate all 3D curves of real objects into TSDF, extract the isosurface, and construct the mesh model.

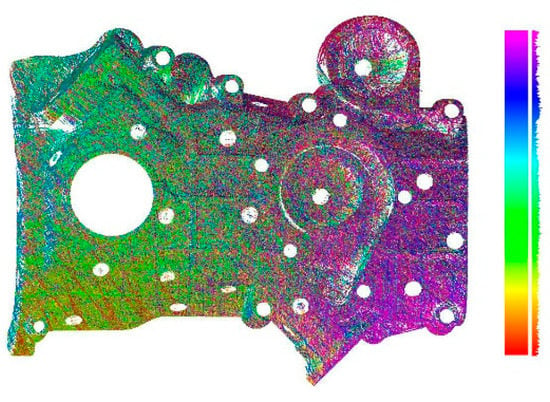

Figure 13.

An example of input data of our algorithm; curves are displayed in different colors, and histograms of different color intervals are displayed on the right side.

To verify the effectiveness of the proposed thin-surface improvement, we compare the reconstruction results of the proposed method with Tubić et al. [] for industrial products of different thicknesses. The accuracy of the proposed method is evaluated by comparing it with two existing hand-held 3D laser line scanners: Creaform HandySCAN700 [] and Scantech HSCAN771 []. As shown in Table 1, these two kinds of scanner can achieve a volumetric accuracy of 0.02 mm (part size within 1 m) and have been widely used in the field of industrial 3D scanning. We use cloud to mesh (C2M) distance metric to quantify the accuracy of surface reconstruction []. The distance from each vertex in the source model to the closest triangle in the reference model is recorded, and two standard statistics are computed for all distances: Mean and Standard Deviation (Std.) []. In our experiments, the CAD model is used as a reference model. We do statistical hypothesis tests (Welch’s t-test [] and F-test []) on these two statistics to evaluate the significance of the improvement. All the statistical tests are implemented by R programming language version 3.0.0 [].

Table 1.

Accuracy of the scanners involved in the comparison.

In terms of computational efficiency, we test the speed of the proposed algorithm on three hardware devices with different configurations shown in Table 2. The performance of the devices we used is equivalent to the hardware configuration recommended by mainstream industrial 3D scanner manufacturers (Creaform) []. Furthermore, we verify the effectiveness of the proposed method to improve the underlying data structure by comparing its GPU memory usage with VoxelHashing [].

Table 2.

Personal computer (PC) configuration for performance test.

3.1. Experimental Object and Parameter Setting

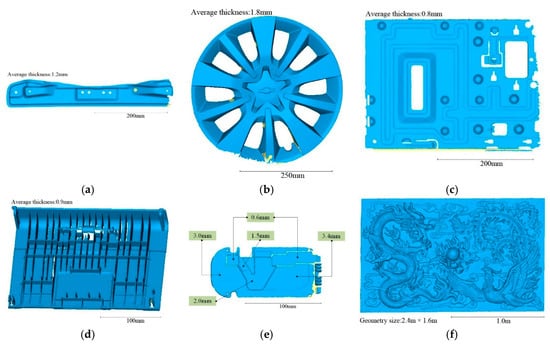

Figure 14 shows the 3D model of six different objects scanned by the proposed method in our experiment. Figure 14a–e are five thin industrial products with different thicknesses, which are used to verify the effectiveness of the proposed thin-surface optimization. The thickness of these objects is indicated in each subfigure. All of the 3D models in the thin-surface verification experiment are generated with the same configuration parameters: voxel size = 0.5 mm, truncation width = 0.9 mm. For comparison purposes, we also set the same parameters for Tubić et al. []. When using the scanners of Creaform [] and Scannech [], we also set the resolution of scan to 0.5 mm.

Figure 14.

Six experimental objects: (a) metal bar; (b) automobile hub; (c) sheet metal part; (d) plastic shell; (e) metal panel; and (f) relief; the metal panel is composed of five parts with different thickness.

The “relief” (f) is 2.4 m × 1.6 m in size. The total number of curves acquired from the “relief” is 472,612, and the total number of 3D surface points is 3,365,470,052. Since this object has large size and low self-occlusion, we use it to test the algorithm time and memory efficiency. This object can ensure that around 7000 laser points are obtained at each frame, basically reaching the upper limit of the single-frame data of the apparatus we used. In the performance test, we compare the speed and memory footprint of the algorithm in different resolutions. The truncation width is set to ( as the voxel size), which is recommended by Tubić et al. [].

3.2. Qualitative Results

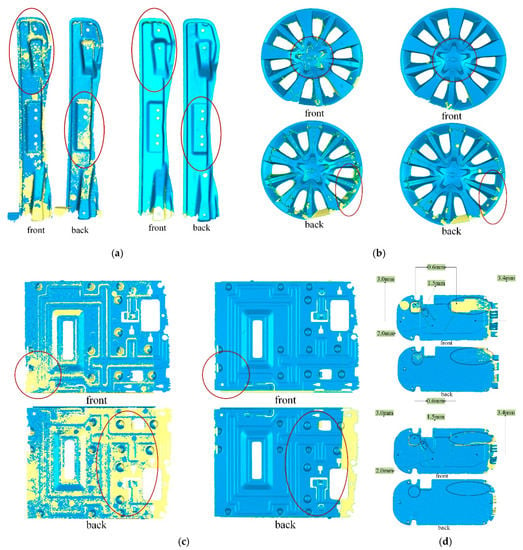

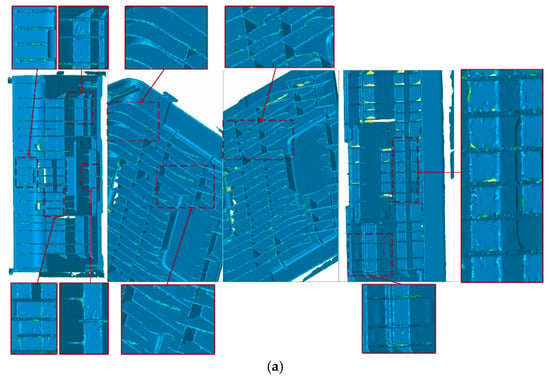

Figure 15 compares the surface reconstruction results of Tubić et al. [] with ours for the same thin objects. The front and back views of each model are shown in these figures, and the front and back sides of the surface model are rendered in different colors (the front is given blue, the back is made yellow). The apparent improvements in the figures are marked with red ellipses. From these figures, we can see that many parts of results of Tubić et al. [] have been lost, while our results are complete and smooth.

Figure 15.

Comparison of scan models from thin products; (a) Tubić et al. [] (left) and our (right) results of the metal bar; (b) Tubić et al. [] (left) and our (right) results of the automobile hub; (c) Tubić et al. [] (left) and our (right) results of the sheet metal part; (d) Tubić et al. [] (top) and our (bottom) results of the metal panel.

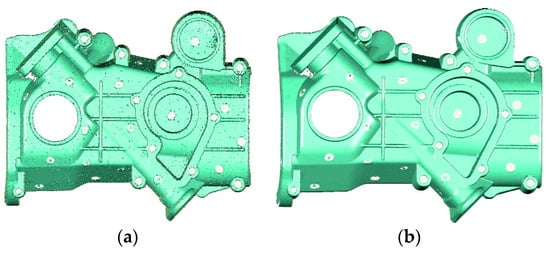

We also used Creaform HandySCAN700 [] to scan the “sheet metal part” with a resolution of 0.5 mm, as shown in Figure 16. There are some small spikes and holes in Creaform’s [] model, which are not found in the scanning process. The same defects were not found on the surface of our model. However, compared with Creaform’s [] results, our surface is not smooth enough on the edges. Alias artifacts appear at sharp features on the extracted surfaces since TSDF is sampled on a regular grid with a fixed step length. In contrast, Creaform [] uses higher resolution at sharp features.

Figure 16.

Comparison of our scan results (top row) with the Creaform scanner [] (bottom row); (a) a comparison of zoomed details at the same location; (b) the front and back views; (c) the details of another region; (d) triangles of the mesh models.

The “plastic shell” is a more extreme example with a very complex topology. When scanning this object, it is almost impossible to ensure that the line of sight can be perpendicular to the sheets, so almost all of the sheets are corrupted (Figure 17a). In Figure 17b, Creaform’s [] scanning results show similar problems, and the model surface at the edge of the sheet is messy and incomplete. By repairing the topological structure of these weak topological regions, the shape of these sheets is well restored (the missing parts are caused by occlusion, not by our method).

Figure 17.

Comparison of the plastic shell model; (a) the interference of TSDF brings holes and spikes on the stiffener of the model; (b) Creaform’s [] results show similar problems; (c) the proposed post-processing approach repaired the surface of the stiffener.

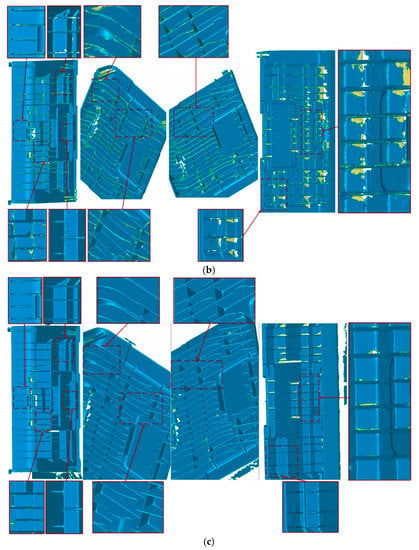

3.3. Quantitative Results

In the accuracy evaluation, we choose two typical thin objects: sheet metal part and plastic shell. The topological structure of the sheet metal part is simple, so it is used to verify the effectiveness of thin-surface improvement (thin-surface attribution judgment) in real-time reconstruction. The plastic shell has a complex topology, which makes it easy to misjudge the attribution of a thin surface in the scanning process; so we use it to verify the effectiveness of the post-processing method for weak topological region repairing.

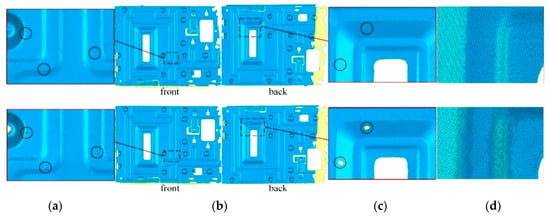

The accuracy of surface reconstruction is evaluated by the C2M distances [] between reconstructions and CAD models. Figure 18 plots color maps of C2M distances for the two thin objects. In each subfigure, we visualize the C2M errors through a jet color map and a histogram. Distances greater than 0.2 mm are depicted with deeper colors, and the red/blue gradations indicate positive/negative distances, respectively. We evaluate the C2M errors quantitatively for each method with two statistics, as shown in Table 3. Our real-time processing approach is more accurate than those of Tubić et al. [], but is similar to the existing scanners (Creaform, Levi, QC, Canada [] and Scannech []). After the proposed post-processing, we get the minimum values on both Mean and Std.

Figure 18.

Cloud to mesh (C2M) error colormaps for surface reconstructions of two thin products; the left column is the reconstruction of the sheet metal part, and the right column is the reconstruction of the plastic shell; The compared method are (a) Tubić et al. []; (b) Creaform []; (c) Scantech []; (d) our real-time processing approach; and (e) our post-processing approach. In each subfigure, frequencies with C2M errors in the interval of [−0.02 mm, 0.02 mm] are also indicated.

Table 3.

C2M error statistics (mean ± Std.) of different methods in millimeters.

We randomly selected 1000 samples from the C2M distance data population of each method and carried out statistical tests on the samples to evaluate the significance of the proposed improvement. The mean and Std. of each group of sample data are listed in Table 4. Normal distribution is the basic hypothesis of a statistical test, so we use the Pearson’s test [] to judge whether the samples are well-modeled by a normal distribution. As shown in Table 5, the p-value of all samples is higher than 0.05, so we conclude that the samples are consistent with the normal distribution at the significance level of α = 0.05.

Table 4.

C2M error statistics (mean ± Std.) for the random samples of different methods.

Table 5.

Pearson’s test for samples of different methods.

Here we apply Welch’s t-test (unequal variances t-test) [] to compare the significance of the differences in the mean values between groups of samples, since the Std. between groups is different. Table 6 and Table 7 show the t-test results of compared methods for two thin objects. Let μi be the mean of the population corresponding to a column in the table, and μj be the mean of the population corresponding to a row. We applied a one-tailed test [] to each grid in the table at a significance level of 0.05. That is, H0: μi = μj, H1: μi > μj. If p < 0.05, we reject the null hypothesis and conclude that μi and μj have a significant difference. For the two thin objects we selected, all the methods involved in the comparison have smaller mean values than Tubić et al. [], but the differences between the other methods are not significant.

Table 6.

Welch’s t-test for the sheet metal part.

Table 7.

Welch’s t-test for the plastic shell.

For the difference of Std. between each group of samples, we conduct the F-test of equality of variances [], as shown in Table 8 and Table 9. Let σi2 be the variance of the population corresponding to a column in the table, and σj2 be the variance of the population corresponding to a row. For each grid in the table, H0: σi2 = σj2, H1: σi2 > σj2. If p < 0.05, we reject the null hypothesis and conclude that σi2 and σj2 have a significant difference. For the sheet metal part, the variance of each group can be sorted as follows: Tubić et al. [] > Creaform [] > Scantech [] > Our approach; there is no significant difference between our real-time approach and post-processing approach at the significance level of 0.05. For the plastic shell, Tubić et al. [] > Creaform [] > Our real-time approach > Scantech [] > Our post-processing approach.

Table 8.

F-test for the sheet metal part.

Table 9.

F-test for the plastic shell.

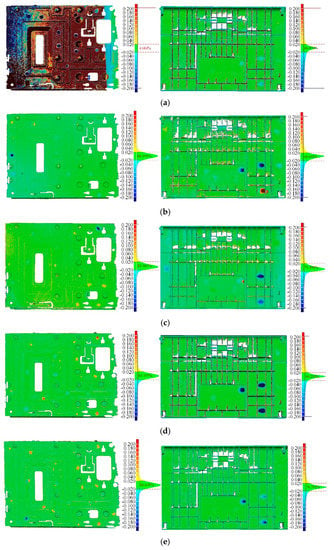

3.4. Performance

We have implemented various Single Instruction Multiple Data (SIMD) [] instruction set versions of the proposed algorithm in C++ language, and the program was compiled with Visual Studio 2015. Figure 19a compares the frame rates of different instruction set versions for the online proposed reconstruction method on different hardware devices shown in Table 2. The voxel resolution of scanning is set to 0.5 mm, and about 40,000 voxels are inserted in every single frame. Although on different devices, the algorithm uses CPU multithreading and GPU parallelism to speed up, the use of SIMD instruction set still leads to a frame rate increase. Our algorithm can achieve a real-time frame rate above 60 FPS since most of the CPUs support instruction sets above SSE now. Figure 19b compares the time per frame for our method with Tubić et al. Although our method has additional operations such as thin-surface attribution judgment and normal filtering, the time-consumption increase is not obvious due to the superior performance of the underlying data structure we adopted. The comparison was made on a device with Configuration A.

Figure 19.

Time efficiency comparison; (a) frame rate comparison of real-time reconstruction algorithms on three different hardware configurations in Table 2; the blue bars represent the algorithm implemented with Advanced Vector Extension Instruction Set (AVX) [], red bars represent Streaming SIMD Extensions Set (SSE) [], and the green bars represent the version with no instruction sets; (b) performance comparison with Tubić et al. [].

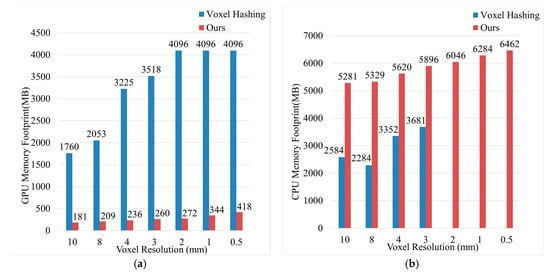

Figure 20 compares the CPU memory usage and GPU memory usage of our proposed method and VoxelHashing [] method. The comparison was made on a device with Configuration A. When the voxel resolution was increased to 2 mm, VoxelHashing [] had already filled up all of the 4 GB GPU memory and made the program crashed, which significantly limited the algorithm’s practicability since most of the GPU hardware devices on the market only supported 4 GB memory. In contrast, the GPU memory footprint of our proposed method was insignificant compared to voxel hashing []. Although the CPU memory footprint had increased, it was acceptable (up to 6 GB). After all, most computer devices support CPU memory expansion.

Figure 20.

Memory footprint comparisons; (a) GPU memory footprint comparisons; (b) CPU memory footprint comparisons.

3.5. Discussion

From the qualitative results shown in Figure 15, we can see that our proposed modifications have visible progress in the reconstruction of thin surfaces compared with the original method of Tubić et al. []. The surface reconstructed by our method is complete and smooth, and it is difficult to see the interference between the opposite sides of the thin surface. However, Tubić et al. [] cannot achieve the desired results. Both methods use the same input data and configuration parameters (voxel size and truncation width). It can be seen from Figure 17 that the proposed post-processing method is useful and necessary for thin objects with complex topology.

Quantitative analysis results can confirm the conclusion of qualitative evaluation. Whether it is a thin object with simple topological structure (sheet metal part) or complex topological structure (plastic shell), the deviation between the reconstruction of our method and CAD model is the smallest (the minimum mean and Std.). Taking the mean as the evaluation metric, our method significantly improves the accuracy of the original method of Tubić et al. [], but it shows similar accuracy to the other two existing scanners (Creaform [] and Scantech []). If Std. is used as the evaluation metric, our method is significantly different from other existing methods (Tubić et al. [], Creaform [], and Scantech []). Our method is more stable than the existing approaches. Moreover, for the thin object with complex topological structure, it can significantly improve the reconstruction accuracy by the proposed post-processing method.

As can be seen from Figure 19, the proposed algorithm can achieve a frame rate of more than 60 FPS on the hardware devices used in the experiment. These devices are not expensive, and their performance is the same as the hardware configuration recommended by mainstream industrial 3D scanner manufacturers. In terms of efficiency, the proposed algorithm can meet the requirements of industrial real-time 3D reconstruction. As can be seen from Figure 20, the proposed method can effectively reduce the GPU memory footprint of voxel hashing []. Although the memory footprint of the CPU has increased, it is within a reasonable range. After all, most computer devices support CPU memory expansion, while GPU cannot expand memory capacity. Therefore, our algorithm has high practicability.

Although the proposed algorithm improves the accuracy of thin-surface reconstruction and can meet the scanning requirements of most thin industrial products, the post-processing method of thin-surface topology repair still has some limitations on parameter setting. When the voxel size or truncation width is too large relative to the thickness of the object, the topology-repairing method will lose its effect. It is because our method of topological repair is based on the assumption that some voxels in the distance field are not interfered by the overlap of truncation bands on thin surfaces. The topology repair method uses these unaffected voxels as input to fit the surface and infer the values of the voxels in the affected area. If the voxel size or the truncation width are so large that the truncation bands on opposite sides of the thin surface overlap entirely, then the surface-fitting method loses reliable input, which leads to the inability to reconstruct the accurate surface. Therefore, for thin objects with complex topology, we recommend that users use such parameter configurations as voxel size ≤ 0.5 mm and truncation width ≤ 1 mm. There is no such limitation for thin objects with simple topology, such as a thin plate.

We use hash tables to compress the space and only reconstruct densely near the observed surface to eliminate the memory footprint of the empty space. However, it is not necessary to use regular grids of fixed voxel size to represent all parts of the scene. For example, a desktop needs less detail than a highly structured keyboard on it. Selecting different resolutions to represent different parts of the scene can reduce memory consumption and improve the reconstruction speed at the same time. It is also advantageous to use small voxel sizes to represent areas with complex topology or sharp features. Therefore, in the future, we will study how to build a 3D model with different resolutions adaptively according to the shape of the surface.

4. Conclusions

An improved 3D reconstruction method for a thin surface based on a hand-held laser line scanner is proposed. Aiming at the problems existing in the TSDF method for the 3D reconstruction of thin object surfaces, we propose two main improvements: first, in the real-time scanning process, we use the angle between the surface normal and the line of sight to determine the attribution of the thin surface, avoiding the mutual interference between the observation surfaces belonging to different positions; second, we exploit a post-processing method for automatically detecting and repairing topological errors in some areas where misjudgment of thin-surface attribution may occur. Besides, we also improve the underlying data structure of the existing TSDF method to reduce the GPU memory usage. Qualitative and quantitative experimental results provide evidence that the proposed 3D reconstruction approach to a thin surface is valid and can significantly improve the accuracy of thin-surface reconstruction. In comparison with the state-of-the-art laser line scanners, our method shows a similar (C2M distance mean []) or even better accuracy (C2M distance Std. []). In terms of computing efficiency, the proposed method effectively reduces the GPU memory consumption, which can guarantee a frame rate of more than 60 FPS with the GPU memory footprint under 500 MB.

Although the proposed method can reconstruct a thin surface accurately in most cases, there are still some limitations in parameter setting when reconstructing thin objects with complex topology. If the voxel size or truncation width is too large, mutual interference between the opposite sides of the thin surfaces may still occur. Meanwhile, it is a waste of memory to represent all parts of the scene with a regular grid. In the future, we plan to further improve the robustness and memory efficiency of complex thin-surface reconstruction by adaptively setting different resolutions to the reconstructed model according to the shape of the surface.

Author Contributions

Conceptualization, Y.H. and S.Z.; Methodology, Y.H. and S.Z.; Software, Y.H., S.Z. and F.Z.; Validation, Y.H. and F.Z.; Formal Analysis, Y.H., X.H. and F.Z.; Investigation, Y.H., S.Z., X.H. and F.Z.; Resources, S.Z.; Data Curation, Y.H.; Writing, Y.H., S.Z., X.H. and F.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Natural Sciences Foundation of China (41671452 and 41701532).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kahler, O.; Prisacariu, V.; Valentin, J.; Murray, D. Hierarchical Voxel Block Hashing for Efficient Integration of Depth Images. IEEE Robot. Autom. Lett. 2016, 1, 192–197. [Google Scholar] [CrossRef]

- Bourke, P. Automatic 3D reconstruction: An exploration of the state of the art. GSTF J. Comput. 2018, 2, 71–75. [Google Scholar]

- Henry, P.; Krainin, M.; Herbst, E.; Ren, X.; Fox, D. RGB-D Mapping: Using Depth Cameras for Dense 3D Modeling of Indoor Environments. In Experimental Robotics; Springer: Marrakech, Morocco, 2014; pp. 477–491. [Google Scholar]

- Klingensmith, M.; Dryanovski, I.; Srinivasa, S.; Xiao, J. Chisel: Real Time Large Scale 3D Reconstruction Onboard a Mobile Device using Spatially Hashed Signed Distance Fields. In Proceedings of the Robotics: Science and Systems (RSS), Rome, Italy, 13–17 July 2015. [Google Scholar]

- Lachat, E.; Macher, H.; Landes, T.; Grussenmeyer, P. Assessment and calibration of a RGB-D camera (Kinect v2 Sensor) towards a potential use for close-range 3D modeling. Remote Sens.-Basel 2015, 7, 13070–13097. [Google Scholar] [CrossRef]

- Tubić, D.; Hébert, P.; Laurendeau, D. 3D surface modeling from curves. Image Vis. Comput. 2004, 22, 719–734. [Google Scholar] [CrossRef]

- HandySCAN. Available online: https://www.creaform3d.com/en/portable-3d-scanner-handyscan-3d (accessed on 25 October 2019).

- HSCAN. Available online: https://www.3d-scantech.com/product/hscan771-3d-scanner/ (accessed on 25 October 2019).

- FreeScan. Available online: https://www.shining3d.com/solutions/freescan-x7 (accessed on 25 October 2019).

- Zollhöfer, M.; Stotko, P.; Görlitz, A.; Theobalt, C.; Nießner, M.; Klein, R.; Kolb, A. State of the Art on 3D Reconstruction with RGB-D Cameras. Comput. Graphics Forum 2018, 37, 625–652. [Google Scholar] [CrossRef]

- Hinzmann, T.; Schönberger, J.L.; Pollefeys, M.; Siegwart, R. Mapping on the Fly: Real-Time 3D Dense Reconstruction, Digital Surface map and Incremental Orthomosaic Generation for Unmanned Aerial Vehicles. In Field and Service Robotics; Springer: Zurich, Switzerland, 2018; pp. 383–396. [Google Scholar]

- Cao, Y.; Kobbelt, L.; Hu, S. Real-time High-accuracy Three-Dimensional Reconstruction with Consumer RGB-D Cameras. ACM Trans. Graph. 2018, 37, 171. [Google Scholar] [CrossRef]

- Rusinkiewicz, S.; Levoy, M. Efficient Variants of the ICP Algorithm. In Proceedings of the Third International Conference on 3-D Digital Imaging and Modeling, Quebec, QC, Canada, 28 May–1 June 2001; pp. 145–152. [Google Scholar]

- Stückler, J.; Behnke, S. Integrating Depth and Color Cues for Dense Multi-Resolution Scene Mapping Using Rgb-d Cameras. In Proceedings of the 2012 IEEE Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), Hamburg, Germany, 13–15 September 2012; pp. 162–167. [Google Scholar]

- Weise, T.; Wismer, T.; Leibe, B.; Van Gool, L. In-Hand Scanning with Online Loop Closure. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision Workshops (ICCV Workshops), Kyoto, Japan, 29 September–2 October 2009; pp. 1630–1637. [Google Scholar]

- Gallup, D.; Pollefeys, M.; Frahm, J. 3D Reconstruction Using an n-Layer Heightmap. In Joint Pattern Recognition Symposium; Springer: Berlin/Heidelberg, Germany, 2010; pp. 1–10. [Google Scholar]

- Pollefeys, M.; Nistér, D.; Frahm, J.; Akbarzadeh, A.; Mordohai, P.; Clipp, B.; Engels, C.; Gallup, D.; Kim, S.; Merrell, P. Detailed real-time urban 3D reconstruction from video. Int. J. Comput. Vis. 2008, 78, 143–167. [Google Scholar] [CrossRef]

- Izadi, S.; Kim, D.; Hilliges, O.; Molyneaux, D.; Newcombe, R.; Kohli, P.; Shotton, J.; Hodges, S.; Freeman, D.; Davison, A.; et al. KinectFusion: Real-Time 3D Reconstruction and Interaction Using a Moving Depth Camera. In Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology, Minneapolis, MN, USA, 20–23 October 2011; pp. 559–568. [Google Scholar]

- Kähler, O.; Prisacariu, V.A.; Murray, D.W. Real-Time Large-Scale Dense 3D Reconstruction with Loop Closure. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 500–516. [Google Scholar]

- Nießner, M.; Zollhöfer, M.; Izadi, S.; Stamminger, M. Real-time 3D reconstruction at scale using voxel hashing. ACM Trans. Graph. 2013, 32, 169. [Google Scholar] [CrossRef]

- Curless, B.; Levoy, M. A Volumetric Method for Building Complex Models from Range Images. In Proceedings of the 23rd Annual Conference on Computer Graphics and Interactive Techniques, New Orleans, LA, USA, 4–9 August 1996; pp. 303–312. [Google Scholar]

- Fedkiw, S.O.R.; Osher, S. Level set methods and dynamic implicit surfaces. Surfaces 2002, 44, 77. [Google Scholar]

- Lorensen, W.E.; Cline, H.E. Marching Cubes: A High Resolution 3D Surface Construction Algorithm. In Proceedings of the 14th annual Conference on Computer graphics and Interactive Techniques, Anaheim, CA, USA, 27–31 July 1987; pp. 163–169. [Google Scholar]

- Kähler, O.; Prisacariu, V.A.; Ren, C.Y.; Sun, X.; Torr, P.; Murray, D. Very high frame rate volumetric integration of depth images on mobile devices. IEEE Trans. Vis. Comput. Graph. 2015, 21, 1241–1250. [Google Scholar] [CrossRef]

- Newcombe, R.A.; Fox, D.; Seitz, S.M. Dynamicfusion: Reconstruction and Tracking of Non-Rigid Scenes in Real-Time. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 343–352. [Google Scholar]

- Bylow, E.; Sturm, J.; Kerl, C.; Kahl, F.; Cremers, D. Real-Time Camera Tracking and 3D Reconstruction Using Signed Distance Functions. In Proceedings of the Robotics: Science and Systems (RSS), Berlin, Germany, 24–28 June 2013. [Google Scholar]

- Dong, W.; Wang, Q.; Wang, X.; Zha, H. PSDF Fusion: Probabilistic Signed Distance Function for On-the-fly 3D Data Fusion and Scene Reconstruction. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 701–717. [Google Scholar]

- Choe, G.; Park, J.; Tai, Y.; Kweon, I.S. Refining geometry from depth sensors using IR shading images. Int. J. Comput. Vis. 2017, 122, 1–16. [Google Scholar] [CrossRef]

- Maier, R.; Kim, K.; Cremers, D.; Kautz, J.; Nießner, M. Intrinsic3d: High-Quality 3D Reconstruction by Joint Appearance and Geometry Optimization with Spatially-Varying Lighting. In Proceedings of the IEEE International Conference on Computer Vision, Honolulu, HI, USA, 21–26 July 2017; pp. 3114–3122. [Google Scholar]

- Zollhöfer, M.; Dai, A.; Innmann, M.; Wu, C.; Stamminger, M.; Theobalt, C.; Nießner, M. Shading-based refinement on volumetric signed distance functions. ACM Trans. Graph. 2015, 34, 96. [Google Scholar] [CrossRef]

- Liu, Y.; Gao, W.; Hu, Z. Geometrically stable tracking for depth images based 3D reconstruction on mobile devices. ISPRS J. Photogramm. 2018, 143, 222–232. [Google Scholar] [CrossRef]

- Slavcheva, M.; Kehl, W.; Navab, N.; Ilic, S. SDF-2-SDF Registration for Real-Time 3D Reconstruction from RGB-D Data. Int. J. Comput. Vis. 2018, 126, 615–636. [Google Scholar] [CrossRef]

- Choi, S.; Zhou, Q.; Koltun, V. Robust Reconstruction of Indoor Scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5556–5565. [Google Scholar]

- Dai, A.; Nießner, M.; Zollhöfer, M.; Izadi, S.; Theobalt, C. Bundlefusion: Real-time globally consistent 3D reconstruction using on-the-fly surface reintegration. ACM Trans.Graph. 2017, 36, 76a. [Google Scholar] [CrossRef]

- Han, L.; Fang, L. FlashFusion: Real-time Globally Consistent Dense 3D Reconstruction Using CPU Computing. In Proceedings of the Robotics: Science and Systems (RSS), Pittsburgh, PA, USA, 26–30 June 2018. [Google Scholar]

- Maier, R.; Schaller, R.; Cremers, D. Efficient online surface correction for real-time large-scale 3D reconstruction. arXiv 2017, arXiv:1709.03763. [Google Scholar]

- Guo, K.; Taylor, J.; Fanello, S.; Tagliasacchi, A.; Dou, M.; Davidson, P.; Kowdle, A.; Izadi, S. TwinFusion: High Framerate Non-Rigid Fusion Through Fast Correspondence Tracking. In Proceedings of the 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 596–605. [Google Scholar]

- Palazzolo, E.; Behley, J.; Lottes, P.; Giguère, P.; Stachniss, C. ReFusion: 3D Reconstruction in Dynamic Environments for RGB-D Cameras Exploiting Residuals. arXiv 2019, arXiv:1905.02082. [Google Scholar]