Vision-Based Localization System Suited to Resident Underwater Vehicles

Abstract

1. Introduction

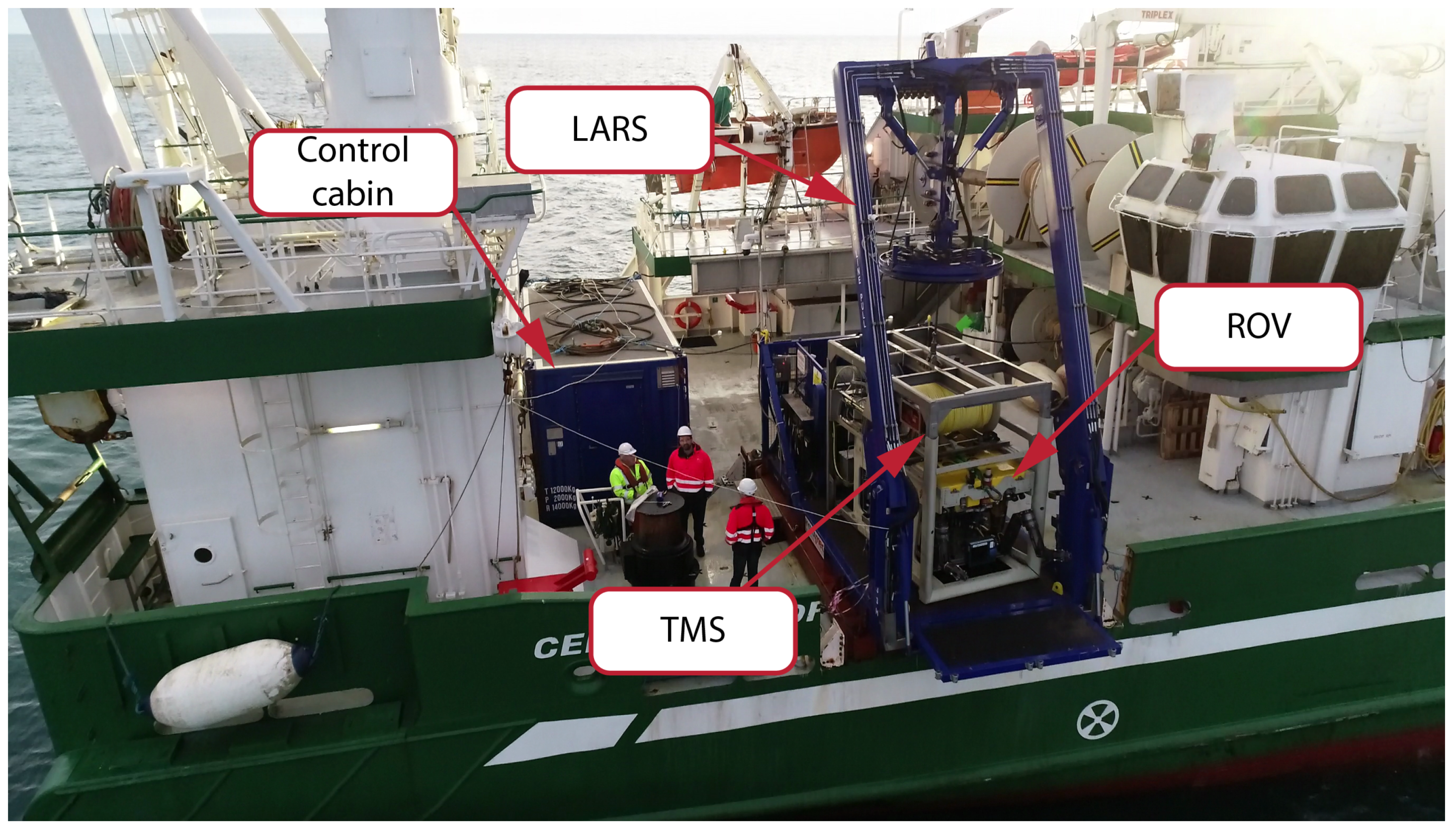

2. Hardware and Experimental Setup

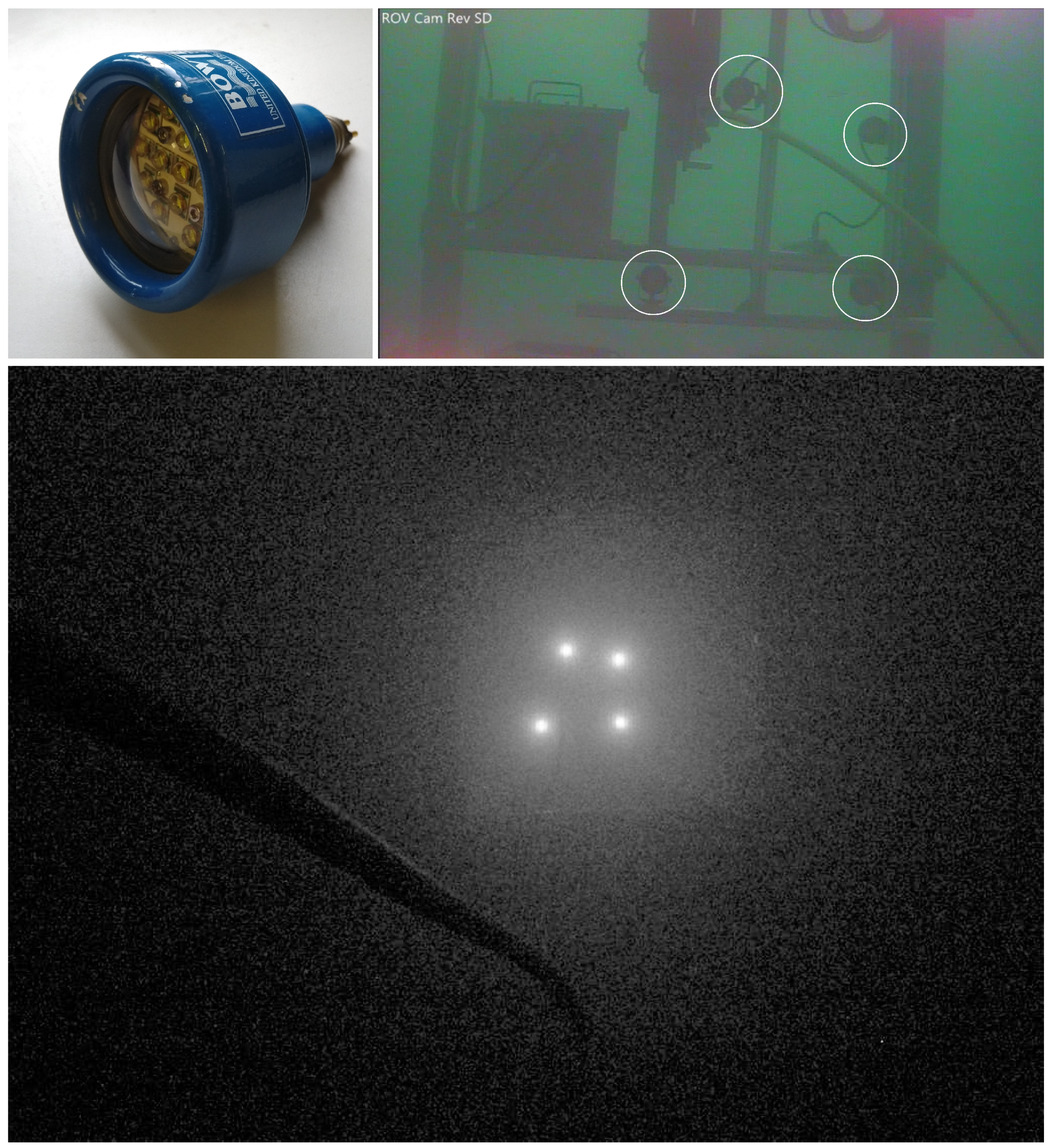

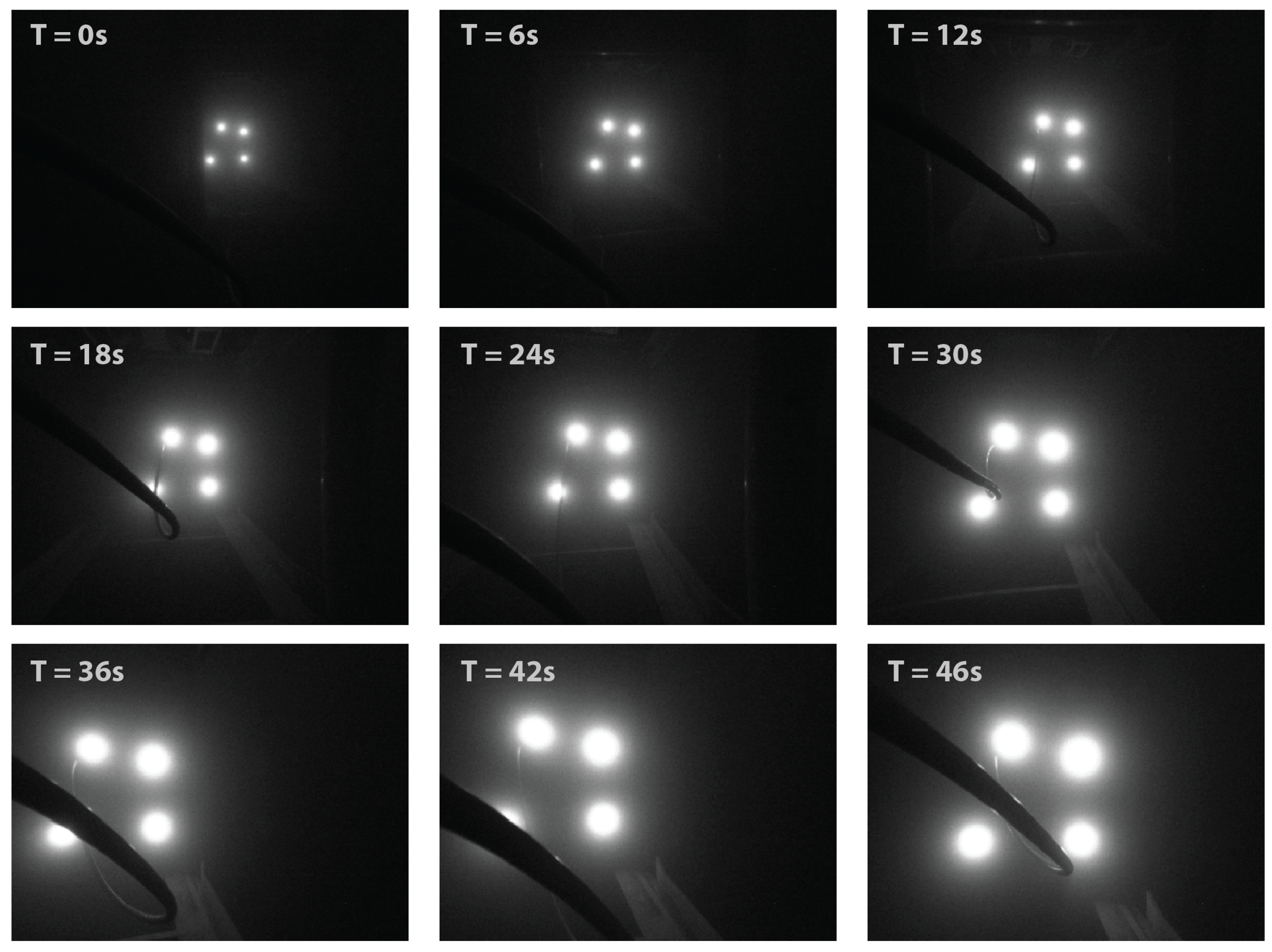

2.1. Light Marker and Camera

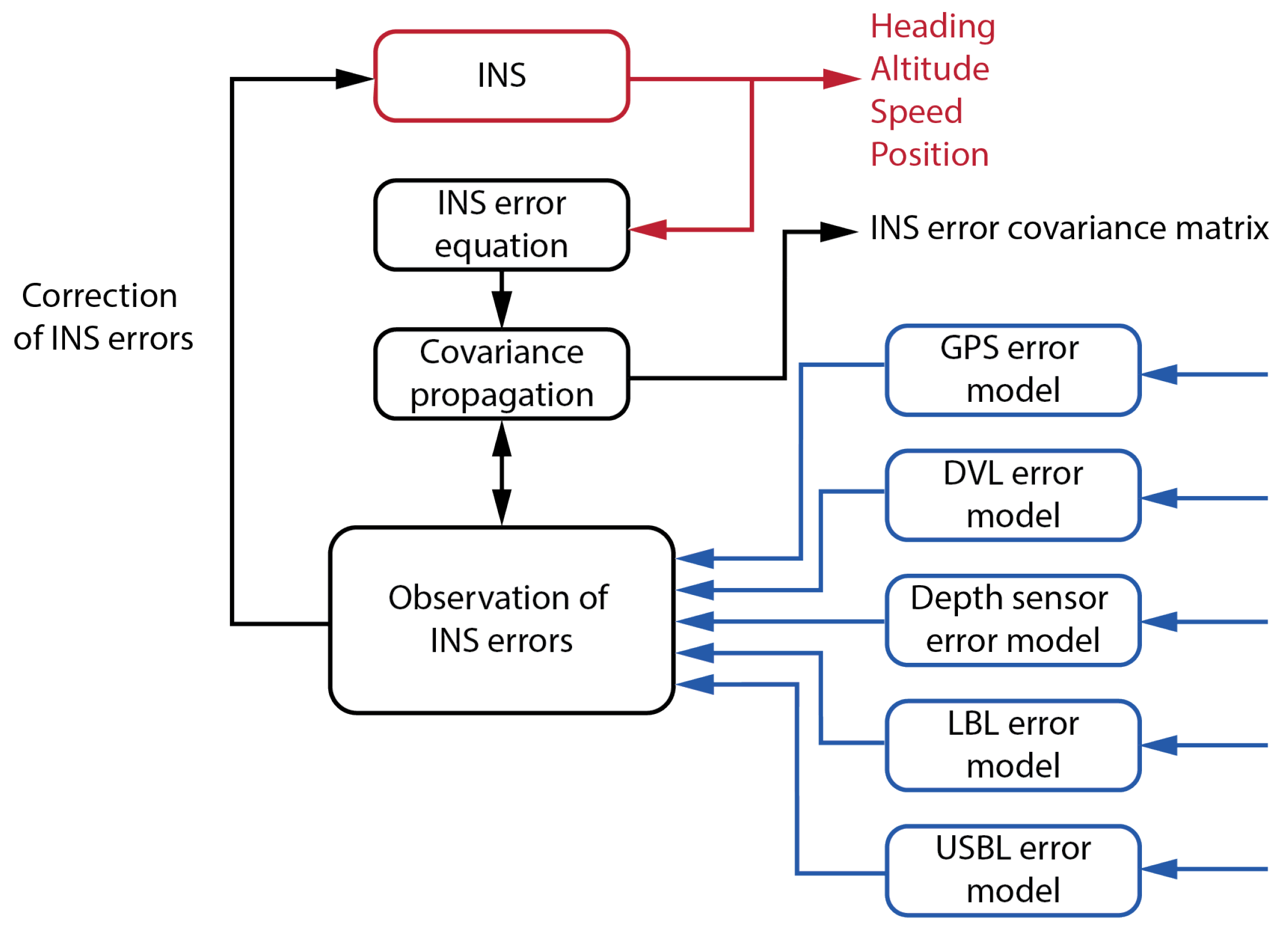

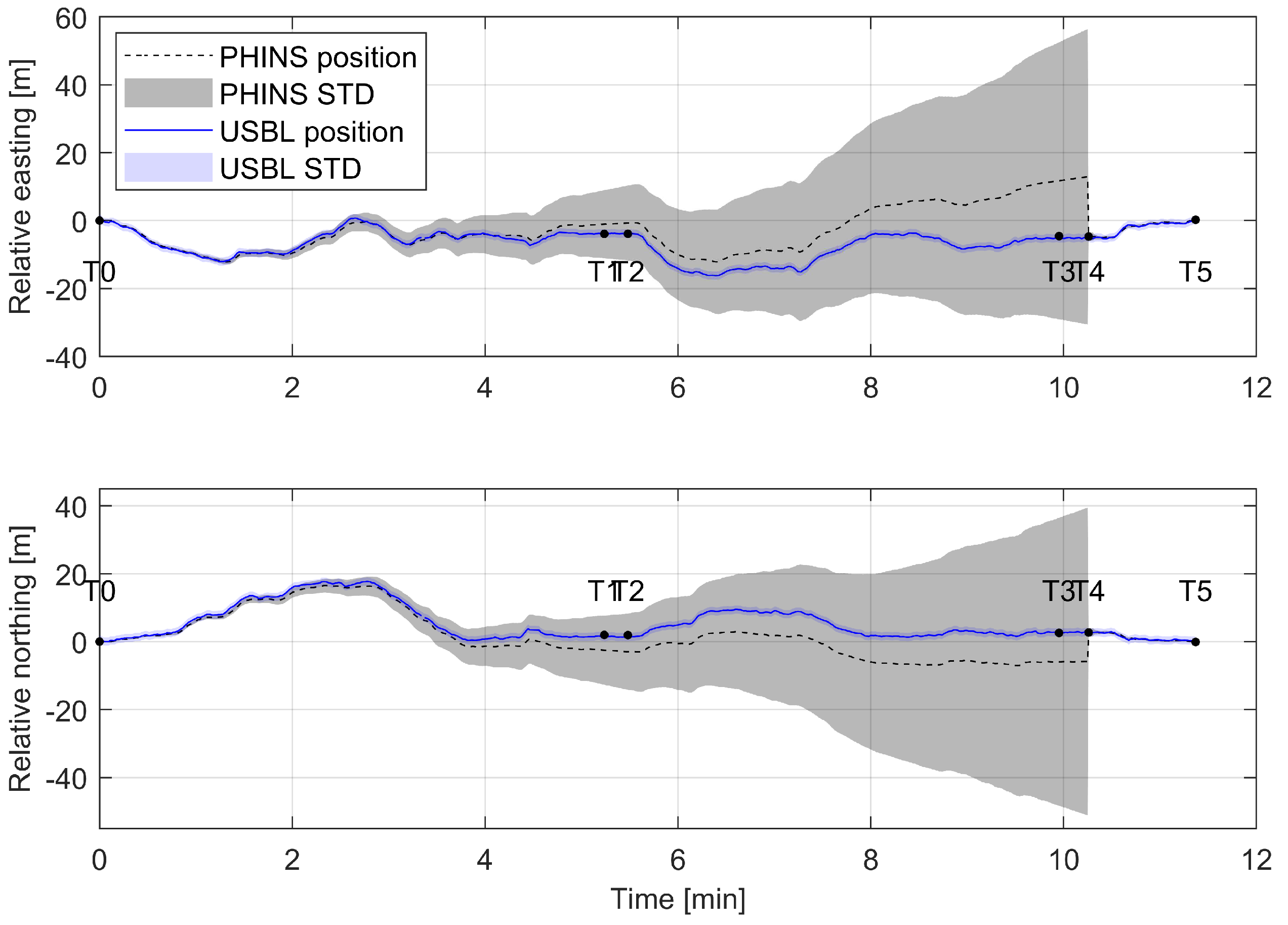

2.2. The Navigation System

3. Inertial Navigation System PHINS 6000

- Inertial measurement unit (IMU) which consists of three fibre optic gyroscopes and three accelerometers,

- Inertial navigation system (INS) resolving inertial measurements and updating position, velocity, and attitude,

- Extended Kalman filter for optimal integration of external and internal sensor measurements.
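The role of the third component can be illustrated with a scalar measurement update. This is a minimal sketch of a linear Kalman update, not the PHINS filter itself (which is an extended, multi-state filter whose internals are not given here); the function name and all numbers are hypothetical.

```python
def kalman_update(x_pred, p_pred, z, r):
    """Fuse a predicted state with one direct measurement (scalar case).

    x_pred, p_pred: predicted state and its variance (e.g. from the INS)
    z, r:           external measurement and its variance (e.g. a position fix)
    """
    k = p_pred / (p_pred + r)          # Kalman gain: weight given to the measurement
    x_new = x_pred + k * (z - x_pred)  # estimate pulled towards the measurement
    p_new = (1.0 - k) * p_pred         # variance shrinks after the update
    return x_new, p_new


# Hypothetical values: INS predicts 10.0 m (variance 4.0 m^2),
# an external sensor reports 12.0 m (variance 1.0 m^2).
x, p = kalman_update(10.0, 4.0, 12.0, 1.0)
print(x, p)
```

Because the external measurement here has the smaller variance, the fused estimate lands closer to it than to the inertial prediction, and the resulting variance is lower than either input.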

3.1. PHINS Operating Modes

- INS + USBL/LBL + (DVL),

- INS + DVL,

- INS (Pure inertial).

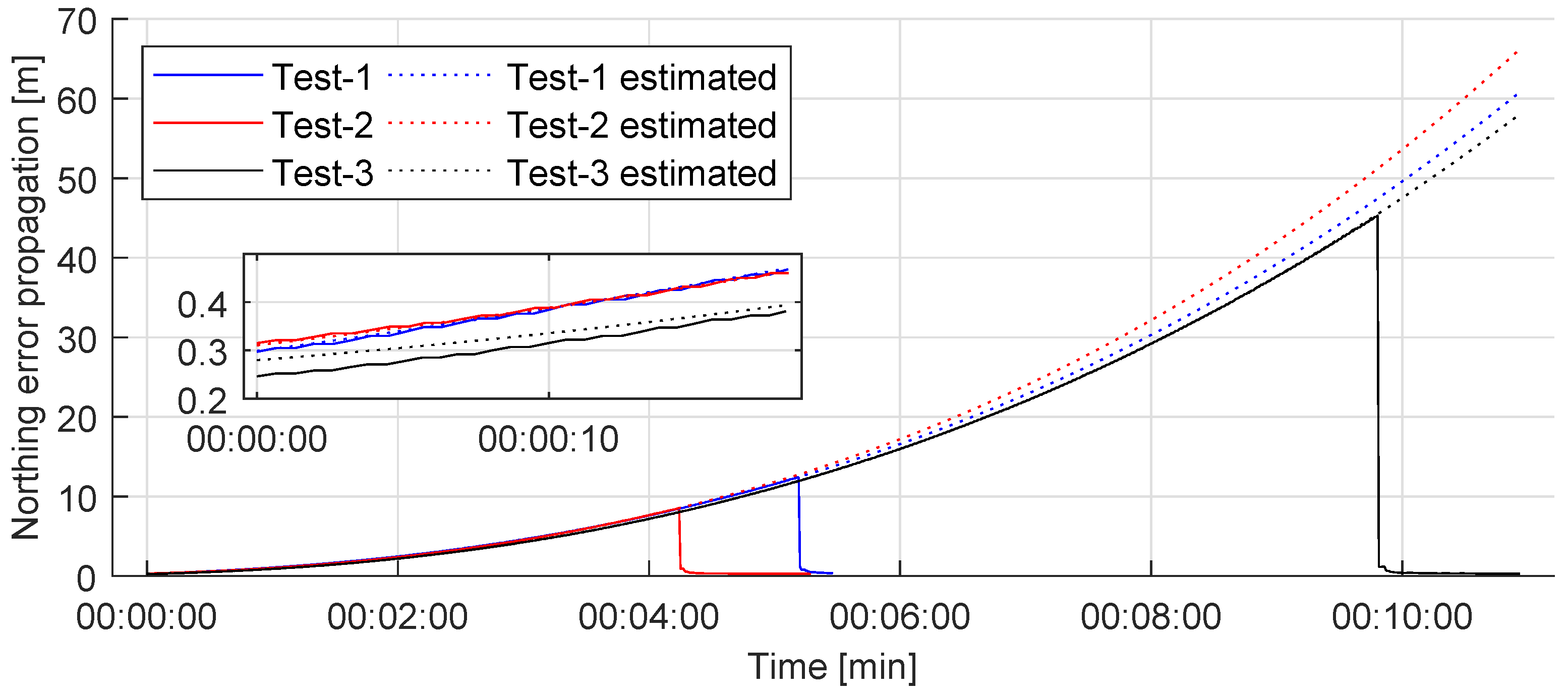

3.2. Propagation of Errors in Pure Inertial Mode

Additional Considerations

4. Visual Pose Estimation

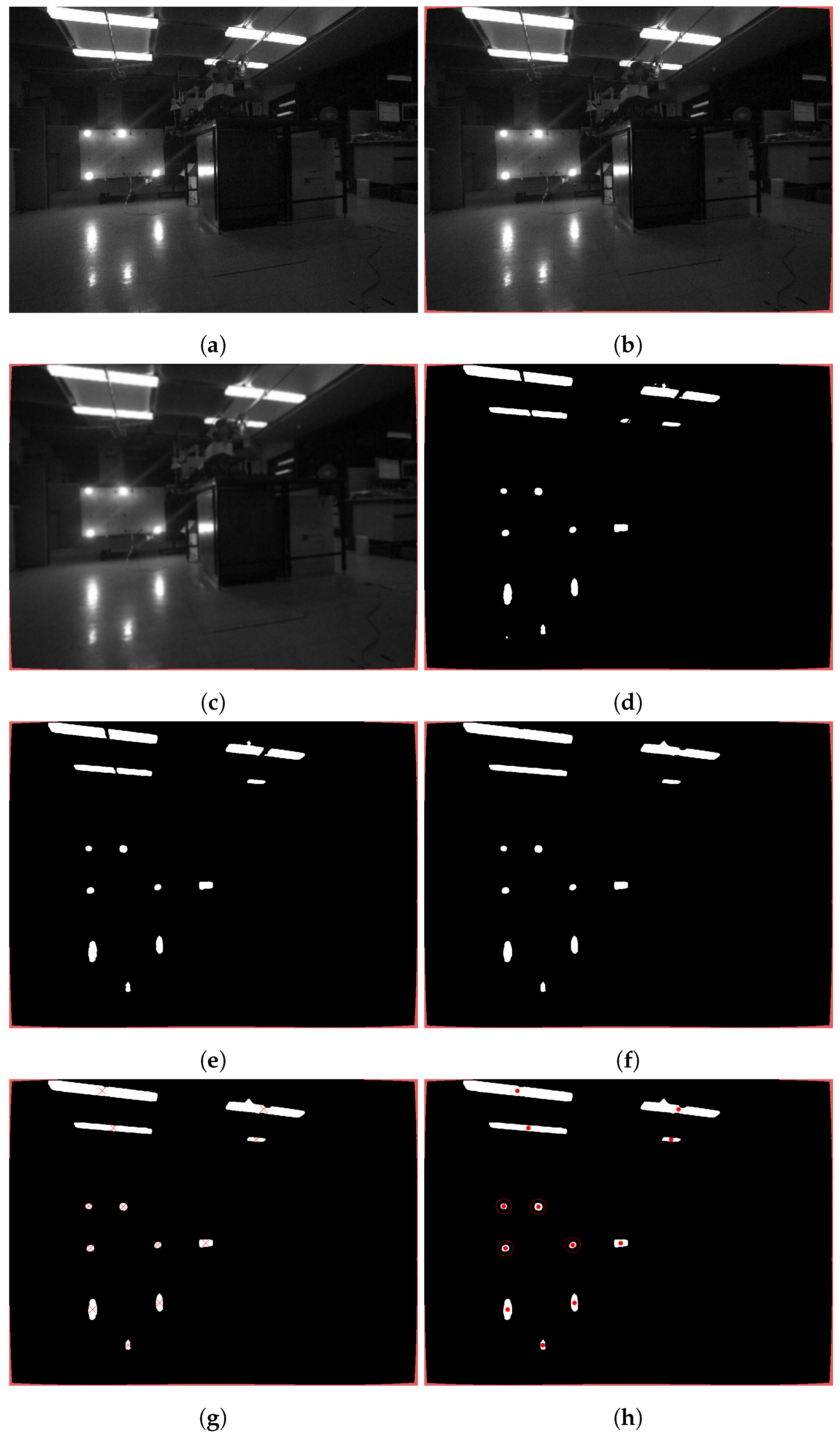

4.1. Image Processing

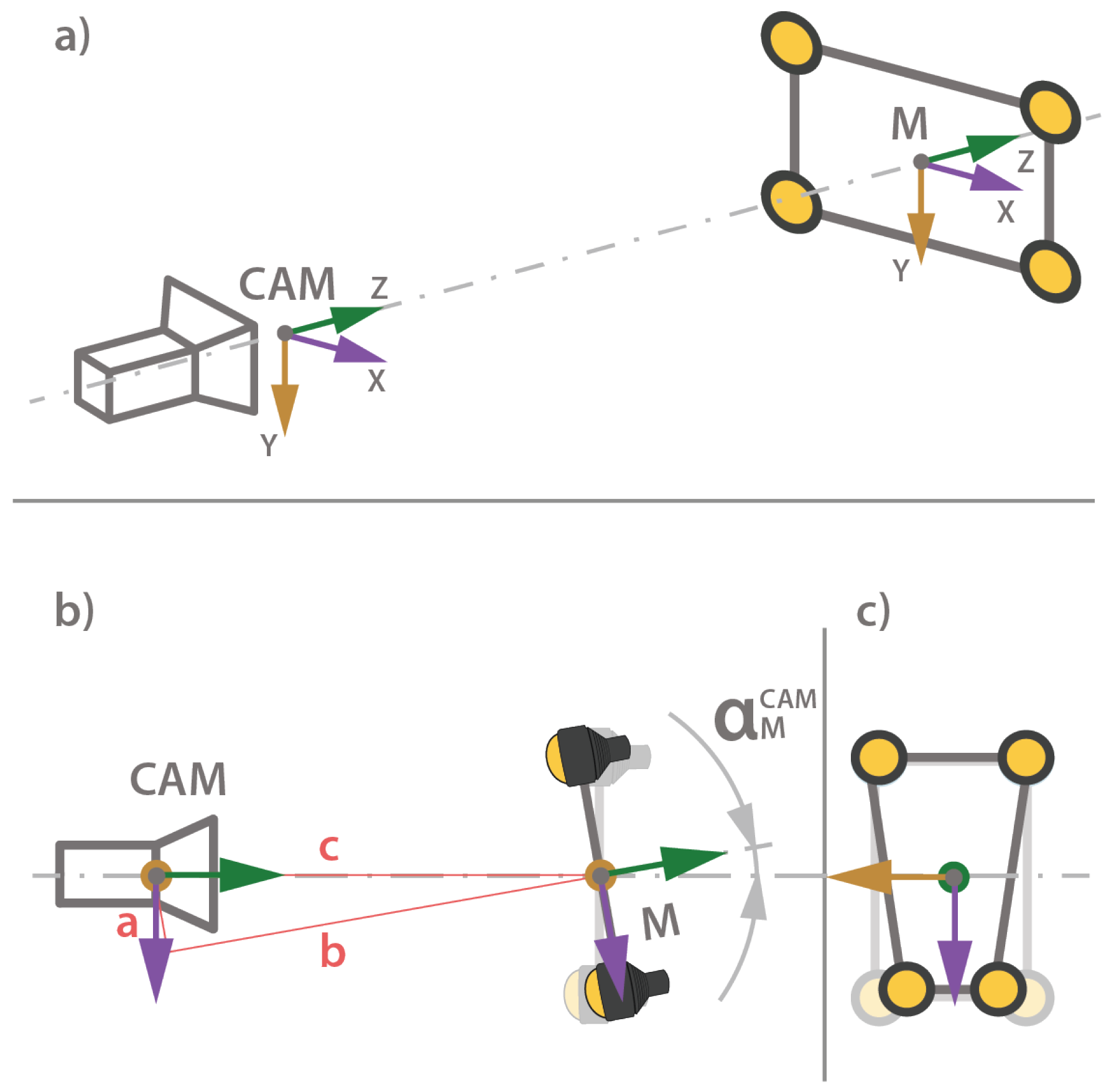

4.2. Pose Estimation

5. Results

5.1. Visual Pose Estimation—Static Test

5.2. Visual Pose Estimation—Dynamic Test

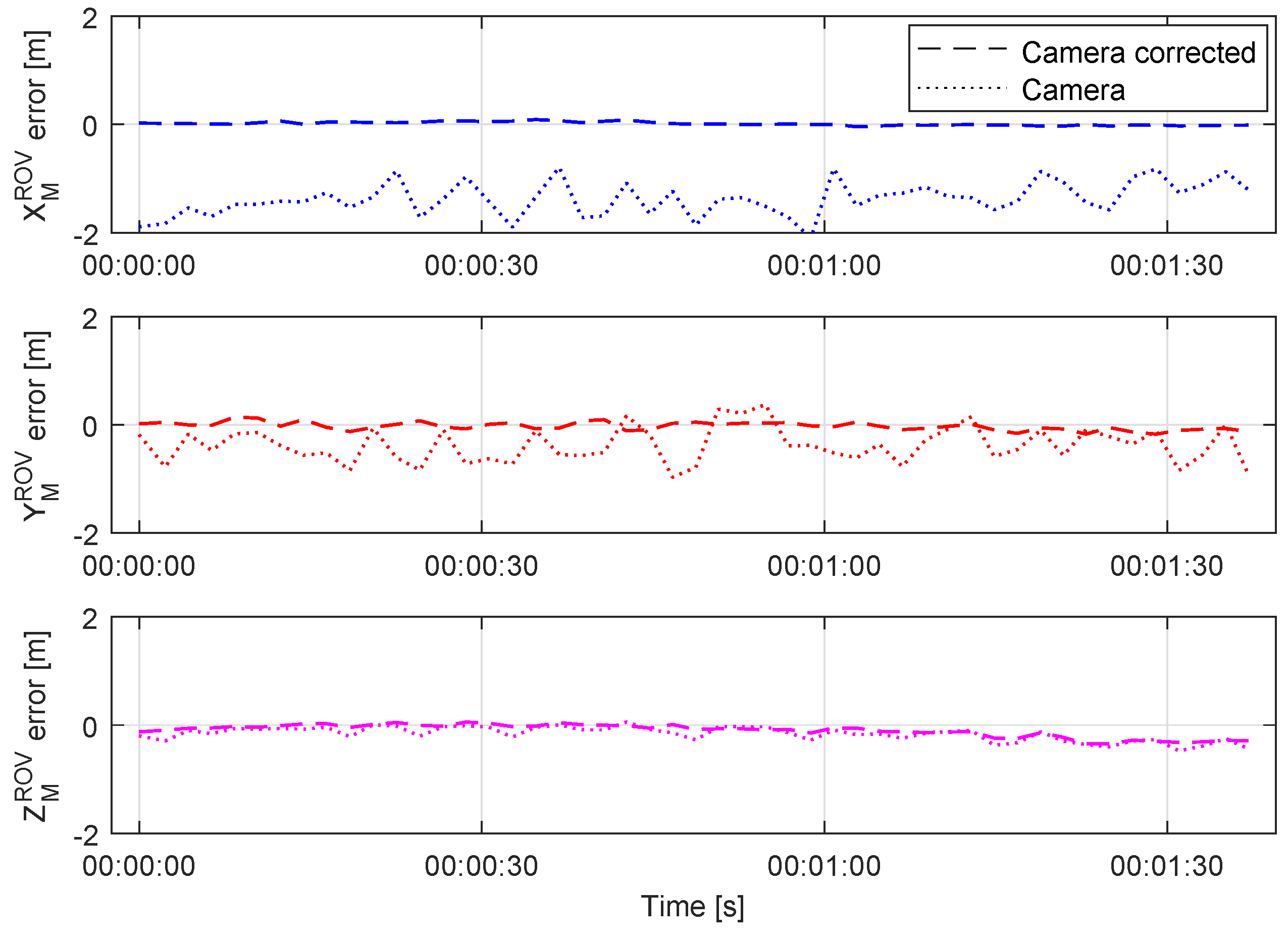

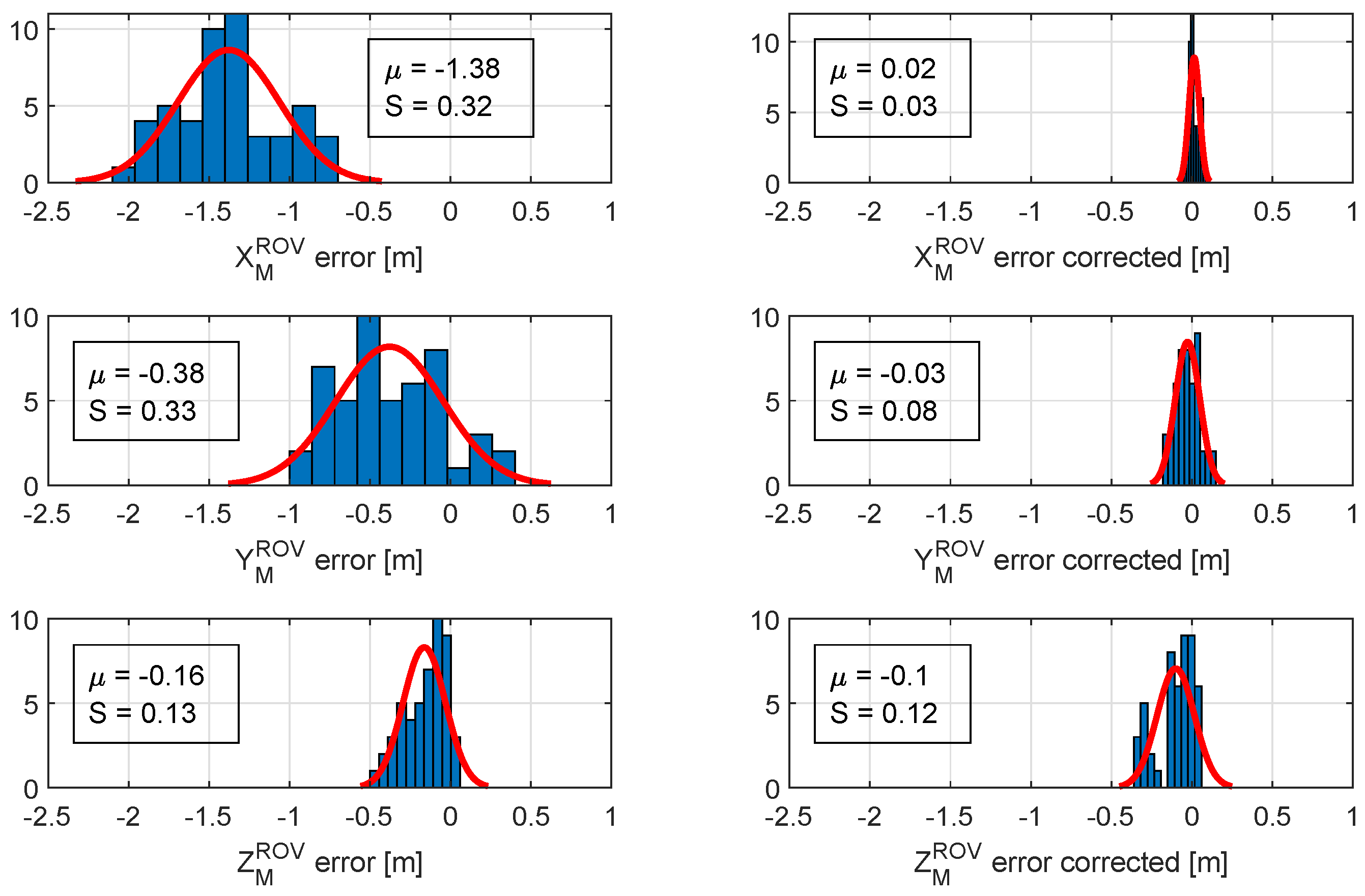

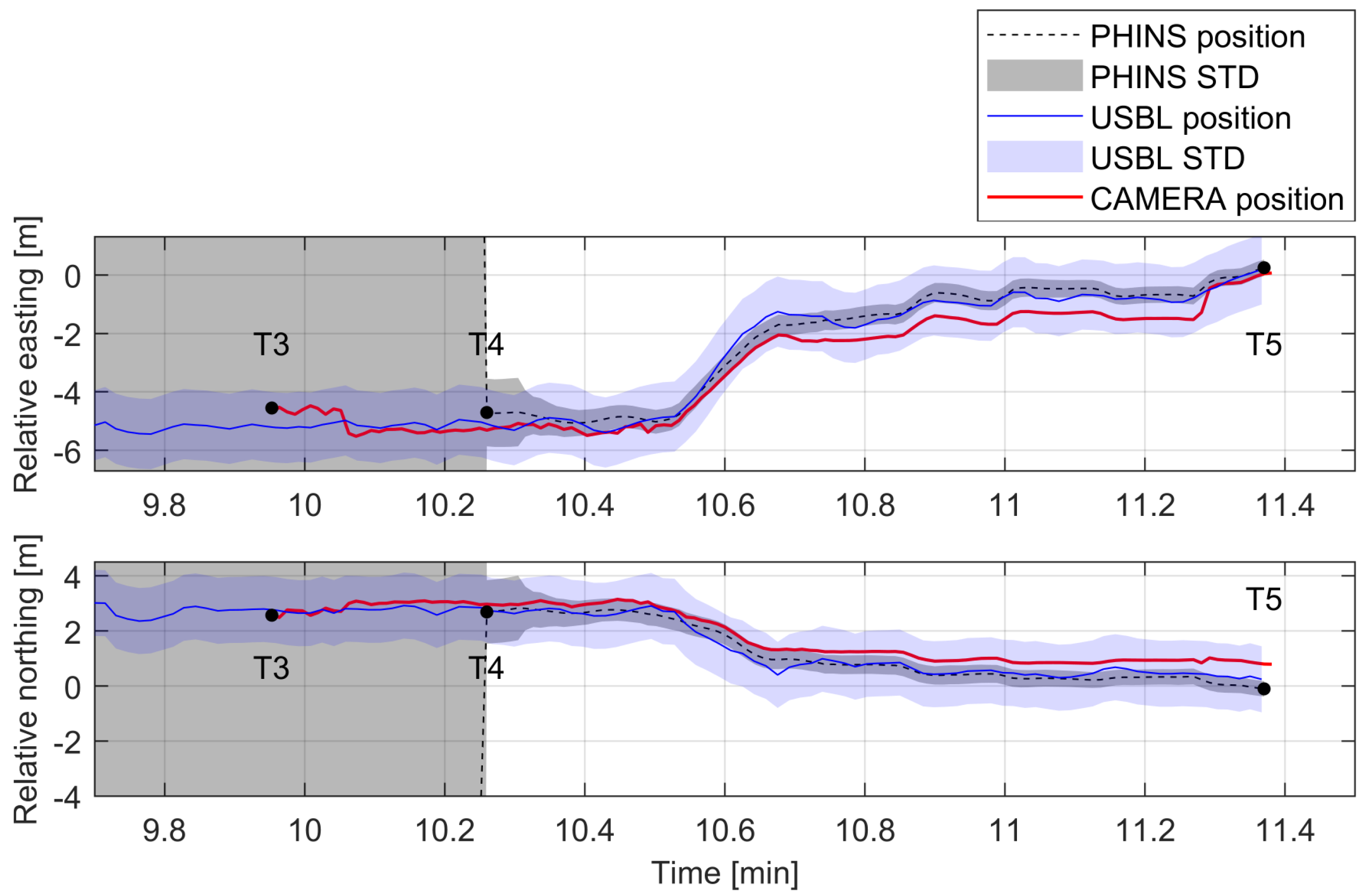

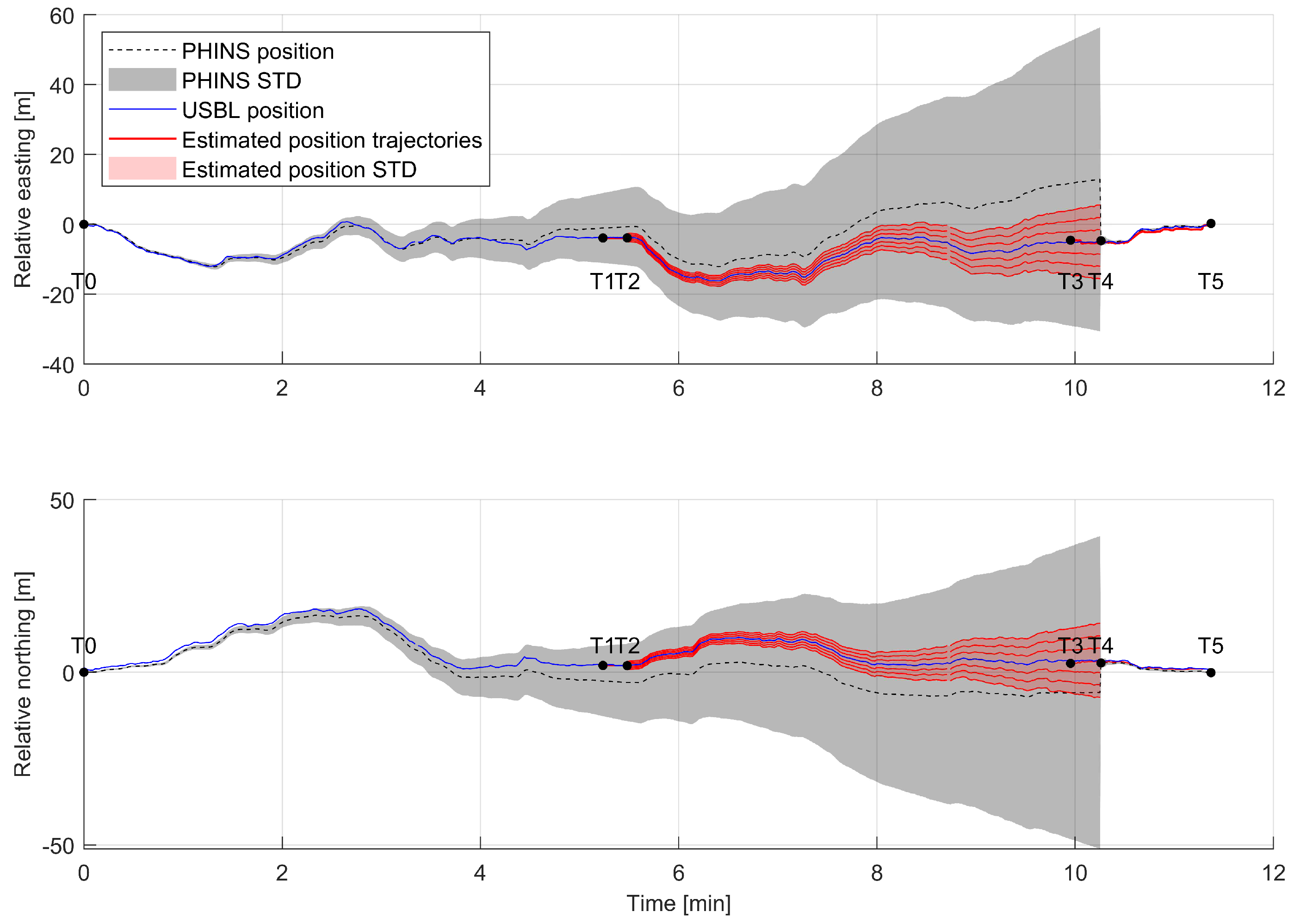

5.3. Visual Pose Estimation for INS Position Update

- The vehicle has no USBL onboard and is travelling at cruising speed with a navigation system based solely on the INS.

- During the trial, the vehicle's DVL is disabled so that the INS integration drift accumulates faster than it would over just 10 minutes of DVL-aided operation.

- The vehicle operates in a structured environment.

- Approximately halfway to the docking station, there is a known landmark that can be recognized by the onboard vision system, and the position and orientation of the landmark are known in the world frame.
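Under the assumptions listed above, a position fix follows from chaining two poses: the known landmark pose in the world frame and the landmark pose measured by the camera. The sketch below shows this for the planar case only (x, y, yaw), assumes the camera frame coincides with the vehicle frame, and uses hypothetical numbers throughout; it illustrates the geometry, not the processing chain used in the trials.

```python
import math


def compose(a, b):
    """Chain two planar poses (x, y, yaw): pose b, given in frame a, expressed in a's parent."""
    ax, ay, ayaw = a
    bx, by, byaw = b
    c, s = math.cos(ayaw), math.sin(ayaw)
    return (ax + c * bx - s * by, ay + s * bx + c * by, ayaw + byaw)


def invert(p):
    """Inverse of a planar pose: (R, t) -> (R^T, -R^T t)."""
    x, y, yaw = p
    c, s = math.cos(yaw), math.sin(yaw)
    return (-(c * x + s * y), s * x - c * y, -yaw)


# Hypothetical surveyed landmark pose in the world frame.
landmark_in_world = (100.0, 50.0, math.pi / 2)
# Hypothetical vision output: landmark 5 m straight ahead of the camera.
landmark_in_camera = (5.0, 0.0, 0.0)

# world <- landmark <- camera
vehicle_in_world = compose(landmark_in_world, invert(landmark_in_camera))

# Hypothetical drifted INS position; the difference is the correction to apply.
ins_estimate = (101.8, 44.1)
correction = (vehicle_in_world[0] - ins_estimate[0],
              vehicle_in_world[1] - ins_estimate[1])
print(vehicle_in_world, correction)
```

In a full implementation the same chain would use 3D rigid transforms and include the fixed camera-to-vehicle mounting offset, which is omitted here for brevity.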

6. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| AUV | Autonomous underwater vehicle |

| CMOS | Complementary metal-oxide-semiconductor |

| DVL | Doppler velocity log |

| EKF | Extended Kalman filter |

| FOG | Fibre optic gyroscope |

| GPS | Global positioning system |

| IMR | Inspection, maintenance and repair |

| IMU | Inertial measurement unit |

| INS | Inertial navigation system |

| LARS | Launch and recovery system |

| LBL | Long baseline |

| LCOE | Levelized cost of energy |

| LED | Light-emitting diode |

| MRE | Marine renewable energy |

| OPEX | Operational expenditure |

| ROV | Remotely operated vehicle |

| RV | Research vessel |

| SLAM | Simultaneous localization and mapping |

| STD | Standard deviation |

| TMS | Tether management system |

| USBL | Ultra-short baseline |

| Nortek 500 DVL | |

| Bottom velocity | |

| Single-ping STD @ 3 m/s | 5 mm/s |

| Long term accuracy | mm/s |

| Minimum altitude | 0.3 m |

| Maximum altitude | 200 m |

| Velocity resolution | 0.01 mm/s |

| Current profiling | |

| Minimum accuracy | of measured value / mm/s |

| Velocity resolution | 1 mm/s |

| Teledyne Ranger 2 USBL | |

| Operating range | >6000 m |

| System accuracy | 0.2% of slant range |

| Position update rate | 1 s |

| Okeanus DGPS | |

| Position accuracy GPS | <15 m |

| Position accuracy DGPS (WAAS) | <3 m |

| PPS time | ±1 µs |

| Position accuracy with USBL/LBL | Three times better than USBL/LBL accuracy |

| Position accuracy with DVL | 0.1% of travelled distance |

| Position accuracy with no aiding for 1 min / 2 min | 0.8 m / 3.2 m |

| Heading accuracy with GPS | 0.01 deg secant latitude |

| Heading accuracy with DVL/USBL/LBL | 0.02 deg secant latitude |

| Roll and Pitch accuracy | 0.01 deg |

| Heave accuracy | 5 cm or 5% (whichever is greater) |
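The unaided figures in the table above (0.8 m after 1 min, 3.2 m after 2 min) are consistent with position error growing with the square of time: doubling the time quadruples the error. The sketch below anchors a simple quadratic model at the 1 min figure and checks it against the 2 min figure; it also evaluates the DVL-aided figure of 0.1% of travelled distance. The quadratic model and the function names are assumptions made for illustration; real inertial error growth is more complex, so the model should not be extrapolated far beyond the quoted points.

```python
def pure_inertial_error(t_s, ref_error_m=0.8, ref_time_s=60.0):
    """Assumed quadratic drift model e(t) = k * t**2, anchored at one quoted point."""
    k = ref_error_m / ref_time_s ** 2  # m/s^2, from the 1 min figure
    return k * t_s ** 2


def dvl_aided_error(distance_m, fraction=0.001):
    """DVL-aided error as a fixed fraction (0.1%) of the distance travelled."""
    return fraction * distance_m


# The model anchored at 1 min reproduces the quoted 2 min figure (about 3.2 m).
error_2min = pure_inertial_error(120.0)

# Hypothetical 1000 m transit with DVL aiding: about 1 m of error.
error_dvl_1km = dvl_aided_error(1000.0)

print(error_2min, error_dvl_1km)
```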

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Trslić, P.; Weir, A.; Riordan, J.; Omerdic, E.; Toal, D.; Dooly, G. Vision-Based Localization System Suited to Resident Underwater Vehicles. Sensors 2020, 20, 529. https://doi.org/10.3390/s20020529
