An Investigation of Various Machine and Deep Learning Techniques Applied in Automatic Fear Level Detection and Acrophobia Virtual Therapy

Abstract

1. Introduction

2. VR-Based Phobia Therapy

2.1. Platforms

2.2. Academic Research Projects and Experiments

2.3. Mobile/Desktop Game Applications

3. Emotion Models and Physiological Data

3.1. Emotion Models

3.2. Physiological Data

4. Physiological Data in VR-Based Machine Learning Applications for Treating Phobias

4.1. Physiological Data in VR-Based Applications for Treating Phobias

4.2. Machine Learning for Emotion Recognition

4.3. Machine Learning for Identifying Anxiety Level in Phobia Therapy

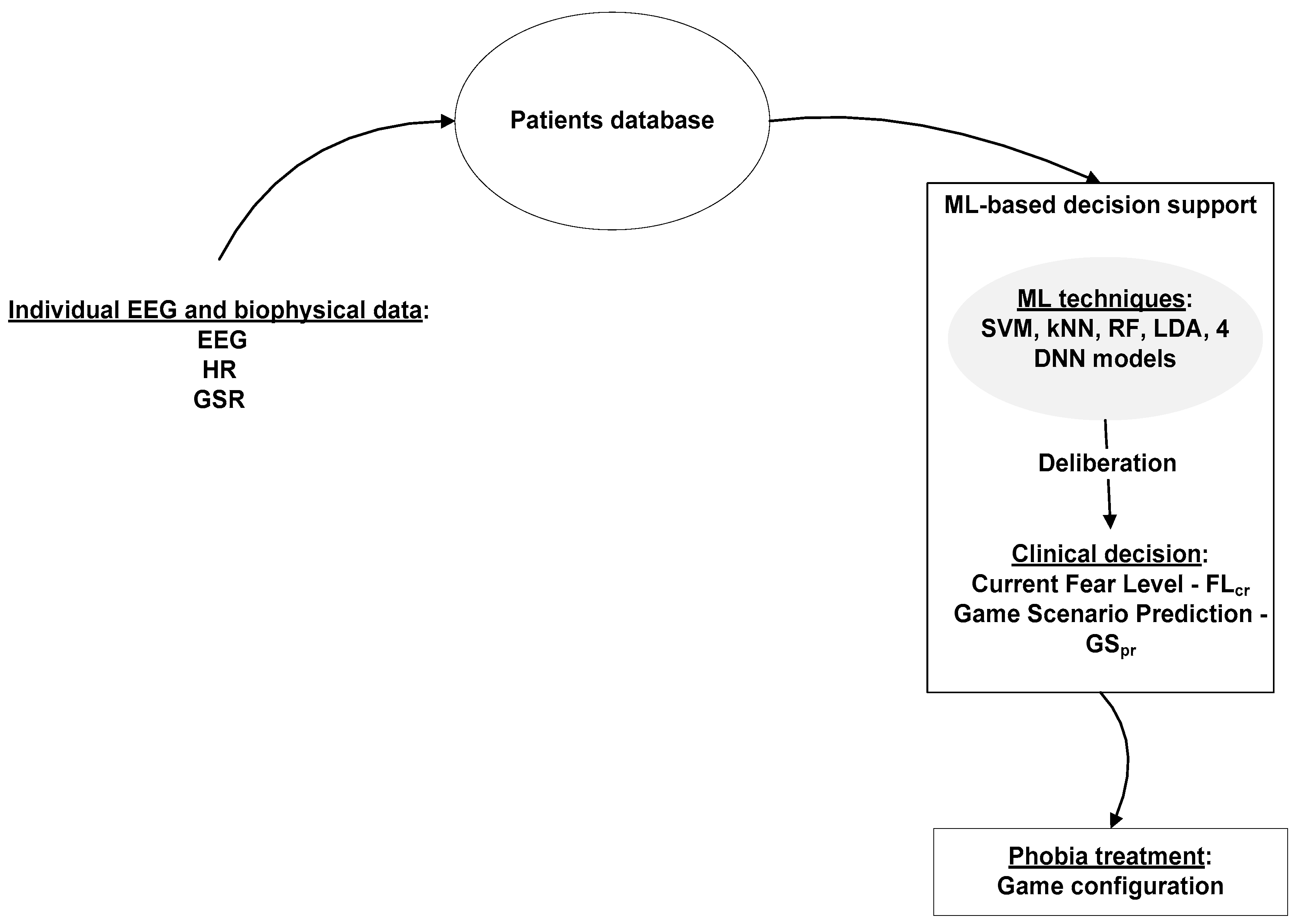

5. The Machine Learning and Deep Neural Networks Approach for the Acrophobia VRET Game

- 2-choice scale, with 2 possible values: 0 stands for relaxation and 1 stands for fear.

- 4-choice scale, with 4 possible values (0–3): 0 is complete relaxation, 1 is low fear, 2 is moderate fear and 3 is a high level of anxiety.

| 2-choice scale | 4-choice scale |
|---|---|
| if FLcr == 0 then FLt = 1; if FLcr == 1 then FLt = 0 | if FLcr == 0 or FLcr == 1 then FLt = FLcr + 1; if FLcr == 2 then FLt = FLcr; if FLcr == 3 then FLt = FLcr − 1 |
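The rules in the table above can be sketched as a small Python function. This is only an illustration of the tabulated rules: the function name `target_fear_level` and the `scale` parameter are assumptions made here, not identifiers from the authors' implementation.

```python
def target_fear_level(fl_cr: int, scale: int) -> int:
    """Return the target fear level FLt for a current fear level FLcr.

    `scale` is 2 for the 2-choice scale and 4 for the 4-choice scale
    (hypothetical parameter, introduced for this sketch only).
    """
    if scale == 2:
        if fl_cr == 0:
            return 1
        if fl_cr == 1:
            return 0
        raise ValueError("FLcr must be 0 or 1 on the 2-choice scale")
    if scale == 4:
        if fl_cr in (0, 1):
            return fl_cr + 1   # relaxed or low fear: raise the target by one
        if fl_cr == 2:
            return fl_cr       # moderate fear: keep the target unchanged
        if fl_cr == 3:
            return fl_cr - 1   # high anxiety: lower the target by one
        raise ValueError("FLcr must be in 0..3 on the 4-choice scale")
    raise ValueError("scale must be 2 or 4")
```

On the 4-choice scale every current level is steered towards level 2 (moderate fear), while on the 2-choice scale the target is simply the opposite of the current level.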

6. Experimental Methodology

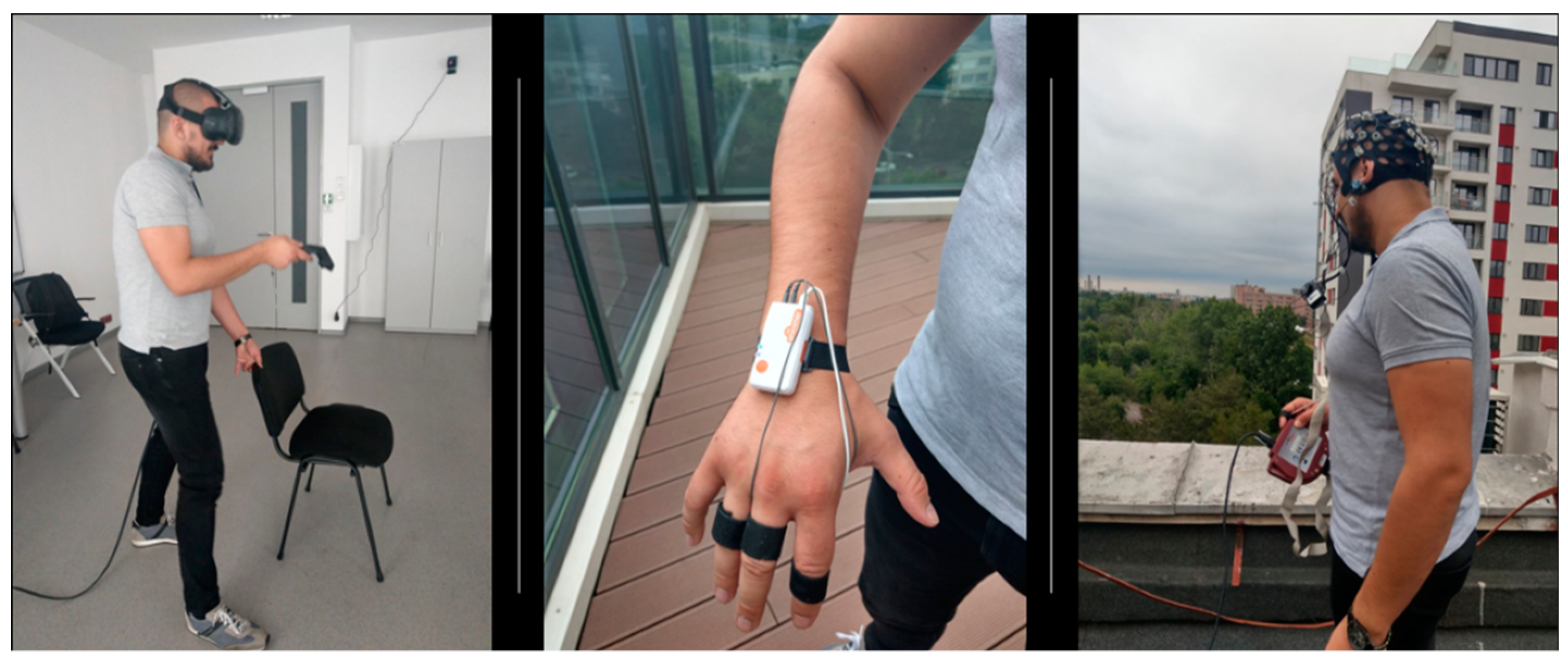

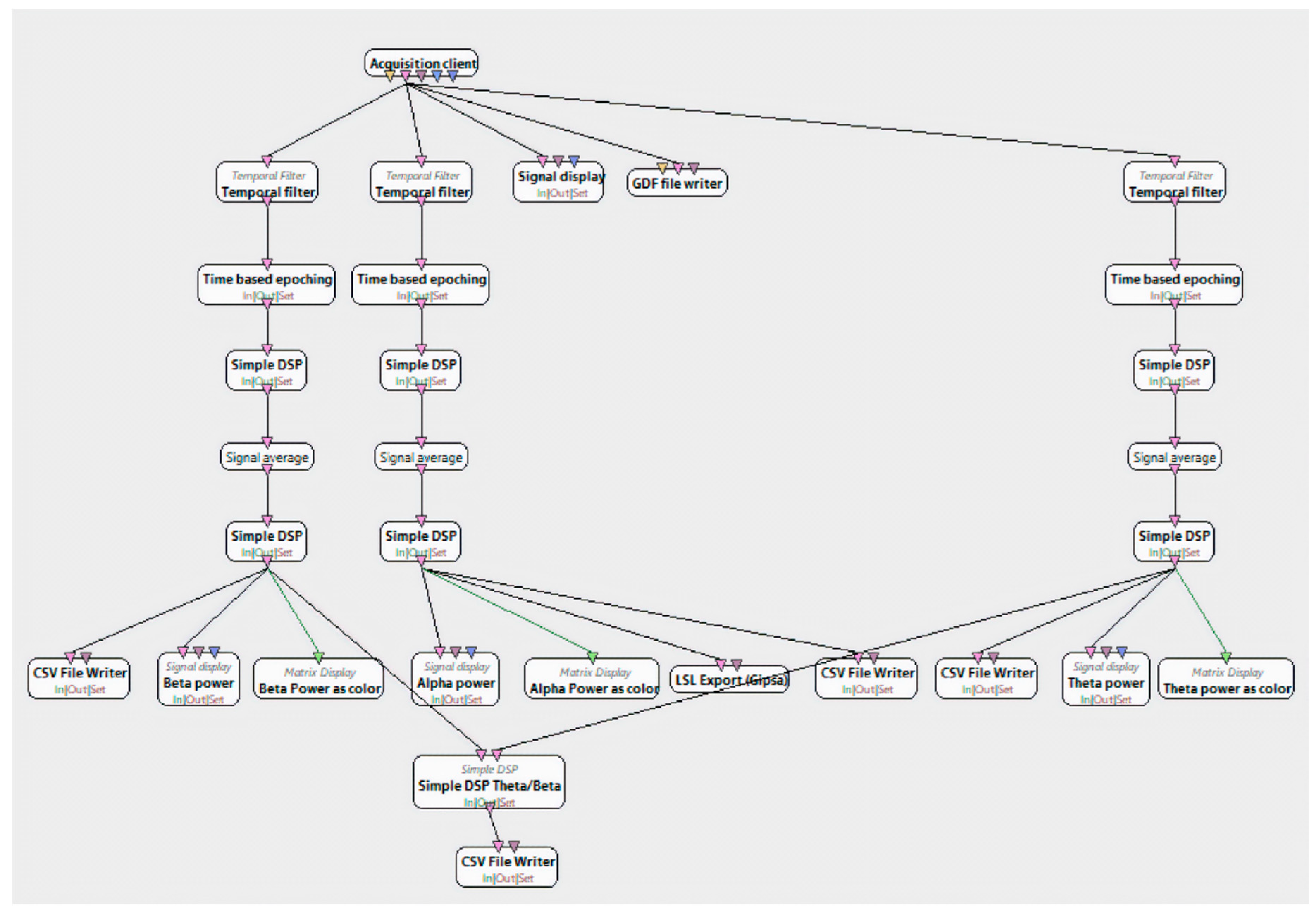

6.1. Experiments and Dataset Construction

- 0 (relaxation): rating 0 in the 11-choice scale

- 1 (low fear): ratings 1–3 in the 11-choice scale

- 2 (medium fear): ratings 4–7 in the 11-choice scale

- 3 (high fear): ratings 8–10 in the 11-choice scale

- 0 (relaxation): ratings 0–1 in the 4-choice scale

- 1 (fear): ratings 2–3 in the 4-choice scale
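The two groupings listed above (11-choice to 4-choice, then 4-choice to 2-choice) can be written as a pair of conversion functions. The following is a minimal sketch; the function names are hypothetical and chosen only for this example.

```python
def to_4_choice(rating_11: int) -> int:
    """Map an 11-choice rating (0..10) to the 4-choice scale (0..3)."""
    if rating_11 == 0:
        return 0  # relaxation
    if 1 <= rating_11 <= 3:
        return 1  # low fear
    if 4 <= rating_11 <= 7:
        return 2  # medium fear
    if 8 <= rating_11 <= 10:
        return 3  # high fear
    raise ValueError("11-choice rating must be in 0..10")


def to_2_choice(rating_4: int) -> int:
    """Map a 4-choice rating (0..3) to the 2-choice scale (0 or 1)."""
    if rating_4 in (0, 1):
        return 0  # relaxation
    if rating_4 in (2, 3):
        return 1  # fear
    raise ValueError("4-choice rating must be in 0..3")


# Example: convert every possible 11-choice rating to both coarser scales.
for r in range(11):
    r4 = to_4_choice(r)
    print(r, r4, to_2_choice(r4))
```

Chaining the two functions means that 11-choice ratings 0–3 end up as relaxation (0) and ratings 4–10 as fear (1) on the 2-choice scale.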

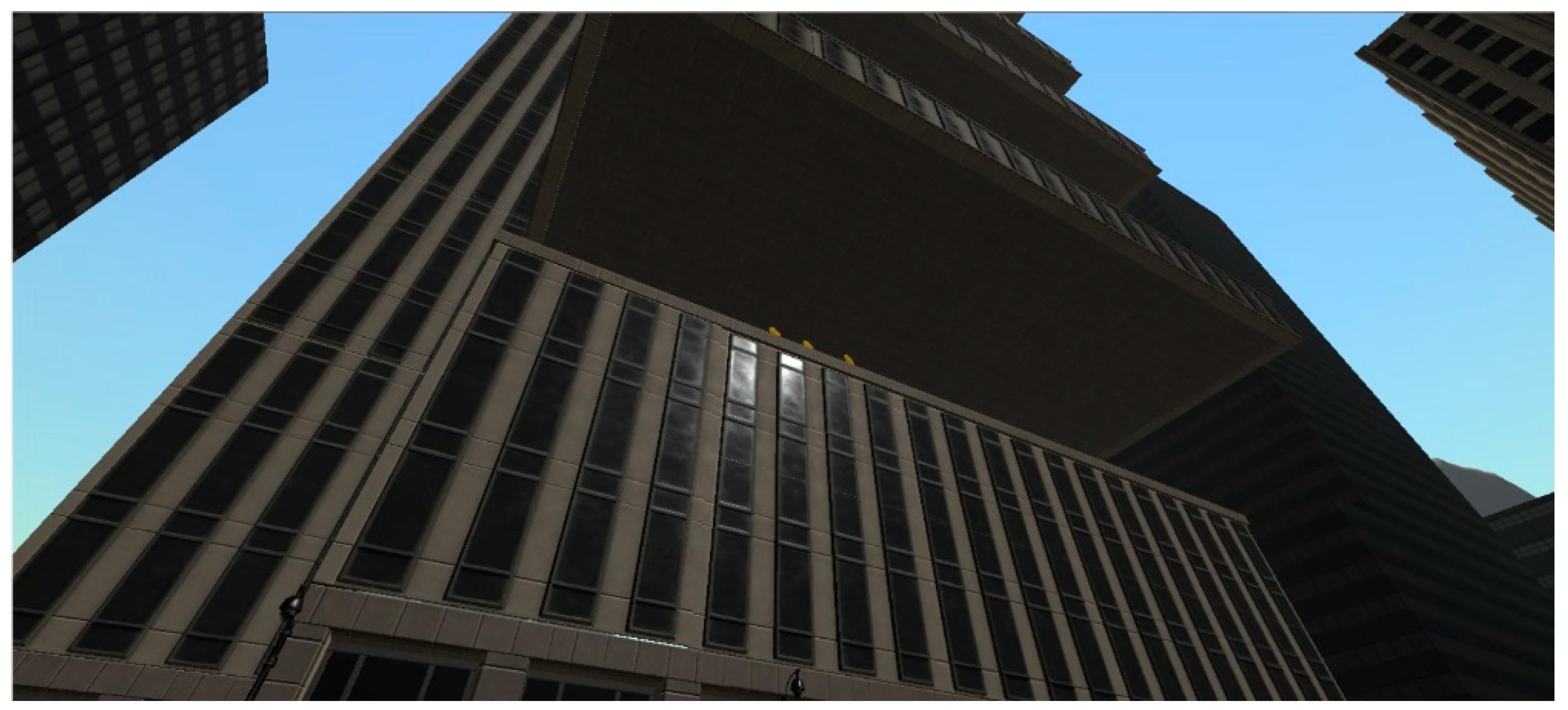

6.2. The Acrophobia Game

7. Results

8. Discussion

9. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Depression and Other Common Mental Disorders: Global Health Estimates; World Health Organization: Geneva, Switzerland, 2017.

- Olesen, J. What Is Fear? Types of Phobias and Their Meanings. 2015. Available online: https://www.fearof.net/what-is-fear-types-of-phobias-and-their-meanings/ (accessed on 20 October 2019).

- Olesen, J. Phobia Statistics and Surprising Facts about Our Biggest Fears. 2015. Available online: http://www.fearof.net/phobia-statistics-and-surprising-facts-about-our-biggest-fears/ (accessed on 20 October 2019).

- Uncover the Facts Behind Our Most Common Phobias [Infographic]. 2017. Available online: https://blog.nationwide.com/common-phobias-statistics/ (accessed on 20 October 2019).

- Cognitive Behavioral Therapy. 2018. Available online: https://www.psychologytoday.com/us/basics/cognitive-behavioral-therapy (accessed on 20 October 2019).

- Lamson, R.J. Virtual Reality Immersion Therapy for Treating Psychological, Psychiatric, Medical, Educational and Self-Help Problems; U.S. Patent Office: San Rafael, CA, USA, 2002.

- Fadden, H. Acrophobia (Definition, Causes, Symptoms and Treatment). 2018. Available online: https://www.thehealthyapron.com/acrophobia-definition-causes-symptoms-treatment.html (accessed on 20 October 2019).

- Opris, D.; Pintea, S.; Garcia-Palacios, A.; Botella, C.; Szamoskozi, S.; David, D. Virtual Reality Exposure Therapy in Anxiety Disorders: A Quantitative Meta-Analysis. Depress. Anxiety 2012, 29, 85–93.

- Picard, R.W. Affective Computing; MIT Press: Cambridge, MA, USA, 1997.

- Calvo, R.; D’Mello, S.; Gratch, J.; Kappas, A. Introduction to Affective Computing. In The Oxford Handbook of Affective Computing; Calvo, R., D’Mello, S., Gratch, J., Kappas, A., Eds.; Oxford University Press: Oxford, UK, 2015; pp. 1–8.

- Chanel, G.; Rebetez, C.; Bétrancourt, M.; Pun, T. Emotion Assessment from Physiological Signals for Adaptation of Game Difficulty. IEEE Trans. Syst. Man Cybern. A Syst. Hum. 2011, 41, 1052–1063.

- Liu, C.; Agrawal, P.; Sarkar, N.; Chen, S. Dynamic Difficulty Adjustment in Computer Games through Real-Time Anxiety-Based Affective Feedback. Int. J. Hum.-Comput. Interact. 2009, 25, 506–529.

- Bickmore, T.W. Relational Agents in Health Applications: Leveraging Affective Computing to Promote Healing and Wellness. In The Oxford Handbook of Affective Computing; Calvo, R., D’Mello, S., Gratch, J., Kappas, A., Eds.; Oxford University Press: Oxford, UK, 2015; pp. 537–558.

- Messinger, D.S.; Duvivier, L.L.; Warren, Z.E.; Mahoor, M.; Baker, J.; Warlaumont, A.S.; Ruvolo, P. Affective Computing, Emotional Development, and Autism. In The Oxford Handbook of Affective Computing; Calvo, R., D’Mello, S., Gratch, J., Kappas, A., Eds.; Oxford University Press: Oxford, UK, 2015; pp. 516–536.

- North, M.M.; North, S.M.; Coble, J.R. Effectiveness of Virtual Environment Desensitization in the Treatment of Agoraphobia. Int. J. Virtual Real. 1995, 1, 25–34.

- Coelho, C.M.; Waters, A.M.; Hine, T.J.; Wallis, G. The use of virtual reality in acrophobia research and treatment. J. Anxiety Disord. 2009, 23, 563–574.

- Bălan, O.; Moise, G.; Petrescu, L.; Moldoveanu, A.; Leordeanu, M.; Moldoveanu, F. Towards a Human-Centered Approach for VRET Systems: Case study for acrophobia. In Proceedings of the 28th International Conference on Information Systems Development, Toulon, France, 28–30 August 2019.

- Garcia-Palacios, A.; Hoffman, H.G.; See, S.K.; Tsai, A.; Botella, C. Redefining Therapeutic Success with Virtual Reality Exposure Therapy. CyberPsychol. Behav. 2001, 4, 341–348.

- C2Phobia. Available online: https://www.c2.care/en/c2phobia-treating-phobias-in-virtual-reality/ (accessed on 20 October 2019).

- PSIOUS. Available online: https://www.psious.com/ (accessed on 20 October 2019).

- Stim Response Virtual Reality. Available online: https://www.biopac.com/application/virtual-reality/ (accessed on 20 October 2019).

- Virtual Reality Medical Center. Available online: https://vrphobia.com/ (accessed on 20 October 2019).

- Virtually Better. Available online: www.virtuallybetter.com (accessed on 20 October 2019).

- VR Treatment Program at Duke University School of Medicine. Available online: https://psychiatry.duke.edu/virtual-reality-therapy-phobias (accessed on 20 October 2019).

- Bravemind. Available online: http://medvr.ict.usc.edu/projects/bravemind/ (accessed on 20 October 2019).

- Limbix. Available online: https://www.limbix.com/ (accessed on 20 October 2019).

- Phobos. Available online: https://samsungvr.com/view/Uu9ME9YXR_B (accessed on 20 October 2019).

- Activity. Available online: https://www.unitylab.de/ (accessed on 20 October 2019).

- Oculus Rift. Available online: https://www.oculus.com/?locale=en_US (accessed on 20 October 2019).

- Microsoft Kinect. Available online: https://developer.microsoft.com/en-us/windows/kinect (accessed on 20 October 2019).

- Coelho, C.M.; Silva, C.F.; Santos, J.A.; Tichon, J.; Wallis, G. Contrasting the Effectiveness and Efficiency of Virtual Reality and Real Environments in the Treatment of Acrophobia. Psychol. J. 2008, 6, 203–216.

- Emmelkamp, P.M.; Krijn, M.; Hulsbosch, A.M.; de Vries, S.; Schuemie, M.J.; van der Mast, C.A. Virtual Reality Treatment Versus Exposure in Vivo: A Comparative Evaluation in Acrophobia. Behav. Res. Ther. 2002, 40, 509–516.

- HTC Vive. Available online: https://www.vive.com/eu/ (accessed on 20 October 2019).

- The Climb. Available online: http://www.theclimbgame.com/ (accessed on 20 October 2019).

- Richie’s Plank Experience. Available online: https://www.viveport.com/apps/9347a360-c6ea-4e35-aaf1-9fab4f41cb79/Richie’s_Plank_Experience/ (accessed on 20 October 2019).

- Arachnophobia. Available online: https://store.steampowered.com/app/485270/Arachnophobia/ (accessed on 20 October 2019).

- Limelight. Available online: https://store.steampowered.com/app/544880/Limelight_VR/ (accessed on 20 October 2019).

- Samsung Fearless Cityscapes. Available online: https://www.oculus.com/experiences/gear-vr/821606624632569/ (accessed on 20 October 2019).

- Samsung Fearless Landscapes. Available online: https://www.oculus.com/experiences/gear-vr/1290835750988761/ (accessed on 20 October 2019).

- Samsung Gear VR. Available online: https://www.samsung.com/global/galaxy/gear-vr/ (accessed on 20 October 2019).

- Samsung Gear S2. Available online: https://www.samsung.com/global/galaxy/gear-s2/ (accessed on 20 October 2019).

- Emotion Definition. Available online: https://en.oxforddictionaries.com/definition/emotion (accessed on 20 October 2019).

- Koelstra, S.; Muehl, C.; Soleymani, M.; Lee, J.S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A Database for Emotion Analysis using Physiological Signals. IEEE Trans. Affect. Comput. 2012, 3, 18–31.

- Solomon, C.R. Emotion. Available online: https://www.britannica.com/science/emotion#ref283140 (accessed on 20 October 2019).

- Ekman, P.; Sorenson, E.R.; Friesen, W.V. Pan-Cultural Elements in Facial Displays of Emotion. Science 1969, 164, 86–88.

- Ekman, P.; Cordaro, D. What is Meant by Calling Emotions Basic. Emot. Rev. 2011, 3, 364–370.

- Plutchik, R. Emotion: Theory, Research, and Experience. In Theories of Emotion: 1; New York Academic: New York, NY, USA, 1980; Volume 1.

- Sacharin, V.; Schlegel, K.; Scherer, K.R. Geneva Emotion Wheel Rating Study (Report); University of Geneva, Swiss Center for Affective Sciences: Geneva, Switzerland, 2012.

- Russell, J.A. A Circumplex Model of Affect. J. Personal. Soc. Psychol. 1980, 39, 1161–1178.

- Bontchev, B. Adaptation in Affective Video Games: A Literature Review. Cybern. Inf. Technol. 2016, 16, 3–34.

- Steimer, T. The Biology of Fear and Anxiety-Related Behaviors. Dialogues Clin. Neurosci. 2002, 4, 231–249.

- Golumbic-Zion, E. What Is EEG? Available online: https://www.mada.org.il/brain/articles/faces-e.pdf (accessed on 20 October 2019).

- Solomon, C.R. The Neurobiology of Emotion. Available online: https://www.britannica.com/science/emotion#ref283143 (accessed on 20 October 2019).

- Davidson, R.J. Cerebral Asymmetry and Emotion: Conceptual and Methodological Conundrums. Cognit. Emot. 1993, 7, 115–138.

- Davidson, R.J. Affective Style and Affective Disorders: Perspectives from Affective Neuroscience. Cognit. Emot. 1998, 12, 307–330.

- Coan, J.A.; Allen, J.J.B.; Harmon-Jones, E. Voluntary Facial Expression and Hemispheric Asymmetry over the Frontal Cortex. Psychophysiology 2001, 38, 912–925.

- Coan, J.A.; Allen, J.J.B. Frontal EEG Asymmetry and the Behavioral Activation and Inhibition Systems. Psychophysiology 2003, 40, 106.

- Park, K.S.; Choi, H.; Lee, K.J.; Lee, J.Y.; An, K.O.; Kim, E.J. Emotion Recognition Based on the Asymmetric Left and Right Activation. Int. J. Med. Med. Sci. 2011, 3, 201–209.

- Wise, V.; McFarlane, A.C.; Clark, C.R.; Battersby, M. An Integrative Assessment of Brain and Body Function ‘at rest’ in Panic Disorder: A Combined Quantitative EEG/Autonomic Function Study. Int. J. Psychophysiol. 2011, 79, 155–165.

- Knott, V.; Lapierre, Y.D.; Fraser, G.; Johnson, N. Auditory Evoked Potentials in Panic Disorder. J. Psychiatry Neurosci. 1991, 16, 215–220.

- Engelbregt, H.J.; Keeser, D.; Promes, V.H.; Verhagen-Schouten, S.; Deijen, J.B. In-Vivo EEG Changes During a Panic Attack in a Patient with Specific Phobia. J. Med. Cases 2012, 3, 34–38.

- Gordeev, S.A. Clinical and Psychophysiological Study of Patients with Panic Attacks with or without Agoraphobic Disorders. Zh. Nevrol. Psikhiatr. Im. S. S. Korsakova. 2007, 107, 54–58.

- Knyazev, G.G.; Savostyanov, A.N.; Levin, E.A. Alpha Oscillations as a Correlate of Trait Anxiety. Int. J. Psychophysiol. 2004, 53, 147–160.

- Suetsugi, M.; Mizuki, Y.; Ushijima, I.; Kobayashi, T.; Tsuchiya, K.; Aoki, T.; Watanabe, Y. Appearance of Frontal Midline Theta Activity in Patients with Generalized Anxiety Disorder. Neuropsychobiology 2000, 41, 108–112.

- Schutter, D.J.; Van Honk, J. Electrophysiological Ratio Markers for the Balance between Reward and Punishment. Cognitive Brain Res. 2005, 24, 685–690.

- Putman, P.; Van, P.J.; Maimari, I.; Vander, W.S. EEG Theta/Beta Ratio in Relation to Fear-Modulated Response-Inhibition, Attentional Control, and Affective Traits. Biol. Psychol. 2010, 83, 73–78.

- Choi, J.S.; Bang, J.W.; Heo, H.; Park, K.R. Evaluation of Fear Using Nonintrusive Measurement of Multimodal Sensors. Sensors 2015, 15, 17507–17533.

- Cheemalapati, S.; Adithya, P.C.; Valle, M.D.; Gubanov, M.; Pyayt, A. Real Time Fear Detection Using Wearable Single Channel Electroencephalogram. Sensor Netw. Data Commun. 2016, 5, 140.

- Petrantonakis, P.G.; Hadjileontiadis, L.J. EEG-based Emotion Recognition Using Hybrid Filtering and Higher Order Crossings. In Proceedings of the 2009 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops, Amsterdam, The Netherlands, 10–12 September 2009; pp. 1–6.

- Carvalho, M.R.; Velasques, B.B.; Cagy, M.; Marques, J.B.; Teixeira, S.; Nardi, A.E.; Piedade, R.; Ribeiro, P. Electroencephalographic Findings in Panic Disorder. Trends Psychiatry Psychother. 2013, 35, 238–251.

- Rainville, P.; Bechara, A.; Naqvi, N.; Damasio, A.R. Basic Emotions are Associated with Distinct Patterns of Cardiorespiratory Activity. Int. J. Psychophysiol. 2006, 61, 5–18.

- Gouizi, K.; Reguig, F.B.; Maaoui, C. Analysis Physiological Signals for Emotion Recognition. In Proceedings of the 2011 7th International Workshop on Systems, Signal Processing and their Applications (WOSSPA), Tipaza, Algeria, 9–11 May 2011; pp. 147–150.

- Muhl, C.; Heylen, D. Cross-Modal Elicitation of Affective Experience. In Proceedings of the 2009 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops, Amsterdam, The Netherlands, 10–12 September 2009; pp. 1–2.

- Agrafioti, F.; Hatzinakos, D.; Anderson, A. ECG Pattern Analysis for Emotion Detection. IEEE Trans. Affect. Comput. 2012, 3, 102–115.

- Healey, J.A. Affect Detection in the Real World: Recording and Processing Physiological Signals. In Proceedings of the IEEE 3rd International Conference on Affective Computing and Intelligent Interaction, Amsterdam, The Netherlands, 10–12 September 2009; IEEE Press: Amsterdam, The Netherlands, 2009; Volume 1, pp. 729–734.

- Fleureau, J.; Guillotel, P.; Huynh-Thu, Q. Physiological-based Affect Event Detector for Entertainment Video Applications. IEEE Trans. Affect. Comput. 2012, 3, 379–385.

- AlZoubi, O.; D’Mello, S.K.; Calvo, R.A. Detecting Naturalistic Expressions of Nonbasic Affect using Physiological Signals. IEEE Trans. Affect. Comput. 2012, 3, 298–310.

- Westerink, J.; Ouwerkerk, M.; de Vries, G.-J.; de Waele, S.; van den Eerenbeemd, J.; van Boven, M. Emotion Measurement Platform for Daily Life Situations. In Proceedings of the International Conference (ACII 2009), Amsterdam, The Netherlands, 10–12 September 2009.

- Wiederhold, B.K.; Jang, D.P.; Kim, S.I.; Wiederhold, M.D. Physiological Monitoring as an Objective Tool in Virtual Reality Therapy. Cyberpsychol. Behav. 2002, 5, 77–82.

- Peterson, S.M.; Furuichi, E.; Ferris, D.P. Effects of virtual reality high heights exposure during beam-walking on physiological stress and cognitive loading. PLoS ONE 2018, 13, 1–17.

- Kritikos, J.; Tzannetos, G.; Zoitaki, C.; Poulopoulou, S.; Koutsouris, D. Anxiety detection from Electrodermal Activity Sensor with movement & interaction during Virtual Reality Simulation. In Proceedings of the 2019 9th International IEEE/EMBS Conference on Neural Engineering (NER), San Francisco, CA, USA, 20–23 March 2019.

- Lee, M.; Bruder, G.; Welch, G. The Virtual Pole: Exploring Human Responses to Fear of Heights in Immersive Virtual Environments. J. Virtual Real. Broadcast. 2017, 6, 1–14.

- Wang, X.W.; Nie, D.; Lu, B.L. Emotional State Classification from EEG Data Using Machine Learning Approach. Neurocomputing 2014, 129, 94–106.

- Picard, R.W. Affective Computing. M.I.T. Media Laboratory Perceptual Computing Section Technical Report No. 321. 1995. Available online: http://vismod.media.mit.edu/tech-reports/TR-321.pdf (accessed on 20 October 2019).

- Nasoz, F.; Alvarez, K.; Lisetti, C.L.; Finkelstein, N. Emotion Recognition from Physiological Signals Using Wireless Sensors for Presence Technologies. Cognit. Technol. Work 2004, 6, 4–14.

- Jirayucharoensak, S.; Pan-Ngum, S.; Israsena, P. EEG-Based Emotion Recognition Using Deep Learning Network with Principal Component Based Covariate Shift Adaptation. Hindawi Publishing Corporation. Sci. World J. 2014, 2014, 627892.

- Fazi, M.B. Can a Machine Think (Anything New)? Automation Beyond Simulation. AI Soc. 2019, 34, 813–824.

- Jerritta, S.; Murugappan, M.; Nagarajan, R.; Wan, K. Physiological Signals Based Human Emotion Recognition: A Review. In Proceedings of the IEEE 7th International Colloquium on Signal Processing and its Applications, Penang, Malaysia, 4–6 March 2011.

- Kim, J.; André, E. Emotion Recognition Based on Physiological Changes in Music Listening. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 2067–2083.

- Wan-Hui, W.; Yu-Hui, Q.; Guang-Yuan, L. Electrocardiography Recording, Feature Extraction and Classification for Emotion Recognition. In Proceedings of the WRI World Congress on Computer Science and Information Engineering, Los Angeles, CA, USA, 31 March–2 April 2009.

- Caridakis, G.; Castellano, G.; Kessous, L.; Raouzaiou, A.; Malatesta, L.; Asteriadis, S.; Karpouzis, K. Multimodal Emotion Recognition from Expressive Faces, Body Gestures and Speech. In Artificial Intelligence and Innovations 2007: From Theory to Applications; Boukis, C., Pnevmatikakis, L., Polymenakos, L., Eds.; Springer: Boston, MA, USA, 2007; Volume 247, pp. 375–388.

- Ng, H.W.; Nguyen, V.D.; Vonikakis, V.; Winkler, S. Deep Learning for Emotion Recognition on Small Datasets using Transfer Learning. In Proceedings of the 2015 ACM on International Conference on Multimodal Interaction (ICMI ’15), New York, NY, USA, 9–13 November 2015; pp. 443–449.

- Teo, J.; Chew, L.H.; Chia, J.T.; Mountstephens, J. Classification of Affective States via EEG and Deep Learning. Int. J. Adv. Comput. Sci. Appl. 2018, 9.

- Liu, C.; Rani, P.; Sarkar, N. An Empirical Study of Machine Learning Techniques for Affect Recognition in Human-Robot Interaction. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005; pp. 1–8.

- Bălan, O.; Moise, G.; Petrescu, L.; Moldoveanu, A.; Leordeanu, M.; Moldoveanu, F. Emotion Classification Based on Biophysical Signals and Machine Learning Techniques. Symmetry 2020, 12, 21.

- Ray, L.C.; Fukuoka, Y. Machine Learning and Therapeutic Strategies in VR. In Proceedings of the 9th International Conference on Digital and Interactive Arts (Artech 2019), Braga, Portugal, 23–25 October 2019.

- Hu, F.; Wang, H.; Chen, J.; Gong, J. Research on the characteristics of acrophobia in virtual altitude environment. In Proceedings of the 2018 IEEE International Conference on Intelligence and Safety for Robotics, Shenyang, China, 24–27 August 2018.

- Šalkevicius, J.; Damaševičius, R.; Maskeliunas, R.; Laukienė, I. Anxiety Level Recognition for Virtual Reality Therapy System Using Physiological Signals. Electronics 2019, 8, 1039.

- Bălan, O.; Moise, G.; Moldoveanu, A.; Leordeanu, M.; Moldoveanu, F. Automatic Adaptation of Exposure Intensity in VR Acrophobia Therapy, Based on Deep Neural Networks. In Proceedings of the Twenty-Seventh European Conference on Information Systems (ECIS2019), Stockholm-Uppsala, Sweden, 8–14 June 2019.

- Bălan, O.; Moise, G.; Moldoveanu, A.; Moldoveanu, F.; Leordeanu, M. Does Automatic Game Difficulty Level Adjustment Improve Acrophobia Therapy? Differences from Baseline. In Proceedings of the VRST ’18, Tokyo, Japan, 28 November–1 December 2018.

- Huppert, D.; Grill, E.; Brandt, T. A New Questionnaire for Estimating the Severity of Visual Height Intolerance and Acrophobia by a Metric Interval Scale. Front. Neurol. 2017, 8, 211.

- Acticap Xpress Bundle. Available online: https://www.brainproducts.com/productdetails.php?id=66 (accessed on 20 October 2019).

- Shimmers Multisensory. Available online: http://www.shimmersensing.com/ (accessed on 20 October 2019).

- Shimmer3 GSR+ Unit. Available online: https://www.shimmersensing.com/products/shimmer3-wireless-gsr-sensor (accessed on 20 October 2019).

- Scikit Learn Python Library. Available online: http://scikit-learn.org (accessed on 20 November 2018).

- Tensor Flow Python Framework. Available online: https://www.tensorflow.org/ (accessed on 20 October 2019).

- Keras Library. Available online: https://keras.io/ (accessed on 20 October 2019).

- Unity Game Engine. Available online: https://unity.com/ (accessed on 20 October 2019).

- Open Vibe. Available online: http://openvibe.inria.fr/ (accessed on 20 October 2019).

- Lab Stream Layer. Available online: https://github.com/sccn/labstreaminglayer (accessed on 20 October 2019).

- Arikan, K.; Boutros, N.N.; Bozhuyuk, E.; Poyraz, B.C.; Savrun, B.M.; Bayar, R.; Gunduz, A.; Karaali-Savrun, F.; Yaman, M. EEG Correlates of Startle Reflex with Reactivity to Eye Opening in Psychiatric Disorders: Preliminary Results. Clin. EEG Neurosci. 2006, 37, 230–234.

- Kometer, H.; Luedtke, S.; Stanuch, K.; Walczuk, S.; Wettstein, J. The Effects Virtual Reality Has on Physiological Responses as Compared to Two-Dimensional Video. J. Adv. Stud. Sci. 2010, 1, 1–21.

- Lisetti, C.L.; Nasoz, F. Using Noninvasive Wearable Computers to Recognize Human Emotions from Physiological Signals. J. Appl. Signal Process. 2004, 11, 1672–1687.

| Reference | Classifiers | Goal | Signals | Number of Subjects | Performance or Significant Results |
|---|---|---|---|---|---|
| [97] 2018 | CNN with VGG-16 | Detect acrophobia level | EEG | 60 subjects | Average accuracy 88.77% |
| [98] 2019 | SVM with RBF kernel | Predict anxiety level (public speaking fear) | GSR, BVP, skin temperature | 30 persons | Accuracy: BVP (window size 18 s) 74.1%; GSR (window size 23 s) 76.6%; skin temperature (window size 18 s) 75.1%; early signal fusion (window size 20 s) 86.3%; late signal fusion (window size 20 s) 83.2% |

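| 11-Choice Scale | 4-Choice Scale | 2-Choice Scale |
|---|---|---|
| 0 | 0 (relaxation) | 0 (relaxation) |
| 1 | 1 (low fear) | 0 (relaxation) |
| 2 | 1 (low fear) | 0 (relaxation) |
| 3 | 1 (low fear) | 0 (relaxation) |
| 4 | 2 (medium fear) | 1 (fear) |
| 5 | 2 (medium fear) | 1 (fear) |
| 6 | 2 (medium fear) | 1 (fear) |
| 7 | 2 (medium fear) | 1 (fear) |
| 8 | 3 (high fear) | 1 (fear) |
| 9 | 3 (high fear) | 1 (fear) |
| 10 | 3 (high fear) | 1 (fear) |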

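| DNN Models | Activation Function | Activation Function in the Output Layer | Loss Function | Optimization Algorithm | Epochs and Batch Size |
|---|---|---|---|---|---|
| DNN_Model_1: 3 hidden layers, with 150 neurons on each hidden layer | Rectified Linear Unit (ReLU) | 2-choice scale: sigmoid; 4-choice scale: softmax | 2-choice scale: binary crossentropy; 4-choice scale: categorical crossentropy and one-hot encoding | Adam gradient descent | 1000 epochs for training; batch size of 20 |
| DNN_Model_2: 3 hidden layers, with 300 neurons on each hidden layer | Rectified Linear Unit (ReLU) | 2-choice scale: sigmoid; 4-choice scale: softmax | 2-choice scale: binary crossentropy; 4-choice scale: categorical crossentropy and one-hot encoding | Adam gradient descent | 1000 epochs for training; batch size of 20 |
| DNN_Model_3: 6 hidden layers, with 150 neurons on each hidden layer | Rectified Linear Unit (ReLU) | 2-choice scale: sigmoid; 4-choice scale: softmax | 2-choice scale: binary crossentropy; 4-choice scale: categorical crossentropy and one-hot encoding | Adam gradient descent | 1000 epochs for training; batch size of 20 |
| DNN_Model_4: 6 hidden layers, with 300 neurons on each hidden layer | Rectified Linear Unit (ReLU) | 2-choice scale: sigmoid; 4-choice scale: softmax | 2-choice scale: binary crossentropy; 4-choice scale: categorical crossentropy and one-hot encoding | Adam gradient descent | 1000 epochs for training; batch size of 20 |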
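The four network configurations in the table above could be expressed with Keras roughly as follows. This is a hedged sketch, not the authors' code: the names `DnnSpec`, `DNN_SPECS`, `output_config` and `build_model` are hypothetical, the number of input features is left as a parameter, and the single-unit sigmoid output for the 2-choice scale is an assumption (the table only names the activation and loss). TensorFlow is imported lazily so that the configuration part runs without it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DnnSpec:
    hidden_layers: int
    neurons: int


# Hidden-layer layouts as listed in the table.
DNN_SPECS = {
    "DNN_Model_1": DnnSpec(hidden_layers=3, neurons=150),
    "DNN_Model_2": DnnSpec(hidden_layers=3, neurons=300),
    "DNN_Model_3": DnnSpec(hidden_layers=6, neurons=150),
    "DNN_Model_4": DnnSpec(hidden_layers=6, neurons=300),
}


def output_config(scale: int) -> dict:
    """Output layer and loss for the 2-choice or 4-choice scale."""
    if scale == 2:
        # Assumption: one sigmoid unit producing the probability of "fear".
        return {"units": 1, "activation": "sigmoid",
                "loss": "binary_crossentropy"}
    if scale == 4:
        # One softmax unit per class; labels must be one-hot encoded.
        return {"units": 4, "activation": "softmax",
                "loss": "categorical_crossentropy"}
    raise ValueError("only the 2-choice and 4-choice scales are covered here")


def build_model(name: str, n_features: int, scale: int):
    """Build and compile a Keras model for one of the four layouts."""
    from tensorflow import keras  # lazy import: only needed when building

    spec = DNN_SPECS[name]
    out = output_config(scale)
    model = keras.Sequential()
    model.add(keras.Input(shape=(n_features,)))
    for _ in range(spec.hidden_layers):
        model.add(keras.layers.Dense(spec.neurons, activation="relu"))
    model.add(keras.layers.Dense(out["units"], activation=out["activation"]))
    model.compile(optimizer="adam", loss=out["loss"], metrics=["accuracy"])
    return model
```

With the training settings from the table, fitting would look like `build_model("DNN_Model_1", n_features, 2).fit(X, y, epochs=1000, batch_size=20)`, where `X`, `y` and `n_features` stand for data that is not shown here.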

| Setting | Classifier Type | 2-Choice Scale, Cross-Validation (%) | 2-Choice Scale, Test (%) | 4-Choice Scale, Cross-Validation (%) | 4-Choice Scale, Test (%) | 11-Choice Scale, Cross-Validation (%) |
|---|---|---|---|---|---|---|
| C1 | SVM | 80.5 | 64.75 | 60.5 | 46 | 59.5 |
| C1 | kNN | 99.5 | 43.75 | 99 | 52.75 | 98.25 |
| C1 | RF | 99.25 | 66.5 | 99 | 39.25 | 99 |
| C1 | LDA | 79.5 | 64.75 | 57.5 | 37.75 | 49.25 |
| C1 | DNN_Model_1 | 95 | 58.3 | 87.825 | 45.425 | 79.4 |
| C1 | DNN_Model_2 | 95.77 | 58.15 | 90.525 | 20.8 | 84.95 |
| C1 | DNN_Model_3 | 94.75 | 58.3 | 86.55 | 37.7 | 74.025 |
| C1 | DNN_Model_4 | 94.7 | 79.12 | 88.275 | 37.1 | 80.85 |
| C2 | SVM | 64.25 | - | 69 | - | 71 |
| C2 | kNN | 22.75 | - | 22.75 | - | 22.75 |
| C2 | RF | 99.75 | - | 100 | - | 100 |
| C2 | LDA | 24.5 | - | 25.75 | - | 29.5 |
| C2 | DNN_Model_1 | 98.325 | - | 98.6 | - | 98.475 |
| C2 | DNN_Model_2 | 98.5 | - | 98.725 | - | 98.3 |
| C2 | DNN_Model_3 | 97.675 | - | 97.825 | - | 98.325 |
| C2 | DNN_Model_4 | 97.8 | - | 98.15 | - | 97.575 |

| Setting | Classifier Type | 2-Choice Scale, Cross-Validation (%) | 2-Choice Scale, Test (%) | 4-Choice Scale, Cross-Validation (%) | 4-Choice Scale, Test (%) | 11-Choice Scale, Cross-Validation (%) |
|---|---|---|---|---|---|---|
| C1 | kNN | 54 | 49.9175 | 32.25 | 30.24 | 25 |
| C1 | RF | 54.5 | 60.4175 | 33.25 | 38.5725 | 29.75 |
| C1 | LDA | 65.75 | 64.585 | 35.25 | 33.5725 | 25.25 |
| C2 | kNN | 32.75 | - | 36 | - | 41.75 |
| C2 | RF | 35.5 | - | 40.5 | - | 41.75 |
| C2 | LDA | 37.25 | - | 42.75 | - | 44.5 |

| Setting | Classifier Type | 2-Choice Scale, Cross-Validation (%) | 2-Choice Scale, Test (%) | 4-Choice Scale, Cross-Validation (%) | 4-Choice Scale, Test (%) | 11-Choice Scale, Cross-Validation (%) |
|---|---|---|---|---|---|---|
| C1 | SVM | 88 | 89.5 | 74.75 | 42.5 | 77.75 |
| C1 | kNN | 99.5 | 77 | 99 | 29.25 | 98.25 |
| C1 | RF | 99.75 | 77 | 99.25 | 21 | 99 |
| C1 | LDA | 87 | 60.5 | 71.25 | 21.75 | 64 |
| C1 | DNN_Model_1 | 95.03 | 72.9 | 87.945 | 41.8975 | 79.485 |
| C1 | DNN_Model_2 | 95.51 | 68.735 | 90.4975 | 24.9925 | 85.095 |
| C1 | DNN_Model_3 | 94.4375 | 62.45 | 86.325 | 34.15 | 74.275 |
| C1 | DNN_Model_4 | 94.575 | 54.125 | 88.28 | 38.325 | 80.45 |
| C2 | SVM | 82.75 | - | 86.5 | - | 86.5 |
| C2 | kNN | 23.75 | - | 23.75 | - | 23.75 |
| C2 | RF | 99.75 | - | 99.75 | - | 100 |
| C2 | LDA | 23 | - | 20.5 | - | 27.5 |
| C2 | DNN_Model_1 | 98.4 | - | 98.675 | - | 98.75 |
| C2 | DNN_Model_2 | 98.725 | - | 98.5 | - | 98.65 |
| C2 | DNN_Model_3 | 97.45 | - | 97.825 | - | 98.5 |
| C2 | DNN_Model_4 | 97.375 | - | 97.775 | - | 98.175 |

| Setting | Classifier Type | 2-Choice Scale, Cross-Validation (%) | 2-Choice Scale, Test (%) | 4-Choice Scale, Cross-Validation (%) | 4-Choice Scale, Test (%) | 11-Choice Scale, Cross-Validation (%) |
|---|---|---|---|---|---|---|
| C1 | kNN | 76.75 | 72.9175 | 52.25 | 16.665 | 42 |
| C1 | RF | 77 | 68.75 | 49.75 | 28.5725 | 45.75 |
| C1 | LDA | 81 | 85.4175 | 54.5 | 17.5 | 40.5 |
| C2 | kNN | 50.25 | - | 52.25 | - | 53.25 |
| C2 | RF | 50.5 | - | 53.5 | - | 56.5 |
| C2 | LDA | 52 | - | 56 | - | 56.75 |

| C1, 2-Choice Scale: F | FI | C1, 4-Choice Scale: F | FI | C1, 11-Choice Scale: F | FI | C2, 2-Choice Scale: F | FI | C2, 4-Choice Scale: F | FI | C2, 11-Choice Scale: F | FI |
|---|---|---|---|---|---|---|---|---|---|---|---|
| GSR | 0.41 | GSR | 0.45 | GSR | 0.49 | GSR | 0.44 | FLt | 0.69 | FLt | 0.87 |
| HR | 0.28 | HR | 0.28 | HR | 0.24 | FLt | 0.37 | GSR | 0.41 | GSR | 0.39 |
| B_C3 | 0.15 | B_FC6 | 0.15 | B_FC6 | 0.14 | HR | 0.23 | HR | 0.20 | HR | 0.18 |
| B_P3 | 0.13 | B_C3 | 0.13 | B_FC5 | 0.12 | B_FC6 | 0.14 | A_FC6 | 0.12 | B_FC6 | 0.13 |
| B_FC2 | 0.13 | B_FC2 | 0.12 | B_C3 | 0.12 | A_FC6 | 0.13 | B_FC6 | 0.12 | A_FC6 | 0.11 |
| B_FC6 | 0.13 | B_FP1 | 0.12 | B_FC2 | 0.12 | B_FC5 | 0.10 | B_P3 | 0.10 | B_P3 | 0.09 |
| B_FP2 | 0.12 | B_P3 | 0.12 | B_P3 | 0.11 | T_FC6 | 0.10 | B_T8 | 0.09 | B_FC2 | 0.09 |
| A_FC6 | 0.12 | T_FC6 | 0.12 | T_FC6 | 0.11 | B_P3 | 0.09 | B_FC2 | 0.09 | T_FC6 | 0.08 |
| B_C4 | 0.10 | B_O1 | 0.11 | B_FP1 | 0.10 | B_T8 | 0.09 | B_C3 | 0.09 | B_T8 | 0.08 |
| B_FC5 | 0.10 | B_FC5 | 0.11 | A_FC6 | 0.10 | B_O1 | 0.09 | T_FC6 | 0.08 | B_FC5 | 0.07 |
| B_FP1 | 0.09 | B_T8 | 0.09 | B_T8 | 0.10 | B_C3 | 0.09 | B_O2 | 0.08 | B_O2 | 0.07 |
| T_FC6 | 0.08 | B_P2 | 0.09 | B_O1 | 0.08 | B_FC2 | 0.09 | B_FC5 | 0.08 | B_FP1 | 0.07 |
| A_FP1 | 0.08 | B_FC1 | 0.08 | A_FP1 | 0.08 | B_O2 | 0.09 | B_FP1 | 0.07 | B_C3 | 0.07 |
| A_FP2 | 0.08 | A_FP1 | 0.08 | B_P2 | 0.08 | B_P2 | 0.08 | A_FP1 | 0.07 | B_P2 | 0.06 |
| B_T8 | 0.08 | A_FC6 | 0.08 | T_FP1 | 0.08 | B_FP1 | 0.08 | A_O1 | 0.06 | B_O1 | 0.06 |

| C1, 2-Choice Scale: F | FI | C1, 4-Choice Scale: F | FI | C1, 11-Choice Scale: F | FI | C2, 2-Choice Scale: F | FI | C2, 4-Choice Scale: F | FI | C2, 11-Choice Scale: F | FI |
|---|---|---|---|---|---|---|---|---|---|---|---|
| GSR | 0.40 | GSR | 0.46 | GSR | 0.48 | GSR | 0.54 | FLt | 0.66 | FLt | 0.79 |
| HR | 0.25 | HR | 0.32 | HR | 0.27 | FLt | 0.32 | GSR | 0.47 | GSR | 0.42 |
| B_FC2 | 0.22 | B_FC6 | 0.17 | B_FP1 | 0.14 | HR | 0.24 | HR | 0.20 | HR | 0.18 |
| B_C4 | 0.15 | B_FC2 | 0.16 | A_FC6 | 0.14 | A_FC6 | 0.15 | B_FC6 | 0.14 | T_FC6 | 0.12 |
| B_FC6 | 0.14 | B_P2 | 0.12 | B_FC2 | 0.14 | B_FC6 | 0.14 | A_FC6 | 0.11 | B_FC6 | 0.12 |
| A_FP1 | 0.14 | B_FP1 | 0.12 | B_FC6 | 0.13 | B_FP1 | 0.12 | B_FC2 | 0.10 | A_FC6 | 0.12 |
| B_P2 | 0.13 | T_FC6 | 0.11 | T_FC6 | 0.12 | T_FC6 | 0.10 | T_FC6 | 0.09 | B_P3 | 0.11 |
| A_FC6 | 0.12 | B_O1 | 0.10 | B_O1 | 0.12 | B_FC2 | 0.10 | B_FC5 | 0.08 | B_FC2 | 0.11 |
| B_FP1 | 0.10 | A_FC6 | 0.10 | A_FP1 | 0.11 | B_O2 | 0.09 | B_O2 | 0.08 | B_FP1 | 0.08 |
| B_O2 | 0.10 | A_FP1 | 0.10 | B_FC5 | 0.11 | B_P1 | 0.09 | B_C4 | 0.08 | A_FC1 | 0.08 |
| T_P2 | 0.08 | B_P3 | 0.10 | B_P2 | 0.10 | B_O1 | 0.08 | B_FP1 | 0.07 | T_FP1 | 0.07 |
| T_FC6 | 0.08 | B_C4 | 0.09 | B_P3 | 0.10 | A_O1 | 0.08 | A_P4 | 0.07 | A_O1 | 0.07 |
| B_O1 | 0.08 | B_FC5 | 0.09 | B_C3 | 0.09 | B_P2 | 0.08 | A_FP1 | 0.07 | B_T8 | 0.07 |
| B_C3 | 0.08 | A_P2 | 0.08 | B_T8 | 0.09 | T_P3 | 0.07 | B_P2 | 0.07 | B_C4 | 0.07 |
| B_P3 | 0.08 | B_C3 | 0.08 | B_C4 | 0.08 | A_P2 | 0.07 | B_C3 | 0.07 | B_O2 | 0.07 |

| Setting | Method | 2-Choice Scale, Cross-Validation | 2-Choice Scale, Test | 4-Choice Scale, Cross-Validation | 4-Choice Scale, Test | 11-Choice Scale, Cross-Validation |
|---|---|---|---|---|---|---|
| C1 | Player-independent | kNN 99.5%; RF 99.25% | DNN_Model_4 79.12% | kNN 99%; RF 99% | kNN 52.75% | kNN 98.25%; RF 99% |
| C1 | Player-dependent | kNN 99.5%; RF 99.75% | SVM 89.5% | kNN 99%; RF 99.25% | SVM 42.5% | kNN 98.25%; RF 99% |
| C2 | Player-independent | RF 99.75% | - | RF 100% | - | RF 100% |
| C2 | Player-dependent | RF 99.75% | - | RF 99.75% | - | RF 100% |
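The test-set winners in the summary table can be cross-checked against the detailed results. The sketch below copies the C1 test values from the first results table (the one whose C1 rows start with SVM 80.5 / 64.75) and picks the highest value per scale. That this table corresponds to the player-independent row is inferred here from the matching numbers, not stated in the text; the names `TEST_ACCURACY` and `best_classifier` are hypothetical.

```python
# C1 test values (%) copied from the first results table:
# (2-choice scale, 4-choice scale) per classifier.
TEST_ACCURACY = {
    "SVM": (64.75, 46.0),
    "kNN": (43.75, 52.75),
    "RF": (66.5, 39.25),
    "LDA": (64.75, 37.75),
    "DNN_Model_1": (58.3, 45.425),
    "DNN_Model_2": (58.15, 20.8),
    "DNN_Model_3": (58.3, 37.7),
    "DNN_Model_4": (79.12, 37.1),
}


def best_classifier(scores: dict, column: int) -> tuple:
    """Return (name, value) of the classifier with the highest value."""
    name = max(scores, key=lambda n: scores[n][column])
    return name, scores[name][column]


print(best_classifier(TEST_ACCURACY, 0))  # 2-choice scale
print(best_classifier(TEST_ACCURACY, 1))  # 4-choice scale
```

The two results, DNN_Model_4 at 79.12% and kNN at 52.75%, agree with the C1 player-independent test entries of the summary table.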

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bălan, O.; Moise, G.; Moldoveanu, A.; Leordeanu, M.; Moldoveanu, F. An Investigation of Various Machine and Deep Learning Techniques Applied in Automatic Fear Level Detection and Acrophobia Virtual Therapy. Sensors 2020, 20, 496. https://doi.org/10.3390/s20020496
