Multi-GPU, Multi-Node Algorithms for Acceleration of Image Reconstruction in 3D Electrical Capacitance Tomography in Heterogeneous Distributed System

Abstract

1. Introduction

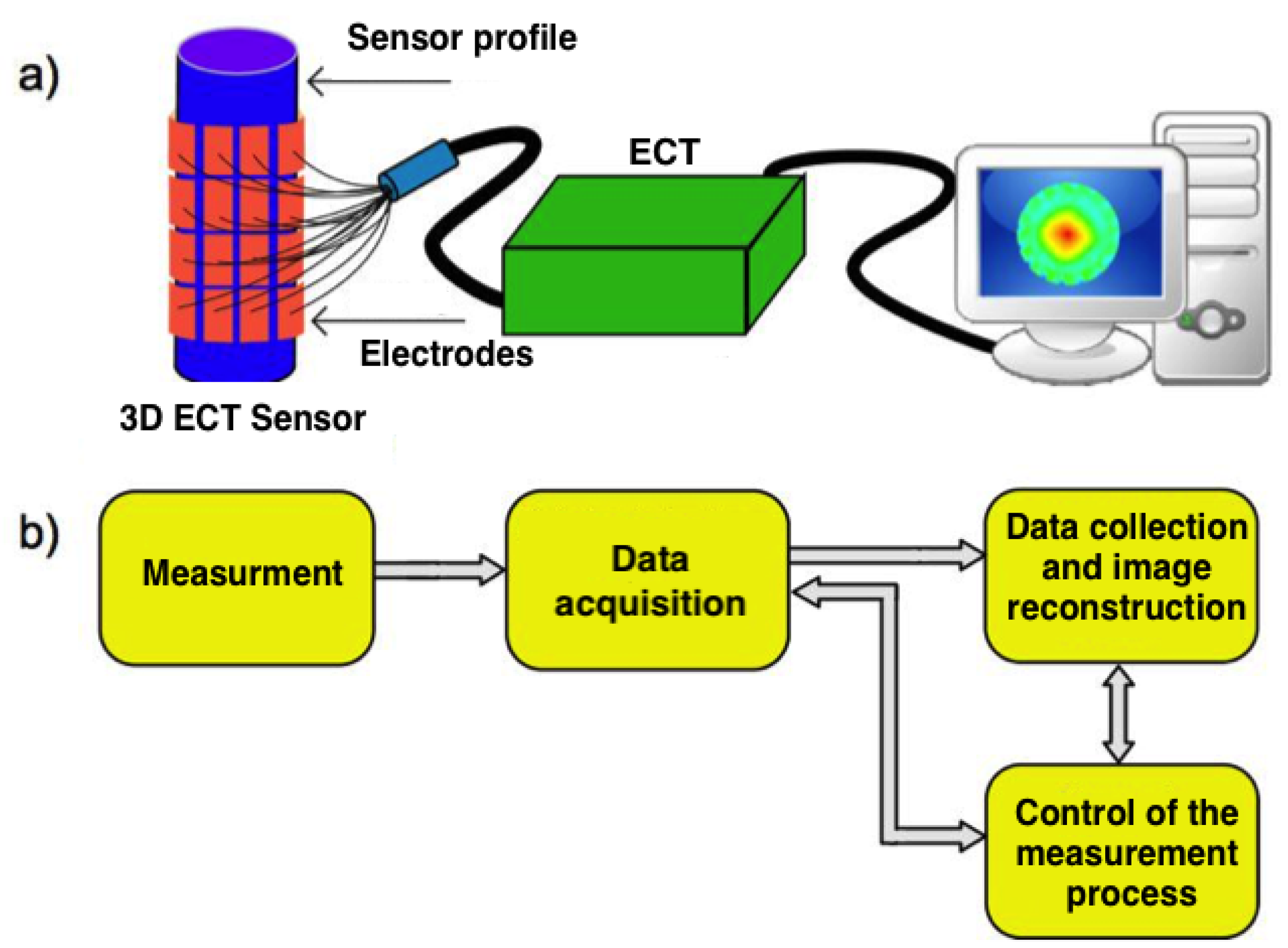

2. Image Reconstruction in ECT

- $\varepsilon$: electric permittivity vector (output image),

- $S^{T}$: transposed sensitivity matrix,

- $C$: capacitance measurements vector.
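The three quantities listed above are the ingredients of linear back projection (LBP), which in its standard form computes the image as the product of the transposed sensitivity matrix and the capacitance measurements vector, $\varepsilon = S^{T} C$. The following is a minimal sketch of that product in plain Python; the function names and the toy data are hypothetical and serve only as an illustration, not as the implementation used in the described system.

```python
def transpose(matrix):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(column) for column in zip(*matrix)]


def mat_vec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def lbp(sensitivity, capacitance):
    """Linear back projection: image = S^T * C."""
    return mat_vec(transpose(sensitivity), capacitance)


# Toy data (hypothetical): 2 capacitance measurements, 3 image elements.
S = [[1.0, 0.0, 2.0],
     [0.0, 1.0, 1.0]]
C = [2.0, 3.0]

image = lbp(S, C)
print(image)
```

In a real 3D ECT setup the sensitivity matrix has one row per measurement and one column per image element, so the product is dominated by a single large matrix-vector multiplication, which is the part that benefits from GPU acceleration.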

- $\varepsilon_{k+1}$: image obtained in the current iteration,

- $\varepsilon_{k}$: image from the previous iteration,

- $\alpha$: convergence factor (scalar),

- $S^{T}$: transposed sensitivity matrix,

- $S$: sensitivity matrix,

- $C$: capacitance measurements vector.
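The quantities listed above match the standard Landweber iteration, $\varepsilon_{k+1} = \varepsilon_{k} - \alpha S^{T}(S\varepsilon_{k} - C)$. The sketch below shows one way to write that update in plain Python. The function names, the zero starting image, the fixed iteration count, and the toy data are all assumptions made for illustration and are not taken from the article.

```python
def transpose(matrix):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(column) for column in zip(*matrix)]


def mat_vec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def landweber(sensitivity, capacitance, alpha, iterations):
    """Iterate image <- image - alpha * S^T (S * image - C)."""
    s_t = transpose(sensitivity)
    # Assumption: start from a zero image; other starting points are possible.
    image = [0.0] * len(s_t)
    for _ in range(iterations):
        # Residual between simulated and measured capacitances.
        residual = [p - c for p, c in zip(mat_vec(sensitivity, image), capacitance)]
        # Back-project the residual and take a step scaled by alpha.
        correction = mat_vec(s_t, residual)
        image = [e - alpha * g for e, g in zip(image, correction)]
    return image


# Toy data (hypothetical): the exact solution of S * image = C is [1, 2].
S = [[1.0, 0.0],
     [0.0, 2.0]]
C = [1.0, 4.0]

image = landweber(S, C, alpha=0.2, iterations=200)
print(image)
```

The iteration converges only when $\alpha$ is small enough, namely $0 < \alpha < 2/\sigma_{\max}^{2}$, where $\sigma_{\max}$ is the largest singular value of $S$. In the toy example $\sigma_{\max} = 2$, so any $\alpha$ below 0.5 works. Each iteration costs two matrix-vector products, which is why the method is far more demanding than LBP.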

- $C_{e1,e2}$: capacitance between electrodes e1 and e2,

- $\varepsilon(x, y, z)$: distribution of electric permittivity in the domain,

- $\varphi(x, y, z)$: distribution of electric potential in the domain,

- $V_{e1}$: electric potential applied to electrode e1,

- $V_{e2}$: electric potential applied to electrode e2,

- $x, y, z$: Cartesian coordinates in the domain.
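For reference, the quantities listed above fit the usual Gauss's-law expression for the capacitance of an electrode pair. The form below is a sketch in assumed notation: $C_{e1,e2}$ for the capacitance, $\varepsilon$ for the permittivity, $\varphi$ for the potential, $V_{e1}$ and $V_{e2}$ for the electrode potentials, and $\Gamma$ for a closed surface around electrode e1 with an outward normal. Symbols and sign convention may differ from the equation in the original article.

$$
C_{e1,e2} = \frac{Q}{V_{e1} - V_{e2}} = -\frac{1}{V_{e1} - V_{e2}} \oint_{\Gamma} \varepsilon(x, y, z)\, \nabla \varphi(x, y, z) \cdot d\Gamma
$$

Here $Q$ is the charge on electrode e1, obtained as the flux of $\varepsilon E$ through $\Gamma$ with $E = -\nabla \varphi$, which is where the minus sign comes from.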

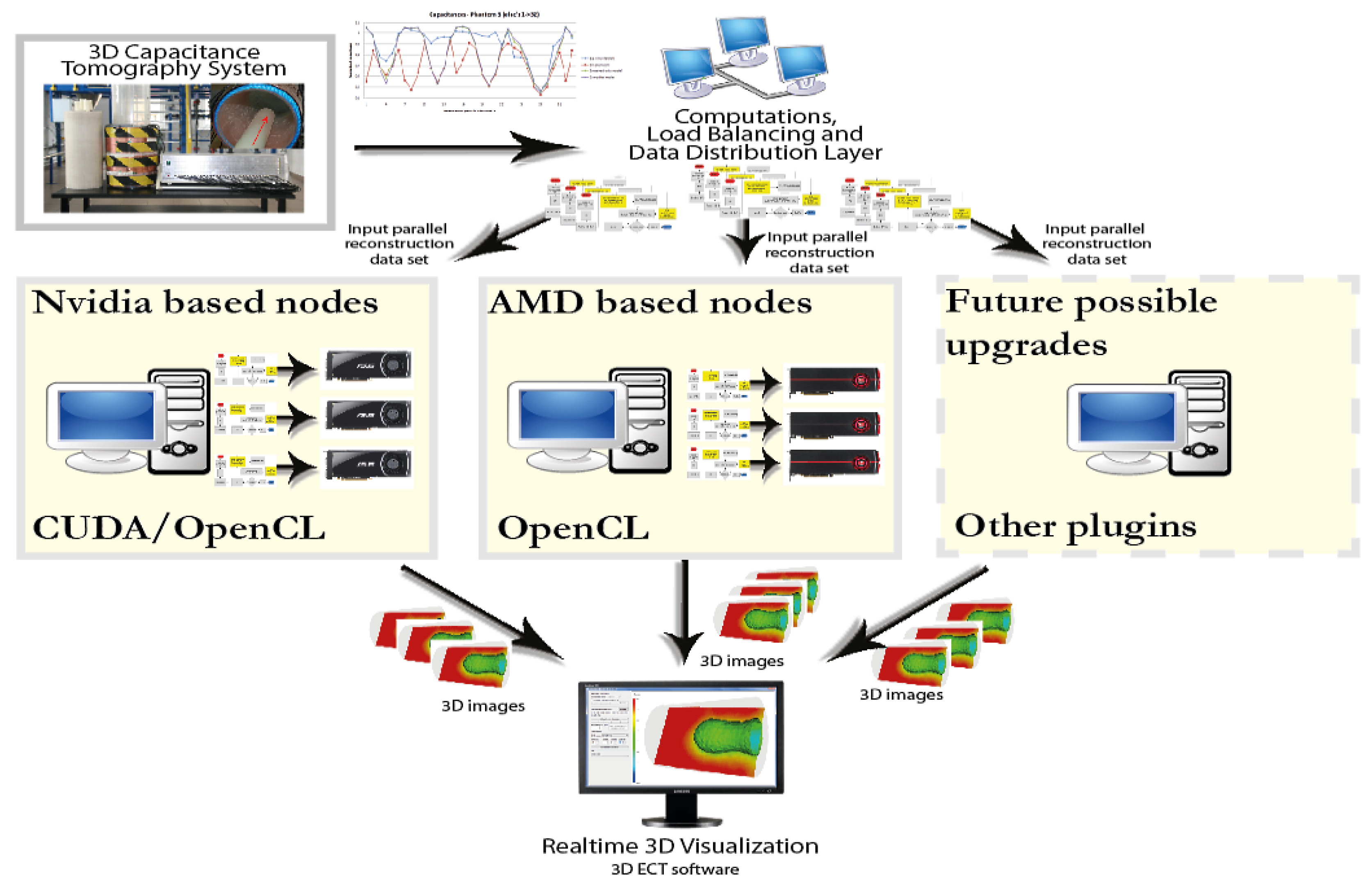

3. Developed Distributed System

3.1. Design Assumptions

- Division of matrices between nodes

- Basic operations of linear algebra (transposition, addition, subtraction, multiplication)

- Data transfer between nodes

- Planning and division of tasks

- Support for heterogeneous devices

- Support for calculations using graphics cards

- Support for modern multi-core processors as a set of devices

- Ability to extend existing solutions through pseudo-inheritance from the implemented layouts
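Several of the assumptions listed above (division of matrices between nodes, planning and division of tasks, support for heterogeneous devices) can be illustrated with a small sketch. The example below splits the rows of a matrix between devices in proportion to assumed relative performance weights, computes each block's part of a matrix-vector product, and gathers the results. The function names and weights are hypothetical, the devices are simulated sequentially, and none of this is the API of the described system.

```python
def split_rows(num_rows, weights):
    """Return one (start, stop) row range per device, sized in proportion to weights."""
    total = sum(weights)
    ranges = []
    start = 0
    cumulative = 0.0
    for weight in weights:
        cumulative += weight
        # Rounding the cumulative share keeps the ranges contiguous and
        # guarantees that the last range ends exactly at num_rows.
        stop = round(num_rows * cumulative / total)
        ranges.append((start, stop))
        start = stop
    return ranges


def distributed_mat_vec(matrix, vector, weights):
    """Simulate a row-partitioned matrix-vector product across several devices."""
    partial_results = []
    for start, stop in split_rows(len(matrix), weights):
        block = matrix[start:stop]  # rows assigned to one device
        partial_results.append(
            [sum(a * b for a, b in zip(row, vector)) for row in block]
        )
    # Gather step: concatenate partial results in device order.
    return [value for part in partial_results for value in part]


# Hypothetical setup: three devices, the second three times faster than the others.
weights = [1, 3, 1]
matrix = [[float(i), 1.0] for i in range(10)]
vector = [2.0, 1.0]

ranges = split_rows(len(matrix), weights)
result = distributed_mat_vec(matrix, vector, weights)
print(ranges)
print(result)
```

Row-wise partitioning suits matrix-vector products because each device needs only its own block of the matrix plus the full (small) vector, so the data sent over the network per step stays modest compared with the matrix itself.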

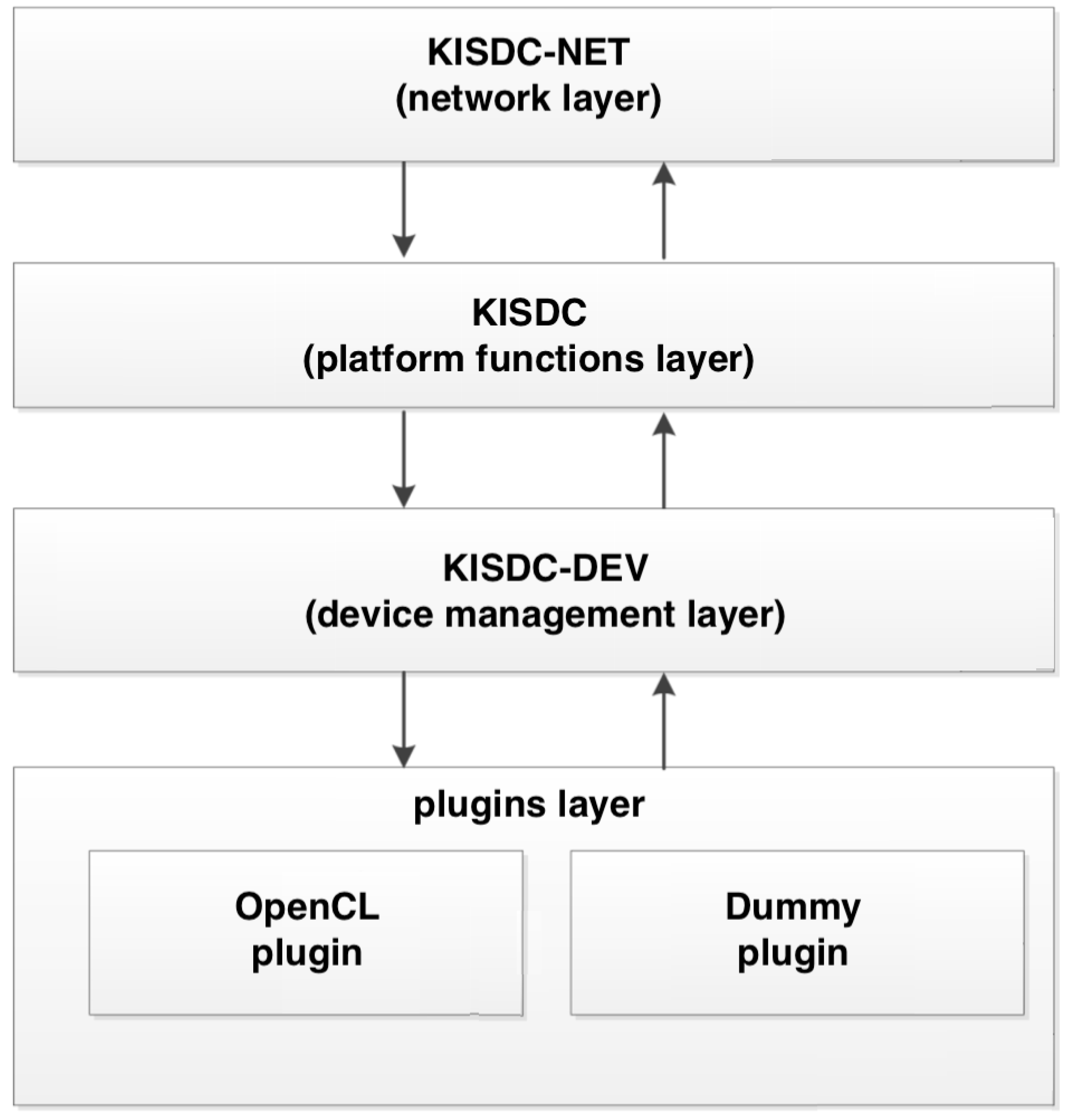

3.2. Implementation

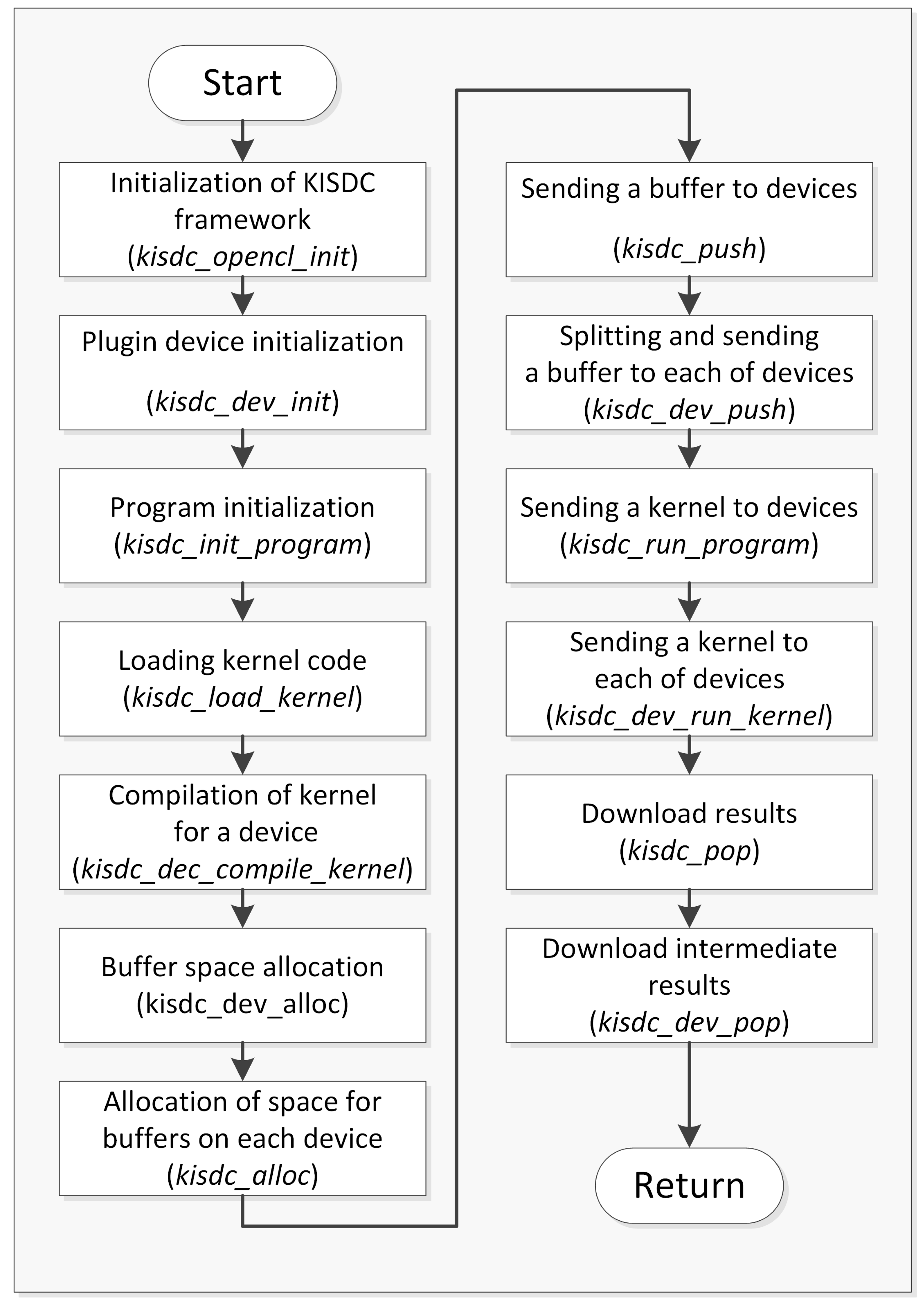

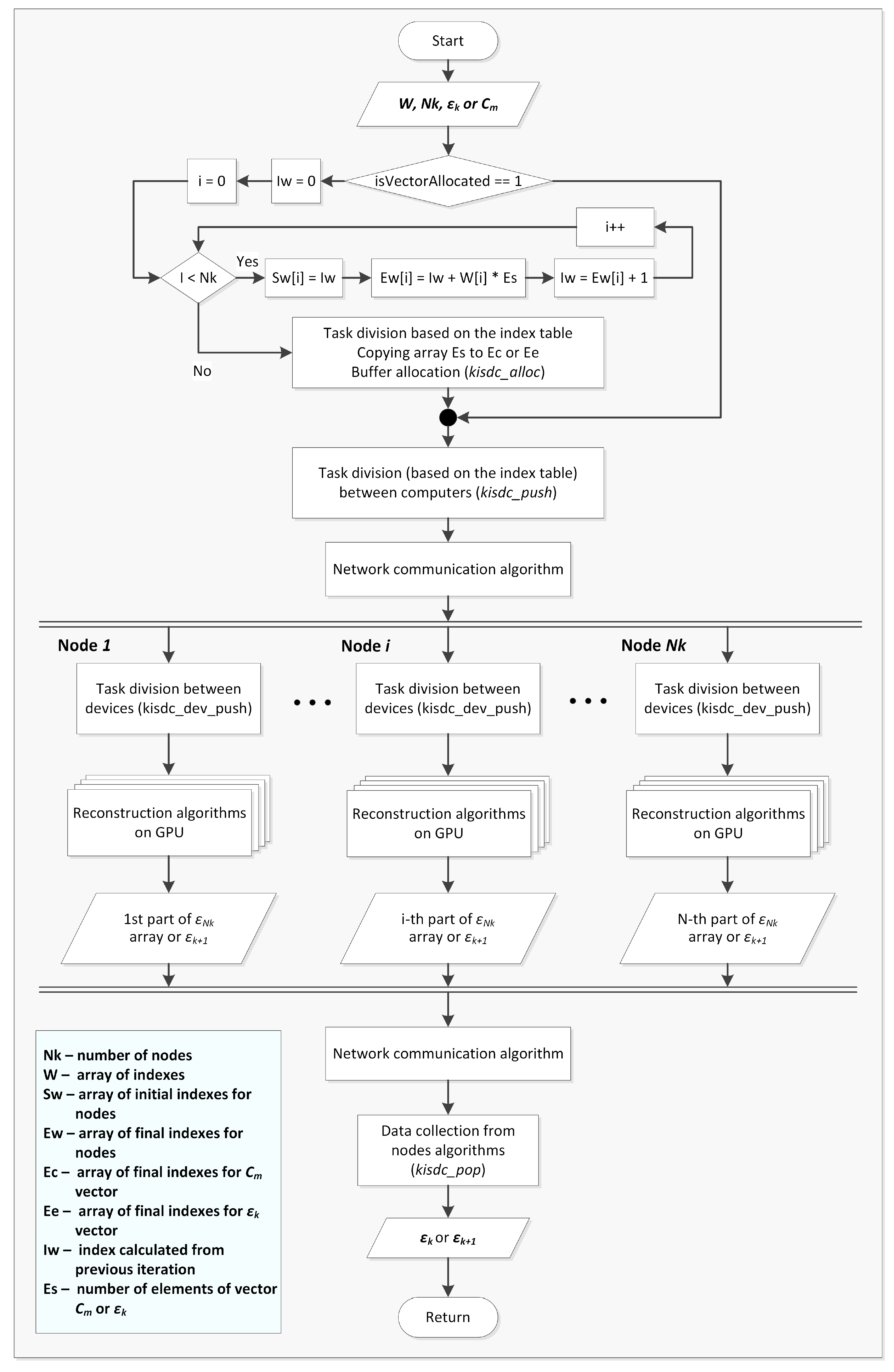

4. Parallel Algorithm of ECT Image Reconstruction in Distributed System

5. Test Results

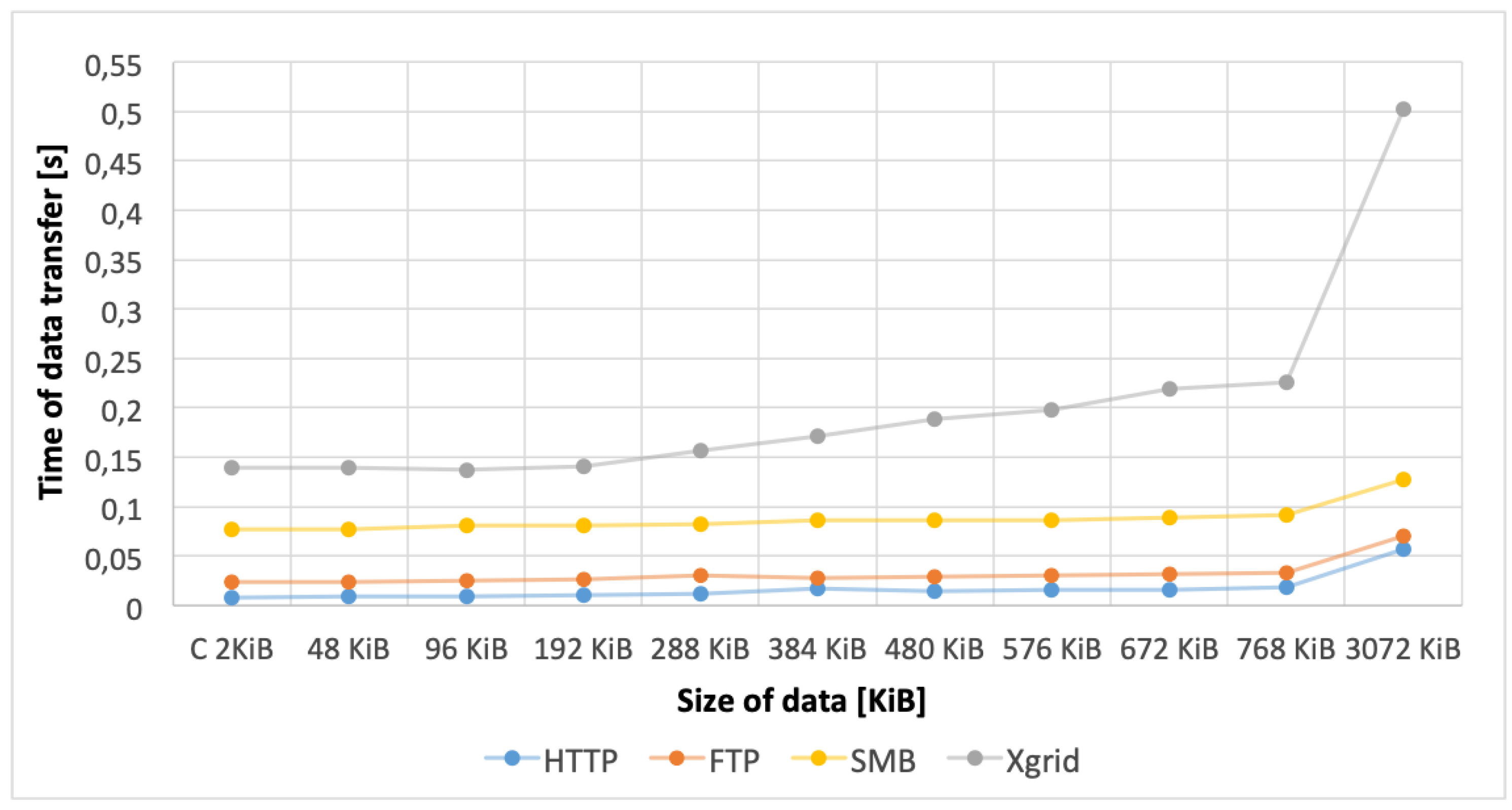

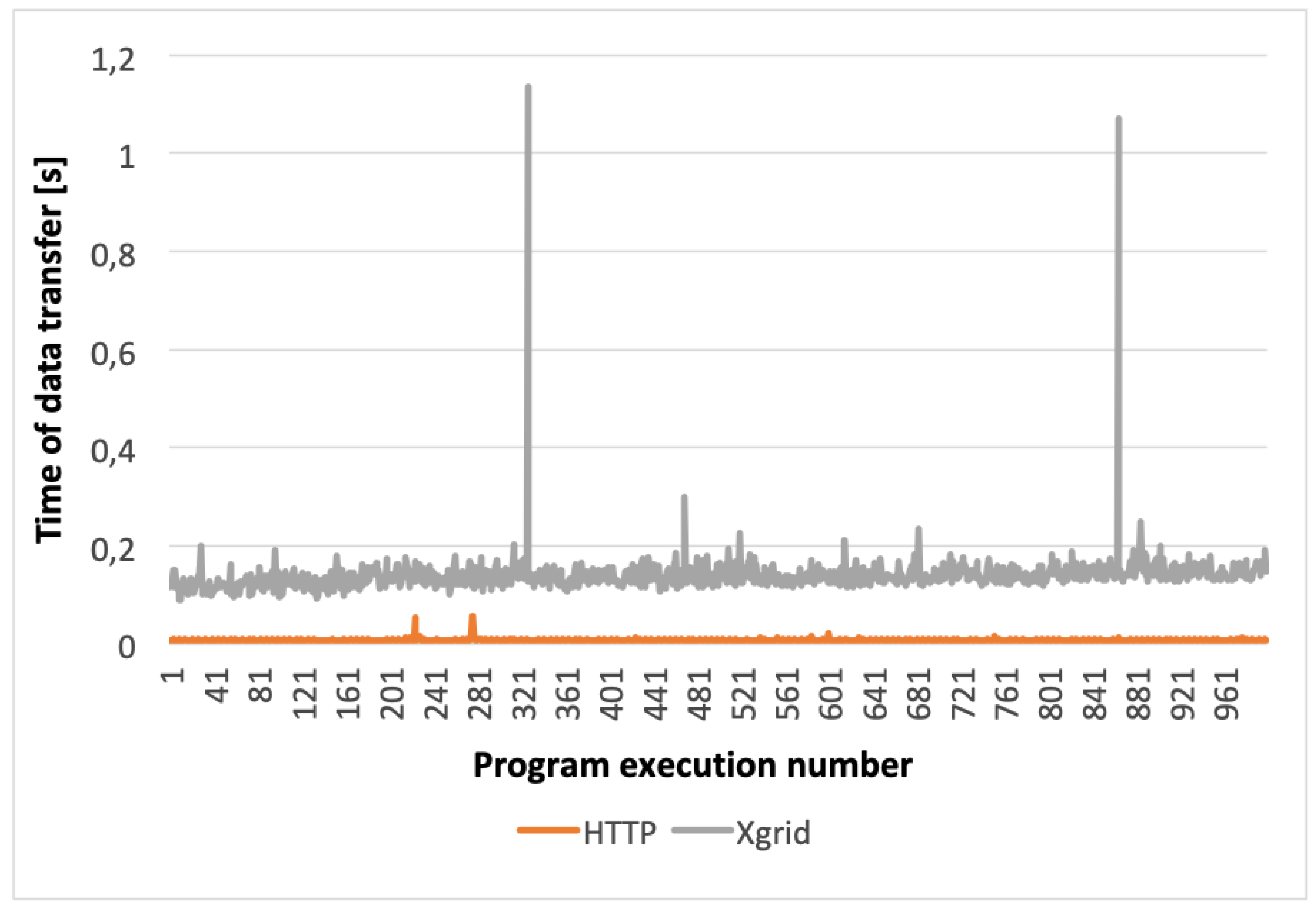

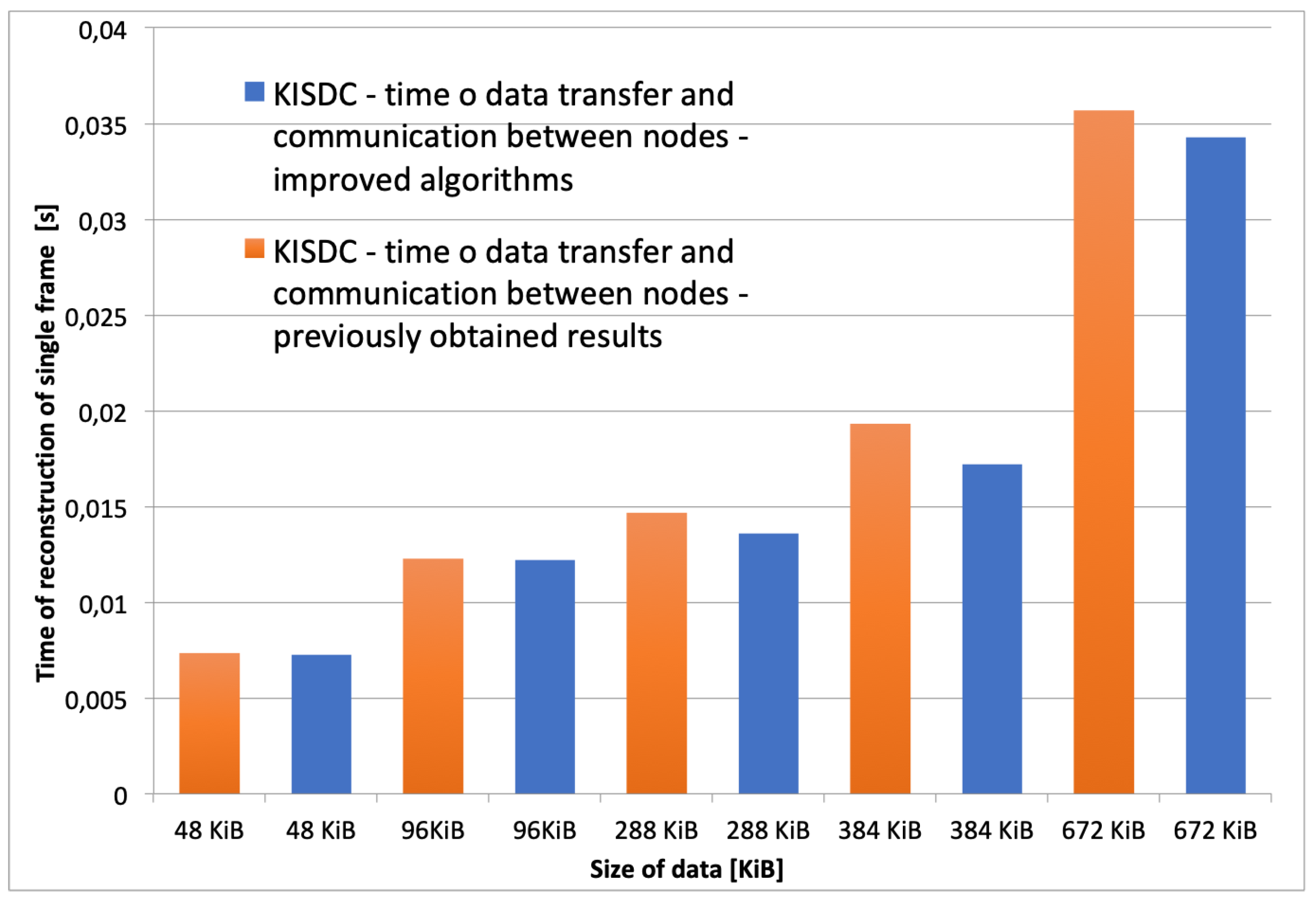

5.1. Network Layer Performance

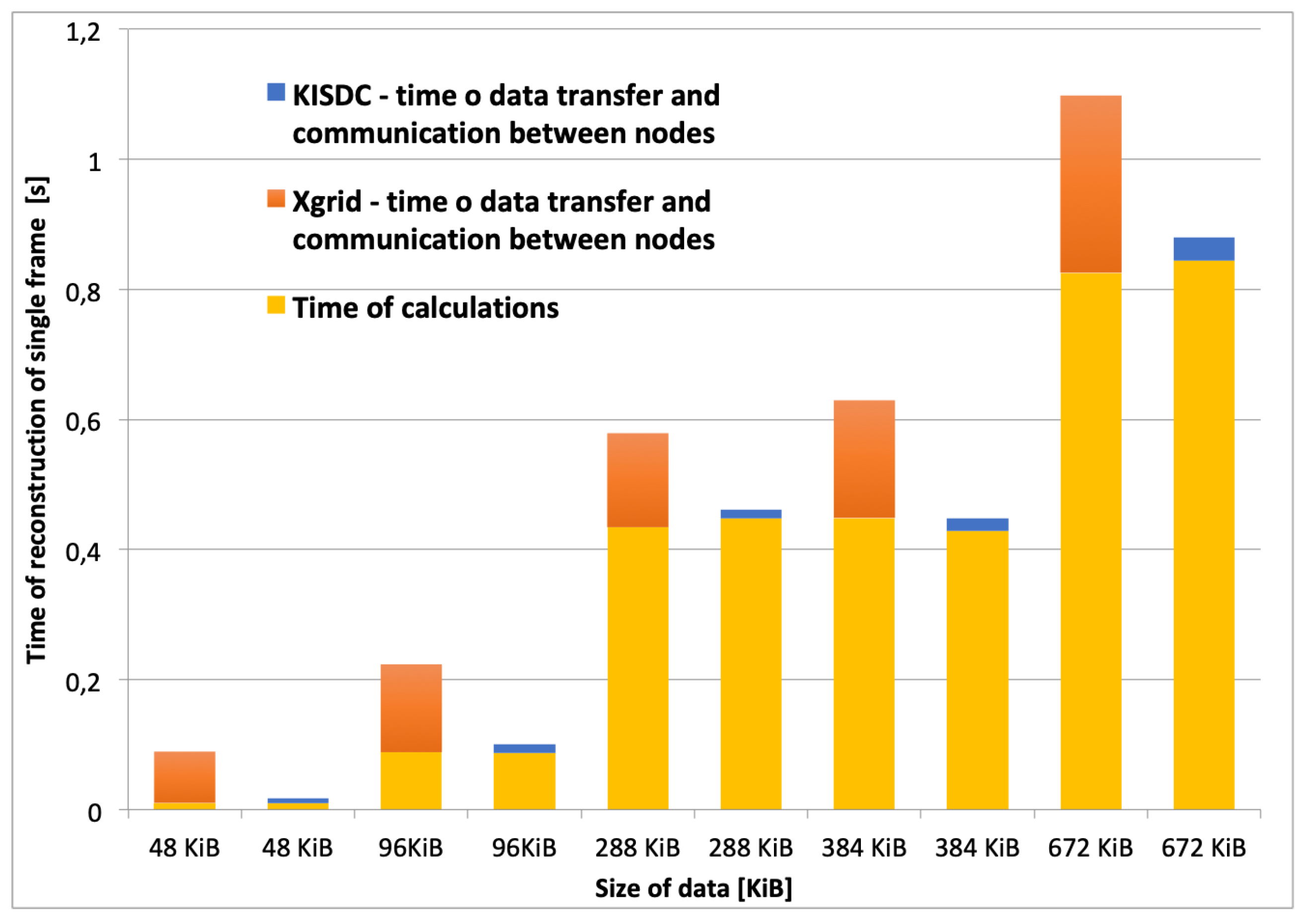

5.2. System Performance

5.3. Monitoring of Industrial Processes

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| 3D | Three-dimensional |
| API | Application programming interface |
| CPU | Central processing unit |
| ECT | Electrical capacitance tomography |
| FPGA | Field-programmable gate array |
| FTP | File transfer protocol |
| GPU | Graphics processing unit |
| HTTP | Hypertext transfer protocol |
| KIS | Computer engineering department (in Polish: Katedra Informatyki Stosowanej) |
| KISDC | KIS digital computing |
| LAN | Local area network |
| LBP | Linear back projection |
| RAM | Random access memory |
| SMB | Server message block |


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Majchrowicz, M.; Kapusta, P.; Jackowska-Strumiłło, L.; Banasiak, R.; Sankowski, D. Multi-GPU, Multi-Node Algorithms for Acceleration of Image Reconstruction in 3D Electrical Capacitance Tomography in Heterogeneous Distributed System. Sensors 2020, 20, 391. https://doi.org/10.3390/s20020391
