Rough or Noisy? Metrics for Noise Estimation in SfM Reconstructions

Abstract

1. Introduction

2. State of the Art

- We present a number of metrics that can be easily calculated as part of the normal SfM workflow;

- We explore the correlation between each metric and the presence of noise on reconstructed objects;

- We train classical supervised learning methods using combinations of these metrics and demonstrate how to verify their accuracy;

- To verify the robustness of the metrics, we test them on objects with varying surface textures, shapes and sizes;

- As part of the paper, we provide the database of captured images used to create the SfM reconstructions, together with the manually annotated ground truth data, so that others can use it to compare and test noise estimation and removal implementations.

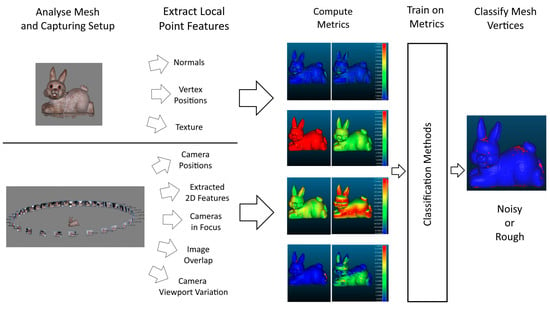

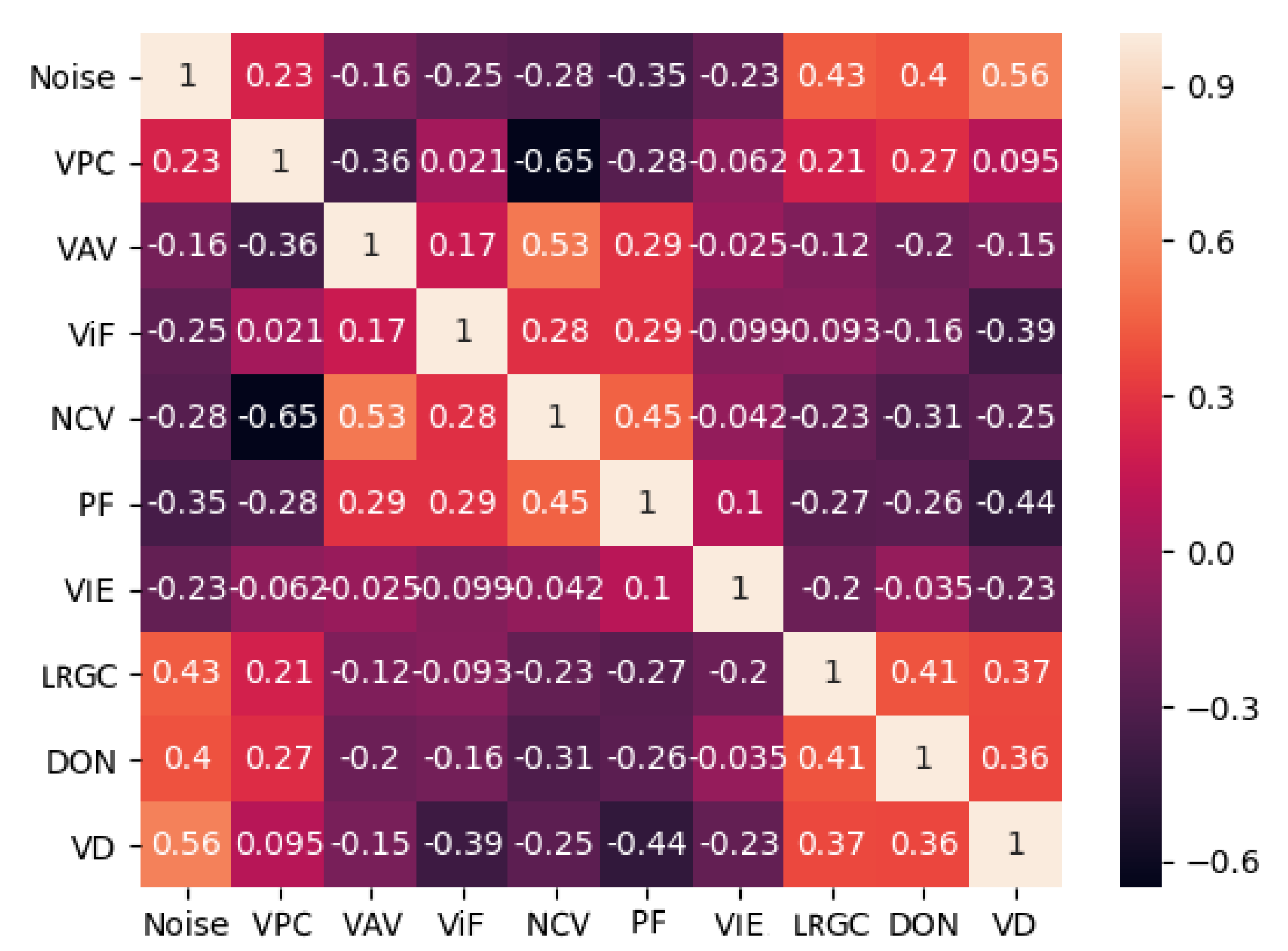

3. Methodology

- Noise manifests as either clumps of high-frequency vertices or flat patches and holes: when the initial feature detection and matching steps of the SfM pipeline do not produce enough correct matches, the reconstructed 3D surfaces can end up with overlapping or missing parts. These appear as geometric surface errors, as seen in Figure 3a;

- SfM noise normally comes from smooth, monochrome surfaces: monochrome surfaces usually lack robust features such as edges and corners, while smooth and transparent surfaces produce reflections that change with the view direction, which makes correct feature matching impossible (Figure 3b);

- Noise is present on parts of the object that have not been seen from enough camera positions: SfM needs to gather information about the object from multiple directions to provide a correct geometric representation of the micro- and macro-shape of its surfaces. Too little camera variation can lead to the 3D surface being “guessed” and to deformed patches. An example can be seen in Figure 3c, where one object hides another surface from the cameras, resulting in noise;

- Noise is present on parts of the object that have been seen from enough camera positions but were not in focus: surface features need to be extracted and matched, and if parts of the object are blurred and out of focus, not enough information can be extracted from them. This is visualized in Figure 3d, where the back of the object is out of focus, so too few features are captured;

- Noise is present on parts of the object that have been seen from enough camera positions, but from positions that were not diverse enough: if all the images are captured from the same direction, not enough information can be extracted about the shape of the surface. This can be seen in Figure 3e, where multiple images are taken of a surface but none of them provides enough angular diversity in the vertical direction, so the reconstruction of the bottom of the surface is noisy.

3.1. General Mesh-Based Metrics

3.1.1. Local Roughness from Gaussian Curvature (LRGCm)
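
The body of this subsection is not included here. As a hedged illustration only, the sketch below computes a discrete Gaussian curvature (GC) per vertex with the standard angle-defect formula, K(v) = (2π − Σθ)/A(v), where Σθ is the sum of the triangle corner angles at v and A(v) is one third of the area of the triangles around v. It then derives a simple roughness proxy: the absolute difference between K(v) and the mean curvature of its one-ring neighbours. The roughness proxy and all function names are hypothetical and are not necessarily the authors' LRGCm definition.

```python
import math


def _sub(p, q):
    return (p[0] - q[0], p[1] - q[1], p[2] - q[2])


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def gaussian_curvature(vertices, faces):
    """Angle-defect Gaussian curvature per vertex.

    K(v) = (2*pi - sum of corner angles at v) / A(v), where A(v) is one
    third of the total area of the triangles incident to v.
    """
    angle_sum = [0.0] * len(vertices)
    area = [0.0] * len(vertices)
    for face in faces:
        for k in range(3):
            i, j, m = face[k], face[(k + 1) % 3], face[(k + 2) % 3]
            a = _sub(vertices[j], vertices[i])
            b = _sub(vertices[m], vertices[i])
            twice_area = math.sqrt(sum(c * c for c in _cross(a, b)))
            dot = a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
            angle_sum[i] += math.atan2(twice_area, dot)  # corner angle at vertex i
            area[i] += twice_area / 6.0  # one third of this triangle's area
    return [(2.0 * math.pi - s) / A if A > 0 else 0.0
            for s, A in zip(angle_sum, area)]


def local_roughness(vertices, faces):
    """Hypothetical roughness proxy: |K(v) - mean K over the one-ring of v|."""
    K = gaussian_curvature(vertices, faces)
    ring = [set() for _ in vertices]
    for face in faces:
        for k in range(3):
            ring[face[k]].update((face[(k + 1) % 3], face[(k + 2) % 3]))
    return [abs(K[v] - sum(K[n] for n in ring[v]) / len(ring[v])) if ring[v] else 0.0
            for v in range(len(vertices))]
```

With this formula, boundary vertices receive a large angle defect even on flat surfaces, so a practical version would mask them or treat them separately.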

3.1.2. Difference of Normals (DONm)
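
Difference of Normals, as introduced by Ioannou et al. for point clouds, compares unit normals estimated at a small and a large support radius: DoN(p) = (n(p, r_s) − n(p, r_l)) / 2, whose magnitude lies in [0, 1]. The sketch below is a mesh-based adaptation under stated assumptions: the normal at scale r is the area-weighted sum of the normals of all faces whose centroid lies within r of the vertex, faces are assumed to be consistently oriented, and `r_small`/`r_large` are hypothetical parameters. It is a brute-force O(V·F) illustration, not the authors' DONm implementation.

```python
import math


def _face_data(vertices, faces):
    """Per face: unnormalised normal (its length equals 2 * area) and centroid."""
    data = []
    for i, j, k in faces:
        p, q, r = vertices[i], vertices[j], vertices[k]
        u = [q[d] - p[d] for d in range(3)]
        v = [r[d] - p[d] for d in range(3)]
        n = [u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0]]
        c = [(p[d] + q[d] + r[d]) / 3.0 for d in range(3)]
        data.append((n, c))
    return data


def _normal_at_scale(point, face_data, radius):
    """Area-weighted unit normal of all faces whose centroid lies within radius."""
    acc = [0.0, 0.0, 0.0]
    for n, c in face_data:
        if math.dist(point, c) <= radius:
            for d in range(3):
                acc[d] += n[d]
    length = math.sqrt(sum(a * a for a in acc))
    return [a / length for a in acc] if length > 0 else acc


def difference_of_normals(vertices, faces, r_small, r_large):
    """|n(p, r_small) - n(p, r_large)| / 2 for every vertex p, in [0, 1]."""
    data = _face_data(vertices, faces)
    result = []
    for p in vertices:
        ns = _normal_at_scale(p, data, r_small)
        nl = _normal_at_scale(p, data, r_large)
        result.append(0.5 * math.sqrt(sum((a - b) ** 2 for a, b in zip(ns, nl))))
    return result
```

On a flat patch both normals coincide and the value is 0; next to a bump the small-scale normal tilts while the large-scale normal stays close to the overall surface orientation, so the value rises.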

3.1.3. Vertex Local Spatial Density (VDm)
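
One straightforward reading of a vertex local spatial density is the number of neighbouring vertices inside a sphere of radius r, divided by the sphere's volume. The sketch below assumes that definition and a hypothetical `radius` parameter. It uses a brute-force O(n²) search for clarity; a spatial index such as `scipy.spatial.cKDTree.query_ball_point` would be the usual choice for real meshes.

```python
import math


def vertex_density(points, radius):
    """Neighbours within `radius` of each vertex, divided by the sphere volume."""
    volume = 4.0 / 3.0 * math.pi * radius ** 3
    r2 = radius * radius
    densities = []
    for i, p in enumerate(points):
        count = 0
        for j, q in enumerate(points):
            # Squared distance avoids a square root per pair.
            if j != i and (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2 + (p[2] - q[2]) ** 2 <= r2:
                count += 1
        densities.append(count / volume)
    return densities
```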

3.1.4. Vertex Local Intensity Entropy (VIEm)
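
A local intensity entropy can be computed, in the spirit of entropy-based edge operators, as the Shannon entropy of the intensity histogram over a vertex's neighbourhood. The sketch assumes per-vertex RGB colours in the range 0 to 255, converts them to intensity with the ITU-R BT.601 luma weights, quantises them into a hypothetical number of `bins`, and uses the vertex plus its one-ring as the neighbourhood; none of these choices is taken from the text.

```python
import math
from collections import Counter


def intensity(rgb):
    """Grey level from RGB using ITU-R BT.601 luma weights."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def local_intensity_entropy(colors, faces, bins=16):
    """Shannon entropy (bits) of quantised intensities over each vertex's one-ring."""
    # Neighbourhood = the vertex itself plus every vertex sharing a face with it.
    ring = [{v} for v in range(len(colors))]
    for face in faces:
        for v in face:
            ring[v].update(face)
    # Quantise 0..255 intensities into `bins` levels.
    levels = [min(int(intensity(c) * bins / 256.0), bins - 1) for c in colors]
    entropies = []
    for neighbourhood in ring:
        counts = Counter(levels[n] for n in neighbourhood)
        total = len(neighbourhood)
        entropies.append(-sum(c / total * math.log2(c / total) for c in counts.values()))
    return entropies
```

A uniformly coloured neighbourhood gives 0 bits, while one split evenly between two intensity levels gives 1 bit, so low values flag the featureless surfaces described in the second observation.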

3.2. Capturing Setup-Based Metrics

3.2.1. Number of Cameras Seeing Each Vertex (NCVs)
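
Counting the cameras that see each vertex requires projecting the vertex into every calibrated view. The sketch below assumes a pinhole model with world-to-camera rotation R and translation t (x_cam = R·x + t), focal lengths `fx`, `fy` and principal point `cx`, `cy` in pixels; a vertex counts as seen when it lies in front of the camera and projects inside the image. Occlusion by other geometry is ignored here, which a full implementation would need to handle, for example with a depth buffer or ray casting. The `Camera` class is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Camera:
    """Hypothetical pinhole camera: x_cam = R @ x_world + t."""
    R: tuple    # 3x3 world-to-camera rotation, row-major
    t: tuple    # translation vector (3 values)
    fx: float   # focal lengths in pixels
    fy: float
    cx: float   # principal point in pixels
    cy: float
    width: int  # image size in pixels
    height: int

    def project(self, X):
        """Pixel (u, v) of world point X, or None if behind the camera or off-image."""
        xc = [sum(self.R[r][c] * X[c] for c in range(3)) + self.t[r] for r in range(3)]
        if xc[2] <= 0:
            return None
        u = self.fx * xc[0] / xc[2] + self.cx
        v = self.fy * xc[1] / xc[2] + self.cy
        if 0 <= u < self.width and 0 <= v < self.height:
            return (u, v)
        return None


def cameras_seeing_vertices(vertices, cameras):
    """Number of cameras whose image each vertex projects into (no occlusion test)."""
    return [sum(1 for cam in cameras if cam.project(X) is not None) for X in vertices]
```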

3.2.2. Projected 2D Features (PFs)

3.2.3. Vertices in Focus (ViFs)
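
Whether a vertex is in focus can be estimated from thin-lens depth-of-field formulas. With focal length f, f-number N and circle of confusion c, the hyperfocal distance is H = f²/(N·c) + f, and for a focus distance s the acceptably sharp range runs from s(H − f)/(H + s − 2f) to s(H − f)/(H − s), becoming infinite when s ≥ H. The sketch assumes all lengths in millimetres, a circle of confusion of 0.03 mm (a common full-frame value, chosen here as an assumption) and the vertex depth along the optical axis as its distance; it is an illustration, not the authors' ViFs code.

```python
import math


def depth_of_field(focal_mm, f_number, focus_mm, coc_mm=0.03):
    """Near and far limits of acceptable sharpness (thin-lens approximation)."""
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = focus_mm * (hyperfocal - focal_mm) / (hyperfocal + focus_mm - 2 * focal_mm)
    if focus_mm < hyperfocal:
        far = focus_mm * (hyperfocal - focal_mm) / (hyperfocal - focus_mm)
    else:
        far = math.inf  # focused at or beyond the hyperfocal distance
    return near, far


def vertex_in_focus(depth_mm, focal_mm, f_number, focus_mm, coc_mm=0.03):
    """True if a vertex at this depth along the optical axis is acceptably sharp."""
    near, far = depth_of_field(focal_mm, f_number, focus_mm, coc_mm)
    return near <= depth_mm <= far
```

For a 50 mm lens at f/8 focused at 1 m, these formulas give a sharp range of roughly 916 mm to 1100 mm, so a vertex 1.5 m from that camera would be flagged as out of focus.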

3.2.4. Vertices Seen from Parallel Cameras (VPCs)

3.2.5. Vertex Area of Visibility (VAVs)

4. Implementation

5. Testing and Results

5.1. Data Gathering

- By material: we had objects made from stone, ceramics, plastic, clay, wood and metal. This ensured a range of surface properties such as reflectivity, texture and color variation;

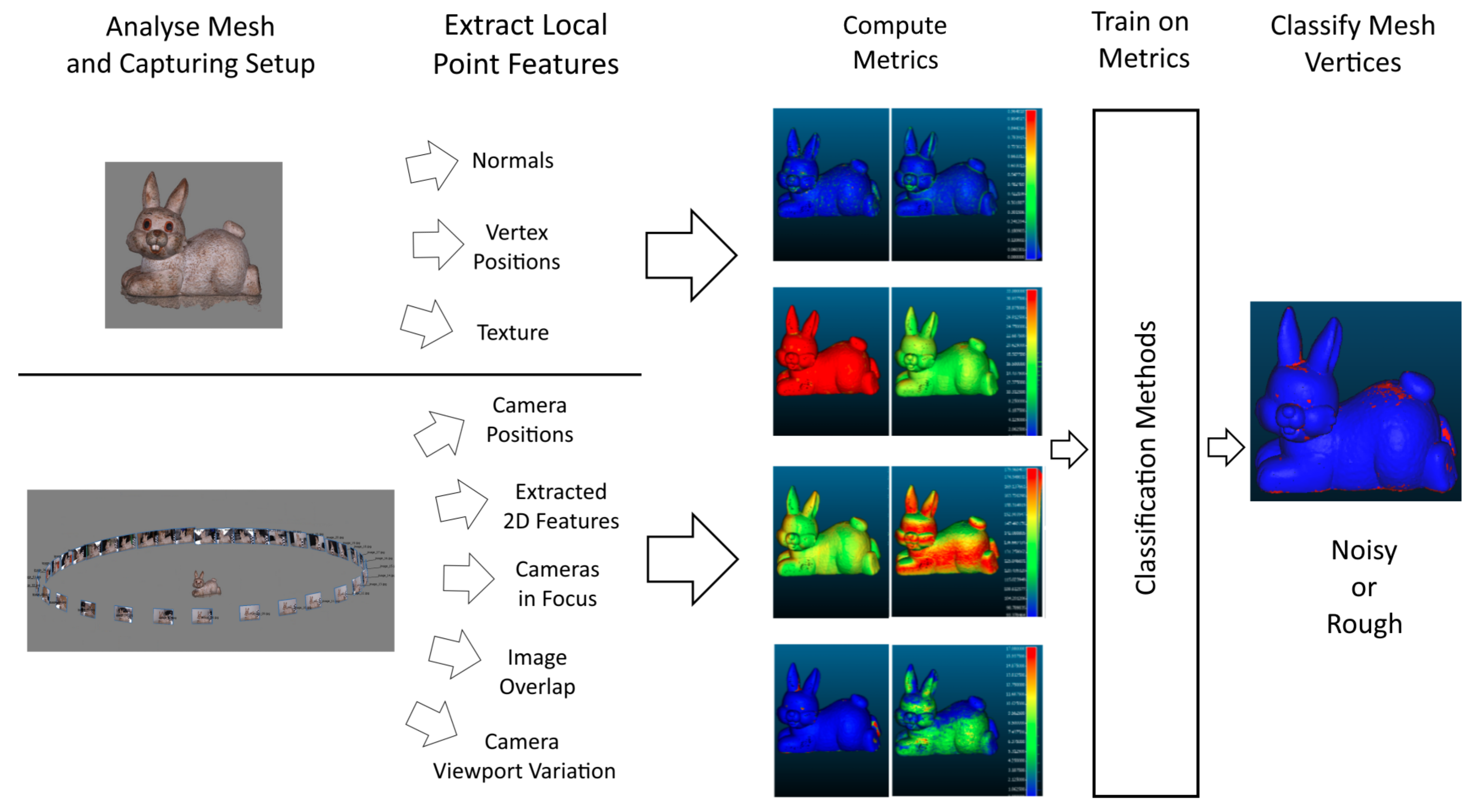

5.2. Correlation Analysis
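
The correlation between a metric and the presence of noise can be measured with the Pearson correlation coefficient, r = cov(x, y)/(σx·σy). When y is a binary noise label (1 for noisy, 0 for clean, an encoding assumed here), Pearson's r is the point-biserial correlation. A minimal dependency-free sketch:

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)
```

For example, `pearson([1, 2, 3, 4], [0, 0, 1, 1])` is about 0.894: larger metric values coincide with the noisy label. In practice, `x` would hold one metric's per-vertex values and `y` the annotated ground-truth labels.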

5.3. Initial Testing

5.4. Subset Testing

5.5. Industrial Context Test

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SfM | Structure from Motion |
| LRGC | Local Roughness from Gaussian Curvature |
| DON | Difference of Normals |
| VD | Vertex Local Spatial Density |
| VIE | Vertex Local Intensity Entropy |
| NCV | Number of Cameras Seeing Each Vertex |
| PF | Projected 2D Features |
| ViF | Vertices in Focus |
| VPC | Vertices Seen from Parallel Cameras |
| VAV | Vertex Area of Visibility |
| GC | Gaussian Curvature |
| SVM | Support Vector Machines |
| KNN | K-Nearest Neighbours |
| NB | Naive Bayes |
| DT | Decision Trees |
| RF | Random Forest |
| AB | AdaBoost |

| Observation | Metrics | Type |
|---|---|---|
| 1 | Local Roughness from Gaussian Curvature (LRGCm), Difference of Normals (DONm), Vertex Local Spatial Density (VDm) | Mesh-based |
| 2 | Vertex Local Intensity Entropy (VIEm) | Mesh-based |
| 3 | Number of Cameras Seeing Each Vertex (NCVs), Projected 2D Features (PFs) | Capturing Setup-based |
| 4 | Vertices in Focus (ViFs) | Capturing Setup-based |
| 5 | Vertices Seen from Parallel Cameras (VPCs), Vertex Area of Visibility (VAVs) | Capturing Setup-based |

| Method | Parameters |
|---|---|
| SVM | C = 8, kernel = linear, gamma = scale |
| RF | n_estimators = 150, max_depth = 10, min_samples_split = 3 |
| AB | n_estimators = 150, learning_rate = 0.5 |
| KNN | n_neighbors = 5, weights = uniform, algorithm = auto |
| NB | default parameters |
| DT | criterion = entropy, max_depth = 10, min_samples_split = 2 |

| Method | ACC | Precision | Recall | F1 |
|---|---|---|---|---|
| SVM | 0.816 | 0.569 | 0.842 | 0.679 |
| RF | 0.824 | 0.580 | 0.879 | 0.699 |
| AB | 0.851 | 0.630 | 0.844 | 0.742 |
| KNN | 0.812 | 0.568 | 0.789 | 0.660 |
| NB | 0.809 | 0.558 | 0.832 | 0.668 |
| DT | 0.824 | 0.578 | 0.885 | 0.699 |
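
The ACC, Precision, Recall and F1 columns follow the standard binary-classification definitions. The sketch below assumes that noisy vertices are the positive class (label 1); in the binary case it should agree with scikit-learn's `accuracy_score`, `precision_score`, `recall_score` and `f1_score`. The function names are illustrative.

```python
def f1_from(precision, recall):
    """Harmonic mean of precision and recall (0 when both are 0)."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def binary_scores(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1, with `positive` as the noise class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall, f1_from(precision, recall)
```

As a check on the F1 definition, `f1_from(0.569, 0.842)` evaluates to about 0.679, the value in the SVM row.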

| Testing Scenario | Description |
|---|---|
| 1 | and separately |
| 2 | All metrics, with and without the most correlated metric— |
| 3 | Mesh-based versus capturing setup-based metrics |
| 4 | Each capturing setup-based metric’s impact on the results |
| 5 | Impact on the results from different combinations of setup-based metrics |

| Subsets | ACC | Precision | Recall | F1 | Testing Scenario |
|---|---|---|---|---|---|
| Only | 0.723 | 0.492 | 0.652 | 0.574 | 1 |
| Only | 0.686 | 0.407 | 0.788 | 0.537 | 1 |
| All, without | 0.889 | 0.674 | 0.863 | 0.756 | 2 |
| All, with | 0.852 | 0.635 | 0.848 | 0.725 | 2 |
| , , | 0.828 | 0.592 | 0.833 | 0.692 | 3 |
| , , , | 0.837 | 0.611 | 0.822 | 0.701 | 3 |
| , , , | 0.707 | 0.425 | 0.753 | 0.544 | 3 |
| , , , | 0.840 | 0.615 | 0.829 | 0.706 | 4 |
| , , , | 0.838 | 0.615 | 0.809 | 0.699 | 4 |
| , , , | 0.837 | 0.612 | 0.811 | 0.698 | 4 |
| , , , | 0.839 | 0.614 | 0.824 | 0.704 | 4 |
| , , , | 0.831 | 0.603 | 0.799 | 0.701 | 4 |
| , , , , | 0.814 | 0.565 | 0.869 | 0.683 | 5 |
| , , , , , | 0.839 | 0.615 | 0.822 | 0.703 | 5 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Nikolov, I.; Madsen, C. Rough or Noisy? Metrics for Noise Estimation in SfM Reconstructions. Sensors 2020, 20, 5725. https://doi.org/10.3390/s20195725
