HFR Projector Camera Based Visible Light Communication System for Real-Time Video Streaming

Abstract

1. Introduction

2. Related Works

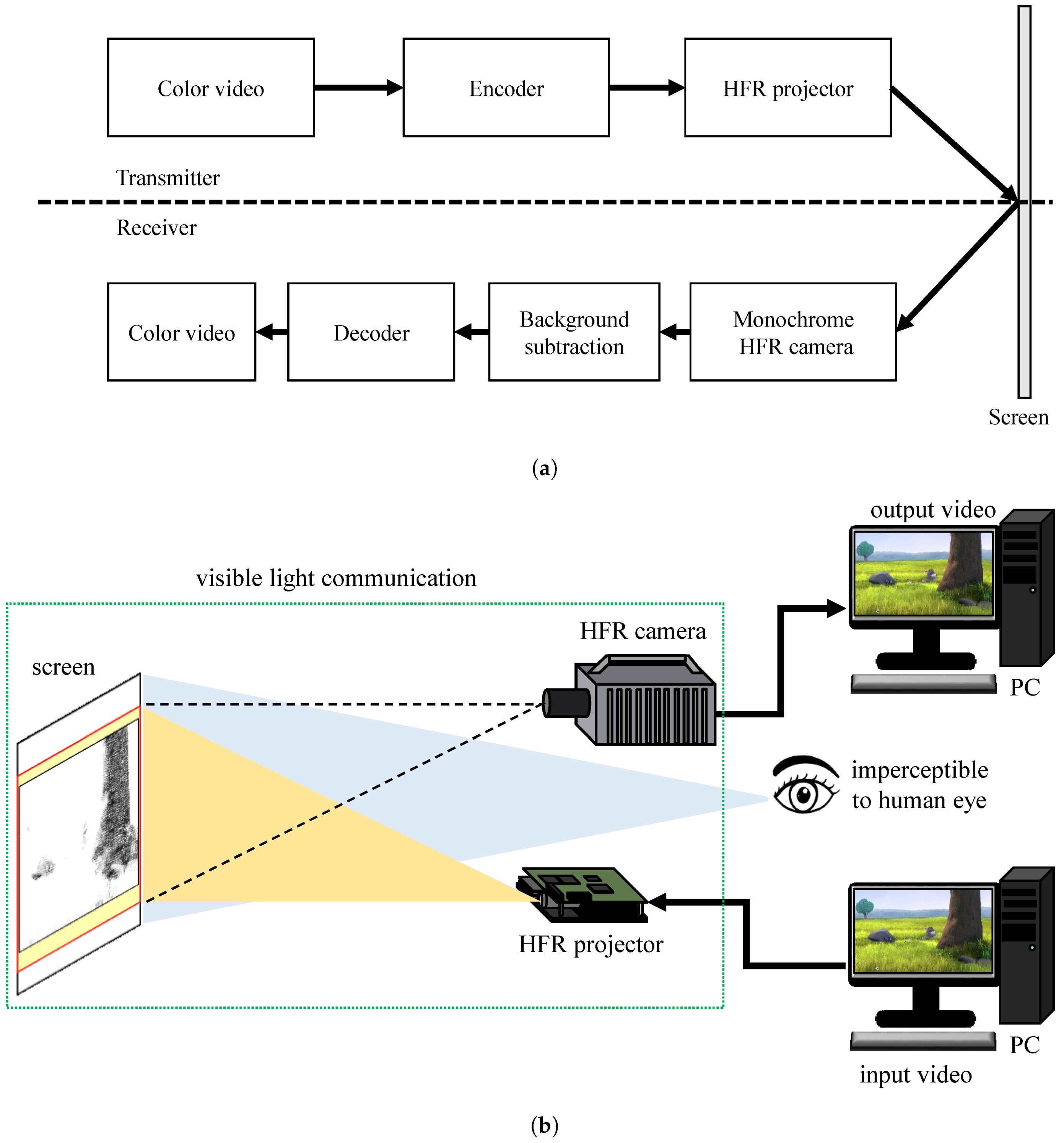

3. HFR Projector-Camera-Based VLC System

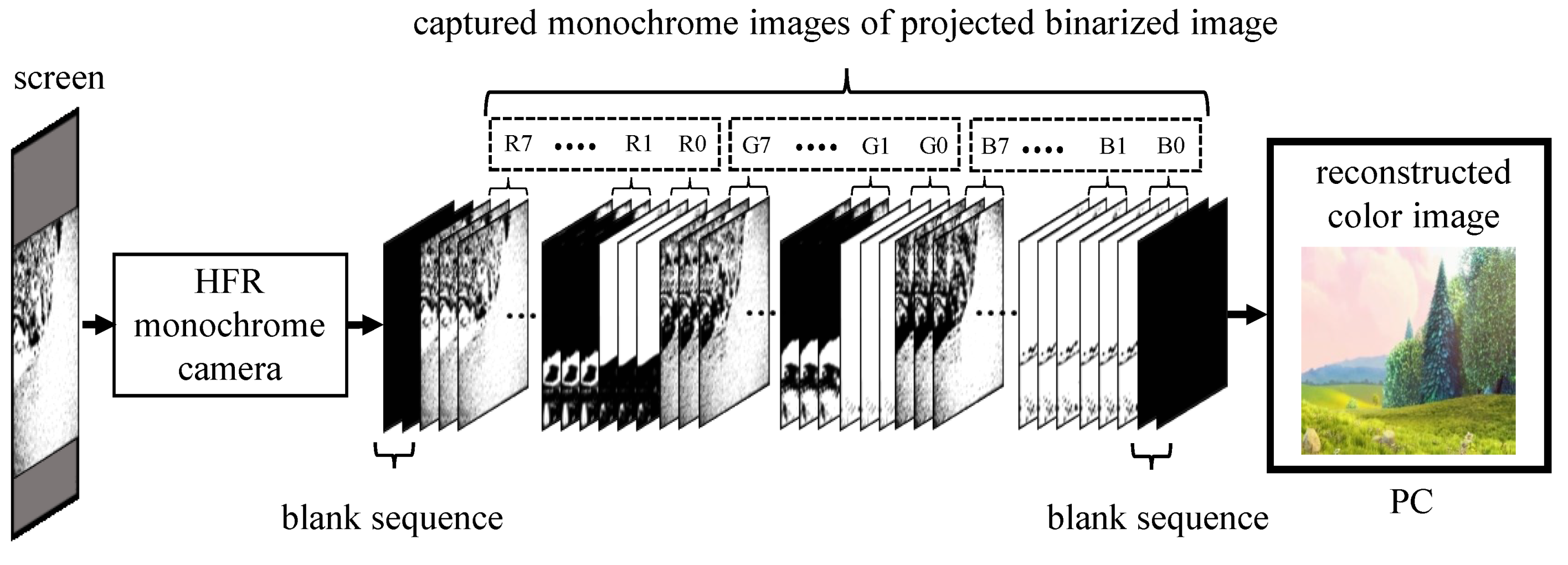

3.1. VLC System

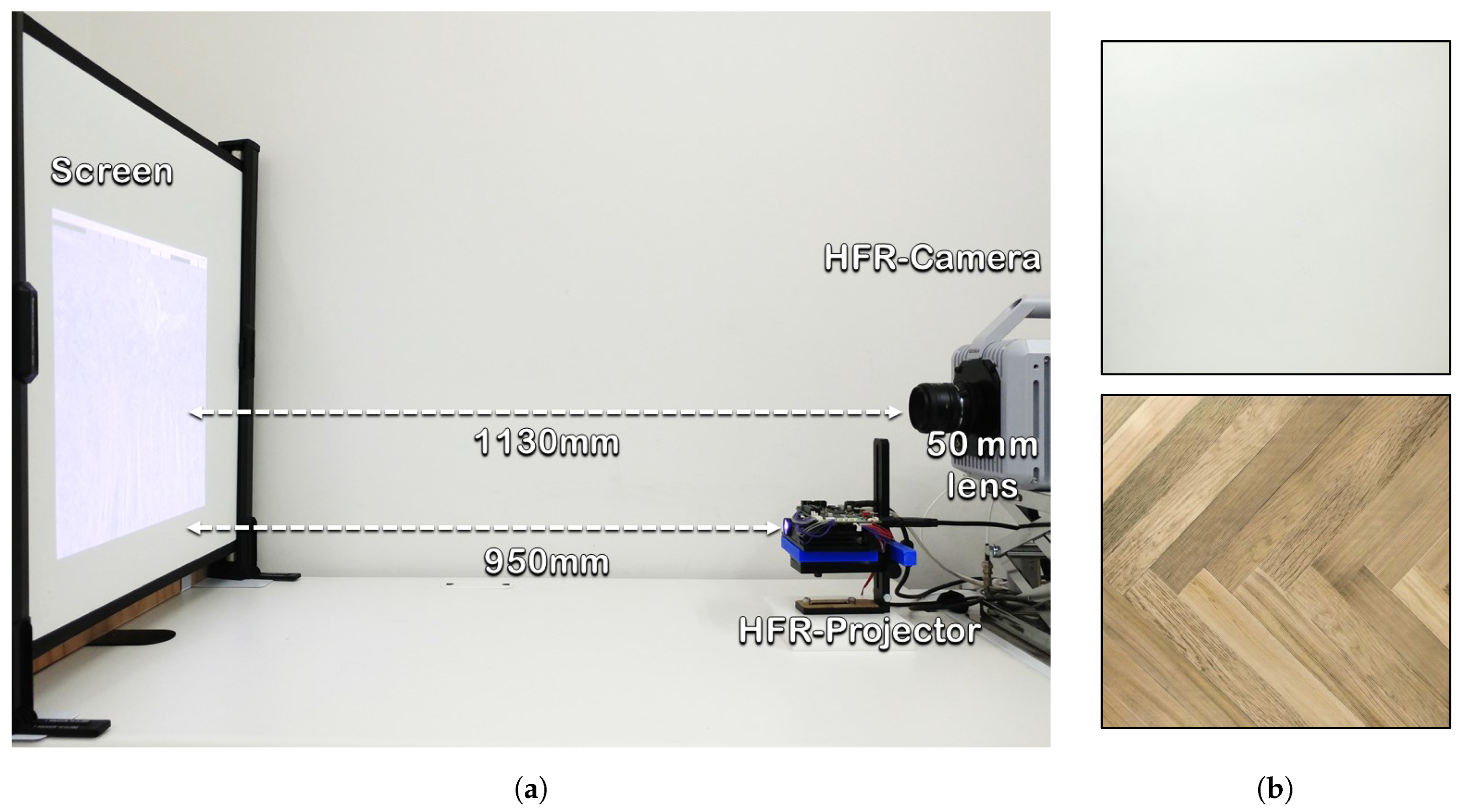

3.2. System Configuration

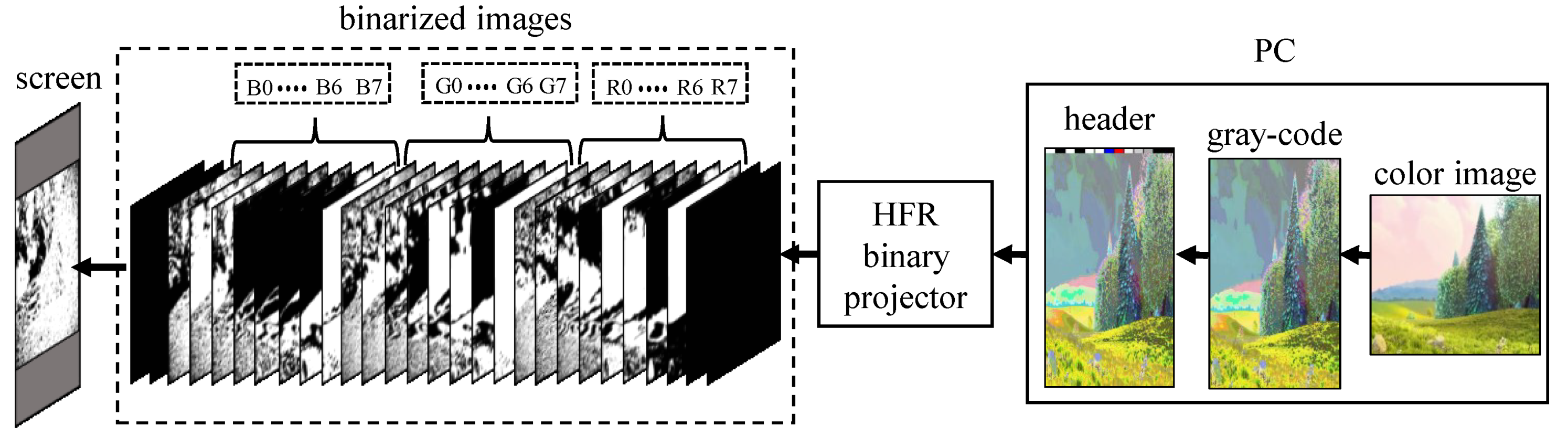

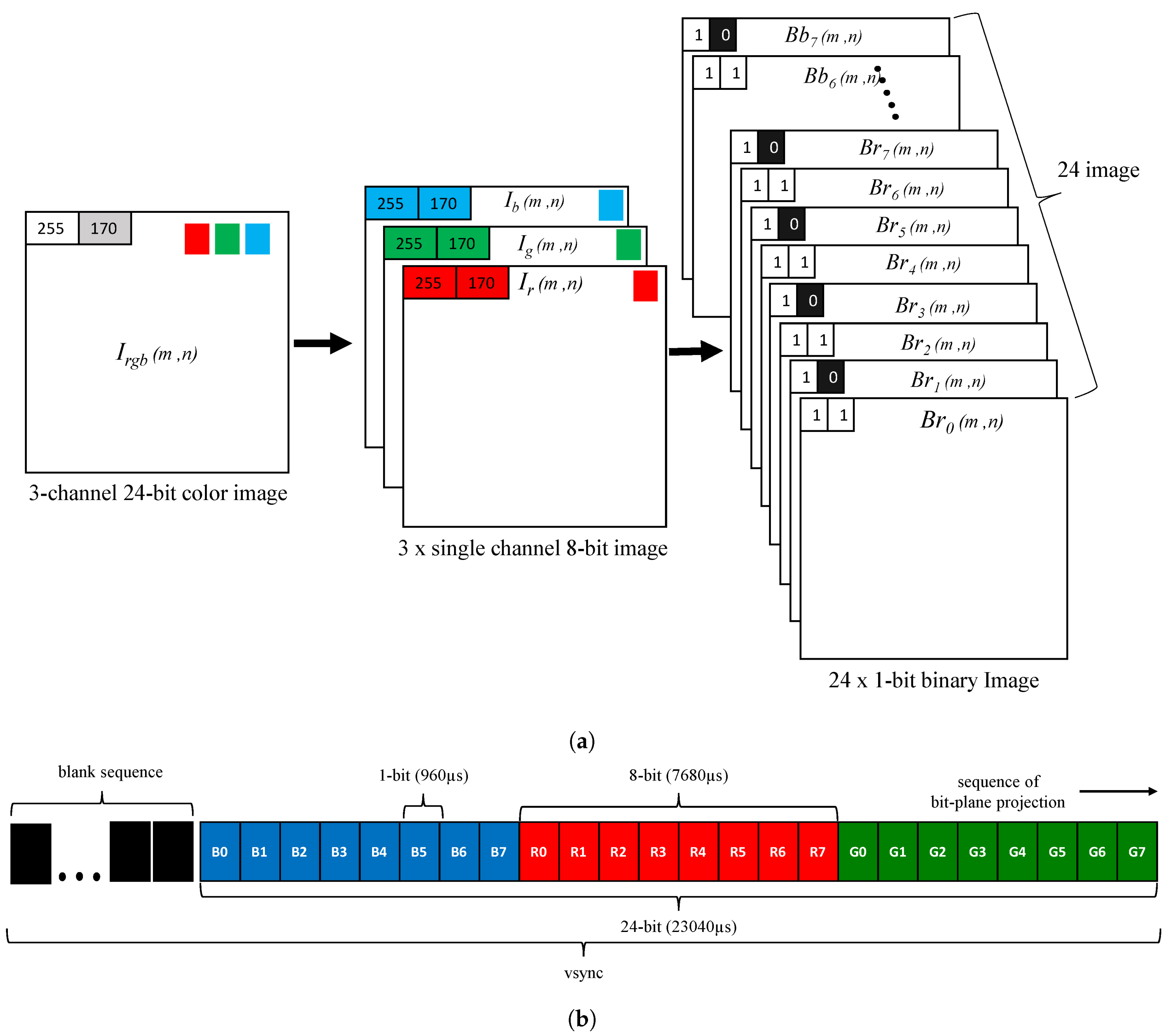

4. Transmitter Encoding System

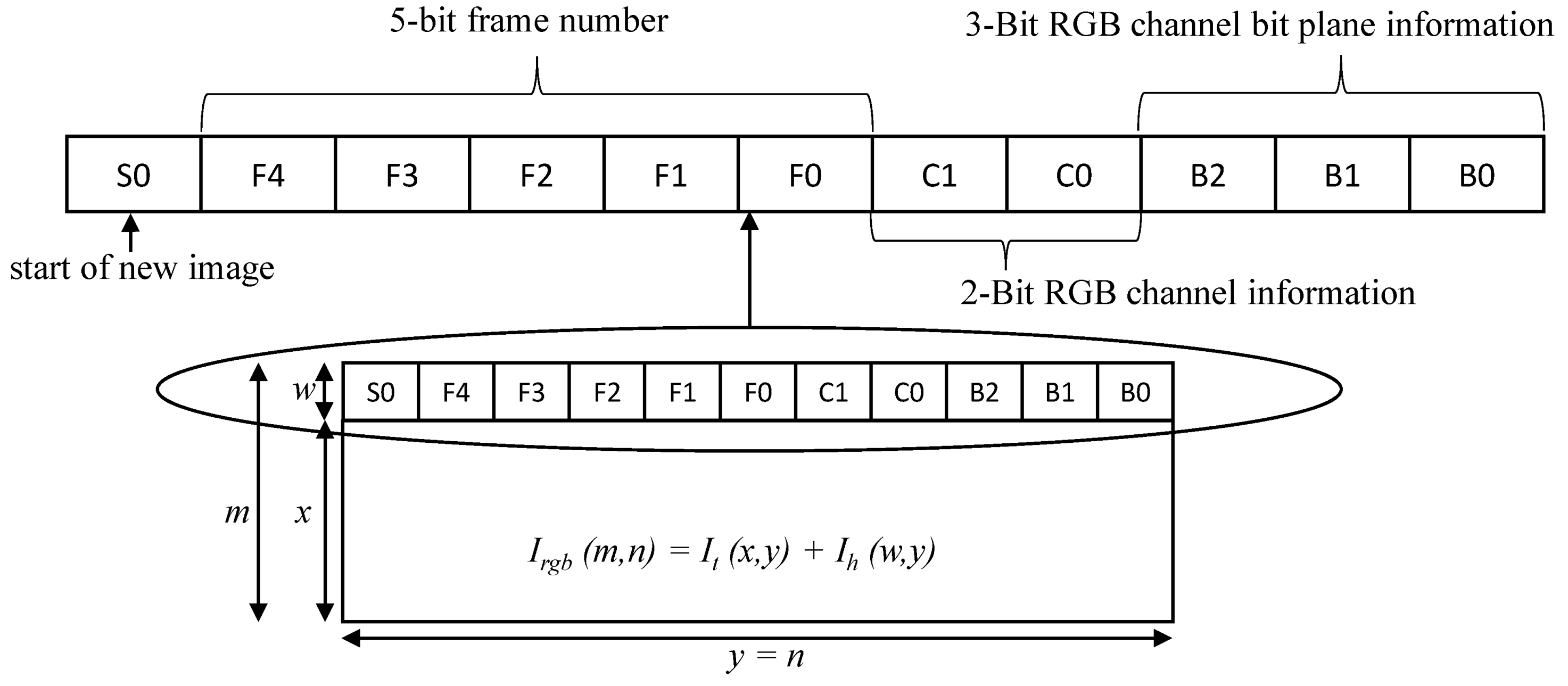

4.1. Header Information

4.2. Projection Pattern

4.3. Gray-Code Encoding

5. Receiver Decoding System

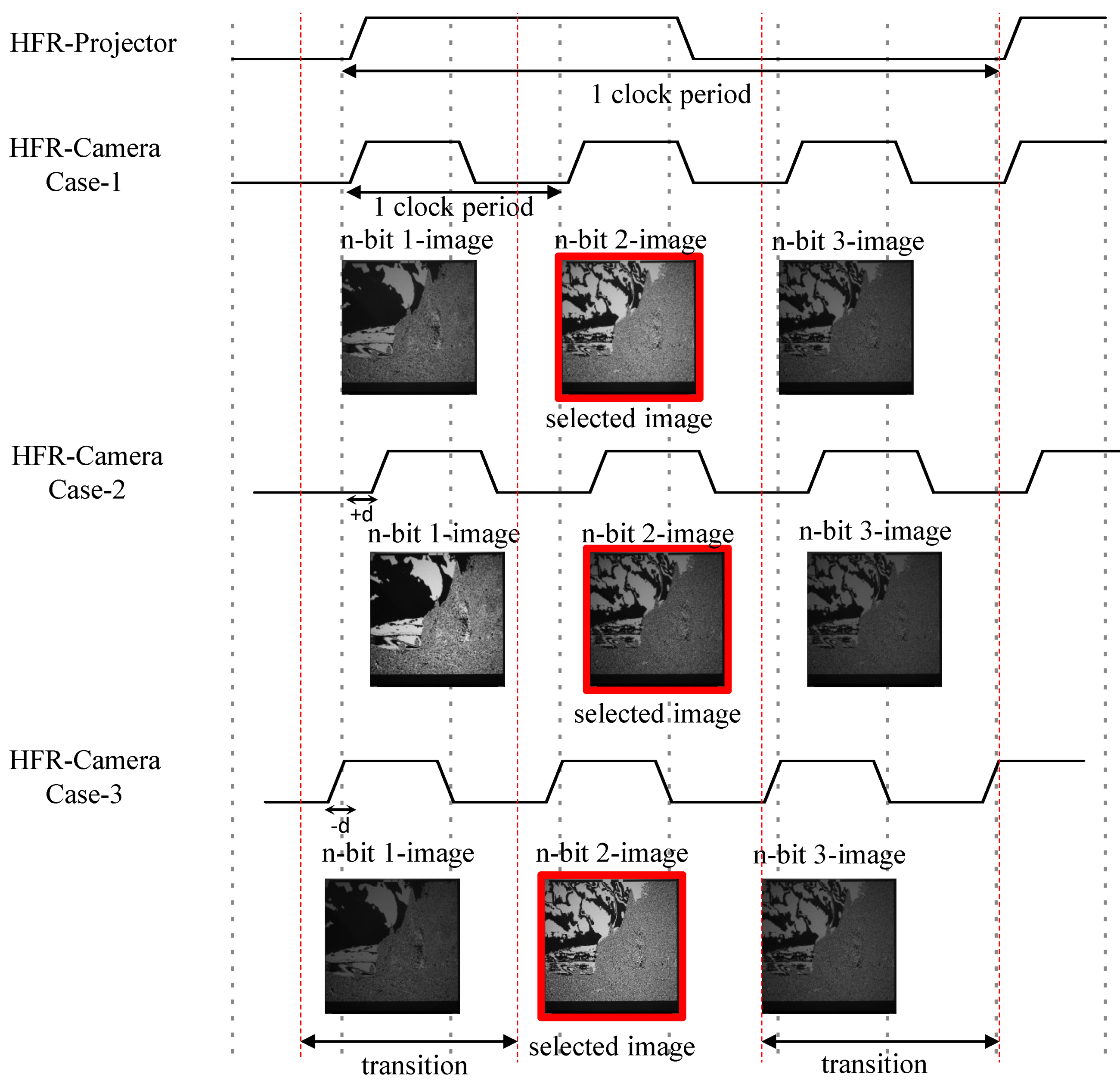

5.1. Software-Based Synchronization

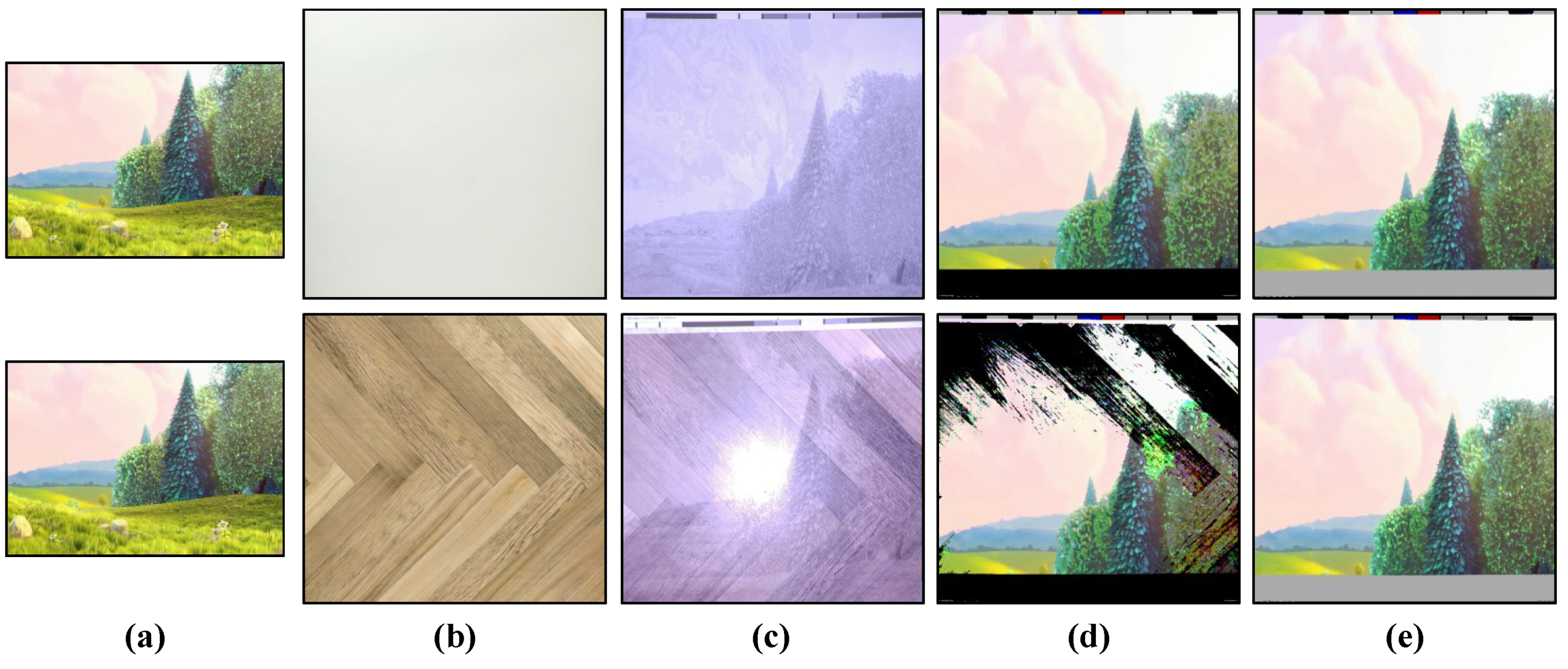

5.2. Background Subtraction

5.3. Synthesizing 24-Bit RGB Image

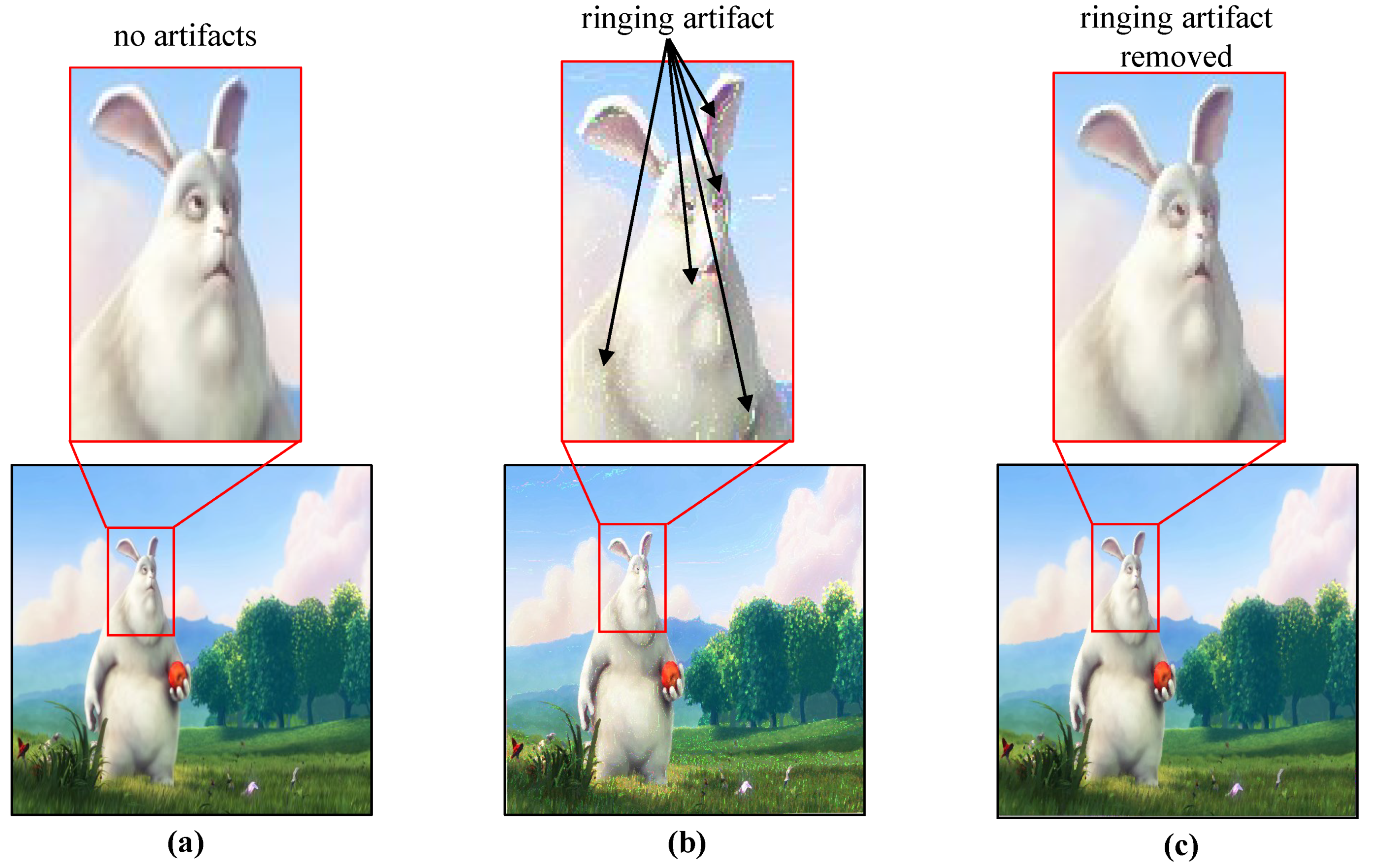

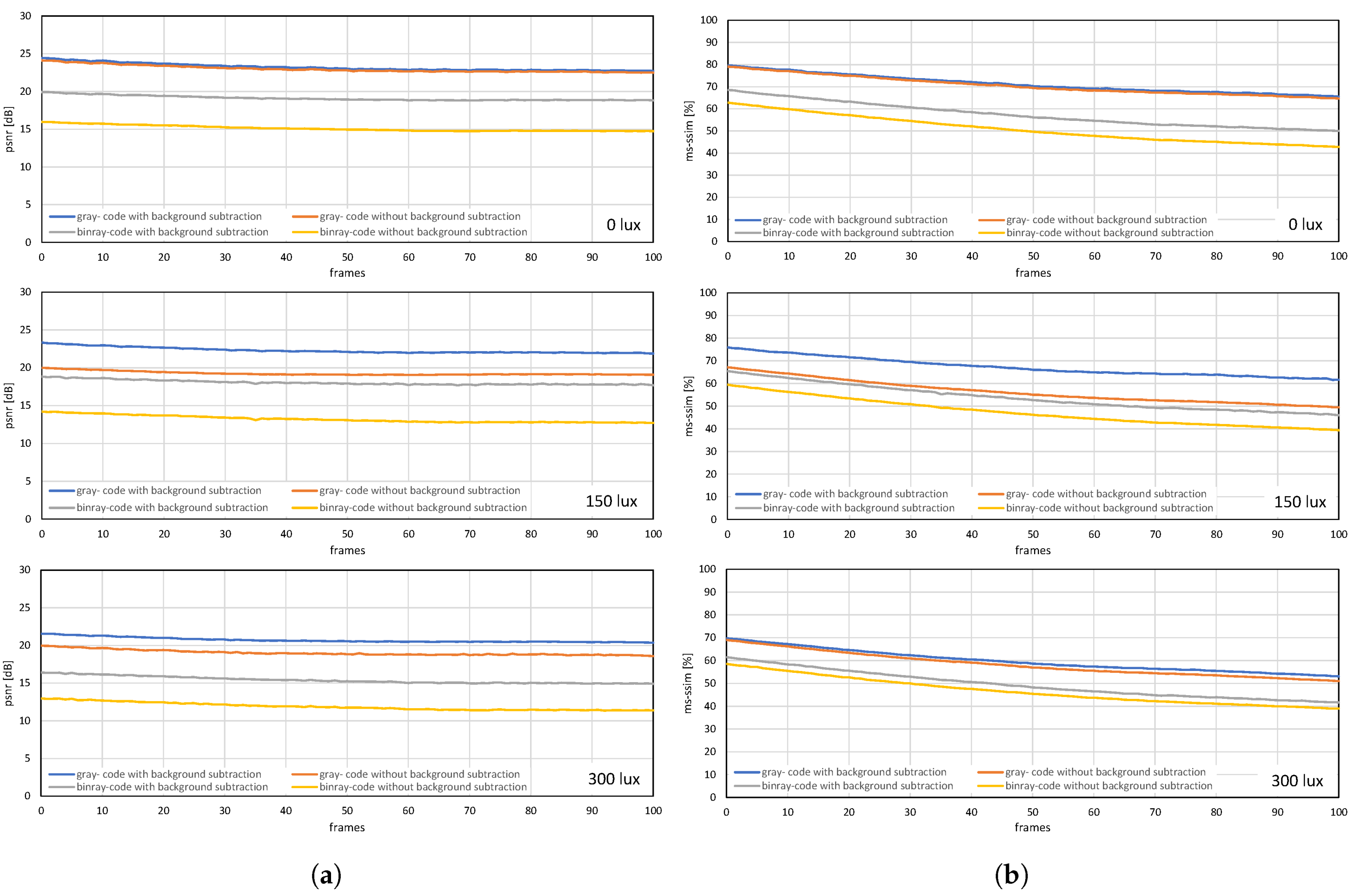

6. Image Quality in VLC

7. Experiments

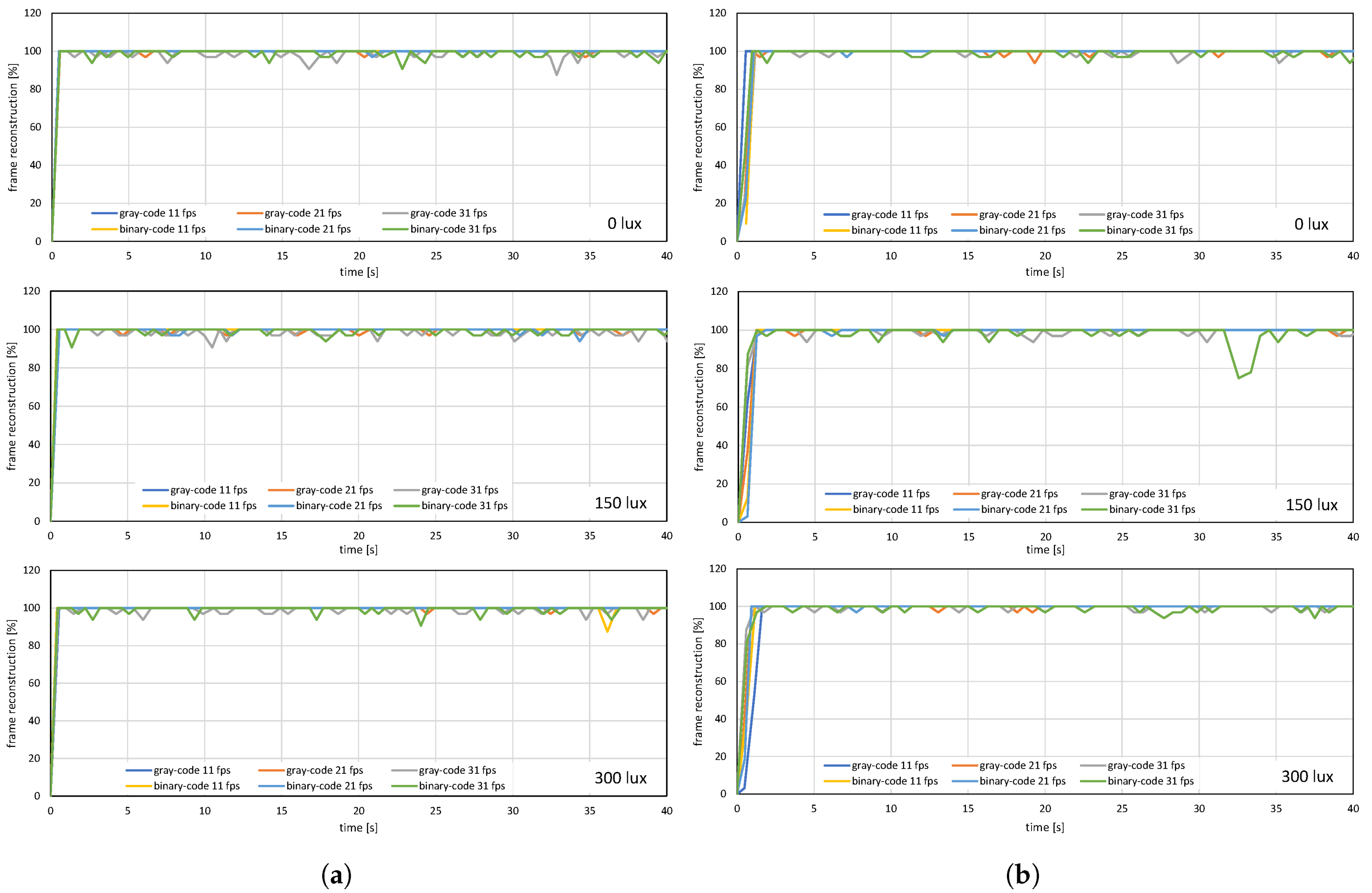

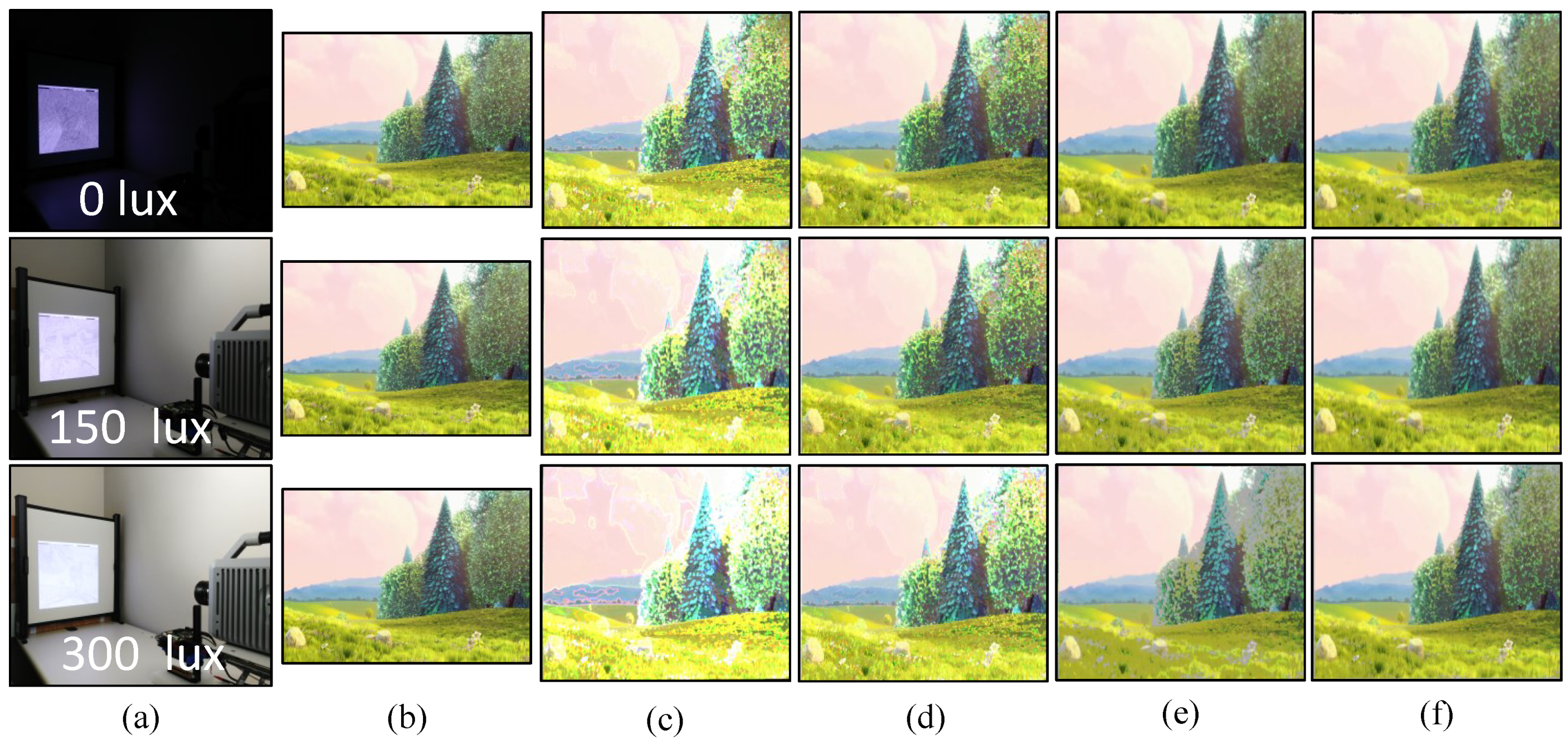

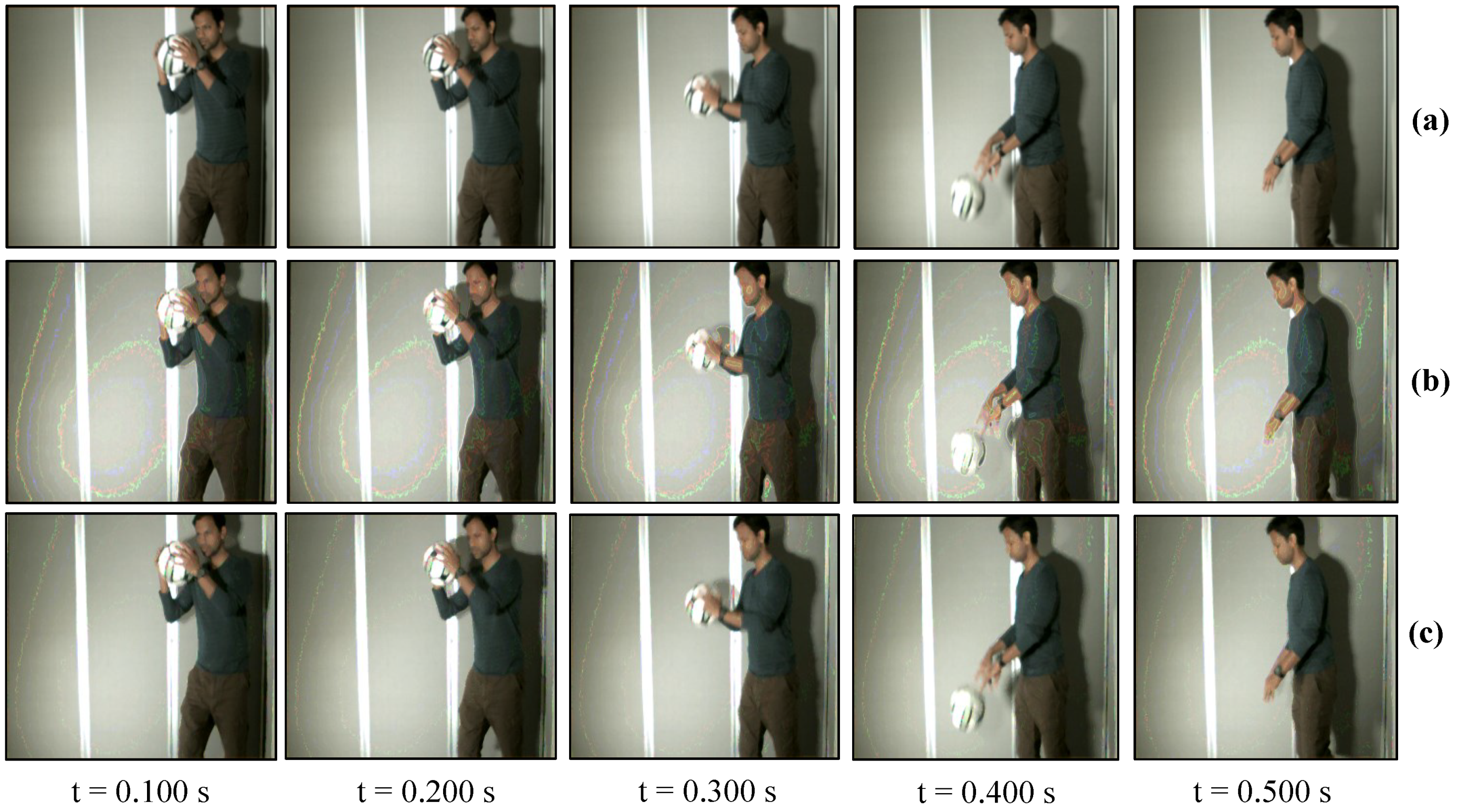

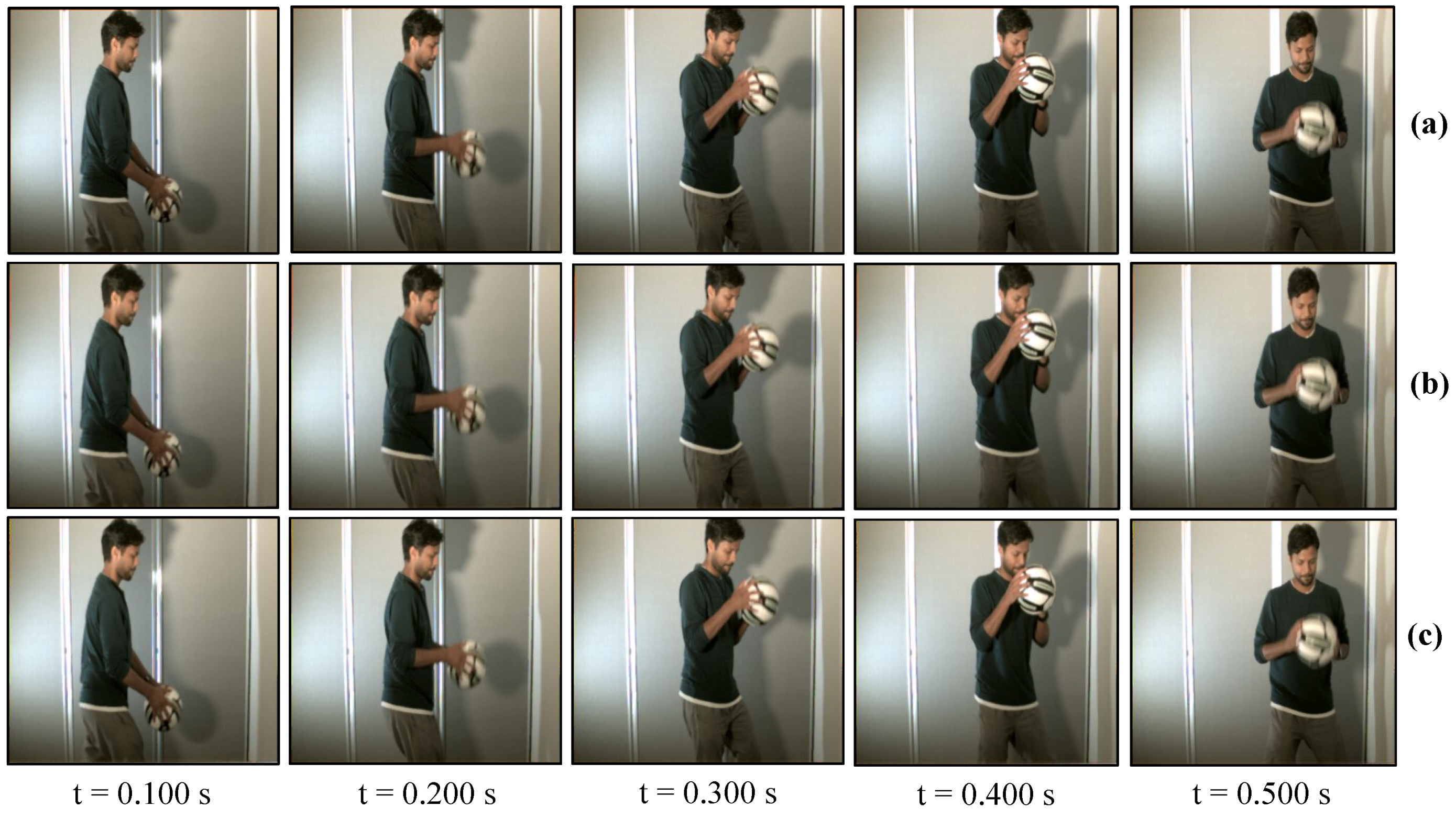

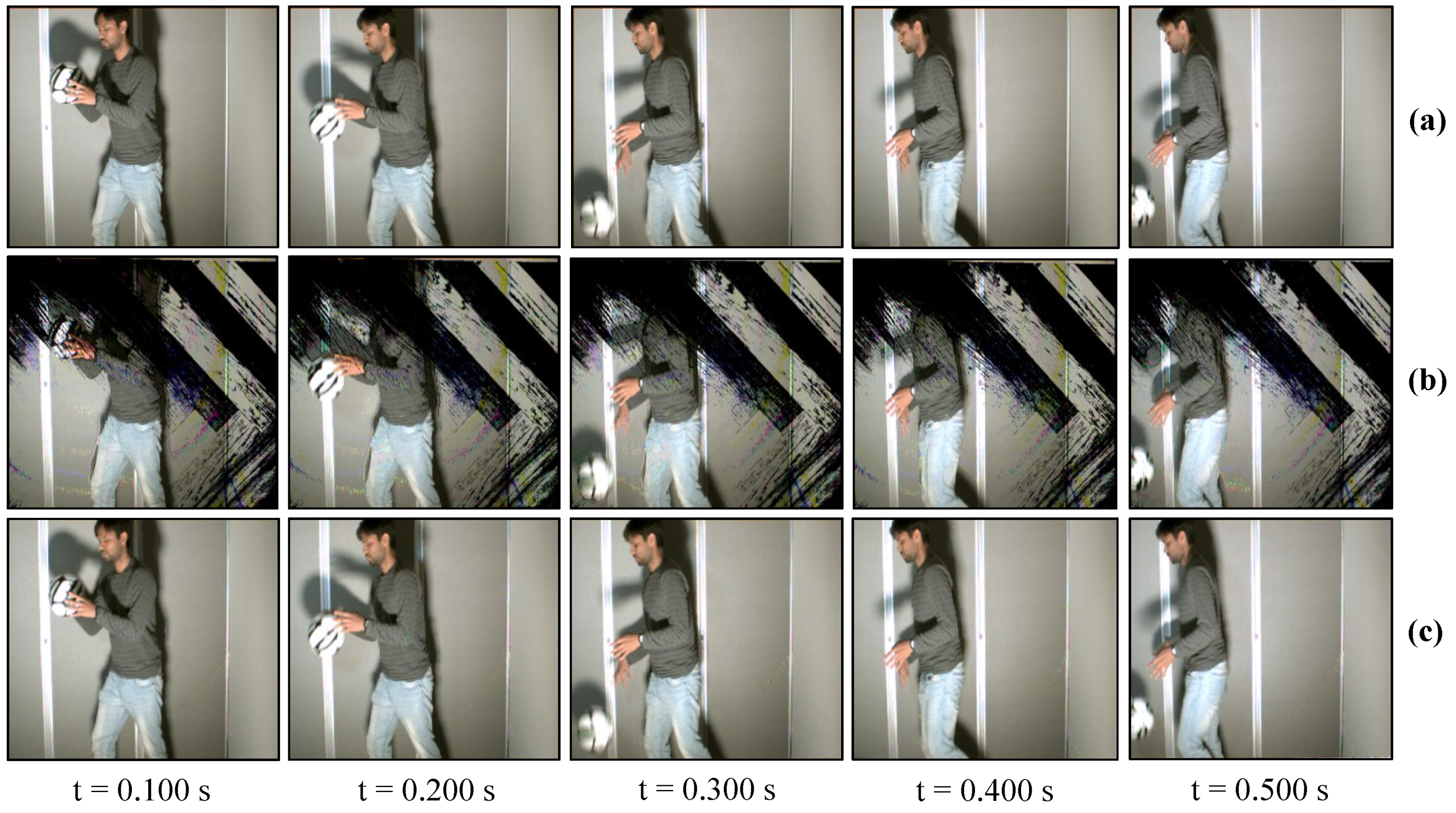

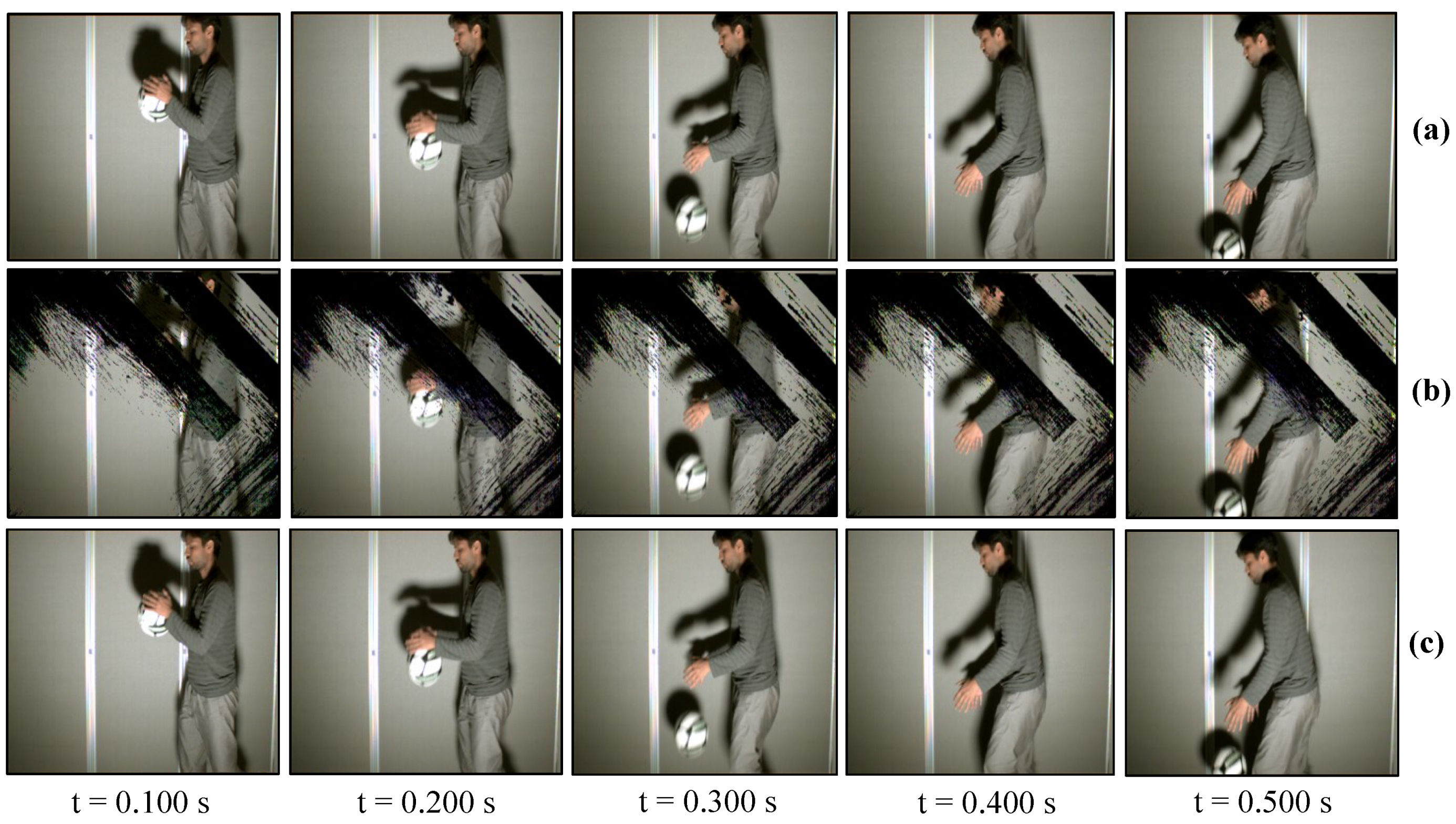

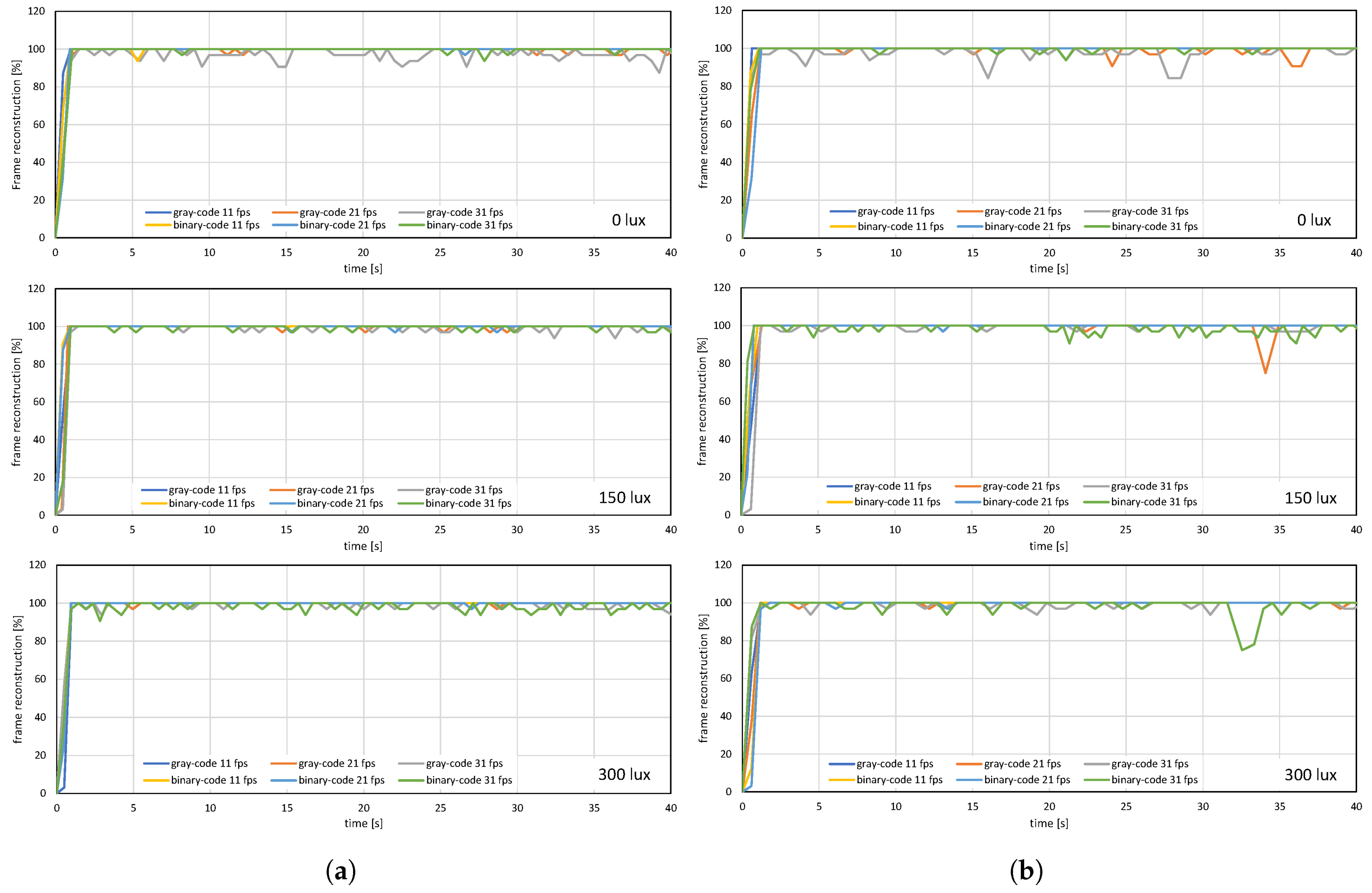

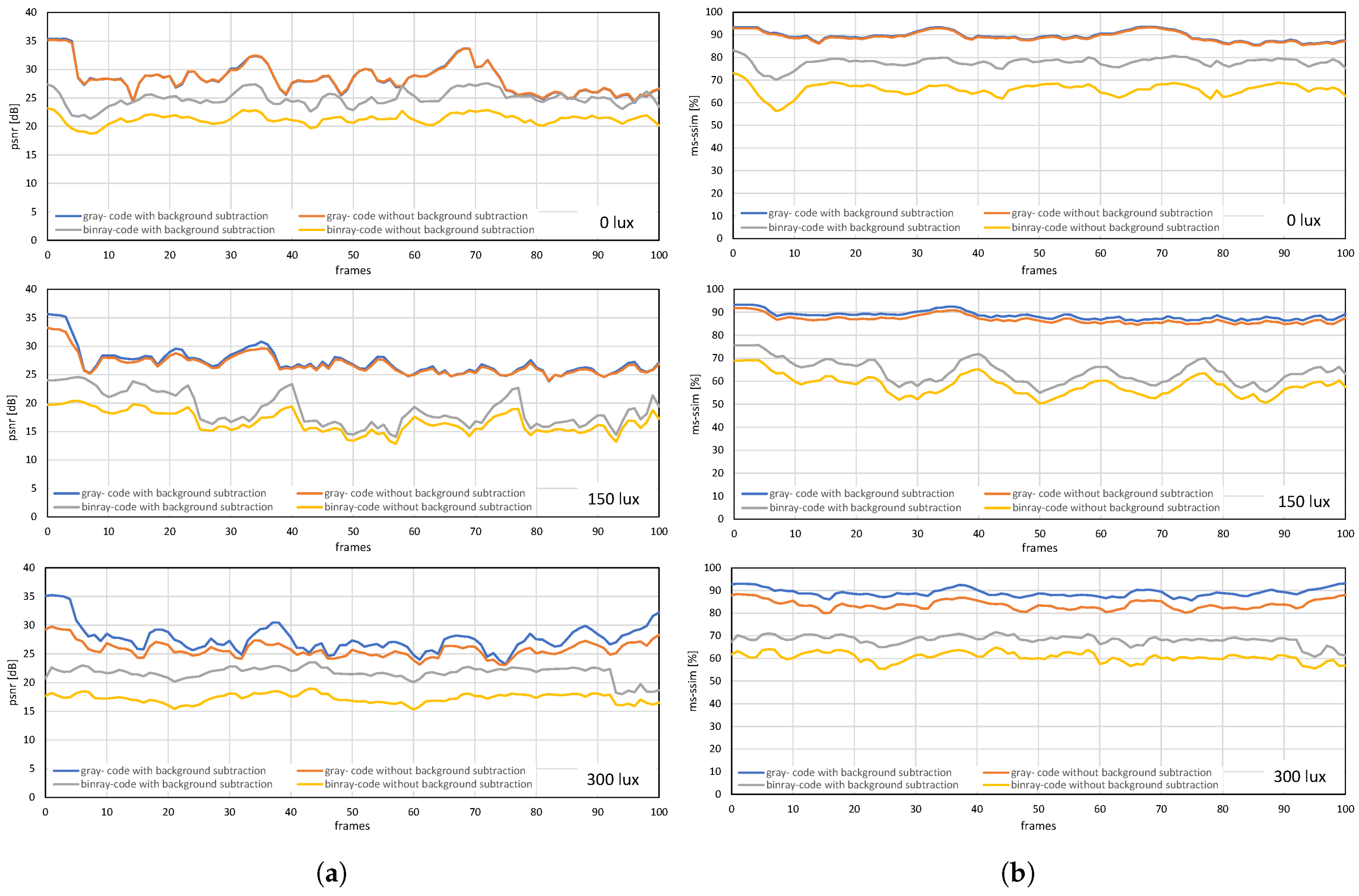

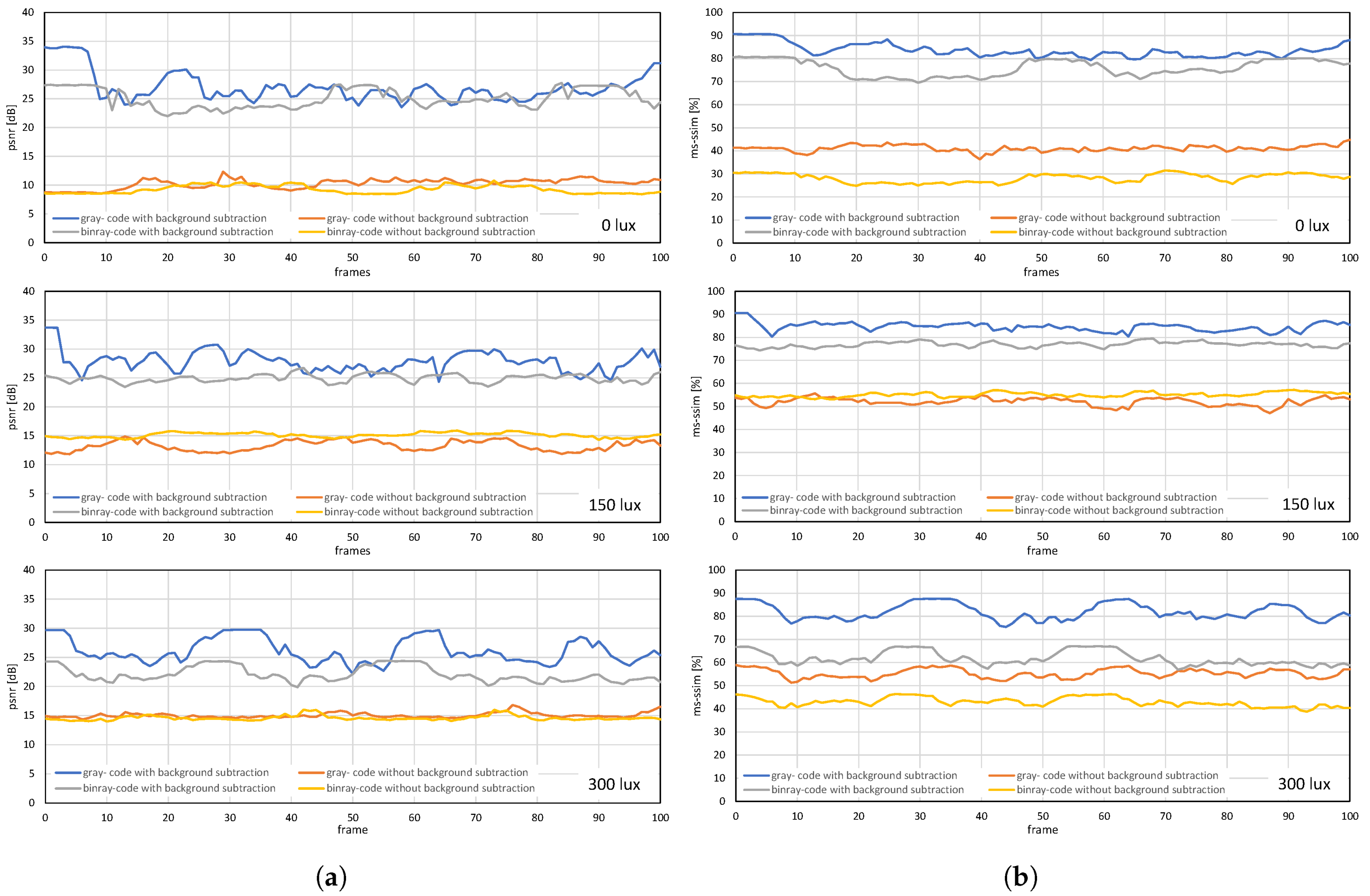

7.1. Real-Time Video Streaming—Stored Video Sequence

7.2. Real-Time Video Streaming—USB Camera

8. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Sharma, A.; Raut, S.; Shimasaki, K.; Senoo, T.; Ishii, I. HFR Projector Camera Based Visible Light Communication System for Real-Time Video Streaming. Sensors 2020, 20, 5368. https://doi.org/10.3390/s20185368
