Advances and Trends in Real Time Visual Crowd Analysis

Abstract

1. Introduction

2. Applications

- People counting in densely populated areas: The world's population is growing steadily, and maintaining public order in crowded places such as airports, carnivals, sports events, and railway stations is essential. Counting people is a key component of a crowd monitoring system (CMS). Particularly in confined areas, an increase in the number of people can cause problems such as fatalities and physical injuries, which early detection of overcrowding helps avoid. In crowd management, people counts provide accurate information about conditions such as blockages at particular points. Despite extensive research, counting methods still face various challenges, including varying illumination, occlusion, heavy clutter, and scale variation due to differing perspectives. Advances in CMS design have reduced these difficulties to some extent. Notable works addressing people counting through an efficient CMS are proposed in [15,16,17,18,19].

- Public Events Management: Events such as concerts, political rallies, and sports events are managed and analysed to avoid disastrous situations. Such analysis is particularly useful for managing available resources, for example by optimizing crowd movement and spatial capacity [20,21,22]. Crowd monitoring and management at religious events such as Hajj and Umrah is another important issue. Each year, millions of people from different parts of the world visit the Mosque of Makkah for Hajj and Umrah. Tawaf is an essential ritual during both, and at peak hours the crowd density in the Mataf is extremely high. Kissing the Black Stone is also a daunting task because of the large crowd. Controlling such a large crowd is challenging, and an efficient real time crowd management system is urgently needed on such occasions. Works proposing Hajj monitoring systems can be found in [4,5,6,7,8,23,24,25].

- Disaster Management: In overcrowded settings such as musical concerts and sports events, a portion of the crowd may surge in random directions, creating life-threatening conditions. In the past, large numbers of people have died of suffocation in crowded areas at public gatherings. Better crowd management at such events can help avoid these accidents [29,30,31].

- Suspicious-Activity Detection: Crowd monitoring systems are used to help prevent terror attacks at public gatherings. Traditional machine learning methods do not perform well in these situations. Methods for monitoring and detecting such activities can be found in [32,33,34,35].

- Safety Monitoring: Large numbers of CCTV systems are installed at places such as religious gatherings, airports, and other public locations, enabling better crowd monitoring. For example, [36] developed a system that analyzes behaviors and congestion time slots to ensure safety and security. Similarly, [37] presents a method to detect dangers through analysis of crowd density. Improved surveillance systems that generate graphical reports from crowd analysis, including crowd flow in different directions, are proposed in [38,39,40,41,42,43].

3. Motivations

- When two or more objects come close to each other and merge (occlusion), it is hard to recognize each object individually. Consequently, monitoring becomes difficult and the accuracy of the system is hard to measure.

- These systems also face non-uniform arrangements of objects that are close to each other, a condition called clutter. Clutter is closely related to image noise and makes recognition and monitoring more challenging [43].

- Irregular object distribution is another serious problem faced by CMS. When the density distribution across a video or image varies, the condition is called irregular object distribution, and crowd monitoring under it is challenging [44].

- Another main problem faced by real time crowd monitoring systems is aspect ratio. In real time scenarios, a camera is often mounted on a drone that captures videos and images of the crowd under observation. To address the aspect ratio problem, the drone is flown at a specific height above the ground and the camera is mounted so that it captures a top view of the crowd, which addresses the aspect ratio problem.

4. Contributions

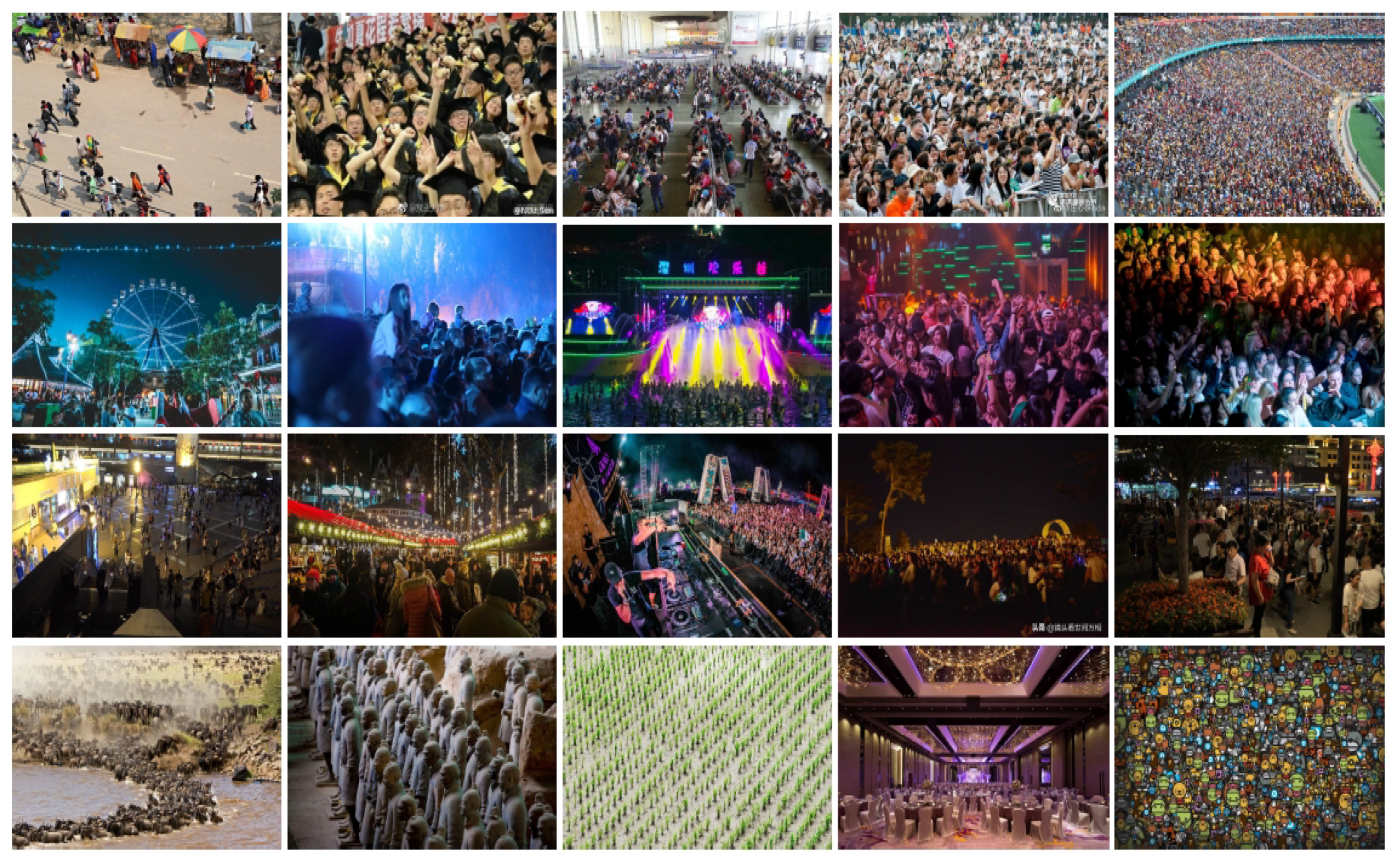

5. Databases

- Mecca [45]: This dataset was collected in the holy city of Makkah, Saudi Arabia, during Hajj, when millions of Muslims pray around the central Kaaba each year. The video clip is short, only 10 min long, and records the period when pilgrims enter the area around the Kaaba and occupy it. The cameras are fixed in three directions: south, east, and west. Because the videos are recorded by multiple cameras, they are synchronized. The start date and time are recorded along with other information. Ground truth is created by labelling the pedestrians in every grid cell. The Mecca dataset contains 480 frames in total, from which 8640 grid images are obtained.

- Kumbh Mela [46]: The Kumbh Mela dataset was collected with a drone camera at the holy Hindu festival in Allahabad, India, and can be used both for crowd counting and for modelling religious gatherings. Kumbh Mela is a mass Hindu pilgrimage held every 12 years, at which Hindus gather at the Sangam, the confluence of the Ganges, the Yamuna, and the invisible Saraswati. The last festival, held in 2013, drew a crowd of 120 million people. All videos were captured in densely populated areas by a drone-mounted camera flying above the crowd. This is a large dataset, recorded over around 6 h and consisting of 600K frames.

- NWPU-crowd [47]: Most publicly available datasets are small-scale and cannot meet the needs of methods based on deep convolutional neural networks (DCNNs). To address this, a large-scale dataset for both crowd counting and localization has recently been introduced. NWPU-Crowd consists of around 5K images in which 2,133,375 heads are annotated. Compared to other datasets, NWPU-Crowd covers a large density range and various illumination conditions. The data were collected both from the Internet and by self-shooting, using a very diverse collection strategy; typical scenes include resorts, malls, walking streets, stations, plazas, and museums.

- Beijing Bus Rapid Transit (BRT) [48]: The Beijing BRT database contains 1280 images captured by surveillance cameras fixed at various Beijing bus stations. The authors defined a fixed split: 720 images for training and the remaining 560 for testing. To make the database challenging, images with shadows, sunshine interference, glare, and other factors are included.

- UCF-QNRF [49]: UCF-QNRF is a more recent dataset with 1535 images and massive variation in density. Its image resolution is large (400 × 300 to 9000 × 6000) compared to other SOA datasets. At the time of its introduction, it was the largest dataset for dense crowd counting, localization, and density map estimation, with the highest number of crowd images, which makes it particularly useful for newly introduced deep learning methods; annotations are provided. The images were collected from Hajj footage, Flickr, and web search. The dataset includes a large variety of scenes with very diverse densities, viewpoints, and lighting variations, as well as buildings, sky, roads, and vegetation, as found in realistic scenes captured in unconstrained conditions. As a result, the dataset is both more difficult and more realistic.

- ShanghaiTech [50]: This dataset was introduced for comparatively large-scale crowd counting. It contains 1198 images with 330,165 annotated heads, making it one of the largest datasets in terms of annotated heads available for training and evaluation. The dataset consists of two parts, Part A and Part B. Part A consists of 482 images taken from the Internet, whereas Part B consists of 716 images collected from metropolitan streets in Shanghai. The training and evaluation sets are defined by the authors: Part A has 300 images for training and the remaining 182 for evaluation, and Part B has 400 images for training and 316 for testing. The authors made the dataset challenging by including diverse scenes and varying density levels. Because the images span different density levels that are not uniformly distributed, the training and testing splits are strongly biased.

- WorldExpo [44]: This dataset is used for cross-scene crowd management scenarios. It consists of 3980 frames with a total of 199,923 labeled pedestrians. The authors of WorldExpo perform data-driven cross-scene counting in crowded scenes. All videos were collected by 108 surveillance cameras, and scene diversity is ensured because the cameras have disjoint bird's-eye views. The training set consists of 1127 one-minute videos from 103 scenes, and the testing set consists of five one-hour videos collected from five different scenes. Due to its limited data, the dataset is not sufficient for evaluating approaches designed for densely crowded scenes.

- UCF_CC_50 [43]: This is a comparatively difficult dataset, as it covers various scenes and a wide variety of densities. The images were collected from places such as stadiums, concerts, and political protests. It contains only 50 annotated images, with an average of about 1279 individuals per image, so only limited images are available for training and evaluation. The number of individuals per image varies from 94 to 4543, showing large variation across images. Because the number of images is limited, the authors adopt a cross-validation protocol for training and testing, and both 10-fold and 5-fold cross-validation experiments are performed. Due to its complex nature, results reported so far for recent deep learning based methods on this dataset are still far from optimal.

- Mall [52]: The Mall dataset was collected by surveillance cameras installed in a shopping mall. It has the same total number of frames (2000) as the University of California at San Diego (UCSD) dataset. Compared to UCSD, little variation in the scenes can be seen. The dataset covers various density levels and different activity patterns, with both static and moving crowds. Severe perspective distortions are present in the videos, causing variation in both the appearance and the size of objects. Some occlusion is also caused by scene objects such as indoor plants and stalls. Training and testing sets are defined: the first 800 frames are used for training and the remaining 1200 frames for testing.

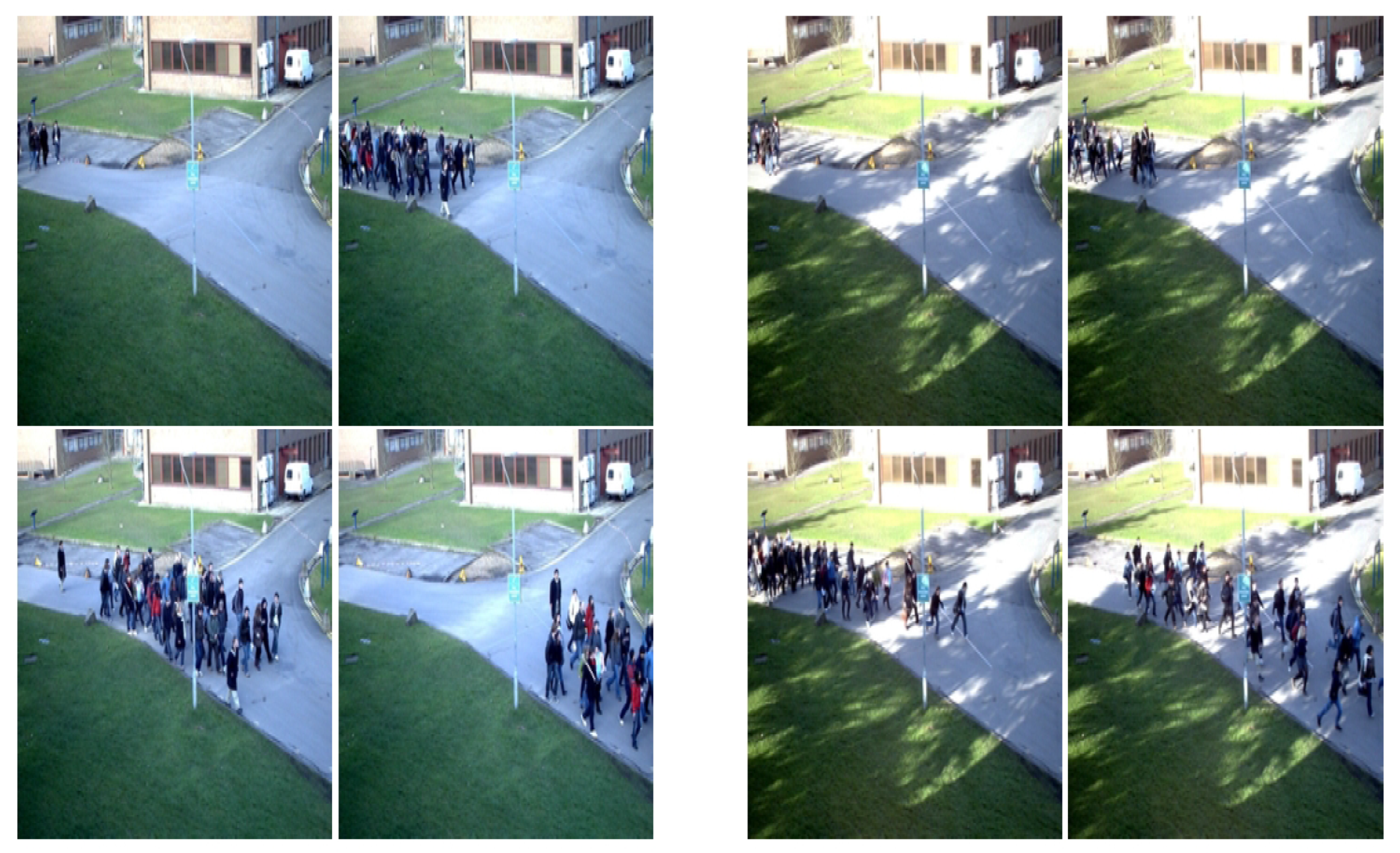

- PETS [53]: This is a comparatively old dataset, but it is still used in research because of its diverse and challenging nature. The videos were collected by eight cameras installed on a campus. Because the dataset targets surveillance applications, the videos are complex. It is mostly used for counting, and labels are provided for all video sequences. PETS contains three kinds of movement, each comprising 221 frames, and the pedestrian density levels covered are light and medium.

- UCSD [54]: UCSD is the first dataset used for counting people in a crowded place. The data were collected by a camera installed over a pedestrian walkway at the University of California at San Diego (UCSD), USA. Annotation is provided for every fifth frame, and linear interpolation is used to annotate the remaining frames. A region of interest is defined to exclude irrelevant objects such as trees and cars. The dataset contains 2000 frames and 49,885 pedestrian annotations. The training set consists of frames 600 to 1399, and the testing set contains the remaining 1200 frames. The dataset is comparatively simple, with an average of about 25 people per frame (49,885 / 2000). Because it is collected from a single location, the videos show little complexity and no variation in scene perspective.
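UCSD annotates only every fifth frame and fills in the rest by linear interpolation. A minimal sketch of that step for a single pedestrian is shown below, assuming the same person has been identified on consecutive key frames and that positions are (x, y) pixel coordinates; the function name and the coordinates are hypothetical and not part of the dataset's release.

```python
import numpy as np

def interpolate_track(key_frames, key_xy, all_frames):
    """Linearly interpolate one pedestrian's (x, y) position between annotated key frames.

    key_frames: frame indices that carry a manual annotation (e.g. every fifth frame)
    key_xy:     (x, y) position of the same pedestrian on each key frame
    all_frames: frame indices for which a position is wanted
    """
    key_frames = np.asarray(key_frames, dtype=float)
    key_xy = np.asarray(key_xy, dtype=float)
    frames = np.asarray(list(all_frames), dtype=float)
    x = np.interp(frames, key_frames, key_xy[:, 0])
    y = np.interp(frames, key_frames, key_xy[:, 1])
    return np.stack([x, y], axis=1)

# Hypothetical annotations on frames 0, 5 and 10 for one pedestrian.
track = interpolate_track([0, 5, 10], [(10.0, 20.0), (20.0, 20.0), (20.0, 30.0)], range(11))
# Position at frame 2 is x = 14.0, y = 20.0; at frame 7 it is x = 20.0, y = 24.0.
```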

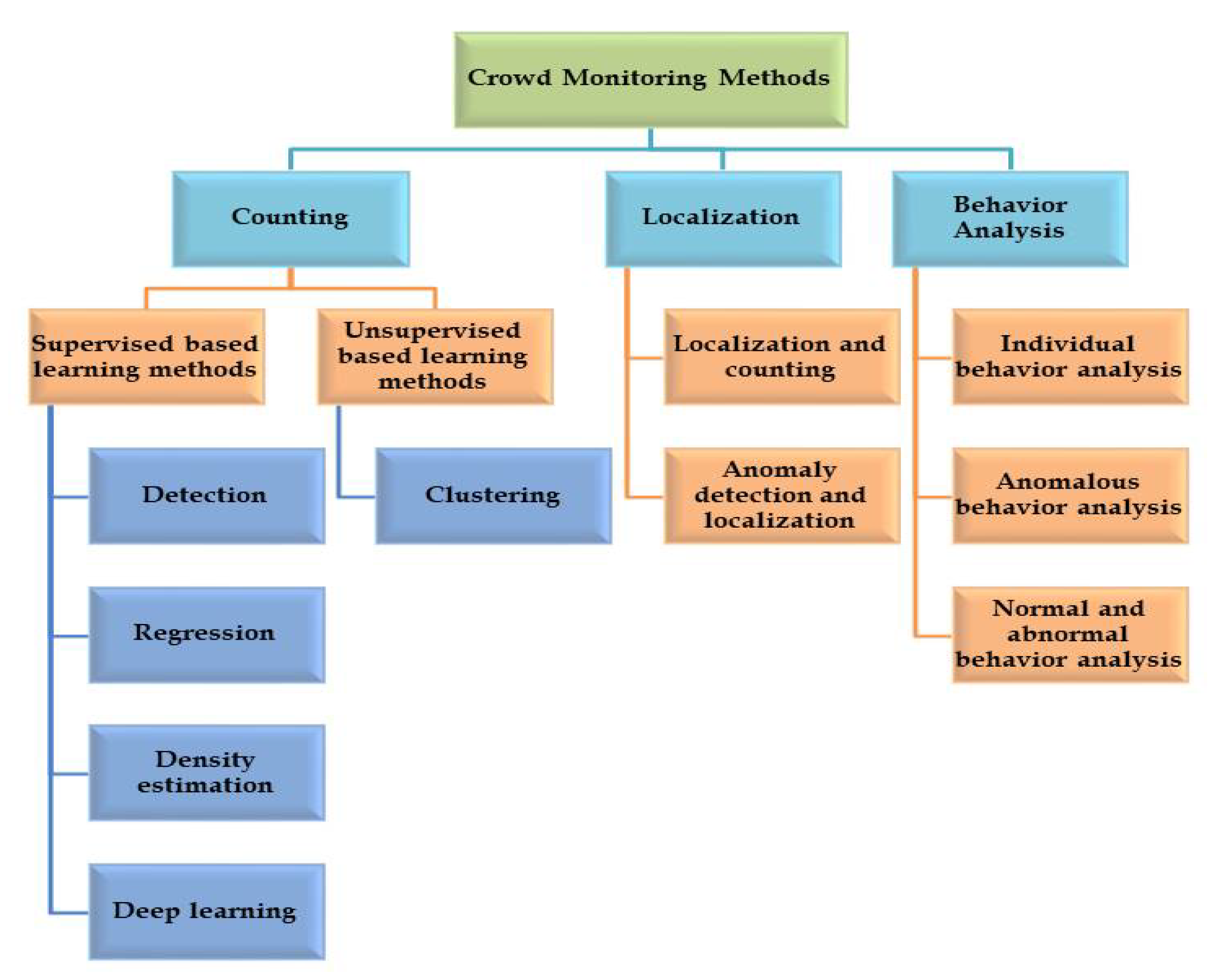

6. Approaches

6.1. Localization

6.2. Crowd Behaviour Detection

6.3. Counting

- Supervised learning based methods:

- Counting by detection methods: A window of suitable size slides over the entire scene (video/image) to detect people. For detection, researchers have used features such as histogram of oriented gradients (HOG) [67], shapelets [68], Haar features [69], and edgelets [70], and have exploited various machine learning strategies [71,72], but most of these methods fail on highly crowded scenes. Zhao et al. [73] use 3D shape modeling and report much better results than SOA methods, and this work is further enhanced by Ge and Collins [74]. More papers on counting by detection can be found in [75,76,77]. These methods fail when crowd density is high, and the performance of detection-based methods also drops when a scene is highly cluttered.

- Regression based methods: Regression based methods address the high density and clutter problems faced by detection-based methods. They work in two steps: feature extraction and regression modelling. Feature extraction includes background subtraction, which is used to extract foreground information, and better results have been reported using blobs as features [39,54,78]. Local features capture edge and texture information; examples include gray level co-occurrence matrices (GLCMs), local binary patterns (LBP), and HoG. In the next stage, the extracted features are mapped to counts using regression methods such as Gaussian process regression, linear regression, and ridge regression [79]. Idrees et al. [43] combine Fourier transform and SIFT features. Chen et al. [39] extract features from sparse image samples and map them to a cumulative attribute space, which helps in handling imbalanced data. More methods addressing the counting problem can be found in [15,16,17,39,80]. Regression based methods alleviate the occlusion and clutter problems faced by detection-based methods; however, they still fail to capitalize on spatial information.

- Estimation based methods: Lempitsky et al. [56] introduce a method that incorporates spatial information through a linear mapping of local features, in which local patch features are mapped to object density maps. The authors learn this mapping by convex quadratic optimization solved with a cutting-plane algorithm. Pham et al. [40] propose a non-linear mapping from image patches using random forest (RF) regression, which addresses the variation invariance challenge faced by earlier methods. Wang and Zou [38] address computational complexity through a subspace learning method. Xu and Qiu [81] apply an RF regression model to head counting. More estimation based algorithms can be found in [56,82]. We further divide density-level algorithms into three categories:
  - Low-level density estimation methods: These include optical flow, background segmentation, and tracking methods [83,84], which are based on motion elements obtained by frame-by-frame modeling that paves the way for object detection. More low-level methods can be found in [85,86,87].
  - Middle-level density estimation methods: At this level, the patterns in the data depend on the classification algorithms used.
  - High-level density estimation methods: These use dynamic texture models [88] and are the dominant crowd modeling methods.

- Deep learning based methods (DLMs): Compared to TMLMs, recently introduced DLMs have brought large performance improvements in various visual recognition tasks [89,90,91,92,93]. TMLMs rely on handcrafted features, whereas DLMs learn features directly from data. Researchers have therefore also explored DLMs for crowd counting. Wang et al. [47] perform experiments with AlexNet on densely crowded scenes. Fu et al. [94] classify images into five density levels: very low, low, medium, high, and very high. Walach and Wolf [95] present a cross counting model in which layered boosting of CNNs is used to estimate the residual error; the method also performs selective sampling, which reduces the effect of low quality samples such as outliers. Zhang et al. [50] propose a multi-column DCNN for crowd counting, in which three columns with different filter sizes cater to different head sizes. Li et al. [96] use dilated DCNNs to better understand highly congested scenes. Zhang et al. [73] present another crowd counting method based on scale-adaptive DCNNs, proposing a multi-column DCNN regression model. The method proposed in [97] uses spatio-temporal DCNNs for counting in crowded video scenes. Shang et al. [98] propose another regression based model, and Xu et al. [81] exploit information at a much deeper level for counting in complex scenes.

- Unsupervised learning based methods:

- Clustering: These methods assume that certain visual features and motion fields are uniform, and they group similar features into categories. For example, the work proposed in [18] uses a Kanade–Lucas–Tomasi (KLT) tracker to obtain comparatively low-level features and then employs Bayesian clustering [99] to approximate the number of people in a scene. Such algorithms model appearance-based features. They produce false estimates when people are static. In short, clustering methods perform well on continuous image frames. Additional methods can be found in [18,99,100,101].
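The counting-by-detection pipeline described in Section 6.3 (slide a window, score it, keep confident detections, count them) can be sketched as follows. This is a minimal illustration under stated assumptions: the window score is a hypothetical stand-in (mean intensity) for a trained HOG, Haar, shapelet, or edgelet classifier, duplicate detections are removed with greedy non-maximum suppression, and all function names and thresholds are illustrative rather than taken from any cited work.

```python
import numpy as np

def sliding_windows(image, win, stride):
    """Yield (top, left, score) for every window position.

    The score is a hypothetical stand-in (mean intensity); a real system would
    score HOG, Haar, shapelet, or edgelet features with a trained classifier.
    """
    h, w = win
    for top in range(0, image.shape[0] - h + 1, stride):
        for left in range(0, image.shape[1] - w + 1, stride):
            yield top, left, float(image[top:top + h, left:left + w].mean())

def window_iou(a, b, win):
    """Intersection over union of two equally sized windows given as (top, left, ...)."""
    h, w = win
    inter_h = max(0, min(a[0], b[0]) + h - max(a[0], b[0]))
    inter_w = max(0, min(a[1], b[1]) + w - max(a[1], b[1]))
    inter = inter_h * inter_w
    return inter / (2 * h * w - inter)

def count_by_detection(image, win=(8, 8), stride=2, score_thresh=0.5, nms_iou=0.3):
    """Keep windows above a score threshold, apply greedy non-maximum suppression, count survivors."""
    candidates = [c for c in sliding_windows(image, win, stride) if c[2] >= score_thresh]
    candidates.sort(key=lambda c: c[2], reverse=True)  # best-scoring windows first
    kept = []
    for cand in candidates:
        if all(window_iou(cand, k, win) < nms_iou for k in kept):
            kept.append(cand)
    return len(kept), kept

# Toy scene: two bright 8x8 "people" on a dark background.
scene = np.zeros((40, 40))
scene[4:12, 4:12] = 1.0
scene[24:32, 24:32] = 1.0
count, detections = count_by_detection(scene)  # count == 2
```

Because every person must be found as a separate window, the sketch degrades where the text says detection-based methods do: in dense or cluttered scenes, overlapping people fall under one window or are suppressed by non-maximum suppression.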
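For the regression based methods in Section 6.3, a minimal sketch of the two-step pipeline (feature extraction, then regression) is shown below. It assumes a static background image for subtraction, uses two hypothetical blob-style features (foreground area and boundary length), and fits closed-form ridge regression, one of the regressors listed in that section; the synthetic frames are toy data, not a benchmark.

```python
import numpy as np

def foreground_features(frame, background, diff_thresh=0.2):
    """Hypothetical hand-crafted features from background subtraction.

    Returns [foreground area, foreground boundary length], where the boundary length
    is the number of horizontal and vertical foreground/background transitions.
    """
    mask = np.abs(frame - background) > diff_thresh
    area = mask.sum()
    boundary = (mask[:, :-1] != mask[:, 1:]).sum() + (mask[:-1, :] != mask[1:, :]).sum()
    return np.array([area, boundary], dtype=float)

def fit_ridge(X, y, lam=1e-3):
    """Closed-form ridge regression; a bias column is appended (and, for brevity, also regularized)."""
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    return np.linalg.solve(Xb.T @ Xb + lam * np.eye(Xb.shape[1]), Xb.T @ y)

def predict_count(w, X):
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    return Xb @ w

def make_frame(k, size=60):
    """Synthetic frame with k non-touching 4x4 blobs standing in for people."""
    frame = np.zeros((size, size))
    for i in range(k):
        r, c = 5 + 10 * (i // 5), 5 + 10 * (i % 5)
        frame[r:r + 4, c:c + 4] = 1.0
    return frame

background = np.zeros((60, 60))
train_counts = np.arange(7, dtype=float)  # frames with 0..6 people
X_train = np.stack([foreground_features(make_frame(int(k)), background) for k in train_counts])
w = fit_ridge(X_train, train_counts)
estimate = predict_count(w, foreground_features(make_frame(8), background)[None, :])[0]  # close to 8
```

The regression maps global features to a single number, so it never says where the people are; this is the missing spatial information that motivates the estimation based methods.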
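The estimation based methods in Section 6.3 replace a single count with a density map whose sum over any region gives the count in that region. The sketch below builds such a map from point annotations by placing a normalized Gaussian at each head, which is a common way of producing ground-truth density maps; the fixed kernel width `sigma` and the head coordinates are assumptions for illustration, and learning the feature-to-density mapping of [56] is not shown.

```python
import numpy as np

def density_map(shape, heads, sigma=2.0):
    """Ground-truth-style density map: one Gaussian per annotated head.

    Each kernel is renormalized inside the image, so every head contributes
    exactly 1 and the map sums to the number of annotated heads.
    """
    H, W = shape
    ys, xs = np.mgrid[0:H, 0:W]
    density = np.zeros(shape, dtype=float)
    for r, c in heads:
        g = np.exp(-((ys - r) ** 2 + (xs - c) ** 2) / (2.0 * sigma ** 2))
        density += g / g.sum()
    return density

def count_in_region(density, top, left, bottom, right):
    """Count in a rectangular region = sum (discrete integral) of the density over it."""
    return float(density[top:bottom, left:right].sum())

heads = [(10, 10), (10, 12), (30, 40), (0, 0)]  # hypothetical (row, col) annotations
D = density_map((48, 64), heads)
total = D.sum()                                  # equals the 4 annotated heads
corner = count_in_region(D, 0, 0, 20, 20)        # about 3 heads lie in the top-left corner
```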
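The clustering approach of [18] groups KLT feature tracks and counts the groups. The sketch below keeps the grouping idea but swaps Bayesian clustering for a simpler, hypothetical rule: two tracked points are linked if they are close and move with similar velocity, and connected components (found with union-find) are counted as people. KLT tracking itself is not shown; positions and velocities are assumed to be given, and the thresholds are illustrative.

```python
import numpy as np

def count_by_motion_clustering(points, velocities, max_dist=5.0, max_dvel=1.0):
    """Group tracked feature points that are close together and move alike.

    Points i and j are linked when their positions are within max_dist and their
    velocities differ by at most max_dvel; connected components (found with a
    union-find structure) are treated as individual people.
    """
    P = np.asarray(points, dtype=float)
    V = np.asarray(velocities, dtype=float)
    parent = list(range(len(P)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(len(P)):
        for j in range(i + 1, len(P)):
            close = np.linalg.norm(P[i] - P[j]) <= max_dist
            coherent = np.linalg.norm(V[i] - V[j]) <= max_dvel
            if close and coherent:
                parent[find(i)] = find(j)
    return len({find(i) for i in range(len(P))})

# Hypothetical KLT output: positions (x, y) and per-frame displacements (vx, vy).
pts = [(0, 0), (1, 1), (2, 0), (3, 0), (4, 1), (50, 50), (51, 50)]
vel = [(1, 0), (1, 0.1), (1, 0), (-1, 0), (-1, 0), (0, 1), (0, 1)]
people = count_by_motion_clustering(pts, vel)  # 3 groups
```

The same rule illustrates the weakness noted in the text: two stationary people standing 4 pixels apart have identical (zero) velocities and collapse into one cluster, so `count_by_motion_clustering([(0, 0), (4, 0)], [(0, 0), (0, 0)])` returns 1.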

7. Results and Discussion

7.1. Quantification of Tasks

- Counting: We denote the estimated count for crowded image $i$ by $\hat{C}_i$. This single metric provides no information about the distribution or location of people in a video or image, but it is still useful for various applications, such as predicting the size of a crowd spanning many kilometres. A method proposed in [110] divides the whole area into smaller sections, finds the average number of people in each section, and computes the mean density of the whole region. Obtaining counts for many images at several locations is extremely difficult, but when such counts are available they permit a more precise integration of density over the area covered. Moreover, counting from aerial images requires cartographic tools that map the crowd images onto the earth to compute ground areas. Because of this complexity, mean absolute error (MAE) and mean squared error (MSE) are used to evaluate counting on crowded scenes. The two metrics are defined as

$$\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N}\left|C_i - \hat{C}_i\right| \tag{1}$$

$$\mathrm{MSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(C_i - \hat{C}_i\right)^2} \tag{2}$$

In Equations (1) and (2), $N$ is the number of test samples, $C_i$ the ground truth count, and $\hat{C}_i$ the estimated count for the $i$th sample. Following common practice in crowd counting, the MSE in Equation (2) is the square root of the mean squared error.

- Localization: Many applications require the precise location of people, for example initializing a tracking method in a high density crowded scene. To calculate the localization error, predicted locations are associated with ground truth locations by 1-1 matching. This is performed with greedy association, followed by computation of Precision, Recall, and F-measure. The overall performance can also be summarized by the area under the Precision-Recall curve, known as L-AUC. We argue that precise crowd localization is a comparatively less explored area, and evaluation metrics for it are not firmly established. The only work proposing 1-1 matching is reported in [43]. However, we observe that the metric defined in [43] can be overly optimistic in some cases because over-detection is not penalized: if a true head is matched by multiple predicted heads, only the nearest is kept and the remaining predictions are ignored without any penalty. We believe this is why the metric has not been widely accepted for fair comparison. The three evaluation metrics are defined as

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}, \qquad F = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

where $TP$, $FP$, and $FN$ denote true positives, false positives, and false negatives, respectively. For crowd localization, box-level Precision, Recall, and F-measure are normally used.

- Density estimation: Density estimation refers to computing the per-pixel density at each location in an image. It differs from counting because an image may have a total count within safe limits while containing some regions of comparatively high density. This can happen when a scene captured by aerial cameras contains empty regions such as sky, walls, and roads. The metrics used for counting are also used for density estimation, but MAE and MSE are measured on a per-pixel basis.
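A minimal sketch of the two counting metrics, MAE and MSE, computed over N test images is given below. Following the usual crowd-counting convention (an assumption about how the original equations are meant), the quantity reported as MSE is the root of the mean squared count error; the counts in the example are made up.

```python
import numpy as np

def counting_errors(gt_counts, est_counts):
    """Return (MAE, MSE) over N test samples.

    As is conventional in crowd counting, the reported "MSE" is the square root
    of the mean squared count error.
    """
    diff = np.asarray(gt_counts, dtype=float) - np.asarray(est_counts, dtype=float)
    mae = float(np.abs(diff).mean())
    mse = float(np.sqrt((diff ** 2).mean()))
    return mae, mse

mae, mse = counting_errors([10, 20, 30], [12, 18, 33])
# Absolute errors are 2, 2, 3: MAE = 7/3 ≈ 2.333, MSE = sqrt(17/3) ≈ 2.380.
```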
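The sketch below illustrates 1-1 greedy association followed by Precision, Recall, and F-measure. It assumes point annotations (head centres) matched within a hypothetical distance threshold `max_dist`, rather than the box-level matching mentioned in the localization item; pairs are matched greedily in order of increasing distance (Hungarian matching would be an alternative). Unlike the metric criticized in that item, every unmatched prediction, including a second detection of an already matched head, counts as a false positive, so over-detection is penalized.

```python
import numpy as np

def match_and_score(gt, pred, max_dist):
    """Greedy 1-1 matching of predicted to ground-truth points, then Precision, Recall, F."""
    gt = np.asarray(gt, dtype=float).reshape(-1, 2)
    pred = np.asarray(pred, dtype=float).reshape(-1, 2)
    # Candidate pairs within the distance threshold, closest first.
    pairs = sorted(
        (float(np.linalg.norm(p - g)), i, j)
        for i, p in enumerate(pred)
        for j, g in enumerate(gt)
        if np.linalg.norm(p - g) <= max_dist
    )
    used_pred, used_gt = set(), set()
    for _, i, j in pairs:
        if i not in used_pred and j not in used_gt:
            used_pred.add(i)
            used_gt.add(j)
    tp = len(used_pred)
    fp = len(pred) - tp  # unmatched predictions, including duplicate detections of one head
    fn = len(gt) - tp    # ground-truth heads that were never matched
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f

# One head found, one duplicate detection of it, one spurious detection, one head missed.
precision, recall, f_measure = match_and_score(
    [(0, 0), (10, 10)], [(1, 0), (0, 1), (50, 50)], max_dist=3.0
)
# precision = 1/3, recall = 1/2, f_measure = 0.4
```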
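To see why density estimation is evaluated per pixel, the toy example below compares two density maps with the same total count but with people in different places. It assumes the per-pixel MAE and (rooted) MSE are averaged over all pixels of one image; the 4 × 4 maps are hypothetical.

```python
import numpy as np

def pixel_errors(gt_density, est_density):
    """Per-pixel MAE and rooted MSE between two density maps of the same shape."""
    diff = np.asarray(gt_density, dtype=float) - np.asarray(est_density, dtype=float)
    return float(np.abs(diff).mean()), float(np.sqrt((diff ** 2).mean()))

gt = np.zeros((4, 4))
gt[0, 0] = 2.0                               # two people in the top-left corner
est = np.zeros((4, 4))
est[3, 3] = 2.0                              # same total count, wrong location
count_error = abs(gt.sum() - est.sum())      # 0.0: counting metrics see no error
pix_mae, pix_mse = pixel_errors(gt, est)     # 0.25 and sqrt(0.5): per-pixel metrics do
```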

7.2. Data Annotation

7.3. Comparative Analysis

- In the last few years, significant research has been reported in crowd analysis, as can be seen from Table 2, Table 3 and Table 4, and many datasets have been introduced. However, most of these datasets address the counting problem, and less focus has been given to localization and behaviour analysis. The only datasets with sufficient information for localization and behaviour analysis are UCF-QNRF and NWPU-crowd. There is therefore still considerable room for new publicly available datasets in crowd analysis.

- Most of the labelling for ground truth data was performed manually, using commercial image editing software and no automatic tools. Such labelling depends entirely on the subjective perception of the single participant performing it, so errors are possible, and in some cases certain regions were difficult to differentiate.

- Compared to counting and behaviour analysis, localization is a less explored area. Some authors report 1-1 matching [43]. However, we believe the metric defined in [43] can be overly optimistic, because it defines no penalty when multiple detections occur for the same head. Hence, a proper performance metric for localization has still not been defined.

- Crowd analysis is an active area of research in CV. Table 4 summarizes research on crowd analysis conducted between 2010 and 2020, and Table 2 and Table 3 present more detailed results. The MAE, MSE, Precision, Recall, and F-1 measure values are taken from the original papers. As Table 2 and Table 3 show, all metric values have improved on the standard databases, particularly with recently introduced deep learning methods.

- Some papers report that, on closer inspection of crowd counting, localization, and behaviour analysis, traditional machine learning methods perform better than newly introduced deep learning based methods in some cases. With this comparison we do not claim that hand-crafted features outperform deep learning; rather, we believe that a better understanding of deep learning architectures for crowd analysis is still needed. For example, most cases of poor deep learning performance occurred in limited-data scenarios, a major drawback of deep learning based methods.

- Deep learning based algorithms have shown comparatively better performance in various visual recognition applications. These methods have particularly shown improvement in more complex scenarios in image processing and CV. Most of the limitations of the traditional machine learning methods are mitigated with these methods. Just like other applications, crowd monitoring and management has also shown significant improvements in the last 10 years.

- The performance of conventional machine learning methods was acceptable on data collected in simple, controlled environments, but it dropped significantly in complex scenarios. Unlike these traditional methods, deep learning based methods learn comparatively higher levels of abstraction from data and, as a result, outperform previous methods by a large margin while significantly reducing the need for feature engineering. However, deep learning also raises serious concerns in the research community. For example, it is a complicated procedure requiring many choices and inputs from the practitioner, and researchers mostly rely on trial and error, so these models take more time to build than conventional machine learning models. In short, deep learning is the definitive choice for properly addressing crowd management and monitoring, but to date its use in this area is still sporadic. Training a deep learning based model for crowd monitoring with multiple hidden layers and flexible filters is a much better way to learn high level features; however, if training data are insufficient, the model may underperform.

- We notice that DCNN models with relatively complex structures still do not handle the multi-scale problem well, and improvement is needed. Moreover, existing methods focus on count accuracy while ignoring the correctness of the density distribution. From the results, we notice that reported count accuracies appear close to optimal partly because the numbers of false negatives and false positives are nearly the same, so that they cancel out in the total count.

- We observe that most existing methods for crowd monitoring and management are based on CNNs. However, these methods employ pooling layers, which reduce resolution and cause feature loss. Deeper layers extract high level information, whereas shallower layers extract low level features, including spatial information. We argue that combining information from shallow and deep layers is the better option, as it can reduce the count error and produce more reasonable density maps.

- Traditional machine learning methods perform acceptably in controlled laboratory conditions, but their performance drops significantly when they are applied to datasets captured in unconstrained, uncontrolled conditions. Deep learning based methods, by contrast, perform much better in the wild.

- Crowd analysis is an active area of research in CV, and tremendous progress has been made in the last 10 years. From the results reported to date, it is clear that all the metrics (MAE, MSE, F-1 measure) have improved. Table 2 and Table 3 summarize the published papers. However, given how rapidly CV is moving towards deep learning, progress in crowd analysis is not yet satisfactory. Since training deep learning based methods is difficult, particularly for crowd analysis, knowledge transfer [111,112] is an option to be explored in future; this strategy benefits from models that have already been trained. A less investigated direction in knowledge transfer is the adoption of heterogeneous strategies for deep learning based crowd analysis, involving temporal pooling, 3D convolution, LSTMs, and optical flow frames. Better engineering techniques are also needed to improve SOA results; for instance, data augmentation is another option to be explored.
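The observation in this section that false negatives and false positives are nearly equal can be made precise. For one image, write the ground-truth count as $C = TP + FN$ and the predicted count as $\hat{C} = TP + FP$, where $TP$, $FP$, and $FN$ come from localization matching (this decomposition assumes every prediction is either matched to a head or counted as a false positive):

$$\hat{C} - C = (TP + FP) - (TP + FN) = FP - FN$$

The count error therefore vanishes whenever $FP = FN$, however many heads are missed. For instance, with 100 true heads, 40 missed heads, and 40 spurious detections, the count is exact while Precision and Recall are both $60/100 = 0.6$.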
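The combination of shallow and deep layers argued for in this section can be sketched at the tensor level. This minimal NumPy illustration assumes channel-first feature maps, a deep map whose spatial size divides the shallow one exactly, and nearest-neighbour upsampling followed by channel concatenation; the shapes are hypothetical, and in an actual network a learned layer (for example a 1 × 1 convolution) would typically follow the concatenation.

```python
import numpy as np

def fuse_shallow_deep(shallow, deep):
    """Concatenate high-resolution shallow features with upsampled deep features.

    shallow: (C1, H, W) low-level, spatially detailed features
    deep:    (C2, H // s, W // s) high-level features after downsampling by factor s
    Returns an array of shape (C1 + C2, H, W).
    """
    _, h, w = shallow.shape
    _, hd, wd = deep.shape
    if h % hd or w % wd:
        raise ValueError("shallow size must be an integer multiple of deep size")
    # Nearest-neighbour upsampling: repeat each deep cell along both spatial axes.
    deep_up = np.repeat(np.repeat(deep, h // hd, axis=1), w // wd, axis=2)
    return np.concatenate([shallow, deep_up], axis=0)

rng = np.random.default_rng(0)
shallow = rng.random((16, 64, 64))    # hypothetical early-layer output
deep = rng.random((128, 16, 16))      # hypothetical output after 4x downsampling
fused = fuse_shallow_deep(shallow, deep)  # shape (144, 64, 64)
```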

8. Summary and Concluding Remarks

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| CV | Computer vision |
| CMS | Crowd monitoring system |
| CCTV | Closed-circuit television |
| CNNs | Convolutional neural networks |
| DCNNs | Deep convolutional neural networks |
| GLCM | Gray level co-occurrence matrices |
| HoG | Histogram of oriented gradients |
| IoT | Internet of Things |
| KLT | Kanade–Lucas–Tomasi |
| LBP | Local binary pattern |
| SVM | Support vector machine |
| MAE | Mean absolute error |
| MSE | Mean square error |
| RF | Random forest |
| SIFT | Scale-invariant feature transform |
| SOA | State of the art |
| TMLMs | Traditional machine learning methods |
| UAVs | Unmanned aerial vehicles |

References

- Khan, A.; Shah, J.; Kadir, K.; Albattah, W.; Khan, F. Crowd Monitoring and Localization Using Deep Convolutional Neural Network: A Review. Appl. Sci. 2020, 10, 4781.

- Al-Salhie, L.; Al-Zuhair, M.; Al-Wabil, A. Multimedia Surveillance in Event Detection: Crowd Analytics in Hajj. In Proceedings of the Design, User Experience, and Usability, Crete, Greece, 22–27 June 2014; pp. 383–392.

- Alabdulkarim, L.; Alrajhi, W.; Aloboud, E. Urban Analytics in Crowd Management in the Context of Hajj. In Social Computing and Social Media. SCSM 2016. Lecture Notes in Computer Science; Meiselwitz, G., Ed.; Springer: Cham, Switzerland, 2016.

- Mohamed, S.A.E.; Parvez, M.T. Crowd Modeling Based Auto Activated Barriers for Management of Pilgrims in Mataf. In Proceedings of the 2019 IEEE International Conference on Innovative Trends in Computer Engineering (ITCE), Aswan, Egypt, 2–4 February 2019; pp. 260–265.

- Rahim, M.S.M.; Fata, A.Z.A.; Basori, A.H.; Rosman, A.S.; Nizar, T.J.; Yusof, F.W.M. Development of 3D Tawaf Simulation for Hajj Training Application Using Virtual Environment. In Proceedings of the Visual Informatics: Sustaining Research and Innovations, International Visual Informatics Conference, Selangor, Malaysia, 9–11 November 2011; pp. 67–76.

- Othman, N.Z.S.; Rahim, M.S.M.; Ghazali, M. Integrating Perception into V Hajj: 3D Tawaf Training Simulation Application. Inform. Eng. Inf. Sci. 2011, 251, 79–92.

- Sarmady, S.; Haron, F.; Talib, A.Z.H. Agent-Based Simulation Of Crowd At The Tawaf Area. In 1st National Seminar on Hajj Best Practices Through Advances in Science and Technology; Science and Engineering Research Support Society: Sandy Bay, Australia, 2007; pp. 129–136.

- Majid, A.R.M.A.; Hamid, N.A.W.A.; Rahiman, A.R.; Zafar, B. GPU-based Optimization of Pilgrim Simulation for Hajj and Umrah Rituals. Pertan. J. Sci. Technol. 2018, 26, 1019–1038.

- Sjarif, N.N.A.; Shamsuddin, S.M.; Hashim, S.Z. Detection of abnormal behaviors in crowd scene: A Review. Int. J. Adv. Soft Comput. Appl. 2012, 4, 1–33.

- Rohit, K.; Mistree, K.; Lavji, J. A review on abnormal crowd behavior detection. In Proceedings of the International Conference on Innovations in Information, Embedded and Communication Systems (ICIIECS), Coimbatore, India, 17–18 March 2017; pp. 1–3.

- Motlagh, N.H.; Bagaa, M.; Taleb, T. UAV-Based IoT Platform: A Crowd Surveillance Use Case. IEEE Commun. Mag. 2017, 55, 128–134.

- Al-Sheary, A.; Almagbile, A. Crowd Monitoring System Using Unmanned Aerial Vehicle (UAV). J. Civ. Eng. Archit. 2017, 11, 1014–1024.

- Alotibi, M.H.; Jarraya, S.K.; Ali, M.S.; Moria, K. CNN-Based Crowd Counting Through IoT: Application for Saudi Public Places. Procedia Comput. Sci. 2019, 163, 134–144.

- “Hajj Statistics 2019–1440”, General Authority for Statistics, Kingdom of Saudi Arabia. Available online: https://www.stats.gov.sa/sites/default/files/haj_40_en.pdf (accessed on 10 August 2020).

- Fiaschi, L.; Köthe, U.; Nair, R.; Hamprecht, F.A. Learning to count with regression forest and structured labels. In Proceedings of the 2012 21st International Conference on Pattern Recognition (ICPR), Tsukuba Science City, Japan, 11–15 November 2012; pp. 2685–2688.

- Giuffrida, M.V.; Minervini, M.; Tsaftaris, S.A. Learning to count leaves in rosette plants. In Proceedings of the Computer Vision Problems in Plant Phenotyping (CVPPP), Swansea, UK, 7–10 September 2015; pp. 1.1–1.13.

- Chan, A.B.; Vasconcelos, N. Bayesian Poisson regression for crowd counting. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 545–551.

- Rabaud, V.; Belongie, S. Counting crowded moving objects. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006; Volume 1, pp. 705–711.

- Bharti, Y.; Saharan, R.; Saxena, A. Counting the Number of People in Crowd as a Part of Automatic Crowd Monitoring: A Combined Approach. In Information and Communication Technology for Intelligent Systems; Springer: Singapore, 2019; pp. 545–552.

- Boulos, M.N.K.; Resch, B.; Crowley, D.N.; Breslin, J.G.; Sohn, G.; Burtner, R.; Pike, W.A.; Jezierski, E.; Chuang, K.-Y.S. Crowdsourcing, citizen sensing and sensor web technologies for public and environmental health surveillance and crisis management: Trends, OGC standards and application examples. Int. J. Health Geogr. 2011, 10, 1–29.

- Lv, Y.; Duan, Y.; Kang, W.; Li, Z.; Wang, F.-Y. Traffic flow prediction with big data: A deep learning approach. IEEE Trans. Intell. Transp. Syst. 2014, 16, 865–873.

- Sadeghian, A.; Alahi, A.; Savarese, S. Tracking the untrackable: Learning to track multiple cues with long-term dependencies. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 300–311.

- Zainuddin, Z.; Thinakaran, K.; Shuaib, M. Simulation of the Pedestrian Flow in the Tawaf Area Using the Social Force Model. World Acad. Sci. Eng. Technol. Int. J. Math. Comput. Sci. 2010, 4, 789–794.

- Zainuddin, Z.; Thinakaran, K.; Abu-Sulyman, I.M. Simulating the Circumbulation of the Ka’aba using SimWalk. Eur. J. Sci. Res. 2009, 38, 454–464.

- Al-Ahmadi, H.M.; Alhalabi, W.S.; Malkawi, R.H.; Reza, I. Statistical analysis of the crowd dynamics in Al-Masjid Al-Nabawi in the city of Medina, Saudi Arabia. Int. J. Crowd Sci. 2018, 2, 64–73.

- Li, W.; Fu, H.; Yu, L.; Cracknell, A. Deep learning based oil palm tree detection and counting for high-resolution remote sensing images. Remote Sens. 2017, 9, 22.

- Albert, A.; Kaur, J.; Gonzalez, M.C. Using convolutional networks and satellite imagery to identify patterns in urban environments at a large scale. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; pp. 1357–1366.

- Kellenberger, B.; Marcos, D.; Tuia, D. Detecting mammals in UAV images: Best practices to address a substantially imbalanced dataset with deep learning. Remote Sens. Environ. 2018, 216, 139–153.

- Perez, H.; Hernandez, B.; Rudomin, I.; Ayguade, E. Task-based crowd simulation for heterogeneous architectures. In Innovative Research and Applications in Next-Generation High Performance Computing; IGI Global: Harrisburg, PA, USA, 2016; pp. 194–219.

- Martani, C.; Stent, S.; Acikgoz, S.; Soga, K.; Bain, D.; Jin, Y. Pedestrian monitoring techniques for crowd-flow prediction. P. I. Civil Eng-Eng. Su 2017, 2, 17–27.

- Khouj, M.; López, C.; Sarkaria, S.; Marti, J. Disaster management in real time simulation using machine learning. In Proceedings of the 24th Canadian Conference on Electrical and Computer Engineering (CCECE), Niagara Falls, ON, Canada, 8–11 May 2011; pp. 1507–1510.

- Barr, J.R.; Bowyer, K.W.; Flynn, P.J. The effectiveness of face detection algorithms in unconstrained crowd scenes. In Proceedings of the 2014 IEEE Winter Conference on Applications of Computer Vision (WACV), Steamboat Springs, CO, USA, 24–26 March 2014; pp. 1020–1027.

- Ng, H.W.; Nguyen, V.D.; Vonikakis, V.; Winkler, S. Deep learning for emotion recognition on small datasets using transfer learning. In Proceedings of the 2015 ACM on International Conference on Multimodal Interaction, Seattle, WA, USA, 9–13 November 2015; pp. 443–449.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami Beach, FL, USA, 20–25 June 2009; pp. 248–255.

- Chackravarthy, S.; Schmitt, S.; Yang, L. Intelligent Crime Anomaly Detection in Smart Cities Using Deep Learning. In Proceedings of the 2018 IEEE 4th International Conference on Collaboration and Internet Computing (CIC), Philadelphia, PA, USA, 18–20 October 2018; pp. 399–404.

- Zhou, B.; Tang, X.; Wang, X. Learning collective crowd behaviors with dynamic pedestrian-agents. Int. J. Comput. Vis. 2015, 111, 50–68.

- Danilkina, A.; Allard, G.; Baccelli, E.; Bartl, G.; Gendry, F.; Hahm, O.; Schmidt, T. Multi-Camera Crowd Monitoring: The SAFEST Approach. In Proceedings of the Workshop Interdisciplinaire sur la Sécurité Globale, Troyes, France, 4–6 February 2015.

- Wang, Y.; Zou, Y. Fast visual object counting via example-based density estimation. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3653–3657.

- Chen, K.; Loy, C.C.; Gong, S.; Xiang, T. Feature mining for localised crowd counting. In Proceedings of the British Machine Vision Conference, Surrey, UK, 3–7 September 2012.

- Pham, V.-Q.; Kozakaya, T.; Yamaguchi, O.; Okada, R. COUNT Forest: CO-voting uncertain number of targets using random forest for crowd density estimation. In Proceedings of the IEEE International Conference on Computer Vision, Araucano Park, Las Condes, Chile, 7–13 December 2015; pp. 3253–3261.

- Zhu, M.; Xuqing, W.; Tang, J.; Wang, N.; Qu, L. Attentive Multi-stage Convolutional Neural Network for Crowd Counting. Pattern Recognit. Lett. 2020, 135, 279–285.

- Shao, J.; Loy, C.C.; Wang, X. Scene-independent group profiling in crowd. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2219–2226.

- Idrees, H.; Saleemi, I.; Seibert, C.; Shah, M. Multi-source Multi-scale counting in extremely dense crowd images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2547–2554.

- Zhang, C.; Li, H.; Wang, X.; Yang, X. Cross-scene crowd counting via deep convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

- Li, Y.; Sarvi, M.; Khoshelham, K.; Haghani, M. Multi-view crowd congestion monitoring system based on an ensemble of convolutional neural network classifiers. J. Intell. Transp. Syst. 2020, 1–12.

- Pandey, A.; Pandey, M.; Singh, N.; Trivedi, A. KUMBH MELA: A case study for dense crowd counting and modeling. Multimed. Tools Appl. 2020, 79, 1–22.

- Wang, Q.; Gao, J.; Lin, W.; Li, X. NWPU-crowd: A large-scale benchmark for crowd counting. arXiv 2020, arXiv:2001.03360.

- Ding, X.; Lin, Z.; He, F.; Wang, Y.; Huang, Y. A deeply-recursive convolutional network for crowd counting. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 1942–1946.

- Idrees, H.; Tayyab, M.; Athrey, K.; Zhang, D.; Al-Maadeed, S.; Rajpoot, N. Composition loss for counting, density map estimation and localization in dense crowds. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 532–546.

- Zhang, Y.; Zhou, D.; Chen, S.; Gao, S.; Ma, Y. Single-image crowd counting via multi-column convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Shao, J.; Kang, K.; Loy, C.C.; Wang, X. Deeply learned attributes for crowded scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4657–4666.

- Chen, K.; Gong, S.; Xiang, T.; Loy, C.C. Cumulative attribute space for age and crowd density estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2467–2474.

- Ferryman, J.; Shahrokni, A. PETS2009: Dataset and challenge. In Proceedings of the Twelfth IEEE International Workshop on Performance Evaluation of Tracking and Surveillance, Snowbird, UT, USA, 7–12 December 2009; pp. 1–6.

- Chan, A.B.; Liang, Z.-S.J.; Vasconcelos, N. Privacy preserving crowd monitoring: Counting people without people models or tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, Anchorage, AK, USA, 23–28 June 2008; pp. 1–7.

- Rodriguez, M.; Laptev, I.; Sivic, J.; Audibert, J.-Y. Density-aware person detection and tracking in crowds. In Proceedings of the International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011; pp. 2423–2430.

- Lempitsky, V.; Zisserman, A. Learning to count objects in images. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Vancouver, BC, Canada, 6–11 December 2010; pp. 1324–1332.

- Ma, Z.; Yu, L.; Chan, A.B. Small instance detection by integer programming on object density maps. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

- Sánchez, F.L.; Hupont, I.; Tabik, S.; Herrera, F. Revisiting crowd behaviour analysis through deep learning: Taxonomy, anomaly detection, crowd emotions, datasets, opportunities and prospects. Inf. Fusion 2020, 64, 318–335.

- Zhang, X.; Ma, D.; Yu, H.; Huang, Y.; Howell, P.; Stevens, B. Scene Perception Guided Crowd Anomaly Detection. Neurocomputing. Available online: https://www.sciencedirect.com/science/article/abs/pii/S0925231220311267 (accessed on 30 July 2020).

- Sikdar, A.; Chowdhury, A.S. An Adaptive Training-less Framework for Anomaly Detection in Crowd Scenes. Neurocomputing 2020, 415, 317–331.

- Tripathi, G.; Singh, K.; Vishwakarma, D.K. Convolutional neural networks for crowd behaviour analysis: A survey. Vis. Comput. 2019, 35, 753–776.

- Lahiri, S.; Jyoti, N.; Pyati, S.; Dewan, J. Abnormal Crowd Behavior Detection Using Image Processing. In Proceedings of the Fourth International Conference on Computing Communication Control and Automation (ICCUBEA), Pune, India, 16–18 August 2018; pp. 1–5.

- Yimin, D.; Fudong, C.; Jinping, L.; Wei, C. Abnormal Behavior Detection Based on Optical Flow Trajectory of Human Joint Points. In Proceedings of the Chinese Control And Decision Conference (CCDC), Nanchang, China, 3–5 June 2019; pp. 653–658.

- Wang, T.; Qiao, M.; Zhu, A.; Shan, G.; Snoussi, H. Abnormal event detection via the analysis of multi-frame optical flow information. Front. Comput. Sci. 2020, 14, 304–313.

- Fradi, H.; Luvison, B.; Pham, Q.C. Crowd behavior analysis using local mid-level visual descriptors. IEEE Trans. Circuits Syst. Video Technol. 2016, 27, 589–602.

- Rao, A.S.; Gubbi, J.; Palaniswami, M. Anomalous Crowd Event Analysis Using Isometric Mapping. In Advances in Signal Processing and Intelligent Recognition Systems; Springer: Berlin/Heidelberg, Germany, 2016; Volume 425, pp. 407–418.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), IEEE, San Diego, CA, USA, 20–25 June 2005; pp. 886–893.

- Sabzmeydani, P.; Mori, G. Detecting pedestrians by learning shapelet features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- Viola, P.; Jones, M.J. Robust real-time face detection. Int. J. Comput. Vis. 2004, 57, 137–154.

- Wu, B.; Nevatia, R. Detection of multiple, partially occluded humans in a single image by Bayesian combination of edgelet part detectors. In Proceedings of the Tenth IEEE International Conference on Computer Vision (ICCV’05), Beijing, China, 17–21 October 2005; IEEE: Piscataway, NJ, USA, 2005; pp. 90–97.

- Gall, J.; Yao, A.; Razavi, N.; Gool, L.V.; Lempitsky, V. Hough forests for object detection, tracking, and action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2188–2202.

- Viola, P.; Jones, M.J.; Snow, D. Detecting pedestrians using patterns of motion and appearance. Int. J. Comput. Vis. 2005, 63, 153–161.

- Zhao, T.; Nevatia, R.; Wu, B. Segmentation and tracking of multiple humans in crowded environments. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 1198–1211.

- Ge, W.; Collins, R.T. Marked point processes for crowd counting. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 2913–2920.

- Wang, H.; Cruz-Roa, A.; Basavanhally, A.; Gilmore, H.; Shih, N.; Feldman, M.; Tomaszewski, J.; Gonzalez, F.; Madabhushi, A. Mitosis detection in breast cancer pathology images by combining handcrafted and convolutional neural network features. J. Med. Imaging (Bellingham) 2014, 1, 034003.

- Wang, H.; Cruz-Roa, A.; Basavanhally, A.; Gilmore, H.; Shih, N.; Feldman, M.; Tomaszewski, J.; Gonzalez, F.; Madabhushi, A. Cascaded ensemble of convolutional neural networks and handcrafted features for mitosis detection. In Medical Imaging 2014: Digital Pathology; International Society for Optics and Photonics: San Diego, CA, USA, 2014; Volume 9041, p. 90410B.

- Dollar, P.; Wojek, C.; Schiele, B.; Perona, P. Pedestrian detection: An evaluation of the state of the art. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 743–761.

- Ryan, D.; Denman, S.; Fookes, C.; Sridharan, S. Crowd counting using multiple local features. In Proceedings of the 2009 Digital Image Computing: Techniques and Applications DICTA’09, Melbourne, VIC, Australia, 1–3 December 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 81–88.

- Paragios, N.; Ramesh, V. A MRF-based approach for real-time subway monitoring. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Kauai, HI, USA, 8–14 December 2001.

- Cheng, Z.; Qin, L.; Huang, Q.; Yan, S.; Tian, Q. Recognizing human group action by layered model with multiple cues. Neurocomputing 2014, 136, 124–135.

- Xu, B.; Qiu, G. Crowd density estimation based on rich features and random projection forest. In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–8.

- Wu, X.; Liang, G.; Lee, K.K.; Xu, Y. Crowd density estimation using texture analysis and learning. In Proceedings of the 2006 IEEE International Conference on Robotics and Biomimetics, Kunming, China, 17–20 December 2006; pp. 214–219.

- McIvor, A.M. Background subtraction techniques, image and vision computing. Proc. Image Vis. Comput. 2000, 4, 3099–3104.

- Black, M.J.; Fleet, D.J. Probabilistic detection and tracking of motion boundaries. Int. J. Comput. Vis. 2000, 38, 231–245.

- Stauffer, C.; Grimson, W.E.L. Adaptive background mixture models for real-time tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Fort Collins, CO, USA, 23–25 June 1999.

- Chen, D.-Y.; Huang, P.-C. Visual-based human crowds behavior analysis based on graph modeling and matching. IEEE Sens. J. 2013, 13, 2129–2138.

- Garcia-Bunster, G.; Torres-Torriti, M.; Oberli, C. Crowded pedestrian counting at bus stops from perspective transformations of foreground areas. IET Comput. Vis. 2012, 6, 296–305.

- Chan, A.B.; Vasconcelos, N. Modeling, clustering, and segmenting video with mixtures of dynamic textures. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 909–926.

- Khan, K.; Ahmad, N.; Khan, F.; Syed, I. A framework for head pose estimation and face segmentation through conditional random fields. Signal Image Video Process. 2020, 14, 159–166.

- Khan, K.; Attique, M.; Khan, R.U.; Syed, I.; Chung, T.S. A multi-task framework for facial attributes classification through end-to-end face parsing and deep convolutional neural networks. Sensors 2020, 20, 328.

- Khan, K.; Attique, M.; Syed, I.; Gul, A. Automatic gender classification through face segmentation. Symmetry 2019, 11, 770.

- Ullah, F.; Zhang, B.; Khan, R.U.; Chung, T.S.; Attique, M.; Khan, K.; El Khediri, S.; Jan, S. Deep Edu: A Deep Neural Collaborative Filtering for Educational Services Recommendation. IEEE Access 2020, 8, 110915–110928.

- Ahmad, K.; Khan, K.; Al-Fuqaha, A. Intelligent Fusion of Deep Features for Improved Waste Classification. IEEE Access 2020, 8, 96495–96504.

- Fu, M.; Xu, P.; Li, X.; Liu, Q.; Ye, M.; Zhu, C. Fast crowd density estimation with convolutional neural networks. Eng. Appl. Artif. Intell. 2015, 43, 81–88.

- Walach, E.; Wolf, L. Learning to count with CNN boosting. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 660–676.

- Li, Y.; Zhang, X.; Chen, D. CSRNet: Dilated convolutional neural networks for understanding the highly congested scenes. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1091–1100.

- Miao, Y.; Han, J.; Gao, Y.; Zhang, B. ST-CNN: Spatial-Temporal Convolutional Neural Network for crowd counting in videos. Pattern Recogn. Lett. 2019, 125, 113–118.

- Shang, C.; Ai, H.; Bai, B. End-to-end crowd counting via joint learning local and global count. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1215–1219.

- Brostow, G.J.; Cipolla, R. Unsupervised Bayesian detection of independent motion in crowds. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006; Volume 1, pp. 594–601.

- Duygulu, P.; Barnard, K.; de Freitas, J.F.; Forsyth, D.A. Object recognition as machine translation: Learning a lexicon for a fixed image vocabulary. In Proceedings of the European Conference on Computer Vision (ECCV), Copenhagen, Denmark, 28–31 May 2002; pp. 97–112.

- Moosmann, F.; Triggs, B.; Jurie, F. Fast discriminative visual codebooks using randomized clustering forests. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 3–6 December 2007; pp. 985–992.

- Hao, Y.; Xu, Z.J.; Liu, Y.; Wang, J.; Fan, J.L. Effective Crowd Anomaly Detection Through Spatio-temporal Texture Analysis. Int. J. Autom. Comput. 2019, 16, 27–39.

- Kaltsa, V.; Briassouli, A.; Kompatsiaris, I.; Hadjileontiadis, L.J.; Strintzis, M.G. Swarm Intelligence for Detecting Interesting Events in Crowded Environments. IEEE Trans. Image Process. 2015, 24, 2153–2166.

- Anomaly Detection and Localization: A Novel Two-Phase Framework Based on Trajectory-Level Characteristics. In Proceedings of the IEEE International Conference on Multimedia & Expo Workshops, San Diego, CA, USA, 23–27 July 2018.

- Nguyen, M.-T.; Siritanawan, P.; Kotani, K. Saliency detection in human crowd images of different density levels using attention mechanism. Signal Process. Image Commun. 2020, in press.

- Zhang, X.; Lin, D.; Zheng, J.; Tang, X.; Fang, Y.; Yu, H. Detection of Salient Crowd Motion Based on Repulsive Force Network and Direction Entropy. Entropy 2019, 21, 608.

- Lim, M.K.; Chan, C.S.; Monekosso, D.; Remagnino, P. Detection of salient regions in crowded scenes. Electron. Lett. 2014, 50, 363–365.

- Lim, M.K.; Kok, V.J.; Loy, C.C.; Chan, C.S. Crowd Saliency Detection via Global Similarity Structure. In Proceedings of the 22nd International Conference on Pattern Recognition (ICPR), Stockholm, Sweden, 24–28 August 2014.

- Khan, S.D. Congestion detection in pedestrian crowds using oscillation in motion trajectories. Eng. Appl. Artif. Intell. 2019, 85, 429–443.

- Jacobs, H. To count a crowd. Columbia J. Rev. 1967, 6, 36–40.

- Tsai, Y.-H.H.; Yeh, Y.-R.; Wang, Y.-C.F. Learning Cross-Domain Landmarks for Heterogeneous Domain Adaptation. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 5081–5090.

- Hoffman, J.; Rodner, E.; Donahue, J.; Kulis, B.; Saenko, K. Asymmetric and Category Invariant Feature Transformations for Domain Adaptation. Int. J. Comput. Vis. 2014, 109, 28–41.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Hu, Y.; Chang, H.; Nian, F.; Wang, Y.; Li, T. Dense crowd counting from still images with convolutional neural networks. J. Vis. Commun. Image Represent. 2016, 38, 530–539.

- Gao, J.; Han, T.; Wang, Q.; Yuan, Y. Domain-adaptive crowd counting via inter-domain features segregation and gaussian-prior reconstruction. arXiv 2019, arXiv:1912.03677.

- Liu, C.; Weng, X.; Mu, Y. Recurrent attentive zooming for joint crowd counting and precise localization. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1217–1226.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Huang, G.; Liu, Z.; Maaten, L.V.D.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Liu, L.; Amirgholipour, S.; Jiang, J.; Jia, W.; Zeibots, M.; He, X. Performance-enhancing network pruning for crowd counting. Neurocomputing 2019, 360, 246–253.

- Xue, Y.; Liu, S.; Li, Y.; Qian, X. Crowd Scene Analysis by Output Encoding. arXiv 2020, arXiv:2001.09556.

- Kumagai, S.; Hotta, K.; Kurita, T. Mixture of counting CNNs: Adaptive integration of CNNs specialized to specific appearance for crowd counting. arXiv 2017, arXiv:1703.09393.

- Sindagi, V.A.; Patel, V.M. CNN-Based cascaded multi-task learning of high-level prior and density estimation for crowd counting. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017.

- Sam, D.B.; Surya, S.; Babu, R.V. Switching convolutional neural network for crowd counting. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Han, K.; Wan, W.; Yao, H.; Hou, L. Image crowd counting using convolutional neural network and Markov random field. J. Adv. Comput. Intell. Intell. Inform. 2017, 21, 632–638.

- Marsden, M.; McGuinness, K.; Little, S.; O’Connor, N.E. Fully convolutional crowd counting on highly congested scenes. In Proceedings of the 12th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Volume 5: VISAPP, Porto, Portugal, 27 February–1 March 2017; pp. 27–33.

- Oñoro-Rubio, D.; López-Sastre, R.J. Towards perspective-free object counting with deep learning. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 615–629.

- Ding, X.; He, F.; Lin, Z.; Wang, Y.; Guo, H.; Huang, Y. Crowd Density Estimation Using Fusion of Multi-Layer Features. IEEE Trans. Intell. Transp. Syst. 2020, 1–12.

- Liu, Y.; Shi, M.; Zhao, Q.; Wang, X. Point in, box out: Beyond counting persons in crowds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 6469–6478.

- Sheng, B.; Shen, C.; Lin, G.; Li, J.; Yang, W.; Sun, C. Crowd counting via weighted VLAD on a dense attribute feature map. IEEE Trans. Circ. Syst. Video Technol. 2018, 28, 1788–1797.

- Song, H.; Liu, X.; Zhang, X.; Hu, J. Real-time monitoring for crowd counting using video surveillance and GIS. In Proceedings of the 2012 2nd International Conference on Remote Sensing, Environment and Transportation Engineering (RSETE), Nanjing, China, 1–3 June 2012; pp. 1–4.

- Rodriguez, M.; Sivic, J.; Laptev, I.; Audibert, J.-Y. Data-driven crowd analysis in videos. In Proceedings of the International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 1235–1242.

| Database | Year | Task | # of Images | Head Count | Source |
|---|---|---|---|---|---|
| Mecca [45] | 2020 | crowd monitoring | – | – | surveillance |
| Kumbh Mela [46] | 2020 | crowd monitoring | 6k | – | surveillance |
| NWPU-Crowd [47] | 2020 | crowd counting and localization | 5,109 | 2,133,375 | surveillance and Internet |
| BRT [48] | 2018 | crowd monitoring | 1,280 | 16,795 | surveillance |
| UCF-QNRF [49] | 2018 | crowd counting and localization | 1,525 | 1,251,642 | surveillance |
| Shanghai Tech [50] | 2016 | cross-scene crowd counting | 482 | 241,677 | surveillance and Internet |
| WorldExpo’10 [44] | 2015 | crowd counting | 3,980 | 199,923 | surveillance |
| WWW [51] | 2015 | crowd management | 10,000 | 8 million | Internet |
| UCF_CC_50 [43] | 2013 | density estimation | 50 | 63,974 | surveillance |
| The Mall [52] | 2012 | crowd counting | 2,000 | 62,325 | surveillance |
| PETS [53] | 2009 | crowd counting | 8 | 4,000 | surveillance |
| UCSD [54] | 2008 | crowd counting | 2,000 | 49,885 | Internet |

| Database | Year | Method | Precision (%) | Recall (%) | L-AUC | F1-Measure (%) |
|---|---|---|---|---|---|---|
| NWPU-crowd | 2015 | Ren et al. [113] | 95.8 | 3.5 | – | 6.7 |
| NWPU-crowd | 2017 | Hu et al. [114] | 52.9 | 61.1 | – | 56.7 |
| NWPU-crowd | 2019 | Gao et al. [115] | 55.8 | 49.6 | – | 52.5 |
| NWPU-crowd | 2019 | Liu et al. [116] | 66.6 | 54.3 | – | 59.8 |
| UCF_QNRF | 2018 | Idrees et al. [43] | 75.8 | 63.5 | 0.591 | – |
| UCF_QNRF | 2015 | Badrinarayanan et al. [117] | 71.8 | 62.9 | 0.67 | – |
| UCF_QNRF | 2016 | Huang et al. [118] | 70.1 | 58.1 | 0.637 | – |
| UCF_QNRF | 2016 | He et al. [119] | 61.6 | 66.9 | 0.612 | – |
| UCF_QNRF | 2018 | Shen et al. [120] | 75.6 | 59.7 | – | – |
| UCF_QNRF | 2018 | Liu et al. [116] | 59.3 | 63.0 | – | – |
| UCF_QNRF | 2015 | Zhang et al. [44] | 78.1 | 65.1 | – | – |
| UCF_QNRF | 2019 | Liu et al. [116] | 81.5 | 71.1 | – | – |
| UCF_QNRF | 2016 | Zhang et al. [50] | 71.0 | 72.4 | – | – |
| UCF_QNRF | 2020 | Xue et al. [121] | 82.4 | 78.3 | – | – |
| Shanghai Tech (Part A) | 2019 | Liu et al. [116] | 12.0 | 32.6 | – | – |
| Shanghai Tech (Part A) | 2018 | Shen et al. [120] | 79.2 | 82.2 | – | – |
| Shanghai Tech (Part A) | 2018 | Liu et al. [116] | 82.2 | 73.3 | – | – |
| Shanghai Tech (Part A) | 2015 | Zhang et al. [44] | 81.9 | 77.9 | – | – |
| Shanghai Tech (Part A) | 2019 | Liu et al. [116] | 86.5 | 69.7 | – | – |
| Shanghai Tech (Part A) | 2018 | Idrees et al. [43] | 79.0 | 72.3 | – | – |
| Shanghai Tech (Part A) | 2016 | Zhang et al. [50] | 76.5 | 81.7 | – | – |
| Shanghai Tech (Part A) | 2020 | Xue et al. [121] | 87.3 | 79.2 | – | – |
| Shanghai Tech (Part B) | 2019 | Liu et al. [116] | 15.6 | 37.5 | – | – |
| Shanghai Tech (Part B) | 2019 | Liu et al. [116] | 79.1 | 60.1 | – | – |
| Shanghai Tech (Part B) | 2018 | Shen et al. [120] | 80.2 | 78.8 | – | – |
| Shanghai Tech (Part B) | 2018 | Liu et al. [116] | 75.4 | 79.3 | – | – |
| Shanghai Tech (Part B) | 2015 | Zhang et al. [44] | 84.1 | 75.8 | – | – |
| Shanghai Tech (Part B) | 2019 | Liu et al. [116] | 78.1 | 73.9 | – | – |
| Shanghai Tech (Part B) | 2019 | Idrees et al. [43] | 76.8 | 78.0 | – | – |
| Shanghai Tech (Part B) | 2019 | Zhang et al. [50] | 82.4 | 76.0 | – | – |
| Shanghai Tech (Part B) | 2020 | Xue et al. [121] | 86.7 | 80.5 | – | – |
| World Expo | 2019 | Liu et al. [116] | 60.0 | 23.0 | – | – |
| World Expo | 2019 | Liu et al. [116] | 73.7 | 79.6 | – | – |
| World Expo | 2018 | Shen et al. [120] | 68.5 | 81.2 | – | – |
| World Expo | 2018 | Liu et al. [116] | 73.8 | 78.2 | – | – |
| World Expo | 2015 | Zhang et al. [44] | 79.5 | 73.1 | – | – |
| World Expo | 2019 | Liu et al. [116] | 71.6 | 75.4 | – | – |
| World Expo | 2019 | Idrees et al. [43] | 72.4 | 78.3 | – | – |
| World Expo | 2019 | Zhang et al. [50] | 80.9 | 77.5 | – | – |
| World Expo | 2020 | Xue et al. [121] | 82.0 | 81.5 | – | – |
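The F1-measure in the localization results is the harmonic mean of precision and recall, F1 = 2PR / (P + R). As a quick consistency check, the minimal Python sketch below recomputes it for the NWPU-crowd rows; the function name `f1_measure` and the `NWPU_ROWS` list are illustrative choices, and the numbers are copied from the table rather than taken from the original papers.

```python
# Minimal sketch: the F1-measure is the harmonic mean of precision and recall.
# The triples below are copied from the NWPU-crowd rows of the table;
# values are percentages, so the F1 result is a percentage as well.

def f1_measure(precision: float, recall: float) -> float:
    """Return 2PR / (P + R), or 0.0 when both inputs are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (method, precision %, recall %, reported F1 %)
NWPU_ROWS = [
    ("Ren et al. [113]", 95.8, 3.5, 6.7),
    ("Hu et al. [114]", 52.9, 61.1, 56.7),
    ("Gao et al. [115]", 55.8, 49.6, 52.5),
    ("Liu et al. [116]", 66.6, 54.3, 59.8),
]

if __name__ == "__main__":
    for method, p, r, reported in NWPU_ROWS:
        recomputed = f1_measure(p, r)
        print(f"{method:<18} recomputed={recomputed:6.2f} reported={reported:5.1f}")
```

The recomputed values agree with the reported column to within 0.1 percentage points; the largest gap, for Ren et al. (about 6.75 vs. 6.7), presumably comes from rounding of the published precision and recall. The same row shows why the harmonic mean is used: a detector with 95.8% precision but only 3.5% recall still receives a very low F1.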

| Database | Method | Year | MAE | MSE |
|---|---|---|---|---|
| Kumbh Mela | Pandey et al. [46] | 2020 | 94 | 104 |
| Kumbh Mela | Kumagai et al. [122] | 2017 | 361 | 493 |
| Kumbh Mela | Sindagi et al. [123] | 2017 | 322 | 341 |
| Kumbh Mela | Li et al. [96] | 2017 | 266 | 397 |
| Kumbh Mela | Sam et al. [124] | 2017 | 318 | 439 |
| Kumbh Mela | Han et al. [125] | 2017 | 196 | 156 |
| Kumbh Mela | Yao et al. [123] | 2017 | 322 | 341 |
| Kumbh Mela | Zhang et al. [50] | 2016 | 377 | 509 |
| Kumbh Mela | Walach et al. [95] | 2016 | 364 | 341 |
| Kumbh Mela | Marsden et al. [126] | 2016 | 126 | 173 |
| Kumbh Mela | Hu et al. [114] | 2016 | 137 | 152 |
| Kumbh Mela | Rodriguez et al. [55] | 2015 | 655 | 697 |
| Kumbh Mela | Onoro et al. [127] | 2015 | 333 | 425 |
| Kumbh Mela | Zhang et al. [44] | 2015 | 467 | 498 |
| Kumbh Mela | Liu et al. [120] | 2014 | 197 | 273 |
| Kumbh Mela | Idrees et al. [43] | 2013 | 419 | 541 |
| Kumbh Mela | Chen et al. [39] | 2012 | 207 | 246 |
| Kumbh Mela | Lempitsky et al. [56] | 2010 | 493 | 487 |
| BRT | Ding et al. [128] | 2020 | 1.4 | 2.0 |
| BRT | Ding et al. [48] | 2018 | 1.4 | 2.0 |
| BRT | Kumagai et al. [122] | 2017 | 1.7 | 2.4 |
| BRT | Zhang et al. [50] | 2016 | 2.2 | 3.4 |
| UCSD | Pandey et al. [46] | 2020 | 2.05 | 4.93 |
| UCSD | Li et al. [96] | 2017 | 1.1 | 1.4 |
| UCSD | Sam et al. [124] | 2017 | 1.6 | 2.1 |
| UCSD | Zhang et al. [50] | 2016 | 1.0 | 1.3 |
| UCSD | Onoro et al. [127] | 2016 | 1.5 | 3.1 |
| UCSD | Zhang et al. [44] | 2015 | 1.6 | 3.3 |
| Mall | Ding et al. [128] | 2020 | 1.8 | 2.3 |
| Mall | Pandey et al. [46] | 2020 | 4.09 | 14.9 |
| Mall | Liu et al. [120] | 2019 | 2.4 | 9.8 |
| Mall | Wang et al. [38] | 2016 | 2.7 | 2.1 |
| Mall | Xu et al. [81] | 2016 | 3.2 | 15.5 |
| Mall | Pham et al. [40] | 2015 | 2.5 | 10 |
| UCF_CC_50 | Ding et al. [128] | 2020 | 309 | 428 |
| UCF_CC_50 | Pandey et al. [46] | 2020 | 483 | 397 |
| UCF_CC_50 | Li et al. [96] | 2017 | 266 | 397 |
| UCF_CC_50 | Zhang et al. [50] | 2016 | 338 | 424 |
| UCF_CC_50 | Zhang et al. [44] | 2015 | 338 | 424 |
| UCF_CC_50 | Onoro et al. [127] | 2016 | 465 | 371 |
| UCF_CC_50 | Sam et al. [124] | 2017 | 306 | 422 |
| UCF_CC_50 | Walach et al. [95] | 2016 | 364 | 341 |
| UCF_CC_50 | Marsden et al. [126] | 2016 | 338 | 424 |
| UCF_CC_50 | Sindagi et al. [123] | 2017 | 310 | 397 |
| Shanghai Tech (Part A) | Ding et al. [128] | 2020 | 69 | 114 |
| Shanghai Tech (Part A) | Pandey et al. [46] | 2020 | 179 | 232 |
| Shanghai Tech (Part A) | Li et al. [96] | 2017 | 68 | 115 |
| Shanghai Tech (Part A) | Zhang et al. [50] | 2016 | 110 | 173 |
| Shanghai Tech (Part A) | Zhang et al. [44] | 2015 | 181 | 277 |
| Shanghai Tech (Part A) | Sam et al. [124] | 2017 | 90 | 135 |
| Shanghai Tech (Part A) | Marsden et al. [126] | 2016 | 128 | 183 |
| Shanghai Tech (Part A) | Sindagi et al. [123] | 2017 | 101 | 152 |
| Shanghai Tech (Part B) | Ding et al. [128] | 2020 | 10 | 14 |
| Shanghai Tech (Part B) | Pandey et al. [46] | 2020 | 43 | 67 |
| Shanghai Tech (Part B) | Li et al. [96] | 2017 | 20 | 31 |
| Shanghai Tech (Part B) | Zhang et al. [50] | 2016 | 23 | 33 |
| Shanghai Tech (Part B) | Zhang et al. [44] | 2015 | 32 | 49 |
| Shanghai Tech (Part B) | Sam et al. [124] | 2017 | 10 | 16 |
| Shanghai Tech (Part B) | Marsden et al. [126] | 2016 | 26 | 41 |
| Shanghai Tech (Part B) | Sindagi et al. [123] | 2017 | 20 | 33 |
| World Expo | Ding et al. [128] | 2020 | 8 | – |
| World Expo | Pandey et al. [46] | 2020 | 18 | – |
| World Expo | Li et al. [96] | 2017 | 8 | – |
| World Expo | Zhang et al. [50] | 2016 | 11 | – |
| World Expo | Zhang et al. [44] | 2015 | 12 | – |
| World Expo | Sam et al. [124] | 2017 | 9 | – |
| World Expo | Shang et al. [98] | 2016 | 11 | – |
| World Expo | Sindagi et al. [123] | 2017 | 8 | – |
| World Expo | Chen et al. [39] | 2012 | 16 | – |
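The MAE and MSE columns summarize how far the estimated head count of each test image is from its annotated count. The Python sketch below assumes the convention common in the crowd-counting literature, where the reported "MSE" is the square root of the mean squared count error; the function names and the three-image counts are hypothetical and serve only to show the arithmetic.

```python
import math
from typing import Sequence


def _check(gt: Sequence[float], pred: Sequence[float]) -> int:
    """Validate inputs and return the number of test images N."""
    if len(gt) != len(pred) or len(gt) == 0:
        raise ValueError("ground truth and predictions must be non-empty and equal length")
    return len(gt)


def mae(gt: Sequence[float], pred: Sequence[float]) -> float:
    """Mean absolute error: (1/N) * sum |z_i - z_hat_i|."""
    n = _check(gt, pred)
    return sum(abs(g - p) for g, p in zip(gt, pred)) / n


def counting_mse(gt: Sequence[float], pred: Sequence[float]) -> float:
    """'MSE' as commonly reported in crowd counting: sqrt((1/N) * sum (z_i - z_hat_i)^2)."""
    n = _check(gt, pred)
    return math.sqrt(sum((g - p) ** 2 for g, p in zip(gt, pred)) / n)


if __name__ == "__main__":
    ground_truth = [100, 250, 40]  # hypothetical annotated counts for three test images
    predicted = [110, 230, 40]     # hypothetical model estimates for the same images
    print(f"MAE = {mae(ground_truth, predicted):.2f}")          # MAE = 10.00
    print(f"MSE = {counting_mse(ground_truth, predicted):.2f}")  # MSE = 12.91
```

MAE measures the typical size of the counting error, while the squared term makes MSE more sensitive to a few badly miscounted images. Because a root-mean-square is never smaller than the corresponding mean absolute value, MSE ≥ MAE holds for any set of images under this convention, and both metrics stay in units of people per image.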

| Year | Reported Paper | Approach Used | Task Performed |
|---|---|---|---|
| 2020 | Fiaschi et al. [15] | regression | density estimation |
| 2020 | Pandey et al. [46] | deep learning | counting |
| 2020 | Zhu et al. [41] | deep learning | counting |
| 2020 | Ding et al. [128] | deep learning | density estimation |
| 2020 | Wang et al. [47] | deep learning | counting |
| 2020 | Li et al. [45] | deep learning | counting |
| 2019 | Alotibi et al. [13] | deep learning | counting |
| 2019 | Alabdulkarim et al. [3] | deep learning | – |
| 2019 | Bharti et al. [19] | deep learning | counting |
| 2019 | Mohamed et al. [4] | deep learning | counting |
| 2019 | Tripathi et al. [61] | deep learning | counting and localization |
| 2019 | Liu et al. [116] | deep learning | density estimation |
| 2019 | Liu et al. [129] | deep learning | density estimation |
| 2018 | Gao et al. [115] | regression | counting |
| 2018 | Miao et al. [97] | deep learning | counting |
| 2018 | Al-Ahmadi et al. [25] | detection | counting |
| 2018 | Majid et al. [8] | deep learning | counting and density estimation |
| 2018 | Idrees et al. [49] | deep learning | density estimation |
| 2018 | Li et al. [96] | deep learning | density estimation |
| 2018 | Motlagh et al. [11] | regression | density estimation |
| 2018 | Al-Sheary et al. [12] | detection | counting |
| 2018 | Chackravarthy et al. [35] | deep learning and detection | counting and localization |
| 2018 | Sheng et al. [130] | deep learning | density estimation and counting |
| 2018 | Ding et al. [48] | deep learning | counting |
| 2018 | Lahiri et al. [62] | detection | behaviour analysis |
| 2017 | Sam et al. [124] | deep learning | counting |
| 2017 | Li et al. [96] | deep learning | density estimation |
| 2017 | Martani et al. [29] | detection | localization |
| 2017 | Kumagai et al. [122] | deep learning | counting |
| 2017 | Rohit et al. [10] | detection | behaviour analysis |
| 2016 | Fradi et al. [65] | deep learning | counting |
| 2016 | Rao et al. [66] | detection | counting |
| 2016 | Zhang et al. [50] | deep learning | counting |
| 2016 | Jackson et al. | deep learning | counting |
| 2016 | Li et al. [26] | deep learning | counting and density estimation |
| 2016 | Perez et al. [29] | detection | density estimation |
| 2016 | Onoro et al. [127] | deep learning | counting |
| 2016 | Hu et al. [114] | deep learning | counting |
| 2016 | Xu et al. [81] | detection | density estimation |
| 2016 | Marsden et al. [126] | deep learning | density estimation |
| 2016 | Walach et al. [95] | deep learning | counting |
| 2016 | Shang et al. [98] | detection | counting and density estimation |
| 2015 | Zhang et al. [44] | deep learning | counting |
| 2015 | Giuffrida et al. [16] | deep learning | counting |
| 2015 | Shao et al. [51] | deep learning | density estimation |
| 2015 | Zhou et al. [36] | deep learning | counting and density estimation |
| 2015 | Danilkina et al. [37] | detection | counting |
| 2015 | Pham et al. [40] | deep learning | counting and density estimation |
| 2015 | Fu et al. [94] | deep learning | density estimation |
| 2015 | Ma et al. [57] | deep learning | density estimation |
| 2014 | Al-Salhie et al. [2] | detection | density estimation |
| 2014 | Jackson et al. | deep learning | density estimation |
| 2014 | Barr et al. [32] | detection | counting |
| 2014 | Shao et al. [42] | detection | counting |
| 2013 | Idrees et al. [43] | detection | counting |
| 2013 | Chen et al. [52] | regression | density estimation |
| 2013 | Chen et al. [86] | detection and regression | behaviour analysis |
| 2012 | Jackson et al. | regression | counting |
| 2012 | Fiaschi et al. [15] | regression | counting |
| 2012 | Chen et al. [39] | detection | counting and density estimation |
| 2012 | Song et al. [131] | regression | counting |
| 2012 | Garcia et al. [87] | regression | behaviour analysis and density estimation |
| 2011 | Khouj et al. [31] | detection | counting |
| 2011 | Rahim et al. [5] | detection | counting and density estimation |
| 2011 | Othman et al. [6] | regression | density estimation |
| 2011 | Rodriguez et al. [132] | detection | counting |
| 2010 | Lempitsky et al. [56] | detection | counting |
| 2010 | Zainuddin et al. [23] | regression | density estimation and counting |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Khan, K.; Albattah, W.; Khan, R.U.; Qamar, A.M.; Nayab, D. Advances and Trends in Real Time Visual Crowd Analysis. Sensors 2020, 20, 5073. https://doi.org/10.3390/s20185073