Application of Crowd Simulations in the Evaluation of Tracking Algorithms

Abstract

1. Introduction

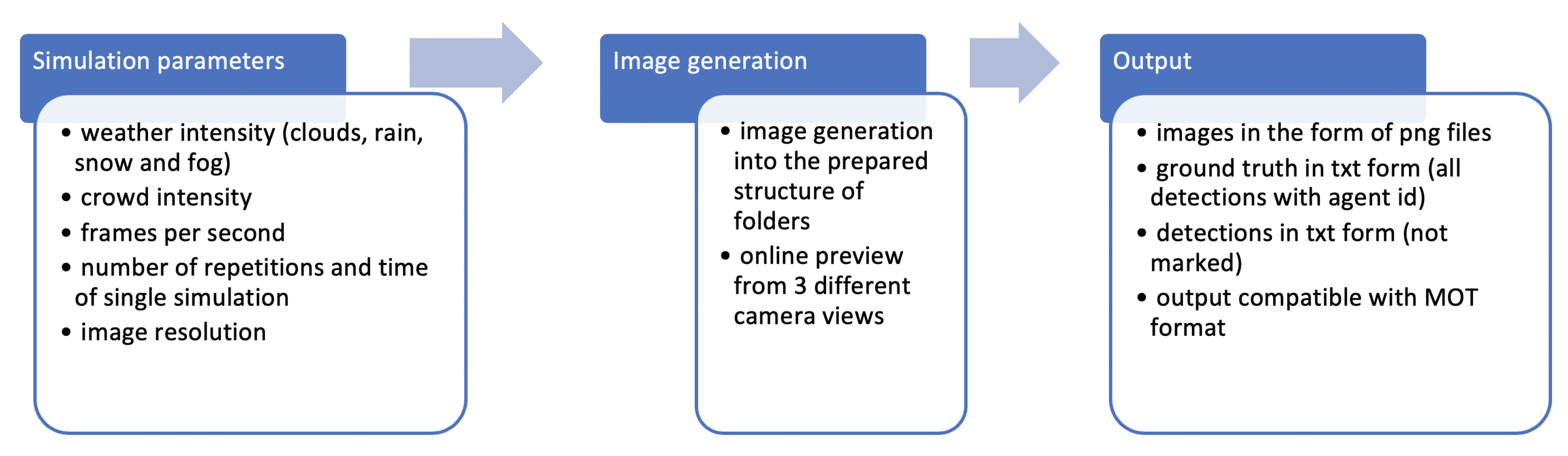

- random generation of image sequences for evaluating multiple-object tracking algorithms, with variation introduced by random starting positions of pedestrians, their unpredictable interactions during the simulation, and the choice of different models

- application of the Unity game engine for crowd simulation

- automatic annotation of object detections and names of actions according to the MOT format

- application of prepared models, generated scenes, and changes in time of day and weather conditions during a simulation
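The automatic annotation step listed above can be illustrated with a minimal sketch. It assumes the ten-column 2D MOTChallenge text layout (frame, identity, left, top, width, height, confidence flag, and three unused world coordinates); the `Box` class and the helper names are hypothetical and are not taken from CrowdSim. Action names, which the simulator also annotates, are not covered here.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class Box:
    """One annotated pedestrian in one frame (hypothetical container)."""
    frame: int      # 1-based frame index
    track_id: int   # pedestrian identity, constant over the sequence
    left: float     # x of the top-left corner, in pixels
    top: float      # y of the top-left corner, in pixels
    width: float
    height: float


def to_mot_rows(boxes):
    """Yield MOTChallenge-style rows sorted by frame, then by identity."""
    for b in sorted(boxes, key=lambda b: (b.frame, b.track_id)):
        # Assumption: flag 1 marks a valid ground-truth entry and the three
        # trailing -1 values fill the unused 3D world-coordinate columns.
        yield [b.frame, b.track_id, b.left, b.top, b.width, b.height, 1, -1, -1, -1]


def write_mot(boxes, stream):
    """Write the rows as comma-separated text, one box per line."""
    csv.writer(stream, lineterminator="\n").writerows(to_mot_rows(boxes))


# Two frames of a single pedestrian, deliberately given out of order.
boxes = [Box(2, 1, 12.0, 20.0, 30.0, 80.0), Box(1, 1, 10.0, 20.0, 30.0, 80.0)]
buffer = io.StringIO()
write_mot(boxes, buffer)
mot_text = buffer.getvalue()
```

Because a simulator knows every pedestrian's identity and screen-space box in each frame, such rows can be emitted without manual labeling.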

2. Materials and Methods

2.1. Proposed Solution—CrowdSim

2.2. Evaluation Scheme

- adaptation of the input data to suit the input format of each algorithm

- conversion of algorithm outputs to the form standardized by MOTChallenge

- automated execution of the algorithms in the form of a pipeline

- collection of standardized outputs and performance ratings using the MOTChallenge workspace

- workspace clean-up and preparation for another run
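The five steps above can be sketched as a small driver loop. This is only an illustration under stated assumptions: the `adapt`, `track`, and `convert` callables, the `evaluate` function, and the `result.txt` file name are all hypothetical placeholders, not the interfaces of the evaluated algorithms or of the MOTChallenge tooling.

```python
import shutil
import tempfile
from pathlib import Path


def run_pipeline(trackers, sequence, evaluate):
    """Run every tracker on one sequence and collect its score.

    `trackers` maps a name to three hypothetical callables:
    `adapt` converts the shared input to the tracker's own format,
    `track` runs the algorithm, and `convert` maps its output to
    MOTChallenge-style rows.
    """
    results = {}
    for name, steps in trackers.items():
        workspace = Path(tempfile.mkdtemp(prefix=f"{name}_"))
        try:
            native_input = steps["adapt"](sequence)    # step 1: adapt input
            raw_output = steps["track"](native_input)  # step 3: run tracker
            rows = steps["convert"](raw_output)        # step 2: standardize
            out_file = workspace / "result.txt"
            out_file.write_text("\n".join(",".join(map(str, r)) for r in rows))
            results[name] = evaluate(out_file)         # step 4: collect score
        finally:
            # step 5: clean the workspace so the next run starts fresh
            shutil.rmtree(workspace, ignore_errors=True)
    return results


# Toy tracker that assigns identity 1 to every detection (frame, l, t, w, h).
demo_sequence = [(1, 10, 20, 30, 80), (2, 12, 20, 30, 80)]
demo_trackers = {
    "echo": {
        "adapt": lambda seq: list(seq),
        "track": lambda dets: [(f, 1, *box) for f, *box in dets],
        "convert": lambda tracks: [(*t, 1, -1, -1, -1) for t in tracks],
    }
}
# The stand-in evaluator simply counts the rows written to the result file.
scores = run_pipeline(
    demo_trackers, demo_sequence, lambda p: len(p.read_text().splitlines())
)
```

Keeping the adaptation and conversion steps per tracker means the shared evaluation code never needs to know an algorithm's native format.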

2.3. Tracking Algorithms

- Tracking by detection (TBD) by Andreas Geiger [6], in which multiple-object tracking is performed in three stages. All detections are correlated with detections from consecutive frames using bounding box overlap and appearance. A Kalman filter is used for prediction, and detections are then matched between frames using the Hungarian method for bipartite matching. To reduce the number of missed detections caused by gaps or occlusions, the author of TBD also employed tracklet association. TBD was originally tested on the author’s own dataset.

- Tracklet Confidence (TCF) by Seung-Hwan Bae and Kuk-Jin Yoon [31]. The method relies on tracklet confidence to handle track fragments caused by unreliable detections and occlusions, and on online discriminative appearance learning to avoid errors. For the performance evaluation, the authors used the following datasets: CAVIAR [32], PETS09 [1], and ETH Mobile scene [33].

- Enhancing linear programming (ELP) with Motion Modeling for Multi-target Tracking [34]. During the detection phase, this algorithm employs the authors’ pedestrian detector, which produces a group of detections in the form of bounding boxes. All detected objects are then gathered into tracklets that cover every object and form a network. The method was originally evaluated on the Oxford town center dataset [35] and PETS09 [1].

- High density homogeneous (HDH) [36]. Unlike most object detectors, this one requires no object texture or image learning. The detection algorithm localizes targets using a local maxima search, and tracking is based on a greedy, graph-based method that matches objects with short tracks and performs backward validation in time windows. The method was verified on ETH [33], PETS09 [1], and TUD [37].

- Discrete continuous energy (DCT) [38]. This approach incorporates both data association and trajectory estimation in one objective function. Its main benefit is that the continuous component can model many trajectory properties that a purely discrete formulation cannot capture. The method gathers all unlabeled detections, creates possible trajectory hypotheses, and then re-estimates those trajectories using discrete-continuous optimization. The method was evaluated on ETH [33], PETS09 [1], and TUD [37].
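Several of the methods above associate detections between frames by bounding box overlap. The following sketch illustrates that single idea and is not the implementation of any listed tracker: it computes intersection over union (IoU) and finds the one-to-one matching with the highest total overlap. As a plainly named substitute for the Hungarian method, it enumerates all permutations by brute force, which is practical only for a handful of boxes. Appearance cues and Kalman prediction are omitted, and the `min_iou` threshold of 0.3 is an assumed value.

```python
from itertools import permutations


def iou(a, b):
    """Intersection over union of two (left, top, width, height) boxes."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    iw = max(0.0, min(ax2, bx2) - max(a[0], b[0]))
    ih = max(0.0, min(ay2, by2) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def match(prev_boxes, curr_boxes, min_iou=0.3):
    """Return (prev_index, curr_index) pairs that maximize total IoU.

    Brute-force stand-in for the Hungarian method: every permutation of
    the padded index range is one candidate one-to-one assignment.
    """
    size = max(len(prev_boxes), len(curr_boxes))
    best_pairs, best_score = [], -1.0
    for perm in permutations(range(size)):
        # Keep only pairs that exist on both sides and overlap enough.
        pairs = [
            (i, j)
            for i, j in enumerate(perm)
            if i < len(prev_boxes)
            and j < len(curr_boxes)
            and iou(prev_boxes[i], curr_boxes[j]) >= min_iou
        ]
        score = sum(iou(prev_boxes[i], curr_boxes[j]) for i, j in pairs)
        if score > best_score:
            best_pairs, best_score = pairs, score
    return best_pairs


prev = [(0, 0, 10, 10), (100, 100, 10, 10)]
curr = [(101, 100, 10, 10), (1, 0, 10, 10), (300, 300, 10, 10)]
matches = match(prev, curr)  # the third current box stays unmatched
```

An unmatched current box, such as the third one here, would typically start a new track, while an unmatched previous box marks a gap that tracklet association may later close.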

3. Results

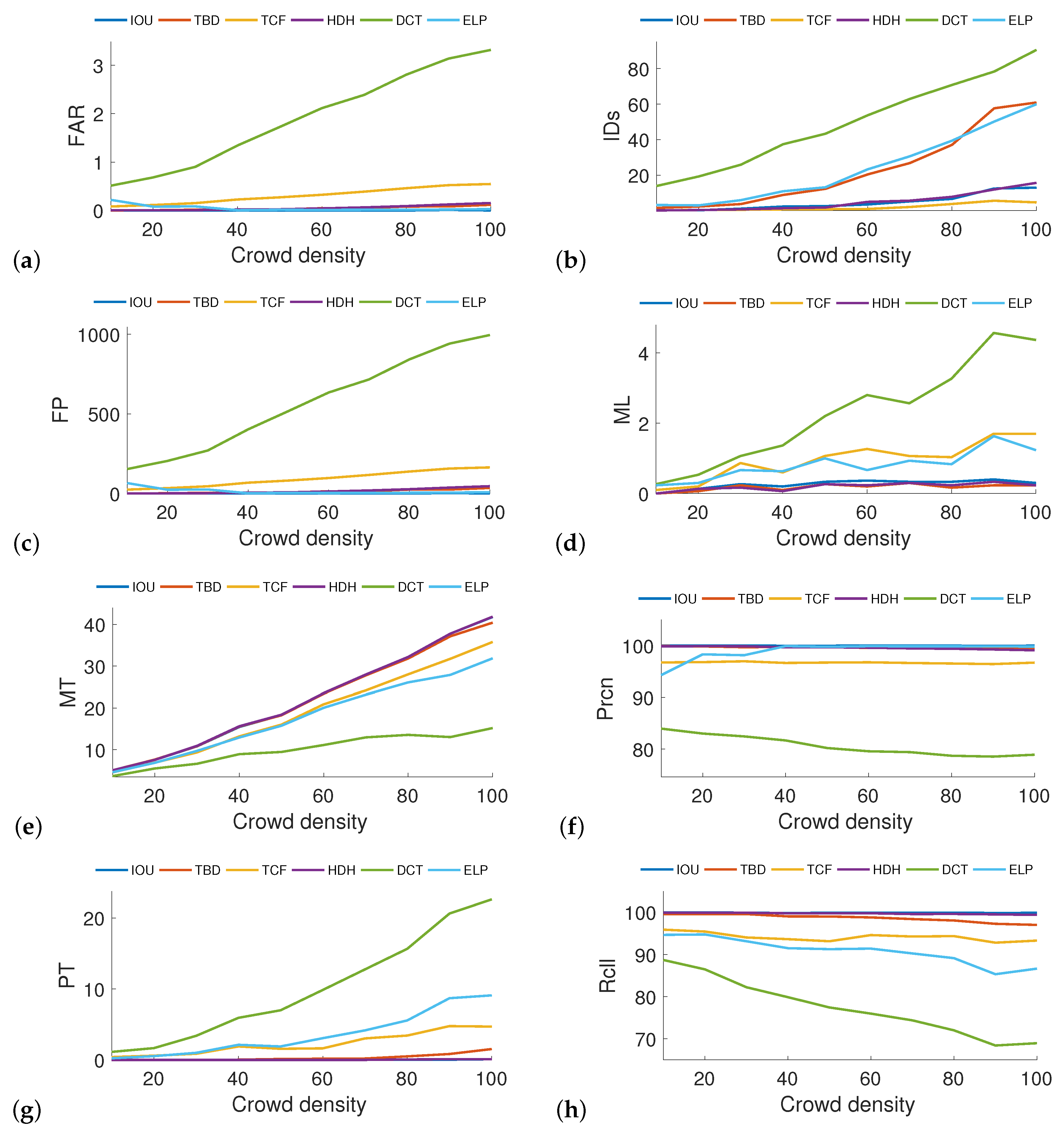

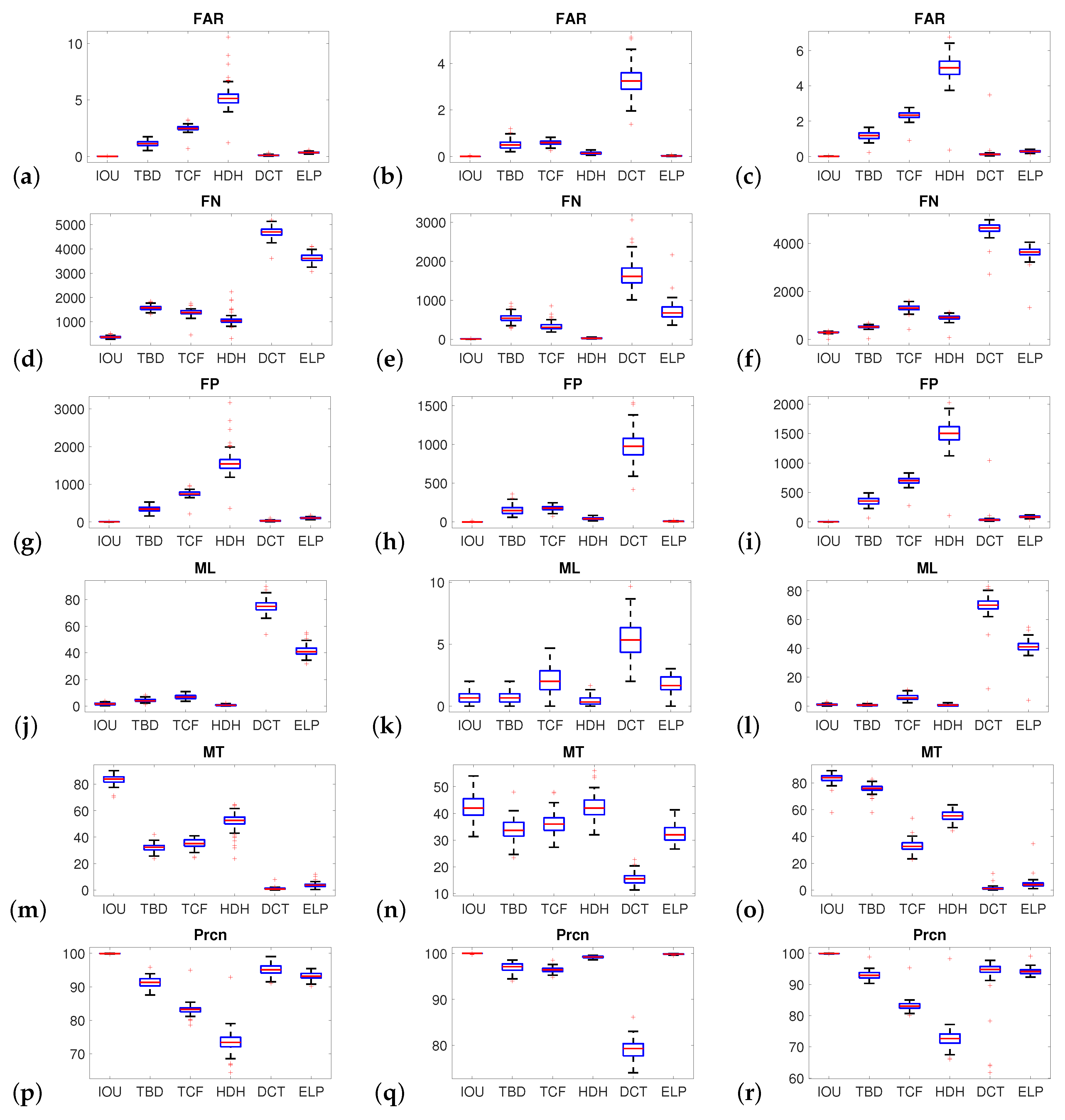

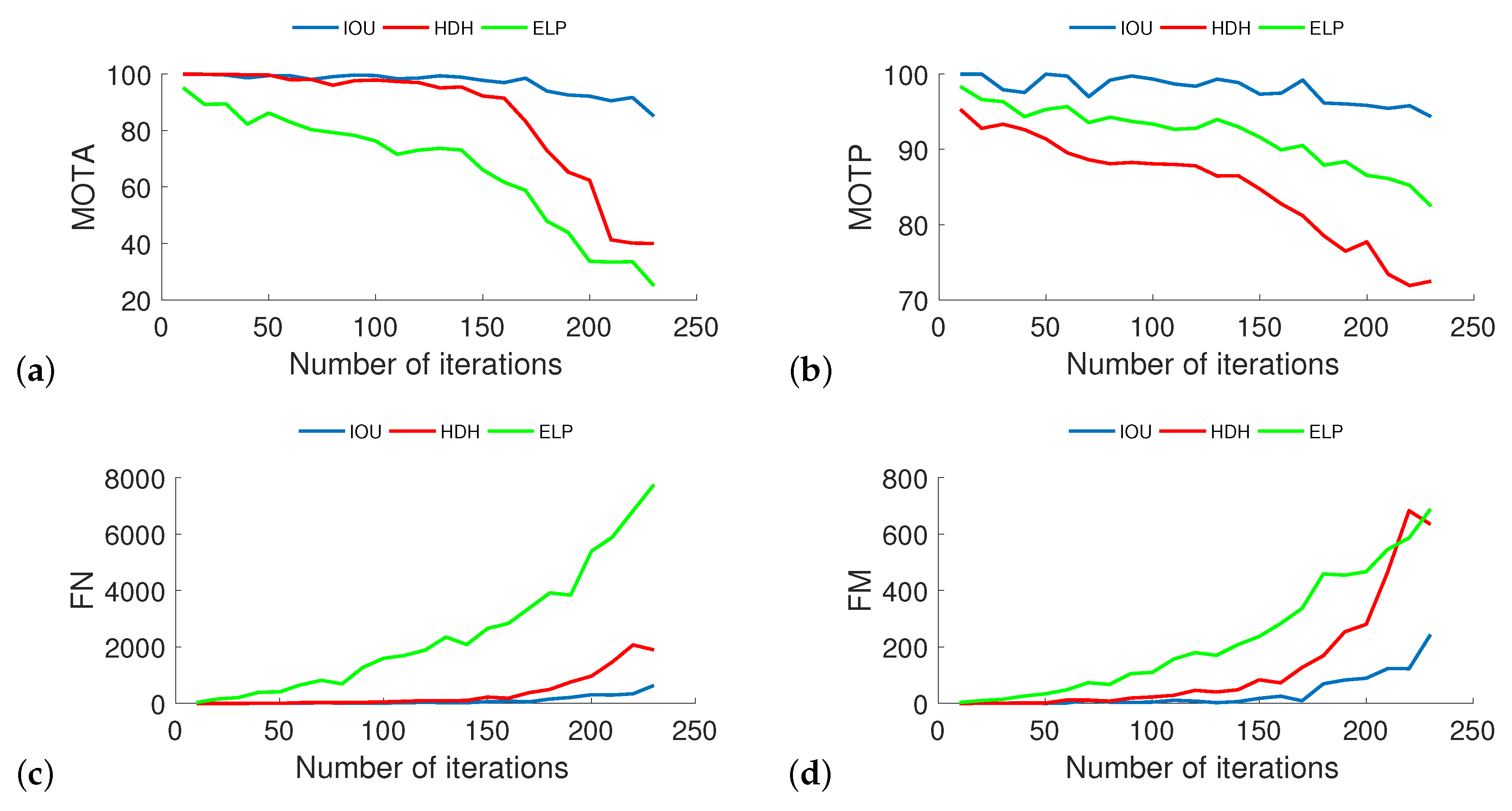

3.1. Crowd Density

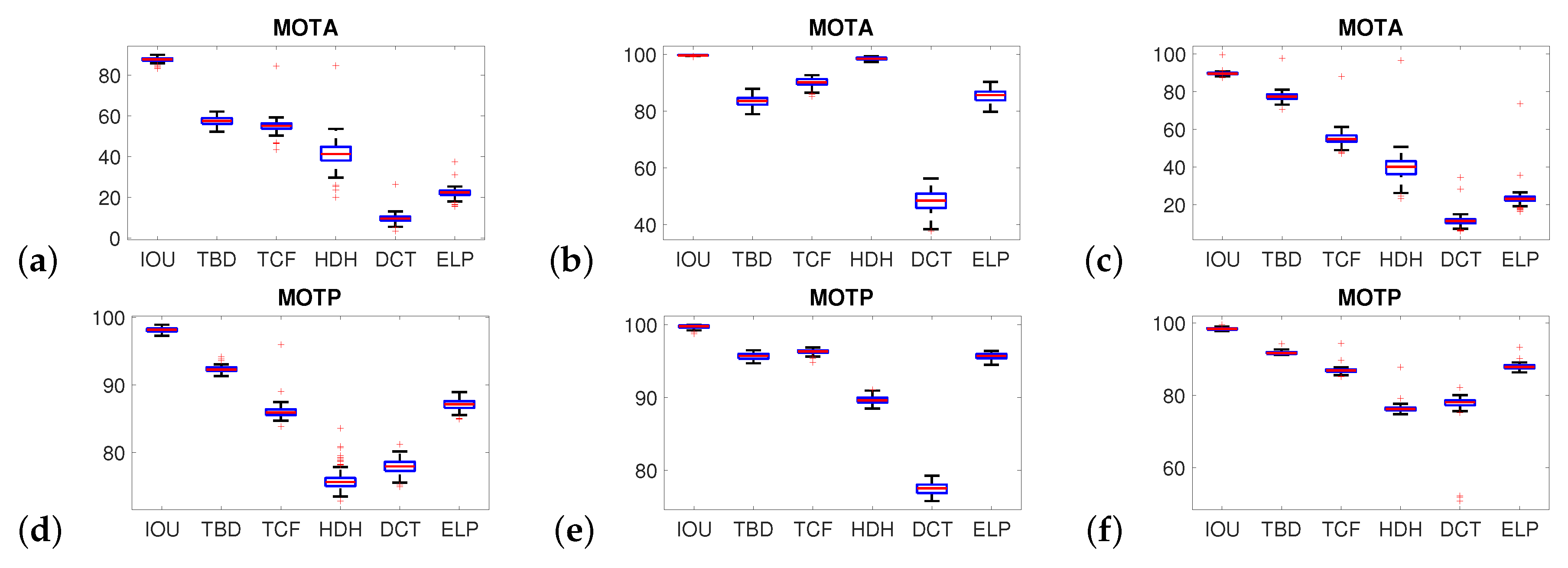

3.2. Weather Conditions

3.3. Comparison to MOTChallenge

4. Discussion

4.1. Application of the Concept of Crowd Simulations

4.2. Future Works

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| PT | Partially Tracked |
| MT | Mostly Tracked |
| ML | Mostly Lost |
| FM | Fragments |
| IDs | ID switches |
| FP | False Positive |
| FN | False Negative |
| GT | Ground Truth |
| Rcll | Recall |
| Prcn | Precision |
| FAR | False positives relative to the total number of frames |
| MOTA | Multiple-Object Tracking Accuracy |
| MOTP | Multiple-Object Tracking Precision |
| IOU | High-speed tracking by detection based on Intersection Over Union |
| TBD | Tracking By Detection |
| TCF | Tracklet Confidence |
| ELP | Enhancing Linear Programming |
| HDH | High Density Homogeneous |
| DCT | Discrete Continuous Energy |
| FPS | Frames Per Second |

Appendix A

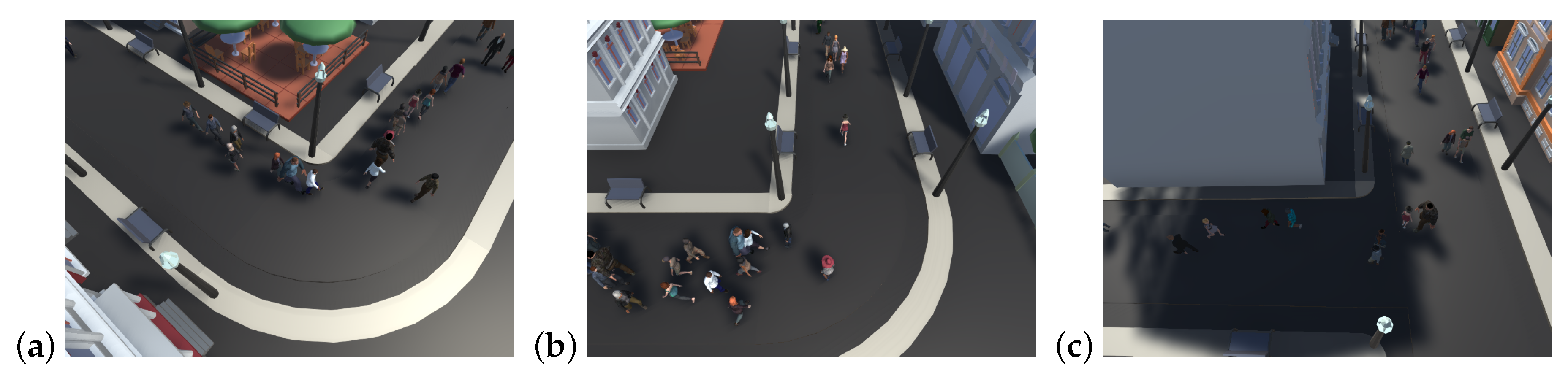

Appendix A.1. CrowdSim—Examples

Appendix A.2. Crowd Density

Appendix A.3. Weather Conditions

References

1. Bashir, F.; Porikli, F. Performance Evaluation of Object Detection and Tracking Systems. In Proceedings of the IEEE International Workshop on Performance Evaluation of Tracking and Surveillance (PETS), Hyderabad, India, 13–16 January 2006; pp. 7–14.

2. Leal-Taixé, L.; Milan, A.; Reid, I.; Roth, S.; Schindler, K. MOTChallenge 2015: Towards a Benchmark for Multi-Target Tracking. arXiv 2015, arXiv:1504.01942.

3. Milan, A.; Leal-Taixé, L.; Reid, I.; Roth, S.; Schindler, K. MOT16: A Benchmark for Multi-Object Tracking. arXiv 2016, arXiv:1603.00831.

4. Dendorfer, P.; Rezatofighi, H.; Milan, A.; Shi, J.; Cremers, D.; Reid, I.; Roth, S.; Schindler, K.; Leal-Taixé, L. CVPR19 Tracking and Detection Challenge: How crowded can it get? arXiv 2019, arXiv:1906.04567.

5. Dendorfer, P.; Rezatofighi, H.; Milan, A.; Shi, J.; Cremers, D.; Reid, I.; Roth, S.; Schindler, K.; Leal-Taixé, L. MOT20: A benchmark for multi object tracking in crowded scenes. arXiv 2020, arXiv:2003.09003.

6. Geiger, A. Probabilistic Models for 3D Urban Scene Understanding from Movable Platforms. Pattern Anal. Mach. Intell. 2013.

7. Kulbacki, M.; Segen, J.; Wojciechowski, S.; Wereszczyński, K.; Nowacki, J.P.; Drabik, A.; Wojciechowski, K. Intelligent Video Monitoring System with the Functionality of Online Recognition of People’s Behavior and Interactions Between People. Lect. Notes Comput. Sci. 2018, 10752.

8. Staniszewski, M.; Kloszczyk, M.; Segen, J.; Wereszczyński, K.; Drabik, A.; Kulbacki, M. Recent developments in tracking objects in a video sequence. Lect. Notes Comput. Sci. 2016, 9622, 427–436.

9. Wojciechowski, S.; Kulbacki, M.; Segen, J.; Wycislok, R.; Bąk, A.; Wereszczynski, K.; Wojciechowski, K. Selected Space-Time Based Methods for Action Recognition. Lect. Notes Comput. Sci. 2016, 9622, 417–426.

10. Kim, S.; Bera, A.; Best, A.; Chabra, R.; Manocha, D. Interactive and adaptive data-driven crowd simulation. IEEE Virtual Reality (VR) 2016, 29–38.

11. Patil, S.; van den Berg, J.; Curtis, S.; Lin, M.; Manocha, D. Directing crowd simulations using navigation fields. IEEE Trans. Vis. Comput. Graph. 2011, 17, 244–254.

12. Sewall, J.; Wilkie, D.; Lin, M.C. Interactive hybrid simulation of large-scale traffic. ACM Trans. Graph. 2011, 30, 135:1–135:12.

13. Bi, H.; Mao, T.; Wang, Z.; Deng, Z. A Deep Learning-based Framework for Intersectional Traffic Simulation and Editing. IEEE Trans. Vis. Comput. Graph. 2019.

14. Mathew, T.; Benes, B.; Aliaga, D. Interactive Inverse Spatio-Temporal Crowd Motion Design. Symp. Interact. Graph. Games Assoc. Comput. Mach. 2020, 7, 1–9.

15. Ren, J.; Xiang, W.; Xiao, Y.; Yang, R.; Manocha, D.; Jin, X. Heter-Sim: Heterogeneous Multi-Agent Systems Simulation by Interactive Data-Driven Optimization. IEEE Trans. Vis. Comput. Graph. 2019.

16. Karamouzas, I.; Overmars, M. Simulating and Evaluating the Local Behavior of Small Pedestrian Groups. IEEE Trans. Vis. Comput. Graph. 2012, 18, 394–406.

17. Pax, R.; Pavon, J. Multi-Agent System Simulation of Indoor Scenarios. Ann. Comput. Sci. Inf. Syst. 2015, 5, 1757–1763.

18. Kormanová, A. A Review on Macroscopic Pedestrian Flow Modelling. Acta Inform. Pragensia 2013, 2, 39–50.

19. Golaem Crowd Simulation. Available online: http://golaem.com/content/product/golaem (accessed on 1 September 2020).

20. Massive Simulating Life. Available online: http://www.massivesoftware.com/about.html (accessed on 1 September 2020).

21. Van Toll, W.; Jaklin, N.; Geraerts, R. Towards believable crowds: A generic multi-level framework for agent navigation. In Proceedings of the 20th Annual Conference of the Advanced School for Computing and Imaging, Amersfoort, The Netherlands, 23 March 2015.

22. He, Z.; Shi, M.; Li, C. Research and application of path-finding algorithm based on unity 3D. In Proceedings of the 2016 IEEE/ACIS 15th International Conference on Computer and Information Science (ICIS), Okayama, Japan, 26–29 June 2016; pp. 1–4.

23. Kristinsson, K.V. Social Navigation in Unity 3D. MSc Project. Master’s Thesis, School of Computer Science, Reykjavik University, Reykjavik, Iceland, 2015.

24. Forbus, F.D.; Wright, W. Some Notes on Programming Objects in The Sims™. 2001. Available online: http://www.qrg.northwestern.edu/papers/Files/Programming_Objects_in_The_Sims.pdf (accessed on 1 September 2020).

25. Musse, S.R.; Jung, C.R.; Jacques, J.C.S.; Braun, A. Using computer vision to simulate the motion of virtual agents. J. Vis. Comput. Animat. 2007, 18, 83–93.

26. Kim, S.; Bera, A.; Manocha, D. Interactive crowd content generation and analysis using trajectory-level behavior learning. In Proceedings of the IEEE International Symposium on Multimedia (ISM), Miami, FL, USA, 14–16 December 2015.

27. Cui, X.; Shi, H. A*-based pathfinding in modern computer games. Int. J. Comput. Sci. Netw. Secur. 2011, 11, 125–130.

28. Bochinski, E.; Eiselein, V.; Sikora, T. High-speed tracking-by-detection without using image information. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–6.

29. Bochinski, E.; Senst, T.; Sikora, T. Extending IOU Based Multi-Object Tracking by Visual Information. In Proceedings of the IEEE International Conference on Advanced Video and Signals-based Surveillance, Auckland, New Zealand, 27–30 November 2018.

30. Wen, L.; Du, D.; Cai, Z.; Lei, Z.; Chang, M.; Qi, H.; Lim, J.; Yang, M.; Lyu, S. DETRAC: A new benchmark and protocol for multi-object detection and tracking. arXiv 2015, arXiv:1511.04136.

31. Bae, S.-H.; Yoon, K.-J. Robust Online Multi-Object Tracking based on Tracklet Confidence and Online Discriminative Appearance Learning. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

32. Caviar Dataset. Available online: http://homepages.inf.ed.ac.uk/rbf/caviardata1/ (accessed on 1 September 2020).

33. Ess, A.; Leibe, B.; Schindler, K.; Van Gool, L. A mobile vision system for robust multi-person tracking. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008.

34. McLaughlin, N.; Martinez Del Rincon, J.; Miller, P. Enhancing Linear Programming with Motion Modelling for Multi-target Tracking. In Proceedings of the 2015 IEEE Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 5–9 January 2015; pp. 71–77.

35. Benfold, B.; Reid, I. Stable multi-target tracking in real-time surveillance video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 20–25 June 2011; pp. 3457–3464.

36. Poiesi, F.; Cavallaro, A. Tracking multiple high-density homogeneous targets. IEEE Trans. Circuits Syst. Video Technol. 2014, 25, 623–637.

37. TUD Dataset. Available online: https://www.d2.mpi-inf.mpg.de/node/428 (accessed on 1 September 2020).

38. Milan, A.; Schindler, K.; Roth, S. Multi-Target Tracking by Discrete-Continuous Energy Minimization. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 2054–2068.

39. Henschel, R.; Zou, Y.; Rosenhahn, B. Multiple People Tracking using Body and Joint Detections. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Long Beach, CA, USA, 16–17 June 2019.

40. Chu, P.; Ling, H. FAMNet: Joint Learning of Feature, Affinity and Multi-Dimensional Assignment for Online Multiple Object Tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 6171–6180.

41. Sheng, H.; Zhang, Y.; Chen, J.; Xiong, Z.; Zhang, J. Heterogeneous Association Graph Fusion for Target Association in Multiple Object Tracking. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 3269–3280.

42. Yoon, K.; Gwak, J.; Song, Y.; Yoon, Y.; Jeon, M. OneShotDA: Online Multi-Object Tracker with One-Shot-Learning-Based Data Association. IEEE Access 2020, 8, 38060–38072.

43. Henschel, R.; Leal-Taixe, L.; Cremers, D.; Rosenhahn, B. Fusion of Head and Full-Body Detectors for Multi-Object Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Salt Lake, UT, USA, 19–21 June 2018.

44. Keuper, M.; Tang, S.; Andres, B.; Brox, T.; Schiele, B. Motion Segmentation and Multiple Object Tracking by Correlation Co-Clustering. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 140–153.

45. Sheng, H.; Chen, J.; Zhang, Y.; Ke, W.; Xiong, Z.; Yu, J. Iterative Multiple Hypothesis Tracking with Tracklet-Level Association. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 3660–3672.

46. Fu, Z.; Angelini, F.; Chambers, J.; Naqvi, S.M. Multi-Level Cooperative Fusion of GM-PHD Filters for Online Multiple Human Tracking. IEEE Trans. Multimed. 2019, 21, 2277–2291.

47. Kim, C.; Li, F.; Rehg, J.M. Multi-object Tracking with Neural Gating Using Bilinear LSTM. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 200–215.

48. Karunasekera, H.; Wang, H.; Zhang, H. Multiple Object Tracking With Attention to Appearance, Structure, Motion and Size. IEEE Access 2019, 7, 104423–104434.

49. Bergmann, P.; Meinhardt, T.; Leal-Taixe, L. Tracking without bells and whistles. arXiv 2019, arXiv:1903.05625.

50. Wang, G.; Wang, Y.; Zhang, H.; Gu, R.; Hwang, J. Exploit the connectivity: Multi-object tracking with trackletnet. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019.

51. Xu, Y.; Osep, A.; Ban, Y.; Horaud, R.; Leal-Taixe, L.; Alameda-Pineda, X. How To Train Your Deep Multi-Object Tracker. arXiv 2019, arXiv:1906.06618.

52. Rezatofighi, S.H.; Milan, A.; Zhang, Z.; Shi, Q.; Dick, A.; Reid, I. Joint Probabilistic Data Association Revisited. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

53. Kitti Dataset. Available online: http://www.cvlibs.net/datasets/kitti/eval_tracking.php (accessed on 1 September 2020).

54. Average Frame Rate Video Surveillance 2019 by: IPVM Team. Published on 23 May 2019. Available online: https://ipvm.com/reports/avg-frame-rate-2019 (accessed on 1 September 2020).

55. Keval, H.; Sasse, M.A. To catch a thief—You need at least 8 frames per second: The impact of frame rates on user performance in a CCTV detection task. In Proceedings of the 16th ACM International Conference on Multimedia, Vancouver, BC, Canada, 27–31 October 2008.

56. Choi, W. Near-Online Multi-target Tracking with Aggregated Local Flow Descriptor. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 3029–3037.

| Parameter | Details |
|---|---|
| Number of pedestrians | 10–100 (step 10) |
| Frame rate | 10 FPS |
| Length | 30 s |
| Number of cameras | 3 |
| Total number of sessions | 300 |

| Parameter | Details |
|---|---|
| Number of sessions per weather condition | 100 |
| Number of pedestrians | 100 |
| Number of weather conditions | 3 |
| Frame rate | 10 FPS |
| Length | 30 s |
| Number of cameras | 3 |
| Total number of sessions | 900 |

| Metric | Description |
|---|---|
| PT (partially tracked) | The number of objects tracked for more than 20% but less than 80% of their presence in a scene |
| MT (mostly tracked) | The number of objects tracked for more than 80% of their presence in a scene |
| ML (mostly lost) | The number of objects tracked for less than 20% of their presence in a scene |
| FM (fragments) | The number of trajectories with one or more gaps in their tracklets |
| IDs (ID switches) | The number of times the tracking algorithm continues tracking the wrong object, for example after one object occludes another |
| FP (false positive) | The number of instances where an algorithm identified a trajectory but could not relate it to an existing object |
| FN (false negative) | The number of objects not tracked by an algorithm |
| GT (ground truth) | The number of objects described in the reference file |
| Recall | Ratio of objects actually tracked to objects that were possible to track (GT) |
| Precision | Ratio of correct trajectories to the total number of trajectories reported by the algorithm |
| FAR | The number of false positives relative to the total number of frames comprising a tested sequence |
| MOTA | Accuracy measure combining three sources of error: false negatives, false positives, and ID switches |
| MOTP | Average difference between true positives and their corresponding ground truth targets |
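The definitions in the table above can be made concrete with a short sketch. It assumes the standard CLEAR MOT formulation, MOTA = 1 − (FN + FP + IDs) / GT, with GT counted as ground-truth object instances over all frames; whether the evaluation workspace computes every value in exactly this way is an assumption. The function names and the example counts are hypothetical.

```python
def classify_track(tracked_frames, total_frames):
    """Label one ground-truth trajectory as MT, PT or ML by its coverage."""
    coverage = tracked_frames / total_frames
    if coverage > 0.8:
        return "MT"   # mostly tracked
    if coverage < 0.2:
        return "ML"   # mostly lost
    # Coverage of exactly 20% or 80% is not covered by the table;
    # this sketch assigns those boundary cases to PT.
    return "PT"


def summary(fp, fn, id_switches, gt, frames):
    """Derive the ratio metrics from raw error counts."""
    tp = gt - fn  # ground-truth instances that were actually tracked
    return {
        "recall": tp / gt,
        "precision": tp / (tp + fp),
        "far": fp / frames,
        "mota": 1.0 - (fn + fp + id_switches) / gt,
    }


# Illustrative counts only; they are not results from the paper.
labels = [classify_track(t, 100) for t in (95, 50, 10)]
stats = summary(fp=10, fn=20, id_switches=5, gt=100, frames=50)
```

Note that MOTA can become negative when the combined number of errors exceeds the number of ground-truth instances.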

| Crowd Density | | MOTChallenge | | Rain | | Snow | | Fog/Steam | |
|---|---|---|---|---|---|---|---|---|---|
| Method | Average | Method | Average | Method | Average | Method | Average | Method | Average |
| IOU | 99.8 | IOU | 57.1 | IOU | 87.5 | IOU | 99.6 | IOU | 89.6 |
| HDH | 99.2 | TBD | 33.7 | TBD | 57.5 | HDH | 98.5 | TBD | 77.4 |
| TBD | 97.7 | DCT | 33.2 | TCF | 54.9 | TCF | 90.1 | TCF | 55.1 |
| TCF | 90.9 | ELP | 25 | HDH | 41.1 | ELP | 85.4 | HDH | 40 |
| ELP | 88.7 | TCF | 15.1 | ELP | 22.1 | TBD | 83.4 | ELP | 23.4 |
| DCT | 56.9 | HDH | n/c | DCT | 9.6 | DCT | 48.3 | DCT | 11.4 |

| Crowd Density | | MOTChallenge | | Rain | | Snow | | Fog/Steam | |
|---|---|---|---|---|---|---|---|---|---|
| Method | Average | Method | Average | Method | Average | Method | Average | Method | Average |
| IOU | 99.9 | IOU | 77.1 | IOU | 98.2 | IOU | 99.7 | IOU | 98.3 |
| TCF | 97.2 | TBD | 76.5 | TBD | 92.4 | TCF | 96.3 | TBD | 91.7 |
| ELP | 96.8 | DCT | 75.8 | ELP | 87.1 | TBD | 95.7 | ELP | 87.9 |
| TBD | 96.5 | ELP | 71.2 | TCF | 86.1 | ELP | 95.7 | TCF | 86.8 |
| HDH | 91.5 | TCF | 70.5 | DCT | 77.9 | HDH | 89.6 | DCT | 77.2 |
| DCT | 78.6 | HDH | n/c | HDH | 76 | DCT | 77.5 | HDH | 76.3 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Staniszewski, M.; Foszner, P.; Kostorz, K.; Michalczuk, A.; Wereszczyński, K.; Cogiel, M.; Golba, D.; Wojciechowski, K.; Polański, A. Application of Crowd Simulations in the Evaluation of Tracking Algorithms. Sensors 2020, 20, 4960. https://doi.org/10.3390/s20174960
