1. Introduction

Bridges are exposed to many external loads, such as traffic, wind, flooding, and earthquakes, and monitoring them is becoming increasingly important for safety. Displacements can be measured directly with, for example, linear variable differential transformers (LVDTs) and laser-based displacement sensors. However, measuring displacements across a whole bridge requires many LVDTs, which is both costly and time-consuming. Displacements can also be obtained with non-contact methods such as GPS, but the precision of GPS displacement measurements is not satisfactory. Nassif et al. [1] compared girder displacements measured with a non-contact laser Doppler vibrometer (LDV) with those from an LVDT (deflection) and geophones (velocity) attached to the girder, and found similar accuracy. Gentile and Bernardini [2] proposed a radar system, IBIS-S, to measure static or dynamic displacements at several points on a structure. Kohut et al. [3] compared the static displacements of a structure under load measured by digital image correlation with those measured by radar. The main advantage of digital image correlation is that it is cheaper and makes it easier to obtain the displacements of multiple points simultaneously. Fukuda et al. [4] proposed a vision-based system for measuring the displacement of large-scale structures that requires only a low-cost digital camcorder, a notebook computer, and a target panel with predesigned marks. Ribeiro et al. [5] developed a non-contact dynamic displacement sensor system based on video technology and used it to measure displacements in both laboratory and field settings. Both works reported accuracy similar to or higher than that of traditional methods such as LVDT measurements.

When computer vision is applied to structural health monitoring, template matching is typically used to track structural deformation. Olaszek [6] first used template matching to obtain the dynamic characteristics of bridges. Sładek [7] measured the in-plane displacement of a beam using template matching. Wu et al. [8] developed a vision system based on digital image processing and computer vision to monitor the 2D in-plane vibrations of a reduced-scale frame mounted on a shake table. Busca et al. [9] proposed a vision-based displacement monitoring system using three different template matching algorithms, namely pattern matching, edge detection, and digital image correlation (DIC). In a field test, they obtained the vertical displacement of a railway bridge by tracking high-contrast target panels fixed to the bridge.
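For readers unfamiliar with template matching, the basic integer-pixel idea can be sketched in Python with NumPy: a template is slid over the image, and the position with the highest zero-mean normalized cross-correlation is taken as the match. This is a brute-force illustration under our own assumptions (the function name `match_template_ncc` is hypothetical), not the algorithm of any of the cited works.

```python
import numpy as np

def match_template_ncc(image, template):
    """Hypothetical helper: top-left (row, col) of the best match of `template`
    in `image` by zero-mean normalized cross-correlation (integer pixels only)."""
    image = np.asarray(image, dtype=float)
    template = np.asarray(template, dtype=float)
    th, tw = template.shape
    t = template - template.mean()
    t_norm = np.sqrt((t * t).sum())
    best_score, best_pos = -np.inf, (0, 0)
    # Brute-force search over every placement of the template inside the image.
    for r in range(image.shape[0] - th + 1):
        for c in range(image.shape[1] - tw + 1):
            patch = image[r:r + th, c:c + tw]
            p = patch - patch.mean()
            denom = np.sqrt((p * p).sum()) * t_norm
            if denom == 0:
                continue  # flat patch or template: correlation undefined
            score = (p * t).sum() / denom
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_pos, best_score
```

Subtracting the matched position in the first frame from the matched position in later frames gives the target displacement in pixels, at integer-pixel precision only.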

Computer vision methods can also track structural deformations without template targets. Poudel et al. [10] proposed an algorithm that locates edges with sub-pixel precision using information from neighboring pixels, and used these edge locations to track structural deformations. Wahbeh et al. [11] followed a similar approach to measure displacement directly. Debella-Gilo et al. [12] proposed two approaches to achieve sub-pixel precision. In the first, the images were interpolated with a bi-cubic gray-scale interpolation scheme before the displacement calculation. In the second, image pairs were correlated at the original image resolution, and sub-pixel precision was then obtained by bi-cubic interpolation, parabola fitting, or Gaussian fitting of the correlation surface. Chen et al. [13] proposed a digital photogrammetry method that uses artificial targets to measure the ambient vibration response at different locations, and successfully identified the mode shape ratios of stay cables using multiple camcorders. Feng et al. [14] proposed a vision sensor system for remote measurement of structural displacements based on a subpixel-level template matching technique using Fourier transforms.
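The parabola and Gaussian fitting mentioned above can be sketched as a three-point fit around the integer-pixel peak of a correlation profile. The Python function below (`subpixel_peak_1d`, a hypothetical name) is a minimal one-dimensional illustration; for a 2D correlation surface, the same fit is commonly applied separately along each axis. The Gaussian variant fits a parabola to the logarithm of the values, so it assumes they are positive.

```python
import numpy as np

def subpixel_peak_1d(values, method="parabola"):
    """Hypothetical helper: subpixel position of the maximum of a 1-D profile
    from a three-point parabola or Gaussian fit around the integer peak."""
    v = np.asarray(values, dtype=float)
    i = int(np.argmax(v))
    if i == 0 or i == len(v) - 1:
        return float(i)  # peak on the border: no neighbours to fit
    left, centre, right = v[i - 1], v[i], v[i + 1]
    if method == "gaussian":
        # A Gaussian fit is a parabola fit to the logarithm (values must be > 0).
        left, centre, right = np.log(left), np.log(centre), np.log(right)
    elif method != "parabola":
        raise ValueError("method must be 'parabola' or 'gaussian'")
    denom = left - 2.0 * centre + right
    if denom == 0:
        return float(i)  # degenerate (flat) neighbourhood
    # Vertex of the parabola through (-1, left), (0, centre), (1, right).
    return i + (left - right) / (2.0 * denom)
```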

The effectiveness of computer vision methods is affected by camera setup parameters and light intensity. Santos et al. [15] used the factorization method to obtain an initial estimate of the object shape and the camera parameters. The known distances between calibration targets were then incorporated into a non-linear optimization to achieve metric shape reconstruction and to refine the estimated camera parameters. Schumacher and Shariati [16] developed a method that obtains displacement by tracking small changes in the intensity of a monitored pixel. Park et al. [17] proposed a machine vision method that measures the displacement of high-rise building structures using a partitioning approach. In verification experiments on a flexible steel column, they showed that a tall structure can be divided into several relatively short parts, so that the visibility limitation of optical devices can be overcome by measuring the relative displacement of each part. Chan et al. [18] proposed a charge-coupled device (CCD) camera-based method to measure the vertical displacement of bridges and compared the measured displacements with those from fiber Bragg grating (FBG) sensors. They concluded that both methods were superior to traditional methods, providing bridge managers with a simple, inexpensive, and practical way to measure vertical bridge displacements. Recently, Fioriti et al. [19] applied the motion magnification technique to the modal identification of a large, full-scale historic masonry structure in the field, using videos taken with a common smartphone. The processed videos reveal displacements and deformations that are barely visible to the naked eye and allow a more effective frequency-domain analysis.

Many existing image-based algorithms rely on template matching, and, as reviewed above, satisfactory results have been obtained with target templates. However, artificial targets are sometimes difficult to install, and not all natural features, such as the cables of suspension bridges, are suitable as high-contrast templates. Moreover, targets that deform significantly or detach from the structure can no longer be detected. Finally, preparing template targets takes time.

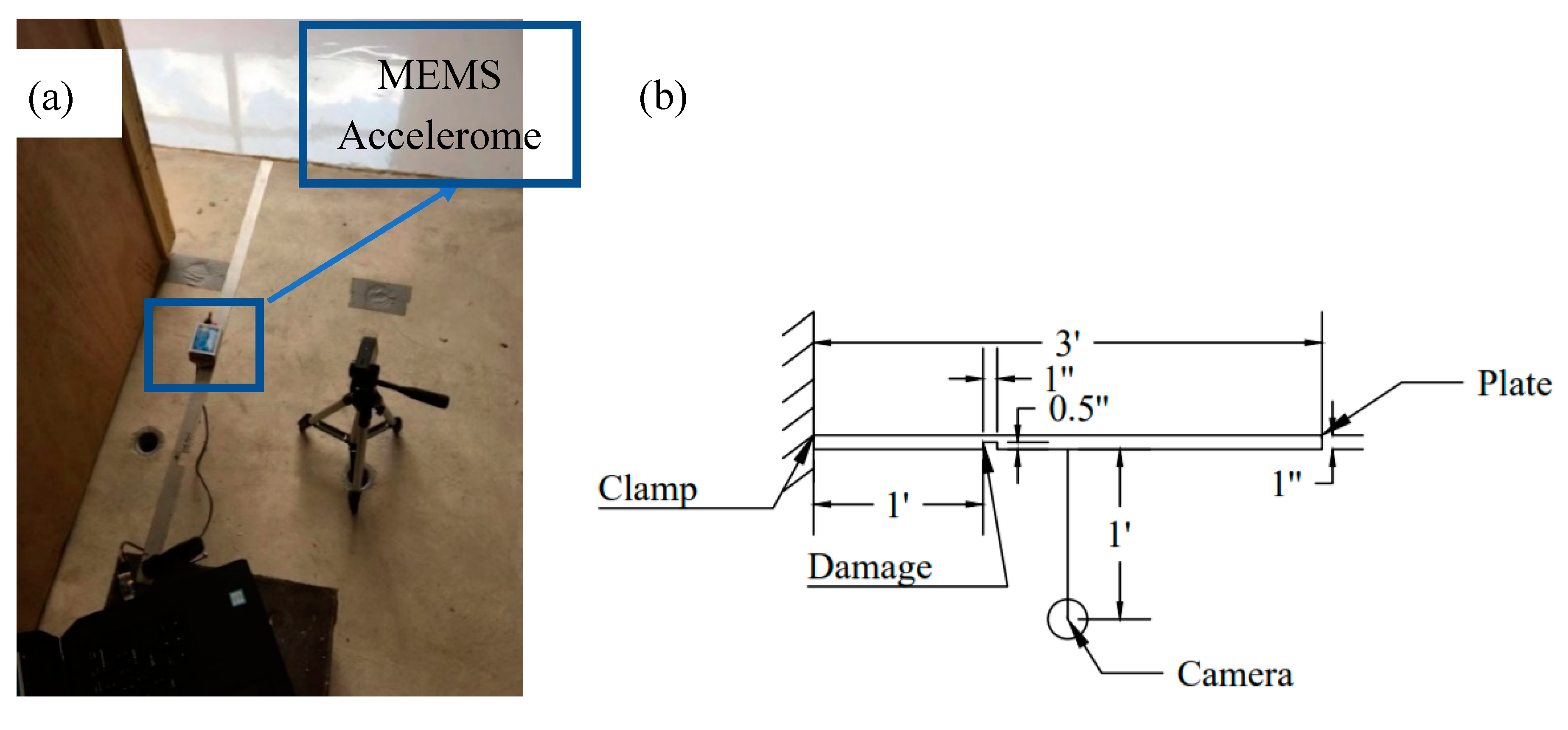

In this paper, a vision sensor system for monitoring structural displacements based on edge detection and a subpixel technique is proposed. The performance of the proposed system is first verified through a test on an MTS machine. A field test is then carried out on a street sign, in which the proposed vision system provides the time history and natural frequencies of the street sign's vibration. Finally, the use of the system for damage detection in a steel beam is evaluated.

2. Proposed Displacement Measurement Method

Figure 1 shows the flowchart of the proposed displacement measurement method. A video of the vibrating structure is first recorded with a high-speed camera. The video is then split into frames, which are converted to grayscale. Edges in the images are detected with the Canny edge detector. A suitable edge at the measurement point and its region of interest (ROI) are selected according to the position of that edge in the first image. The coordinates of the measured edge in every image are then calculated at integer-pixel precision. Integer-pixel precision is sometimes inadequate, for example when the camera is far from the structure or has a low resolution. In that case, the subpixel technique based on Zernike moments developed in this paper is applied to the integer-pixel edge coordinates to obtain displacements at subpixel precision.
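The first steps of the flowchart (grayscale conversion and locating an edge at integer-pixel precision) can be illustrated with the NumPy sketch below. As a simplifying assumption, it replaces the Canny detector with the largest absolute intensity step along one pixel column inside the ROI, which is adequate only for a clean, roughly horizontal edge. The grayscale weights are the ITU-R BT.601 luma coefficients that MATLAB's `rgb2gray` documentation also lists. The function names are hypothetical.

```python
import numpy as np

# ITU-R BT.601 luma weights (the coefficients documented for MATLAB's rgb2gray).
GRAY_WEIGHTS = np.array([0.2989, 0.5870, 0.1140])

def to_gray(rgb):
    """Convert an H x W x 3 RGB frame to a float grayscale image."""
    return np.asarray(rgb, dtype=float) @ GRAY_WEIGHTS

def edge_row_in_column(gray, col, roi_rows):
    """Hypothetical helper: integer-pixel row of the strongest intensity step
    in one column. roi_rows = (start, stop) restricts the search to the ROI.
    This is a simplified stand-in for the Canny detector, not Canny itself."""
    start, stop = roi_rows
    profile = gray[start:stop, col]
    steps = np.abs(np.diff(profile))          # steps[i] = |I[i + 1] - I[i]|
    return start + int(np.argmax(steps)) + 1  # first row past the step
```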

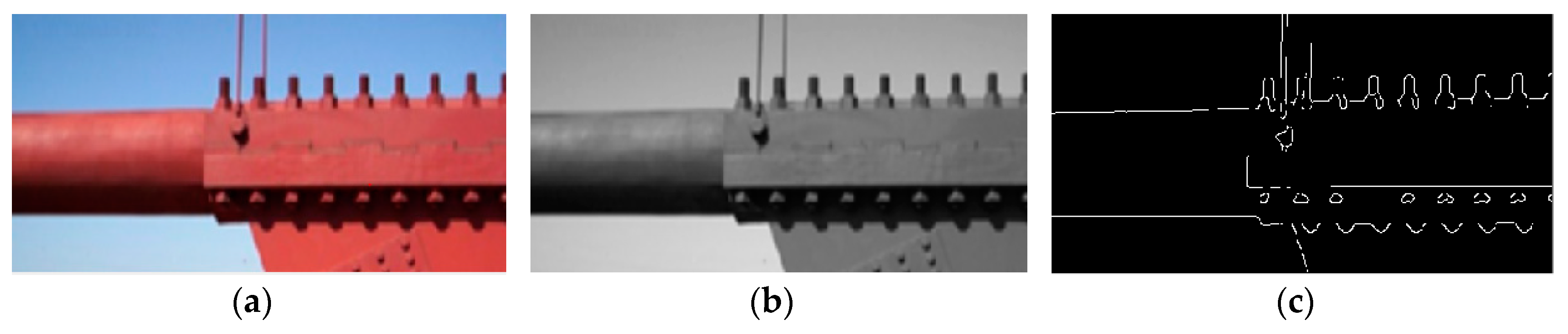

Figure 2 illustrates the edge-detection method for monitoring displacement from images captured by a single camera. The original images (Figure 2a) obtained from the camera are first converted to grayscale images (Figure 2b). Edge detection with the Canny edge detector, which operates at the integer-pixel level, is then performed (Figure 2c). The white lines in Figure 2c indicate the detected edges, while the background is set to black by the grayscale thresholding process. Once the edges of the structure are found, a self-developed MATLAB algorithm calculates the coordinates of the edges at the measurement points, so that displacements can be measured at any location on the target structure. To reduce the runtime, only the ROI is processed to calculate the displacements at selected locations of the structure. Because structural displacements are usually small, an image of the entire structure is not required.
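Once an edge can be located in each frame, a displacement time history follows by subtracting the edge position in the first frame. The sketch below assumes a roughly horizontal edge inside a rectangular ROI `(row0, row1, col0, col1)`, uses the largest vertical intensity step in each column as a stand-in for the Canny edge pixels, and averages the edge row over the ROI columns. The names are hypothetical, and the result is in pixels; positive values mean downward motion because image rows increase downward.

```python
import numpy as np

def edge_row(gray_roi):
    """Hypothetical helper: integer-pixel edge row in an ROI, averaged over
    the ROI columns. In each column the edge is taken as the first row after
    the largest vertical intensity step (a stand-in for Canny)."""
    steps = np.abs(np.diff(np.asarray(gray_roi, dtype=float), axis=0))
    return float(np.mean(np.argmax(steps, axis=0) + 1))

def displacement_history(frames, roi):
    """Edge displacement (pixels) in each frame relative to the first frame.

    frames: sequence of 2-D grayscale arrays; roi: (row0, row1, col0, col1).
    Only the ROI is processed, which keeps the runtime low."""
    r0, r1, c0, c1 = roi
    rows = np.array([r0 + edge_row(f[r0:r1, c0:c1]) for f in frames])
    return rows - rows[0]
```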

The proposed displacement measurement method works like an array of laser displacement sensors or LVDTs. The camera serves as the reference point because it is stationary, similar to the fixture of a laser sensor or LVDT. Any point on the edges of the measured structure can be selected, as if a laser sensor were placed at each point of the edges. The measurement points can also be changed conveniently, since all points are captured simultaneously.

When the camera is far from the object or does not have a high resolution, the accuracy obtained is usually inadequate. There are two ways to improve it: using a higher-resolution camera, or applying subpixel techniques in the image analysis. Some researchers have incorporated subpixel techniques, mostly based on interpolation, into conventional template matching. In this paper, edge detection is combined with a subpixel technique to achieve better accuracy. After the displacement is obtained at the integer-pixel level, the Zernike moment-based subpixel technique is used to obtain subpixel-level displacements and more accurate results. In addition, to convert displacements in pixels to physical distances, a relationship between pixel and physical coordinates must be established.
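One common way to establish the pixel-to-physical relationship is a scale factor in millimeters per pixel. The calibration used in this paper is not specified in this section, so the two options below are assumptions: a scale factor from a feature of known length in the image, or one from the pinhole camera model (camera-to-object distance × pixel pitch / focal length), which assumes the optical axis is perpendicular to the measured surface. The function names are hypothetical.

```python
def scale_from_known_length(length_mm, length_px):
    """Scale factor (mm per pixel) from a feature of known size in the image."""
    return length_mm / length_px

def scale_from_camera(distance_mm, focal_length_mm, pixel_pitch_mm):
    """Scale factor (mm per pixel) from the pinhole model; assumes the optical
    axis is perpendicular to the measured plane (no perspective correction)."""
    return distance_mm * pixel_pitch_mm / focal_length_mm

def to_physical(displacement_px, scale_mm_per_px):
    """Convert a displacement in pixels to millimeters."""
    return displacement_px * scale_mm_per_px
```

For example, a 500 mm feature spanning 250 pixels gives 2 mm per pixel, so a subpixel displacement of 1.5 pixels corresponds to 3 mm.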

3. Principles of Zernike Moment-Based Subpixel Edge Detection

Photo images are very sensitive to noise, such as changes in brightness and vibration of the camera. Zernike moments are integral operators, which suppresses part of this noise and helps improve the displacement measurement accuracy. Zernike moments also transform simply under rotation; in particular, their magnitudes are rotation invariant [20,21]. The relation can be written as

$$Z'_{n,m} = Z_{n,m}\, e^{-jm\phi} \tag{1}$$

where $Z_{n,m}$ is the original Zernike moment of order $n$ and repetition $m$; $Z'_{n,m}$ is the Zernike moment after rotation; $j$ is the imaginary unit; and $\phi$ is the rotation angle.

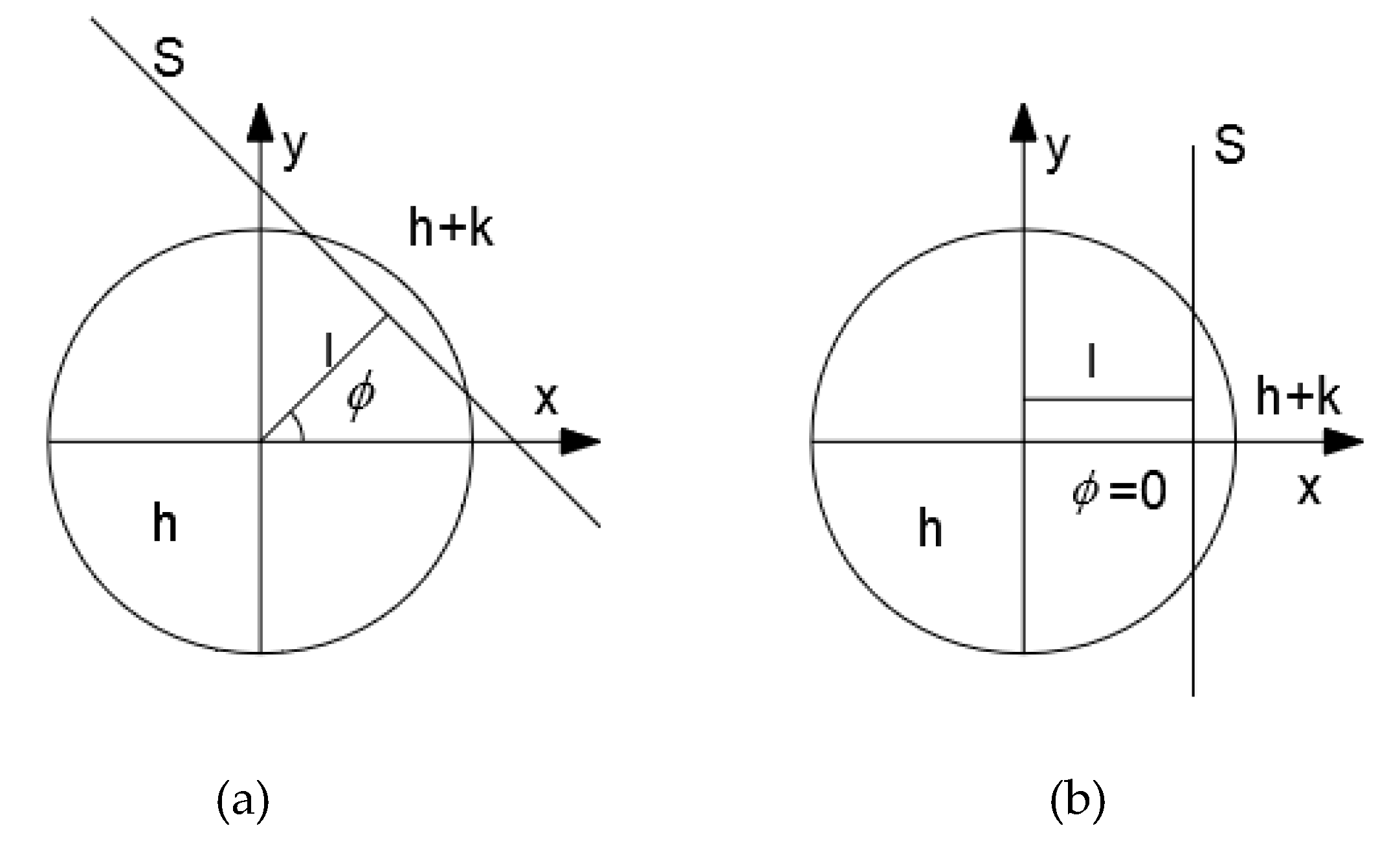

Figure 3 shows a model of the edge. Figure 3b is obtained by rotating Figure 3a clockwise about the origin by $\phi$. In the figure, $S$ is the edge; $h$ and $h + k$ are the gray levels on the two sides of $S$; $l$ is the perpendicular distance from the origin to $S$; and $\phi$ is the angle between $l$ and the $x$-axis. In Equation (1) and Figure 3, $Z'_{n,m}$ is the Zernike moment after the rotation. Locating the edge exactly requires the edge parameters $k$, $l$, and $\phi$. Each Zernike moment is defined in Equation (2).

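$$Z_{n,m} = \iint_{x^2 + y^2 \le 1} f(x, y)\, V^{*}_{n,m}(\rho, \theta)\, \mathrm{d}x\, \mathrm{d}y \tag{2}$$

where $Z_{n,m}$ is the Zernike moment of the image $f(x, y)$ of order $n$ and repetition $m$, and $V^{*}_{n,m}$ is the complex conjugate of the integral kernel function $V_{n,m}(\rho, \theta)$. (Here the normalization factor $(n+1)/\pi$ is omitted.) The discrete form of the Zernike moment is given in Equation (3):

$$Z_{n,m} = \left(\frac{2}{N}\right)^{2} \sum_{i=1}^{N} \sum_{j=1}^{N} f(x_i, y_j)\, V^{*}_{n,m}(x_i, y_j), \qquad x_i^2 + y_j^2 \le 1 \tag{3}$$

where $N$ is the number of integration points along each side of the $N \times N$ pixel window mapped onto the unit circle, $x_i$ and $y_j$ are the coordinates of the integration points, and $f(x_i, y_j)$ is the gray level of the pixel.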

$Z_{0,0}$, $Z_{1,1}$, and $Z_{2,0}$ are used to calculate the edges at the subpixel level. Their integral kernel functions are $V_{0,0} = 1$, $V_{1,1} = x + jy$, and $V_{2,0} = 2x^2 + 2y^2 - 1$, respectively (Table 1).
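The discrete sum of Equation (3) with the kernels of $Z_{0,0}$, $Z_{1,1}$, and $Z_{2,0}$ can be evaluated directly over an $N \times N$ window, as in the NumPy sketch below. Two choices are our assumptions: each pixel is represented by its center (more accurate implementations integrate the kernels over each pixel's area), and the $y$ coordinate points up, opposite to the row index. With that orientation, $\arg Z_{1,1}$ is the edge-normal angle measured from the column axis toward increasing row index, i.e., clockwise on screen. The function name is hypothetical.

```python
import numpy as np

def zernike_moments(window):
    """Hypothetical helper: unnormalized Z00, Z11, Z20 of a square N x N
    gray-level window by the discrete sum of Eq. (3).

    Pixel centres are mapped onto [-1, 1] x [-1, 1]; only centres inside the
    unit circle contribute, each weighted by the pixel area (2/N)^2."""
    f = np.asarray(window, dtype=float)
    n = f.shape[0]
    if f.ndim != 2 or f.shape[1] != n:
        raise ValueError("window must be a square 2-D array")
    centres = (2.0 * np.arange(n) - (n - 1)) / n
    x = centres[np.newaxis, :]   # increases with the column index
    # y points up, so x - jy = x + j*(row offset): arg(Z11) is measured
    # from the column axis toward increasing row index.
    y = -centres[:, np.newaxis]
    inside = x**2 + y**2 <= 1.0
    fw = np.where(inside, f, 0.0) * (2.0 / n) ** 2
    z00 = fw.sum()                                       # kernel V00* = 1
    z11 = (fw * (x - 1j * y)).sum()                      # kernel V11* = x - jy
    z20 = (fw * (2.0 * x**2 + 2.0 * y**2 - 1.0)).sum()   # kernel V20* = 2x^2 + 2y^2 - 1
    return z00, z11, z20
```

For a uniform window, $Z_{0,0}$ approximates the area of the unit circle and $Z_{1,1}$ vanishes by symmetry; for a window whose lower half is bright, $\arg Z_{1,1}$ is close to $\pi/2$.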

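Because the edge after rotation is symmetric about the $x$-axis,

$$\iint_{x^2 + y^2 \le 1} f'(x, y)\, y\, \mathrm{d}x\, \mathrm{d}y = 0 \quad\Longrightarrow\quad \operatorname{Im}\left[Z'_{1,1}\right] = 0 \tag{4}$$

where $f'(x, y)$ is the image function after rotation. Substituting Equation (1) with $m = 1$ gives

$$\operatorname{Im}\left[Z'_{1,1}\right] = \operatorname{Im}\left[Z_{1,1}\right]\cos\phi - \operatorname{Re}\left[Z_{1,1}\right]\sin\phi = 0 \tag{5}$$

$$\phi = \arctan\left(\frac{\operatorname{Im}\left[Z_{1,1}\right]}{\operatorname{Re}\left[Z_{1,1}\right]}\right) \tag{6}$$

where $\operatorname{Re}$ is the real part of $Z_{1,1}$ and $\operatorname{Im}$ is its imaginary part. For the edge model in Figure 3b, the rotated moments are

$$Z'_{0,0} = h\pi + \frac{k\pi}{2} - k\arcsin l - kl\sqrt{1 - l^2}, \qquad Z'_{1,1} = \frac{2k\left(1 - l^2\right)^{3/2}}{3}, \qquad Z'_{2,0} = \frac{2kl\left(1 - l^2\right)^{3/2}}{3} \tag{7}$$

in which $Z'_{1,1}$ and $Z'_{2,0}$ are calculated from the measured moments using Equations (8) and (9), and $l$ and $k$ are calculated from Equation (10):

$$Z'_{1,1} = \operatorname{Re}\left[Z_{1,1}\right]\cos\phi + \operatorname{Im}\left[Z_{1,1}\right]\sin\phi \tag{8}$$

$$Z'_{2,0} = Z_{2,0} \tag{9}$$

$$l = \frac{Z'_{2,0}}{Z'_{1,1}}, \qquad k = \frac{3Z'_{1,1}}{2\left(1 - l^2\right)^{3/2}} \tag{10}$$

The gray level $h$ then follows from the first expression in Equation (7), with $Z'_{0,0} = Z_{0,0}$. When the values of $l$, $k$, $h$, and $\phi$ are obtained, the subpixel coordinates ($a_{sc}$, $b_{sc}$) are calculated using Equation (11), where $a$ and $b$ are the integer coordinates of the edge and $N$ is the window size:

$$\begin{bmatrix} a_{sc} \\ b_{sc} \end{bmatrix} = \begin{bmatrix} a \\ b \end{bmatrix} + \frac{Nl}{2}\begin{bmatrix} \cos\phi \\ \sin\phi \end{bmatrix} \tag{11}$$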
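The steps above (edge angle from $Z_{1,1}$, rotated moments, $l$, $k$, $h$, and the subpixel shift) can be collected into a single function. The Python sketch below (hypothetical name `subpixel_edge`) takes unnormalized moments $Z_{0,0}$, $Z_{1,1}$, and $Z_{2,0}$ of the $N \times N$ window centered on the integer edge pixel $(a, b)$, with $a$ the column and $b$ the row, and returns the edge parameters and the subpixel coordinates. It uses the two-argument arctangent for $\phi$, which keeps $Z'_{1,1}$ and therefore $k$ positive. The plain arctangent gives the same subpixel point, because the signs of $l$, $\cos\phi$, and $\sin\phi$ then flip together. Windows with $|l| \ge 1$ or $Z'_{1,1} = 0$ do not contain a valid step edge and are rejected.

```python
import math

def subpixel_edge(z00, z11, z20, a, b, n):
    """Hypothetical helper: edge parameters and subpixel coordinates from the
    unnormalized moments Z00, Z11 (complex), Z20 of an n x n window centred
    on the integer edge pixel (a = column, b = row)."""
    # Edge angle: two-argument arctangent of Z11 keeps Z'11 > 0.
    phi = math.atan2(z11.imag, z11.real)
    # Rotated moments, Eqs. (8) and (9): Z'20 = Z20 because m = 0.
    z11_rot = z11.real * math.cos(phi) + z11.imag * math.sin(phi)
    z20_rot = z20
    if z11_rot == 0.0:
        raise ValueError("flat window: no edge")
    l = z20_rot / z11_rot                                # Eq. (10)
    if abs(l) >= 1.0:
        raise ValueError("|l| >= 1: edge lies outside the unit circle")
    k = 3.0 * z11_rot / (2.0 * (1.0 - l * l) ** 1.5)     # Eq. (10)
    # Gray level h from the Z'00 relation (Z'00 = Z00 because m = 0).
    h = (z00 - k * math.pi / 2.0 + k * math.asin(l)
         + k * l * math.sqrt(1.0 - l * l)) / math.pi
    # Eq. (11): shift from the window centre along the edge normal, in pixels.
    a_sc = a + n * l * math.cos(phi) / 2.0
    b_sc = b + n * l * math.sin(phi) / 2.0
    return {"phi": phi, "l": l, "k": k, "h": h, "a_sc": a_sc, "b_sc": b_sc}
```

In practice, detected edge points are commonly kept only if $k$ exceeds a step-height threshold and $|l|$ is small; the threshold values are application-specific choices.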

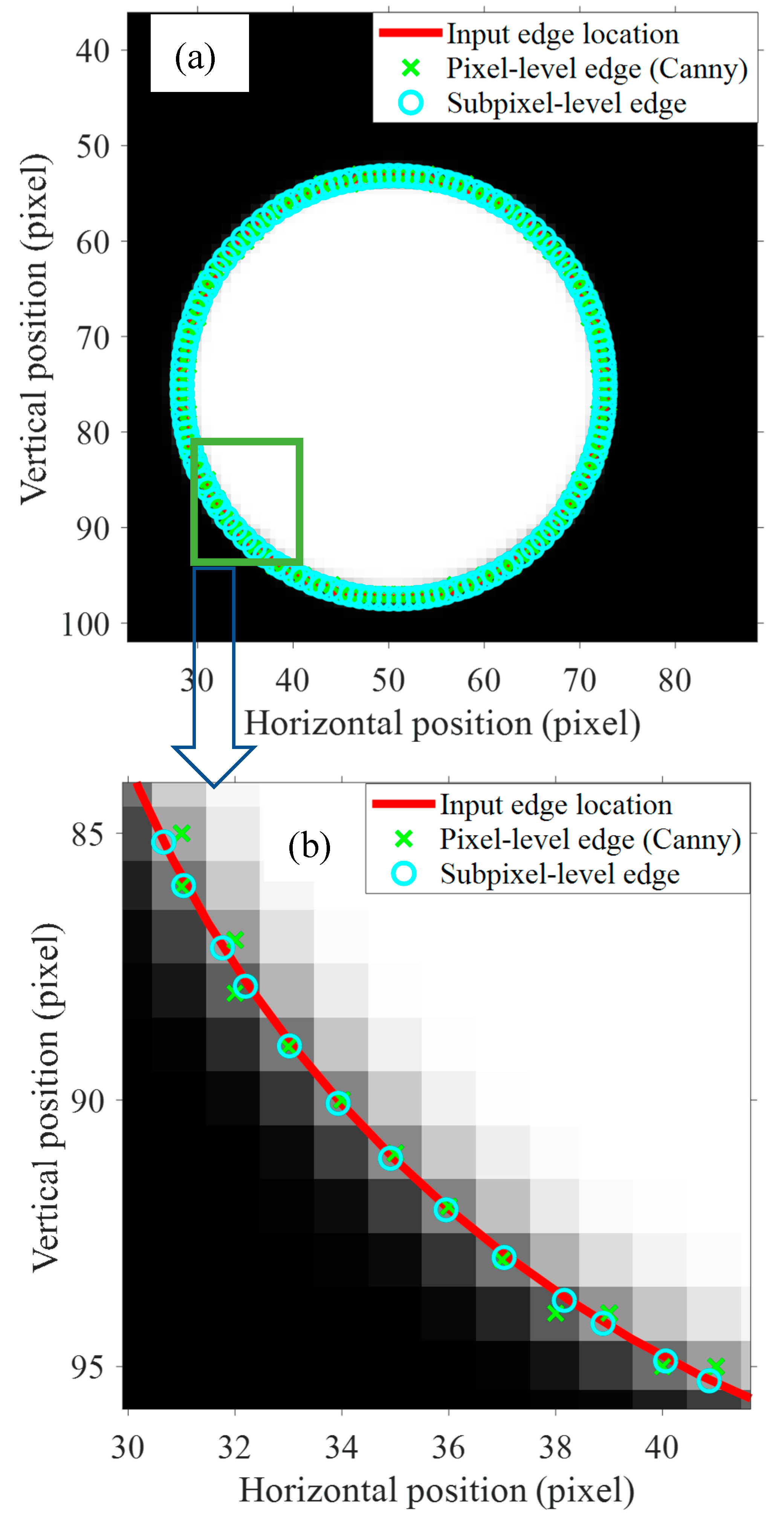

An example test is conducted in MATLAB to verify the accuracy of the subpixel method (Figure 4). First, a circle centered at (50, 75) with a diameter of 50 units is drawn from its equation. The region outside the circle is black and the region inside is white, so the circle itself is the true edge between the black and white regions. The edges are then calculated using both integer-pixel and subpixel edge detection and compared with the true edge, shown in red (Figure 4b). The comparison with the true edge clearly shows the improved accuracy of the subpixel method over the integer-pixel method.

5. Conclusions and Future Work

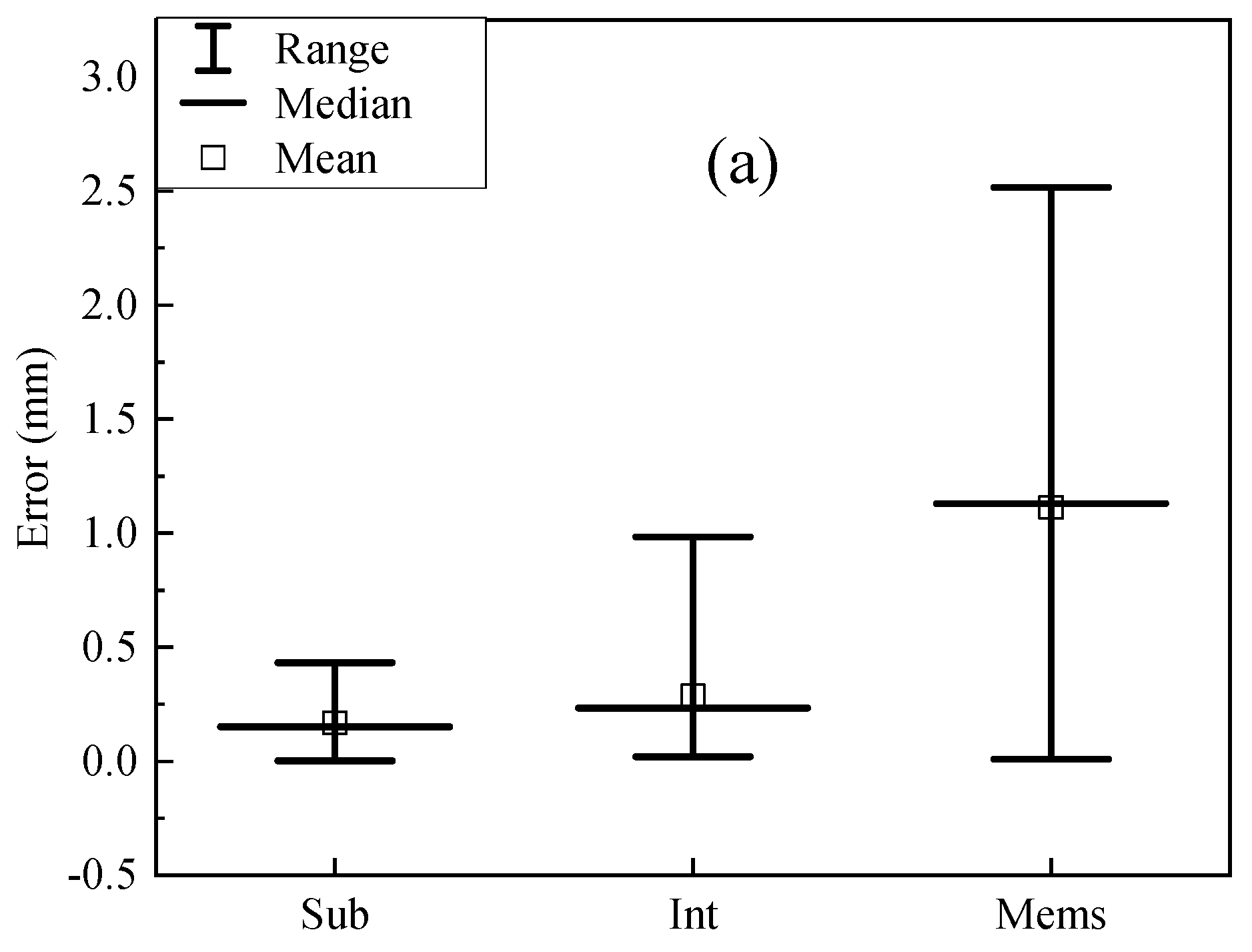

In this study, a novel vision system was developed for non-contact displacement measurement of structures using a subpixel Zernike moment-based edge detection technique. Comprehensive experiments, including tests on an MTS machine and vibration tests on a street sign and a steel beam, were carried out to verify the accuracy of the proposed method. The following conclusions are drawn:

1. In the MTS test, satisfactory agreement was observed between the displacements measured by the vision system and those measured by the MTS sensors.

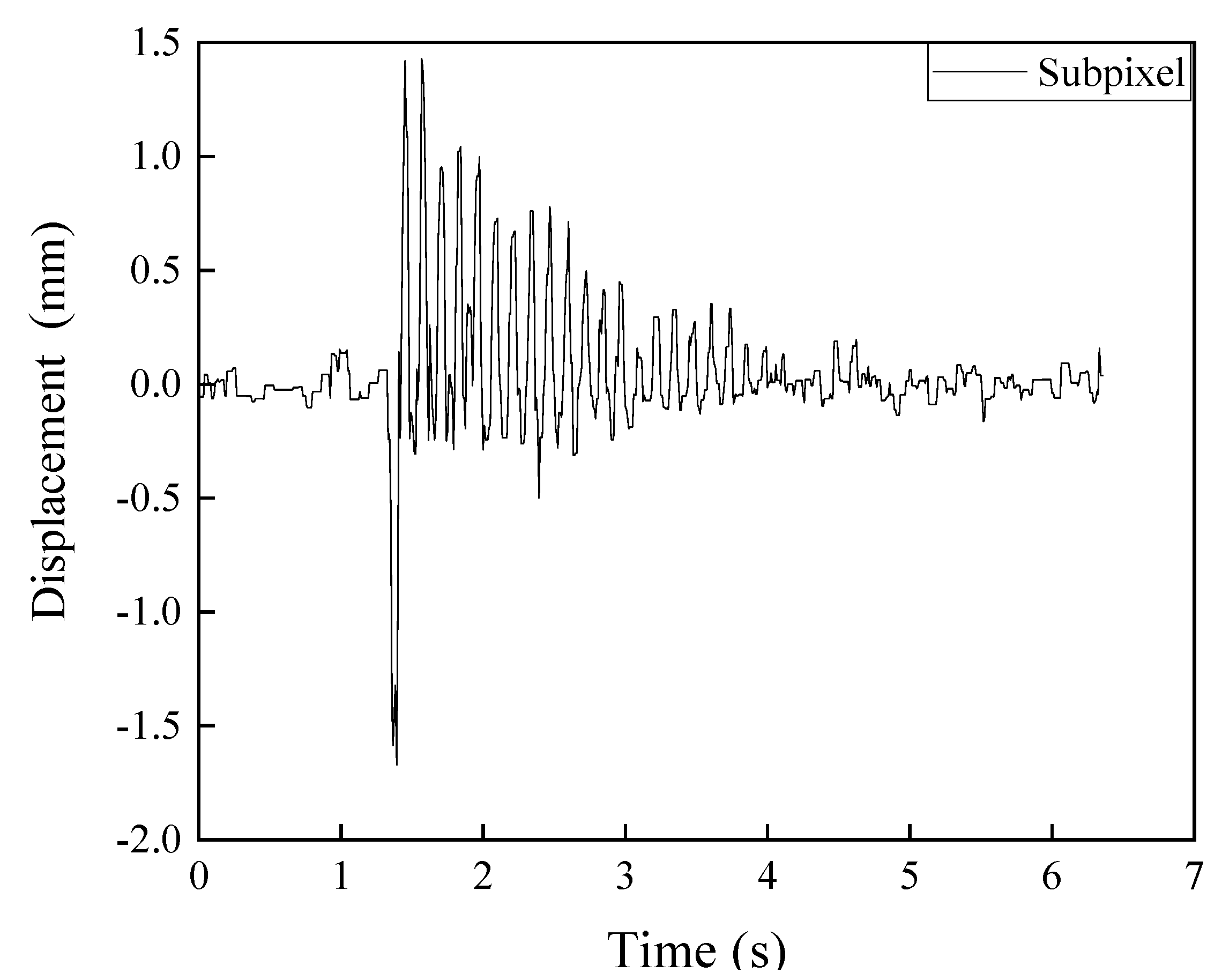

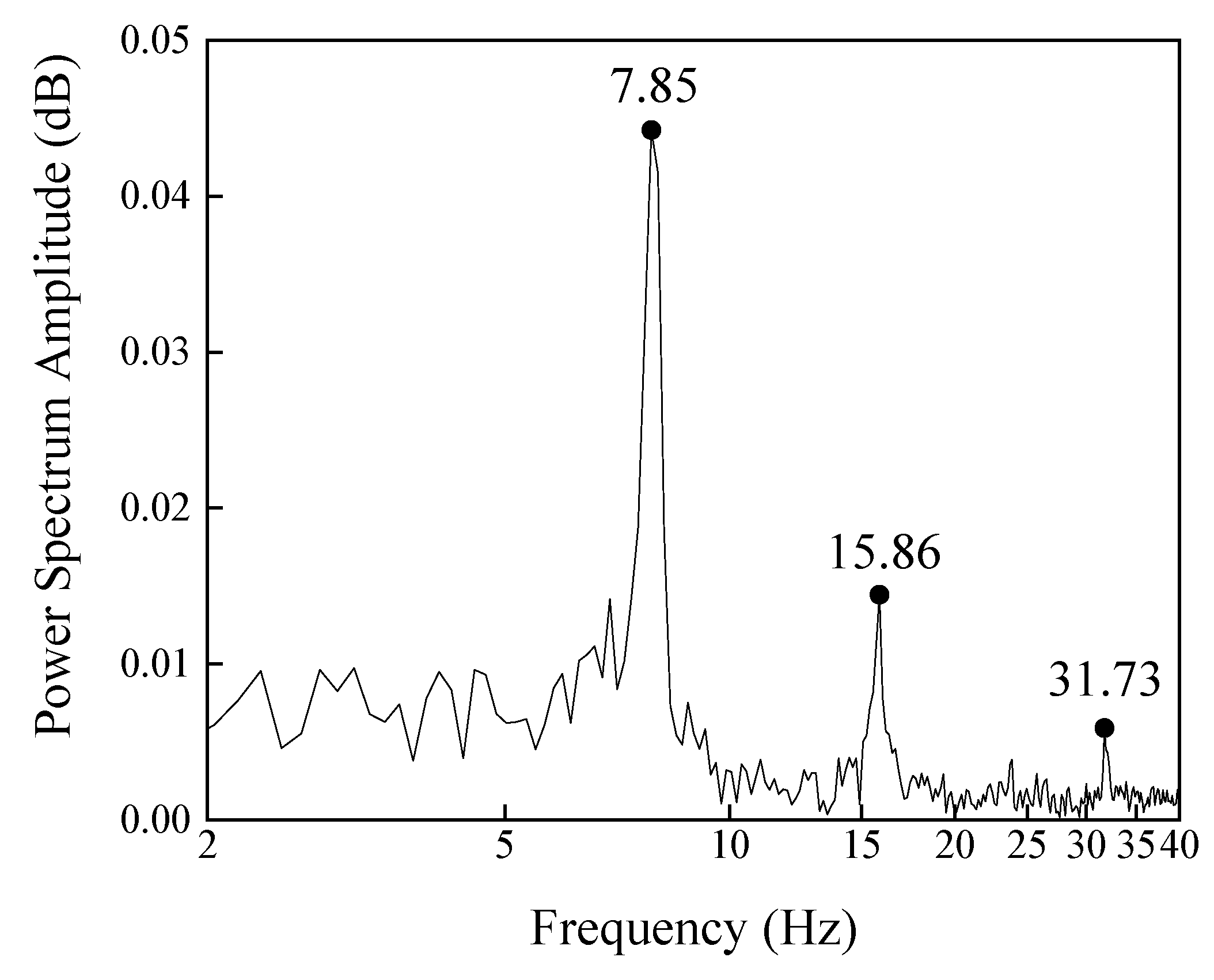

2. In the street sign test, the vibration of the street sign after an excitation pulse was detected successfully, and several natural frequencies of the street sign were identified using the fast Fourier transform (FFT).

3. The subpixel Zernike moment-based edge detection method is a new approach that can monitor structural displacements accurately at any location along the detected edges.

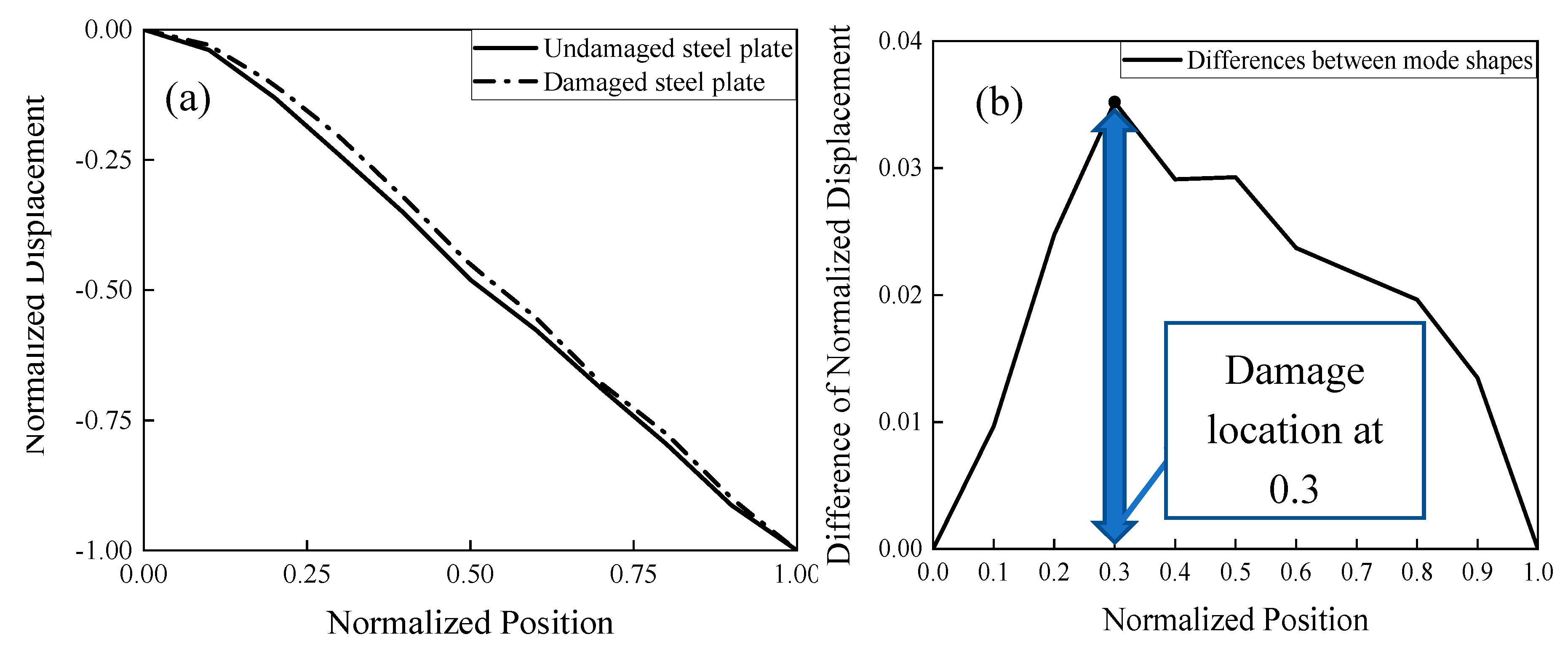

4. Through analysis of the mode shapes obtained by the proposed image processing method, the damage location in the steel beam could be accurately detected.
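Conclusion 2 relies on identifying natural frequencies from the FFT of a displacement time history. A minimal NumPy sketch of that step is shown below, assuming a uniformly sampled record at frame rate `fs` (the camera's frames per second). It returns only the largest spectral peak; identifying several natural frequencies, as in the street sign test, requires picking multiple peaks. The function name is hypothetical.

```python
import numpy as np

def dominant_frequency(displacement, fs):
    """Hypothetical helper: frequency (Hz) of the largest spectral peak of a
    uniformly sampled displacement record with sampling rate fs (Hz)."""
    x = np.asarray(displacement, dtype=float)
    x = x - x.mean()                           # remove the static offset
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    return freqs[1:][np.argmax(spectrum[1:])]  # skip the 0 Hz bin
```

The frequency resolution is `fs / len(displacement)`, so longer recordings separate closely spaced modes better.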

By tracking existing natural edges of structures, the vision sensor method developed in this paper provides flexibility to change measured locations on structures. The availability of such a remote sensor will facilitate cost-effective monitoring of civil engineering structures.

The proposed method uses a high-resolution camera that can be placed at a distance to record the motion of target structures. The recorded videos can then be used to analyze the vibration and natural frequencies of the target bridge with sufficient accuracy; this is the real-world setting in which the method is intended to be used. However, the method is limited in detecting damage in large structures. The mode shapes of a structure can indicate its condition (i.e., whether it has potential problems), but the current method needs further improvement to determine the damage location and its severity in terms of size and shape.