Improved Pose Estimation of Aruco Tags Using a Novel 3D Placement Strategy

Abstract

1. Introduction

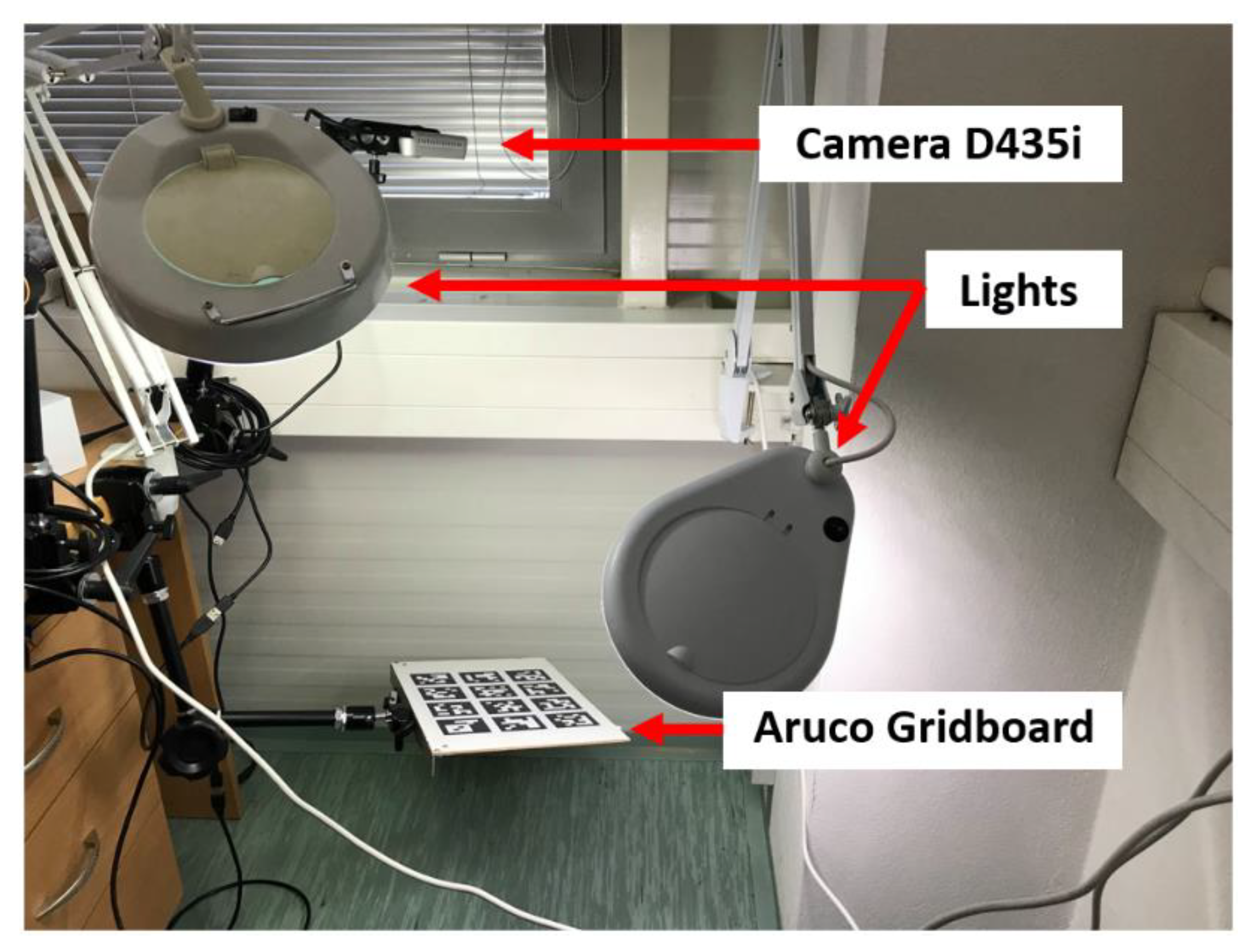

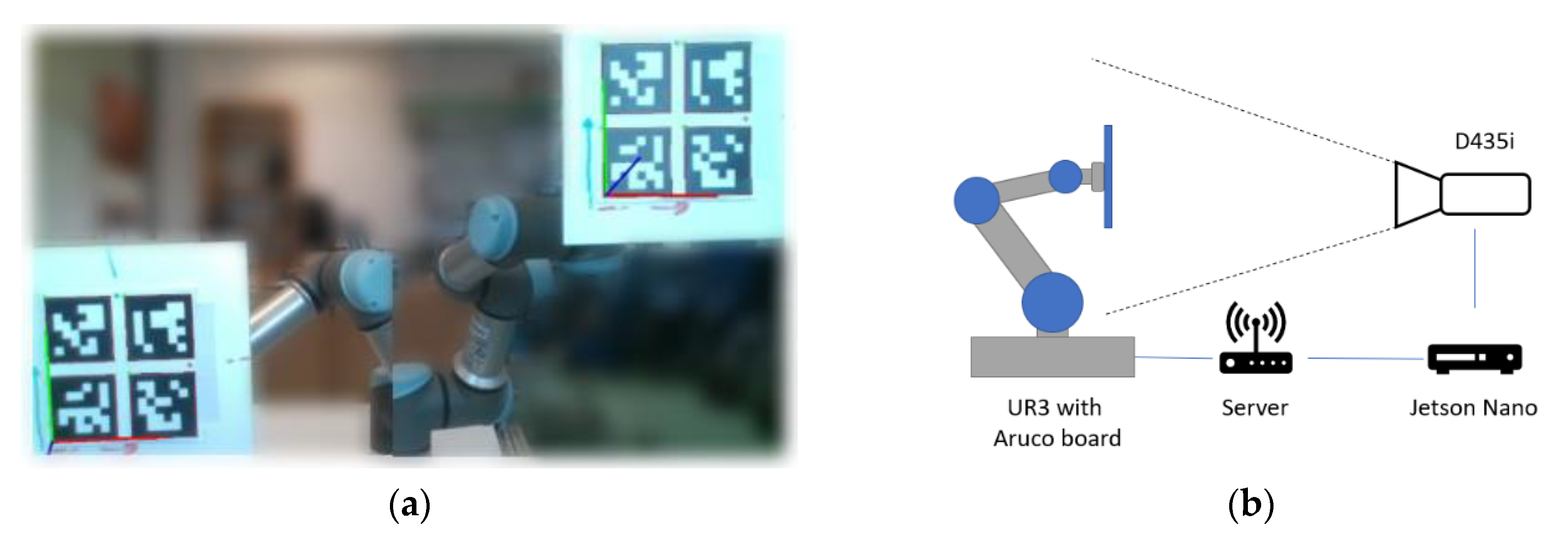

2. Calibrating the Camera

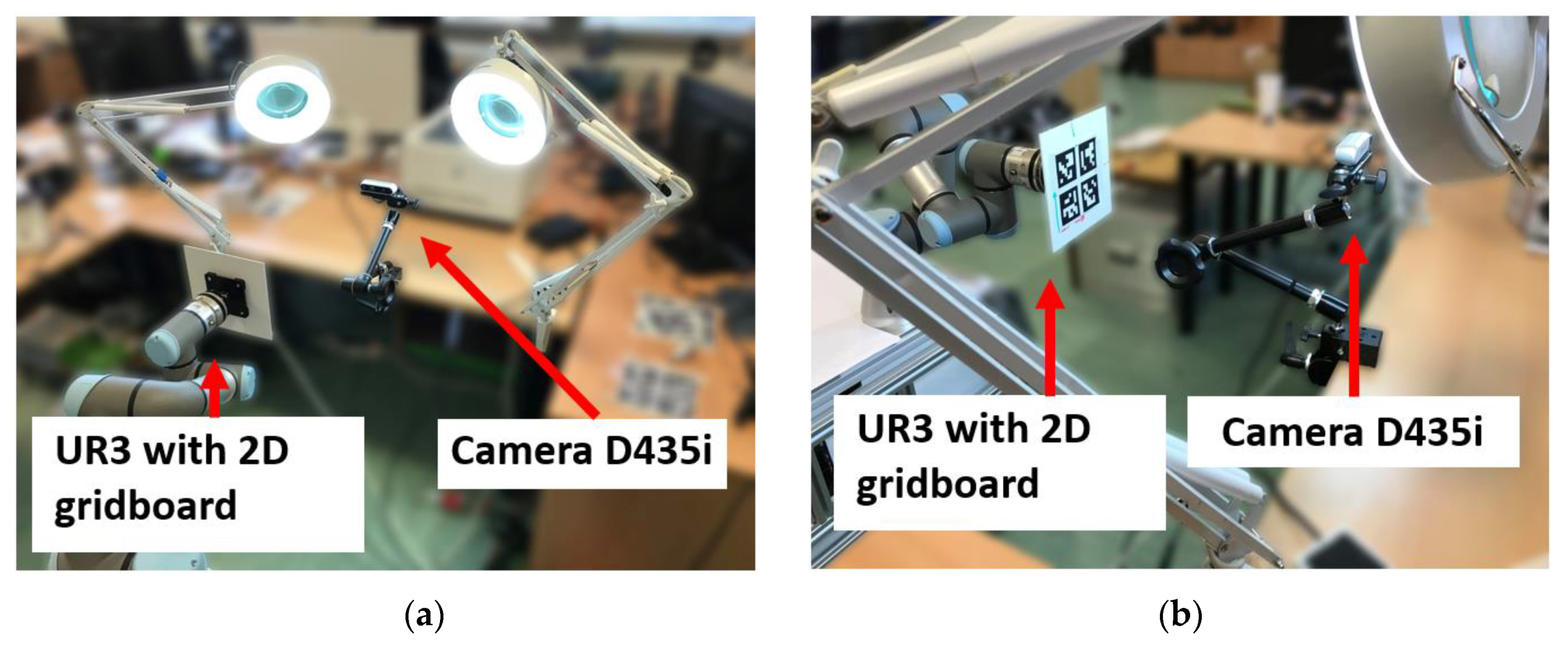

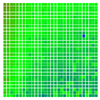

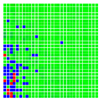

3. Benchmark for Pose Estimates

| Algorithm 1 The Measurement Process. |

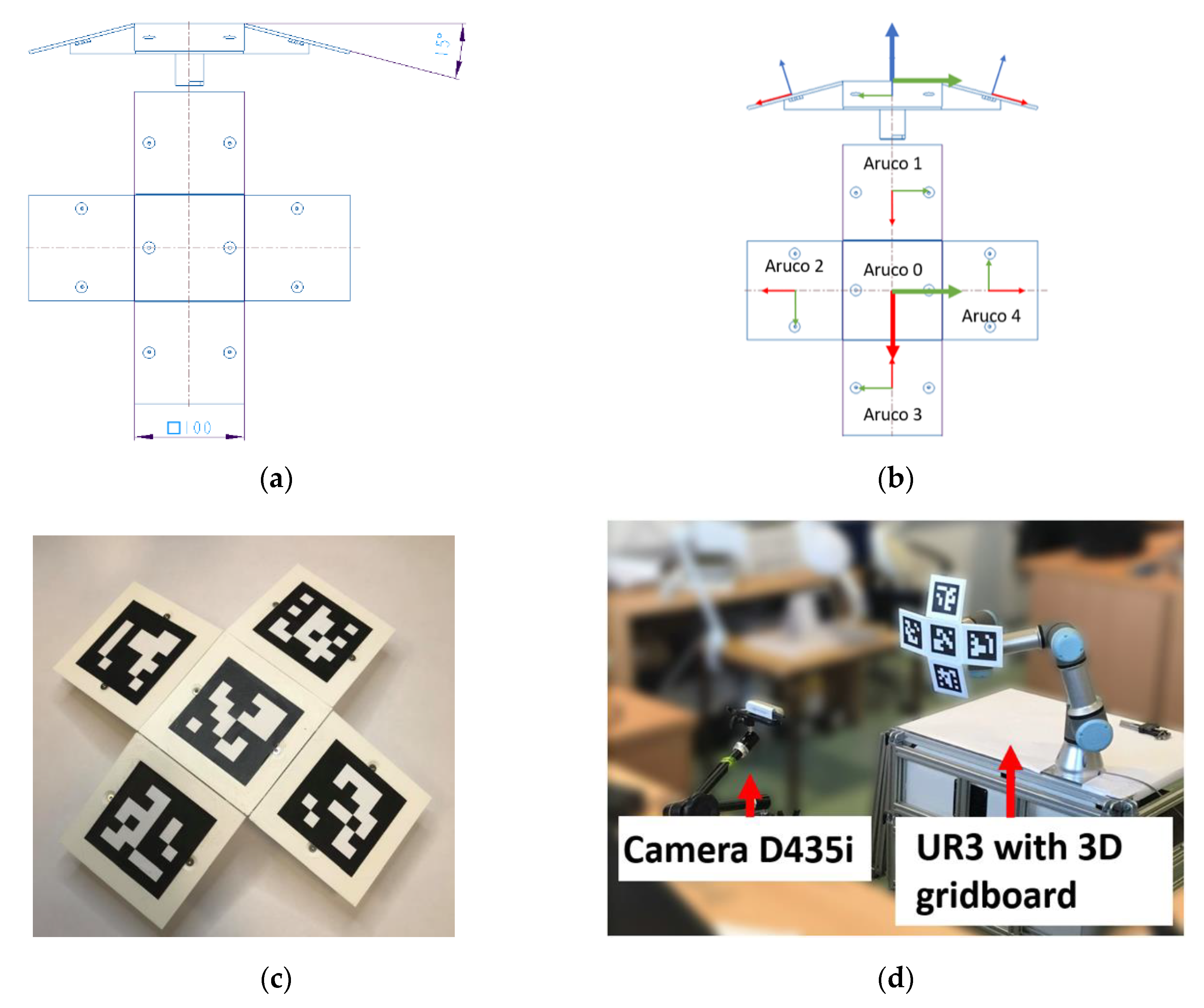

    for i = 0 to m    // m[1:30] represents the number of horizontal positions for the effector
        set_y_position_of_end_effector(i)
        for j = 0 to n    // n[1:30] represents the number of measurements in a row
            set_x_position_of_end_effector(j)
            for k = 0 to o    // o[1:10] represents the number of frames in the measured position
                Grab image
                Process image
                Save data after processing
            end for
        end for
    end for
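The measurement process of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the callables `set_y`, `set_x`, `grab_image`, and `process_image` are hypothetical placeholders for the robot and camera interfaces, and the loop bounds are assumed to be counts (30 positions, 30 measurements per row, 10 frames per position).

```python
from typing import Callable, Dict, List, Tuple


def run_measurement(
    set_y: Callable[[int], None],
    set_x: Callable[[int], None],
    grab_image: Callable[[], object],
    process_image: Callable[[object], object],
    m: int = 30,
    n: int = 30,
    o: int = 10,
) -> Dict[Tuple[int, int], List[object]]:
    """Visit an m x n grid of end-effector positions and process o frames at each."""
    results: Dict[Tuple[int, int], List[object]] = {}
    for i in range(m):              # row index of the end effector
        set_y(i)
        for j in range(n):          # measurement index within the row
            set_x(j)
            frames = []
            for _ in range(o):      # repeated frames at the same position
                frames.append(process_image(grab_image()))
            results[(i, j)] = frames  # "save data after processing"
    return results


if __name__ == "__main__":
    # Stand-in hardware: record the commanded positions instead of moving a robot.
    moves: List[Tuple[str, int]] = []
    data = run_measurement(
        set_y=lambda i: moves.append(("y", i)),
        set_x=lambda j: moves.append(("x", j)),
        grab_image=lambda: "frame",
        process_image=lambda img: len(img),
        m=2, n=3, o=4,
    )
    print(len(data), "positions measured")
```

Passing the hardware interfaces in as callables keeps the loop structure testable without a robot or camera attached.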

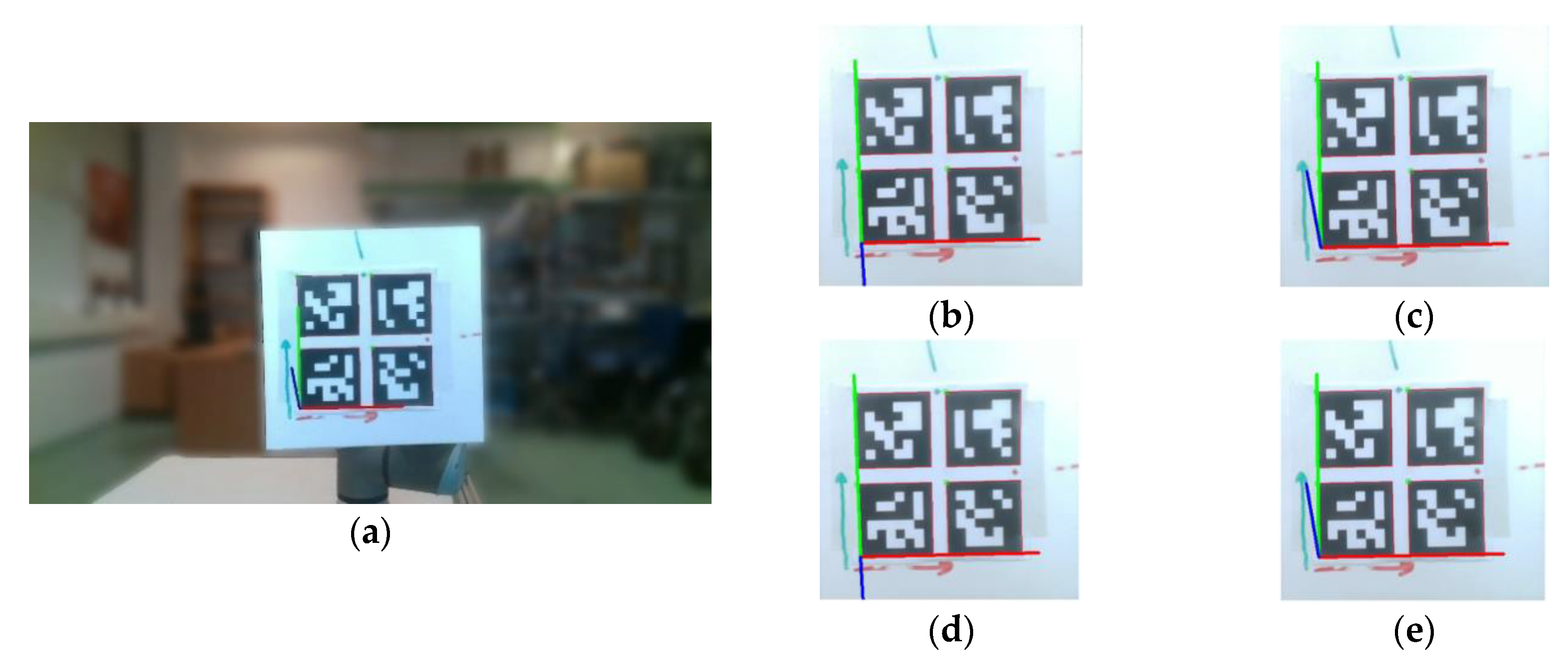

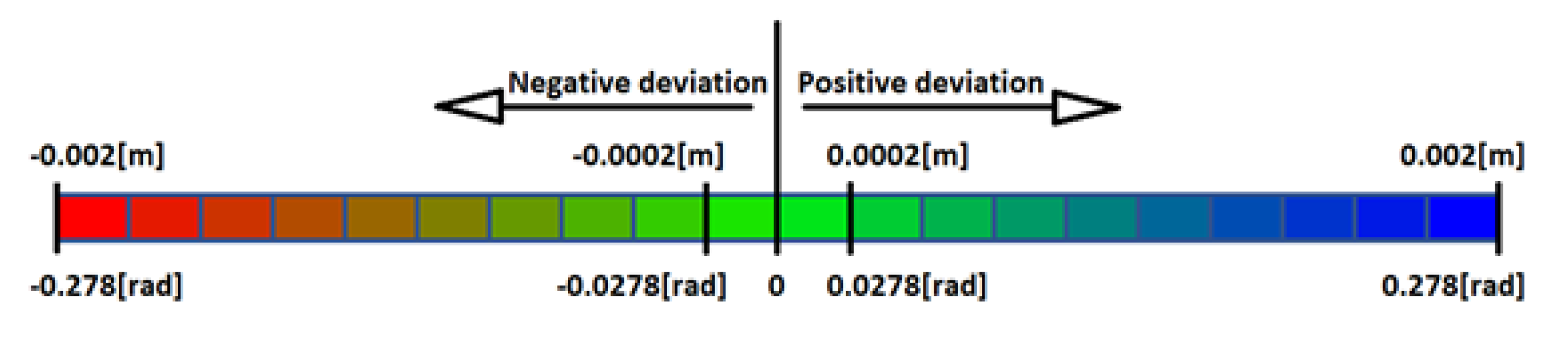

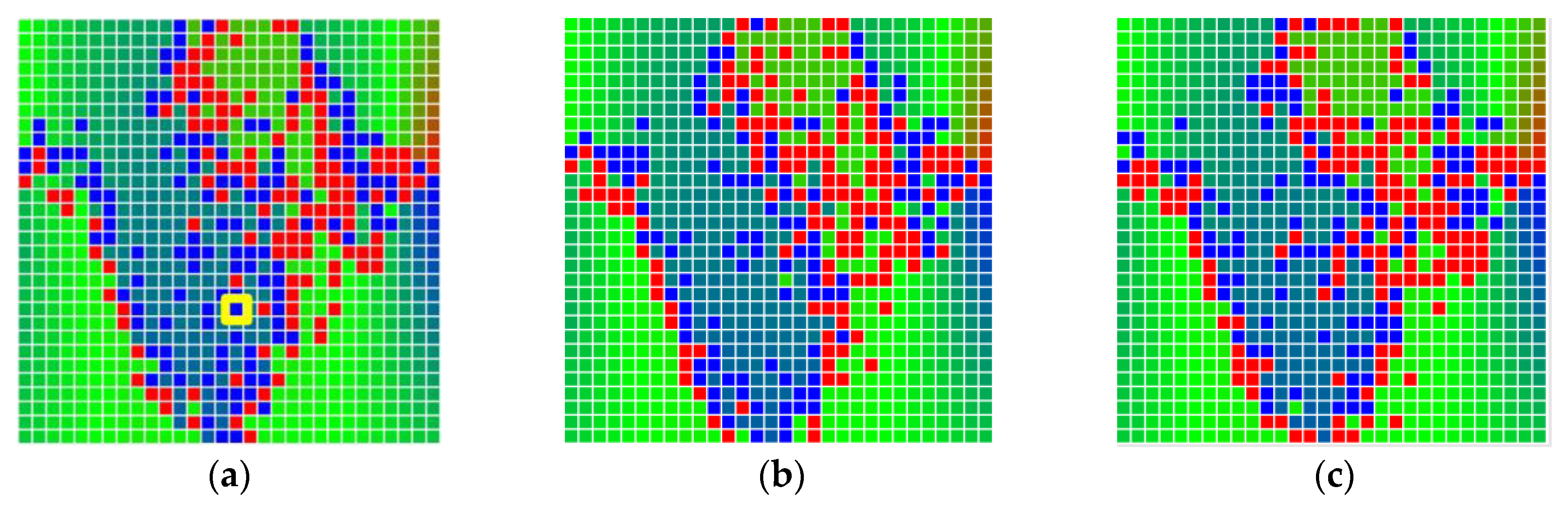

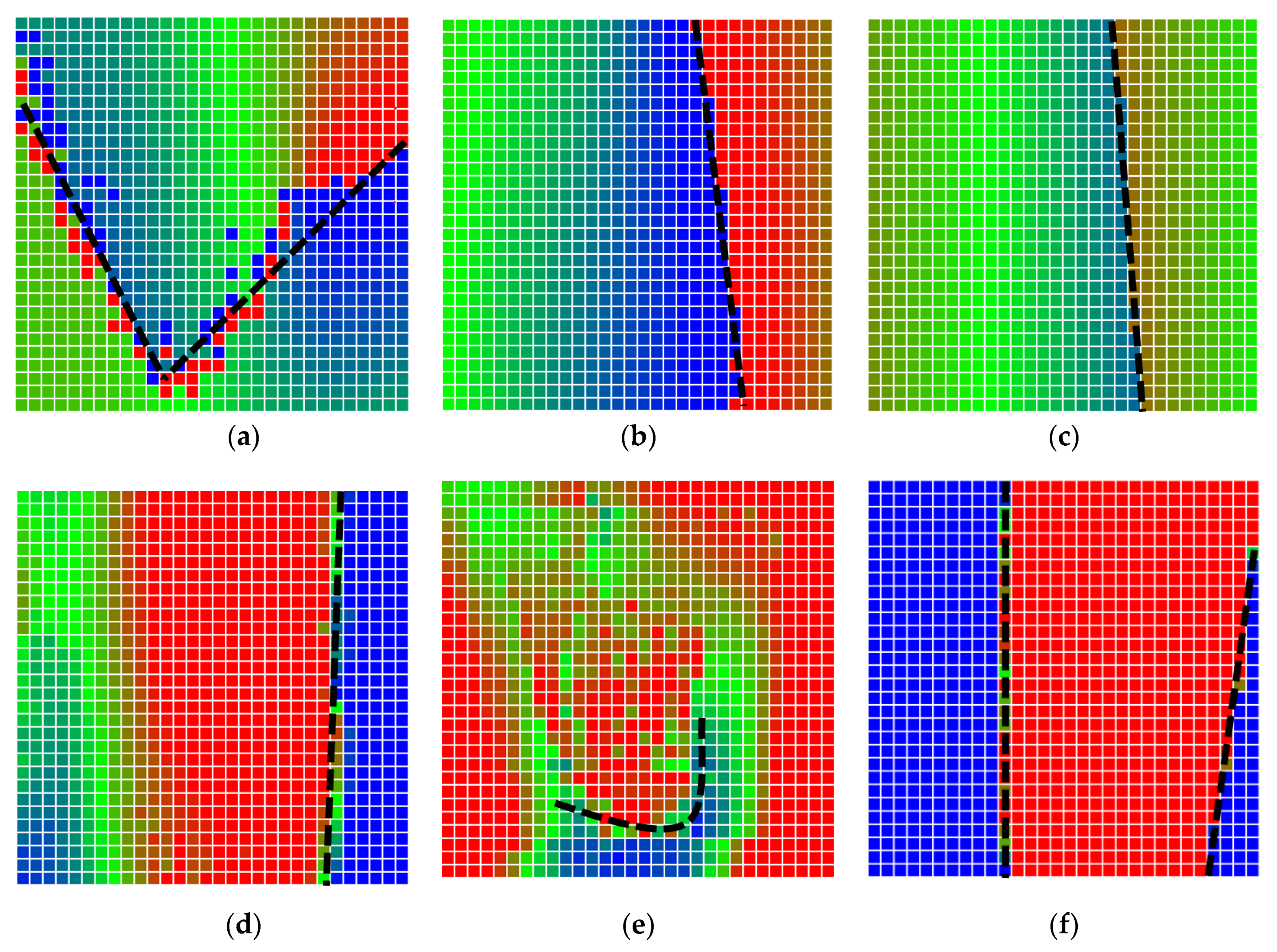

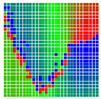

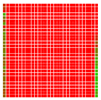

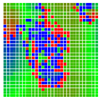

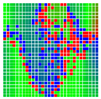

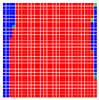

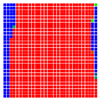

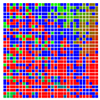

4. Benchmark Results for the 2D Aruco Board

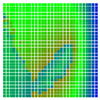

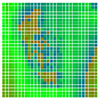

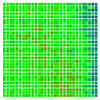

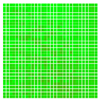

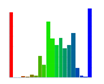

5. Recalibration with a Liquid-Crystal Display

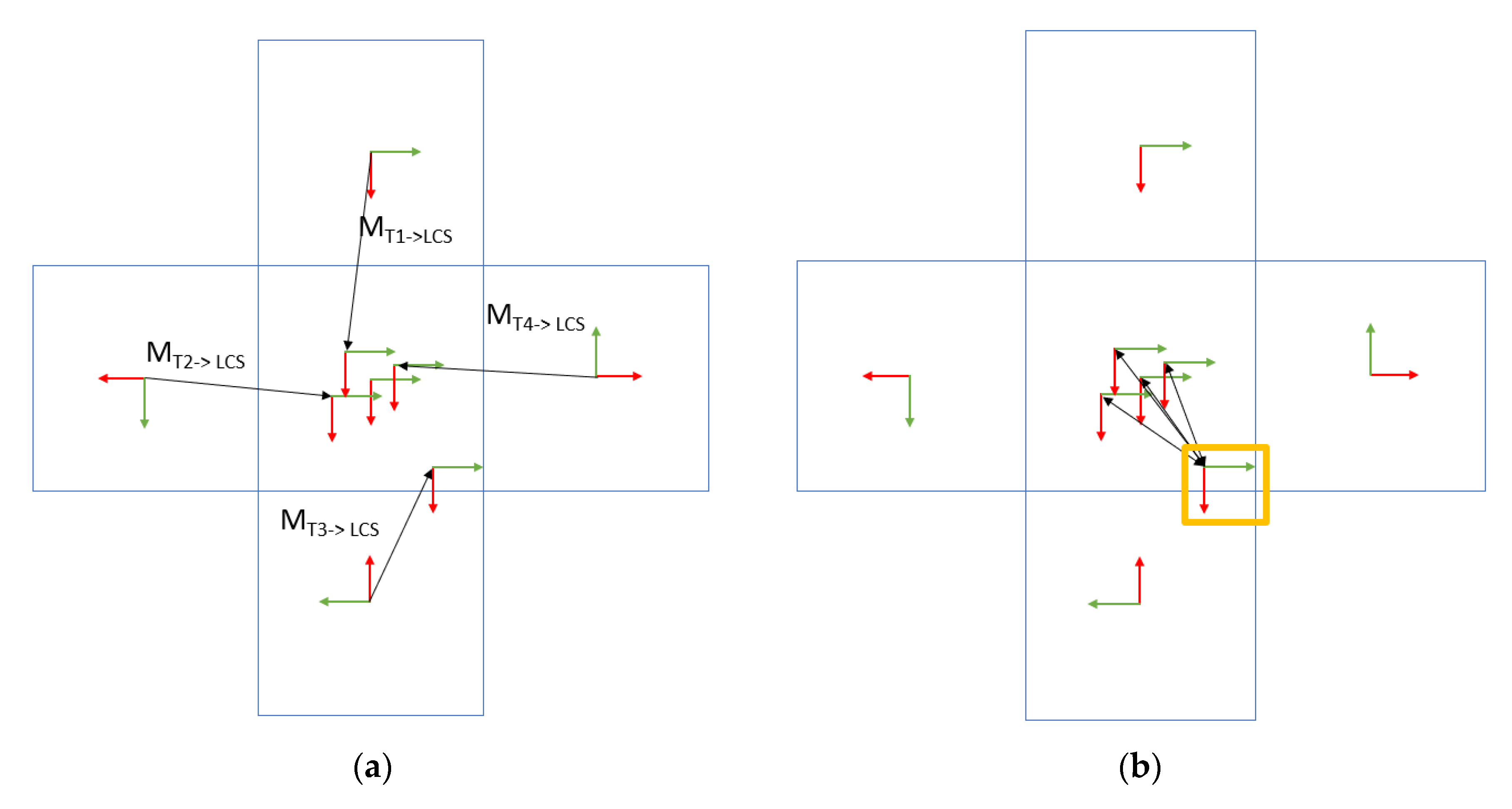

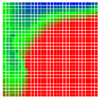

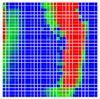

6. Three-dimensional Aruco Grid Board

- Pose estimates are unstable when the marker plane is perpendicular to the camera's optical axis, i.e., when the camera Z-axis is collinear with the marker Z-axis.

- Detecting redundant tags at the same time enables an error detection metric that can improve pose estimation: for example, if five tags are detected and one of them yields a pose estimate that differs considerably from the others, that tag can be discarded and the pose estimated from the remaining tags.
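To make the first observation concrete, the sketch below computes the angle between the camera Z-axis and the marker Z-axis from a rotation vector in axis-angle (Rodrigues) form, which is the form OpenCV uses for `rvec`. It is an illustrative check rather than part of the described method: the function names and the 5° threshold are assumptions, and the angle is measured between the axis lines, so a marker facing the camera head-on gives 0°.

```python
import math
from typing import Sequence


def optical_axis_angle_deg(rvec: Sequence[float]) -> float:
    """Angle in degrees between the camera Z-axis line and the marker Z-axis line."""
    theta = math.sqrt(sum(c * c for c in rvec))  # rotation angle in radians
    if theta < 1e-12:
        return 0.0  # no rotation: the axes coincide
    kz = rvec[2] / theta  # z-component of the unit rotation axis
    # Element R[2][2] of the Rodrigues rotation matrix, i.e. the z-component
    # of the marker Z-axis expressed in the camera frame.
    r22 = math.cos(theta) + (1.0 - math.cos(theta)) * kz * kz
    r22 = max(-1.0, min(1.0, r22))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(abs(r22)))


def is_near_frontal(rvec: Sequence[float], threshold_deg: float = 5.0) -> bool:
    """Flag views in which the two Z-axes are almost collinear (assumed threshold)."""
    return optical_axis_angle_deg(rvec) < threshold_deg


if __name__ == "__main__":
    tilted = (math.radians(165.0), 0.0, 0.0)  # marker tilted away from the frontal view
    print(round(optical_axis_angle_deg(tilted), 3), is_near_frontal(tilted))
```

A detection flagged by `is_near_frontal` could then be treated with more caution, or cross-checked against the other tags on the board.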

7. Error Metric for the 3D Grid Board

| Algorithm 2 Calculating the Error in the Net Pose Estimation of the 3D Grid Board |

    Detect tvecs of Tags    // tvecs represent array of translations of the origin of detected tags in camera's coordinate frame
    for i = 0 to length(tvecs)
        for j = 0 to length(tvecs)
            deviation_distance[i] += (distance(tvecs[i] - tvecs[j]))    // calculate distance between transformed tag centers
        end for
    end for
    filtered_tvecs = remove_detections_with_high_deviation(tvecs, deviation_distance)
    // get min and get max functions return the min and max tvecs along each axis
    volume = (get_max_x(filtered_tvecs) - get_min_x(filtered_tvecs)) *
             (get_max_y(filtered_tvecs) - get_min_y(filtered_tvecs)) *
             (get_max_z(filtered_tvecs) - get_min_z(filtered_tvecs))
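A possible Python rendering of Algorithm 2 is sketched below using only the standard library. The algorithm does not state how `remove_detections_with_high_deviation` decides what counts as "high", so the rule used here (discard a detection whose summed distance exceeds `factor` times the median) and the default `factor=2.0` are assumptions made purely for illustration. The input translations are assumed to be already transformed to a common reference point, as in the pseudocode.

```python
import math
from statistics import median
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def deviation_distances(tvecs: Sequence[Vec3]) -> List[float]:
    """For each tag, sum the Euclidean distances to all detected tags."""
    return [sum(math.dist(a, b) for b in tvecs) for a in tvecs]


def remove_high_deviation(
    tvecs: Sequence[Vec3], deviations: Sequence[float], factor: float = 2.0
) -> List[Vec3]:
    """Keep detections whose deviation is at most factor * median (assumed rule)."""
    limit = factor * median(deviations)
    return [t for t, d in zip(tvecs, deviations) if d <= limit]


def bounding_volume(tvecs: Sequence[Vec3]) -> float:
    """Volume of the axis-aligned box enclosing the remaining translations."""
    if not tvecs:
        return 0.0
    volume = 1.0
    for axis in range(3):
        values = [t[axis] for t in tvecs]
        volume *= max(values) - min(values)
    return volume


if __name__ == "__main__":
    # Four consistent estimates and one obvious outlier (arbitrary units).
    tvecs = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0),
             (0.0, 0.0, 1.0), (10.0, 10.0, 10.0)]
    kept = remove_high_deviation(tvecs, deviation_distances(tvecs))
    print(len(kept), bounding_volume(kept))
```

A smaller volume means the per-tag estimates agree more closely, so the value can serve as a confidence indicator for the net pose.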

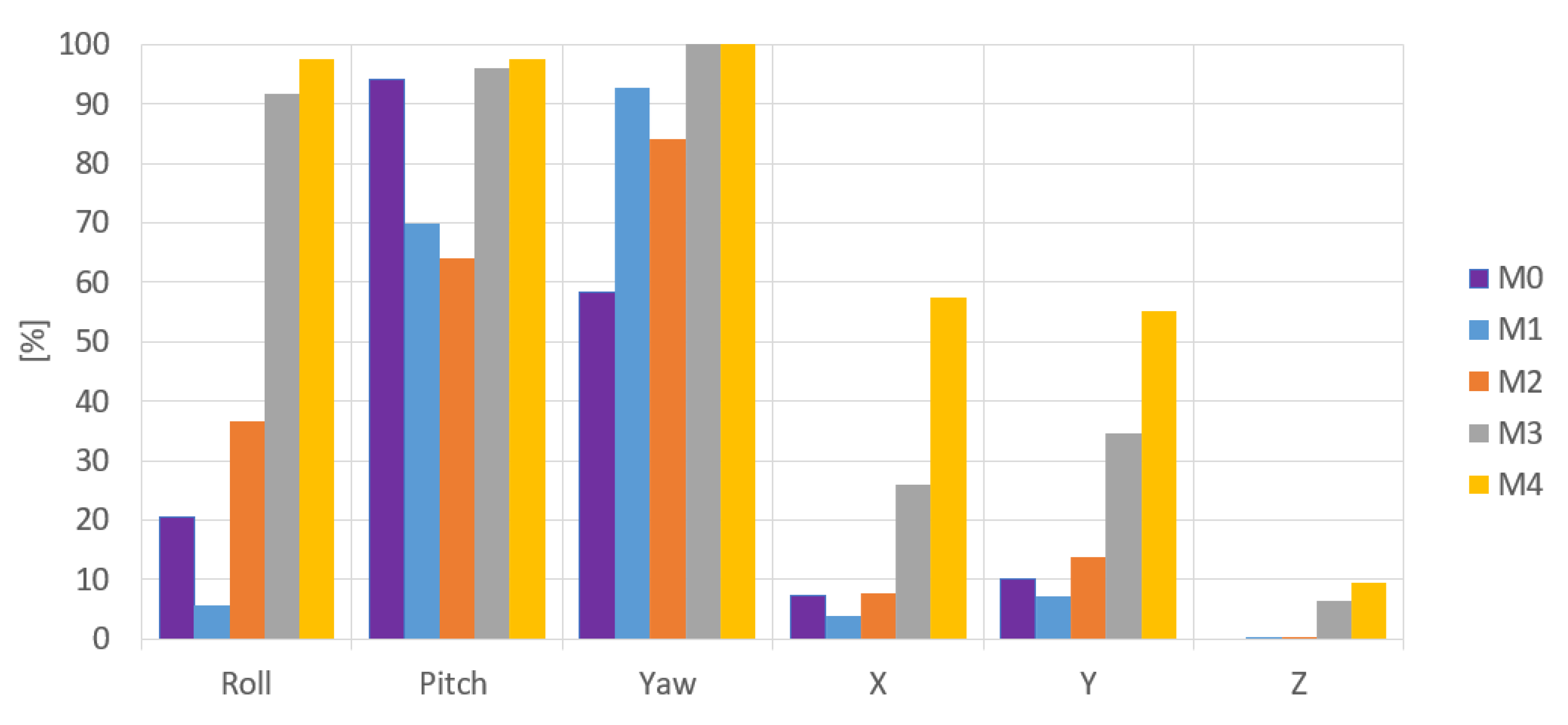

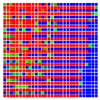

8. Results

9. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Parameter | Value |
|---|---|
| Number of tags on the board | 2 × 2 |
| Tag edge length | 70 mm |
| Spacing between tags | 10 mm |
| Tag type | 6 × 6 × 1000 |

| Axis | −30° | −15° | 0° | 15° | 30° |
|---|---|---|---|---|---|
| Roll | YES | YES | YES | YES | NO |
| Pitch | NO | NO | YES | NO | |
| Yaw | NO | NO | YES | NO | |

| | Roll | Pitch | Yaw | X | Y | Z |
|---|---|---|---|---|---|---|
| +15° Roll |  |  |  |  |  |  |
| −15° Roll |  |  |  |  |  |  |
| −30° Roll |  |  |  |  |  |  |
| +15° Pitch |  |  |  |  |  |  |
| +15° Yaw |  |  |  |  |  |  |
| 0° (Parallel to the imaging plane) |  |  |  |  |  |  |

| | Roll | Pitch | Yaw | X | Y | Z |
|---|---|---|---|---|---|---|
| +15° Roll |  |  |  |  |  |  |
| −15° Roll |  |  |  |  |  |  |
| −30° Roll |  |  |  |  |  |  |
| +15° Pitch |  |  |  |  |  |  |
| +15° Yaw |  |  |  |  |  |  |
| 0° (Parallel to the imaging plane) |  |  |  |  |  |  |

| Parameter | Value |
|---|---|
| Number of tags on the grid board | 5 |
| Tag edge length | 70 mm |
| Spacing between tags | 30 mm |
| Tag type | 6 × 6 × 1000 |

| | Old Calibration | New Calibration |
|---|---|---|
| 2D grid board | Measurement 1 (M1) | Measurement 3 (M3) |
| 3D grid board | Measurement 2 (M2) | Measurement 4 (M4) |

| | Roll | Pitch | Yaw | X | Y | Z |
|---|---|---|---|---|---|---|
| M0 |  |  |  |  |  |  |
| M1 |  |  |  |  |  |  |
| M2 |  |  |  |  |  |  |
| M3 |  |  |  |  |  |  |
| M4 |  |  |  |  |  |  |

| | Roll | Pitch | Yaw | X | Y | Z |
|---|---|---|---|---|---|---|
| M0 |  |  |  |  |  |  |
| M1 |  |  |  |  |  |  |
| M2 |  |  |  |  |  |  |
| M3 |  |  |  |  |  |  |
| M4 |  |  |  |  |  |  |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Oščádal, P.; Heczko, D.; Vysocký, A.; Mlotek, J.; Novák, P.; Virgala, I.; Sukop, M.; Bobovský, Z. Improved Pose Estimation of Aruco Tags Using a Novel 3D Placement Strategy. Sensors 2020, 20, 4825. https://doi.org/10.3390/s20174825
