Abstract

Due to the spectral complexity and high dimensionality of hyperspectral images (HSIs), HSI processing is susceptible to the curse of dimensionality. In addition, classification results with respect to the ground truth are often unsatisfactory. To overcome the curse of dimensionality and improve classification accuracy, this study proposes an improved spatial–spectral weight manifold embedding (ISS-WME) algorithm that exploits the intrinsic manifold structure and local neighbors of hyperspectral data. The manifold structure is constructed from a structural weight matrix and a distance weight matrix. The structural weight matrix is composed of within-class and between-class coefficient representation matrices, which are obtained with the collaborative representation method. The distance weight matrix integrates the spatial and spectral information of HSIs. ISS-WME describes the overall structure of the data with a weight matrix that combines the within-class and between-class matrices with the spatial–spectral information of HSIs, and it keeps the nearest-neighbor relationships of the samples unchanged when embedding them into the low-dimensional space. To verify the classification performance of ISS-WME, experiments were conducted on three classical data sets, namely Indian Pines, Pavia University, and Salinas scene. Six dimensionality reduction (DR) methods were used for comparison, with k-nearest neighbor (KNN) and support vector machine (SVM) classifiers. The experimental results show that ISS-WME represents the HSI structure better than the other methods and effectively improves the classification accuracy of HSIs.

1. Introduction

With the development of science and technology, hyperspectral images (HSIs) have become a major research direction in modern remote sensing. HSIs have a large number of spectral bands, which provide detailed spectral information about objects [1,2]. However, because adjacent bands are strongly correlated, HSIs contain much redundant information, which requires large storage space and long computation time. Moreover, HSI classification accuracy suffers from the curse of dimensionality [3]. To improve classification accuracy, dimensionality reduction (DR) is a necessary and feasible preprocessing step for HSIs [4,5].

A DR method aims to extract the important features of an image by mapping high-dimensional data to a low-dimensional space and using the low-dimensional data to describe the high-dimensional features [5]. In recent years, many DR methods have been proposed; they can be divided into two categories: linear dimensionality reduction (LDR) algorithms and manifold dimensionality reduction (MDR) algorithms [6]. The former include principal component analysis (PCA) [7], linear discriminant analysis (LDA) [8], independent component analysis (ICA) [9], and so on. These methods project images into a low-dimensional space by a linear transformation and seek the optimal projection. However, because the ground-truth features reflected in HSIs often have nonlinear topological structures, important image features are lost if only an LDR method is used. MDR algorithms were therefore developed, including local linear embedding (LLE) [10], locality preserving projection (LPP) [11], Laplacian eigenmaps (LE) [12], and so on. By learning the intrinsic geometric structure of the data, manifold learning [13] can recover the latent manifold structure of high-dimensional data and thereby reduce its dimensionality.

The purpose of MDR methods in HSI analysis is to find the manifold structure in the high-dimensional space. LLE [14] obtains reconstruction weights by characterizing the local neighbors of each sample and keeps these local neighborhood relationships unchanged when mapping to the low-dimensional space. However, LLE only determines the neighbor relationships between points and cannot describe the structural features of the data. Moreover, because the linear neighbor representation weights are specific to the samples used, LLE must be re-run whenever it is applied to new samples, which is time consuming and considerably inefficient. Wu et al. [15] proposed an improved weighted local linear embedding (WLE-LLE) algorithm, which constructs the weight matrix from the Euclidean and geodesic distances between samples and merges LLE and LE into a new objective function that effectively represents the topological structure of the data. Huang et al. [16] proposed a sparse discriminant manifold embedding (SDME) algorithm, which builds a DR framework on graph embedding and sparse representation to make full use of prior label information. Xu et al. [17] proposed a superpixel-based spatial–spectral dimension reduction (SSDR) algorithm that integrates spatial and spectral similarity: superpixel segmentation is used to explore spatial similarity and find the mapping matrix of the spatial domain, pixels with the same label construct a label-guided graph to explore spectral similarity, and integrating the label and spatial information helps learn a discriminant projection matrix. Wu et al. [18] proposed a correlation coefficient-based supervised locally linear embedding (SC2SLLE) algorithm, which uses the Spearman correlation coefficient to select appropriate nearest neighbors and increases the discriminability of the embedded data on the basis of supervised LLE. Zhang et al. [19] proposed a SLIC (simple linear iterative clustering) superpixel-based Schroedinger eigenmaps (SSSE) algorithm, which uses SLIC segmentation to obtain spatial information from superpixels of different scales and sizes and then applies Schroedinger eigenmaps (SE) to obtain low-dimensional data. Hong et al. [20] proposed a robust local manifold representation (RLMR) algorithm, which builds on LLE to learn a new manifold representation and combines it with spatial–spectral information to improve robustness.

In this paper, an improved spatial–spectral weight manifold embedding (ISS-WME) algorithm is proposed that combines spatial–spectral information and manifold structure to extract HSI features. First, the spatial and spectral information of the HSI is extracted with a variant of the Gaussian function, and the product of the spatial distance matrix and the spectral distance matrix is used as the distance weight matrix. Then, the collaborative representation method is used to characterize the HSI structure, so that after projection, samples from the same class lie as close as possible to the same hyperplane and samples from different classes are as far apart as possible. The structural weight matrix is obtained by combining the within-class and between-class representation matrices, and the product of the distance weight matrix and the structural weight matrix is used as the new weight matrix. When data are mapped from the high-dimensional manifold to the low-dimensional space, outliers tend to appear if only the structural distribution between data points is considered; conversely, keeping only the nearest-neighbor relationships unchanged during the projection tends to cause sparsity. To overcome both problems, this paper takes into account both the structure and the neighbor relationships of the samples. Finally, the model is solved efficiently by computing the eigenvectors associated with the smallest eigenvalues of a generalized eigenvalue problem, which yield the projection matrix. The main contributions of the proposed algorithm are as follows:

- A new weight matrix is constructed to describe the structure between samples: the product of the spatial–spectral distance weight matrix and the structural weight matrix is taken as the new data weight matrix. Whereas previous weight matrices consider only spectral distance or only spatial distance, the new weight matrix integrates the spatial–spectral information and the structural characteristics of the data.

- When the high-dimensional data are projected to the low-dimensional space, the model not only keeps the manifold structure invariant but also preserves the nearest-neighbor relationships of the samples.

The rest of this paper is organized as follows. Section 2 briefly reviews the LLE and LE methods and related work on these models. Section 3 describes ISS-WME and its solution in detail. Section 4 compares the performance of the proposed method with other DR methods on three public data sets. Finally, Section 5 provides conclusions and perspectives.

2. Related Works

2.1. Local Linear Embedding

Given the data set $X = [x_1, x_2, \dots, x_N] \in \mathbb{R}^{D \times N}$, $x_i \in \mathbb{R}^{D}$ denotes the ith sample with D-dimensional features, and N is the number of samples. We assume that the D-dimensional samples are projected to a d-dimensional space M, with $d \ll D$. The low-dimensional coordinates of the transformed data are then $Y = [y_1, y_2, \dots, y_N] \in \mathbb{R}^{d \times N}$, where $y_i \in \mathbb{R}^{d}$. The core idea of LLE is to keep the relationship between each sample $x_i$ and its local neighbors unchanged after DR. A point and its local neighbors are considered to belong to the same class, and, under the principle of minimizing the reconstruction error, each sample can be linearly represented by its neighbors. The reconstruction weight matrix connects the original space with the low-dimensional embedding space: the weights between each sample and its nearest neighbors are kept unchanged, and the low-dimensional embedding is obtained by minimizing the reconstruction error. Therefore, the weight coefficient matrix $W$ describing the relationship between $x_i$ and its local neighbors can be obtained by solving the following optimization problem [21]:

In Equation (1), $x_j$ is one of the k samples closest to $x_i$ ($i = 1, \dots, N$), $\sum_{j} w_{ij} = 1$, and $w_{ij}$ represents the neighbor weight between samples $x_i$ and $x_j$; if they are not neighbors, then $w_{ij} = 0$. When the D-dimensional samples are projected into the d-dimensional space, we wish to preserve the same linear relationships:

where I is the identity matrix. Writing the reconstruction cost in matrix form, Equation (2) can be rewritten as the following problem:

Using the method of Lagrange multipliers, Equation (3) can be solved by the following generalized eigenvalue decomposition:

We then take the eigenvectors corresponding to the d smallest non-zero eigenvalues, and these eigenvectors form the low-dimensional embedding matrix $Y$.

The LLE algorithm [22] successfully preserves the local neighborhood geometry and is fast to compute. However, as the data dimensionality and data size increase, it suffers from sparsity, poor robustness to noise, and other problems.
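The two LLE steps described above (solving for local reconstruction weights, then taking the bottom eigenvectors of the cost matrix $(I - W)^{T}(I - W)$) can be sketched in NumPy as follows. This is a minimal sketch of the standard LLE formulation, not the authors' code; it assumes samples stored as rows, the sum-to-one weight constraint, and a small Tikhonov regularizer `reg` on the local Gram matrix (a common numerical safeguard that is not a parameter of this paper). The function names are hypothetical.

```python
import numpy as np


def lle_weights(X, k, reg=1e-3):
    """Standard LLE reconstruction weights.

    X: (N, D) array with one sample per row; k: number of nearest neighbours.
    Returns an (N, N) matrix W: row i holds the weights that reconstruct x_i
    from its k nearest neighbours, sums to 1, and is zero elsewhere.
    """
    N = X.shape[0]
    sq = np.sum(X ** 2, axis=1)
    dist2 = sq[:, None] + sq[None, :] - 2.0 * X @ X.T   # squared Euclidean distances
    W = np.zeros((N, N))
    for i in range(N):
        nbrs = [j for j in np.argsort(dist2[i]) if j != i][:k]
        Z = X[nbrs] - X[i]                       # neighbours centred on x_i
        G = Z @ Z.T                              # local Gram matrix
        scale = np.trace(G) if np.trace(G) > 0 else 1.0
        G = G + reg * scale * np.eye(len(nbrs))  # regularise (needed when k > D)
        w = np.linalg.solve(G, np.ones(len(nbrs)))
        W[i, nbrs] = w / w.sum()                 # enforce the sum-to-one constraint
    return W


def lle_embedding(W, d):
    """Embedding from the bottom eigenvectors of (I - W)^T (I - W)."""
    N = W.shape[0]
    I_minus_W = np.eye(N) - W
    M = I_minus_W.T @ I_minus_W                  # symmetric positive semidefinite
    _, vecs = np.linalg.eigh(M)                  # eigenvalues in ascending order
    # The smallest eigenvalue is ~0 with a constant eigenvector (rows of W sum
    # to 1), so it is skipped; the next d eigenvectors give the embedding.
    return vecs[:, 1:d + 1]                      # (N, d), orthonormal columns
```

Because these helpers store samples as rows, the returned embedding is the transpose of the column-wise $Y$ used in the text.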

2.2. Laplacian Eigenmaps

Given the data set $X = [x_1, x_2, \dots, x_N]$, the KNN method is used to find the k nearest neighbors of each sample $x_i$, forming an adjacency structure over the whole data set, where $x_{ij}$ denotes the jth nearest neighbor of $x_i$. Each pair of neighboring samples is assigned a weight $h_{ij}$. Let $Y = [y_1, y_2, \dots, y_N] \in \mathbb{R}^{d \times N}$ denote the low-dimensional embedding of the data set X. Then, Y can be obtained by solving the following optimization problem [23]:

As in LLE, the constraint in Equation (5) rules out trivial solutions, and the problem can be solved by the following generalized eigenvalue decomposition:

where $D$ is a diagonal matrix with $D_{ii} = \sum_{j} h_{ij}$, $L = D - H$ is the Laplacian matrix, and H is the weight matrix composed of the weights $h_{ij}$. The embedding in the d-dimensional space is formed by the eigenvectors corresponding to the d smallest eigenvalues.

The LE algorithm [24] uses graph theory to perform DR. However, because its weight matrix is inaccurate, the traditional LE algorithm cannot accurately describe the structure of complex hyperspectral data, so the low-dimensional data cannot fully express the features of the original data.
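For comparison, a minimal NumPy sketch of the LE procedure in Section 2.2 follows. Since the weight formula is not reproduced above, it assumes a heat-kernel weight $h_{ij} = \exp(-\|x_i - x_j\|^2 / t)$ on a symmetrized kNN graph; this choice, the parameter `t`, and the function name are assumptions. The generalized problem $L y = \lambda D y$ is solved through the equivalent symmetric problem $D^{-1/2} L D^{-1/2} z = \lambda z$ with $y = D^{-1/2} z$, and, as is usual in practice, the trivial constant solution belonging to the zero eigenvalue is discarded.

```python
import numpy as np


def laplacian_eigenmaps(X, k, d, t=1.0):
    """Laplacian eigenmaps on a symmetrised kNN graph; X is (N, D), one sample per row."""
    N = X.shape[0]
    sq = np.sum(X ** 2, axis=1)
    dist2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    H = np.zeros((N, N))
    for i in range(N):
        nbrs = [j for j in np.argsort(dist2[i]) if j != i][:k]
        H[i, nbrs] = np.exp(-dist2[i, nbrs] / t)   # heat-kernel weights (assumed form)
    H = np.maximum(H, H.T)                         # keep an edge if either end chose it
    deg = H.sum(axis=1)                            # diagonal of the degree matrix D
    L = np.diag(deg) - H                           # graph Laplacian L = D - H
    # L y = lam D y  is equivalent to  (D^-1/2 L D^-1/2) z = lam z  with  y = D^-1/2 z
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    L_sym = d_inv_sqrt[:, None] * L * d_inv_sqrt[None, :]
    _, Z = np.linalg.eigh(L_sym)                   # ascending eigenvalues
    Y = d_inv_sqrt[:, None] * Z[:, 1:d + 1]        # skip the trivial (constant-y) solution
    return Y, H, deg
```

The returned columns satisfy $Y^{T} D Y = I$, which is the normalization constraint that makes the solution well defined.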

3. Improved Spatial–Spectral Weight Manifold Embedding

To address the sparsity, inaccurate weights, and other problems of the LLE and LE [25] algorithms, this paper proposes an improved spatial–spectral weight manifold embedding (ISS-WME) algorithm. It combines spatial–spectral information and high-dimensional manifold structure to construct a weight matrix that matches the HSI structure. Considering the multi-manifold structure of HSIs, ISS-WME combines the structure with the nearest-neighbor samples so that the neighbor relationships of the data remain invariant and the original structure is not broken when embedding into the low-dimensional space. Section 3.1 analyzes how to construct a weight matrix that better matches the sample structure, and Section 3.2 describes the final optimization objective function.

3.1. Spatial–Spectral Weight Setting

Experimental studies have shown that classification accuracy can be improved by incorporating spatial information into HSI analysis. Hence, ISS-WME uses both spatial and spectral information, representing the spatial and spectral distances with a variant of the Gaussian function. In the HSI data set, each sample consists of the spectral reflectance of a pixel and the spatial coordinates of that pixel, and the distances are computed for each pair of samples. The spatial distance matrix and the spectral distance matrix are then defined, respectively, as follows:

Therefore, the spatial–spectral distance weight matrix is as follows:

In an HSI, adjacent pixels in the same homogeneous region usually belong to the same class, so any sample can be linearly represented by homogeneous neighbor samples from its class. Similarly, the center of the whole data set can be represented by the centers of the different classes [26]. We expect the HSI to retain this characteristic after DR. We therefore obtain a within-class representation coefficient matrix by minimizing the error of a collaborative representation model, with a regularization term added to prevent overfitting. The objective function of the within-class collaborative representation model is as follows:

In Equation (9), the terms denote, respectively, the number of samples in the kth class, the set of samples outside the kth class, and the set of samples from the same class as the sample being represented, excluding that sample itself. The coefficient terms are the within-class linear representation coefficient matrix of the kth class, the within-class mean coefficient matrix, and the collection of all within-class linear representation coefficients.

Likewise, the objective function of the between-class representation coefficient matrix is as follows:

In Equation (10), the terms denote the mean of all samples and the set of class centers. The coefficient terms are the between-class representation coefficient matrix of the kth class, the between-class mean coefficient matrix, and the collection of all between-class representation coefficients.
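Because the closed-form solutions derived next are obtained by setting a derivative to zero, the regularizer in Equations (9) and (10) is presumably a squared $\ell_2$ penalty, so each representation is a ridge-regression problem with solution $(A^{T}A + \lambda I)^{-1}A^{T}y$. The NumPy sketch below assumes that form. The function names, the regularization weight `lam`, and the column-wise storage of samples are illustrative assumptions, and the exact weighting in Equations (9) and (10) may differ.

```python
import numpy as np


def collaborative_coefficients(A, y, lam):
    """argmin_a ||y - A a||^2 + lam ||a||^2, with atoms stored as the columns of A."""
    m = A.shape[1]
    return np.linalg.solve(A.T @ A + lam * np.eye(m), A.T @ y)


def within_class_matrix(X, labels, lam=0.1):
    """X: (D, N), one sample per column. Entry C[j, i] is the weight of x_j
    when x_i is represented by the other samples of its own class."""
    N = X.shape[1]
    C = np.zeros((N, N))
    for i in range(N):
        same = [j for j in range(N) if labels[j] == labels[i] and j != i]
        if same:
            C[same, i] = collaborative_coefficients(X[:, same], X[:, i], lam)
    return C


def between_class_coefficients(X, labels, lam=0.1):
    """Represent the overall mean sample by the class centres (one weight per class)."""
    labels = np.asarray(labels)
    classes = np.unique(labels)
    centres = np.stack([X[:, labels == c].mean(axis=1) for c in classes], axis=1)
    return collaborative_coefficients(centres, X.mean(axis=1), lam)
```

With equal class sizes and a vanishing `lam`, the between-class coefficients approach $1/K$ for $K$ classes, since the overall mean is then exactly the average of the class centers.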

The within-class representation matrix is obtained by minimizing Equation (9), that is, by setting the derivative of the objective function with respect to the within-class representation coefficients to zero:

Therefore, the within-class coefficient matrix is as follows:

In the same way, setting the derivative of the objective function with respect to the between-class representation coefficients to zero gives the between-class coefficient matrix:

3.2. ISS-WME Model

Given the HSI data set X, we seek a projection matrix P that maps X into the low-dimensional space, and $Y = P^{T}X$ denotes the samples in the low-dimensional space. Following Wu et al. [15], both distance and structural factors are taken into account. We take the spatial–spectral matrix as the distance weight and the coefficient matrices as the structure weight; together, they form the new weight matrix between samples:

where a mixing parameter controls the proportions of the within-class matrix and the between-class matrix in the structure weight.
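To make the weight construction concrete, the sketch below combines Gaussian spatial and spectral kernels by an element-wise product (the Introduction states that the product of the two distance matrices is used) and then multiplies the result element-wise by a structure weight mixed from within-class and between-class parts. Because Equations (7), (8), and (14) are not reproduced here, the kernel form $\exp(-\|a_i - a_j\|^2 / (2\sigma^2))$, the bandwidths `sigma_spe` and `sigma_spa`, the mixing form `mu * S_w + (1 - mu) * S_b`, and all function names are assumptions; `S_w` and `S_b` are taken as given $N \times N$ structure matrices.

```python
import numpy as np


def gaussian_kernel(A, sigma):
    """exp(-||a_i - a_j||^2 / (2 sigma^2)) for the rows a_i of A (assumed kernel form)."""
    sq = np.sum(A ** 2, axis=1)
    dist2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * A @ A.T, 0.0)
    return np.exp(-dist2 / (2.0 * sigma ** 2))


def distance_weight(spectra, coords, sigma_spe, sigma_spa):
    """spectra: (N, D) reflectances; coords: (N, 2) pixel positions.
    Spatial-spectral weight as the element-wise product of the two kernels."""
    return gaussian_kernel(spectra, sigma_spe) * gaussian_kernel(coords, sigma_spa)


def combined_weight(W_dist, S_w, S_b, mu):
    """Element-wise product of the distance weight and a mixed structure weight
    (hypothetical stand-in for Equation (14))."""
    return W_dist * (mu * S_w + (1.0 - mu) * S_b)
```

In this form, two pixels receive a large weight only if they are close both in the image plane and in spectral space, which is the intuition behind multiplying rather than adding the two kernels.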

Furthermore, when the high-dimensional data are mapped to the low-dimensional space, both the local manifold structure and the local neighbor relationships should remain unchanged. Introducing the weight of Equation (14) to increase the robustness of the model, the improved weight manifold embedding optimization problem is as follows:

where a trade-off parameter balances the two terms, the first weight is the spatial–spectral matrix of Equation (14), and the second is, as before, the weight matrix representing the nearest-neighbor relationships: if two samples are neighbors, their weight is the geodesic distance between them; otherwise, it is 0. According to Equations (2) and (5), optimization problem (15) is equivalent to the following:

where the reformulated objective involves a Laplacian matrix, a diagonal matrix, and a symmetric matrix. Finally, the objective function can be written as the following optimization problem:

Using the method of Lagrange multipliers, the optimization problem becomes the following generalized eigenvalue problem:

where each generalized eigenvector of Equation (18) is associated with its eigenvalue. Taking the eigenvectors associated with the d smallest eigenvalues yields the projection matrix P. In summary, Algorithm 1 is as follows:

| Algorithm 1 Process of the ISS-WME Algorithm |
|:---|
| Input: HSI data set, dimension d of the low-dimensional space, and number of nearest neighbors K. |
| 1: Segment the HSI into superpixels with the SLIC segmentation method and randomly select training samples (for Pavia University, 2%, 4%, 6%, 8%, or 10% of the samples). Then, use Equations (7) and (8) to calculate the spatial–spectral distance matrix between superpixels. Make sure that the number of superpixels equals the number of training samples. |
| 2: Use Equations (12) and (13) to obtain the structure representation matrices between training samples, and take the product of the two types of matrices as the new weight matrix, Equation (14). |
| 3: Construct the objective function of Equation (16) according to the local manifold structure and the nearest-neighbor relationships of the samples. |
| 4: Solve the generalized eigenvalue problem of Equation (18) to obtain the corresponding eigenvectors. |
| 5: Learn the projection matrix P from these eigenvectors. |
| Output: The low-dimensional data $Y = P^{T}X$. |
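Steps 4 and 5 of Algorithm 1 reduce to a symmetric generalized eigenvalue problem $A p = \lambda B p$. The NumPy sketch below solves it through a Cholesky factorization of $B$ and keeps the eigenvectors of the d smallest eigenvalues. Because Equations (16) to (18) are not reproduced here, the choice $A = X L X^{T}$ and $B = X \Delta X^{T}$ built from a single symmetric weight matrix $W$ (with $\Delta$ its diagonal degree matrix and $L = \Delta - W$, an LPP-style linearization), the small `ridge` term, and the function names are assumptions rather than the authors' exact formulation.

```python
import numpy as np


def smallest_generalized_eigvecs(A, B, d, ridge=1e-8):
    """Solve A p = lam B p for symmetric A and symmetric positive definite B.
    Returns the d smallest eigenvalues and their B-orthonormal eigenvectors."""
    n = B.shape[0]
    Lc = np.linalg.cholesky(B + ridge * np.eye(n))   # B = Lc Lc^T
    Linv = np.linalg.inv(Lc)
    C = Linv @ A @ Linv.T                            # equivalent standard eigenproblem
    C = (C + C.T) / 2.0                              # remove round-off asymmetry
    evals, V = np.linalg.eigh(C)                     # ascending eigenvalues
    P = Linv.T @ V[:, :d]                            # back-transform p = Lc^-T v
    return evals[:d], P


def learn_projection(X, W, d):
    """X: (D, N) samples as columns; W: (N, N) symmetric sample weight matrix."""
    deg = W.sum(axis=1)
    L = np.diag(deg) - W                             # graph Laplacian of W
    A = X @ L @ X.T
    B = X @ np.diag(deg) @ X.T
    _, P = smallest_generalized_eigvecs(A, B, d)
    return P                                         # low-dimensional data: P.T @ X
```

Once P is learned on the training samples, test samples can be projected with the same P, as in the protocol of Section 4.1.2.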

4. Experiments and Discussion

To verify the effectiveness of the proposed ISS-WME algorithm, we conducted experiments on three commonly used HSI data sets, namely Indian Pines, Pavia University, and Salinas scene. The overall accuracy (OA) [19], per-class classification accuracy (CA), average accuracy (AA), and kappa coefficient (kappa) [27] of the classification results were used as evaluation metrics. We compared ISS-WME with six other representative DR algorithms, i.e., PCA, Isomap [28], LLE, LE, SSSE, and WLE-LLE, and classified the low-dimensional data with two commonly used classifiers, i.e., the Euclidean distance-based k-nearest neighbor (KNN) algorithm [29] and the support vector machine (SVM). The experiments were performed in MATLAB on a computer with a 2.59 GHz Intel Core CPU and 8 GB of RAM.
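The evaluation metrics above follow standard definitions: OA is the fraction of correctly classified test samples, CA is the accuracy within each class, AA is the mean of the CAs, and kappa corrects OA for chance agreement, $\kappa = (p_o - p_e)/(1 - p_e)$. A minimal pure-Python sketch computing them from a confusion matrix follows; the function names are illustrative, labels are assumed to be integers $0, \dots, C-1$, and AA is averaged only over classes present in the test set.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """cm[t][p] counts samples of true class t predicted as class p."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def accuracy_metrics(y_true, y_pred, n_classes):
    """Return (OA, list of per-class accuracies CA, AA, kappa)."""
    cm = confusion_matrix(y_true, y_pred, n_classes)
    n = sum(sum(row) for row in cm)
    row_tot = [sum(row) for row in cm]                       # true-class counts
    col_tot = [sum(cm[r][c] for r in range(n_classes)) for c in range(n_classes)]
    oa = sum(cm[c][c] for c in range(n_classes)) / n         # observed agreement p_o
    ca = [cm[c][c] / row_tot[c] for c in range(n_classes) if row_tot[c] > 0]
    aa = sum(ca) / len(ca)
    pe = sum(row_tot[c] * col_tot[c] for c in range(n_classes)) / n ** 2  # chance p_e
    kappa = (oa - pe) / (1.0 - pe) if pe < 1.0 else 1.0
    return oa, ca, aa, kappa
```

For example, with true labels `[0, 0, 1, 1]` and predictions `[0, 1, 1, 1]`, it returns OA = 0.75, CA = [0.5, 1.0], AA = 0.75, and kappa = 0.5.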

4.1. Data Sets and Parameter Setting

4.1.1. Data Sets

The Indian Pines, Pavia University, and Salinas scene data sets were used in the experiments.

The Indian Pines [30,31] and Salinas scene [2,30] data sets were collected by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor. Indian Pines consisted of 145 × 145 pixels and 220 spectral bands; after several bands affected by noise and water absorption were removed, 200 radiance channels remained for the experiments. Salinas consisted of 512 × 217 pixels and 204 spectral bands.

The Pavia University data set [30,32] was acquired by the Reflective Optics System Imaging Spectrometer (ROSIS) sensor. Its size was 610 × 340 pixels. Some noisy channels were removed, leaving 103 spectral bands.

4.1.2. Experimental Parameter Settings

Six DR algorithms were compared with the proposed ISS-WME method. PCA, Isomap, LLE, and LE are four classical DR algorithms; SSSE combines spectral and spatial information, and WLE-LLE combines spectral and structural information. The LE, LLE, WLE-LLE, and SSSE algorithms require the number of nearest neighbors to be set; to allow a fair comparison, it was set to 15 in all experiments. The SSSE and ISS-WME algorithms also require computing spatial and spectral information, so we set the parameters as .

In each experiment, each data set was divided into training and testing samples. Each DR algorithm learned a projection matrix on the training samples, which was then used to project the testing samples into the low-dimensional space, where a KNN or SVM classifier classified them. To reduce the effect of random sampling, each experiment was repeated 10 times, and the average value is reported with its standard deviation. OA, CA, AA, and kappa were used to evaluate the performance of the different algorithms. In the Indian Pines experiment, the parameters were set to . In the same way, the parameters were set to in the Pavia University experiment. Finally, in the Salinas scene, the parameters were .
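The sampling side of this protocol (randomly drawing n% of the samples from each class for training, as in Sections 4.2 and 4.3, and reporting the mean and standard deviation over repeated runs) can be sketched in pure Python as follows. The helper names, the seed handling, Python's built-in `round` for the per-class count, the rule of keeping at least one training sample per class, and the use of the sample standard deviation (n − 1 denominator) are assumptions; the DR and KNN/SVM steps are omitted.

```python
import random
import statistics


def stratified_split(labels, fraction, seed=None):
    """Randomly draw `fraction` of each class for training; the rest forms the test set."""
    rng = random.Random(seed)
    by_class = {}
    for idx, c in enumerate(labels):
        by_class.setdefault(c, []).append(idx)
    train, test = [], []
    for c in sorted(by_class):
        idxs = by_class[c][:]
        rng.shuffle(idxs)
        n_train = max(1, round(fraction * len(idxs)))  # keep at least one sample per class
        train.extend(idxs[:n_train])
        test.extend(idxs[n_train:])
    return sorted(train), sorted(test)


def mean_and_std(scores):
    """Mean and sample standard deviation over repeated runs (e.g., 10 OA values)."""
    return statistics.mean(scores), statistics.stdev(scores)
```

Using a different `seed` for each of the 10 runs gives independent random splits whose scores can then be summarized with `mean_and_std`.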

4.2. Results for the Indian Pines Data Set

To fully evaluate the performance of ISS-WME, experiments were carried out with different numbers of training samples, different embedding dimensions, and different DR methods. We randomly selected n% (n = 10, 20, 30, 40, 50) of the samples from each class as the training set, and the remaining samples formed the testing set. The hyperspectral dimensionality (HD) of the low-dimensional embedding was varied from 10 to 50. The results of ISS-WME were compared with those of the other DR methods.

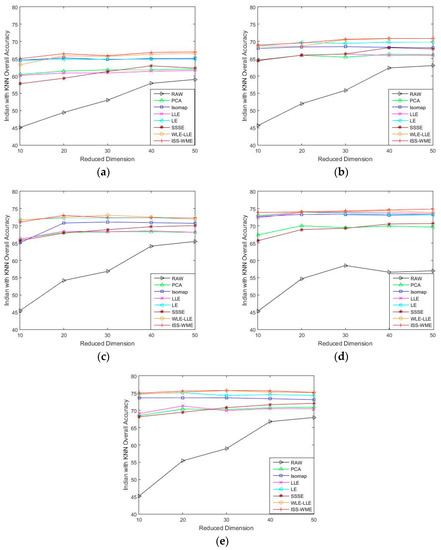

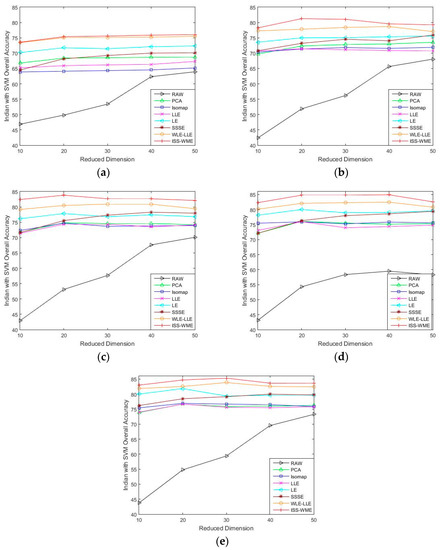

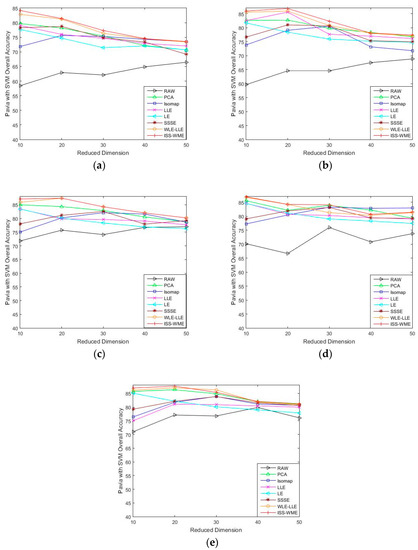

Figures 1 and 2 show the OA of the KNN and SVM classifiers at different embedding dimensions using different DR methods, where panels (a)–(e) correspond to different training set sizes. The OA obtained by classifying the Indian Pines data directly with the KNN or SVM classifier (RAW) was used as the baseline. Across different dimensions and training set sizes, ISS-WME and WLE-LLE achieved the best and second-best OA, respectively, outperforming the other five DR methods. Comparing Figure 1 and Figure 2, SVM yields a higher OA than KNN.

Figure 1.

OA obtained by using a k-nearest neighbor (KNN) classifier with (a–e) different training set sizes (10%, 20%, 30%, 40%, 50%) and different dimensions (from 10 to 50) for the Indian Pines data set.

Figure 2.

OA obtained by using a support vector machine (SVM) classifier, with respect to (a–e), different sizes of training sets (10%, 20%, 30%, 40%, 50%) and different hyperspectral dimensionality (HD) (from 10 to 50) for the Indian Pines data set.

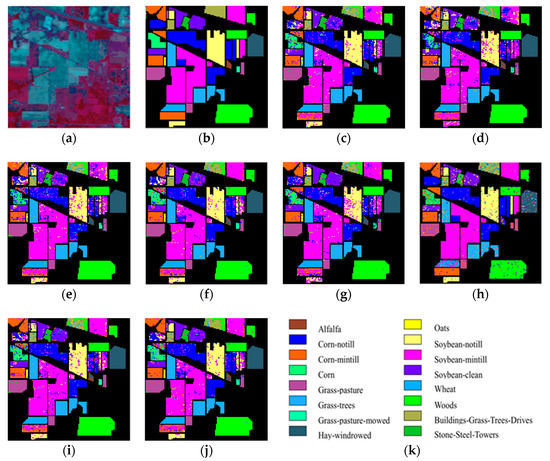

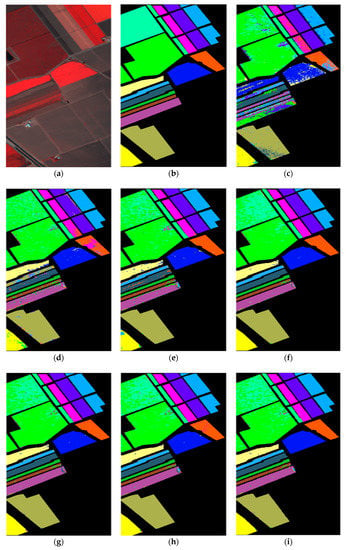

As can be seen in Figures 1 and 2, the OA decreases as the dimension increases. Figure 1 shows that, with the KNN classifier, ISS-WME obtains classification results similar to those of WLE-LLE for almost all embedding dimensions and achieves the best result at HD = 50. Figure 2c shows OA versus HD when 30% of the Indian Pines samples are used for training. Compared with RAW, PCA, Isomap, LLE, LE, SSSE, and WLE-LLE, when HD = 50, ISS-WME increases the OA by 12.01%, 8.2%, 7.98%, 5.28%, 4.15%, and 2.69%, respectively. For a more intuitive comparison, Figure 3 shows the classification maps with the best OA obtained by the SVM classifier at HD = 20 when 50% of the Indian Pines samples are used for training. It includes (a) the false-color image, (b) the corresponding ground-truth map, and (c)–(j) the classification maps of the different DR methods. The proposed ISS-WME algorithm performs better on the land-cover classes than the other DR methods.

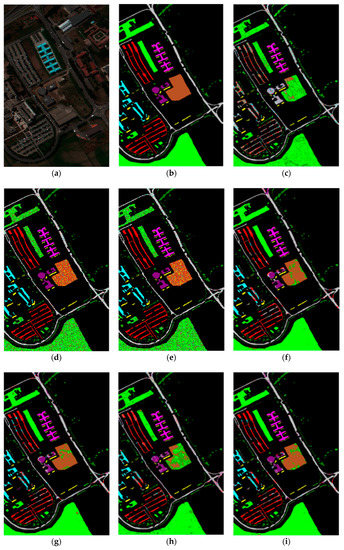

Figure 3.

Classification maps of an SVM classifier using different dimensionality reduction (DR) algorithms for the Indian Pines data at HD = 20: (a) false-color image (R:57,G:27,B:17); (b) ground-truth map; (c) original (SVM); (d) principal component analysis (PCA); (e) Isomap; (f) local linear embedding (LLE); (g) Laplacian eigenmaps (LE); (h) SLIC superpixel-based Schroedinger eigenmaps (SSSE); (i) weighted local linear embedding (WLE-LLE); (j) improved spatial–spectral weight manifold embedding (ISS-WME); and (k) representation of different classes.

To further describe the comparison results, Table 1 summarizes the classification accuracy of the different DR methods with the SVM classifier at HD = 20. The results include the OA and kappa coefficient of each method, each averaged over 10 runs and reported with the standard deviation. As can be seen in Table 1, ISS-WME produces the best OA and kappa in most cases.

Table 1.

Results of the different DR methods for the Indian Pines data set (HD = 20).

Table 2 lists the numbers of training and testing samples of each class in the Indian Pines data set, together with the per-class results of the SVM classifier using different DR methods. Unlike Table 1, Table 2 reports the per-class accuracy (CA), with the best results in bold. ISS-WME achieves the best accuracy in 10 classes.

Table 2.

Results of each class of samples in different DR methods for the Indian Pines data set (HD = 20).

4.3. Results for the Pavia University Data Set

To fully evaluate the performance of ISS-WME, experiments were carried out with different numbers of training samples, different embedding dimensions, and different DR methods. We randomly selected n% (n = 2, 4, 6, 8, 10) of the samples from each class as the training set, and the remaining samples formed the testing set. The hyperspectral dimensionality (HD) of the low-dimensional embedding was varied from 10 to 50. The results of ISS-WME were compared with those of the other DR methods.

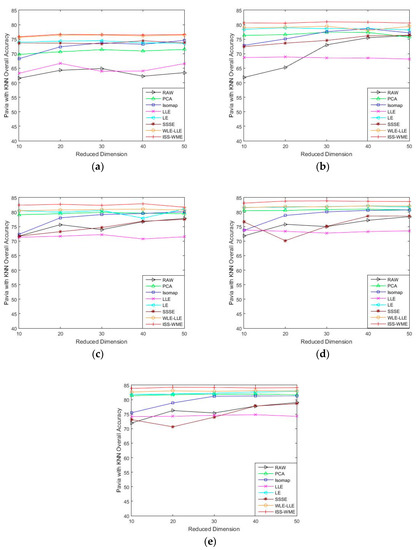

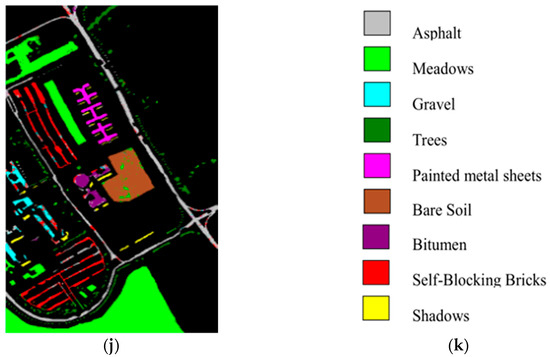

Figures 4 and 5 show the OA of the KNN and SVM classifiers at different embedding dimensions using different DR methods, where panels (a)–(e) correspond to different training set sizes. The OA obtained by applying each classifier directly to the original data was used as the baseline. Compared with the six other DR algorithms, ISS-WME achieved the best OA in almost all cases, across embedding dimensions and training set sizes. As Figure 5 shows, classification accuracy degrades to some extent as the embedding dimension increases: whichever DR algorithm is adopted, the curse of dimensionality appears to a certain extent. Comparing Figures 4 and 5, the degradation is more severe with the SVM classifier.

Figure 4.

OA obtained by using a KNN classifier with (a–e) different training set sizes (2%, 4%, 6%, 8%, 10%) and different HD (from 10 to 50) for the Pavia University data set.

Figure 5.

OA with respect to (a–e), different sizes of training sets (2%, 4%, 6%, 8%, 10%) and different HD (from 10 to 50) for the Pavia University data set, combined with the SVM classifier.

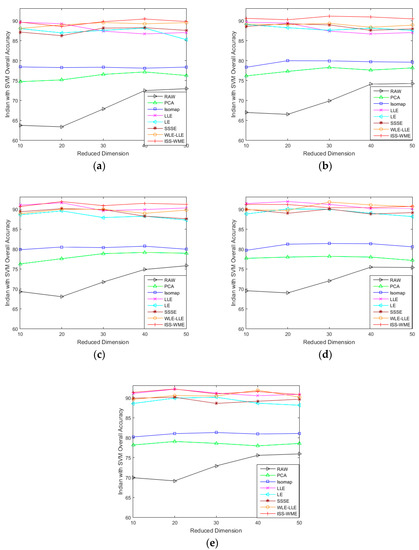

As can be seen in Figure 4, when KNN is used as the classifier, the OA of the different DR methods is relatively stable as the training set grows. Figure 5e shows the impact of HD on the OA when 10% of the Pavia University samples are used for training. Compared with RAW, PCA, Isomap, LLE, LE, SSSE, and WLE-LLE, when HD = 50, ISS-WME increases the OA by 0.13%, 0.55%, 1.24%, 3.34%, 0.64%, and 0.31%, respectively. To further demonstrate the classification results, Figure 6 shows the classification maps obtained by the SVM classifier at HD = 20 when 10% of the Pavia University samples are used for training, including (a) the false-color image, (b) the corresponding ground-truth map, and (c)–(j) the classification maps of the different DR methods. ISS-WME performs better than the other DR methods on most land-cover classes.

Figure 6.

SVM classification maps of the different methods with the Pavia University data set at HD = 20: (a) false-color image (R:102,G:56,B:31), (b) ground-truth map, (c) original (SVM), (d) PCA, (e) Isomap, (f) LLE, (g) LE, (h) SSSE, (i) WLE-LLE, and (j) ISS-WME; (k) representation of different classes.

To further describe the comparison results, Table 3 summarizes the OA of the different DR methods at HD = 20. The results include the OA and kappa coefficient of each method, each averaged over 10 runs and reported with the standard deviation. As can be seen in Table 3, ISS-WME produces the best OA and kappa in most cases. In addition, Table 4 shows that ISS-WME obtained the best classification accuracy in six classes, with the best results shown in bold.

Table 3.

Results of the different DR methods for the Pavia University data set.

Table 4.

Results of each class of samples in different DR methods for the Pavia University data set (HD = 20).

Table 4 lists the numbers of training and test samples of each class in the Pavia University data set, together with the classification results of the SVM classifier using different DR methods. Unlike Table 3, Table 4 reports the per-class accuracy (CA), with the best results in bold. ISS-WME achieves the best accuracy in six classes.

4.4. Results for the Salinas Scene Data Set

Table 5 summarizes the OA of the different DR methods on the Salinas scene data set at HD = 20. The results include the OA and kappa coefficient of each method, each averaged over 10 runs and reported with the standard deviation. As can be seen in Table 5, ISS-WME produces the best OA and kappa in most cases. In addition, Table 6 shows that ISS-WME obtained the best classification accuracy in 12 classes, with the best results shown in bold, and its results for three classes are identical to those of WLE-LLE.

Table 5.

Results of the different DR methods for the Salinas scene data set (HD = 20).

Table 6.

Results of each class of samples in different DR methods for the Salinas scene data set (HD = 20).

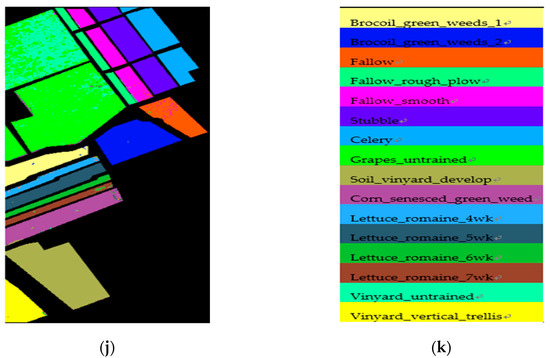

Table 6 lists the numbers of training and test samples of each class in the Salinas scene data set, together with the classification results of the SVM classifier using different DR methods. Unlike Table 5, Table 6 reports the per-class accuracy (CA), with the best results in bold. Visual comparisons of the different DR methods on the Salinas data set are provided in Appendix A.

5. Conclusions

In this paper, a dimensionality reduction method was proposed that combines the manifold structure of high-dimensional data with a linear nearest-neighbor relationship. The method aims to keep the nearest-neighbor relationships of the data unchanged when the high-dimensional data are projected to the low-dimensional space. Furthermore, the manifold structure of the data combines the spatial–spectral distance with structural features. To verify the advantages of the proposed method, the data obtained by ISS-WME and by six other dimensionality reduction methods were classified with two common classifiers (KNN and SVM). The experimental results show that the ISS-WME algorithm improves the ground-object recognition ability for hyperspectral data, and the OA and kappa coefficients support this conclusion. In future work, label information will be further incorporated into dimensionality reduction through a semi-supervised learning framework to improve classification performance.
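The conclusion states that the method aims to keep each sample's nearest neighbors unchanged after projection. One simple way to check this property for any embedding is a k-nearest-neighbor preservation rate. The sketch below is a generic diagnostic assumed for illustration only. It is not part of the evaluation reported in this paper, and the function names are hypothetical.

```python
import math


def knn_set(points, i, k):
    """Indices of the k nearest neighbours of points[i] (Euclidean distance, i excluded).

    Ties are broken by index so the result is deterministic.
    """
    ranked = sorted((math.dist(points[i], p), j) for j, p in enumerate(points) if j != i)
    return {j for _, j in ranked[:k]}


def neighbor_preservation(high, low, k):
    """Mean fraction of each sample's k nearest neighbours that survive the embedding.

    high: samples in the original (spectral) space; low: the same samples after DR.
    Returns 1.0 when every k-NN set is unchanged and 0.0 when none overlap.
    """
    if len(high) != len(low):
        raise ValueError("high and low must contain the same samples")
    if not 0 < k < len(high):
        raise ValueError("k must satisfy 0 < k < number of samples")
    overlap = sum(len(knn_set(high, i, k) & knn_set(low, i, k)) / k for i in range(len(high)))
    return overlap / len(high)


high = [(0.0, 0.0, 5.0), (1.0, 0.0, 5.0), (10.0, 0.0, 5.0), (11.0, 0.0, 5.0)]
good = [(0.0,), (1.0,), (10.0,), (11.0,)]  # keeps the two clusters intact
bad = [(0.0,), (10.0,), (1.0,), (11.0,)]   # swaps samples between the clusters
print(neighbor_preservation(high, good, k=1), neighbor_preservation(high, bad, k=1))  # 1.0 0.0
```

The brute-force neighbor search is O(n^2) and is only meant for small samples. For full hyperspectral scenes, a spatial index or a random subsample of pixels would be more practical.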

Author Contributions

Conceptualization, H.L., K.X., and E.O.; Methodology, H.L., and K.X.; Project administration, K.X., T.L., and J.M.; Software, H.L.; Supervision, K.X., T.L., and J.M.; Writing—original draft, H.L.; Writing—review and editing, H.L., K.X., and E.O. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (No. U1813222), the Tianjin Natural Science Foundation (No. 18JCYBJC16500), the Key Research and Development Project from Hebei Province (No. 19210404D), and the Hebei Natural Science Foundation (No. F2020202045).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Figure A1.

OA obtained by using an SVM classifier for the Salinas scene data set with different HD (from 10 to 50), using (a–e) 2%, 4%, 6%, 8%, and 10% of the samples for training.
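Figure A1 varies the training set from 2% to 10% of the labelled samples. A common way to draw such sets in HSI experiments is per-class (stratified) random sampling. The sketch below assumes that scheme, and it also assumes at least one training sample per class. Neither assumption is the documented protocol of this paper, and `stratified_split` is a hypothetical helper.

```python
import random
from collections import defaultdict


def stratified_split(labels, ratio, seed=0, min_train=1):
    """Randomly draw `ratio` of the labelled samples of each class for training.

    labels: class label of each labelled pixel (unlabelled pixels already removed).
    Returns (train_indices, test_indices); every index lands in exactly one list.
    """
    if not 0 < ratio < 1:
        raise ValueError("ratio must lie strictly between 0 and 1")
    rng = random.Random(seed)  # fixed seed -> reproducible split for one run
    by_class = defaultdict(list)
    for idx, c in enumerate(labels):
        by_class[c].append(idx)
    train, test = [], []
    for c in sorted(by_class):
        idxs = by_class[c]
        rng.shuffle(idxs)
        # Keep at least `min_train` samples per class (assumption), never more than exist.
        n_train = min(len(idxs), max(min_train, round(ratio * len(idxs))))
        train.extend(idxs[:n_train])
        test.extend(idxs[n_train:])
    return sorted(train), sorted(test)


labels = [0] * 100 + [1] * 50  # toy labels, not a real data set
for ratio in (0.02, 0.04, 0.06, 0.08, 0.10):
    train, test = stratified_split(labels, ratio, seed=1)
    print(ratio, len(train), len(test))
```

Changing `seed` between runs gives a different random training set for each repetition while keeping every individual run reproducible.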

Figure A2.

Classification maps of the different DR methods using an SVM classifier for the Salinas scene data set with HD = 20: (a) false-color image (R: 42, G: 16, B: 11), (b) ground-truth map, (c) original (SVM), (d) PCA, (e) Isomap, (f) LLE, (g) LE, (h) SSSE, (i) WLE-LLE, and (j) ISS-WME; (k) legend of the classes.
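Figure A2(a) is a false-color composite that maps bands 42, 16, and 11 to the red, green, and blue channels. The sketch below assembles such a composite with an independent min-max stretch per channel. The paper does not say how the composite was rendered, so the 1-based band numbering, the stretch, and the nested-list cube layout `cube[row][col][band]` are all assumptions. `false_color` is a hypothetical helper.

```python
def false_color(cube, bands=(42, 16, 11), one_based=True):
    """RGB composite (rows x cols x 3, integers 0-255) from three bands of an HSI cube.

    cube: nested lists indexed as cube[row][col][band].
    bands: (R, G, B) band numbers; treated as 1-based when one_based is True (assumption).
    Each channel gets an independent linear min-max stretch to 0-255.
    """
    offset = 1 if one_based else 0
    n_bands = len(cube[0][0])
    rgb = [[[0, 0, 0] for _ in row] for row in cube]
    for ch, band in enumerate(bands):
        b = band - offset
        if not 0 <= b < n_bands:
            raise IndexError(f"band {band} is outside the cube's {n_bands} bands")
        values = [px[b] for row in cube for px in row]
        lo, hi = min(values), max(values)
        span = hi - lo
        for r, row in enumerate(cube):
            for c, px in enumerate(row):
                # A constant band (span == 0) maps to 0 instead of dividing by zero.
                rgb[r][c][ch] = round(255 * (px[b] - lo) / span) if span else 0
    return rgb


# Toy 2 x 2 cube with 50 bands: value = (pixel number) * (band number).
cube = [[[(2 * r + c) * (b + 1) for b in range(50)] for c in range(2)] for r in range(2)]
img = false_color(cube)
print(img)  # [[[0, 0, 0], [85, 85, 85]], [[170, 170, 170], [255, 255, 255]]]
```

Stretching each channel separately makes weak bands visible, but it also changes the relative brightness between channels. A fixed percentile clip is a common alternative when outlier pixels dominate the range.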

References

- Gao, F.; Wang, Q.; Dong, J. Spectral and Spatial Classification of Hyperspectral Images Based on Random Multi-Graphs. Remote Sens. 2018, 10, 1271.

- Zu, B.; Xia, K.; Du, W.; Li, Y.; Ali, A.; Chakraborty, S. Classification of Hyperspectral Images with Robust Regularized Block Low-Rank Discriminant Analysis. Remote Sens. 2018, 10, 817.

- Feng, Z.; Yang, S.; Wang, S.; Jiao, L. Discriminative Spectral–Spatial Margin-Based Semisupervised Dimensionality Reduction of Hyperspectral Data. IEEE Geosci. Remote Sens. Lett. 2014, 12, 224–228.

- Xu, H.; Zhang, H.; He, W.; Zhang, L. Superpixel based dimension reduction for hyperspectral imagery. In Proceedings of the 2018 IEEE International Geoscience and Remote Sensing Symposium, IGARSS 2018, Valencia, Spain, 22–27 July 2018; pp. 2575–2578.

- Zu, B.; Xia, K.; Li, T.; He, Z.; Li, Y.; Hou, J.; Du, W. SLIC Superpixel-based l2,1-norm robust principal component analysis for hyperspectral image classification. Sensors 2019, 19, 479.

- Zeng, S.; Wang, Z.; Gao, C.; Kang, Z.; Feng, D.D. Hyperspectral image classification with global-local discriminant analysis and spatial-spectral context. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 5005–5018.

- Huang, H.; Chen, M.; Duan, Y. Dimensionality Reduction of Hyperspectral Image Using Spatial-Spectral Regularized Sparse Hypergraph Embedding. Remote Sens. 2019, 11, 1039.

- Wold, S.; Esbensen, K.; Geladi, P. Principal component analysis. Chemom. Intell. Lab. Syst. 1987, 2, 37–52.

- Chen, H.T.; Chang, H.W.; Liu, T.L. Local discriminant embedding and its variants. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; pp. 846–853.

- Stone, J.V. Independent component analysis: An introduction. Trends Cogn. Sci. 2002, 6, 59–64.

- Roweis, S.T.; Saul, L.K. Nonlinear dimensionality reduction by locally linear embedding. Science 2000, 290, 2323–2326.

- Wang, Z.Y.; He, B.B. Locality perserving projections algorithm for hyperspectral image dimensionality reduction. In Proceedings of the 2011 19th International Conference on Geoinformatics, Shanghai, China, 24–26 June 2011; pp. 1–4.

- Hou, B.; Zhang, X.; Ye, Q. A Novel Method for Hyperspectral Image Classification Based on Laplacian Eigenmap Pixels Distribution-Flow. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 1602–1618.

- Huang, K.K.; Dai, D.Q.; Ren, C.X. Regularized coplanar discriminant analysis for dimensionality reduction. Pattern Recognit. 2017, 62, 87–98.

- Rajaguru, H.; Prabhakar, K.S. Performance analysis of local linear embedding (LLE) and Hessian LLE with Hybrid ABC-PSO for Epilepsy classification from EEG signals. In Proceedings of the 2018 International Conference on Inventive Research in Computing Applications (ICIRCA), Coimbatore, India, 11–12 July 2018; pp. 1084–1088.

- Wu, Q.; Qi, Z.X.; Wang, Z.C. An improved weighted local linear embedding algorithm. In Proceedings of the 2018 14th International Conference on Computational Intelligence and Security (CIS), Hangzhou, China, 16–19 November 2018; pp. 378–381.

- Huang, H.; Luo, F.L.; Liu, J.M. Dimensionality reduction of hyperspectral images based on sparse discriminant manifold embedding. ISPRS J. Photogramm. Remote Sens. 2015, 106, 42–54.

- Xu, H.; Zhang, H.; He, W.; Zhang, L. Superpixel-based spatial-spectral dimension reduction for hyperspectral imagery classification. Neurocomputing 2019, 360, 138–150.

- Wu, P.; Xia, K.; Yu, H. Correlation Coefficient based Supervised Locally Linear Embedding for Pulmonary Nodule Recognition. Comput. Methods Programs Biomed. 2016, 136, 97–106.

- Zhang, X.W.; Chew, S.E.; Xu, Z.L. SLIC superpixels for efficient graph-based dimensionality reduction of hyperspectral imagery. In Proceedings of the XXI Algorithms and Technologies for Multispectral, Hyperspectral, and Ultraspectral Imagery, Baltimore, MD, USA, 21–23 April 2015; pp. 947209–947225.

- Sun, L.; Wu, Z.B.; Liu, J.J. Supervised spectral-spatial hyperspectral image classification with weighted markov random fields. IEEE Trans. Geosci. Remote Sens. 2015, 53, 1490–1503.

- Yu, W.B.; Zhang, M.; Shen, Y. Learning a local manifold representation based on improved neighborhood rough set and LLE for hyperspectral dimensionality reduction. Signal Process. 2019, 164, 20–29.

- Yang, Y.; Hu, Y.L.; Wu, F. Sparse and low-rank subspace data clustering with manifold regularization learned by local linear embedding. Appl. Sci. 2018, 8, 2175.

- Hong, D.F.; Yokoya, N.; Zhu, X.X. Learning a robust local manifold representation for hyperspectral dimensionality reduction. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 2960–2975.

- Arena, P.; Patané, L.; Spinosa, A.G. Data-based analysis of Laplacian Eigenmaps for manifold reduction in supervised Liquid State classifiers. Inf. Sci. 2019, 478, 28–39.

- Cahill, N.D.; Chew, S.E.; Wenger, P.S. Spatial-spectral dimensionality reduction of hyperspectral imagery with partial knowledge of class labels. In Proceedings of the XXI Algorithms and Technologies for Multispectral, Hyperspectral, and Ultraspectral Imagery, Baltimore, MD, USA, 21–23 April 2015; pp. 9472–9486.

- Kang, X.D.; Xiang, X.L.; Li, S.T. PCA-Based Edge-Preserving Features for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 7140–7151.

- Yang, W.K.; Wang, Z.Y.; Sun, C.Y. A collaborative representation based projections method for feature extraction. Pattern Recognit. 2015, 48, 20–27.

- Tenenbaum, J.B.; De Silva, V.; Langford, J.C. A global geometric framework for nonlinear dimensionality reduction. Science 2000, 290, 2319–2323.

- Hyperspectral Remote Sensing Scenes. Available online: http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes (accessed on 6 August 2020).

- Ou, D.P.; Tan, K.; Du, Q. A novel tri-training technique for the semi-supervised classification of hyperspectral images based on regularized local discriminant embedding feature extraction. Remote Sens. 2019, 11, 654.

- Zhai, H.; Zhang, H.; Zhang, L.; Li, P. Total variation regularized collaborative representation clustering with a locally adaptive dictionary for hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2018, 57, 166–180.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).