1. Introduction

Epidemiological surveillance is a crucial issue for food safety and security, public health and environmental protection. These problems especially concern viticulture, where the use of phytosanitary products can be intensive, staff exposure is frequent and the proximity between residents and vineyards is a cause for concern or even conflict.

Currently, crop protection practices in vineyards rely mostly on preventive chemical control. Spraying strategies are usually scheduled according to climatic risks and to the health history of the vineyard [1]. Over the last decade, several alternative spraying strategies have been developed to improve input efficiency. These strategies take into account local information about the health status of the vineyard [2]. However, the assessment of risks and health scores (such as the frequency and severity of the disease) still requires experts to scout vineyards for symptoms. This task is inherently time-consuming and labour-intensive and can only provide a partial and sparse picture of the vineyard’s health [3]. In this context, image processing has proven to be one of the most promising automated and non-intrusive techniques. At relatively low cost in terms of instrumentation, labour and time, it enables the observation of visible grapevine symptoms at the scale of the plant and over large acreages [4].

In the last decade, numerous studies have investigated the use of imaging for the automated detection of phytopathologies, including those affecting grapevine. This field can be divided into methods exploiting hyperspectral/multispectral images and methods exploiting Red Green Blue (RGB) images. The former mainly exploit the physico-chemical information of spectra, which reflects plant–pathogen interactions [5], while the latter take advantage of the geometrical, colorimetric or textural properties of visible symptoms.

Hyperspectral/multispectral imaging has proved to be a very efficient tool for detecting early symptoms, sometimes even before they become visible, in the case of grapevine diseases such as trunk diseases or flavescence dorée (a form of grapevine yellows). However, most of the promising results found in the literature were obtained only under controlled laboratory conditions. When replicated in field conditions, it proved very difficult to achieve satisfactory accuracy and to avoid confusion between pathologies, and even with abiotic stresses [6,7,8,9].

As for colour imaging, the simplest processing method is image binarisation, which consists of discriminating healthy tissues from abnormal ones using colorimetric thresholds or, more rarely, basic textural thresholds [10,11]. These approaches have numerous limitations. They are particularly sensitive to acquisition conditions, such as lighting, viewing angle and shadows, especially outdoors. They also perform poorly when confounding factors such as discolourations and other diseases are present in the scene. More elaborate methods rely on machine learning and especially deep learning. Machine learning-based methods exploit a wide range of classification algorithms such as Support Vector Machines (SVM), Random Forests or Bayesian classifiers. Usually, these classifiers are supplied with textural features, mainly Haralick indices, and with colour indices. Such applications deal with a wide variety of both arable and speciality crops [11,12,13,14]. With the recent development of Convolutional Neural Networks (CNNs) and Region-based CNNs (R-CNNs), disease classification and detection have become a very prolific research subject [15]. However, according to a recent review by Boulent et al. [16], both deep learning and conventional machine learning applications deal with the classification of images depicting isolated tissues (mainly leaves), centred on the symptoms and acquired in ideal laboratory conditions. So far, very few studies deal with the in-field detection of phytopathologies. The difference is that, in the latter case, the problem is not only to differentiate healthy tissues from specific symptoms, but to identify symptoms within a complex pattern of entangled tissues, likely presenting numerous abnormalities and confounding factors. Moreover, the resolution available in these cases is far lower, and the acquisition conditions are more variable and detrimental to model estimation. Finally, these methods are data-intensive and suffer from a lack of robustness (due to overfitting) and a lack of operability in farming applications.

Recent studies have proposed more operable strategies for the automatic detection of grapevine diseases. The authors of [17] compared methods based on Scale-Invariant Feature Transform (SIFT) encoding with deep learning strategies for the real-time detection of Esca and flavescence dorée. The authors of [18] proposed a deep learning training strategy for the detection of downy mildew in real conditions and showed the difficulty of building a robust and replicable model, as well as the need for accurate annotations of the database. Both papers show that the properties of the disease and the appearance of its symptoms often drive the processing strategy.

The authors previously presented a prototype approach for the detection of downy mildew (Plasmopara viticola), based on the statistical structure–colour modelling of proximal sensing RGB images [19]. While able to identify a great diversity of complex symptoms within the grapevine canopy, the method had poor precision due to the substantial number of potential false positives within a single image of a plant. The preliminary observations drawn from this previous work led the authors to propose a more in-depth and complete processing strategy to ensure greater accuracy in the detection of the disease.

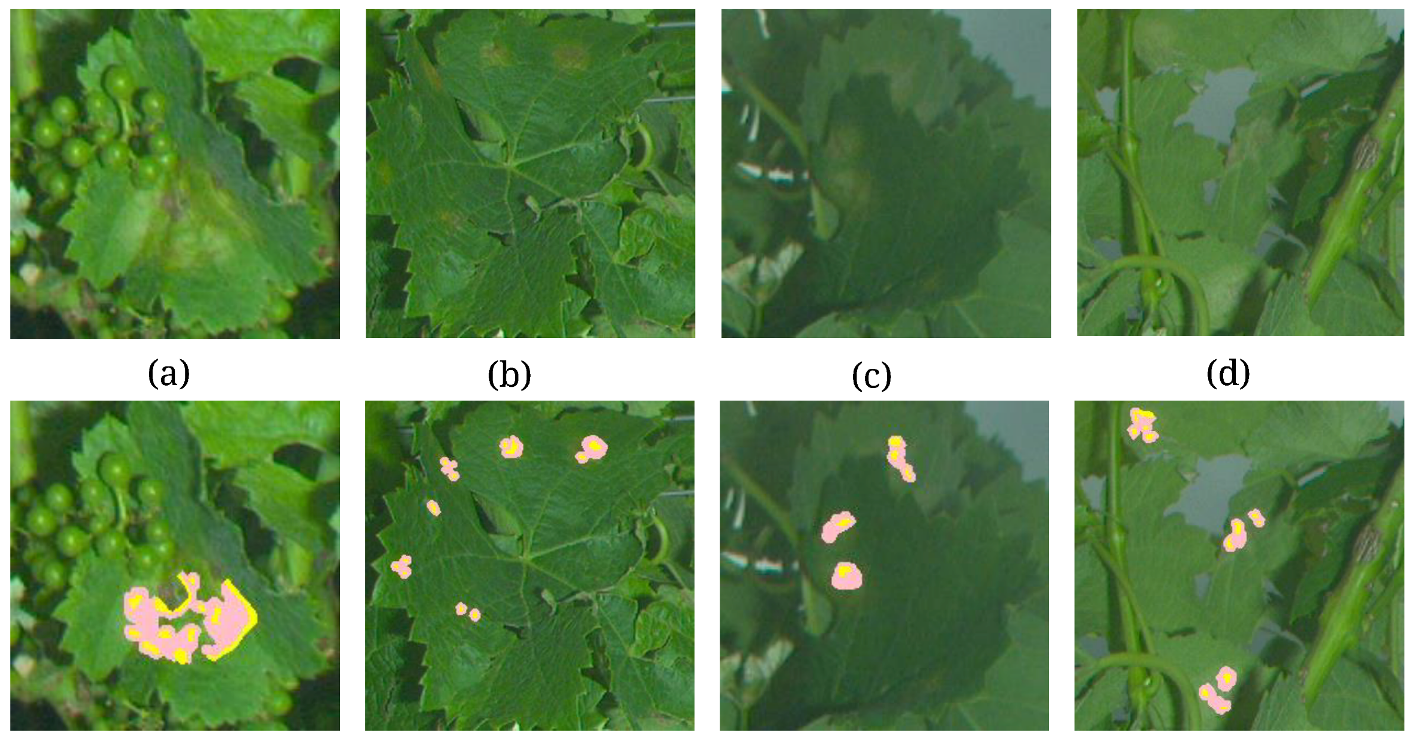

The purpose of this work is to propose methodological tools and an image processing chain, both dedicated to the detection of downy mildew (Plasmopara viticola) symptoms on proximal colour images acquired directly in the vineyard. These images are obtained with an autonomous embedded device able to operate in farming conditions with high throughput. This work shows the potential and benefits of “frugal” artificial vision for farming applications. The advantage of this strategy is that it is operable “on the go”, i.e., in the same vein as a phytopathology expert browsing the canopy in search of symptoms. Here, a high-throughput sensor acquires scenes of vegetation from farming equipment cruising at a conventional working speed. It is designed to identify small symptoms (<5 cm), which sometimes correspond to patches with a radius of only 12 pixels in the images, spread within a complex pattern of organs and textures. There is no manual extraction of the affected tissues from the plant, nor any specific targeting to build the database. Downy mildew is an interesting case study because it affects the majority of the world’s vineyards and represents a substantial financial and logistical cost as well as a major environmental impact. In addition, this pathology presents a wide variety of symptoms, with very discreet forms at early stages, which is an ideal context for developing versatile algorithms able to adjust to different environments while being trained on moderately sized databases.

The proposed methodology relies on the parametric modelling of structure–colour features within leaves affected by downy mildew as well as within healthy vine tissues. Based on these models, symptoms are detected through seed growth segmentation. The paper presents a new structure–colour representation called TC-LEST (Tensorial Colour Log-Euclidean Structure Tensor), which is an adaptation of the Colour Extended Log-Euclidean Structure Tensor representation previously presented in [20] for the pixel-wise classification of healthy vine organs. In addition, it introduces two statistical criteria (“Within” and “Between”), based on Mahalanobis distances between features and models. These criteria make it possible to determine whether samples belong to a given statistical model. Altogether, these contributions are gathered within a framework dedicated to the application of interest, i.e., the detection of downy mildew symptoms. This strategy is conceived as a relevant alternative to approaches relying on deep learning. The purpose is to produce efficient models from minimal data, resulting in agronomically interpretable indicators.

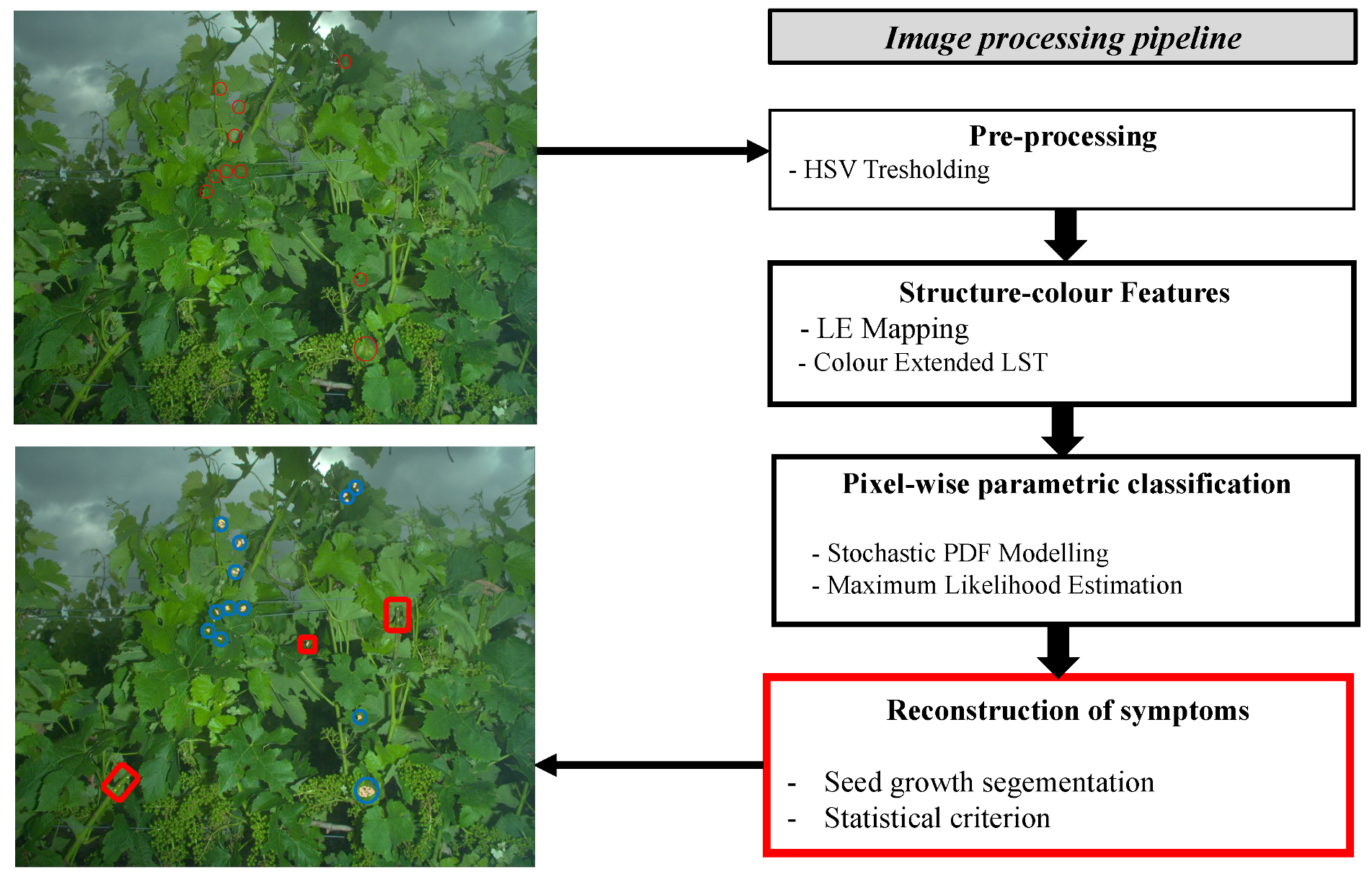

3. Image Processing Pipeline

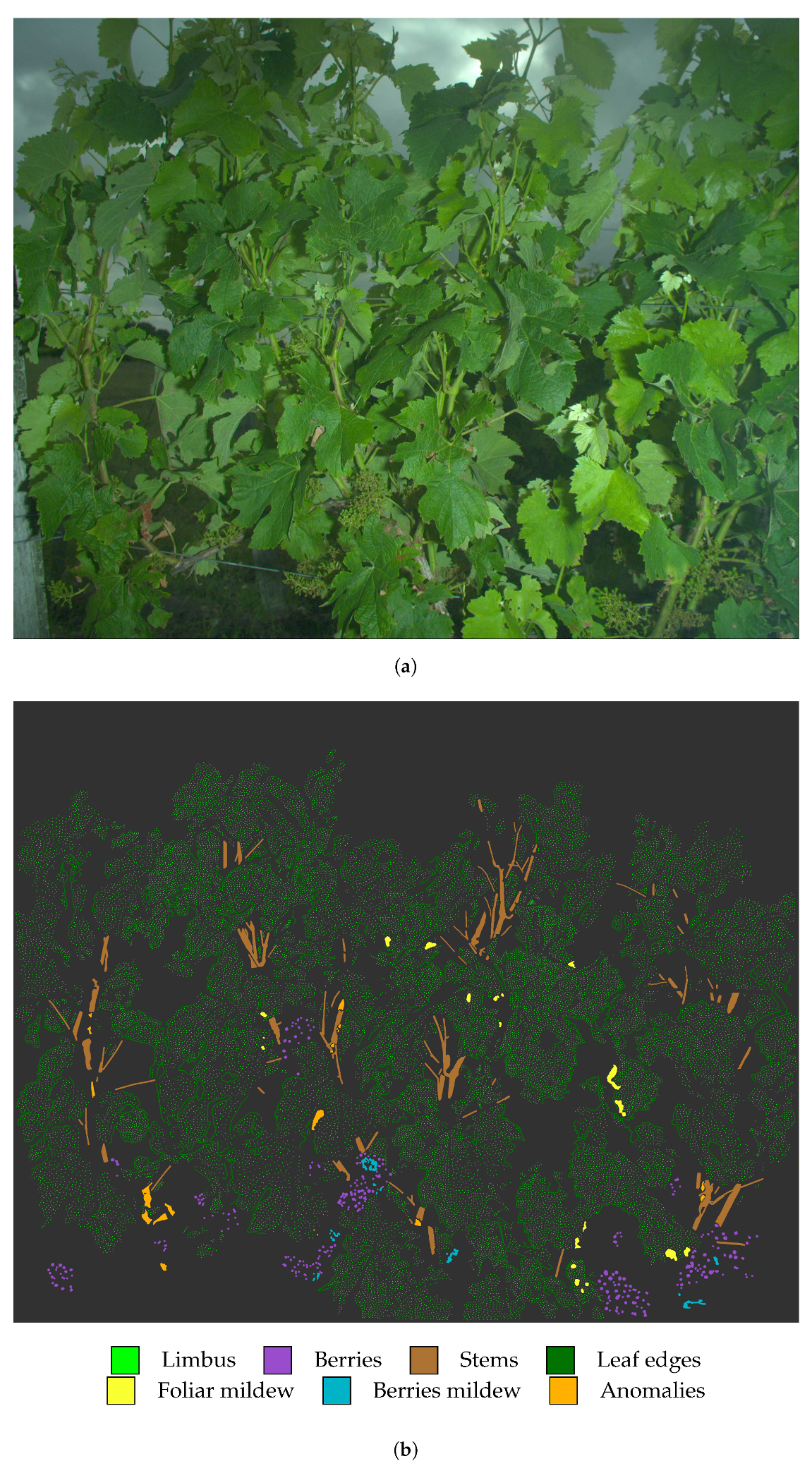

The image processing pipeline is designed to detect the presence of downy mildew symptoms among a wide range of organs and textures (leaves, fruits, stems, necrosis, etc.). The process is primarily based on the statistical modelling of local structure–colour properties in each of the considered classes. The statistical models make it possible to compute, for each pixel, its likelihood with respect to each class. These likelihoods are then evaluated through statistical tests, combined with spatial coherence criteria, within a seed growth segmentation that determines which pixels could constitute symptoms. The following subsections describe the main steps of the proposed processing chain (Figure 3).

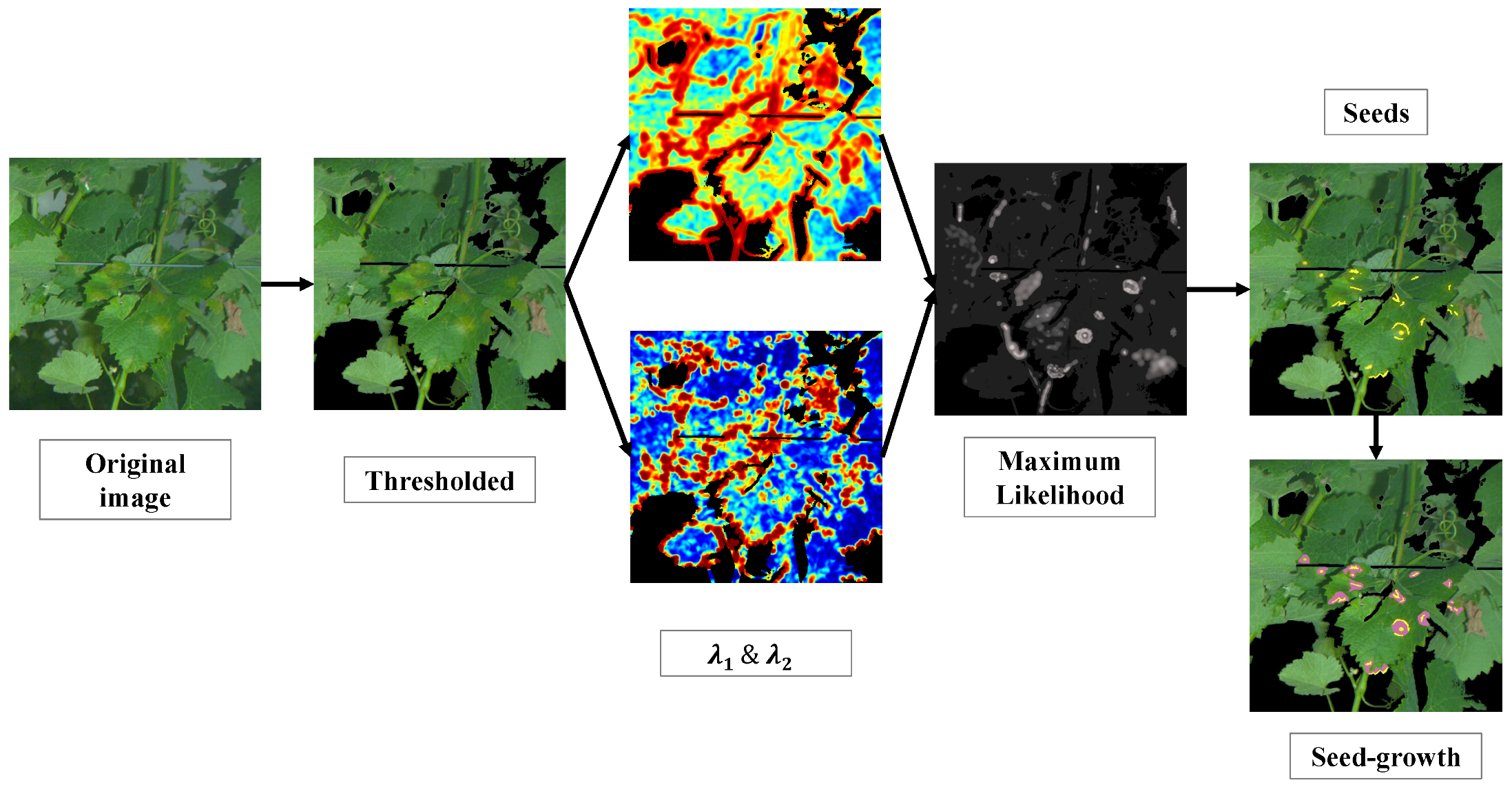

The first step of the pipeline is the thresholding of images in the Hue Saturation Value (HSV) colour space. The purpose is to discard, on the sole basis of colour, irrelevant pixels that do not constitute plant tissues (such as the sky, poles, wires, etc.). To a lesser extent, it also eliminates overly bright or overly dark pixels. This step is achieved simply by computing the histogram of hue values and applying Otsu’s threshold [22]. After this step, the core processes are applied only to a limited number of relevant pixels. Images are then processed through several filters to extract features, estimate models and, eventually, determine iteratively for each pixel whether it belongs to a downy mildew symptom. These processes are illustrated with a practical example in Figure 4. When applied to a single 5 Mpx image, the whole detection process has a moderate computational cost, with an execution time below 1 s. While estimating the models from a hundred 5 Mpx images can take several hours on a standard high-frequency CPU, the detection itself can be conducted in real time once this offline modelling phase is complete.
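The colour pre-filtering step can be sketched as follows. This is a minimal NumPy illustration, not the authors' implementation: it assumes RGB values in [0, 1], computes hue with the standard hexcone formula and applies Otsu's method to a 256-bin hue histogram. The side of the threshold that is kept (here, the low-hue side, which holds green, yellow and brown tissues as opposed to blue sky) and the bounds `s_min`, `v_min` and `v_max` used to drop grey, dark and saturated-white pixels are hypothetical choices.

```python
import numpy as np


def rgb_to_hsv(rgb):
    """Vectorised RGB -> (hue, saturation, value), all in [0, 1]; rgb has shape (..., 3)."""
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    v = rgb.max(axis=-1)
    chroma = v - rgb.min(axis=-1)
    safe = np.where(chroma > 0, chroma, 1.0)  # avoid division by zero on grey pixels
    h = np.where(v == r, ((g - b) / safe) % 6.0,
                 np.where(v == g, (b - r) / safe + 2.0, (r - g) / safe + 4.0)) / 6.0
    h = np.where(chroma > 0, h, 0.0)          # hue is undefined for greys: set to 0
    s = np.where(v > 0, chroma / np.where(v > 0, v, 1.0), 0.0)
    return h, s, v


def otsu_threshold(values, nbins=256):
    """Otsu threshold: maximise the between-class variance of a 1-D histogram."""
    hist, edges = np.histogram(values, bins=nbins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    w0 = np.cumsum(hist).astype(float)        # pixel count of the lower class
    w1 = w0[-1] - w0                          # pixel count of the upper class
    s0 = np.cumsum(hist * centers)
    m0 = s0 / np.maximum(w0, 1.0)
    m1 = (s0[-1] - s0) / np.maximum(w1, 1.0)
    between = w0 * w1 * (m0 - m1) ** 2
    k = int(np.argmax(between))
    return edges[k + 1]                       # values >= threshold form the upper class


def vegetation_mask(rgb, s_min=0.1, v_min=0.05, v_max=0.98):
    """Hypothetical pre-filter: Otsu on the hue of sufficiently coloured pixels."""
    h, s, v = rgb_to_hsv(rgb)
    coloured = (s > s_min) & (v > v_min) & (v < v_max)
    t = otsu_threshold(h[coloured])
    # ASSUMPTION: plant tissues lie on the low-hue side of the Otsu threshold.
    return coloured & (h < t)
```

On a real image, the resulting boolean mask would simply restrict the subsequent feature extraction to the retained pixels.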

The remainder of this section details the three major steps: LE mapping, modelling and the seed growth algorithm.

3.1. Joint Structure–Colour Features

3.1.1. Local Structure Tensor: A Tool to Extract and Represent Textural Information

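The LST is a reference tool [23] that extracts geometric information and orientation trends of local patterns within greyscale images. It is commonly defined as the local covariance of gradients [24,25]. The computation of an LST field is a two-step process, starting with the estimation of local gradients in the neighbourhood of every pixel of an image. Given a greyscale image $I$, the gradient image $\nabla I$ is estimated as

$$\nabla I(x,y) = \begin{bmatrix} I_x(x,y) & I_y(x,y) \end{bmatrix}^t = \begin{bmatrix} (I * G_x)(x,y) & (I * G_y)(x,y) \end{bmatrix}^t,$$

where $t$ denotes the matrix transpose operator; $*$ denotes convolution; and $I_x$ and $I_y$ represent, respectively, the estimates of the horizontal and vertical derivatives of image $I$, obtained by applying the Gaussian derivative kernels $G_x$ and $G_y$. The LST field is then computed by smoothing the outer product $\nabla I \, \nabla I^t$ with a Gaussian filter $G_{\sigma}$:

$$\mathbf{Y}(x,y) = \left(G_{\sigma} * \left(\nabla I \, \nabla I^t\right)\right)(x,y).$$

Thus, for every pixel $(x,y)$ there is a corresponding local structure tensor $\mathbf{Y}(x,y)$ in the form of a $2 \times 2$ symmetric matrix:

$$\mathbf{Y}(x,y) = \begin{bmatrix} G_{\sigma} * I_x^2 & G_{\sigma} * (I_x I_y) \\ G_{\sigma} * (I_x I_y) & G_{\sigma} * I_y^2 \end{bmatrix}(x,y).$$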
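The two-step LST computation can be sketched with NumPy alone. This is a minimal illustration, not the authors' code: the truncation of the kernels at 3σ, the reflective padding and the default scales `sigma_g` (gradient estimation) and `sigma_t` (tensor smoothing) are assumptions.

```python
import numpy as np


def gaussian_kernels(sigma):
    """1-D Gaussian kernel and its first derivative, truncated at 3 sigma."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    g = np.exp(-x ** 2 / (2 * sigma ** 2))
    g /= g.sum()
    dg = -x / sigma ** 2 * g                 # derivative of the normalised Gaussian
    return g, dg


def separable_filter(img, k_y, k_x):
    """Convolve along axis 0 (vertical) with k_y, then along axis 1 (horizontal) with k_x."""
    py, px = len(k_y) // 2, len(k_x) // 2
    padded = np.pad(img, ((py, py), (px, px)), mode="reflect")
    tmp = np.apply_along_axis(np.convolve, 0, padded, k_y, mode="valid")
    return np.apply_along_axis(np.convolve, 1, tmp, k_x, mode="valid")


def local_structure_tensor(img, sigma_g=1.0, sigma_t=2.0):
    """LST field of a greyscale image, returned with shape (H, W, 2, 2)."""
    g, dg = gaussian_kernels(sigma_g)
    i_x = separable_filter(img, k_y=g, k_x=dg)   # horizontal derivative I_x
    i_y = separable_filter(img, k_y=dg, k_x=g)   # vertical derivative I_y
    gt, _ = gaussian_kernels(sigma_t)
    # Smooth each entry of the outer product grad(I) grad(I)^t with G_sigma.
    j_xx = separable_filter(i_x * i_x, gt, gt)
    j_xy = separable_filter(i_x * i_y, gt, gt)
    j_yy = separable_filter(i_y * i_y, gt, gt)
    row0 = np.stack([j_xx, j_xy], axis=-1)
    row1 = np.stack([j_xy, j_yy], axis=-1)
    return np.stack([row0, row1], axis=-2)
```

For an image of vertical stripes, the horizontal gradient dominates, so the (0, 0) entry of each tensor is much larger than the (1, 1) entry.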

3.1.2. Log-Euclidean (LE) Mapping of LSTs

LSTs being covariance matrices, they belong to the Riemannian manifold of Symmetric Positive-Definite (SPD) matrices. Standard tools of Euclidean geometry and Gaussian statistics cannot be applied to such variables straightforwardly; their use must account for the properties of the Riemannian manifold [26]. The mapping of LSTs into the Log-Euclidean (LE) space, as proposed by [26], has enabled successful image classification in agricultural applications [19,20,27].

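The mapping of a tensor $\mathbf{Y}$ onto the LE space is achieved by computing its matrix logarithm. Let us consider the eigendecomposition of an LST $\mathbf{Y}$:

$$\mathbf{Y} = \mathbf{R}_{\theta} \boldsymbol{\Lambda} \mathbf{R}_{\theta}^t, \qquad \boldsymbol{\Lambda} = \begin{bmatrix} \lambda_1 & 0 \\ 0 & \lambda_2 \end{bmatrix},$$

where $\boldsymbol{\Lambda}$ is the diagonal matrix of eigenvalues, with $\lambda_1 \geq \lambda_2$, and $\mathbf{R}_{\theta}$ is the rotation matrix defined by its angle $\theta$. Then,

$$\log(\mathbf{Y}) = \mathbf{R}_{\theta} \begin{bmatrix} \log\lambda_1 & 0 \\ 0 & \log\lambda_2 \end{bmatrix} \mathbf{R}_{\theta}^t.$$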
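The matrix logarithm through eigendecomposition can be sketched in a few lines of NumPy. This is an illustrative sketch: clipping eigenvalues at a small `eps`, so that flat, gradient-free regions (where an LST is only semi-definite) do not produce log(0), is an assumption not stated in the text.

```python
import numpy as np


def log_euclidean(Y, eps=1e-12):
    """Matrix logarithm of symmetric positive (semi-)definite tensors of shape (..., 2, 2).

    Implements log(Y) = R diag(log l1, log l2) R^t from the eigendecomposition of Y.
    """
    lam, R = np.linalg.eigh(Y)                   # eigenvalues and orthonormal eigenvectors
    log_lam = np.log(np.maximum(lam, eps))       # ASSUMPTION: clip to keep the log finite
    # R * log_lam scales the columns of R, i.e. computes R diag(log_lam).
    return (R * log_lam[..., None, :]) @ np.swapaxes(R, -1, -2)
```

Exponentiating the eigenvalues of the result recovers the original tensor, which is a convenient sanity check.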

3.1.3. Rotation Invariance

Here, we propose to express a rotation-invariant form of Arsigny’s representation in the LE space. Indeed, orientation itself is not relevant information, as any given tissue, healthy or diseased, should be considered the same regardless of its orientation in the image. Since the diagonal matrix $\boldsymbol{\Lambda}$ of a given tensor holds the same set of eigenvalues whatever the rotation matrix $\mathbf{R}_{\theta}$, rotation invariance can be ensured by retaining only the eigenvalues.

Then, a rotation-invariant form [20] of the LE representation $\log(\mathbf{Y})$ can be easily expressed as

$$\mathbf{y}_{\mathrm{LE}} = \begin{bmatrix} \log\lambda_1 & \log\lambda_2 \end{bmatrix}^t.$$
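A short sketch shows why keeping only the log-eigenvalues yields rotation invariance: rotating a tensor as $\mathbf{R}\mathbf{Y}\mathbf{R}^t$ leaves its eigenvalues unchanged. The function name and the `eps` clipping are illustrative assumptions.

```python
import numpy as np


def rotation_invariant_le(Y, eps=1e-12):
    """Features [log l1, log l2] with l1 >= l2 for tensors of shape (..., 2, 2)."""
    lam = np.linalg.eigvalsh(Y)[..., ::-1]       # eigvalsh sorts ascending; reverse it
    return np.log(np.maximum(lam, eps))          # ASSUMPTION: clip to avoid log(0)
```

As a side effect, the sum of the two features equals the log-determinant of the tensor, a quantity used later to assess Gaussianity.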

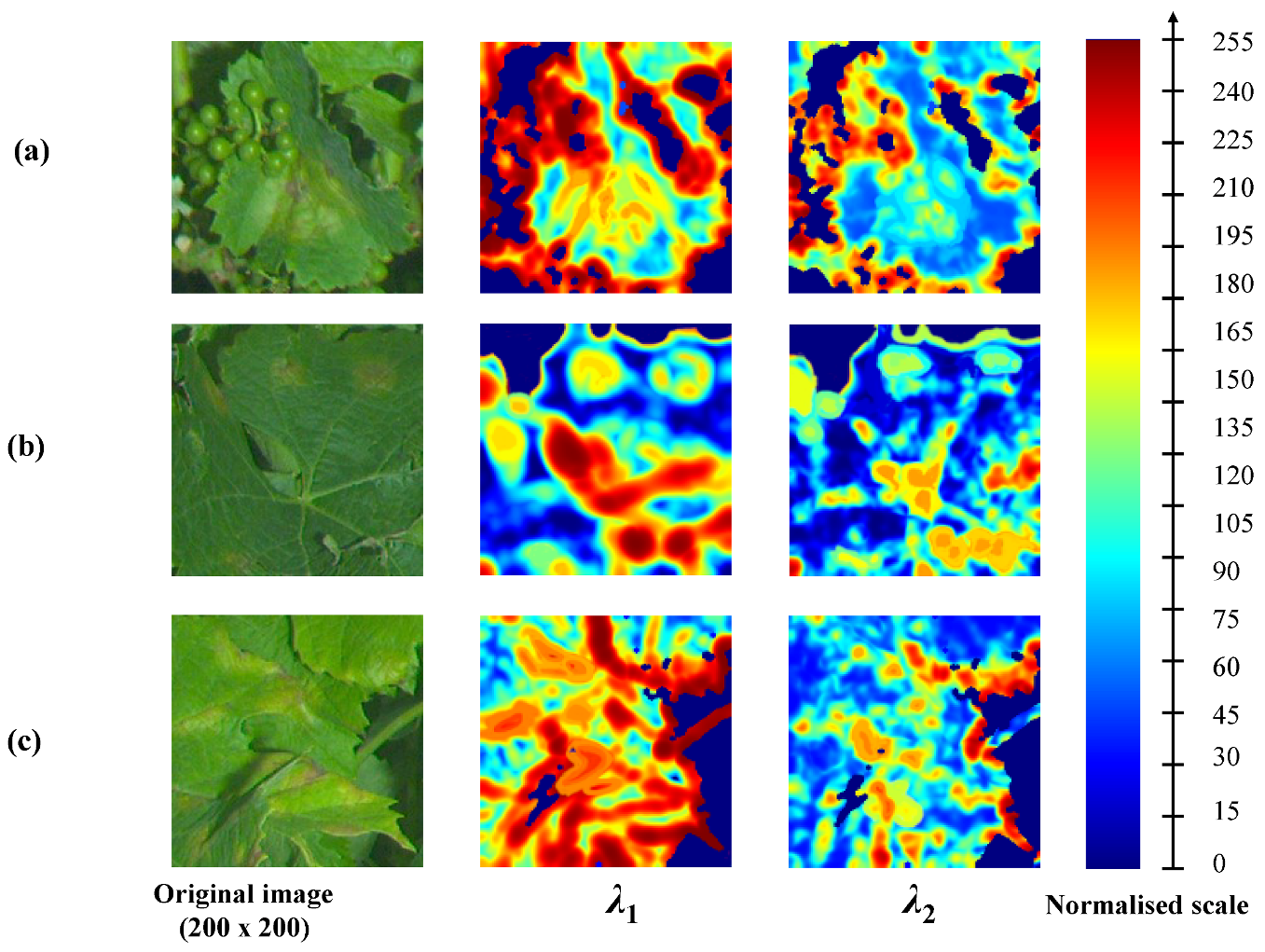

3.1.4. Describing Grapevine Healthy and Symptomatic Tissues with LSTs

Some textural differences between healthy limbus and downy mildew foliar symptoms can be highlighted through features derived from LSTs computed from the greyscale luminance image.

Figure 5 illustrates the discriminative potential of LSTs for different facies of the disease. The figure presents the eigenvalues of the LST field mapped into the LE space, normalised to an 8-bit scale. Three examples are presented: (a) a large, circular, advanced oil spot; (b) a corolla of small early oil spots; and (c) irregular symptoms on leaf edges. In case (a), the spot is easy to discern: its medium eigenvalues ([~100, ~120]) differ greatly from those of the smooth limbus, which presents low values for both $\log\lambda_1$ and $\log\lambda_2$. In case (b), it is also possible to distinguish some of the symptomatic spots. However, the eigenvalues, especially in the centre of the spots, can easily be mistaken for those of some leaf edges or veinlets. In case (c), the interpretation of eigenvalues is more complex due to the irregular shape of the symptoms. The inner parts of the symptoms present properties and eigenvalues similar to the previous cases. However, unlike in cases (a) and (b), the texture in (c) is not isotropic; in the parts adjacent to edges, the structures present dominant orientations and are indistinguishable from healthy leaf edges or boundaries between organs. Therefore, structural information alone is not always sufficient to properly discriminate healthy grapevine tissues from pathological ones [19].

3.2. Joint Representation of Structure and Colour

Texture and colour are two naturally related properties. It is this relation that enables the human psychovisual system to construct images [28]. Therefore, several methods have been developed to extract and represent texture–colour features. In particular, various colour-extended LSTs, or LSTs defined within colour spaces, have proved relevant for image processing applications [29,30,31,32].

Considering the properties of existing colour LSTs, and adapting them to the proposed modelling and likelihood-based decisions, a novel structure–colour representation is introduced: TC-LEST (Tensorial Colour Log-Euclidean Structure Tensor). TC-LEST is a refinement of the Colour Extended Log-Euclidean Structure Tensor, a representation previously proposed in [20].

TC-LEST is obtained by mapping LSTs into the Log-Euclidean metric space and then concatenating local colorimetric information. The originality of TC-LEST is that the colour components are expressed as a tensor. The result is a low-dimensional vectorial representation jointly describing structure and colour, which can be modelled and exploited through common statistical and Bayesian tools.

Tensorial Representation of Colour in the HSL Colour Space: TC-LEST Representation

An astute way to combine colorimetric data with structural information is inspired by the authors of [31]. They proposed to transform the RGB triplet into a Hue, Saturation, Luminance (HSL) triplet before representing it by an ellipse or, equivalently, by a tensor, i.e., an SPD matrix $\mathbf{Z}$. The ellipse is constructed so that its orientation $\theta_Z$, eccentricity and magnitude are given by the H, S and L channels, respectively. For the tensor form $\mathbf{Z}$, the orientation $\theta_Z$ and the eigenvalues $\lambda_1^Z$ and $\lambda_2^Z$ are deduced from the H, S and L channels accordingly. The tensorial representation of colours is then expressed as

$$\mathbf{Z} = \mathbf{R}_{\theta_Z} \begin{bmatrix} \lambda_1^Z & 0 \\ 0 & \lambda_2^Z \end{bmatrix} \mathbf{R}_{\theta_Z}^t.$$

In this form, the computation of dissimilarity measures and likelihoods involving colour components is more relevant and more consistent with the statistical models of LSTs.

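Subsequently, colour is processed with respect to the properties of SPD matrices, in three steps. (i) The matrix $\mathbf{Z}$ is smoothed by convolution with a Gaussian kernel $G_{\sigma_Z}$: $\mathbf{Z}' = G_{\sigma_Z} * \mathbf{Z}$. (ii) Then, $\mathbf{Z}'$ is mapped into the LE space, similarly to LSTs: $\log(\mathbf{Z}')$. (iii) This matrix is transformed into a 3-dimensional vector, $\mathbf{z}_{\mathrm{LE}} = \begin{bmatrix} \log(\mathbf{Z}')_{11} & \log(\mathbf{Z}')_{22} & \sqrt{2}\,\log(\mathbf{Z}')_{12} \end{bmatrix}^t$ (consistently with the work in [26]).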

Unlike the structural component, the colour tensor should not be made rotation invariant, as this would discard the information contained in its orientation $\theta_Z$, i.e., the hue.

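Eventually, the TC-LEST representation is obtained by concatenating structure and colour into a single 5-dimensional vector:

$$\mathbf{y} = \begin{bmatrix} \log\lambda_1 & \log\lambda_2 & \log(\mathbf{Z}')_{11} & \log(\mathbf{Z}')_{22} & \sqrt{2}\,\log(\mathbf{Z}')_{12} \end{bmatrix}^t \in \mathbb{R}^5,$$

where $\lambda_1 \geq \lambda_2$ are the eigenvalues of the LST and $\mathbf{Z}'$ is the smoothed colour tensor.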
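The assembly of a TC-LEST vector can be sketched as follows. The exact HSL-to-tensor formulas are not reproduced here, so `colour_tensor` is a hypothetical mapping that only follows the stated principle (orientation from hue, eccentricity from saturation, magnitude from luminance); mapping hue to an angle in [0, π) keeps the circular nature of hue, since an ellipse orientation is π-periodic. The smoothing step (i) is assumed to have been applied beforehand, e.g. entry-wise with a Gaussian filter. The √2 weight on the off-diagonal term makes the Euclidean norm of the vector equal to the Frobenius norm of the matrix.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)


def colour_tensor(h, s, l, eps=1e-3):
    """HYPOTHETICAL HSL -> SPD tensor mapping (not the paper's exact formulas)."""
    theta = np.pi * h                        # hue -> orientation in [0, pi)
    lam1 = l + eps                           # luminance -> magnitude
    lam2 = l * (1.0 - s) + eps               # saturation -> eccentricity
    c, sn = np.cos(theta), np.sin(theta)
    z11 = lam1 * c ** 2 + lam2 * sn ** 2     # entries of R diag(lam1, lam2) R^t
    z22 = lam1 * sn ** 2 + lam2 * c ** 2
    z12 = (lam1 - lam2) * c * sn
    return np.stack([np.stack([z11, z12], -1), np.stack([z12, z22], -1)], -2)


def log_spd_2x2(Z):
    """Matrix logarithm of SPD tensors of shape (..., 2, 2)."""
    lam, R = np.linalg.eigh(Z)
    return (R * np.log(lam)[..., None, :]) @ np.swapaxes(R, -1, -2)


def vec_le(L):
    """Norm-preserving vectorisation [L11, L22, sqrt(2) L12]."""
    return np.stack([L[..., 0, 0], L[..., 1, 1], SQRT2 * L[..., 0, 1]], -1)


def tc_lest(lst_features, Z_smoothed):
    """Concatenate rotation-invariant structure (2-D) and LE colour (3-D) into 5-D."""
    return np.concatenate([lst_features, vec_le(log_spd_2x2(Z_smoothed))], axis=-1)
```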

3.3. Modelling Structure-Colour Features

Several authors have successfully modelled different classes of texture with Gaussian probability density functions describing the distribution of LSTs within each class. These models have been considered both for the matrix form, with Riemannian Gaussian models, and for the vectorial form in the Log-Euclidean space, and have been applied to the classification of remotely sensed natural textures [20,33,34]. In this article, we propose to evaluate such models in the LE space for structure–colour variables derived from proximal sensing images.

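Within each of the considered classes, the distribution of colour-extended LSTs can be described in the LE space by a multivariate Gaussian function. For a given class, the probability density function is defined by

$$p(\mathbf{y} \mid \bar{\mathbf{y}}, \boldsymbol{\Sigma}) = \frac{1}{(2\pi)^{N/2} \, |\boldsymbol{\Sigma}|^{1/2}} \exp\left(-\frac{1}{2} (\mathbf{y} - \bar{\mathbf{y}})^t \boldsymbol{\Sigma}^{-1} (\mathbf{y} - \bar{\mathbf{y}})\right),$$

where $\bar{\mathbf{y}}$ denotes the mean vector of size $N$, $\boldsymbol{\Sigma}$ its $N \times N$ covariance matrix and $|\boldsymbol{\Sigma}|$ the corresponding determinant.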

By nature, texture classes are heterogeneous. Assuming that each class results from the grouping of sub-classes (e.g., leaves = upper limbus + lower limbus), it is relevant to consider Gaussian mixtures for stochastic modelling. The probability density function corresponding to a mixture of $K$ multivariate Gaussian distributions is defined by

$$p(\mathbf{y}) = \sum_{k=1}^{K} \omega_k \, p(\mathbf{y} \mid \bar{\mathbf{y}}_k, \boldsymbol{\Sigma}_k), \qquad \sum_{k=1}^{K} \omega_k = 1,$$

where $\omega_k$ denotes the weight of the $k$-th Gaussian component of the mixture, and $\bar{\mathbf{y}}_k$ and $\boldsymbol{\Sigma}_k$ denote, respectively, the barycentre and the covariance matrix of the $k$-th component.
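Evaluating these densities is best done in log space. The sketch below computes the multivariate Gaussian log-density through a Cholesky factorisation (which also yields the squared Mahalanobis distance and the log-determinant) and combines mixture components with the log-sum-exp trick to avoid underflow. It is an illustrative NumPy sketch, not the authors' implementation.

```python
import numpy as np


def gaussian_logpdf(Y, mean, cov):
    """Log of the multivariate normal density for samples Y of shape (n, N)."""
    N = mean.shape[0]
    diff = Y - mean
    L = np.linalg.cholesky(cov)                    # cov = L L^t
    sol = np.linalg.solve(L, diff.T)               # L^{-1} (y - mean), shape (N, n)
    d2 = np.sum(sol ** 2, axis=0)                  # squared Mahalanobis distances
    logdet = 2.0 * np.sum(np.log(np.diag(L)))      # log |cov|
    return -0.5 * (N * np.log(2.0 * np.pi) + logdet + d2)


def gmm_logpdf(Y, weights, means, covs):
    """Log-density of a K-component Gaussian mixture, using log-sum-exp."""
    comps = np.stack([np.log(w) + gaussian_logpdf(Y, m, c)
                      for w, m, c in zip(weights, means, covs)], axis=0)  # (K, n)
    cmax = comps.max(axis=0)
    return cmax + np.log(np.sum(np.exp(comps - cmax), axis=0))
```

A mixture of identical components reduces to the single Gaussian, which gives a simple consistency check.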

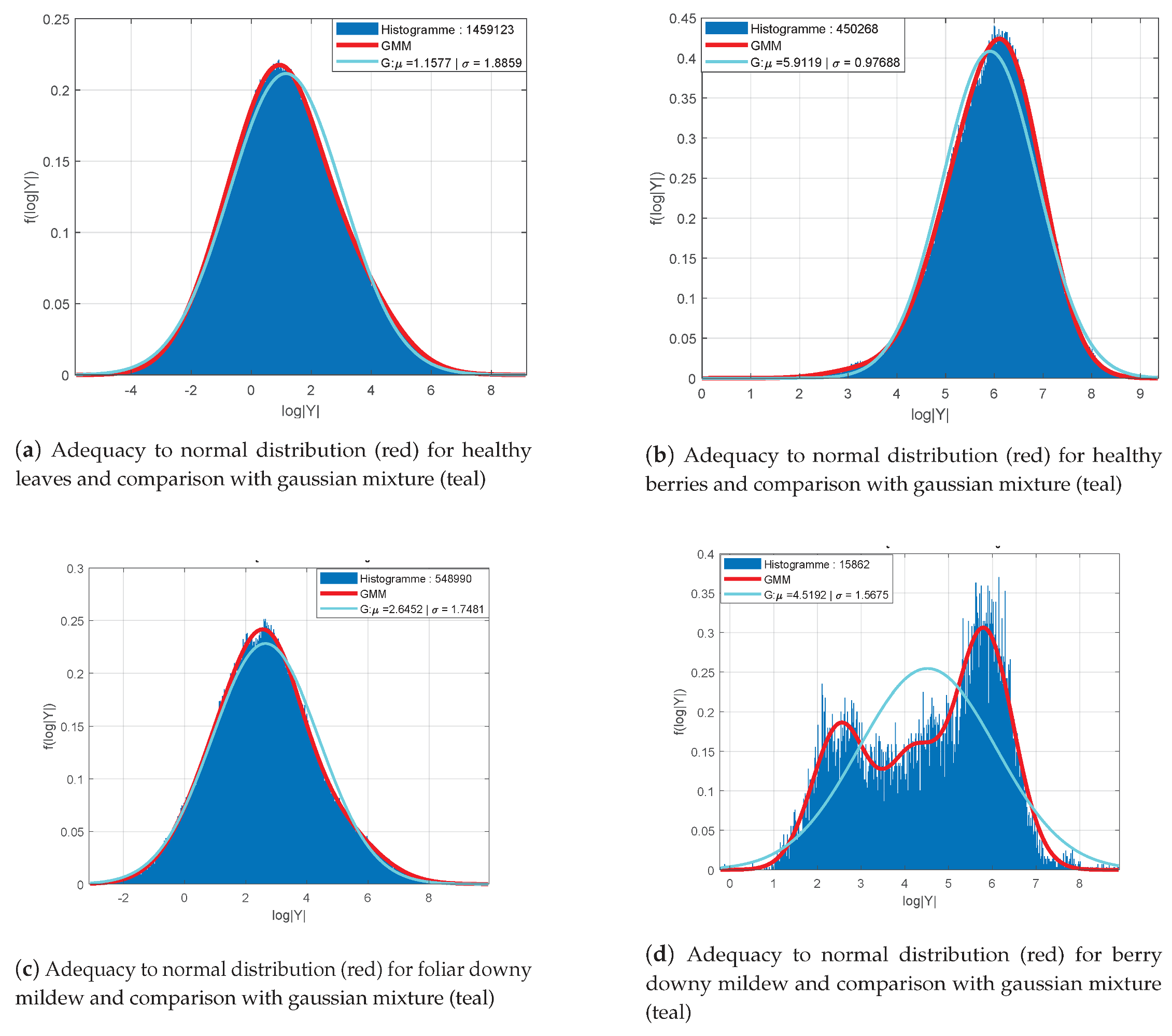

As the joint structure–colour representations presented above lie in a high-dimensional (5-D) LE space, checking whether their distributions fit a multivariate Gaussian probability density is not trivial. A solution is to rely on a remarkable property of the mapping of matrices from the Riemannian manifold to the LE space. Saïd et al. [33] state the following property: if a set of matrices is distributed according to a Riemannian Gaussian distribution, the distribution of their log-determinants is Gaussian as well, and their vectorial representations in the LE space follow a multivariate Gaussian distribution.

Figure 6 presents the distribution of the log-determinants of LSTs for the classes “healthy leaves” (a), “healthy berries” (b), “foliar symptoms of downy mildew” (c) and “symptoms on berries” (d). Samples are collected from 100 images, with a number of samples per class that depends on the relative abundance of the classes within the database. The histograms of the log-determinants computed from the samples are shown in blue, together with the Gaussian distributions of equivalent mean and standard deviation, represented in red. Gaussian mixture distributions are shown in teal for the foliar symptoms (c) and the symptoms on berries (d).

Apart from the class “symptoms on berries” (Figure 6d), all distributions can be approximated by Gaussian probability density functions with their respective empirical mean and variance as parameters. The distribution for the class “symptoms on berries”, however, can be represented by a mixture of three Gaussian functions (Figure 6d). In addition, the other classes seem to be better represented with mixture models: their histograms present some moderate asymmetries and an offset between the mode of the distribution and the empirical mean. An example of the fitting improvement provided by a mixture model compared to a single Gaussian model is shown for the class “foliar symptoms of downy mildew” in Figure 6c.
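The comparison between a single Gaussian and a mixture fitted to log-determinants can be reproduced in spirit with a plain one-dimensional EM algorithm. In this sketch, the log-determinants would be computed per pixel as $\log\lambda_1 + \log\lambda_2$ (or with `np.linalg.slogdet`); the quantile initialisation, the number of iterations and the synthetic bimodal data of the check are assumptions, not the paper's data or settings.

```python
import numpy as np


def fit_gmm_1d(x, K=2, n_iter=200):
    """Plain EM for a 1-D Gaussian mixture, initialised on quantiles (assumption)."""
    x = np.asarray(x, dtype=float)
    mu = np.quantile(x, (np.arange(K) + 0.5) / K)
    var = np.full(K, x.var())
    w = np.full(K, 1.0 / K)
    for _ in range(n_iter):
        # E-step: responsibilities, computed in log space for stability.
        logp = (np.log(w) - 0.5 * np.log(2.0 * np.pi * var)
                - 0.5 * (x[:, None] - mu) ** 2 / var)        # shape (n, K)
        logp -= logp.max(axis=1, keepdims=True)
        r = np.exp(logp)
        r /= r.sum(axis=1, keepdims=True)
        # M-step: update weights, means and variances.
        nk = r.sum(axis=0)
        w = nk / x.size
        mu = (r * x[:, None]).sum(axis=0) / nk
        var = (r * (x[:, None] - mu) ** 2).sum(axis=0) / nk + 1e-9
    return w, mu, var


def mixture_loglik(x, w, mu, var):
    """Total log-likelihood of x under a 1-D mixture (K = 1 gives a single Gaussian)."""
    p = w * np.exp(-0.5 * (x[:, None] - mu) ** 2 / var) / np.sqrt(2.0 * np.pi * var)
    return np.log(p.sum(axis=1)).sum()
```

On asymmetric or multimodal samples, the mixture log-likelihood exceeds that of the single Gaussian fitted with the empirical mean and variance.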

The TC-LEST representation is obtained by concatenating the vectorial forms of LSTs in the LE space with colorimetric information. Since the structural components have been examined above, demonstrating the adequacy of these new variables only requires examining their colorimetric components. Like LSTs, the colour component of TC-LEST is a vectorial form resulting from the LE transform of a matrix that holds the same properties as a covariance matrix. Therefore, as for LSTs, the distribution of its log-determinants indicates its adequacy to multivariate Gaussian probability functions.

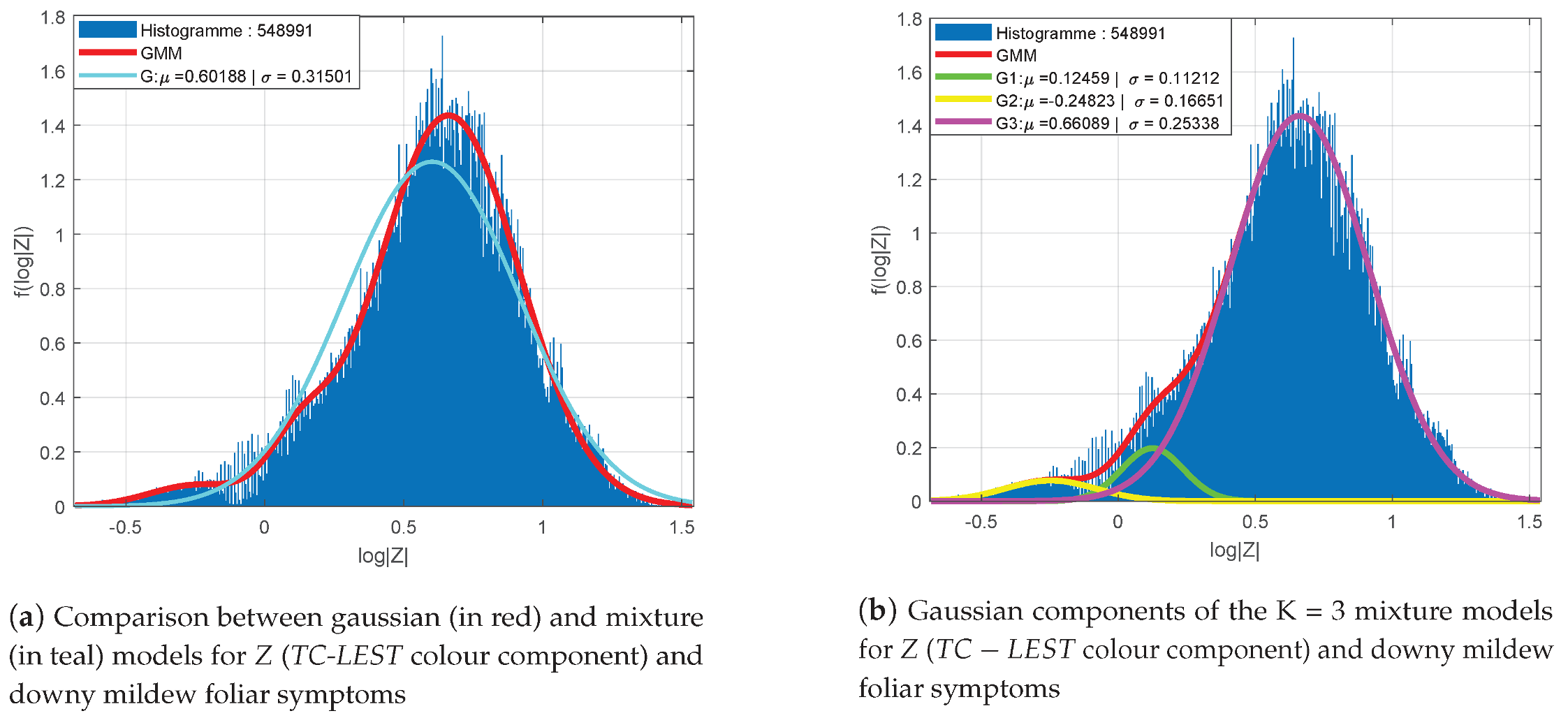

Figure 7 presents the distribution of the log-determinants of the colour component of TC-LEST for foliar symptoms and its adequacy to a Gaussian probability density function (a) and to a mixture model (b). In this case, the colour component seems globally adequate to a Gaussian model, yet the model struggles to represent the mode and the tails. The mixture model is therefore much more appropriate. In conclusion, the structure–colour representation can be modelled in each class of interest with Gaussian mixture probability density functions.

3.4. Seed Growth Segmentation

The use of this segmentation method is motivated by the analogy between “artificial vision” and the human psycho-visual perception system [35]. Indeed, although downy mildew symptoms present some very distinctive properties at the pixel scale, it is mainly a larger pattern, i.e., a “spot”, that an observer recognises. In addition, some elements within symptoms are not easily differentiable from confounding factors. To address this problem, seed growth segmentation accounts for “spatial coherence”. The purpose is to reconstruct symptoms as continuous connected components made of distinctive pixels arranged coherently in a spatial pattern.

Seed growth [36] is a segmentation method intended to recover connected regions from a pixel-based classification. This method hinges on two major steps. The first consists of detecting seeds, i.e., the most characteristic pixels within the objects to recover. The seeds are detected by applying restrictive criteria to the decision outcomes of the classification process, in order to maximise the probability that the selected seeds actually fit the model. The second step consists of aggregating new pixels to the seeds using more permissive criteria, under the condition of connectivity to the seeds. This second step propagates confident decisions in order to recover the targeted areas as completely as possible, i.e., foliar symptoms of downy mildew in this case.

Here, the criteria used are related to the likelihoods between the local structure–colour properties of pixels and the models of the considered classes. They evaluate Mahalanobis distances [37] between the features describing pixels and the barycentres of the models. These distances convey information very similar to likelihoods: for a Gaussian model, the log-likelihood of a feature equals minus half its squared Mahalanobis distance to the barycentre, up to terms that depend only on the covariance matrix. However, in practice, distances are much simpler to evaluate and compare than likelihoods. Two such criteria are proposed and combined: a “within” criterion and a “between” criterion, described in the following.

3.4.1. “Within” Criterion: Retaining the Most Relevant Pixels of Downy Mildew Symptoms

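The within criterion assesses the relevance of a pixel to the class “foliar symptoms”. It consists of evaluating the Mahalanobis distance between a feature $\mathbf{y}$ describing a pixel and the barycentre of the model describing the class of downy mildew foliar symptoms.

If a pixel described by $\mathbf{y}$ belongs to the mildew class then, under the normality hypothesis,

$$\mathbf{y} \sim \mathcal{N}(\bar{\mathbf{y}}_m, \boldsymbol{\Sigma}_m),$$

$\bar{\mathbf{y}}_m$ and $\boldsymbol{\Sigma}_m$ being, respectively, the barycentre and the covariance matrix of the mildew model, and the squared Mahalanobis distance being defined as [37]

$$d_m^2(\mathbf{y}) = (\mathbf{y} - \bar{\mathbf{y}}_m)^t \boldsymbol{\Sigma}_m^{-1} (\mathbf{y} - \bar{\mathbf{y}}_m).$$

Following this hypothesis,

$$d_m^2(\mathbf{y}) \sim \chi^2_N,$$

where $\chi^2_N$ is a chi-square distribution with $N$ degrees of freedom, $N$ being the dimension of the considered descriptors.

A distance threshold $\tau_\alpha$ is then determined so that $P\left(d_m^2(\mathbf{y}) \leq \tau_\alpha\right) = \alpha$, where $\alpha \in (0, 1)$ and $\tau_\alpha$ is the $\alpha$-quantile of the law $\chi^2_N$. Discarding instances such that $d_m^2(\mathbf{y}) > \tau_\alpha$ is equivalent to retaining only the proportion $\alpha$ of the most significant instances, i.e., those closest to the barycentre of the mildew model:

$$d_m^2(\mathbf{y}) \leq \tau_\alpha.$$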
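The within criterion can be sketched without external statistics libraries: the chi-square CDF is the regularised lower incomplete gamma function $P(N/2, x/2)$, evaluated here with its power series, and the quantile $\tau_\alpha$ is obtained by bisection. In practice, a library quantile function such as SciPy's `chi2.ppf` would normally be used instead. The function names are illustrative, and the check draws synthetic standard-normal descriptors to confirm that about a proportion α of them is retained.

```python
import math

import numpy as np


def chi2_cdf(x, k):
    """Chi-square CDF with k dof, via the series of P(k/2, x/2)."""
    if x <= 0:
        return 0.0
    a, z = k / 2.0, x / 2.0
    term = 1.0 / a
    total = term
    n = 1
    while term > 1e-15 * total:
        term *= z / (a + n)
        total += term
        n += 1
    return math.exp(a * math.log(z) - z - math.lgamma(a)) * total


def chi2_ppf(q, k, tol=1e-10):
    """Quantile tau such that chi2_cdf(tau, k) = q, found by bisection."""
    lo, hi = 0.0, k + 20.0 * math.sqrt(2.0 * k) + 50.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if chi2_cdf(mid, k) < q:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def within_mask(Y, mean, cov, alpha):
    """Keep descriptors whose squared Mahalanobis distance to the mildew barycentre
    does not exceed the alpha-quantile of chi2_N, N being the descriptor dimension."""
    diff = Y - mean
    d2 = np.einsum("ij,jk,ik->i", diff, np.linalg.inv(cov), diff)
    return d2 <= chi2_ppf(alpha, Y.shape[1]), d2
```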

3.4.2. “Between” Criterion: Discarding Uncertain Pixels

In some cases, a healthy tissue presenting anomalies may display properties similar to downy mildew symptoms. Likewise, some pixels located at the edges of symptoms may resemble healthy pixels in terms of structure–colour properties. In these cases, the likelihoods of both the healthy and the symptomatic classes can be high, and the first criterion cannot prevent such errors.

The “between” criterion therefore compares the distances between a descriptor and the barycentres of the models describing, respectively, a healthy class and the symptomatic class. It identifies the pixels for which there is no significant difference in likelihood between the two classes. Since symptoms are rare, whenever there is a reasonable doubt between two classes, it is more relevant to discard such instances from the mildew class.

This criterion consists of determining a minimum ratio $r$ such that, if the observed ratio between the squared Mahalanobis distances to the healthy model ($d_h^2$) and to the mildew model ($d_m^2$) is lower than this threshold, the instance is discarded from the mildew class:

$$\frac{d_h^2(\mathbf{y})}{d_m^2(\mathbf{y})} < r \;\Rightarrow\; \mathbf{y} \text{ is discarded from the mildew class}.$$

Only values $r \geq 1$ are considered, so that the decision criterion always ensures that the maximum likelihood is obtained for the mildew class.
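The between criterion reduces to a ratio test on squared Mahalanobis distances. In this sketch, when several healthy classes are modelled, the distance to the nearest healthy barycentre is used; this choice, the helper names and the small guard against division by zero are assumptions made for illustration.

```python
import numpy as np


def mahalanobis2(Y, mean, cov):
    """Squared Mahalanobis distances of the rows of Y to a model (mean, cov)."""
    diff = Y - mean
    return np.einsum("ij,jk,ik->i", diff, np.linalg.inv(cov), diff)


def nearest_healthy_d2(Y, healthy_models):
    """ASSUMPTION: use the closest healthy model among (mean, cov) pairs."""
    return np.min([mahalanobis2(Y, m, c) for m, c in healthy_models], axis=0)


def between_mask(d2_mildew, d2_healthy, r):
    """Keep pixels whose healthy/mildew squared-distance ratio is at least r (r >= 1)."""
    if r < 1.0:
        raise ValueError("r must be >= 1 so that the mildew model remains the closest")
    ratio = d2_healthy / np.maximum(d2_mildew, 1e-12)
    return ratio >= r
```

For three points on a line between a mildew barycentre at the origin and a healthy barycentre at (2, 0), only the point on the mildew barycentre passes with r = 2; the midpoint, equidistant from both models, is discarded as doubtful.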

3.4.3. The Seed-Growth Process

To summarise the approach, conditions (i), given by Equation (16), are checked for all pixels of a given image. Pixels that satisfy them are considered as seeds. Then, conditions (ii), given by Equation (17), are checked iteratively. At each iteration, the pixels satisfying the growth conditions are incorporated into the seeds for the next step. The process stops when no more pixels satisfy the conditions.

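(i) Seed detection:

$$d_m^2(\mathbf{y}) \leq \tau_{\alpha_s} \quad \text{and} \quad \frac{d_h^2(\mathbf{y})}{d_m^2(\mathbf{y})} \geq r_s \tag{16}$$

(ii) Seed growth:

$$d_m^2(\mathbf{y}) \leq \tau_{\alpha_g} \quad \text{and} \quad \frac{d_h^2(\mathbf{y})}{d_m^2(\mathbf{y})} \geq r_g \quad \text{and} \quad \text{the pixel is connected to the current seeds} \tag{17}$$

with $\alpha_g \geq \alpha_s$ and $1 \leq r_g \leq r_s$, where $d_m^2$ and $d_h^2$ are the squared Mahalanobis distances to the mildew and healthy models and $\tau_\alpha$ is the $\alpha$-quantile of $\chi^2_N$.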
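The iterative growth can be implemented as a breadth-first traversal from the seeds, which reaches the same fixed point as repeatedly adding connected pixels until none satisfies the growth conditions. This sketch assumes 8-connectivity and takes precomputed squared-distance maps as input; the thresholds `tau_s`, `r_s` (restrictive, seeds) and `tau_g`, `r_g` (relaxed, growth) and the function names are hypothetical.

```python
from collections import deque

import numpy as np


def seed_growth(seed_mask, grow_mask):
    """Add to the seeds every pixel of grow_mask that is 8-connected to them."""
    h, w = seed_mask.shape
    region = seed_mask.copy()
    queue = deque(zip(*np.nonzero(seed_mask)))
    neighbours = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]
    while queue:
        i, j = queue.popleft()
        for di, dj in neighbours:
            ni, nj = i + di, j + dj
            if 0 <= ni < h and 0 <= nj < w and grow_mask[ni, nj] and not region[ni, nj]:
                region[ni, nj] = True
                queue.append((ni, nj))
    return region


def detect_mildew(d2_m, d2_h, tau_s, r_s, tau_g, r_g):
    """Seeds with restrictive criteria (i), growth with relaxed criteria (ii)."""
    ratio = d2_h / np.maximum(d2_m, 1e-12)
    seeds = (d2_m <= tau_s) & (ratio >= r_s)
    grow = (d2_m <= tau_g) & (ratio >= r_g)
    return seed_growth(seeds, grow | seeds)
```

Pixels that satisfy the relaxed criteria but are not connected to any seed are never added, which is what enforces spatial coherence.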

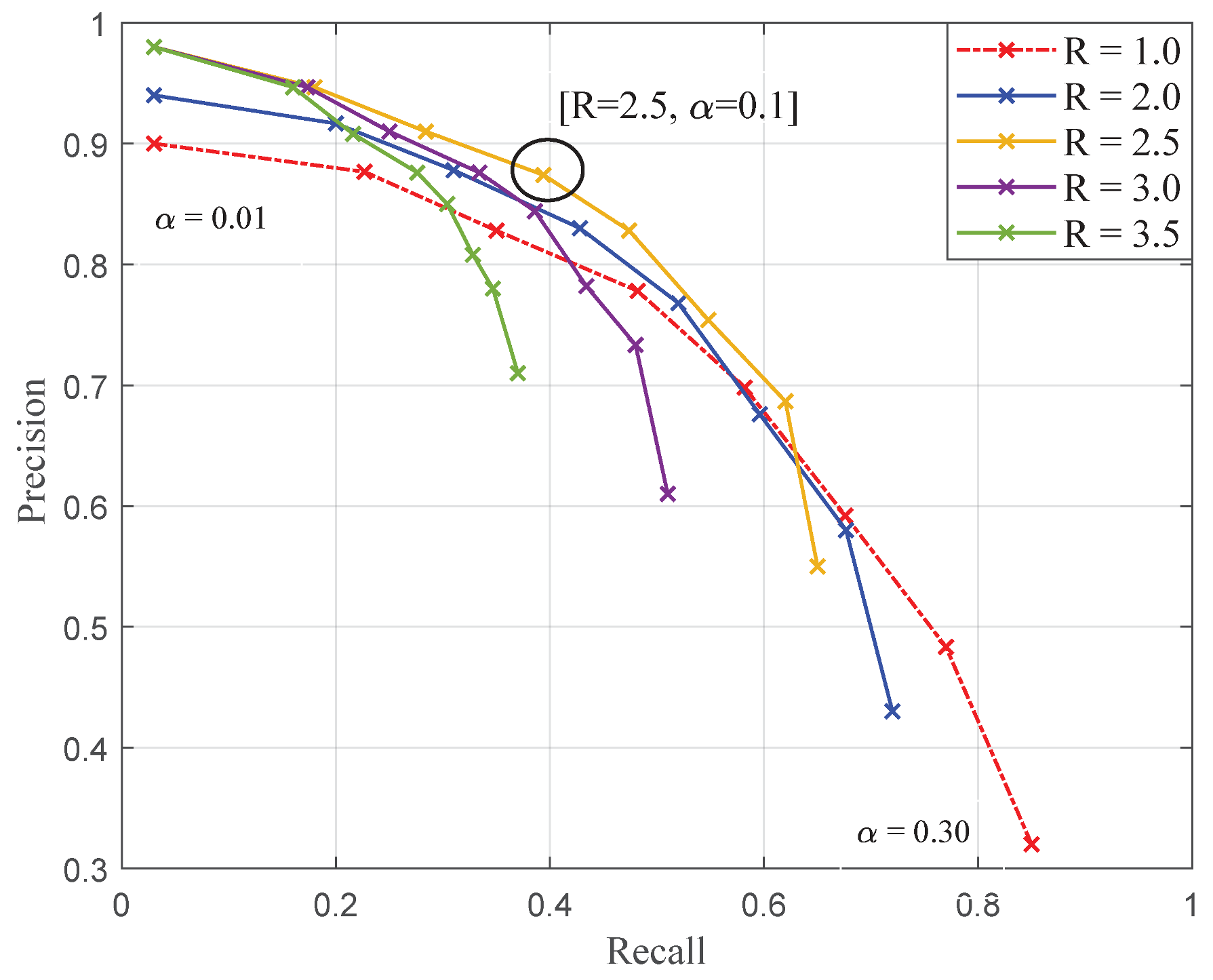

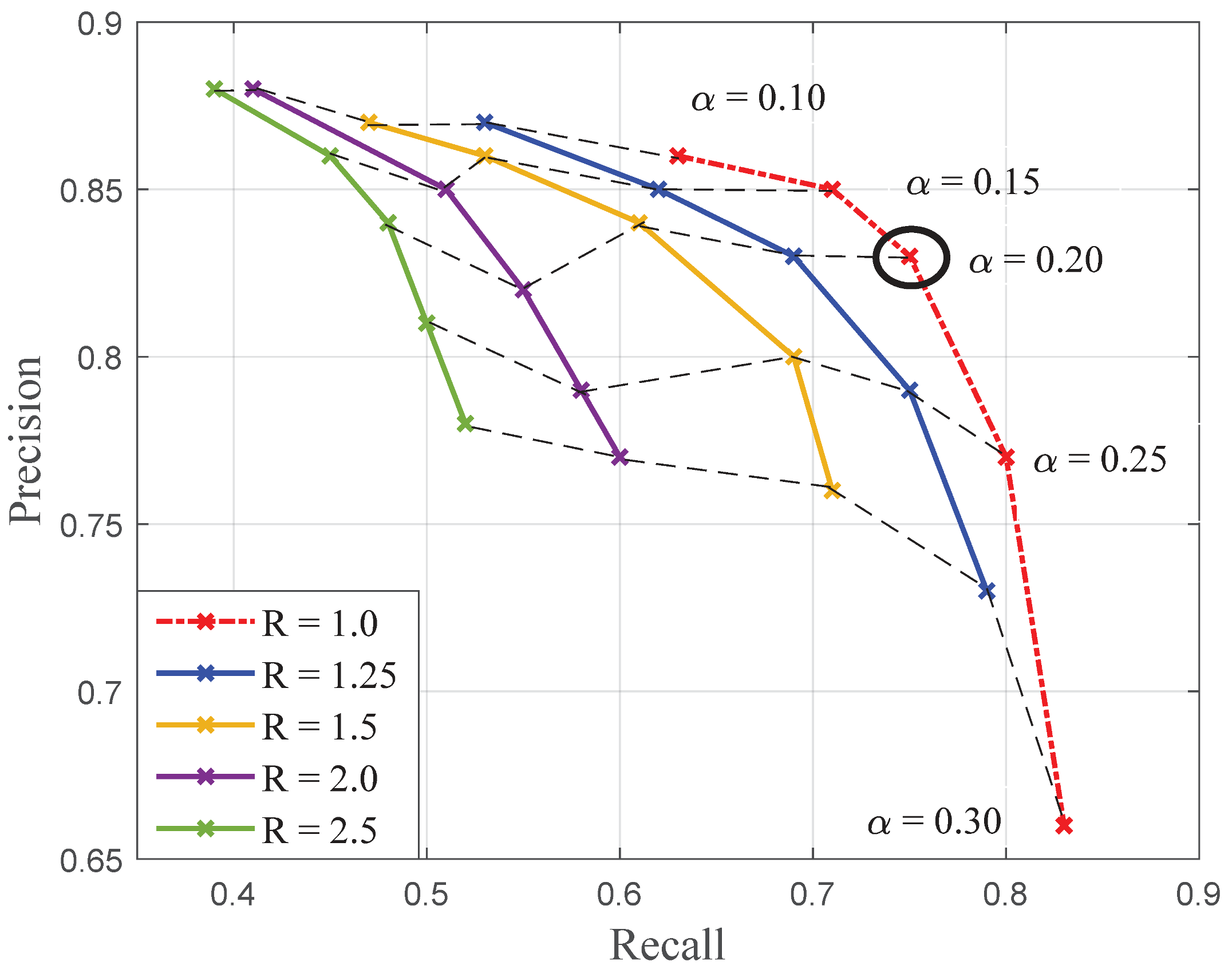

5. Conclusions

The purpose of this work was to evaluate the potential of on-board high-resolution colour imaging for monitoring cryptogamic diseases affecting grapevine, through a case study: downy mildew (Plasmopara viticola). To address the problem, a pixel-wise classification strategy was validated. First, the relevance of local structure tensors for describing healthy or infected vine tissues was demonstrated. Then, it was proposed to enhance LSTs with colorimetric information. To do so, a novel structure–colour representation (TC-LEST) was developed. In addition, it was shown that, thanks to a logarithmic transformation, compact vectorial descriptors can be conveniently obtained. These descriptors proved easy to model within different classes of vine tissues using Gaussian mixture distributions. Finally, these models were used to define statistical criteria, which were in turn integrated within a seed growth segmentation process. The proposed strategy was applied to a database of a hundred images of vinestocks. Results were evaluated with a leave-one-out cross-validation process in terms of pixel-wise precision and recall. With the best parameters, classification performances reached precision and recall.

These first promising results show that it is possible to discriminate, count and measure foliar symptoms of downy mildew. This contribution should lead to further validation in conditions closer to agronomic requirements.