Abstract

This paper proposes a solution for events classification from a sole noisy mixture that consist of two major steps: a sound-event separation and a sound-event classification. The traditional complex nonnegative matrix factorization (CMF) is extended by cooperation with the optimal adaptive L1 sparsity to decompose a noisy single-channel mixture. The proposed adaptive L1 sparsity CMF algorithm encodes the spectra pattern and estimates the phase of the original signals in time-frequency representation. Their features enhance the temporal decomposition process efficiently. The support vector machine (SVM) based one versus one (OvsO) strategy was applied with a mean supervector to categorize the demixed sound into the matching sound-event class. The first step of the multi-class MSVM method is to segment the separated signal into blocks by sliding demixed signals, then encoding the three features of each block. Mel frequency cepstral coefficients, short-time energy, and short-time zero-crossing rate are learned with multi sound-event classes by the SVM based OvsO method. The mean supervector is encoded from the obtained features. The proposed method has been evaluated with both separation and classification scenarios using real-world single recorded signals and compared with the state-of-the-art separation method. Experimental results confirmed that the proposed method outperformed the state-of-the-art methods.

1. Introduction

Surveillance systems have become increasingly ubiquitous in our living environment. These systems have been used in various applications including CCTV in traffic and site monitoring, and navigation. Automated surveillance is currently based on video sensory modality and machine intelligence. Recently, intelligent audio analysis has been taken into account in surveillance to improve the monitoring system via detection, classification, and recognition sound in a scenario. However, in a real-world situation, background noise has interfered in both the image and sound of a surveillance system. This will hinder the performance of a surveillance system. Hence, an automatic signal separation and event classification algorithm was proposed to improve the surveillance system by classifying the observed sound-event in noisy scenarios. The proposed noisy sound separation and event classification method is based on two approaches (i.e., blind signal separation and sound classification, which are introduced in the sections to follow, respectively).

The classical problem of blind source separation (BSS), the so-called “cocktail party problem”, is a psycho-acoustic spectacle that alludes to the significant human-auditory capability to selectively focus on and identify the sound-source speaker from the scenarios. The interference is produced by competing speech sounds or a variety of noises that are often assumed to be independent of each other. In the case of only a single microphone being available, this reduces to the single channel blind source separation (SCBSS) [1,2,3,4]. The majority of SCBSS algorithms work in time-frequency domain, for example, binary masking [5,6,7] or nonnegative matrix factorization (NMF) [8,9,10,11]. NMF has been continuously developed with great success for decomposing underlying original signals when a sole sensor is available. NMF was developed using the multiplicative update (MU) algorithm to solve its parametrical optimization based on a cost function such as the Kullback–Liebler divergence and the least square distance. Later, other families of cost functions have been continuously proposed, for example, the Beta divergence [12], Csiszar’s divergences, and Itakura–Saito divergence [13]. Additionally, iterative gradient update was presented where a sparsity constraint can be included into the optimizing function through regularization by minimizing penalized least squares [14] and using different sparsity constraints for dictionary and code [15]. The complex nonnegative matrix factorization (CMF) spreads the NMF model by combining a sparsity representation with the complex-spectrum domain to improve the audio separability. The CMF can extract the recurrent patterns of the phase estimates and magnitude spectra of constituent signals [16,17,18]. Nevertheless, the CMF lacks the generalized mechanics used for controlling the sparseness of the code. However, the sparsity parameter is manually determined for the above proposed methods. Approximate sparsity is an important consideration as they represent important information. Many sparse solutions have been proposed in the last decade [19,20,21,22,23,24,25]. Nonetheless, the optimal sparse solution remains an open issue.

Sound event classification (SEC) has vastly been exploited by many researchers. Sound can be categorized into speech, music, noise, environmental sound, or daily living sound [26]. Sound events are available in all classes, for example, car horn, traffic, walking, or knocking, etc. [27,28]. Sound-events contain significant information that can be used to describe what has happened or to predict what will happen next in the future. Most algorithms of the SEC methods are conveyed from sound classification approaches such as sparse coding, deep learning, and support vector machine (SVM). These approaches have been exploited to categorize a sound event in both indoor and outdoor scenarios. In recent years, the deep learning approach has been used to classify the sound-event. A deep learning framework can be established with two convolutional neural networks (CNNs) and a deep multi-layer perceptron (MLP) with rectified linear units (ReLU) as the activation function [29,30]. A Softmax function that is the final activation function is used to classify the sound into its corresponding class. The Softmax function is considered as the generalization of the logistic function, which aims to avoid overfitting. One of the advantages of deep learning is that it does not require feature extraction for the input sound. However, a deep neural network requires large training samples and despite a plethora of research, there is a general consensus that deep neural networks are still difficult to fine tune and generalize to test data. Moreover, it does not lend itself to the explanation as to why a certain decision is being made. Separate from the deep learning framework, another SEC approach is support vector machines [31,32], which has been practically presented to solve the classifier problem in various fields. The SVM algorithm relies on supervised learning by using the fundamental concept of statistic and risk minimization. The main process of the SVM is to draw the optimal separating hyperplane as the decision boundary located in such a way that the margin of separation between classes is maximized. The SVM approach is considered as supervised learning algorithm that is comprised of two sections: (1) a training section to model feature space and an optimal hyperplane, and (2) a testing section to use the SVM model for separating the observed data. The margin denotes the distance of the closest instance and the hyperplane. SVM has the desirable properties in that it requires only two differentiating factors to categorize two classes and a hyperplane that can be constructed to suit for an individual problem, even in the nonlinear case by selecting a kernel. Second, SVM provides a unique solution, since it is a convex optimization problem.

2. Background

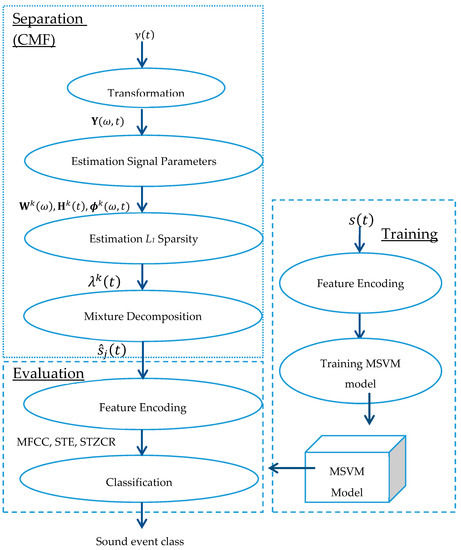

Noisy mixed signals observed via a recording device can be stated as: where and denote the original sounds, and is noise. This research is focused on two sound events in a single recorded signal. The proposed method consists of two steps: noisy sound separation and sound event classification, which is illustrated in Figure 1, where and denote a sound-event mixture in the time domain and time-frequency domain, respectively. The terms are spectral basis, temporal code or weight matrix, and phase information, respectively. The term represents sparsity and is an estimated sound event source. The abbreviations MFCC, STE, and STZCR stand for Mel frequency cepstral coefficients, short-time energy, and short-time zero-crossing rate, respectively. The proposed method is consecutively elaborated in the following parts.

Figure 1.

Signal flow of the proposed method.

2.1. Single-Channel Sound Event Separation

The problem formulation in time-frequency (TF) representation is given by an observed complex spectrum, , to estimate the optimal parameters of the model. A new factorization algorithm named as the adaptive L1-sparse complex non-negative matrix factorization (adaptive L1-SCMF) is derived in the following section. The generative model is given by

where and the reconstruction error is assumed to be independently and identically distributed (i.i.d.) with white noise having zero mean and variance . The term is used to denote a modeling error for each source. The likelihood of is thus written as

It is assumed that the prior distributions for and are independent, which yields

The prior corresponds to the sparsity cost, for which a natural choice is a generalized Gaussian prior. When , promotes the -norm sparsity. -norm sparsity has been shown to be probabilistically equivalent to the pseudo-norm, , which is the theoretically optimum sparsity [33,34]. However, -norm is non-deterministic polynomial-time (NP) hard and is not useful in large datasets such as audio. Given Equation (3), the posterior density [35,36] is defined as the maximum a posteriori probability (MAP) estimation problem, which leads to minimizing the following optimization problem with respect to . Equations of Gaussian prior and maximum a posteriori probability (MAP) estimation are expressed in Appendix A.

The CMF parameters have been upgraded by using an efficient auxiliary function for an iterative process. The auxiliary function for can be expressed as the following: for any auxiliary variables with , for any , , for any , and . The term with an auxiliary function was defined as:

where . The function is minimized w.r.t. when

2.2. Formulation of Proposed CMF Based Adaptive Variable Regularization Sparsity

2.2.1. Estimation of the Spectral Basis and Temporal Code

In Equation (4), the update rule for is derived by differentiating partially w.r.t. and , and setting them to zero, which yields the following:

The update rule for the phase, , can be derived by reformulating Equation (4) as follows:

where A denotes the terms that are irrelevant with , , , and . Derivation of (9) is elucidated in Appendix B. The auxiliary function, in Equation (4) is minimized when , namely, and . The update formula for eventually leads to

The update formula for and for projection onto the constraint space is set to

2.2.2. Estimation of L1-Optimal Sparsity Parameter

This section aims to facilitate spectral dictionaries with adaptive sparse coding. First, the CMF model is defined as the following terms:

where “⊗” and “” are the Kronecker product and the Hadamard product, respectively. The term vec(∙) denotes the column vectorization and the term is the identity matrix. The goal is then set to compute the regularization parameter related to each . To achieve the goal, the parameter in Equation (4) is set to 1 to acquire a linear expression (in ). In consideration of the noise variance , Equation (4) can concisely be rewritten as:

where the and terms indicate vectors of dimension (i.e., ), and the superscript ‘’ is used to denote complex Hermitian transpose (i.e., vector (or matrix) transpose followed by complex conjugate). The Expectation–Maximization (EM) algorithm will be used to determine and is the hidden variable where the log-likelihood function can be optimized with respect to . The log-likelihood function satisfies the following [12]:

by applying the Jensen’s inequality for any distribution . The distribution can simply verify the posterior distribution of , which maximizes the right-hand side of Equation (15), is given by . The posterior distribution in the form of the Gibbs distribution is proposed as follows:

The term in Equation (16) as the function of the Gibbs distribution is essential for simplifying the adaptive optimization of . The maximum-likelihood (ML) estimation of can be decomposed as follows:

In the same way,

Individual element of is required to be exponentially distributed with independent decay parameters that delivers , thus Equation (17) obtains

The term denotes the dependent variable of the distribution whereas other parameters are assumed to be constant. As such, the optimization in Equation (19) is derived by differentiating the parameters within the integral with respect to . As a result, the functional optimization [37] of then obtains

where , denotes the element of . Notice that the solution naturally splits its elements into distinct subsets and , consisting of components so that and components so that . The sparsity parameter is then obtained as presented in Equation (21):

and its covariance X is given by

where , and . The function will be expressed as the unconstrained Gaussion with mean and covariance based on a multivariate Gaussian distribution. Similarly, the inference for can be computed as

where

The core procedure of the proposed CMF method is based on -optimal sparsity parameters. The estimated sources are discovered by multiplying the respective rows of the components with the corresponding columns of the weights and time-varying phrase spectrum . The separated source is obtained by converting the time-frequency represented sources into the time domain. Derivation of L1-optimal sparsity parameter, is elucidated in the Appendix C.

2.3. Sound Event Classification

Once the separated sound signal is obtained, the next step is to identify the sound event. A multiclass support vector machine (MSVM) is employed to achieve the goal. The MSVM is comprised of two phases: the learning phase and the evaluation phase. The MSVM is based on one versus one strategy (OvsO) that splits observed classes into binary classification sub-problems. To train the MSVM model, the MSVM will construct hyperplanes for discriminating each observed data into its corresponding class by executing the series of binary classification. Starting from the learning phase, sound signatures are extracted from the training dataset in the time-frequency domain. The sound signatures that were studied in this research were the Mel frequency cepstral coefficients (MFCC: MF), short-time energy (STE: ), and short term zero-crossing rate (STZCR: ), which can be orderly expressed as: , , where denotes the windowing function. The training signals are segmented into small blocks, then the individual block is extracted to the three signature parameters. The mean supervector is then computed as an average of individual feature of all blocks for each sound event input. Thus, the mean feature supervector () with a corresponding sound-event-label vector () is paired together (i.e., ()) and supplied to the MSVM model. The discriminant formula can be expressed as:

where represents the separated sound signals; the weight vector is employed for individual class to compute a discriminant score for the ; the term is the index of the block order (); and the function measures a linear discriminant distance of the hyperplane with the extracted feature vector from the observed data. The MSVM based OvsO strategy for class and other, the hyperplane, can be maximized as and can then be learned via the following equation as

where , is a constant. The term denotes a penalty function for tradeoff between a large margin and a small error penalty. The optimal hyperplane can be determined by minimizing to maximize the condition (i.e., . If the conditional term is greater than , then the estimated sound event belongs to the class. Otherwise, the estimated sound event classifies into other classes.

The overview of the proposed algorithm is presented in the following table as Algorithm 1.

| Algorithm 1 Overview of the proposed algorithm. |

|

3. Experimental Results and Analysis

The performance was evaluated on recorded sound-event signals in a low noisy environment at 20 signal-to-noise ratios (SNRs). The sound-event database had a total of 500 recorded signals containing four event classes: speech (SP), door open (DO), door knocking (DK), and footsteps (FS). An overview of the experimental setup is given as the following: all signals had a 16-bit resolution and a sampling frequency of 44.1 KHz. A 2048 length of Hanning window with 50% overlap was used for signal processing. Nonlinear SVM with a Gaussian RBF kernel was used for constructing the MSVM learning model. Other kernels such as polynomials, sigmoid, and even linear function were tested, but the best performance was delivered by the Gaussian kernel. A 4-fold cross-validation strategy was used in the training phase for tuning the classifier parameters when using 80% of the recorded signals (n = 400) from the sound-event database.

The performance of the proposed noisy sound separation and event classification (NSSEC) method was demonstrated and presented into the following two sections: (1) the separating performance, and (2) the MSVM classifier.

3.1. Sound-Event Separation and Classification Performance

Event mixtures consist of two sound-event signals in low noisy environment at 20 dB SNRs. A hundred sound-event signals of four classes were randomly selected and then mixed to generate 120 mixtures of six types (i.e., DO + DK, DO + FS, DO + SP, DK + FS, DK + SP, and FS + SP). The separation performance measured the signal-to-distortion ratio (SDR) (i.e., where , , and ). The SDR represents the ratio of the magnitude distortion of the original signal by the interference from other sources. The proposed separation method was compared with the state-of-the-art NMF approach (i.e., CMF [38], NMF-ISD [14,39], and SNMF [40,41,42] methods). The cost function was the least squares with 500 maximum number of iterations.

3.1.1. Variational Sparsity Versus Fixed Sparsity

In this implementation, several experiments were conducted to investigate the effect of sparsity regularization on source separation performance. The proposed separation method was evaluated by variational sparsity in the case of (1) uniform constant sparsity with low sparseness e.g., and (2) uniform constant sparsity with high sparseness (e.g., . The hypothesis is that the proposed variational sparsity will significantly yield improvement of the audio source separation when compared with fixed sparsity.

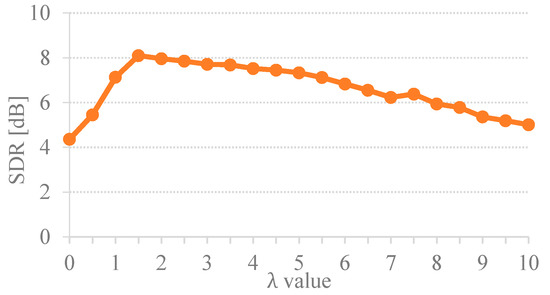

To investigate the impact of uniform sparsity parameter, the set of sparsity regularization values from 0 to 10 with a 0.5 interval were determined for each experiment of 60 mixtures of six types. Results of the uniform regularization given by various sparsity (i.e., ) is illustrated in Figure 2.

Figure 2.

Separation results of the proposed method by using different uniform regularization.

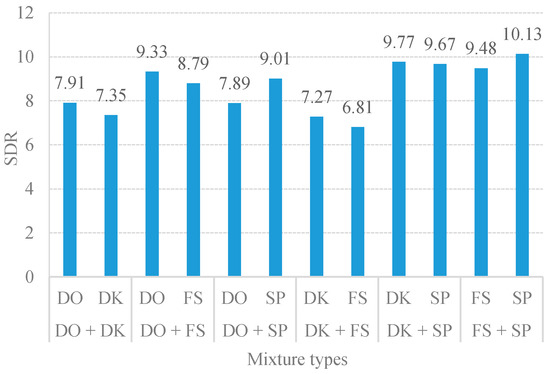

Figure 2 illustrates that the best performance of the unsupervised CMF was in a range of 1.5–3, which yielded the highest SDR of over 8dB. When the term was set too high, the low spectral values of sound-event signals were overly sparse. This overfitting sparsity caused the separation performance toward a tendency to degrade. Conversely, the underfitting sparsity occurred when the term was set too low. The coding parameter could not distinguish between the two sound-event signals. It was also noticed that if the factorization is non-regularized, this will cause the separation results to contain a mixed sound. According to the uniform sparsity results in Figure 2, the separation performance of the proposed method varies depending on the assigned sparsity values. Thus, it is challenging to find a solution for the indistinctness among the sound-event sources in the TF representation to determine the optimal value of sparseness. Thus, this introduces the importance of determining the optimal for separation. Table 1 presents the essential sparsity value on the separation performance by comparing the proposed method given by variational sparsity against the uniform sparsity scheme. The average performance improvement of the proposed adaptive CMF method against the uniform constant sparsity was 1.32 dB SDR. The SDR results clearly indicate that the adaptive sparsity yielded the surpass separation performance over the constant sparsity scheme. Hence, the proposed variational sparsity improves the performance of the discovered original sound-event signals by adaptively selecting the appropriate sparsity parameters to be individually adapted for each element code (i.e., and where ). Consequently, the optimal sparsity facilitates the estimated spectral dictionary via the estimated temporal code. The quantitative measures of separation performance were performed to assess the proposed single-channel sound event separation method. The overall average signal-to-distortion ratio (SDR) was 8.62 dB as illustrated in Figure 3.

Table 1.

Comparison of average SDR performance on three types of mixtures between uniform regularization methods and the proposed method.

Figure 3.

Average SDR results of six-mixture types.

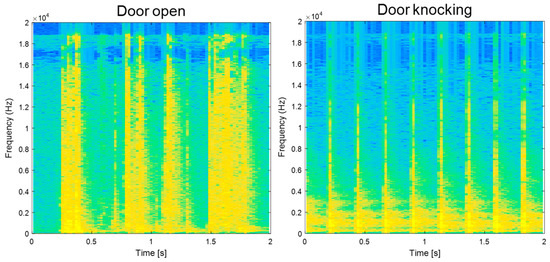

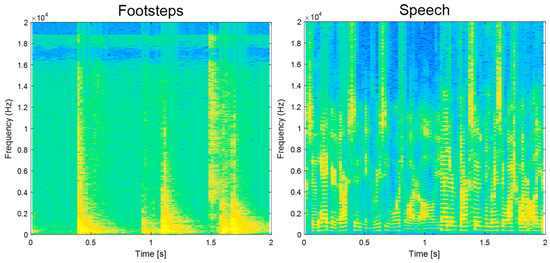

Each sound-event signal has its own temporal pattern that can be clearly noticed in TF representation. Examples of sound-event signals in the TF domain are illustrated in Figure 4. Through the adaptive L1-SCMF method, the proposed single-channel separation method can generate complex temporal patterns such as speech. Thus, the separation results clearly indicate that the performances of noisy source separation perform with high SDR values.

Figure 4.

Example of time-frequency representation of four sound event classes.

3.1.2. Comparison of the Proposed Adaptive CMF with Other SCBSS Methods Based on NMF

This section presents the adaptive CMF separating performance against the state-of-the-art NMF methods (i.e., CMF, SNMF, and NMF-ISD). In the compared methods, the experimental variables such as the normalizing time-frequency domain were computed by using the short-time Fourier transform (i.e., 1024-point Hanning window with 50% overlap). The number of factors was two, with a sparsity weight of 1.5. One hundred random realizations of twenty second-event mixtures were executed. As a result, the average SDRs are presented in Table 2. The proposed adaptive CMF method yielded the best separating performance over the CMF, SNMF, and NMF_ISD methods with the average improvement SDR at 2.13 dB. The estimated door open signals obtained the highest SDR among the four event categories.

Table 2.

Comparison of average SDR and SIR performance on three types of mixtures between SCICA, NMF-ISD, SNMF, CMF, and the proposed method.

The sparsity parameter was carefully adapted using the proposed adaptive L1-SCMF method exploiting the phase information and temporal code of the sources, which is inherently ignored by SNMF and NMF-ISD and has led to an improved performance of about 2 dB in SDR. On the other hand, the parts decomposed by the CMF, SNMF, and NMF-ISD methods were unable to capture the phase spectra and the temporal dependency of the frequency patterns within the audio signal.

Additionally, the CMF and NMF-ISD are unique when the signal adequately spans the positive octant. Thus, the rotation of and opposite can obtain the same results. The CMF method can easily be over or under sparse resolution of the factorization due to manually determining the sparsity value.

3.2. Performance of Event Classification Based on MSVM Algorithm

This section elucidates the features and performance of the MSVM-learning model. The MSVM-learning model was investigated to obtain the optimal size of the sliding window and then determine the significant features that led to the classification performance. Finally, the efficiency of the MSVM model was evaluated. These topics are presented in order in the following parts.

3.2.1. Determination Optimal Window Length for Feature Encoding

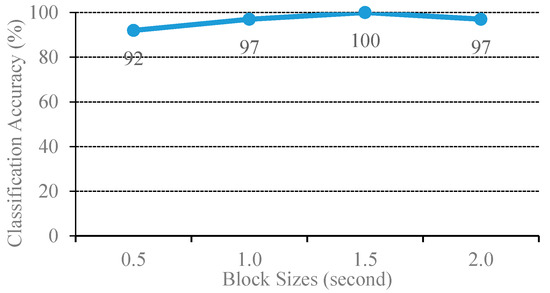

For the MSVM method, sound-event signals are segmented into small blocks for encoding feature parameters by using a fixed-length of the sliding window. The sets of feature vectors are computed using the mean supervector and then loaded to the MSVM model for learning and constructing the hyperplane. The size of blocks can affect the information of the feature vectors, which leads to the classifier performance. The block’s size will affect the , hence modifying the block size will mark the learning efficiency of the MSVM model. Therefore, in order to obtain the optimal value of , the optimal block size was exploited by training the MSVM model given various lengths of window sizes (i.e., 0.5, 1, 1.5, and 2.0 s) to learn the 400 noisy sound-event signals of four event classes with cross-validation.

The experimental results are plotted in Figure 5, where the block size varies from 0.5 to 2.0 with 0.5 increments. The MSVM model of the 1.5 s block size yielded the best sound-event classification at 100% accuracy. The sliding window function benefits from SVM to learn an unknown sound event by generating the set of blocks from the observed event, regarded as a number of observed events. As a result, a set of sound event characteristics were computed for each block (i.e., in Equation (24)).

Figure 5.

Classification performance of the original and combination source MSVM with various block sizes.

The optimal length of the window size can capture the signature of the sound event. If the window length is too short, the encoded features will then deviate from the character of the sound event. In addition, the mean supervector is computed from the set of features of all blocks, which can be regarded as the mean of the probability distribution of the features. This mean supervector advantages the MSVM to reduce misclassifications when compared to the conventional SVM. Hence, the STFT of all experiments set the window function at 1.5 s.

3.2.2. Determination of Sound-Event Features

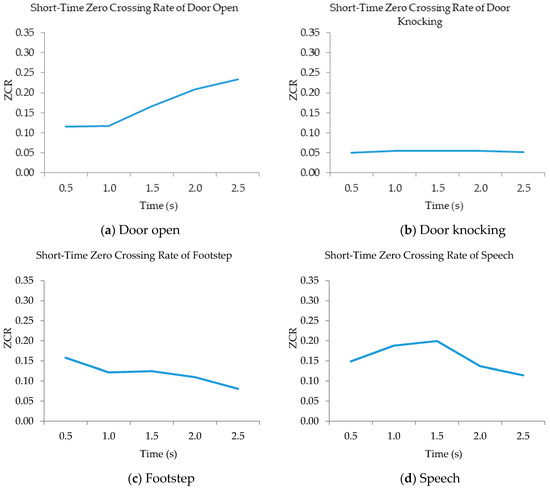

Each sound-event signal was encoded with three features: Mel frequency cepstral coefficients (MFCCs), short-time energy (STE), and short-time zero-crossing rate (STZCR). MFCCs are represented as a frequency domain feature that is evaluated in a similar assembly to the human ear (i.e., logarithmic frequency perception). STE is the total spectrum power of an observed event. The STZCR denotes the number of times that the signal amplitude interval satisfies the condition (i.e., where is 1 if the condition is true and 0 otherwise). The STZCR features of four sound-event classes are illustrated in Figure 6.

Figure 6.

STZCR patterns of four sound-events (a–d).

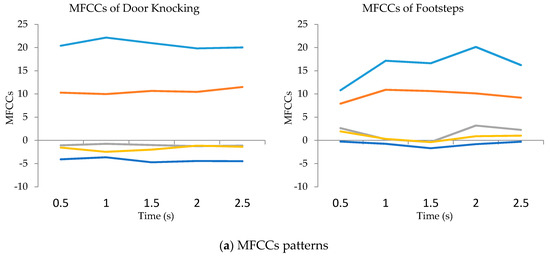

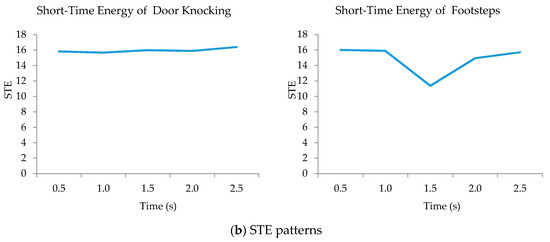

The STZCR feature represents unique patterns of four sound-event classes. The four sound-event patterns are different in shape and data range. Similarly, the MCFFs and STE features extract distinctive patterns of all event classes, except for the patterns between door knocking and footstep, as illustrated in Figure 7.

Figure 7.

MFCCs (a) and STE (b) patterns of door knocking and footstep.

Figure 7 aims to compare the characteristics of similar sound events such as door knocking and footsteps. Thus, MFCCs and STE features were used to illustrate the patterns of sound events. Figure 7a represents the five orders of MFCC features to compare patterns between door knocking and walking while the STE features are shown in Figure 7b.

The proposed method separated the six categories of mixtures, then classified each estimated sound event signal into its corresponding class. Classified results of the six categories are presented as confusion matrixes below:

| Actual | ||||||||

| Predict | DO | DK | DO | FS | DO | SP | ||

| DO | 19 | 3 | DO | 12 | 8 | DO | 19 | 5 |

| DK | 3 | 15 | FS | 4 | 16 | SP | 3 | 13 |

| DK | FS | DK | SP | FS | SP | |||

| DK | 12 | 4 | DK | 16 | 2 | FS | 14 | 6 |

| FS | 9 | 15 | SP | 5 | 17 | SP | 3 | 17 |

The classification of the proposed method was measured by Precision = TP/(TP + FP), Recall = TP/(TP + FN), and F1-score = 2 × (Precision × Recall)/(Precision + Recall). The TP and TN terms refer to the true positive and true negative, while the FP and FN terms mean false positive and false negative. The scores of Precision, Recall, and F1-score were 0.7667, 0.7731, and 0.7699, respectively.

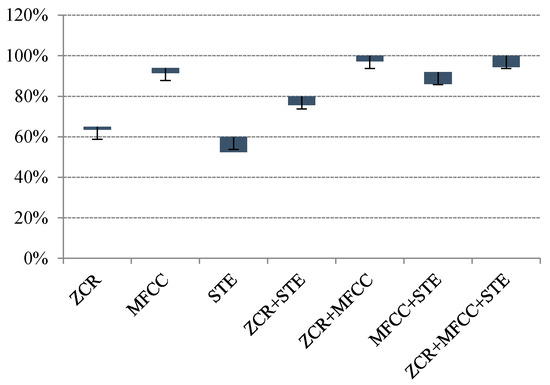

Each feature represents unique characteristics of an individual sound event. Thus, features were matched into seven cases for exploiting their influence on the MSVM classifiers (i.e., {(MFCC), (STE), (STZCR), (MFCC, STE), (MFCC, STZCR), (STE, STZCR), (MFCC, STE, STZCR)}).

As shown in Figure 8, the MSVM model given by MFCCs and STZCR yielded the best classified accuracy at 100%, with less deviation among the other cases. Therefore, the separated signals were then classified by the proposed MSVM method given by the MFCC and STZCR vectors and the 1.5 s window function. The computational complexity of the proposed method was analyzed by two steps. First, the adaptive L1-SCMF method was NP-hard. Big-O of the adaptive L1-SCMF method consists of spectral basis (), temporal code (), and phase information that rely on components (). Thus, Big-O of the separation step is . For MSVM steps, it is a polynomial algorithm where Big-O is . Therefore, the computational complexity of the proposed method based on Big-O is . All experiments were performed by a PC with Intel® Core™ i7-4510U CPU2.00 GHz and 8 GB RAM. MATLAB was used as the programming platform.

Figure 8.

Classification performances of multi-class MSVM of various sets of features and length of event signal.

3.2.3. Performance of MSVM Classifier

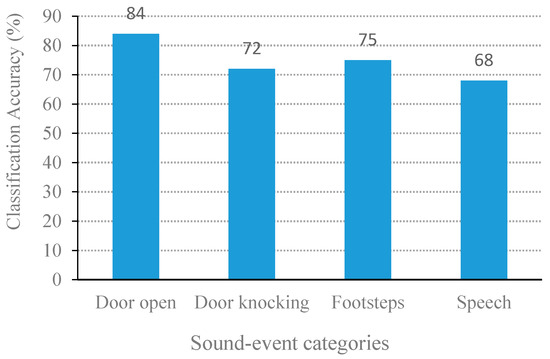

The MSVM-classifier performance is presented in terms of percentage of the corrected sound-event classification. The 240 separated signals of four classes from the proposed separation method were individually identified by the MSVM classifier.

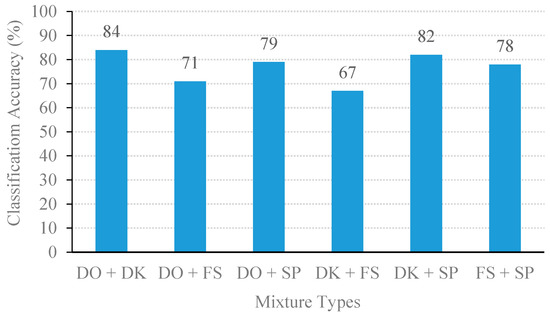

Figure 9 compares the classification performance on the four classes of individual sound events. The best classification accuracy was door open, followed by footstep, door knocking, and speech. On the other hand, the classification results based on the mixed sound events are illustrated in Figure 10. The MSVM model delivered the highest performance of the door-open event with 84% accuracy.

Figure 9.

Average percentage of classification accuracy from the perspective of event group of the proposed NSSEC method.

Figure 10.

Classification performance of NSSEC model with 1.5 s block size.

From the above experiments, the proposed method yields an average classification accuracy of 76.67%. The MSVM method can well discriminate and classify the mixed event signals with high classification accuracy (i.e., the mixture of door open with door knocking and door knocking with speech were correctly classified with above 80% accuracy). Due to the MFCC and STZCR features in the individual event, these signals had obvious distinguishable patterns, as shown in the example of STZCR plots in Figure 6. Despite the SDR scores of the separated signals between door open and door knocking being relatively low (as given in Figure 3), the MSVM yielded the highest classification accuracy for the door open with door knocking mixture (DO + DK). This is attributed to the fact that interference remaining in the separated event signals causes the extracted MFCC and STZCR vectors to deviate from their original sound event vectors.

4. Conclusions

A novel solution for classification of the noisy mixtures using a single microphone was presented. The complex matrix factorization was proposed and extended by adaptively tuning the sparse regularization. Thus, the desired L1-optimal sparse decomposition was obtained. In addition, the phase estimates of the CMF could extract the recurrent pattern of the magnitude spectra. The updated equation was derived through an auxiliary function. For classification, the multiclass support vector was used as the mean supervector for encoding the sound-event signatures. The proposed noisy sound separation and event classification method was demonstrated by using four sets of noisy sound-event mixtures, which were door open, door knocking, footsteps, and speech. Based on the experimental results, first, the optimal window length of STFT was found where 1.5 s of the sliding window yielded the best separation performance. The second was two significant features that were ZCR and MFCCs. These parameters were set for examining the proposed method. The proposed method achieved outstanding results in both separation and classification. In future work, the proposed method will be evaluated on a public dataset such as the DCASE 2016, alongside the comparison with other machine learning algorithms.

Author Contributions

Conceptualization, P.P. and W.L.W.; Methodology, P.P. and N.T.; Software, P.P.; Validation, N.T. and W.L.W.; Investigation, P.P. and N.T.; Writing—original draft preparation, P.P. and W.L.W.; Writing—review and editing, N.T., M.A.M.A., O.A., and G.R.; Visualization, M.A.M.A.; Supervision, W.L.W.; Project administration, N.T., M.A.M.A., and O.A.; Funding acquisition, W.L.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the UK Global Challenge Research Fund, the National Natural Science Foundation of China (No. 61971093, No. 61401071, No. 61527803), and supported by the NSAF (Grant No. U1430115) and EPSRC IAA Phase 2 funded project: “3D super-fast and portable eddy current pulsed thermography for railway inspection (EP/K503885/1).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Single-Channel Sound Event Separation

The prior corresponds to the sparsity cost, for which a natural choice is a generalized Gaussian prior:

where and are the shape parameters of the distribution. When , promotes the -norm sparsity. -norm sparsity has been shown to be probabilistically equivalent to the pseudo-norm, , which is the theoretically optimum sparsity [29,30]. However, -norm is non-deterministic polynomial-time (NP) hard and is not useful in large datasets such as audio. Given Equation (3), the posterior density is defined as

The maximum a posteriori probability (MAP) estimation problem leads to minimizing the following optimization problem with respect to :

subject to .

The CMF parameters has been upgraded by using an efficient auxiliary function for an iterative process. The auxiliary function for can be expressed as the following: for any auxiliary variables with , for any , , for any , and . The term with an auxiliary function was defined as

where . The function is minimized w.r.t. when

Appendix B. Estimation of the Spectral Basis and Temporal Code

In Equation (4), the update rule for is derived by differentiating . partially w.r.t. and , and setting them to zero, which yields the following:

The update rule for the phase, , can be derived by reformulating Equation (A1) as follows:

where A denotes the terms that are irrelevant with , , , and . The auxiliary function, in (A4) is minimized when , namely, and . The update formula for eventually leads to

The update formula for and for projection onto the constraint space is set to

Appendix C. Estimation of L1-Optimal Sparsity Parameter

This section aims to facilitate spectral dictionaries with adaptive sparse coding. First, the CMF model is defined as the following terms:

where “⊗” and “” are the Kronecker product and the Hadamard product, respectively. The term vec(∙) denotes the column vectorization and the term is the identity matrix. The goal is then set to compute the regularization parameter related to each . To achieve the goal, the parameter in Equation (A3) was set at 1 to acquire a linear expression (in ). In consideration of the noise variance , Equation (A3) can concisely be rewritten as:

where the and terms indicate vectors of dimension (i.e., ), and the superscript ‘’ is used to denote complex Hermitian transpose (i.e., vector (or matrix) transpose), followed by complex conjugate. The Expectation–Maximization (EM) algorithm is used to determine and is the hidden variable, where the log-likelihood function can be optimized with respect to . The log-likelihood function satisfies the following [12]:

by applying the Jensen’s inequality for any distribution . The distribution can simply verify the posterior distribution of that maximizes the right-hand side of Equation (A19) is given by . The posterior distribution in the form of the Gibbs distribution is proposed as follows:

The term in Equation (A16) as the function of the Gibbs distribution is essential for simplifying the adaptive optimization of . The maximum-likelihood (ML) estimation of can be decomposed as follows:

In the same way,

Individual element of is required to be exponentially distributed with independent decay parameters that delivers , thus Equation (20) obtains

The term denotes the dependent variable of the distribution whereas other parameters are assumed to be constant. As such, the optimization in (A19) is derived by differentiating the parameters within the integral with respect to . As a result, the functional optimization of then obtains

where , denotes the element of . The iterative update for is given by

where and . However, the integral forms in Equations (A20) and (A21) are complex to compute and analyzed analytically. Thus, an approximation to is exploited. Notice that the solution naturally splits its elements into distinct subsets and consisting of components such that and components such that . Hence, this can be derived as follows:

Defined, , and . Here, the term is a constant and the cross-term measures the orthogonality between and , where and denote the sub-matrix of that corresponds to and . To obtain a simplified expression in Equation (A22), the function can be approximated as and the can be safely discounted since its value is typically much smaller than and . Thus, the approximation of can be expressed as

Defining and . With the purpose of characterizing , some positive deviation to is needed to be allowed for, whereas the values will reject all negative values due to CMF only accepting zero and positive values. Thus, admits zero and positive values in . The approximation of the distribution is then utilized in the Taylor expansion as the maximum a posterior probability (MAP) estimate. Therefore, with , one obtains

where and . The integration of the term in Equation (A24) is hard to derive in its closed form expression for analytical evaluation, which subsequently prohibits inference of the sparsity parameters. A fixed form distribution is employed for computing variational approximate . As a result, the closed form expression is obtained. Subsequently, the term only takes on nonnegative values, so a suitable fixed form distribution is to use the factorized exponential distribution given by

By minimizing the Kullback–Leibler divergence between and , the variational parameters where can be derived as:

Solving Equation (A26) for leads to the following update [37]:

The approximate distribution for components can be obtained by substituting into as follows:

In Equation (A28), the function will be expressed as the unconstrained Gaussion with mean and covariance based on a multivariate Gaussian distribution. The term denotes the sub-matrix of . The sparsity parameter is then obtained by substituting Equations (A24), (A25), and (A28) into Equation (A20) as presented in Equation (A29):

and its covariance X is given by

Similarly, the inference for can be computed from Equation (24) as

where

The core procedure of the proposed CMF method is based on -optimal sparsity parameters. The estimated sources are discovered by multiplying the respective rows of the components with the corresponding columns of the weights and time-varying phrase spectrum . The separated sources are obtained by converting the time-frequency represented sources into time domain.

References

- Wang, Q.; Woo, W.L.; Dlay, S. Informed single-channel speech separation using hmm–gmm user-generated exemplar source. IEEE/ACM Trans. Audio Speech Lang. Process. 2014, 22, 2087–2100. [Google Scholar] [CrossRef]

- Gao, B.; Bai, L.; Woo, W.L.; Tian, G.; Cheng, Y. Automatic defect identification of eddy current pulsed thermography using single channel blind source separation. IEEE Trans. Instrum. Meas. 2013, 63, 913–922. [Google Scholar] [CrossRef]

- Yin, A.; Gao, B.; Tian, G.; Woo, W.L.; Li, K. Physical interpretation and separation of eddy current pulsed thermography. J. Appl. Phys. 2013, 113, 64101. [Google Scholar] [CrossRef]

- Cheng, L.; Gao, B.; Tian, G.; Woo, W.L.; Berthiau, G. Impact damage detection and identification using eddy current pulsed thermography through integration of PCA and ICA. IEEE Sens. J. 2014, 14, 1655–1663. [Google Scholar] [CrossRef]

- Cholnam, O.; Chongil, G.; Chol, R.K.; Gwak, C.; Rim, K.C. Blind signal separation method and relationship between source separation and source localisation in the TF plane. IET Signal Process. 2018, 12, 1115–1122. [Google Scholar] [CrossRef]

- Tengtrairat, N.; Woo, W.L.; Dlay, S.S.; Gao, B. Online noisy single-channel blind separation by spectrum amplitude estimator and masking. IEEE Trans. Signal Process 2016, 64, 1881–1895. [Google Scholar] [CrossRef]

- Tengtrairat, N.; Gao, B.; Woo, W.L.; Dlay, S.S. Single-Channel Blind Separation Using Pseudo-Stereo Mixture and Complex 2-D Histogram. IEEE Trans. Neural Netw. Learn. Syst. 2013, 24, 1722–1735. [Google Scholar] [CrossRef]

- Koundinya, S.; Karmakar, A. Homotopy optimisation based NMF for audio source separation. IET Signal Process. 2018, 12, 1099–1106. [Google Scholar] [CrossRef]

- Kim, M.; Smaragdis, P. Single channel source separation using smooth Nonnegative Matrix Factorization with Markov Random Fields. In Proceedings of the 2013 IEEE International Workshop on Machine Learning for Signal Processing (MLSP), Southapmton, UK, 22–25 September 2013; pp. 1–6. [Google Scholar]

- Yoshii, K.; Itoyama, K.; Goto, M. Student’s T nonnegative matrix factorization and positive semidefinite tensor factorization for single-channel audio source separation. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 51–55. [Google Scholar]

- Al-Tmeme, A.; Woo, W.L.; Dlay, S.; Gao, B. Underdetermined convolutive source separation using gem-mu with variational approximated optimum model order NMF2D. IEEE/ACM Trans. Audio, Speech, Lang. Process. 2016, 25, 35–49. [Google Scholar] [CrossRef]

- Woo, W.L.; Gao, B.; Bouridane, A.; Ling, B.W.-K.; Chin, C.S. Unsupervised learning for monaural source separation using maximization–minimization algorithm with time–frequency deconvolution. Sensors 2018, 18, 1371. [Google Scholar] [CrossRef]

- Gao, B.; Woo, W.L.; Dlay, S.S. Unsupervised single channel separation of non-stationary signals using Gammatone filterbank and Itakura-Saito nonnegative matrix two-dimensional factorizations. IEEE Trans. Circuits Syst. I 2013, 60, 662–675. [Google Scholar] [CrossRef]

- Févotte, C.; Bertin, N.; Durrieu, J.-L. Nonnegative matrix factorization with the itakura-saito divergence: With application to music analysis. Neural Comput. 2009, 21, 793–830. [Google Scholar] [CrossRef] [PubMed]

- Pu, X.; Yi, Z.; Zheng, Z.; Zhou, W.; Ye, M. Face recognition using fisher non-negative matrix factorization with sparseness constraints. Comput. Vis. 2005, 3497, 112–117. [Google Scholar] [CrossRef]

- Magron, P.; Virtanen, T. Towards complex nonnegative matrix factorization with the beta-divergence. In Proceedings of the 2018 16th International Workshop on Acoustic Signal Enhancement (IWAENC), Tokyo, Japan, 17–20 September 2018; pp. 156–160. [Google Scholar]

- King, B. New Methods of Complex Matrix Factorization for Single-Channel Source Separation and Analysis. Ph.D. Thesis, University of Washington, Seattle, WA, USA, 2012. [Google Scholar]

- Parathai, P.; Tengtrairat, N.; Woo, W.L.; Gao, B. Single-channel signal separation using spectral basis correlation with sparse nonnegative tensor factorization. Circuits Syst. Signal Process. 2019, 38, 5786–5816. [Google Scholar] [CrossRef]

- Woo, W.L.; Dlay, S.; Al-Tmeme, A.; Gao, B. Reverberant signal separation using optimized complex sparse nonnegative tensor deconvolution on spectral covariance matrix. Digit. Signal Process. 2018, 83, 9–23. [Google Scholar] [CrossRef]

- Tengtrairat, N.; Parathai, P.; Woo, W.L. Blind 2D signal direction for limited-sensor space using maximum likelihood estimation. Asia-Pac. J. Sci. Technol. 2017, 22, 42–49. [Google Scholar]

- Gao, B.; Woo, W.L.; Tian, G.Y.; Zhang, H. Unsupervised diagnostic and monitoring of defects using waveguide imaging with adaptive sparse representation. IEEE Trans. Ind. Inform. 2016, 12, 405–416. [Google Scholar] [CrossRef]

- Gao, B.; Woo, W.L.; He, Y.; Tian, G.Y. Unsupervised sparse pattern diagnostic of defects with inductive thermography imaging system. IEEE Trans. Ind. Inform. 2016, 12, 371–383. [Google Scholar] [CrossRef]

- Tengtrairat, N.; Woo, W.L. Single-channel separation using underdetermined blind autoregressive model and lest absolute deviation. Neurocomputing 2015, 147, 412–425. [Google Scholar] [CrossRef]

- Gao, B.; Woo, W.; Ling, B.W.-K. Machine learning source separation using maximum a posteriori nonnegative matrix factorization. IEEE Trans. Cybern. 2013, 44, 1169–1179. [Google Scholar] [CrossRef]

- Tengtrairat, N.; Woo, W. Extension of DUET to single-channel mixing model and separability analysis. Signal Process. 2014, 96, 261–265. [Google Scholar] [CrossRef]

- Zhou, Q.; Feng, Z.; Benetos, E. Adaptive noise reduction for sound event detection using subband-weighted NMF. Sensors 2019, 19, 3206. [Google Scholar] [CrossRef] [PubMed]

- Yan, L.; Zhang, Y.; He, Y.; Gao, S.; Zhu, D.; Ran, B.; Wu, Q. Hazardous traffic event detection using markov blanket and sequential minimal optimization (MB-SMO). Sensors 2016, 16, 1084. [Google Scholar] [CrossRef] [PubMed]

- Chen, Y.-L.; Chiang, H.-H.; Chiang, C.-Y.; Liu, J.; Yuan, S.-M.; Wang, J.-H. A vision-based driver nighttime assistance and surveillance system based on intelligent image sensing techniques and a heterogamous dual-core embedded system architecture. Sensors 2012, 12, 2373–2399. [Google Scholar] [CrossRef] [PubMed]

- McLoughlin, I.V.; Zhang, H.; Xie, Z.; Song, Y.; Xiao, W. Robust sound event classification using deep neural networks. IEEE/ACM Trans. Audio. Speech. Lang. Process. 2015, 23, 540–552. [Google Scholar] [CrossRef]

- Noh, K.; Chang, J.-H. Joint optimization of deep neural network-based dereverberation and beamforming for sound event detection in multi-channel environments. Sensors 2020, 20, 1883. [Google Scholar] [CrossRef]

- Hsu, C.W.; Lin, C.J. A comparison of methods for multi-class support vector machines. IEEE Trans. Neural Netw. 2002, 13, 415–425. [Google Scholar]

- Martin-Morato, I.; Cobos, M.; Ferri, F.J. A case study on feature sensitivity for audio event classification using support vector machines. In Proceedings of the 2016 IEEE 26th International Workshop on Machine Learning for Signal Processing (MLSP), Salerno, Italy, 13–16 September 2016; pp. 1–6. [Google Scholar]

- Candès, E.J.; Romberg, J.K.; Tao, T. Stable signal recovery from incomplete and inaccurate measurements. Commun. Pure Appl. Math. 2006, 59, 1207–1223. [Google Scholar] [CrossRef]

- Selesnick, I. Resonance-based signal decomposition: A new sparsity-enabled signal analysis method. Signal Process. 2011, 91, 2793–2809. [Google Scholar] [CrossRef]

- Al-Tmeme, A.; Woo, W.L.; Dlay, S.; Gao, B. Single channel informed signal separation using artificial-stereophonic mixtures and exemplar-guided matrix factor deconvolution. Int. J. Adapt. Control. Signal Process. 2018, 32, 1259–1281. [Google Scholar] [CrossRef]

- Gao, B.; Woo, W.L.; Dlay, S.S. Single channel blind source separation using EMD-subband variable regularized sparse features. IEEE Trans. Audio. Speech Lang. Process. 2011, 19, 961–976. [Google Scholar] [CrossRef]

- Bertsekas, D.P. Nonlinear Programming, 2nd ed.; Athena Scientific: Belmont, MA, USA, 1999. [Google Scholar]

- Kameoka, H.; Ono, N.; Kashino, K.; Sagayama, S. Complex NMF: A new sparse representation for acoustic signals. In Proceedings of the 2009 IEEE International Conference on Acoustics, Speech and Signal Processing, Taipei, Taiwan, 19–24 April 2009; pp. 3437–3440. [Google Scholar] [CrossRef]

- Parathai, P.; Woo, W.L.; Dlay, S.; Gao, B. Single-channel blind separation using L1-sparse complex non-negative matrix factorization for acoustic signals. J. Acoust. Soc. Am. 2015, 137, 124–129. [Google Scholar] [CrossRef] [PubMed]

- Zdunek, R.; Cichocki, A. Nonnegative matrix factorization with constrained second-order optimization. Signal Process. 2007, 87, 1904–1916. [Google Scholar] [CrossRef]

- Yu, K.; Woo, W.L.; Dlay, S. Variational regularized two-dimensional nonnegative matrix factorization. IEEE Trans. Neural Netw. Learn. Syst. 2012, 23, 703–716. [Google Scholar]

- Gao, B.; Woo, W.L.; Dlay, S. Adaptive sparsity non-negative matrix factorization for single-channel source separation. IEEE J. Sel. Top. Signal Process. 2011, 5, 989–1001. [Google Scholar] [CrossRef]

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).