MeshLifter: Weakly Supervised Approach for 3D Human Mesh Reconstruction from a Single 2D Pose Based on Loop Structure

Abstract

1. Introduction

2. Related Work

2.1. Optimization-Based SMPL Parameter Fitting

2.2. Deep-Learning-Based SMPL Parameter Regression

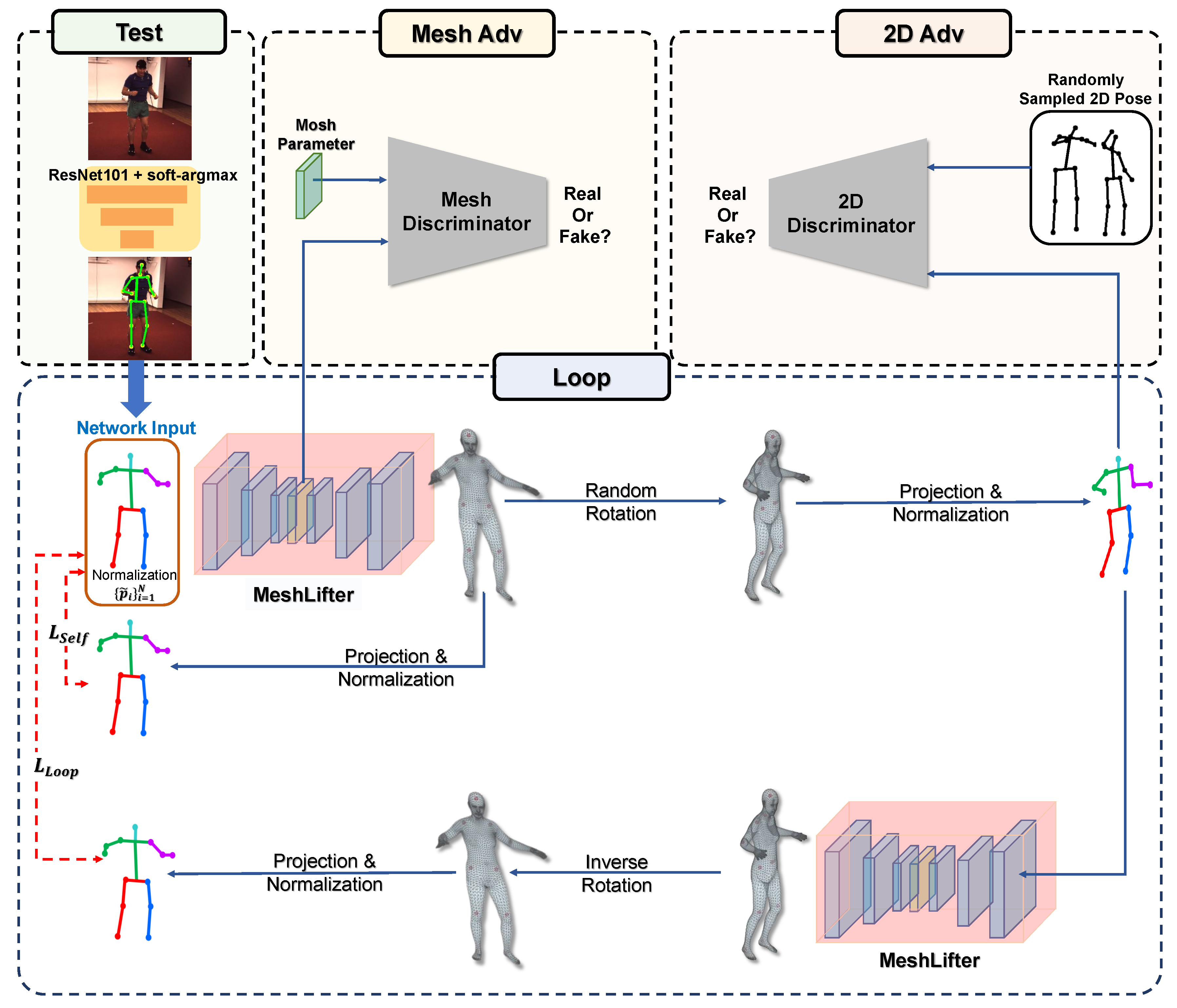

3. Proposed Method

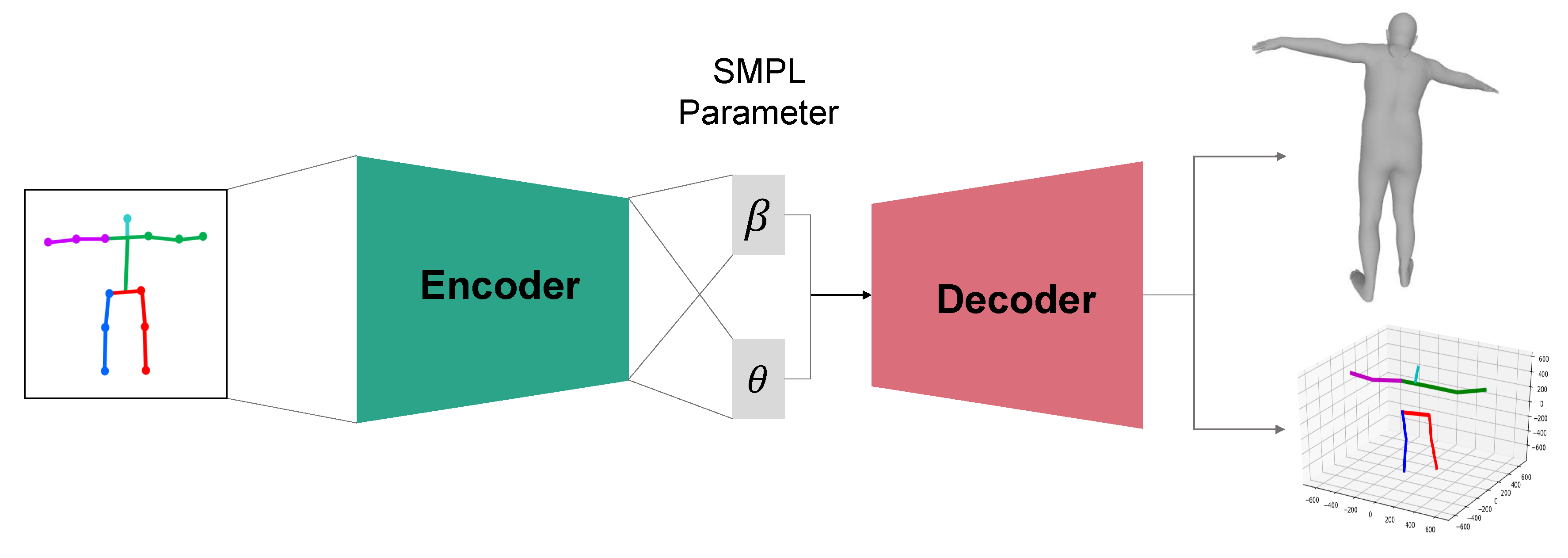

3.1. MeshLifter

3.1.1. SMPL

3.1.2. Encoder

3.1.3. Decoder

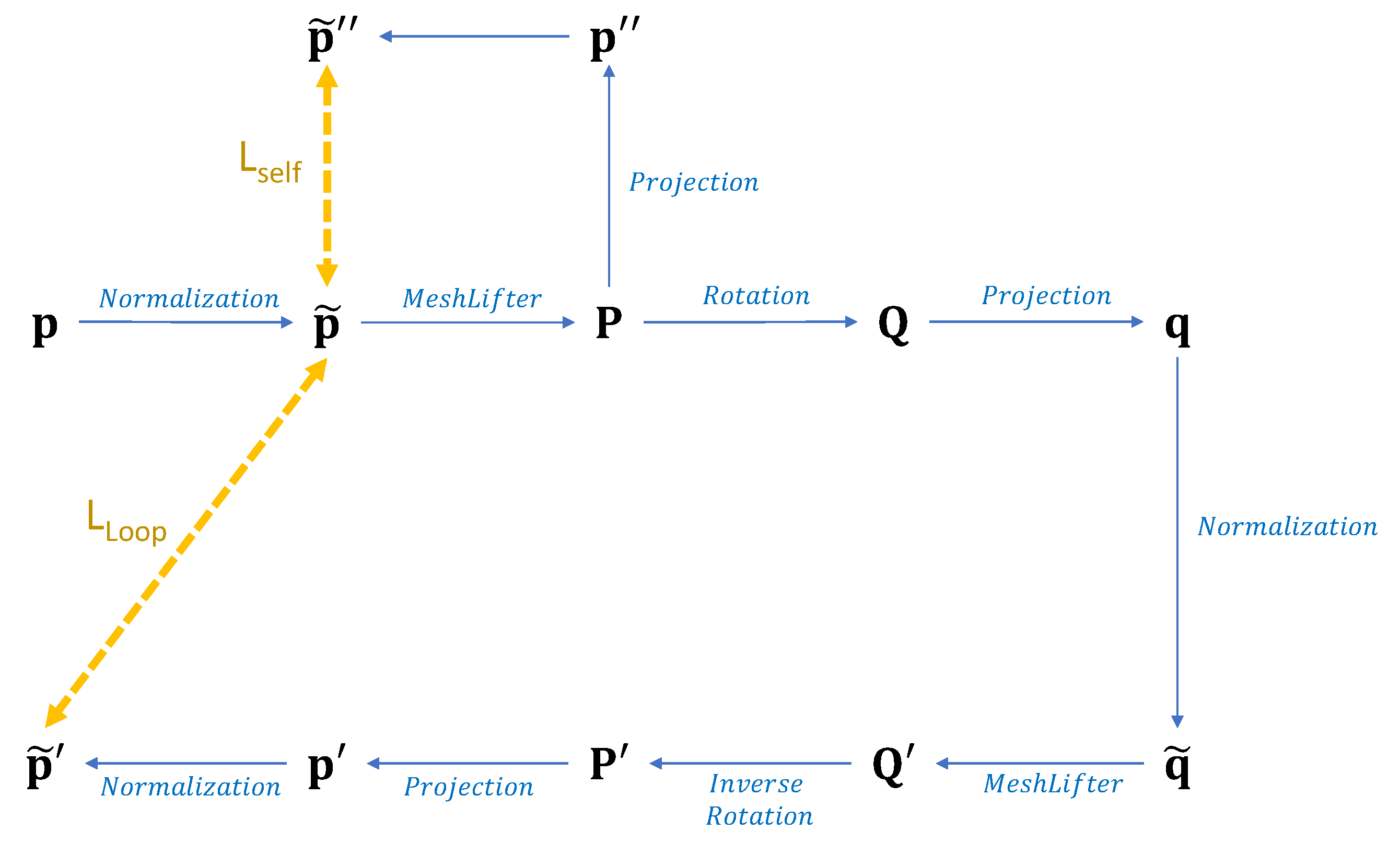

3.2. Weakly Supervised Learning Based on Loop Structure

3.3. Adversarial Training

3.3.1. Mesh Adversarial Training

3.3.2. 2D Pose Adversarial Training

3.4. Regularization

4. Experiments

4.1. Datasets

4.2. Evaluation Metrics

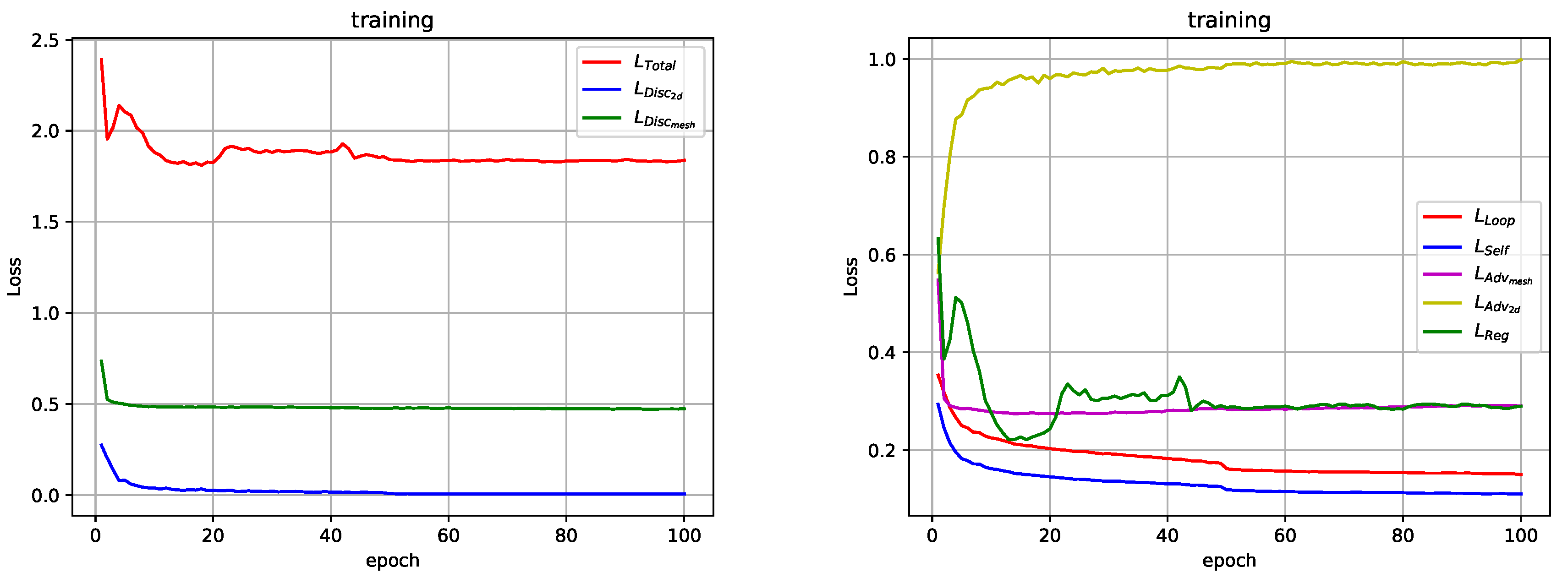

4.3. Implementation Details

4.4. Ablation Study

4.5. Quantitative Result

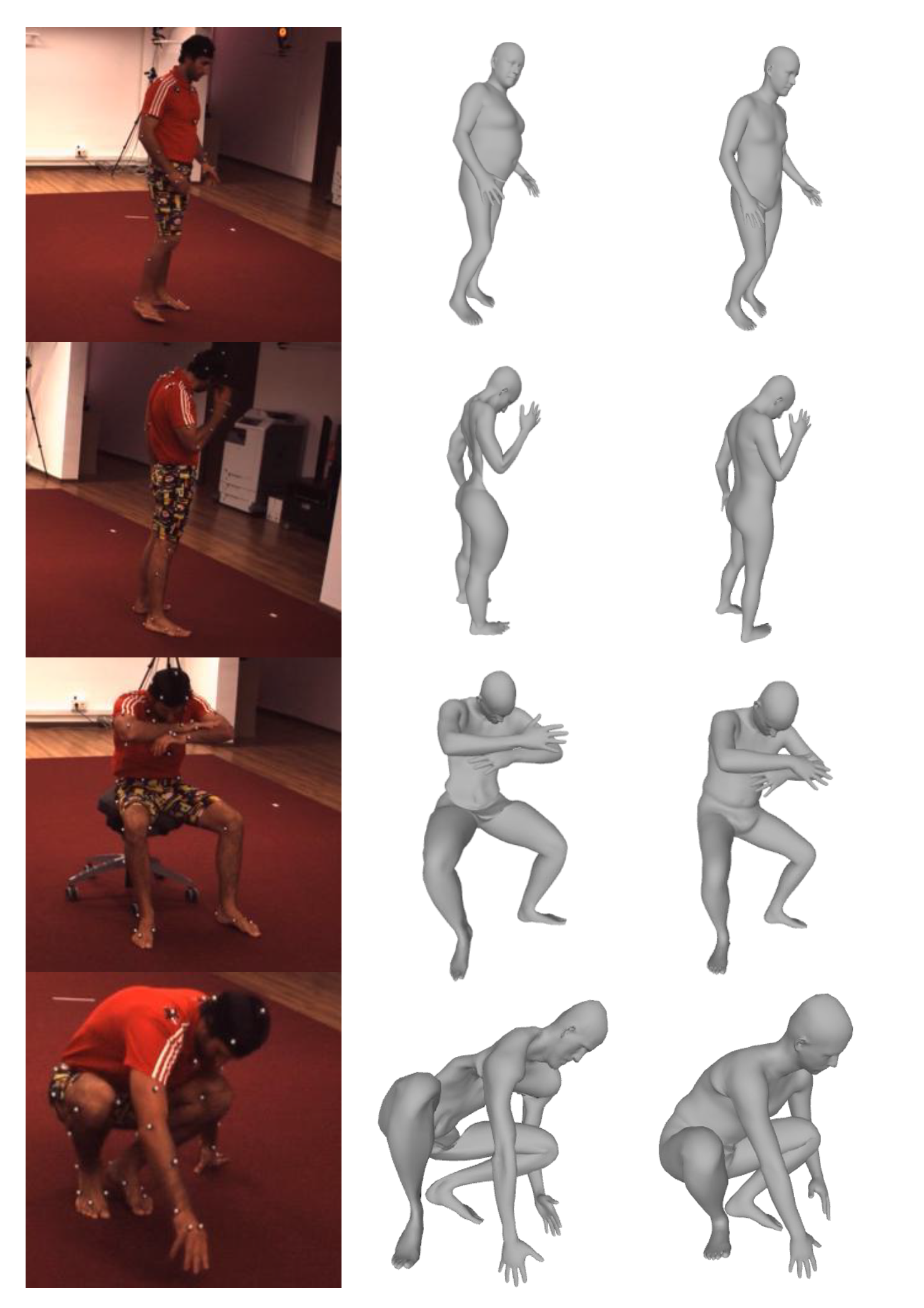

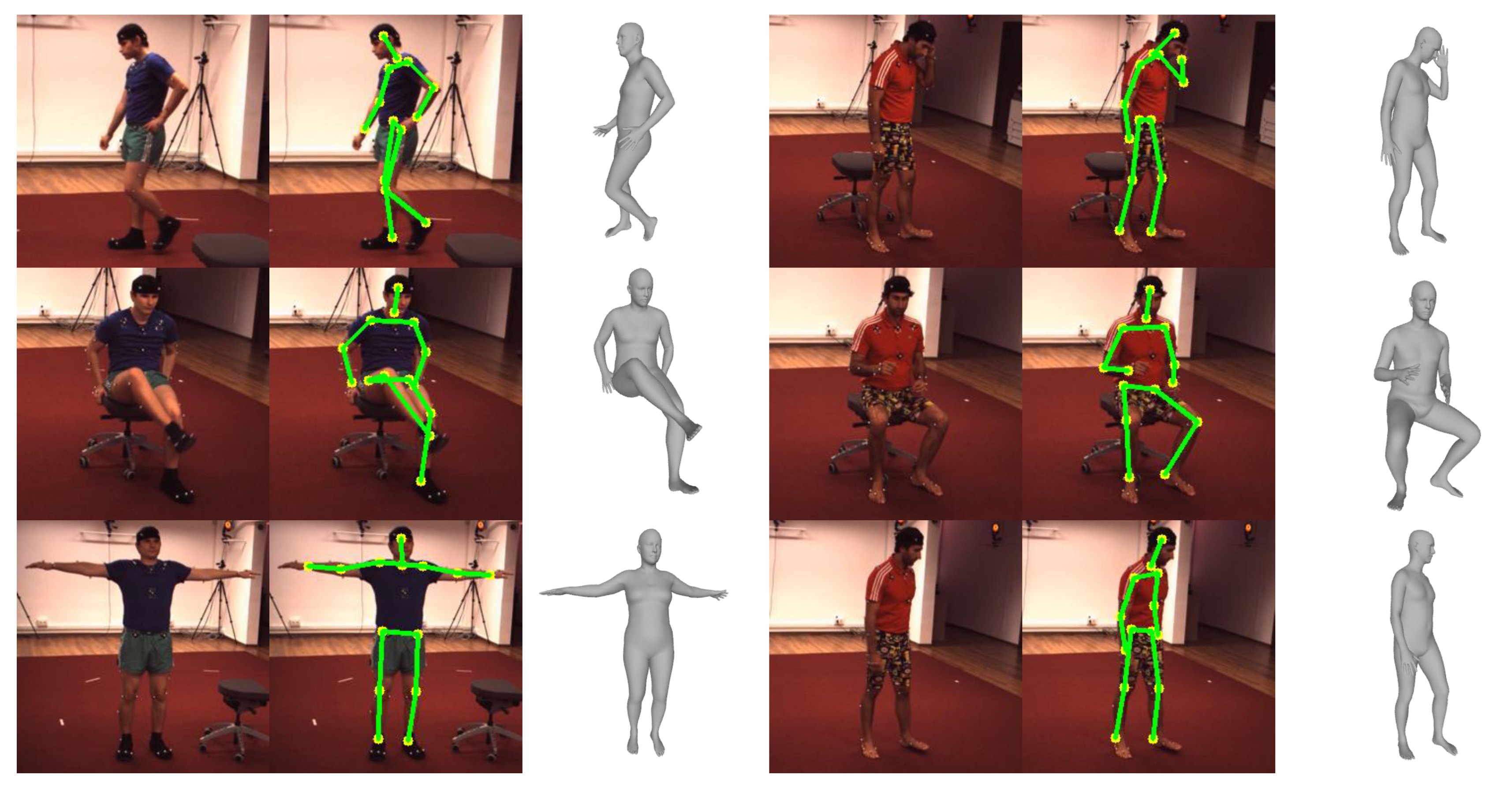

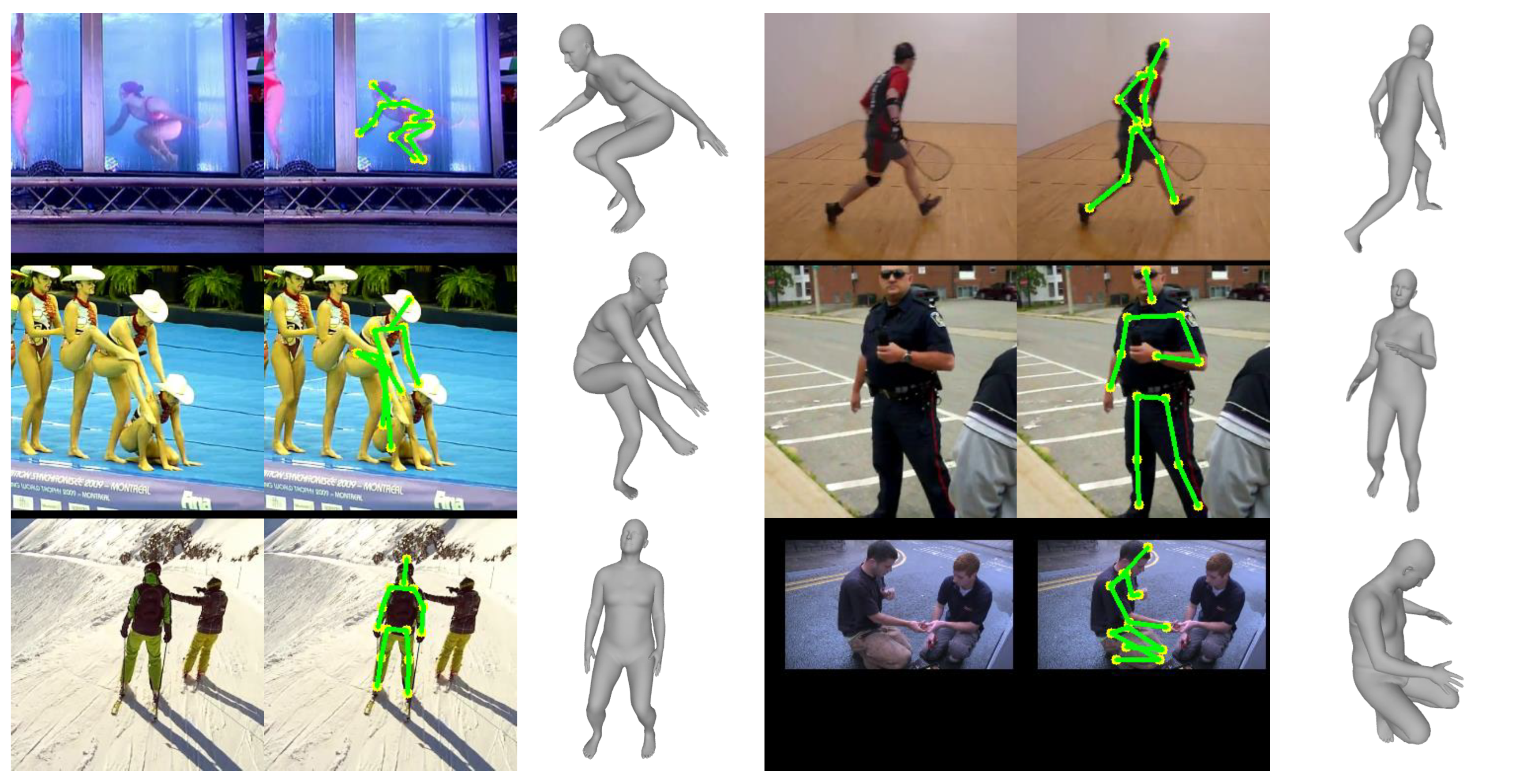

4.6. Qualitative Result

4.7. 3D Hand Mesh Reconstruction

4.8. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Chen, Y.; Tian, Y.; He, M. Monocular human pose estimation: A survey of deep learning-based methods. Comput. Vis. Image Underst. 2020, 192, 102897.

2. Zheng, X.; Chen, X.; Lu, X. A joint relationship aware neural network for single-image 3D human pose estimation. IEEE Trans. Image Process. 2020, 29, 4747–4758.

3. Loper, M.; Mahmood, N.; Black, M.J. SMPL: A skinned multi-person linear model. ACM Trans. Graph. TOG 2015, 34, 1–16.

4. Chen, C.-H.; Tyagi, A.; Agrawal, A.; Drover, D.; Stojanov, S.; Rehg, J.M. Unsupervised 3D pose estimation with geometric self-supervision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019.

5. Ionescu, C.; Papava, D.; Olaru, V.; Sminchisescu, C. Human3.6M: Large scale datasets and predictive methods for 3D human sensing in natural environments. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1325–1339.

6. Mehta, D.; Rhodin, H.; Casas, D.; Fua, P.; Sotnychenko, O.; Xu, W.; Theobalt, C. Monocular 3D human pose estimation in the wild using improved CNN supervision. In Proceedings of the International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017.

7. Andriluka, M.; Pishchulin, L.; Gehler, P.; Schiele, B. 2D human pose estimation: New benchmark and state of the art analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 3686–3693.

8. Bogo, F.; Kanazawa, A.; Lassner, C.; Gehler, P.; Romero, J.; Black, M.J. Keep it SMPL: Automatic estimation of 3D human pose and shape from a single image. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016.

9. Lassner, C.; Romero, J.; Kiefel, M.; Bogo, F.; Black, M.J.; Gehler, P.V. Unite the people: Closing the loop between 3D and 2D human representations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

10. Kanazawa, A.; Black, M.J.; Jacobs, D.W.; Malik, J. End-to-end recovery of human shape and pose. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

11. Kolotouros, N.; Pavlakos, G.; Daniilidis, K. Convolutional mesh regression for single-image human shape reconstruction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019.

12. Li, R.; Cai, C.; Georgakis, G.; Karanam, S.; Chen, T.; Wu, Z. Towards robust RGB-D human mesh recovery. arXiv 2019, arXiv:1911.07383.

13. Kolotouros, N.; Pavlakos, G.; Black, M.J.; Daniilidis, K. Learning to reconstruct 3D human pose and shape via model-fitting in the loop. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 2252–2261.

14. Loper, M.; Mahmood, N.; Black, M.J. MoSh: Motion and shape capture from sparse markers. ACM Trans. Graph. TOG 2014, 33, 1–13.

15. Gower, J.C. Generalized Procrustes analysis. Psychometrika 1975, 40, 33–51.

16. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An imperative style, high-performance deep learning library. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 8024–8035.

17. Nair, V.; Hinton, G.E. Rectified linear units improve restricted Boltzmann machines. In Proceedings of the International Conference on Machine Learning (ICML), Haifa, Israel, 21–24 June 2010; pp. 807–814.

18. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

19. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

20. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

21. Sun, X.; Xiao, B.; Wei, F.; Liang, S.; Wei, Y. Integral human pose regression. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 529–545.

22. Pavlakos, G.; Zhu, L.; Zhou, X.; Daniilidis, K. Learning to estimate 3D human pose and shape from a single color image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 459–468.

23. Mehta, D.; Sridhar, S.; Sotnychenko, O.; Rhodin, H.; Shafiei, M.; Seidel, H.P.; Xu, W.; Casas, D.; Theobalt, C. VNect: Real-time 3D human pose estimation with a single RGB camera. ACM Trans. Graph. TOG 2017, 36, 1–14.

24. Romero, J.; Tzionas, D.; Black, M.J. Embodied hands: Modeling and capturing hands and bodies together. ACM Trans. Graph. TOG 2017, 36, 245.

25. Zimmermann, C.; Brox, T. Learning to estimate 3D hand pose from single RGB images. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4903–4911.

26. Yang, L.; Yao, A. Disentangling latent hands for image synthesis and pose estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 9877–9886.

27. Spurr, A.; Song, J.; Park, S.; Hilliges, O. Cross-modal deep variational hand pose estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 89–98.

28. Yang, L.; Li, S.; Lee, D.; Yao, A. Aligning latent spaces for 3D hand pose estimation. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 2335–2343.

| Method | Optimization | Regression | Paired 2D–3D | 2D Pose Input |
|---|---|---|---|---|
| SMPLify [8] | √ | √ | | |
| UP-3D [9] | √ | | | |
| HMR [10] | √ | | | |
| CMR [11] | √ | √ | | |
| RGB-D [12] | √ | √ | | |
| SPIN [13] | √ | √ | | |
| Ours | √ | √ | | |

| Dataset | Human3.6M [5] | MPI-INF-3DHP [6] | MoSh [14] | MPII [7] |
|---|---|---|---|---|
| Data acquisition | Marker-based motion capture | Marker-less motion capture | Marker-based motion capture | YouTube search |
| 2D image | √ | √ | √ | |
| 2D human pose | √ | √ | √ | |
| 3D human pose | √ | √ | √ | |
| SMPL parameters | √ | | | |
| Number of subjects | 11 | 8 | 39 | 40K |
| Number of examples | 3.6M | 100K | 410K | 40K |
| Purpose of use | Training and evaluation | Training and evaluation | Adversarial training | Qualitative evaluation |

| Dataset | Pixel Error |
|---|---|
| Human3.6M | 3.2 |
| MPII | 6.3 |

| Loss Variations | Reconstruction Error |
|---|---|
| Self (baseline) | 157.0 |
| Self + Loop | 136.1 |
| Self + Loop + Mesh | 83.5 |
| Self + Loop + Mesh + 2D | 58.8 |
| Self + Loop + Mesh + 2D + Reg | 59.1 |
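The contribution of each loss term in the ablation table above is easier to read as a change relative to the previous row and to the baseline. The following is a small illustrative sketch, not part of the authors' code: the function name `stepwise_changes` is hypothetical, the numbers are copied from the table, and no unit is assumed because the table does not state one.

```python
# Reconstruction errors copied from the ablation table above.
# The table does not state a unit, so none is assumed here.
ablation = [
    ("Self (baseline)", 157.0),
    ("Self + Loop", 136.1),
    ("Self + Loop + Mesh", 83.5),
    ("Self + Loop + Mesh + 2D", 58.8),
    ("Self + Loop + Mesh + 2D + Reg", 59.1),
]


def stepwise_changes(rows):
    """Return (label, error, change vs. previous row, change vs. baseline in %).

    Hypothetical helper for illustration only.
    """
    baseline = rows[0][1]
    previous = baseline
    results = []
    for label, error in rows:
        # Absolute change introduced by the most recently added loss term.
        delta = round(error - previous, 1)
        # Cumulative relative change with respect to the first row.
        relative = round(100.0 * (error - baseline) / baseline, 1)
        results.append((label, error, delta, relative))
        previous = error
    return results


if __name__ == "__main__":
    for label, error, delta, relative in stepwise_changes(ablation):
        print(f"{label:<32} {error:6.1f} {delta:+6.1f} {relative:+6.1f}%")
```

Read this way, the largest single drop (52.6) comes from adding the Mesh term, while adding the Reg term changes the error by only 0.3.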

| Method | Reconstruction Error |
|---|---|
| SMPLify [8] | 82.0 |
| Pavlakos et al. [22] | 75.9 |
| HMR-unpaired [10] | 66.5 |
| SPIN-unpaired [13] | 62.0 |
| Ours | 59.1 |

| Method | Reconstruction Error |
|---|---|
| HMR-unpaired [10] | 113.2 |
| VNect [23] | 98.0 |
| Ours | 96.0 |
| SPIN-unpaired [13] | 80.4 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jeong, S.; Chang, J.Y. MeshLifter: Weakly Supervised Approach for 3D Human Mesh Reconstruction from a Single 2D Pose Based on Loop Structure. Sensors 2020, 20, 4257. https://doi.org/10.3390/s20154257
