Monitoring of Assembly Process Using Deep Learning Technology

Abstract

1. Introduction

- (1)

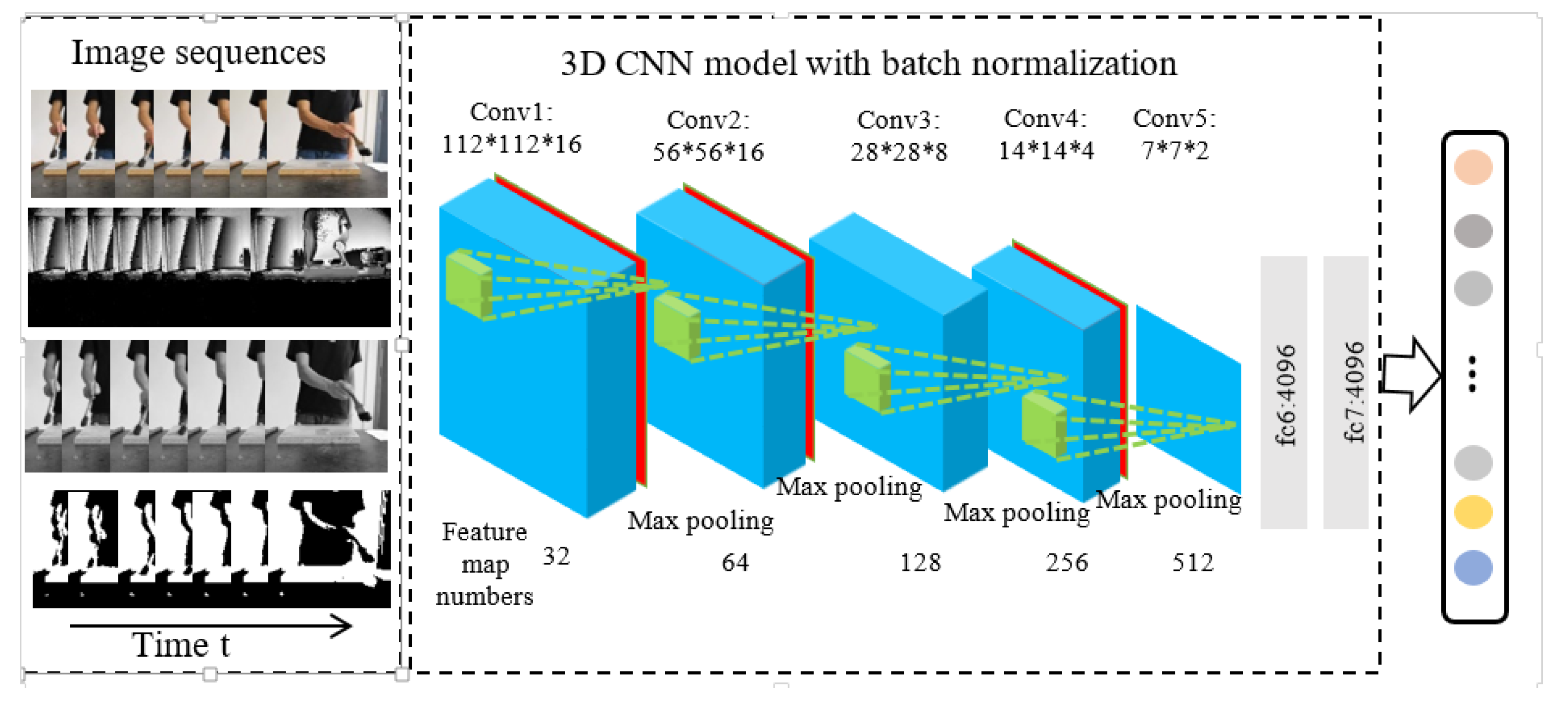

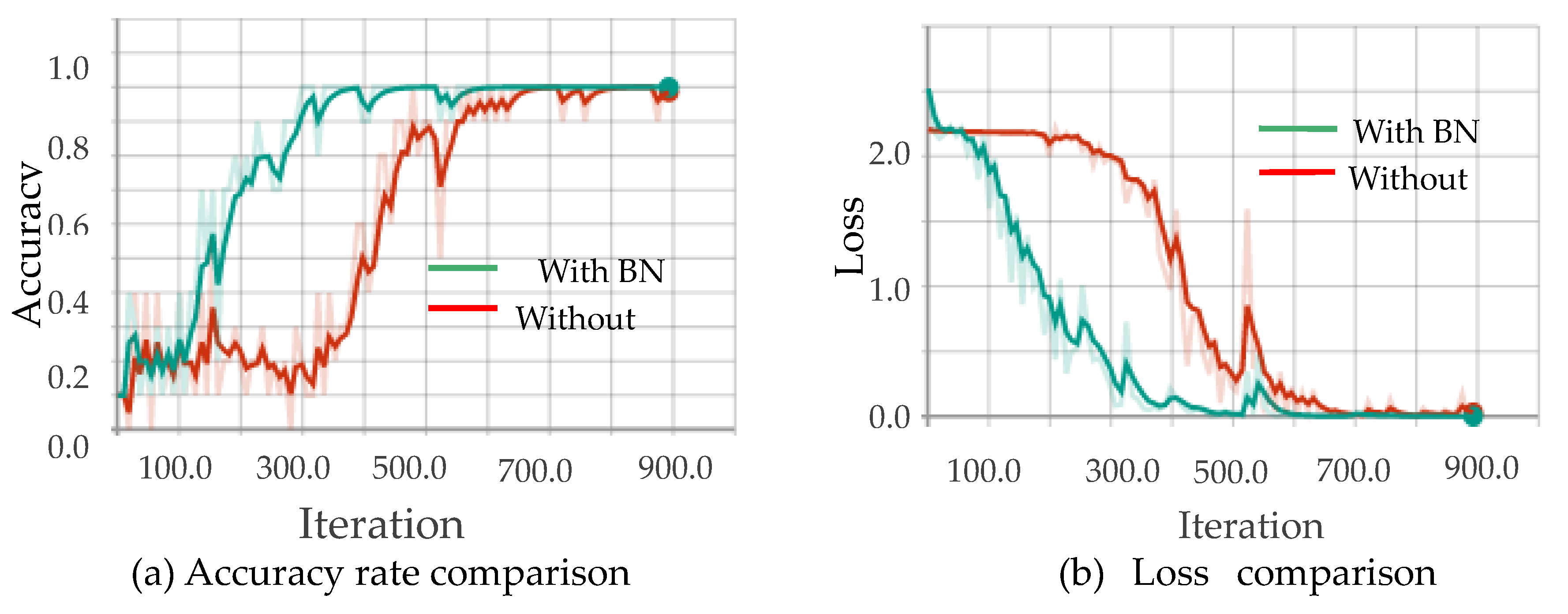

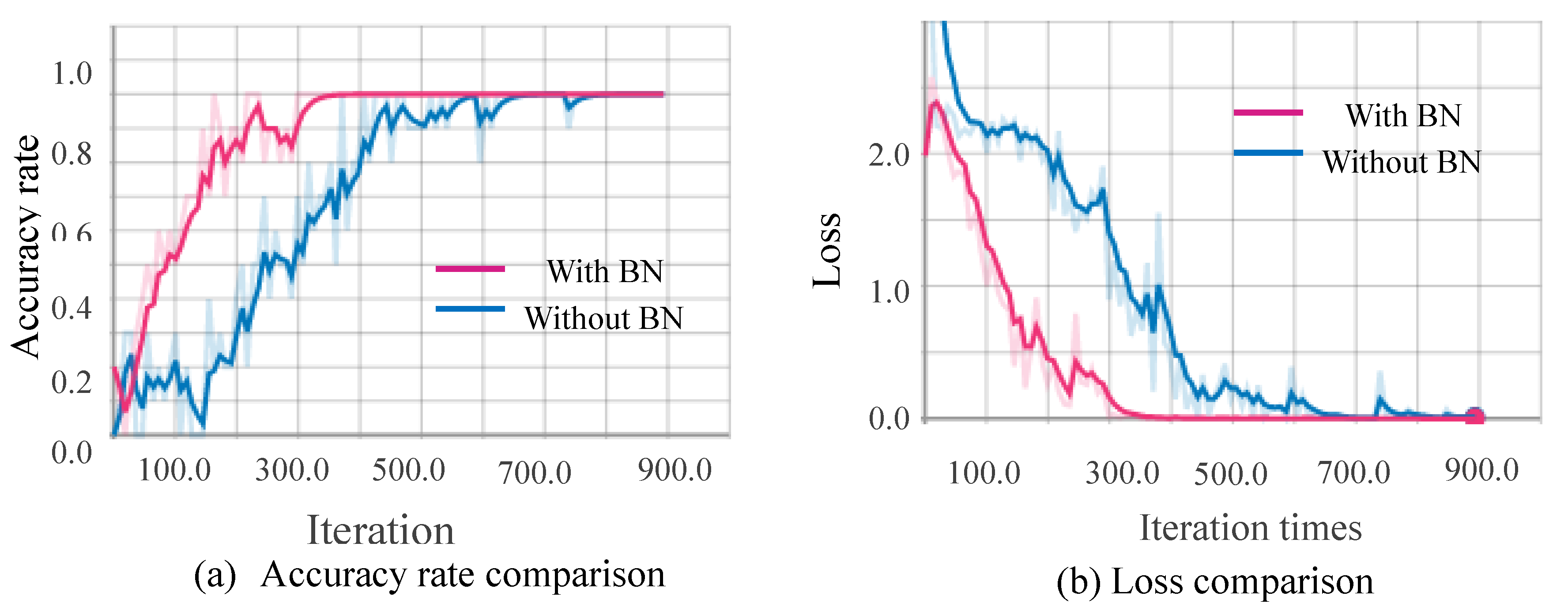

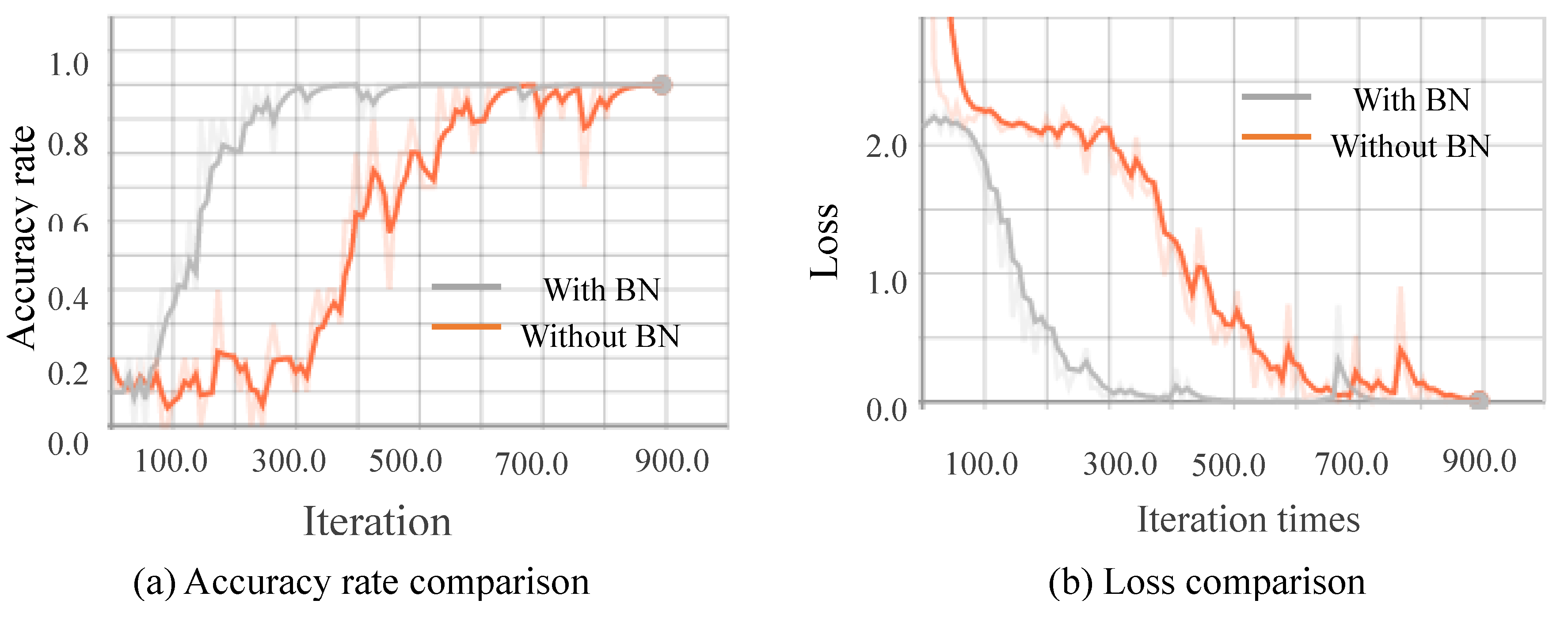

- We propose a three-dimensional convolutional neural network (3D CNN) model with batch normalization to recognize assembly actions. The proposed 3D CNN model with batch normalization can effectively reduce the number of training parameters and improving the convergence speed.

- (2)

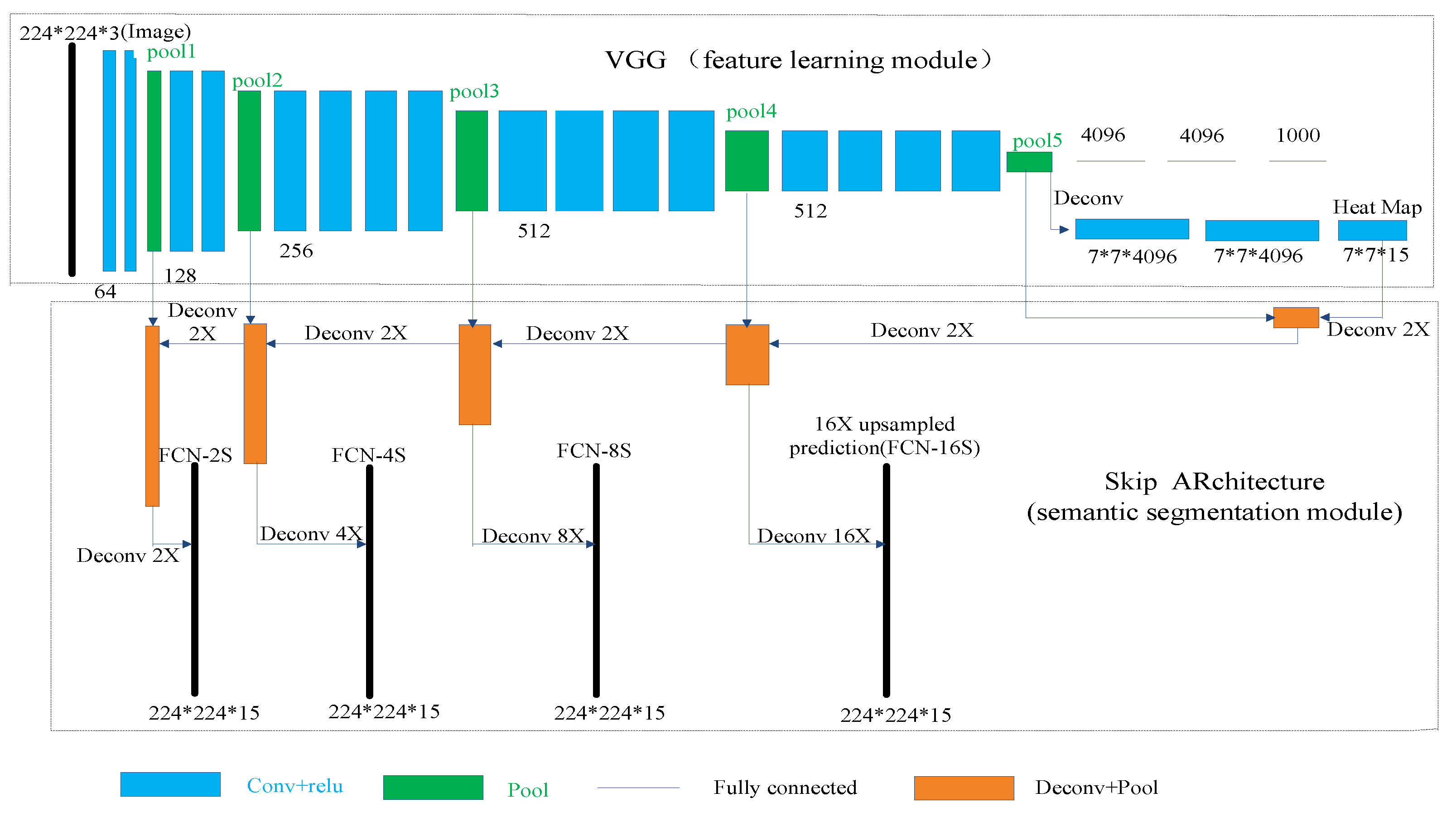

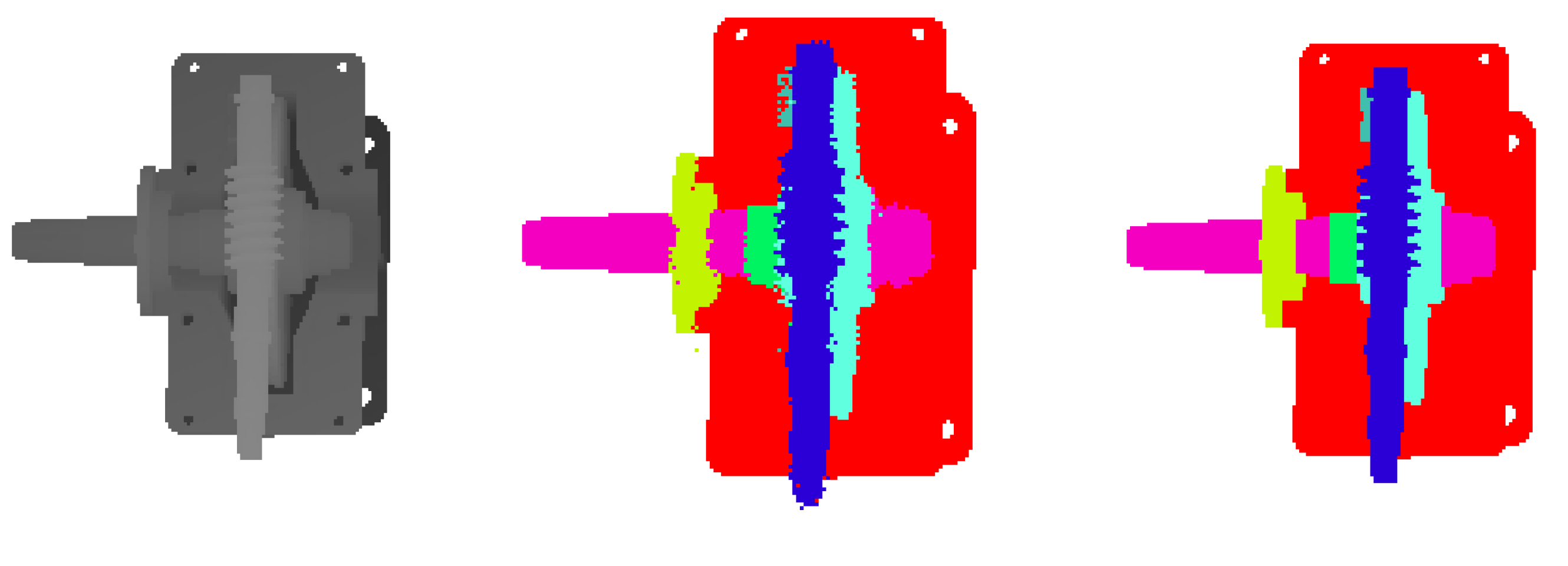

- The fully convolutional networks (FCN) is employed for segmenting different parts from complicated assembled product. After parts segmentation, the recognition of different parts from complicated assembled products is conducted to check the assembly sequence for missing or misaligned parts. As far as we know, we are the first to apply depth image segmentation technology to the application of monitoring of assembly process.
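As a rough illustration of the batch normalization step mentioned in contribution (1), the sketch below normalizes the activations of a single feature channel over a mini-batch and then applies a scale and shift. It is a minimal pure-Python sketch, not the authors' implementation: the function name `batch_norm`, the parameters `gamma`, `beta`, and `eps`, and the sample values are all assumptions made for illustration. In a 3D CNN, the list would hold every activation of one channel across the batch, the frames, and the spatial positions.

```python
import math

def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature channel over a mini-batch, then scale and shift.

    gamma and beta stand in for the learnable scale and shift; eps avoids
    division by zero. All names here are illustrative assumptions.
    """
    n = len(values)
    mean = sum(values) / n
    # Biased (population) variance, as used during training.
    var = sum((v - mean) ** 2 for v in values) / n
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]

# Illustrative activations of one channel (made-up numbers).
activations = [2.0, 4.0, 6.0, 8.0]
normalized = batch_norm(activations)
print(normalized)
```

With the default `gamma` and `beta`, the output has approximately zero mean and unit variance, which is the property that is generally credited with stabilizing and speeding up training.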

2. Related Work

3. Three-Dimensional CNN Model with Batch Normalization

4. FCN for Semantic Segmentation of Assembled Product

5. Creating Data Sets

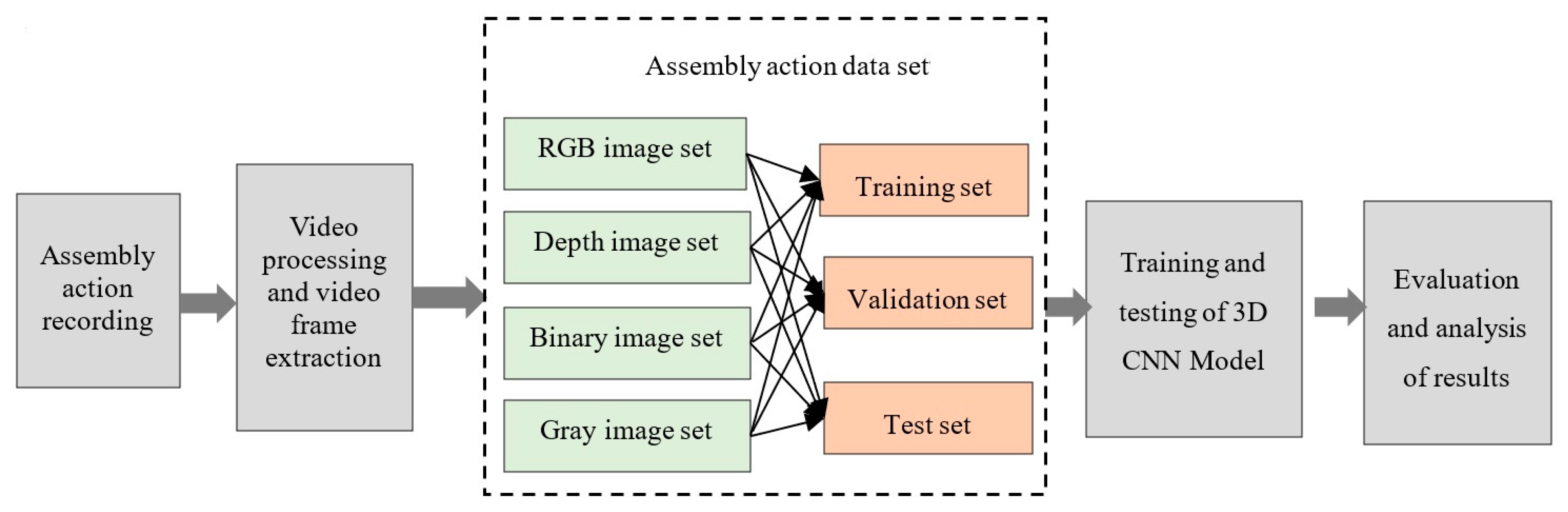

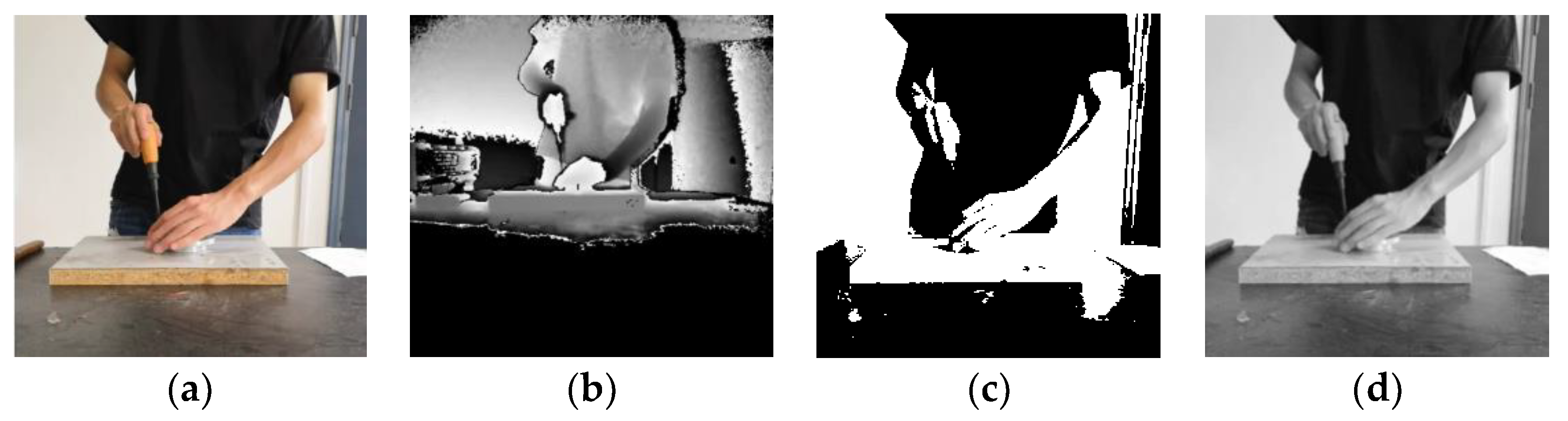

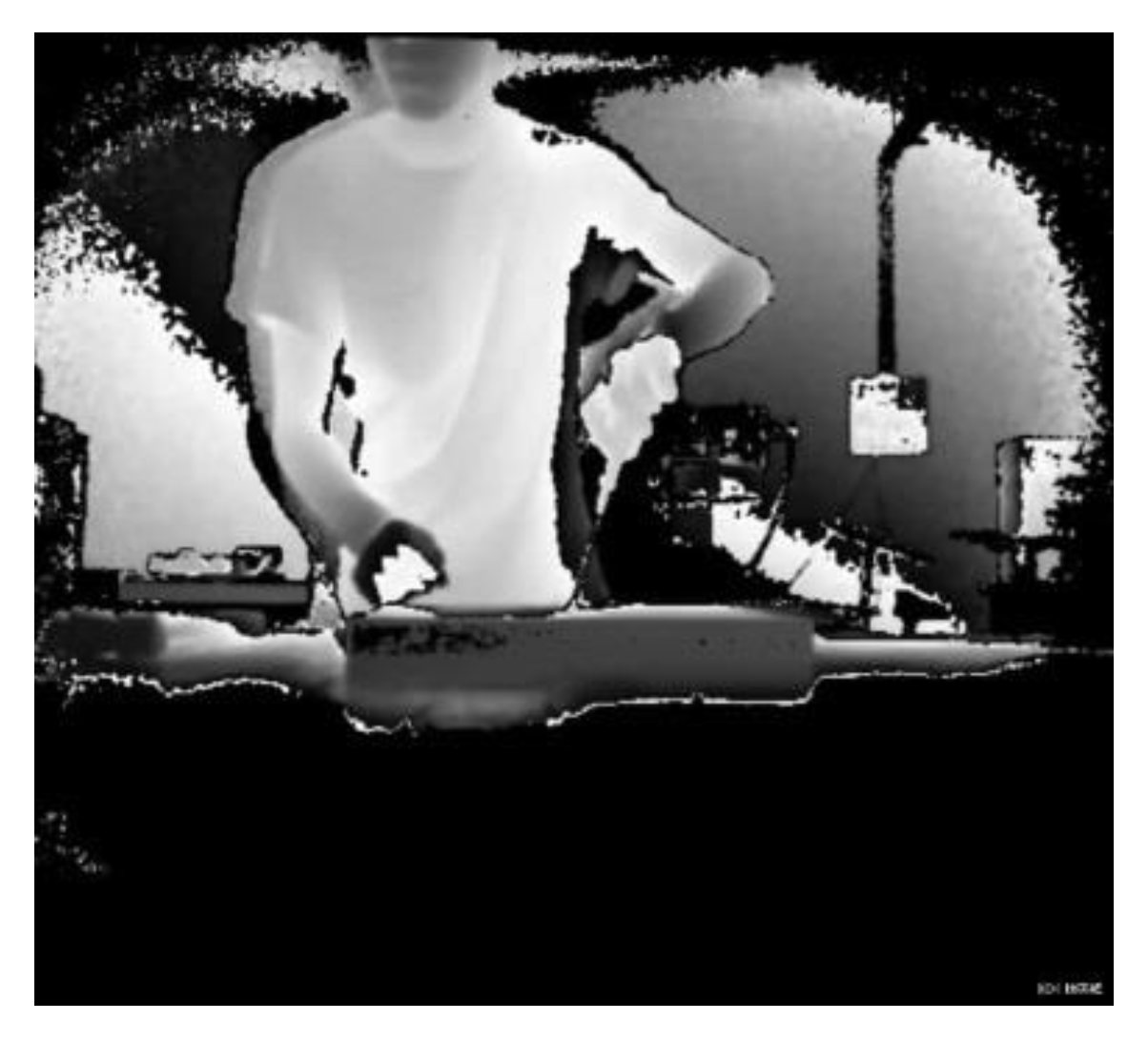

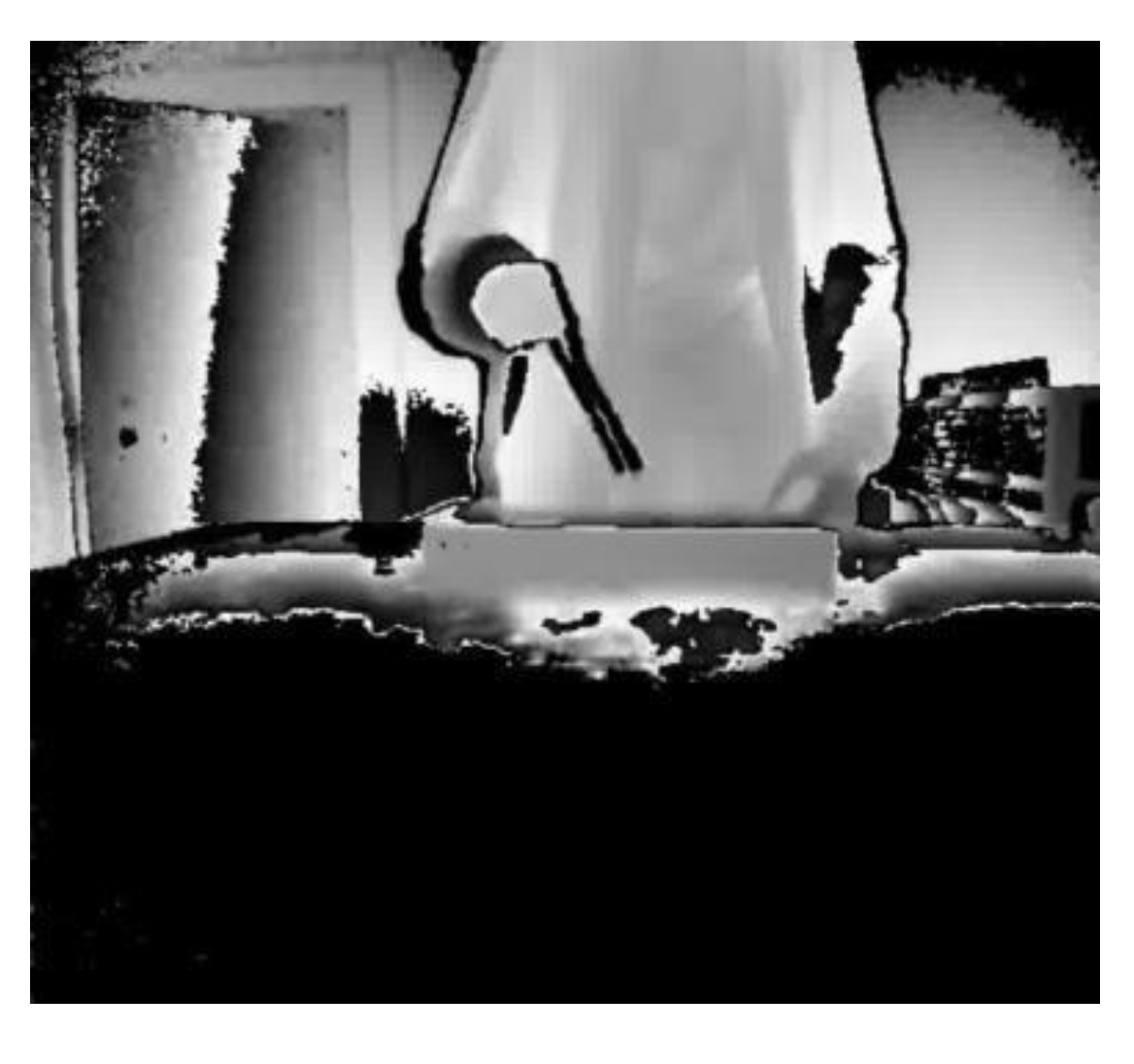

5.1. Creating the Data Set for Assembly Action Recognition

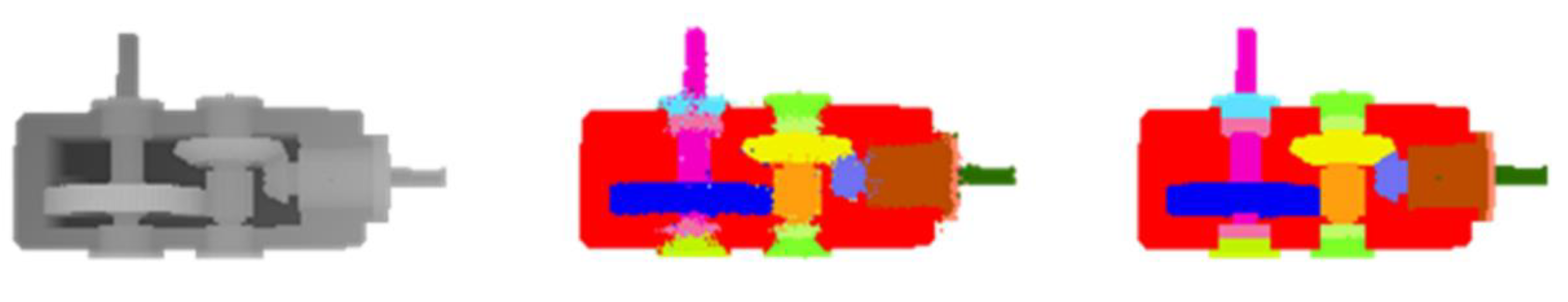

5.2. Creating the Data Set for Image Segmentation of Assembled Products

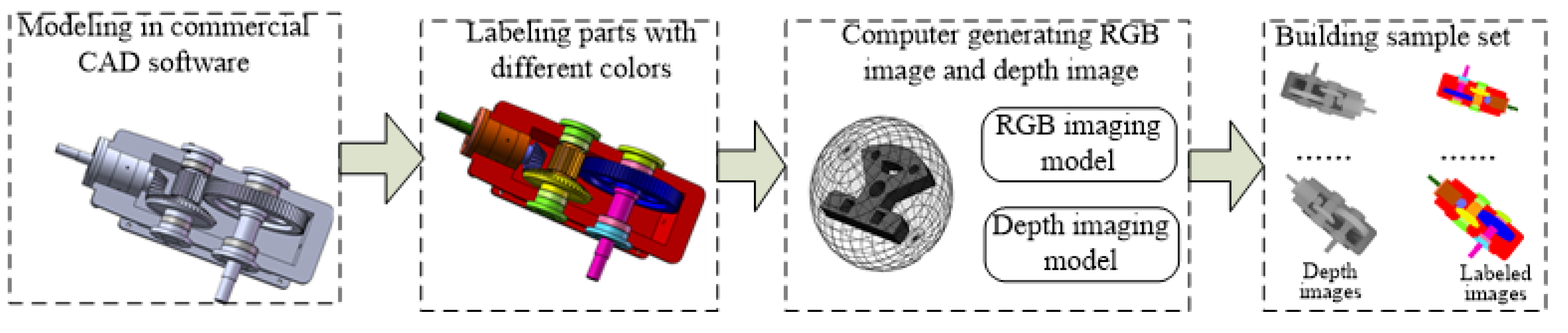

- (1) Commercial CAD software such as SolidWorks is used to build the CAD model of the product, which is saved in obj format.

- (2) MultiGen Creator modeling software is used to load the assembly model in obj format. Each part in the assembly model is labeled with a unique color, so different parts correspond to different RGB values. The assembly models for the different assembly stages are saved in OpenFlight format.

- (3) The Open Scene Graph (OSG) 3D rendering engine is used to build assembly labeling software that loads and renders the assembly model in OpenFlight format and establishes the depth camera and RGB camera imaging models. By changing the viewpoint orientation of the two imaging models, depth images and RGB images of the product at different assembly stages and from different perspectives can be synthesized by computer.
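Because each part is rendered in its own color, a synthesized label image can be turned into per-pixel class indices with a simple color lookup. The sketch below shows one way this could be done. It is an assumption-laden illustration rather than the authors' tool: the palette `PART_COLORS`, the part names in the comments, and the function `color_labels_to_classes` are hypothetical, and the tiny 2 × 2 image is made up.

```python
# Hypothetical palette: the RGB value assigned to each part in the modeling tool.
PART_COLORS = {
    (0, 0, 0): 0,      # background (assumed)
    (255, 0, 0): 1,    # part A (illustrative)
    (0, 255, 0): 2,    # part B (illustrative)
}

def color_labels_to_classes(label_image, palette):
    """Map each RGB pixel of a rendered label image to its part class index.

    label_image is a list of rows, each row a list of (R, G, B) tuples.
    A pixel whose color is not in the palette raises KeyError.
    """
    return [[palette[tuple(pixel)] for pixel in row] for row in label_image]

# A made-up 2 x 2 label image.
label_image = [
    [(0, 0, 0), (255, 0, 0)],
    [(0, 255, 0), (255, 0, 0)],
]
class_map = color_labels_to_classes(label_image, PART_COLORS)
print(class_map)
```

Raising an error on unknown colors is a deliberate choice in this sketch: a color outside the palette would indicate a rendering or labeling problem rather than a valid class.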

6. Experiments and Results Analysis

6.1. Assembly Action Recognition Experiments and Results Analysis

6.1.1. Assembly Action Recognition Experiments

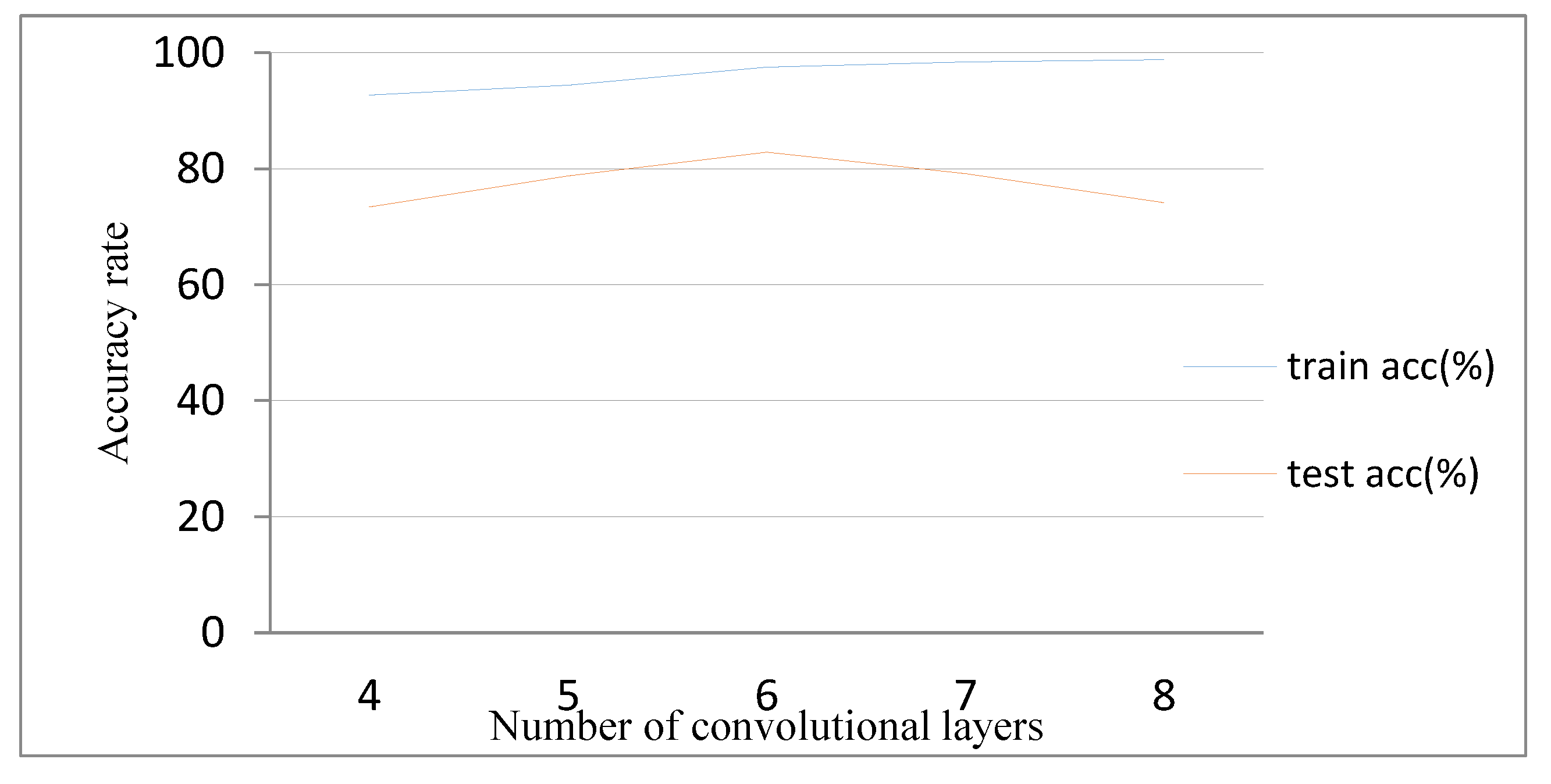

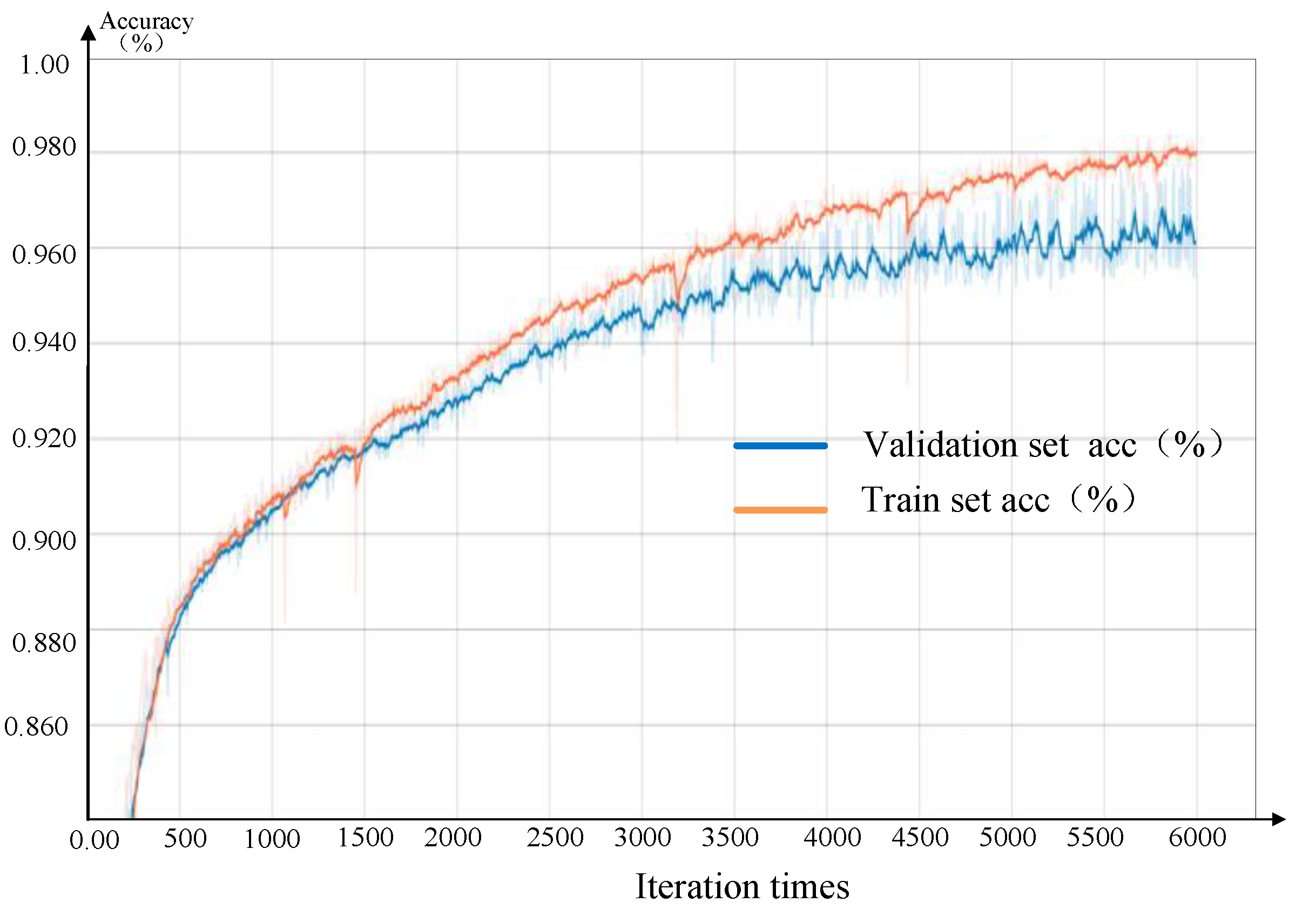

6.1.2. Analysis of Experimental Results

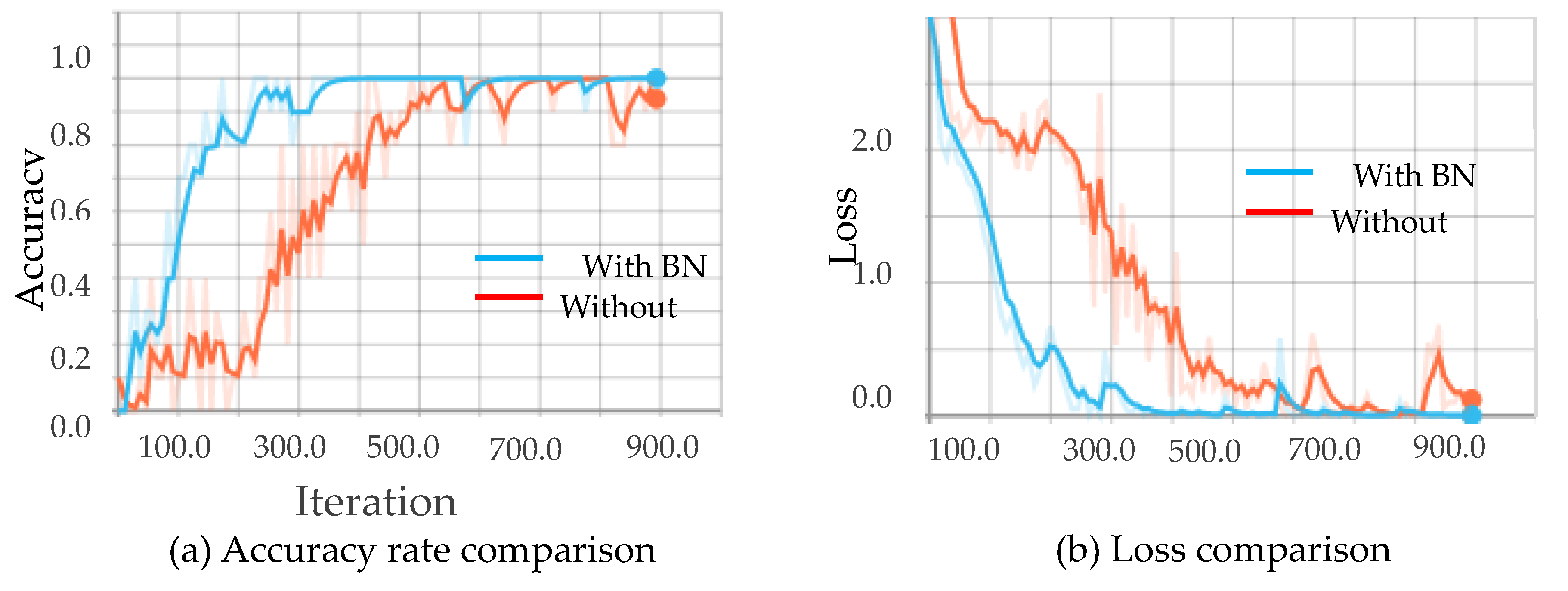

Comparison of Stability and Convergence Speed

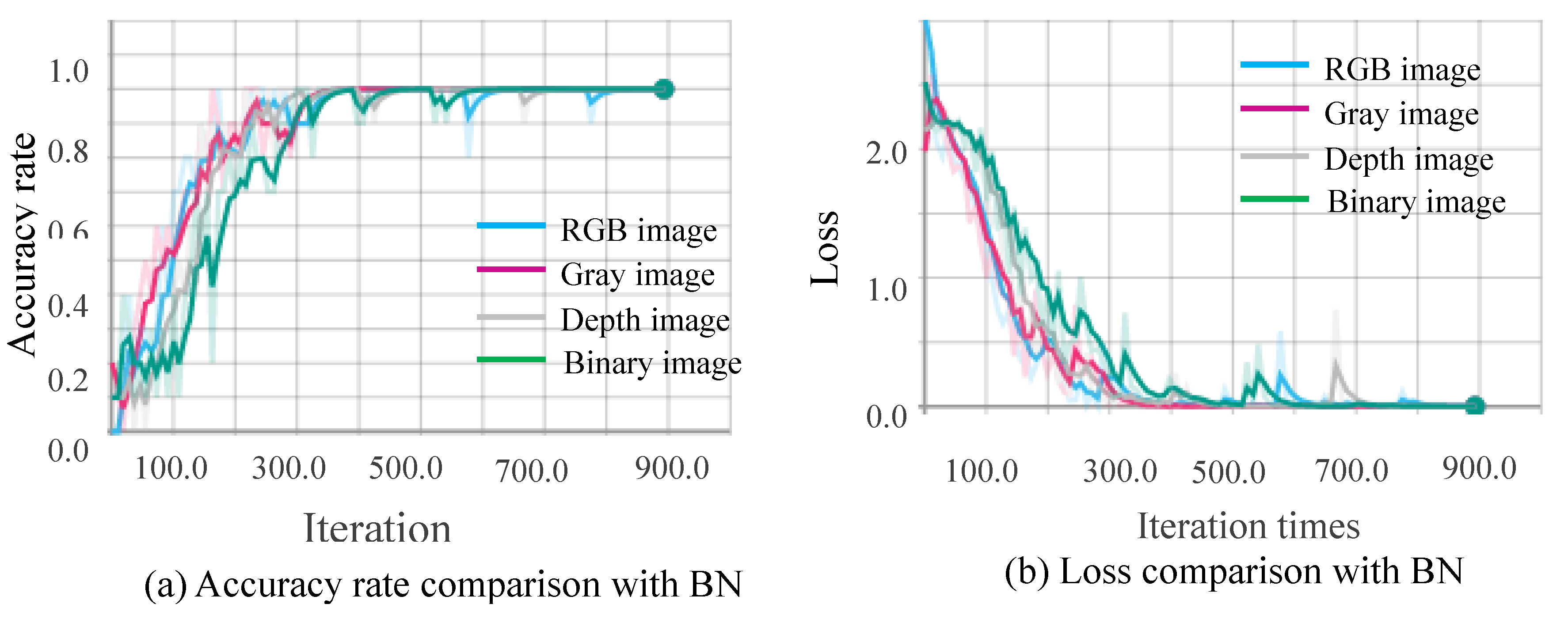

Comparison of Accuracy and Training Time

6.2. Parts Recognition Experiments and Results Analysis

7. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Method | Crop Size | Loss Function | Optimizer | Learning Rate | Batch Size | Decay Rate | Decay Steps |
|---|---|---|---|---|---|---|---|
| 3D CNN | 112 × 112 | Cross_entropy | Adam | 0.0001 | 10 | 0.5 | 2 |
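The table lists a learning rate of 0.0001, a decay rate of 0.5, and decay steps of 2, but it does not state the schedule itself or the unit of a step. One common reading is a staircase exponential decay, in which the learning rate is multiplied by the decay rate every `decay_steps` steps. The sketch below follows that assumption only; the function name `decayed_learning_rate` and the choice of a staircase form are not taken from the source.

```python
def decayed_learning_rate(initial_lr, decay_rate, decay_steps, step):
    """Staircase exponential decay (an assumed schedule, not confirmed by the source).

    The rate is multiplied by decay_rate once every decay_steps steps.
    """
    return initial_lr * decay_rate ** (step // decay_steps)

# Values from the table; what counts as one "step" is an assumption.
schedule = [decayed_learning_rate(1e-4, 0.5, 2, s) for s in range(6)]
print(schedule)
```

Under this reading the rate stays at its initial value for the first two steps and is halved at every second step after that.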

| Data Set Type | RGB Image | Binary Image | Gray Image | Depth Image |
|---|---|---|---|---|
| Accuracy (without BN) | 82.85% | 79.78% | 80.86% | 70% |
| Accuracy (with BN) | 83.70% | 79.88% | 81.89% | 68.75% |
| Training Time (without BN) | 50 m 37 s | 54 m 34 s | 46 m 45 s | 55 m 9 s |
| Training Time (with BN) | 51 m 10 s | 54 m 35 s | 48 m 3 s | 55 m 50 s |

| Method | Image Size | Loss Function | Optimizer | Learning Rate | Batch Size | Number of Parameters |
|---|---|---|---|---|---|---|
| FCN-16S | 224 × 224 | Cross_entropy | Adam | 0.00001 | 1 | 145259614 |
| FCN-8S | 224 × 224 | Cross_entropy | Adam | 0.00001 | 1 | 139558238 |
| FCN-4S | 224 × 224 | Cross_entropy | Adam | 0.00001 | 1 | 140139998 |
| FCN-2S | 224 × 224 | Cross_entropy | Adam | 0.00001 | 1 | 140163614 |

| Method | Data Set | Pixel Classification Accuracy (PA) (Gear Reducer) | Pixel Classification Accuracy (PA) (Worm Gear Reducer) |
|---|---|---|---|
| FCN-16S | validation set | 93.84% | 97.64% |
| FCN-8S | validation set | 96.10% | 97.83% |
| FCN-4S | validation set | 97.72% | 98.59% |
| FCN-2S | validation set | 98.80% | 99.53% |
| FCN-2S | test set | 94.95% | 96.52% |
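Pixel classification accuracy (PA) is commonly defined as the fraction of pixels whose predicted class matches the ground-truth class. The sketch below computes it under that standard definition. The function name `pixel_accuracy` and the small label maps are illustrative assumptions and are not taken from the experiments.

```python
def pixel_accuracy(predicted, ground_truth):
    """Fraction of pixels whose predicted class equals the ground-truth class.

    Both arguments are lists of rows of integer class indices with equal shape.
    """
    correct = 0
    total = 0
    for pred_row, true_row in zip(predicted, ground_truth):
        for p, t in zip(pred_row, true_row):
            correct += int(p == t)
            total += 1
    return correct / total

# Made-up 2 x 3 label maps: one of the six pixels is misclassified.
predicted = [[0, 1, 1], [2, 2, 0]]
ground_truth = [[0, 1, 2], [2, 2, 0]]
pa = pixel_accuracy(predicted, ground_truth)
print(f"PA = {pa:.2%}")
```

For a whole data set, the same counts would usually be accumulated over all images before dividing, so that larger images are not under-weighted.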

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chen, C.; Zhang, C.; Wang, T.; Li, D.; Guo, Y.; Zhao, Z.; Hong, J. Monitoring of Assembly Process Using Deep Learning Technology. Sensors 2020, 20, 4208. https://doi.org/10.3390/s20154208
