A Novel Surface Electromyographic Signal-Based Hand Gesture Prediction Using a Recurrent Neural Network

Abstract

1. Introduction

- A hand gesture dataset of 21 short-term gestures from 13 subjects was recorded with the Myo armband. The dataset and our code are publicly available on GitHub (https://github.com/ChauncyHe/HandGesturePrediction).

- A novel RNN model is proposed that predicts a hand gesture while the gesture is still in progress. Using only the 200 ms of sEMG data recorded after the motion onset of the gesture, the model reaches an accuracy of about 89.6%.
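To make the 200 ms input in the second contribution concrete, the following minimal sketch cuts the samples that follow a motion onset out of one recording. It assumes the 200 Hz sampling rate and 8 channels of the Myo armband reported for this dataset. The function names and the `onset_index` argument are hypothetical; how the onset is detected is not covered here, so it is simply passed in.

```python
SAMPLING_RATE_HZ = 200  # Myo armband sEMG sampling rate reported for the dataset


def samples_in_window(window_ms, fs_hz=SAMPLING_RATE_HZ):
    """Number of sEMG samples that cover window_ms milliseconds."""
    return window_ms * fs_hz // 1000


def prediction_window(recording, onset_index, window_ms=200, fs_hz=SAMPLING_RATE_HZ):
    """Return the samples in the window_ms that follow the motion onset.

    recording is a list of per-sample channel lists; onset_index is assumed
    to come from a separate motion detection step (hypothetical input).
    """
    n = samples_in_window(window_ms, fs_hz)
    end = onset_index + n
    if onset_index < 0 or end > len(recording):
        raise ValueError("window does not fit inside the recording")
    return recording[onset_index:end]


# Synthetic 2 s recording: 400 samples of 8 channels (placeholder values only).
recording = [[float(t)] * 8 for t in range(400)]
window = prediction_window(recording, onset_index=50)
print(len(window), len(window[0]))  # 40 8
```

At 200 Hz, a 200 ms window corresponds to 40 time steps of 8 channels, which is the input size a recurrent model would consume under these assumptions.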

2. Materials and Methods

2.1. sEMG Dataset

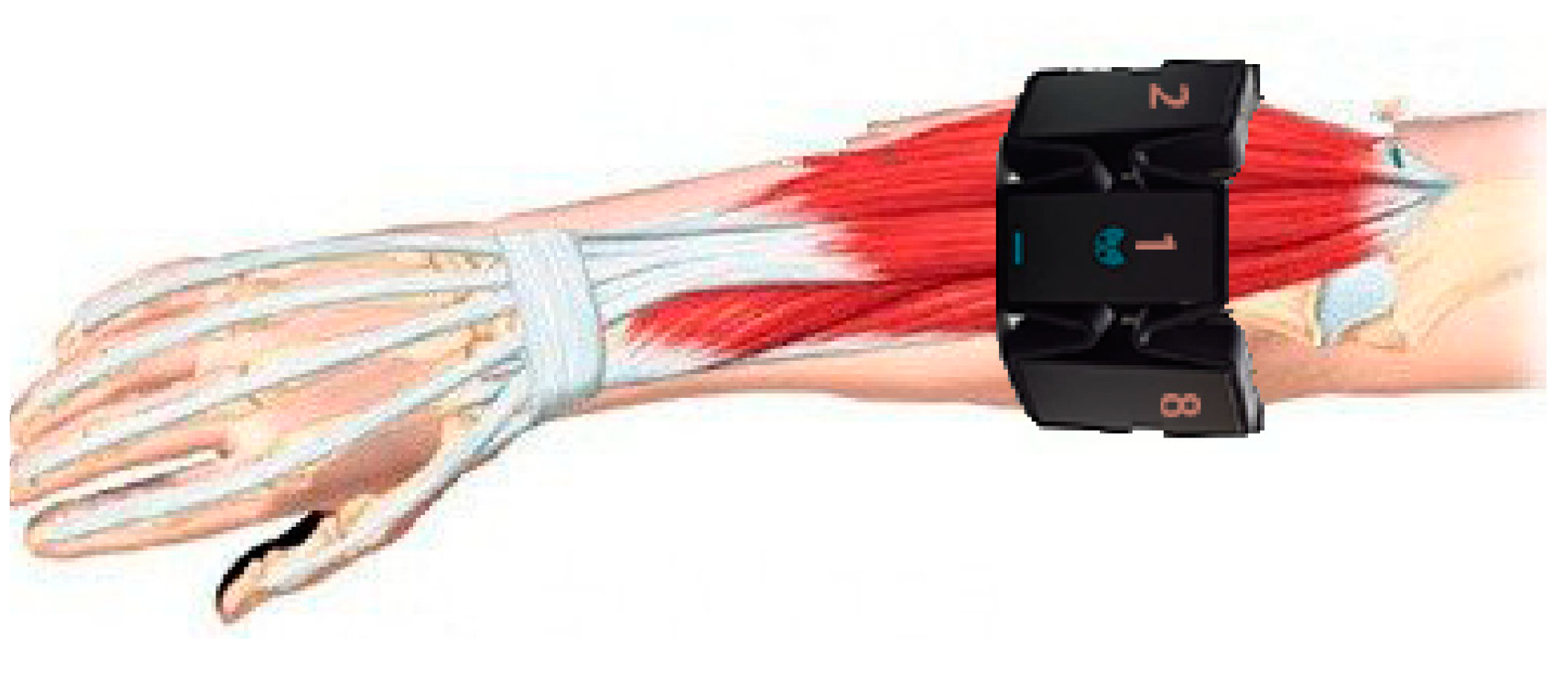

2.1.1. Recording Device

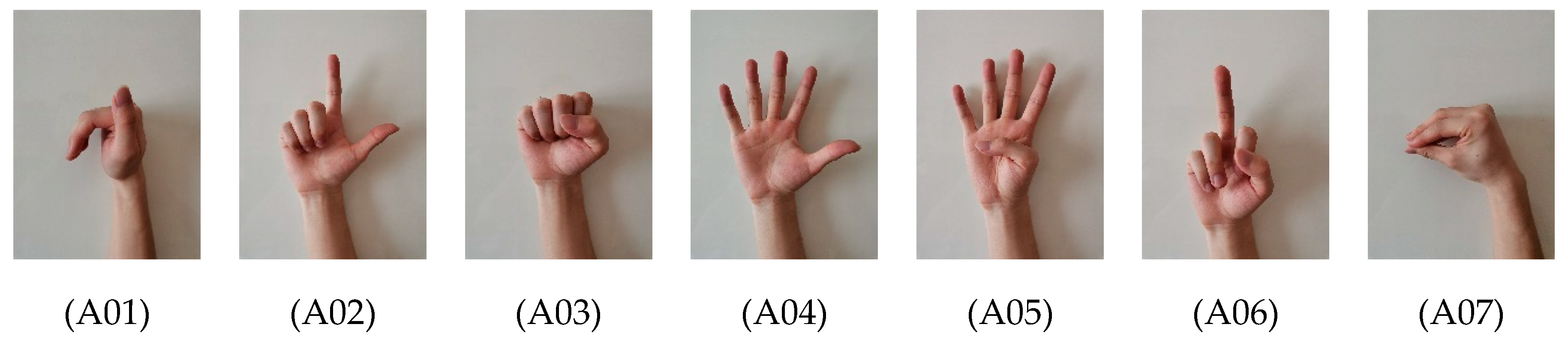

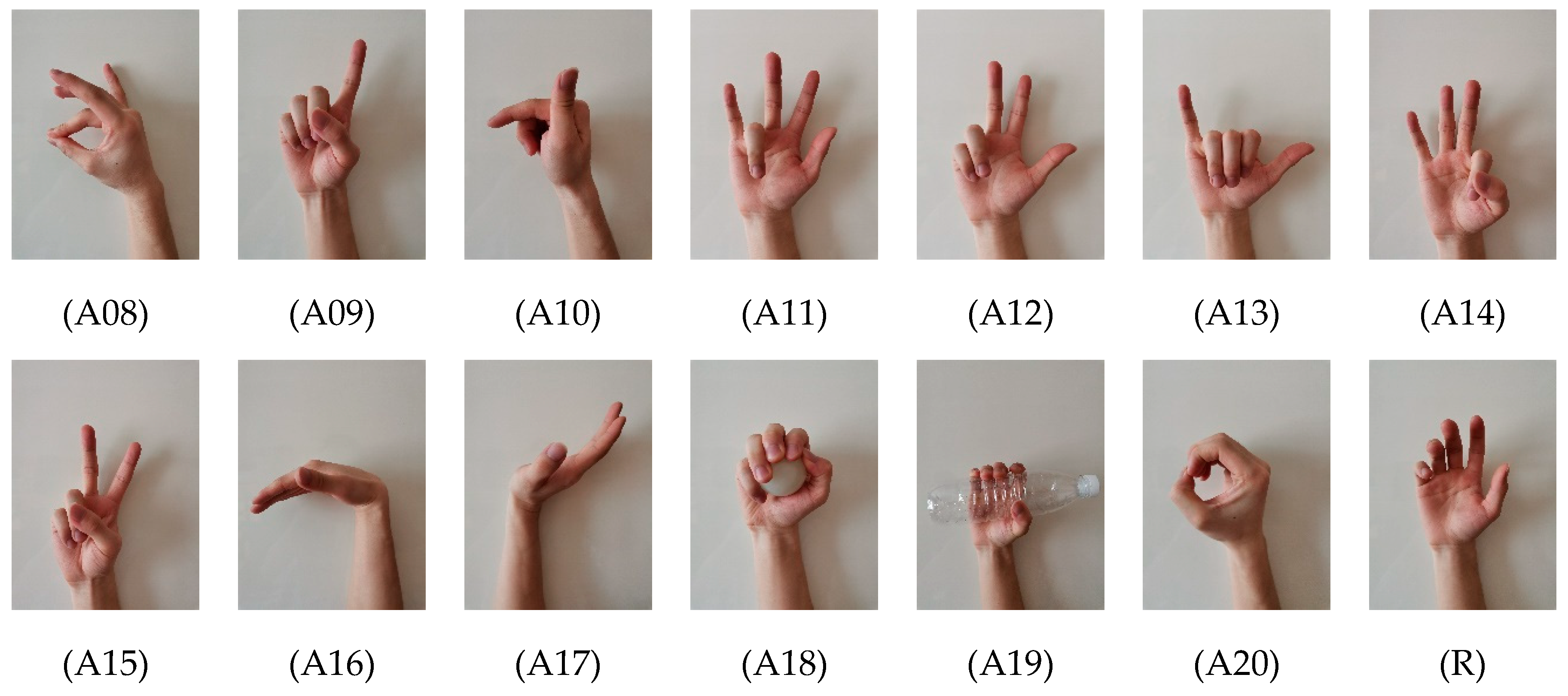

2.1.2. Hand Gestures

2.1.3. Acquisition Protocol

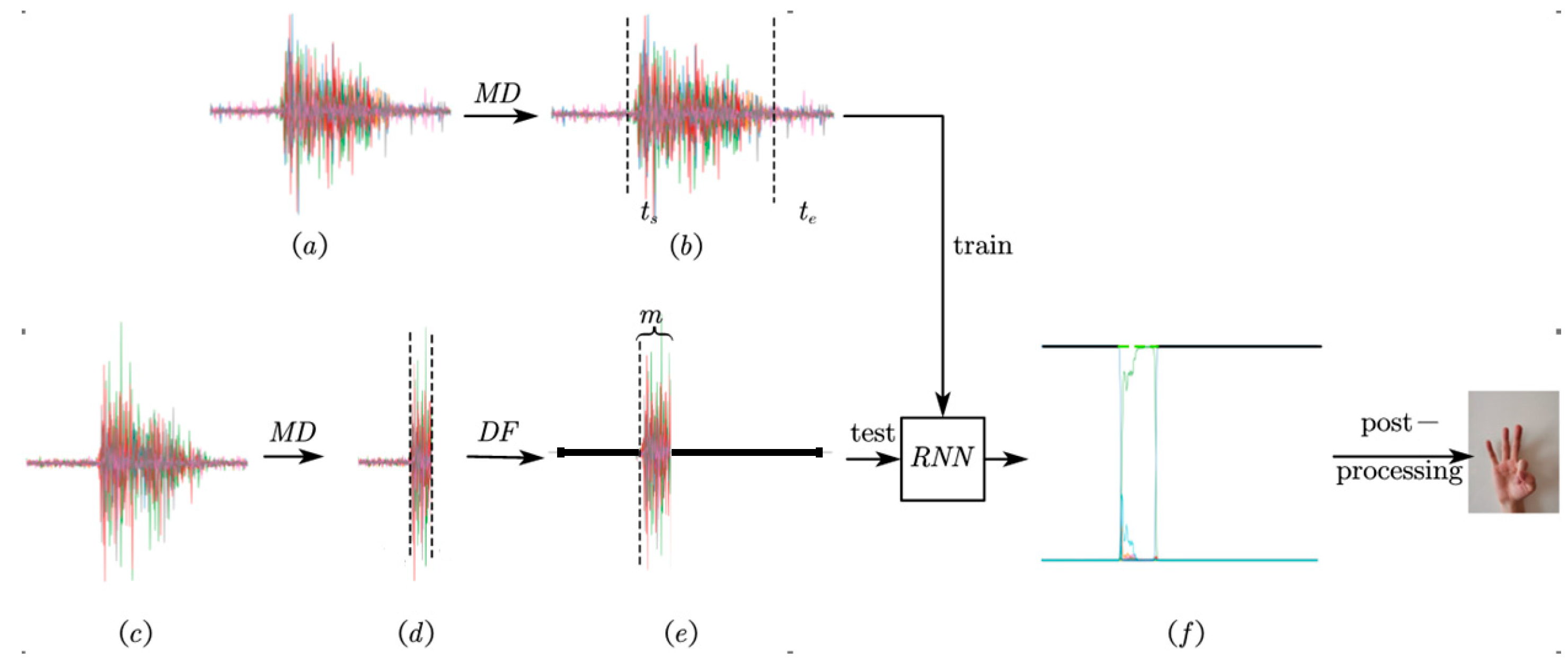

2.2. Methods

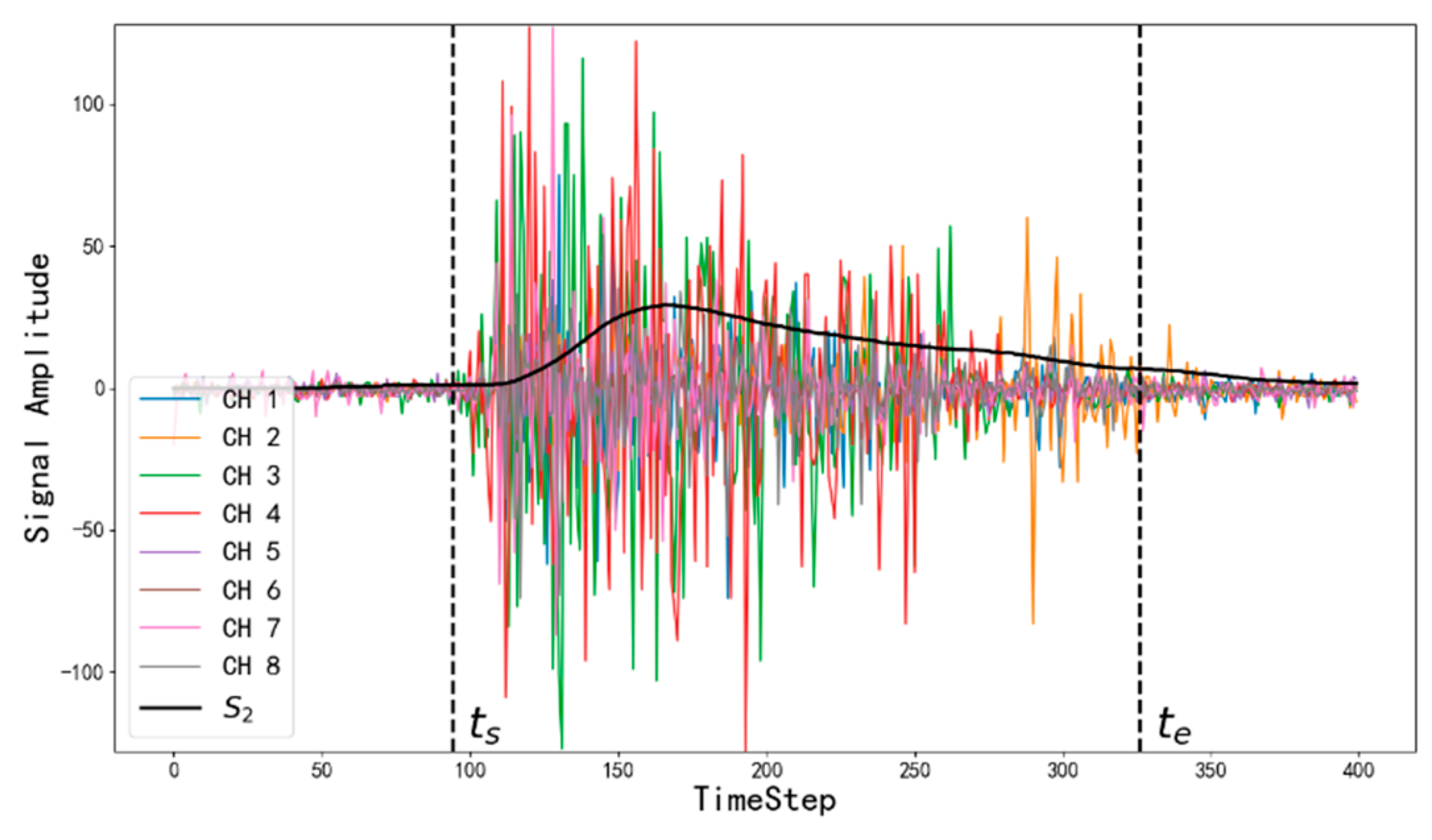

2.2.1. Motion Detection

2.2.2. Model Structure

2.2.3. Post-Processing

2.3. Training and Test Details

3. Results and Analysis

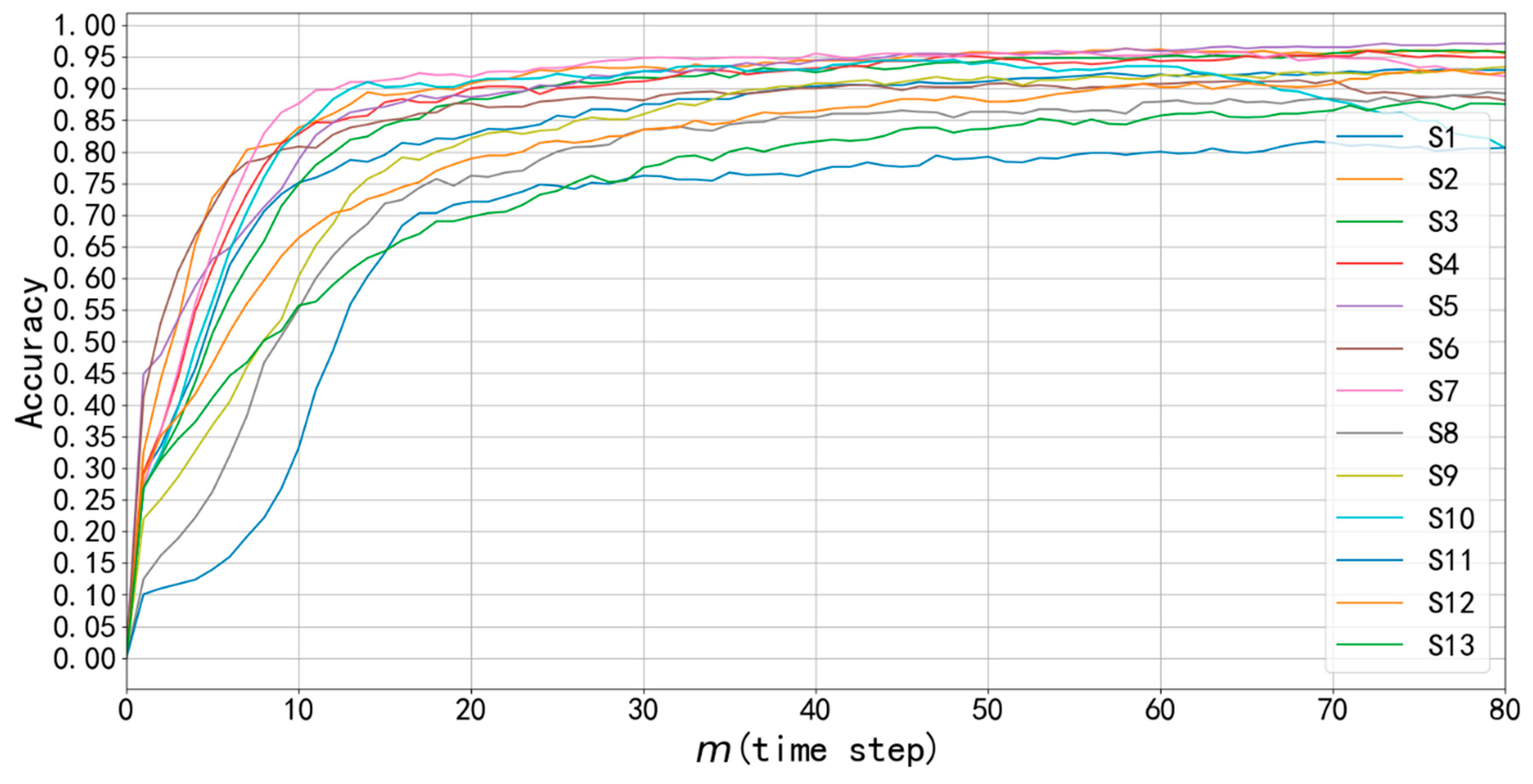

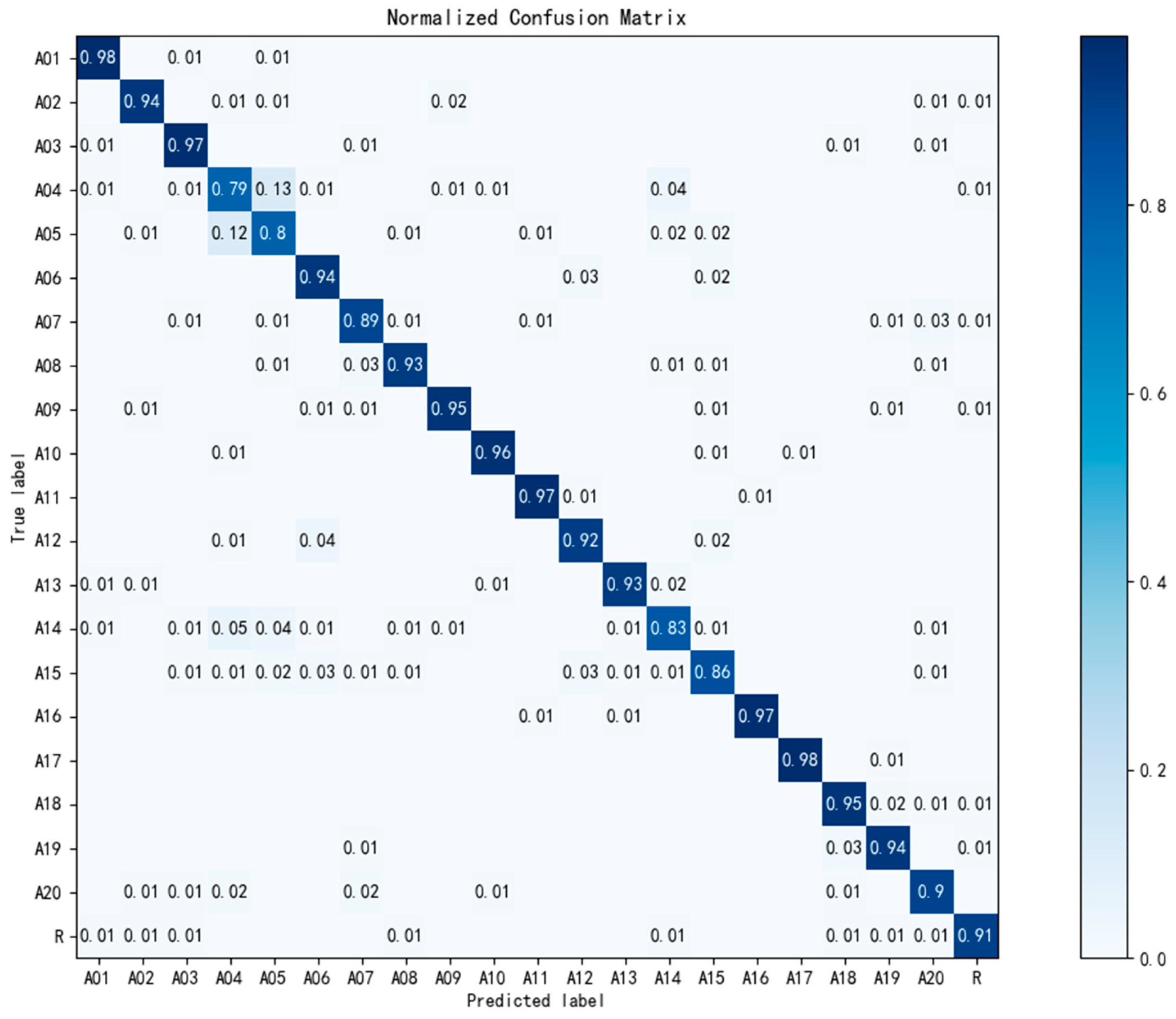

3.1. Accuracy Performance

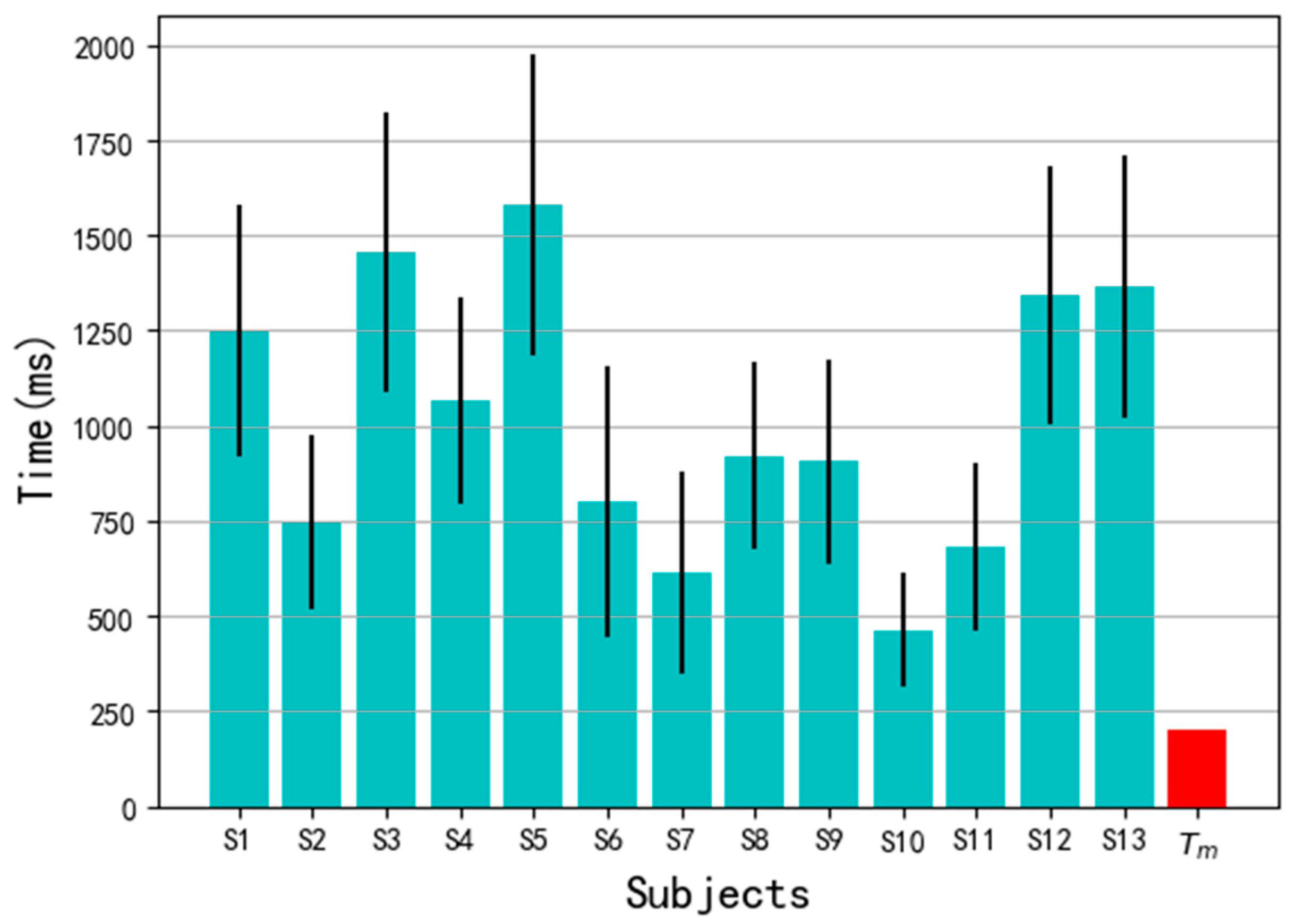

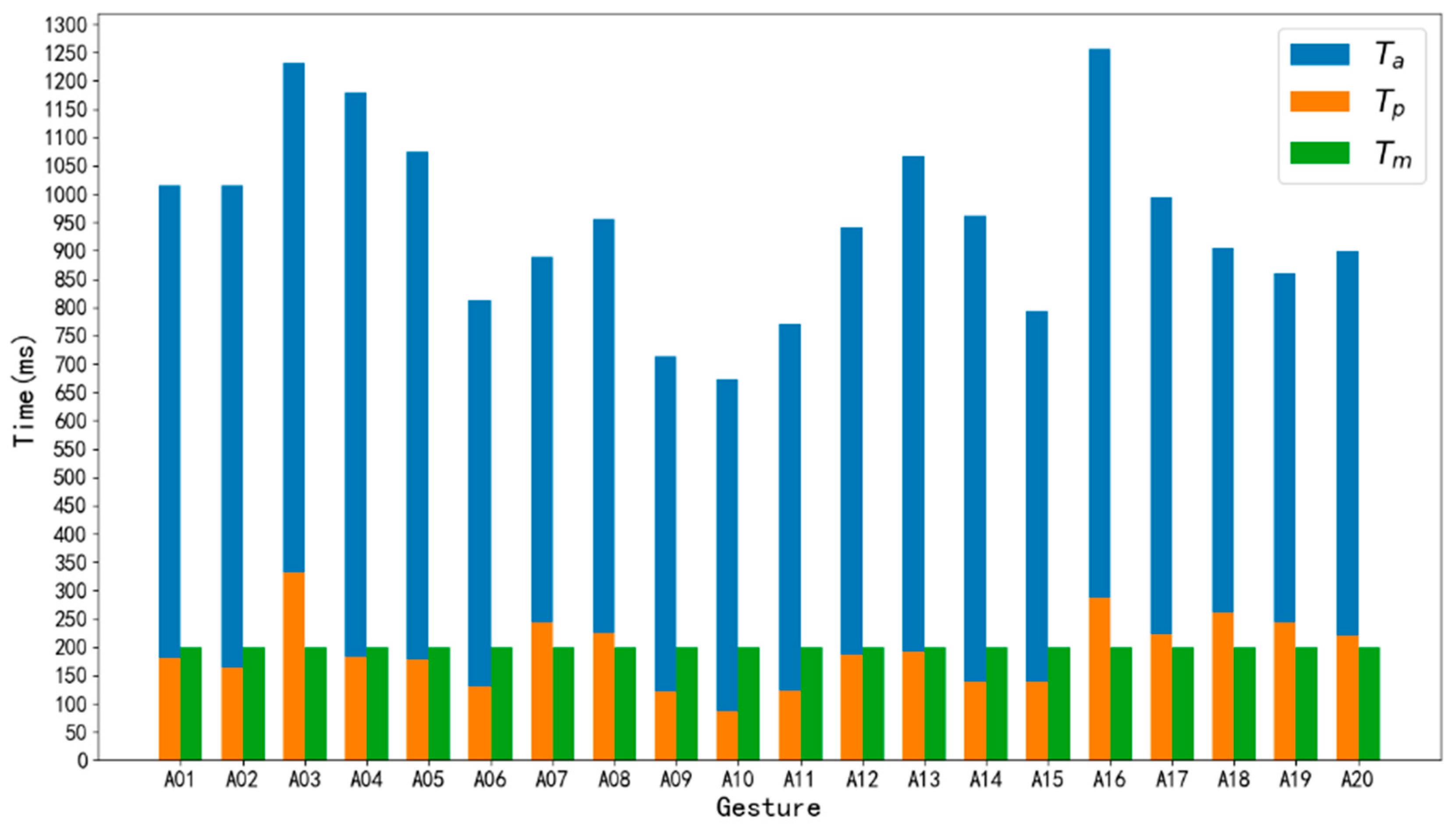

3.2. Real-Time Performance

3.3. The Feasibility of Prediction

3.4. Comparison with Other Methods

3.5. Limitations

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Huang, Y.; Englehart, K.; Hudgins, B. A Gaussian Mixture Model Based Classification Scheme for Myoelectric Control of Powered Upper Limb Prostheses. IEEE Trans. Biomed. Eng. 2005, 52, 1801–1811.

2. Zhang, Z.; Yang, X. Bio-inspired motion planning for reaching movement of a manipulator based on intrinsic tau jerk guidance. Adv. Manuf. 2019, 7, 315–325.

3. Zhang, X.; Chen, X.; Li, Y. A framework for hand gesture recognition based on accelerometer and emg sensors. IEEE Trans. Syst. Man Cybern. Part A Syst. Humans 2011, 41, 1064–1076.

4. Yang, X.; Chen, X.; Cao, X.; Wei, S.; Zhang, X. Chinese sign language recognition based on an optimized tree-structure framework. IEEE J. Biomed. Health Inf. 2016, 21, 994–1004.

5. Kundu, A.S.; Mazumder, O.; Lenka, P.K.; Bhaumik, H. Hand Gesture Recognition Based Omnidirectional Wheelchair Control Using Imu and Emg Sensors. J. Intell. Rob. Syst. 2018, 91, 529–541.

6. Allard, U.C.; Nougarou, F.; Fall, C.L.; Giguère, P.; Gosselin, C.; Laviolette, F.; Gosselin, B. A convolutional neural network for robotic arm guidance using sEMG based frequency-features. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 2464–2470.

7. Xiao, S.; Liu, S.; Wang, H.; Lin, Y.; Song, M.; Zhang, H. Nonlinear dynamics of coupling rub-impact of double translational joints with subsidence considering the flexibility of piston rod. Nonlinear Dyn. 2020, 100, 1203–1229.

8. Fang, G.; Gao, W.; Zhao, D. Large Vocabulary Sign Language Recognition Based on Fuzzy Decision Trees. IEEE Trans. Syst. Man Cybern. Part A Syst. Humans 2004, 34, 305–314.

9. Starner, T.; Weaver, J.; Pentland, A. Real-time American Sign Language Recognition Using Desk and Wearable Computer Based Video. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 1371–1375.

10. Zhang, Z.; Yu, X.; Qian, J. Classification of Finger Movements for Prosthesis Control with Surface Electromyography. Sens. Mater. 2020, 32, 1523–1532.

11. Xu, Z.; Shen, L.; Qian, J.; Zhang, Z. Advanced Hand Gesture Prediction Robust to Electrode Shift with an Arbitrary Angle. Sensors 2020, 20, 1113.

12. Zhang, Z.; Yang, K. Real-time Surface Emg Pattern Recognition for Hand Gestures Based on an Artificial Neural Network. Sensors 2019, 19, 3170.

13. Kong, D.; Zhu, J.; Duan, C.; Lu, L.; Chen, D. Bayesian linear regression for surface roughness prediction. Mech. Syst. Sig. Process. 2020, 142, 106770.

14. Al-Angari, H.; Kanitz, G.; Tarantino, S.; Cipriani, C. Distance and mutual information methods for emg feature and channel subset selection for classification of hand movements. Biomed. Signal Process. Control 2016, 27, 24–31.

15. Benatti, S.; Milosevic, B.; Farella, E.; Gruppioni, E.; Benini, L. A Prosthetic Hand Body Area Controller Based on Efficient Pattern Recognition Control Strategies. Sensors 2017, 17, 869.

16. Kakoty, N.M.; Hazarika, S.M.; Gan, J.Q. EMG Feature Set Selection Through Linear Relationship for Grasp Recognition. J. Med. Biol. Eng. 2016, 36, 883–890.

17. Shi, W.T.; Lyu, Z.J.; Tang, S.T.; Chia, T.; Yang, C. A bionic hand controlled by hand gesture recognition based on surface EMG signals: A preliminary study. Biocybern. Biomed. Eng. 2018, 38, 126–135.

18. Yang, C.; Long, J.; Urbin, M.A.; Feng, Y.; Song, G.; Weng, J.; Li, Z. Real-Time Myocontrol of a Human–Computer Interface by Paretic Muscles After Stroke. IEEE T. Cogn. Dev. Syst. 2018, 10, 1126–1132.

19. Dardas, N.H.; Georganas, N.D. Real-time hand gesture detection and recognition using bag-of-features and support vector machine techniques. IEEE Trans. Instrum. Meas. 2011, 60, 3592–3607.

20. Li, Z.; Guan, X.; Zou, K.; Xu, C. Estimation of Knee Movement From Surface EMG Using Random Forest with Principal Component Analysis. Electronics 2020, 9, 43.

21. Wu, Y.; Zheng, B.; Zhao, Y. Dynamic Gesture Recognition Based on LSTM-CNN. In Proceedings of the 2018 Chinese Automation Congress, Xi’an, China, 30 November–2 December 2018; pp. 2446–2450.

22. Ding, Z.; Yang, C.; Tian, Z.; Yi, C.; Fu, Y.; Jiang, F. sEMG-based Gesture Recognition with Convolution Neural Networks. Sustainability 2018, 10, 1865.

23. Geng, W.; Du, Y.; Jin, W.; Wei, W.; Hu, Y.; Li, J. Gesture Recognition By Instantaneous Surface Emg Images. Sci. Rep. 2016, 6, 36571.

24. Wei, W.; Wong, Y.; Du, Y.; Hu, Y.; Kankanhalli, M.S.; Geng, W. A Multi-stream Convolutional Neural Network for sEMG-based Gesture Recognition in Muscle-computer Interface. Pattern Recognit. Lett. 2019, 119, 131–138.

25. Shen, S.; Gu, K.; Chen, X.; Yang, M.; Wang, R. Movements Classification of Multi-channel sEMG Based on CNN and Stacking Ensemble Learning. IEEE Access 2019, 7, 137489–137500.

26. Côté-Allard, U.; Fall, C.L.; Drouin, A.; Campeau-Lecours, A.; Gosselin, C.; Glette, K.; Laviolette, F.; Gosselin, B. Deep learning for electromyographic hand gesture signal classification using transfer learning. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 760–771.

27. Hu, Y.; Wong, Y.; Wei, W.; Du, Y.; Kankanhalli, M.S.; Geng, W. A novel attention-based hybrid CNN-RNN architecture for sEMG-based gesture recognition. PLoS One 2018, 13, 10.

28. Simao, M.A.; Neto, P.; Gibaru, O. EMG-based Online Classification of Gestures with Recurrent Neural Networks. Pattern Recognit. Lett. 2019, 128, 45–51.

29. He, Y.; Fukuda, O.; Bu, N.; Okumura, H.; Yamaguchi, N. Surface EMG Pattern Recognition Using Long Short-Term Memory Combined with Multilayer Perceptron. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018; pp. 5636–5639.

30. Nasri, N.; Orts-Escolano, S.; Gomez-Donoso, F.; Cazorla, M. Inferring Static Hand Poses from a Low-Cost Non-Intrusive sEMG Sensor. Sensors 2019, 19, 371.

31. Xie, B.; Li, B.; Harland, A.R. Movement and Gesture Recognition Using Deep Learning and Wearable-sensor Technology. In Proceedings of the 2018 International Conference on Artificial Intelligence and Pattern Recognition, Beijing, China, 18–20 August 2018; pp. 26–31.

32. Samadani, A. Gated Recurrent Neural Networks for EMG-based Hand Gesture Classification. A Comparative Study. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018; pp. 1094–1097.

33. Tuncer, T.; Dogan, S.; Subasi, A. Surface EMG signal classification using ternary pattern and discrete wavelet transform based feature extraction for hand movement recognition. Biomed. Signal Process. Control 2020, 58, 101872.

34. Wang, C.; Guo, W.; Zhang, H.; Guo, L.; Huang, C.; Lin, C. sEMG-based continuous estimation of grasp movements by long-short term memory network. Biomed. Signal Process. Control 2020, 59, 101774.

35. Zhou, Y.; Huang, Y.; Pang, J.; Wang, K. Remaining useful life prediction for supercapacitor based on long short-term memory neural network. J. Power Sources 2019, 440, 227149.

36. Jaramillo-Yánez, A.; Benalcázar, M.E.; Mena-Maldonado, E. Real-Time Hand Gesture Recognition Using Surface Electromyography and Machine Learning: A Systematic Literature Review. Sensors 2020, 20, 2467.

37. Atzori, M.; Gijsberts, A.; Castellini, C.; Caputo, B.; Hager, A.M.; Elsig, S.; Giatsidis, G.; Bassetto, F.; Muller, H. Electromyography Data for Non-invasive Naturally-controlled Robotic Hand Prostheses. Sci. Data 2014, 1, 1–13.

38. Benalcazar, M.E.; Motoche, C.; Zea, J.A.; Jaramillo, A.G.; Anchundia, C.E.; Zambrano, P.; Segura, M.; Benalcazar, P.F.; Perez, M. Real-time hand gesture recognition using the Myo armband and muscle activity detection. In Proceedings of the 2017 IEEE 2nd Ecuador Technical Chapters Meeting, Salinas, Ecuador, 16–20 October 2017; pp. 1–6.

39. Welcome to Myo Support. Available online: https://support.getmyo.com/hc/en-us (accessed on 15 July 2020).

40. gForce-100 Gesture Armband. Available online: http://oymotion.com/en/product32/150 (accessed on 15 July 2020).

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Acquisition device | Myo armband | Gestures | 21 |
| Sampling frequency | 200 Hz | Repetitions | 30 |
| Channel number | 8 | Sampling time of a repetition | 2 s |
| Subject number | 13 | Finish time of a gesture | 0.5–1.5 s |
| Age range of subjects | 22–26 years | Repetition interval | 2 s |
| Health state | Intact subjects | Gesture interval | 5 min |
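As a rough sanity check on the acquisition parameters in the table above, the sketch below estimates how much data they imply. It assumes that every subject performed every gesture 30 times and that no recording was discarded, which the table alone does not confirm. All variable names are hypothetical.

```python
# Acquisition parameters taken from the dataset table.
SUBJECTS = 13
GESTURES = 21
REPETITIONS = 30          # assumed to be per gesture and per subject
SAMPLING_RATE_HZ = 200
SECONDS_PER_REPETITION = 2
CHANNELS = 8

# One recording is one repetition of one gesture by one subject.
recordings = SUBJECTS * GESTURES * REPETITIONS
samples_per_recording = SAMPLING_RATE_HZ * SECONDS_PER_REPETITION
total_time_steps = recordings * samples_per_recording
total_values = total_time_steps * CHANNELS

print(recordings)             # 8190
print(samples_per_recording)  # 400
print(total_time_steps)       # 3276000
print(total_values)           # 26208000
```

Under these assumptions, each recording is a 400 × 8 array, and a gesture that finishes in 0.5–1.5 s occupies only part of it.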

| m (time steps) | 20 | 40 | 60 | 80 |
|---|---|---|---|---|
| Duration (ms) | 100 | 200 | 300 | 400 |
| Accuracy | 83.8 ± 7.5% | 89.6 ± 5.5% | 91.5 ± 4.7% | 90.8 ± 5.4% |
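The relation between the two header rows of the table above, and the trade-off it reports, can be checked with a few lines of arithmetic. This is a sketch only: the dictionary simply restates the table values, the 200 Hz rate comes from the dataset description, and the helper names are hypothetical.

```python
SAMPLING_RATE_HZ = 200  # Myo armband sampling rate reported for the dataset

# Time steps m -> (mean accuracy in %, standard deviation in %), copied from the table.
ACCURACY_BY_STEPS = {
    20: (83.8, 7.5),
    40: (89.6, 5.5),
    60: (91.5, 4.7),
    80: (90.8, 5.4),
}


def steps_to_ms(steps, fs_hz=SAMPLING_RATE_HZ):
    """Convert a number of time steps into a duration in milliseconds."""
    return steps * 1000 // fs_hz


def accuracy_gains(table):
    """Mean-accuracy change when moving to each next-longer window.

    Returns (window length in ms, change in percentage points) pairs.
    """
    steps = sorted(table)
    return [
        (steps_to_ms(longer), round(table[longer][0] - table[shorter][0], 1))
        for shorter, longer in zip(steps, steps[1:])
    ]


best_steps = max(ACCURACY_BY_STEPS, key=lambda m: ACCURACY_BY_STEPS[m][0])
print(steps_to_ms(best_steps))  # 300
for window_ms, gain in accuracy_gains(ACCURACY_BY_STEPS):
    print(window_ms, gain)
```

Going from 100 ms to 200 ms gives by far the largest improvement in mean accuracy. The later changes are small compared with the reported standard deviations.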

| Work | Channels | Device | Sampling Rate (Hz) | Gestures | Repetition | Gesture Duration (s) | Subjects | Classifier | Accuracy (%) | RTP (ms) |
|---|---|---|---|---|---|---|---|---|---|---|
| Hu [27] | 10 | Myo(8+2) | 100 | 52 | 10 | 5 | 27 | LCNN | 87.0 | NRT |
| Xie [31] | 16 | Myo(8+8) | 200 | 17 | 6 | 5 | 10 | LCNN | 83.6 | NRT |
| Wu [21] | 8 | Myo(8) | 200 | 5 | 12 | 5 | NI | LCNN | 98.0 | NRT |
| Simao [28] | 16 | Myo(8+8) | 200 | 8 | NI | NI | NI | LSTM/GRU | 95.0 | NRT |
| Samadani [32] | 12 | Myo(8+4) | 100 | 18 | 6 | 5 | 40 | LSTM | 89.5 | NRT |
| Nasri [30] | 8 | Myo(8) | 200 | 6 | 195 | 10 | 35 | GRU | 99.8 | 940 |
| He [29] | 12 | Myo(8+4) | 100 | 52 | 10 | 5 | 27 | LSTM | 75.5 | 400 |
| Ours | 8 | Myo(8) | 200 | 21 | 30 | 2 | 13 | GRU | 89.6 | 200 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, Z.; He, C.; Yang, K. A Novel Surface Electromyographic Signal-Based Hand Gesture Prediction Using a Recurrent Neural Network. Sensors 2020, 20, 3994. https://doi.org/10.3390/s20143994
